Ubuntu DataSophon 1.2.1 Secondary Development, Part 5: Fixing Hive Installation Problems

- 背景

- 问题及解决

-

-

- 为hive增加HA参数

- [java.lang.NoClassDefFoundError: org/apache/tez/dag/api/TezConfiguration](#java.lang.NoClassDefFoundError: org/apache/tez/dag/api/TezConfiguration)

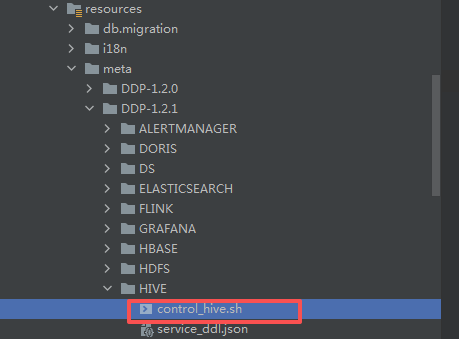

- 安装后,找不到control_hive.sh

- [hive-3.1.3 启动时报:bin/hiveserver2](#hive-3.1.3 启动时报:bin/hiveserver2)

- 在HA环境下,初始化schema失败

- [hive 初始化 schema 数据库表为空的问题](#hive 初始化 schema 数据库表为空的问题)

- ddp2,执行初始化schema失败

- [ddp1启动hiveserver2,hivemetastore没问题,但是ddp2 启动失败](#ddp1启动hiveserver2,hivemetastore没问题,但是ddp2 启动失败)

-

- 最后

背景

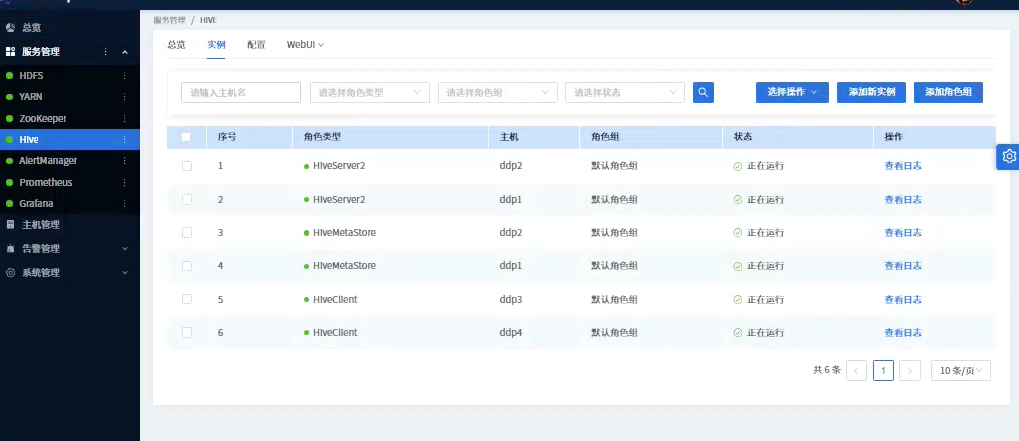

安装完YARN之后,继续往下安装HIVE。这个组件问题不少,下面逐一说明

问题及解决

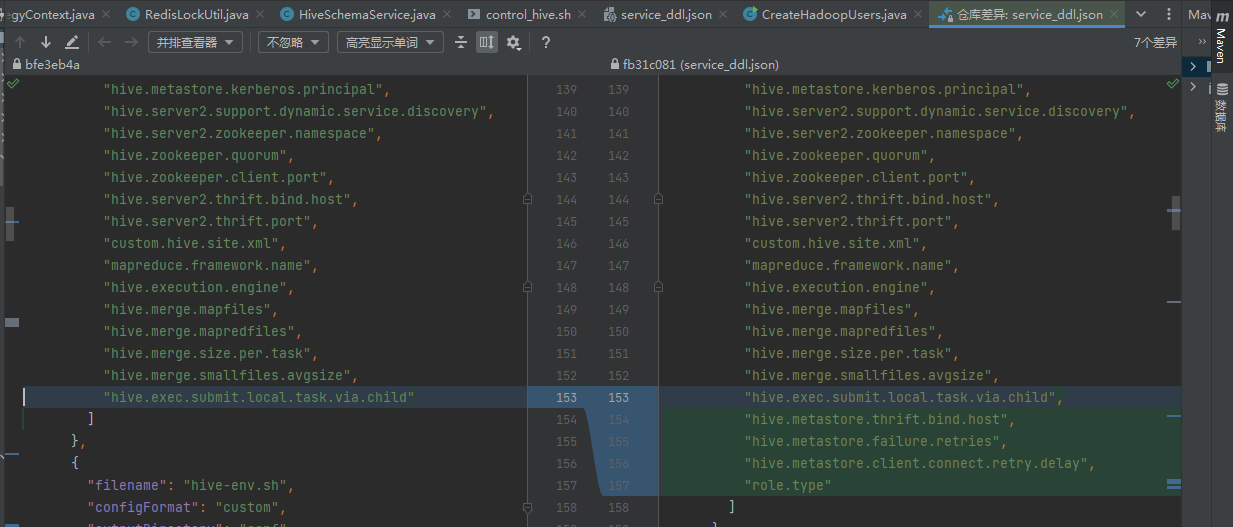

为hive增加HA参数

解决办法:我看原来的service_ddl.json,缺失HA参数,增加了几个如下截图:

json

{

"name": "hive.server2.thrift.bind.host",

"label": "HiveServer2绑定主机",

"description": "HiveServer2服务绑定到的主机名或IP,0.0.0.0表示绑定所有网络接口",

"required": true,

"type": "select",

"value": "0.0.0.0",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "0.0.0.0",

"selectValue": ["0.0.0.0", "${host}", "localhost", "127.0.0.1"]

},

{

"name": "hive.metastore.thrift.bind.host",

"label": "MetaStore绑定主机",

"description": "Hive MetaStore服务绑定到的主机名或IP,0.0.0.0表示绑定所有网络接口",

"required": true,

"type": "select",

"value": "0.0.0.0",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "0.0.0.0",

"selectValue": ["0.0.0.0", "${host}", "localhost", "127.0.0.1"]

},

{

"name": "hive.metastore.uris",

"label": "Hive MetaStore服务地址",

"description": "多个MetaStore用逗号分隔,用于HA。对于MetaStore节点应留空或注释",

"required": false,

"type": "input",

"value": "thrift://ddp1:9083,thrift://ddp2:9083",

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

},

{

"name": "hive.metastore.failure.retries",

"label": "MetaStore连接重试次数",

"description": "连接MetaStore失败时的重试次数",

"required": false,

"type": "input",

"value": "3",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "3"

},

{

"name": "hive.metastore.client.connect.retry.delay",

"label": "MetaStore重试延迟",

"description": "连接MetaStore失败时的重试延迟时间",

"required": false,

"type": "input",

"value": "5s",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "5s"

},

{

"name": "role.type",

"label": "角色类型",

"description": "标识节点角色类型,用于条件配置",

"required": false,

"type": "select",

"value": "combined",

"configurableInWizard": true,

"hidden": true,

"defaultValue": "combined",

"selectValue": ["metastore", "hiveserver2", "combined", "client"]

},

{

"name": "hive.metastore.server.max.threads",

"label": "MetaStore最大线程数",

"description": "MetaStore服务处理请求的最大线程数",

"required": false,

"type": "input",

"value": "100",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "100"

}

java.lang.NoClassDefFoundError: org/apache/tez/dag/api/TezConfiguration

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolSession$AbstractTriggerValidator.startTriggerValidator(TezSessionPoolSession.java:74)

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager.initTriggers(TezSessionPoolManager.java:207)

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager.startPool(TezSessionPoolManager.java:114)

at org.apache.hive.service.server.HiveServer2.initAndStartTezSessionPoolManager(HiveServer2.java:839)

at org.apache.hive.service.server.HiveServer2.startOrReconnectTezSessions(HiveServer2.java:822)

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:745)

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1037)

at org.apache.hive.service.server.HiveServer2.access$1600(HiveServer2.java:140)

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:1305)

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:1149)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

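at java.lang.reflect.Method.invoke(Method.java:498)

Fix: this was caused by a mismatch between the Hive and Hadoop versions. The original combination was Hive 3.1.0 with Hadoop 3.3.3, which produced class-not-found or method errors like this one at startup. Upgrading to Hive 3.1.3 resolved it.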
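When chasing a `NoClassDefFoundError` like this one, it can help to confirm whether the class is visible on a given classpath before and after changing versions. The following is a minimal sketch, assuming it is run with the same classpath as HiveServer2. The class `ClasspathCheck` and its method are illustrative names, not part of Hive or DataSophon.

```java
// Hypothetical diagnostic helper; not part of Hive or DataSophon.
final class ClasspathCheck {

    // Returns true if the named class can be located by this class's class loader.
    // The class is looked up without running its static initializers.
    static boolean isClassPresent(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Default to the class named in the stack trace; another name can be passed as an argument.
        String name = args.length > 0 ? args[0] : "org.apache.tez.dag.api.TezConfiguration";
        System.out.println(name + " present: " + isClassPresent(name));
    }
}
```

Running it once against the old lib directory and once against the new one shows whether the change actually affected the class in question.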

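control_hive.sh is missing after installation

The fix is to package control_hive.sh into hive.tar.gz: unpack the original hive.tar.gz into a temporary directory, upload control_hive.sh to the root of the unpacked directory, and then repack:

bash

tar -zcvf hive-3.1.3.tar.gz xxxx/hive-3.1.3/

Then copy hive-3.1.3.tar.gz to /opt/datasophon/DDP/package and generate its MD5 file:

md5sum hive-3.1.3.tar.gz | awk '{print $1}' > hive-3.1.3.tar.gz.md5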
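For cases where the checksum has to be produced or verified from Java rather than the shell, the same 32-character hex digest can be computed with the JDK's `MessageDigest`. This is a sketch only; the class name `Md5File` is made up for illustration and nothing here is taken from the DataSophon code base.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper that mirrors: md5sum FILE | awk '{print $1}'
final class Md5File {

    private static MessageDigest newMd5() {
        try {
            return MessageDigest.getInstance("MD5");
        } catch (NoSuchAlgorithmException e) {
            // MD5 is a required algorithm on every Java platform, so this is not expected.
            throw new IllegalStateException(e);
        }
    }

    // Hex-encoded MD5 digest of a byte array.
    static String md5Hex(byte[] data) {
        return toHex(newMd5().digest(data));
    }

    // Hex-encoded MD5 digest of a file, read in chunks so large archives are not loaded into memory.
    static String md5Hex(Path file) {
        MessageDigest md = newMd5();
        try (InputStream in = Files.newInputStream(file)) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                md.update(buf, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return toHex(md.digest());
    }

    private static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b & 0xff));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Path pkg = Paths.get(args.length > 0 ? args[0] : "hive-3.1.3.tar.gz");
        System.out.println(md5Hex(pkg));
    }
}
```

Comparing this output with the content of hive-3.1.3.tar.gz.md5 is a quick way to catch a stale checksum file after repacking.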

hive-3.1.3 reports an error at startup: bin/hiveserver2

Error opening zip file or JAR manifest missing : bin/.../jmx/jmx_prometheus_javaagent-0.16.1.jar

Unable to determine Hadoop version information.

'hadoop version' returned:

Error opening zip file or JAR manifest missing : bin/.../jmx/jmx_prometheus_javaagent-0.16.1.jar

Error occurred during initialization of VM agent library failed to init: instrument

Fix:

1. Create a jmx directory under hive-3.1.3 and download jmx_prometheus_javaagent-0.16.1.jar into it.

2. In the same directory, create hiveserver2.yaml and metastore.yaml with the following content:

hiveserver2.yaml

yaml

startDelaySeconds: 0
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false
rules:
  - pattern: ".*"

metastore.yaml

yaml

# Hive Metastore JMX Exporter Configuration
startDelaySeconds: 0
ssl: false
lowercaseOutputName: false
lowercaseOutputLabelNames: false
rules:
  - pattern: ".*"

Schema initialization fails in an HA environment

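This only happens in an HA environment, when several nodes initialize the schema at the same time (or possibly one after another). For example, during my installation the Master roles were assigned as follows:

HiveServer2: ddp1, ddp2

HiveMetaStore: ddp1, ddp2

Initialization failed on both ddp1 and ddp2. Since Redis had already been introduced in this secondary-development environment, the simplest fix was to take a lock across nodes so that only one node runs the initialization. The code involved is as follows: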

RedisLockUtil.java

java

// RedisLockUtil.java - version with detailed logging

package com.datasophon.common.redis.utils;

import com.datasophon.common.redis.manager.RedisManager;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.UUID;

public class RedisLockUtil {

private static final Logger logger = LoggerFactory.getLogger(RedisLockUtil.class);

// Lua脚本:原子获取锁

private static final String ACQUIRE_LOCK_SCRIPT =

"local lockKey = KEYS[1]\n" +

"local lockValue = ARGV[1]\n" +

"local timeout = ARGV[2]\n" +

"local result = redis.call('setnx', lockKey, lockValue)\n" +

"if result == 1 then\n" +

" redis.call('expire', lockKey, timeout)\n" +

" return 1\n" +

"else\n" +

" local currentValue = redis.call('get', lockKey)\n" +

" if currentValue == lockValue then\n" +

" redis.call('expire', lockKey, timeout)\n" +

" return 1\n" +

" else\n" +

" local ttl = redis.call('ttl', lockKey)\n" +

" if ttl == -1 then\n" +

" redis.call('expire', lockKey, timeout)\n" +

" end\n" +

" return 0\n" +

" end\n" +

"end";

// Lua脚本:原子释放锁

private static final String RELEASE_LOCK_SCRIPT =

"local lockKey = KEYS[1]\n" +

"local lockValue = ARGV[1]\n" +

"local currentValue = redis.call('get', lockKey)\n" +

"if currentValue == lockValue then\n" +

" return redis.call('del', lockKey)\n" +

"else\n" +

" return 0\n" +

"end";

/**

* 尝试获取分布式锁(带详细日志)

*/

public static String tryLock(String lockKey, long timeout, long waitTime) {

String lockValue = UUID.randomUUID().toString();

long startTime = System.currentTimeMillis();

logger.info("🔐 开始获取Redis锁: {}", lockKey);

logger.info(" 锁值: {}", lockValue);

logger.info(" 超时: {}秒, 等待时间: {}毫秒", timeout, waitTime);

int attempt = 0;

while (System.currentTimeMillis() - startTime < waitTime) {

attempt++;

logger.info(" 尝试获取锁 (第{}次)", attempt);

try {

// 使用RedisManager执行Lua脚本获取锁

Long result = RedisManager.execute(jedis ->

(Long) jedis.eval(ACQUIRE_LOCK_SCRIPT,

1, lockKey, lockValue, String.valueOf(timeout))

);

logger.info(" Redis执行结果: {}", result);

if (result != null && result == 1) {

logger.info("✅ 成功获取锁: {}, 值: {}", lockKey, lockValue);

return lockValue;

} else {

logger.info("⚠️ 获取锁失败,锁可能被其他进程持有");

// 检查当前锁的状态

try {

String currentHolder = RedisManager.execute(jedis -> jedis.get(lockKey));

long ttl = RedisManager.execute(jedis -> jedis.ttl(lockKey));

logger.info(" 当前锁持有者: {}, TTL: {}秒", currentHolder, ttl);

} catch (Exception e) {

logger.warn(" 无法获取锁状态: {}", e.getMessage());

}

}

// 等待后重试

long sleepTime = 100L;

logger.info(" 等待 {}ms 后重试...", sleepTime);

Thread.sleep(sleepTime);

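} catch (InterruptedException ie) {

// Restore the interrupt flag and stop waiting for the lock

Thread.currentThread().interrupt();

break;

} catch (Exception e) {

logger.error("❌ 获取锁时发生异常", e);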

// 如果是连接异常,可能是Redis服务问题

if (e.getMessage() != null &&

(e.getMessage().contains("Connection") ||

e.getMessage().contains("timeout"))) {

logger.error(" ⚠️ 可能是Redis连接问题,请检查Redis服务状态");

}

try {

Thread.sleep(100);

} catch (InterruptedException ie) {

Thread.currentThread().interrupt();

break;

}

}

}

logger.warn("⏰ 在 {}ms 内未能获取锁: {}", waitTime, lockKey);

return null;

}

/**

* 释放分布式锁(带详细日志)

*/

public static boolean unlock(String lockKey, String lockValue) {

logger.info("🔓 尝试释放锁: {}", lockKey);

logger.info(" 期望锁值: {}", lockValue);

if (lockValue == null) {

logger.warn("⚠️ 锁值为null,无法释放");

return false;

}

try {

// 先获取当前锁的值

String currentValue = RedisManager.execute(jedis -> jedis.get(lockKey));

logger.info(" 当前锁的值: {}", currentValue);

Long result = RedisManager.execute(jedis ->

(Long) jedis.eval(RELEASE_LOCK_SCRIPT,

1, lockKey, lockValue)

);

boolean success = result != null && result == 1;

if (success) {

logger.info("✅ 成功释放锁: {}", lockKey);

} else {

logger.warn("⚠️ 释放锁失败或不是锁的持有者");

logger.info(" 当前值: {}, 期望值: {}", currentValue, lockValue);

if (currentValue != null && !currentValue.equals(lockValue)) {

logger.warn(" ⚠️ 锁值不匹配,可能是锁已过期或被其他进程持有");

}

}

return success;

} catch (Exception e) {

logger.error("❌ 释放锁时发生异常: {}", lockKey, e);

logger.error(" 异常堆栈: ", e);

return false;

}

}

/**

* 执行带锁的操作(带详细日志)

*/

public static <T> T executeWithLock(String lockKey, long lockTimeout, long waitTime,

LockOperation<T> operation) throws Exception {

logger.info("🔄 =========================================");

logger.info("🔄 开始执行带锁操作");

logger.info("🔄 锁键: {}", lockKey);

logger.info("🔄 锁超时: {}秒", lockTimeout);

logger.info("🔄 等待时间: {}毫秒", waitTime);

logger.info("🔄 =========================================");

String lockValue = null;

try {

// 1. 获取锁

logger.info("1️⃣ 阶段: 获取锁");

lockValue = tryLock(lockKey, lockTimeout, waitTime);

if (lockValue == null) {

logger.error("❌ 无法获取锁,操作中止");

throw new RuntimeException("Failed to acquire lock for: " + lockKey);

}

logger.info("✅ 成功获取锁,准备执行操作");

// 2. 执行实际操作

logger.info("2️⃣ 阶段: 执行操作");

T result = operation.execute();

logger.info("✅ 操作执行完成,结果: {}", result);

return result;

} catch (Exception e) {

logger.error("❌ 执行带锁操作时发生异常", e);

logger.error(" 操作类型: {}", operation.getClass().getSimpleName());

logger.error(" 异常详情: ", e);

throw e;

} finally {

// 3. 释放锁

logger.info("3️⃣ 阶段: 释放锁");

if (lockValue != null) {

boolean released = unlock(lockKey, lockValue);

if (released) {

logger.info("✅ 锁已释放");

} else {

logger.warn("⚠️ 锁释放失败,可能需要手动清理");

}

} else {

logger.info("ℹ️ 无需释放锁(未成功获取)");

}

logger.info("🔄 =========================================");

logger.info("🔄 带锁操作执行完成");

logger.info("🔄 =========================================");

}

}

@FunctionalInterface

public interface LockOperation<T> {

T execute() throws Exception;

}

/**

* 测试Redis连接

*/

public static boolean testRedisConnection() {

logger.info("🛠️ 测试Redis连接...");

try {

String result = RedisManager.execute(jedis -> jedis.ping());

boolean connected = "PONG".equals(result);

if (connected) {

logger.info("✅ Redis连接正常: {}", result);

} else {

logger.warn("⚠️ Redis连接异常,返回: {}", result);

}

return connected;

} catch (Exception e) {

logger.error("❌ Redis连接测试失败", e);

return false;

}

}

/**

* 查看锁状态

*/

public static void checkLockStatus(String lockKey) {

logger.info("🔍 检查锁状态: {}", lockKey);

try {

String value = RedisManager.execute(jedis -> jedis.get(lockKey));

long ttl = RedisManager.execute(jedis -> jedis.ttl(lockKey));

if (value == null) {

logger.info(" 锁不存在或已过期");

} else {

logger.info(" 锁值: {}", value);

logger.info(" TTL: {}秒", ttl);

if (ttl == -1) {

logger.warn(" ⚠️ 锁没有设置过期时间(永不过期)");

} else if (ttl == -2) {

logger.info(" 锁已过期但未删除");

} else if (ttl < 60) {

logger.info(" 锁将在 {} 秒后过期", ttl);

}

}

} catch (Exception e) {

logger.error("❌ 检查锁状态失败", e);

}

}

}

This file is new.
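The two Lua scripts in RedisLockUtil boil down to two rules: a lock is acquired only when the key is absent, and it is released only by the holder of the matching value. The sketch below reproduces those rules with an in-memory map so they can be exercised without a Redis server. It is a stand-in for illustration, not a replacement for the Redis-based class: `OwnerLockSketch` is a hypothetical name, the current time is passed in explicitly to keep the behaviour deterministic, and the script's re-entrant branch is left out.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// In-memory stand-in for the Redis lock semantics; illustrative only.
final class OwnerLockSketch {

    private static final class Entry {
        final String owner;
        final long expiresAtMillis;

        Entry(String owner, long expiresAtMillis) {
            this.owner = owner;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> store = new ConcurrentHashMap<>();

    // Acquire: succeeds only if the key is absent or its entry has expired.
    // Returns the owner token on success, or null if another owner holds the lock.
    String tryLock(String key, long ttlMillis, long nowMillis) {
        String token = UUID.randomUUID().toString();
        Entry mine = new Entry(token, nowMillis + ttlMillis);
        // compute() runs atomically per key, like a Lua script does on the Redis server.
        Entry result = store.compute(key, (k, cur) ->
                (cur == null || cur.expiresAtMillis <= nowMillis) ? mine : cur);
        return result == mine ? token : null;
    }

    // Release: removes the key only if the caller's token matches the stored owner.
    boolean unlock(String key, String token) {
        if (token == null) {
            return false;
        }
        final boolean[] removed = {false};
        store.computeIfPresent(key, (k, cur) -> {
            if (cur.owner.equals(token)) {
                removed[0] = true;
                return null; // returning null removes the mapping
            }
            return cur;
        });
        return removed[0];
    }
}
```

The owner check on release is what prevents a node whose lock has already expired from deleting a lock that another node has since acquired.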

HiveSchemaService.java

java

// HiveSchemaService.java - version with compile errors fixed

package com.datasophon.worker.service;

import com.datasophon.common.Constants;

import com.datasophon.common.redis.utils.RedisLockUtil;

import com.datasophon.common.utils.ExecResult;

import com.datasophon.common.utils.ShellUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.Map;

public class HiveSchemaService {

private static final Logger logger = LoggerFactory.getLogger(HiveSchemaService.class);

// Redis锁配置

private static final String SCHEMA_LOCK_KEY = "datasophon:hive:schema:init:lock";

private static final long LOCK_TIMEOUT = 300; // 锁超时时间(秒)

private static final long LOCK_WAIT_TIME = 30000; // 等待锁的时间(毫秒)

/**

* 初始化Hive Schema(带详细日志)

*/

public static boolean initSchemaWithLock(String installPath, String packageName) {

logger.info("🚀 =========================================");

logger.info("🚀 Hive Schema 初始化开始");

logger.info("🚀 安装路径: {}", installPath);

logger.info("🚀 包名: {}", packageName);

logger.info("🚀 Redis锁键: {}", SCHEMA_LOCK_KEY);

logger.info("🚀 =========================================");

try {

// 1. 先测试Redis连接

logger.info("1️⃣ 测试Redis连接...");

boolean redisConnected = RedisLockUtil.testRedisConnection();

if (!redisConnected) {

logger.warn("⚠️ Redis连接测试失败,尝试直接初始化");

return initSchemaDirect(installPath, packageName);

}

// 2. 检查是否已初始化

logger.info("2️⃣ 检查Schema是否已初始化...");

if (isSchemaInitialized(installPath, packageName)) {

logger.info("✅ Schema已经初始化,跳过初始化过程");

return true;

}

// 3. 使用Redis锁执行初始化

logger.info("3️⃣ 使用Redis锁执行Schema初始化...");

boolean result = RedisLockUtil.executeWithLock(

SCHEMA_LOCK_KEY,

LOCK_TIMEOUT,

LOCK_WAIT_TIME,

() -> {

logger.info("🔒 在锁保护区内,开始执行Schema初始化");

// 双重检查

if (isSchemaInitialized(installPath, packageName)) {

logger.info("✅ 在等待锁期间,Schema已被其他节点初始化");

return true;

}

return initSchemaDirect(installPath, packageName);

}

);

logger.info("🎉 Schema初始化结果: {}", result);

return result;

} catch (Exception e) {

logger.error("❌ Schema初始化过程中发生异常", e);

logger.error(" 异常堆栈: ", e);

// 异常降级:尝试直接初始化

logger.warn("🔄 尝试直接初始化(绕过Redis锁)...");

try {

boolean directResult = initSchemaDirect(installPath, packageName);

logger.info("直接初始化结果: {}", directResult);

return directResult;

} catch (Exception ex) {

logger.error("❌ 直接初始化也失败了", ex);

return false;

}

} finally {

logger.info("🚀 =========================================");

logger.info("🚀 Hive Schema 初始化结束");

logger.info("🚀 =========================================");

}

}

/**

* 直接初始化Schema(无Redis锁)

*/

public static boolean initSchemaDirect(String installPath, String packageName) {

logger.info("🔧 开始直接初始化Schema(无锁)");

String workDir = installPath + Constants.SLASH + packageName;

logger.info(" 工作目录: {}", workDir);

try {

// 1. 检查schematool是否存在

logger.info(" 检查schematool...");

ArrayList<String> checkCmd = new ArrayList<>();

checkCmd.add("bin/schematool");

checkCmd.add("-help");

// Fix 1: this call uses the three-argument overload (workDir, command, timeout)

ExecResult checkResult = ShellUtils.execWithStatus(workDir, checkCmd, 10L);

if (!checkResult.getExecResult()) {

logger.error("❌ schematool不存在或无法执行");

return false;

}

// 2. 执行初始化

logger.info(" 执行Schema初始化...");

ArrayList<String> commands = new ArrayList<>();

commands.add("bin/schematool");

commands.add("-dbType");

commands.add("mysql");

commands.add("-initSchema");

// 设置环境变量

Map<String, String> env = new HashMap<>();

env.put("HIVE_HOME", workDir);

String hadoopHome = System.getenv("HADOOP_HOME");

if (hadoopHome != null) {

env.put("HADOOP_HOME", hadoopHome);

env.put("PATH", workDir + "/bin:" + hadoopHome + "/bin:" + System.getenv("PATH"));

}

logger.info(" 命令: {}", String.join(" ", commands));

logger.info(" 环境变量: {}", env);

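// Fix 2: call the overload that takes (workDir, commands, timeout, logger).

// Note: the env map built above is only logged. It is not passed to

// ShellUtils.execWithStatus, so it has no effect on how schematool is launched.

ExecResult execResult = ShellUtils.execWithStatus(workDir, commands, 300L, logger);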

if (execResult.getExecResult()) {

logger.info("✅ Schema初始化成功!");

if (execResult.getExecOut() != null) {

logger.debug("输出: {}", execResult.getExecOut());

}

return true;

} else {

logger.error("❌ Schema初始化失败");

if (execResult.getExecErrOut() != null) {

logger.error("错误输出: {}", execResult.getExecErrOut());

}

return false;

}

} catch (Exception e) {

logger.error("🔥 Schema初始化异常", e);

return false;

}

}

/**

* 检查Schema是否已初始化

*/

public static boolean isSchemaInitialized(String installPath, String packageName) {

try {

ArrayList<String> commands = new ArrayList<>();

commands.add("bin/schematool");

commands.add("-dbType");

commands.add("mysql");

commands.add("-info");

String workDir = installPath + Constants.SLASH + packageName;

// 修正点3:统一参数调用

ExecResult execResult = ShellUtils.execWithStatus(workDir, commands, 30L);

if (execResult.getExecResult()) {

String output = execResult.getExecOut();

boolean initialized = output != null && output.contains("Verification completed");

logger.info("Schema初始化检查: {}", initialized ? "已初始化" : "未初始化");

return initialized;

}

logger.warn("Schema检查失败");

return false;

} catch (Exception e) {

logger.warn("检查Schema状态时异常", e);

return false;

}

}

}

This file is also new.
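The core of initSchemaWithLock is a check, lock, check-again pattern: test whether the schema exists, take the lock, test again, and only then initialize. The sketch below shows that pattern in isolation, with a `ReentrantLock` standing in for the Redis lock and a boolean flag standing in for the `schematool -info` check. All names are hypothetical and the sketch runs inside a single JVM, so it illustrates the control flow rather than the distributed behaviour.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Illustrative single-JVM model of the "check, lock, check again" flow.
final class InitOnceSketch {

    private final ReentrantLock lock = new ReentrantLock(); // stands in for the Redis lock
    private volatile boolean initialized = false;           // stands in for the schema check
    private final AtomicInteger initRuns = new AtomicInteger();

    boolean initWithLock() {
        if (initialized) {
            return true; // first check, without the lock
        }
        lock.lock();
        try {
            if (initialized) {
                return true; // second check: another caller finished while we waited
            }
            initRuns.incrementAndGet(); // the real code would run the schema initialization here
            initialized = true;
            return true;
        } finally {
            lock.unlock();
        }
    }

    // Calls initWithLock from several threads at once and returns how often the init step ran.
    static int runConcurrently(int threads) {
        InitOnceSketch sketch = new InitOnceSketch();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                try {
                    start.await(); // line all threads up so they race for the lock
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                sketch.initWithLock();
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sketch.initRuns.get();
    }

    public static void main(String[] args) {
        System.out.println("init ran " + runConcurrently(8) + " time(s)");
    }
}
```

One point worth keeping in mind when reading HiveSchemaService: if a node fails to get the lock within LOCK_WAIT_TIME, executeWithLock throws, and the catch block falls back to initSchemaDirect without the lock. The once-only behaviour therefore appears to hold only while the wait time is long enough to cover another node's initialization.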

HiveServer2HandlerStrategy.java

java

package com.datasophon.worker.strategy;

import cn.hutool.core.io.FileUtil;

import com.datasophon.common.Constants;

import com.datasophon.common.cache.CacheUtils;

import com.datasophon.common.command.ServiceRoleOperateCommand;

import com.datasophon.common.enums.CommandType;

import com.datasophon.common.utils.ExecResult;

import com.datasophon.common.utils.PropertyUtils;

import com.datasophon.common.utils.ShellUtils;

import com.datasophon.worker.handler.ServiceHandler;

import com.datasophon.worker.service.HiveSchemaService;

import com.datasophon.worker.utils.KerberosUtils;

import java.util.ArrayList;

public class HiveServer2HandlerStrategy extends AbstractHandlerStrategy implements ServiceRoleStrategy {

public HiveServer2HandlerStrategy(String serviceName, String serviceRoleName) {

super(serviceName, serviceRoleName);

}

@Override

public ExecResult handler(ServiceRoleOperateCommand command) {

ExecResult startResult = new ExecResult();

final String workPath = Constants.INSTALL_PATH + Constants.SLASH + command.getDecompressPackageName();

ServiceHandler serviceHandler = new ServiceHandler(command.getServiceName(), command.getServiceRoleName());

if (command.getEnableRangerPlugin()) {

logger.info("start to enable hive hdfs plugin");

ArrayList<String> commands = new ArrayList<>();

commands.add("sh");

commands.add("./enable-hive-plugin.sh");

if (!FileUtil.exist(Constants.INSTALL_PATH + Constants.SLASH + command.getDecompressPackageName()

+ "/ranger-hive-plugin/success.id")) {

ExecResult execResult = ShellUtils.execWithStatus(Constants.INSTALL_PATH + Constants.SLASH

+ command.getDecompressPackageName() + "/ranger-hive-plugin", commands, 30L, logger);

if (execResult.getExecResult()) {

logger.info("enable ranger hive plugin success");

FileUtil.writeUtf8String("success", Constants.INSTALL_PATH + Constants.SLASH

+ command.getDecompressPackageName() + "/ranger-hive-plugin/success.id");

} else {

logger.info("enable ranger hive plugin failed");

return execResult;

}

}

}

logger.info("command is slave : {}", command.isSlave());

if (command.getCommandType().equals(CommandType.INSTALL_SERVICE) && !command.isSlave()) {

// 使用分布式锁初始化Hive database

logger.info("start to init hive schema with distributed lock");

// 先检查是否已初始化

if (HiveSchemaService.isSchemaInitialized(

Constants.INSTALL_PATH,

command.getDecompressPackageName())) {

logger.info("hive schema already initialized, skipping...");

} else {

// 使用Redis分布式锁进行初始化

boolean initSuccess = HiveSchemaService.initSchemaWithLock(

Constants.INSTALL_PATH,

command.getDecompressPackageName());

if (!initSuccess) {

logger.info("init hive schema failed or was executed by another node");

// 这里不返回失败,因为可能是其他节点正在初始化

// 可以记录日志并继续

} else {

logger.info("init hive schema success");

}

}

}

if (command.getEnableKerberos()) {

logger.info("start to get hive keytab file");

String hostname = CacheUtils.getString(Constants.HOSTNAME);

KerberosUtils.createKeytabDir();

if (!FileUtil.exist("/etc/security/keytab/hive.service.keytab")) {

KerberosUtils.downloadKeytabFromMaster("hive/" + hostname, "hive.service.keytab");

}

}

if (command.getCommandType().equals(CommandType.INSTALL_SERVICE)) {

String hadoopHome = PropertyUtils.getString("HADOOP_HOME");

ShellUtils.exceShell("sudo -u hdfs " + hadoopHome + "/bin/hdfs dfs -mkdir -p /user/hive/warehouse");

ShellUtils.exceShell("sudo -u hdfs " + hadoopHome + "/bin/hdfs dfs -mkdir -p /tmp/hive");

ShellUtils

.exceShell("sudo -u hdfs " + hadoopHome + "/bin/hdfs dfs -chown hive:hadoop /user/hive/warehouse");

ShellUtils.exceShell("sudo -u hdfs " + hadoopHome + "/bin/hdfs dfs -chown hive:hadoop /tmp/hive");

ShellUtils.exceShell("sudo -u hdfs " + hadoopHome + "/bin/hdfs dfs -chmod 777 /tmp/hive");

// 存在 tez 则创建软连接

final String tezHomePath = Constants.INSTALL_PATH + Constants.SLASH + "tez";

if (FileUtil.exist(tezHomePath)) {

ShellUtils.exceShell("ln -s " + tezHomePath + "/conf/tez-site.xml " + workPath + "/conf/tez-site.xml");

}

}

startResult = serviceHandler.start(command.getStartRunner(), command.getStatusRunner(),

command.getDecompressPackageName(), command.getRunAs());

return startResult;

}

}

The main change is in these lines:

java

if (command.getCommandType().equals(CommandType.INSTALL_SERVICE) && !command.isSlave()) {

// 使用分布式锁初始化Hive database

logger.info("start to init hive schema with distributed lock");

// 先检查是否已初始化

if (HiveSchemaService.isSchemaInitialized(

Constants.INSTALL_PATH,

command.getDecompressPackageName())) {

logger.info("hive schema already initialized, skipping...");

} else {

// 使用Redis分布式锁进行初始化

boolean initSuccess = HiveSchemaService.initSchemaWithLock(

Constants.INSTALL_PATH,

command.getDecompressPackageName());

if (!initSuccess) {

logger.info("init hive schema failed or was executed by another node");

// 这里不返回失败,因为可能是其他节点正在初始化

// 可以记录日志并继续

} else {

logger.info("init hive schema success");

}

}

}

The Hive schema database has no tables after initialization

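Fix: even with the Redis lock in place, the Hive database was still empty. Running the initialization by hand showed that the MySQL 8 driver was missing, while the JDBC driver class had already been switched to the MySQL 8 one. I downloaded the JDBC driver into hive-3.1.3/lib, rebuilt the tar package, and reinstalled.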
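A missing driver jar is easy to overlook when the package is rebuilt by hand, so a small pre-flight check on the lib directory can save a reinstall. The sketch below assumes the driver jar follows the usual MySQL Connector/J naming, that is, a file name starting with `mysql-connector` and ending in `.jar`. The class name `DriverJarCheck` and the default path are illustrative.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical pre-flight check; the file-name pattern is an assumption about Connector/J naming.
final class DriverJarCheck {

    // True for names such as mysql-connector-java-8.x.jar or mysql-connector-j-8.x.jar.
    static boolean isMysqlDriverJar(String fileName) {
        return fileName.startsWith("mysql-connector") && fileName.endsWith(".jar");
    }

    // Lists matching jar files directly under libDir; returns an empty list if libDir is not a readable directory.
    static List<String> findMysqlDriverJars(File libDir) {
        List<String> found = new ArrayList<>();
        File[] files = libDir.listFiles();
        if (files == null) {
            return found;
        }
        for (File f : files) {
            if (f.isFile() && isMysqlDriverJar(f.getName())) {
                found.add(f.getName());
            }
        }
        return found;
    }

    public static void main(String[] args) {
        File lib = new File(args.length > 0 ? args[0] : "/opt/datasophon/hive-3.1.3/lib");
        List<String> jars = findMysqlDriverJars(lib);
        System.out.println(jars.isEmpty() ? "no MySQL driver jar in " + lib : "found: " + jars);
    }
}
```

Running it against the unpacked hive-3.1.3/lib directory before building the tar package confirms that the driver made it into the archive.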

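Schema initialization fails on ddp2

Fix: after adding the driver, initialization succeeded on ddp1 but failed on ddp2, which puzzled me. Running the initialization command by itself on ddp2 showed that the hive user had not been created. I had to go back and change the step that creates the Hadoop users during the HDFS installation, adding the hive user to the existing ones, then rebuild and repackage datasophon-worker, remove YARN, HDFS, and Hive, and reinstall from scratch.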
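Before reinstalling, it is quick to confirm on each node which service accounts actually exist. The following sketch relies on the standard `id -u <user>` command returning a non-zero exit code for an unknown user. The class name `UserCheck` is hypothetical and the user list simply repeats the accounts mentioned in this post.

```java
import java.io.IOException;
import java.io.InputStream;

// Hypothetical per-node check for the OS accounts Hive and Hadoop run as.
final class UserCheck {

    // Returns true if `id -u <user>` exits with status 0, i.e. the OS account exists.
    static boolean userExists(String user) {
        ProcessBuilder pb = new ProcessBuilder("id", "-u", user);
        pb.redirectErrorStream(true); // merge stderr so a single drain loop is enough
        try {
            Process p = pb.start();
            try (InputStream in = p.getInputStream()) {
                byte[] buf = new byte[256];
                while (in.read(buf) != -1) {
                    // discard output; only the exit code matters
                }
            }
            return p.waitFor() == 0;
        } catch (IOException e) {
            return false; // the id command could not be started
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        for (String user : new String[] {"hdfs", "yarn", "mapred", "hive"}) {
            System.out.println(user + ": " + (userExists(user) ? "exists" : "MISSING"));
        }
    }
}
```

Draining the process output before waiting for the exit code avoids the situation where a child process blocks on a full pipe.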

The code is as follows:

java

package com.datasophon.worker.service;

import java.io.*;

import java.time.LocalDateTime;

import java.time.format.DateTimeFormatter;

import java.util.ArrayList;

import java.util.List;

public class CreateHadoopUsers {

private static final DateTimeFormatter dtf = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

public static void main(String[] args) {

System.out.println("=== 创建Hadoop用户(完整正确配置) ===");

try {

// 先清理(可选)

// cleanupExisting();

// 创建所有需要的组

createGroup("hadoop");

createGroup("hdfs");

createGroup("yarn");

createGroup("mapred");

// 创建用户(一次性正确配置)

createHadoopUser("hdfs", "hadoop", "hdfs");

createHadoopUser("yarn", "hadoop", "yarn");

createHadoopUser("mapred", "hadoop", "mapred");

// 新增:创建 hive 用户

createHadoopUser("hive", "hadoop", "hdfs"); // 主组 hadoop,附加组 hdfs(用于访问 HDFS)

// 详细验证

verifyCompleteSetup();

// 显示最终摘要

showFinalSummary();

log("=== Hadoop用户创建完成 ===");

} catch (Exception e) {

logError("程序异常: " + e.getMessage());

e.printStackTrace();

}

}

private static void log(String message) {

System.out.println("[" + LocalDateTime.now().format(dtf) + "] " + message);

}

private static void logError(String message) {

System.err.println("[" + LocalDateTime.now().format(dtf) + "] ERROR: " + message);

}

private static boolean executeCommand(List<String> command) throws Exception {

log("执行: " + String.join(" ", command));

ProcessBuilder pb = new ProcessBuilder(command);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

BufferedReader errorReader = new BufferedReader(new InputStreamReader(process.getErrorStream()));

StringBuilder output = new StringBuilder();

StringBuilder errorOutput = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

output.append(line).append("\n");

}

while ((line = errorReader.readLine()) != null) {

errorOutput.append(line).append("\n");

}

int exitCode = process.waitFor();

if (!output.toString().isEmpty()) {

log("输出: " + output.toString().trim());

}

if (!errorOutput.toString().isEmpty()) {

log("错误: " + errorOutput.toString().trim());

}

return exitCode == 0;

}

private static void cleanupExisting() throws Exception {

log("=== 清理现有配置 ===");

// 删除用户(包括 hive)

String[] users = {"hdfs", "yarn", "mapred", "hive"};

for (String user : users) {

log("清理用户: " + user);

List<String> command = new ArrayList<>();

command.add("userdel");

command.add("-r");

command.add("-f");

command.add(user);

executeCommand(command);

}

// 删除组(稍后创建)

String[] groups = {"hdfs", "yarn", "mapred", "hadoop"};

for (String group : groups) {

log("清理组: " + group);

List<String> command = new ArrayList<>();

command.add("groupdel");

command.add(group);

executeCommand(command);

}

}

private static void createGroup(String groupName) throws Exception {

log("创建组: " + groupName);

List<String> command = new ArrayList<>();

command.add("groupadd");

command.add(groupName);

if (executeCommand(command)) {

log("✓ 组创建成功: " + groupName);

} else {

log("提示: 组 " + groupName + " 可能已存在");

}

}

private static void createHadoopUser(String userName, String primaryGroup, String secondaryGroup) throws Exception {

log("创建用户: " + userName + " (主组: " + primaryGroup + ", 附加组: " + secondaryGroup + ")");

List<String> command = new ArrayList<>();

command.add("useradd");

command.add("-r"); // 系统用户

command.add("-m"); // 创建家目录

command.add("-s"); // 登录shell

command.add("/bin/bash");

command.add("-g"); // 主要组

command.add(primaryGroup);

command.add("-G"); // 附加组(可以有多个)

command.add(secondaryGroup);

command.add(userName);

if (executeCommand(command)) {

log("✓ 用户创建成功: " + userName);

showUserDetails(userName);

} else {

// 如果用户已存在,删除后重试

log("用户可能已存在,尝试删除后重新创建");

// 删除现有用户

List<String> delCommand = new ArrayList<>();

delCommand.add("userdel");

delCommand.add("-r");

delCommand.add("-f");

delCommand.add(userName);

executeCommand(delCommand);

// 重新创建

if (executeCommand(command)) {

log("✓ 用户重新创建成功: " + userName);

showUserDetails(userName);

} else {

logError("用户创建失败: " + userName);

}

}

}

private static void showUserDetails(String userName) throws Exception {

List<String> command = new ArrayList<>();

command.add("id");

command.add(userName);

ProcessBuilder pb = new ProcessBuilder(command);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log("用户信息: " + info);

}

// 显示家目录

command.clear();

command.add("ls");

command.add("-ld");

command.add("/home/" + userName);

pb = new ProcessBuilder(command);

process = pb.start();

reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String homeDir = reader.readLine();

process.waitFor();

if (homeDir != null) {

log("家目录: " + homeDir);

}

}

private static void verifyCompleteSetup() throws Exception {

log("=== 完整配置验证 ===");

// 更新:包含 hive 用户

String[][] userConfigs = {

{"hdfs", "hadoop", "hdfs"},

{"yarn", "hadoop", "yarn"},

{"mapred", "hadoop", "mapred"},

{"hive", "hadoop", "hdfs"} // hive 用户验证

};

boolean allCorrect = true;

for (String[] config : userConfigs) {

String userName = config[0];

String expectedPrimary = config[1];

String expectedSecondary = config[2];

log("验证用户: " + userName);

// 检查用户是否存在

List<String> checkCmd = new ArrayList<>();

checkCmd.add("id");

checkCmd.add(userName);

ProcessBuilder pb = new ProcessBuilder(checkCmd);

Process process = pb.start();

int exitCode = process.waitFor();

if (exitCode != 0) {

logError("✗ 用户不存在: " + userName);

allCorrect = false;

continue;

}

// 获取详细组信息

List<String> groupCmd = new ArrayList<>();

groupCmd.add("id");

groupCmd.add("-gn");

groupCmd.add(userName);

pb = new ProcessBuilder(groupCmd);

process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String primaryGroup = reader.readLine();

process.waitFor();

groupCmd.set(1, "-Gn");

pb = new ProcessBuilder(groupCmd);

process = pb.start();

reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String allGroups = reader.readLine();

process.waitFor();

// 验证

boolean primaryOk = expectedPrimary.equals(primaryGroup);

boolean secondaryOk = allGroups != null && allGroups.contains(expectedSecondary);

if (primaryOk && secondaryOk) {

log("✓ 配置正确: 主组=" + primaryGroup + ", 包含组=" + expectedSecondary);

} else {

logError("✗ 配置错误:");

if (!primaryOk) {

logError(" 期望主组: " + expectedPrimary + ", 实际主组: " + primaryGroup);

}

if (!secondaryOk) {

logError(" 期望包含组: " + expectedSecondary + ", 实际组: " + allGroups);

}

allCorrect = false;

}

}

// 验证所有组

log("验证所有组...");

String[] groups = {"hadoop", "hdfs", "yarn", "mapred"};

for (String group : groups) {

List<String> cmd = new ArrayList<>();

cmd.add("getent");

cmd.add("group");

cmd.add(group);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

int exitCode = process.waitFor();

if (exitCode == 0) {

log("✓ 组存在: " + group);

} else {

logError("✗ 组不存在: " + group);

allCorrect = false;

}

}

if (allCorrect) {

log("✓ 所有配置验证通过");

} else {

logError("存在配置问题,请检查上述错误");

}

}

private static void showFinalSummary() throws Exception {

log("=== 最终配置摘要 ===");

// 用户信息

log("用户信息:");

String[] users = {"hdfs", "yarn", "mapred", "hive"}; // 包含 hive

for (String user : users) {

List<String> cmd = new ArrayList<>();

cmd.add("id");

cmd.add(user);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

// 组信息

log("组信息:");

String[] groups = {"hadoop", "hdfs", "yarn", "mapred"};

for (String group : groups) {

List<String> cmd = new ArrayList<>();

cmd.add("getent");

cmd.add("group");

cmd.add(group);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

// 家目录

log("家目录:");

for (String user : users) {

List<String> cmd = new ArrayList<>();

cmd.add("ls");

cmd.add("-ld");

cmd.add("/home/" + user);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

// 额外:显示关键目录权限

log("关键目录权限:");

String[] importantDirs = {

"/opt/datasophon/hive-3.1.3",

"/opt/datasophon/hadoop-3.3.3",

"/user/hive/warehouse",

"/tmp/hive"

};

for (String dir : importantDirs) {

List<String> cmd = new ArrayList<>();

cmd.add("ls");

cmd.add("-ld");

cmd.add(dir);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

} else {

log(" 目录不存在: " + dir);

}

}

}

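}

HiveServer2 and HiveMetaStore start on ddp1 but fail on ddp2

I thought the previous problem would be the last one. It turned out that both services failed to start on ddp2. Tracing it showed that the status() function in control_hive.sh did not check correctly whether the process exists (it should be consistent with the script used earlier for the HDFS installation). The modified function is as follows: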

bash

status(){
  if [ -f "$pid" ]; then
    TARGET_PID=$(cat "$pid")
    # kill -0 sends no signal; it only tests whether the process exists
    if kill -0 "$TARGET_PID" 2>/dev/null; then
      echo "$command is running"
    else
      echo "$command is not running"
      exit 1
    fi
  else
    echo "$command pid file does not exist"
    exit 1
  fi
}

Conclusion

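Before installing Hive I did not expect much trouble, but it turned everything upside down with one problem after another. Fortunately I stuck with it. To get in touch: lita2lz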