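1. What is a distributed lock

1.1 Single-machine locks

Suppose you are writing a single-machine program in which two threads modify the same variable count at the same time: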

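cpp

int count = 0;

// Thread A
count++;

// Thread B
count++;

Without protection, count may end up incremented only once, because ++ is not an atomic operation (in C++ this is a data race, which is undefined behavior). The usual fix is std::mutex: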

cpp

std::mutex m;

m.lock();
count++;
m.unlock();

Effect: at any moment, only one thread can execute count++.
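To make the single-machine case concrete, here is a minimal sketch of a counter shared by several threads. The function name run_counter and its parameters are made up for illustration; it uses std::lock_guard rather than manual lock()/unlock() so the mutex is released even if the critical section exits early.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Increment a shared counter from several threads. The mutex turns each
// increment into a critical section, so no update is lost.
int run_counter(int thread_count, int increments_per_thread) {
    int count = 0;
    std::mutex m;
    std::vector<std::thread> workers;
    for (int t = 0; t < thread_count; ++t) {
        workers.emplace_back([&] {
            for (int i = 0; i < increments_per_thread; ++i) {
                std::lock_guard<std::mutex> guard(m);  // unlocks on scope exit
                ++count;
            }
        });
    }
    for (auto& w : workers) {
        w.join();  // all threads finish before count is read
    }
    return count;
}
```

Calling run_counter(4, 10000) should always yield 40000; removing the lock_guard line reintroduces the data race described above.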

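1.2 The distributed scenario

Now the program is deployed on 3 servers (process A, process B, process C), and all of them need to modify the same database record (for example, a stock count).

- A std::mutex taken by process A on its own machine only locks out threads inside A.
- Processes B and C have no idea that A is working on the record, so they modify the database at the same time.
- Result: stock is oversold and the data becomes inconsistent.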

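1.3 Distributed locks

A distributed lock is a mechanism that enforces mutually exclusive access to a shared resource across different processes or servers in a distributed system.

- Core goal: in a cluster, at most one client holds the lock at any moment.
- Common implementations: Redis (a very common choice), ZooKeeper, or a database.
- This module uses the Redis-based approach.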

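1.4 Properties a distributed lock must satisfy

- Mutual exclusion: at any moment, only one client can hold the lock.
- Deadlock freedom: even if the holder crashes, the lock is released automatically (through a timeout).
- Safety: only the client that acquired the lock may release it (no deleting someone else's lock by mistake).
- Fault tolerance: what happens if Redis itself goes down? (This simplified module does not handle that case.)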

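2. Implementing a distributed lock

2.1 Basic version: SETNX

Redis has a command SETNX (SET if Not eXists).

redis

SETNX lock_key unique_id

- If the key does not exist, the set succeeds and returns 1 (lock acquired).
- If the key already exists, the set fails and returns 0 (lock not acquired).

Problem: if the client crashes after taking the lock, nothing ever deletes the key, so the lock stays held forever (deadlock).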
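The SETNX behavior and its deadlock problem can be mimicked without a Redis server. The sketch below is a hypothetical in-memory stand-in (FakeRedis is not a real client API); it only models the return values described above.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical in-memory stand-in for a Redis server: just enough to
// mimic the return values of SETNX and DEL.
class FakeRedis {
public:
    // Returns 1 if the key was absent and is now set, 0 if it already existed.
    int setnx(const std::string& key, const std::string& value) {
        return store_.emplace(key, value).second ? 1 : 0;
    }

    // Returns the number of keys removed (0 or 1).
    int del(const std::string& key) {
        return static_cast<int>(store_.erase(key));
    }

private:
    std::unordered_map<std::string, std::string> store_;
};
```

If client A calls setnx and then "crashes" without calling del, every later setnx on the same key keeps returning 0. That is exactly the stuck-lock situation the next section addresses with an expiration time.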

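2.2 Improved version: expiration time

redis

SETNX lock_key unique_id
EXPIRE lock_key 30    # deleted automatically after 30 seconds

Problem: the two commands are not atomic. If SETNX succeeds and the program dies before EXPIRE runs, the key has no TTL and the lock is stuck again.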

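2.3 Standard version: atomic set

Redis 2.6.12+ supports extended options on SET:

redis

SET lock_key unique_id NX PX 30000

- NX: set only if the key does Not eXist.
- PX 30000: set the expiration time to 30000 milliseconds.
- Atomicity: the value and the expiration are applied together, or not at all.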
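The effect of combining NX and PX in one step can be modeled in memory. This is a sketch under assumptions: FakeRedisWithTtl is a hypothetical class, and time is passed in as a plain millisecond counter (now_ms) so that expiry is deterministic instead of depending on a real clock.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical in-memory model of `SET key value NX PX ttl`.
class FakeRedisWithTtl {
public:
    // Returns true if the lock was taken, false if a live key already exists.
    bool set_nx_px(const std::string& key, const std::string& value,
                   std::int64_t ttl_ms, std::int64_t now_ms) {
        auto it = store_.find(key);
        if (it != store_.end() && it->second.expires_at_ms > now_ms) {
            return false;  // key exists and has not expired: lock is held
        }
        // Value and expiry are written in one step, so there is no window
        // in which the key exists without a TTL.
        store_[key] = Entry{value, now_ms + ttl_ms};
        return true;
    }

private:
    struct Entry {
        std::string value;
        std::int64_t expires_at_ms;
    };
    std::unordered_map<std::string, Entry> store_;
};
```

With a 30000 ms lease taken at time 0, a second client is rejected at time 29999 and succeeds at time 30000, even though the first client never released the lock.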

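2.4 Safe release: Lua script

When releasing the lock, a plain DEL is not safe: the lock may already have expired and been taken by another client.

The release must first check that the value stored under the key is this client's own unique_id.

Lua

-- Redis runs the whole script atomically
if redis.call("GET", KEYS[1]) == ARGV[1] then
    return redis.call("DEL", KEYS[1])
else
    return 0
end

2.5 Reliability: the watchdog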

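What if the business logic runs for 40 seconds but the lock expires after 30?

- Mechanism: start a background thread that wakes up every 10 seconds (1/3 of the lease).
- Action: if this client still holds the lock, reset the expiration back to 30 seconds.
- In this module: the watchdogLoop function.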
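The renewal loop described above can be sketched as a small standalone class. This is an illustration, not the module's watchdogLoop: the names Watchdog and renewal_interval are hypothetical, and the renew callback stands in for "extend the TTL if the key still holds my id".

```cpp
#include <atomic>
#include <chrono>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Renewal interval used in the text: one third of the lease.
inline std::chrono::milliseconds renewal_interval(std::chrono::milliseconds lease) {
    return lease / 3;
}

// Hypothetical watchdog: calls `renew` once per interval until stopped
// or until `renew` reports that the lock is no longer ours.
class Watchdog {
public:
    Watchdog(std::chrono::milliseconds interval, std::function<bool()> renew)
        : interval_(interval), renew_(std::move(renew)),
          worker_([this] { run(); }) {}

    ~Watchdog() { stop(); }

    void stop() {
        {
            // Set the flag under the mutex so the worker cannot miss the wakeup.
            std::lock_guard<std::mutex> guard(mutex_);
            stopped_ = true;
        }
        cv_.notify_all();
        if (worker_.joinable()) worker_.join();
    }

    int renewals() const { return renewals_.load(); }

private:
    void run() {
        std::unique_lock<std::mutex> lock(mutex_);
        // wait_for returns the predicate's value: true means stop was requested,
        // false means the interval elapsed and it is time to renew.
        while (!cv_.wait_for(lock, interval_, [this] { return stopped_; })) {
            if (!renew_()) break;  // lock lost: nothing left to renew
            ++renewals_;
        }
    }

    std::chrono::milliseconds interval_;
    std::function<bool()> renew_;
    std::mutex mutex_;
    std::condition_variable cv_;
    bool stopped_ = false;
    std::atomic<int> renewals_{0};
    std::thread worker_;  // declared last so it starts after the other members exist
};
```

Waiting on a condition variable instead of sleeping lets stop() interrupt the wait immediately, so releasing the lock does not have to wait out the rest of a 10-second interval.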

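3. The DistributedLock class

3.1 Class members

| Member | Type | Purpose | Notes |
|---|---|---|---|
| lockName_ | std::string | Key name in Redis | Private member |
| lockId_ | std::string | Unique identifier (32-character random hex string) | Produced by generateLockId() |
| locked_ | std::atomic<bool> | Local flag: does this object hold the lock | Atomic, read without locking |
| holdCount_ | std::atomic<int> | Reentrancy count: how many times the same thread has locked | Supports nested locking |
| ownerThreadId_ | std::thread::id | Records which thread took the lock | Prevents release from another thread |
| watchdogThread_ | std::unique_ptr<std::thread> | Background renewal thread | Started by startWatchdog() |
| acquireCallback_ | std::function | Injection point: performs the actual Redis SET | Dependency injection |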
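To illustrate why the Redis operations are injected as std::function callbacks, here is a hedged sketch of a test double. InMemoryBackend and the two aliases are hypothetical names; the callback shapes follow the (key, value, ttl_ms) and (key, value) conventions used by this module. The release callback performs the same compare-then-delete check as the Lua script in 2.4.

```cpp
#include <functional>
#include <string>
#include <unordered_map>

using AcquireFn = std::function<bool(const std::string&, const std::string&, long)>;
using ReleaseFn = std::function<bool(const std::string&, const std::string&)>;

// Hypothetical test double, not a Redis client. It must outlive the
// callbacks it hands out, because they capture `this`.
struct InMemoryBackend {
    std::unordered_map<std::string, std::string> store;

    AcquireFn acquire() {
        return [this](const std::string& key, const std::string& value, long /*ttl_ms*/) {
            return store.emplace(key, value).second;  // NX semantics; TTL ignored here
        };
    }

    ReleaseFn release() {
        return [this](const std::string& key, const std::string& value) {
            auto it = store.find(key);
            if (it == store.end() || it->second != value) {
                return false;  // key missing or owned by another id: do not delete
            }
            store.erase(it);
            return true;
        };
    }
};
```

Because the lock class only sees the callbacks, the same locking logic can be exercised against this in-memory store in unit tests and against a real Redis client in production.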

3.2 核心流程

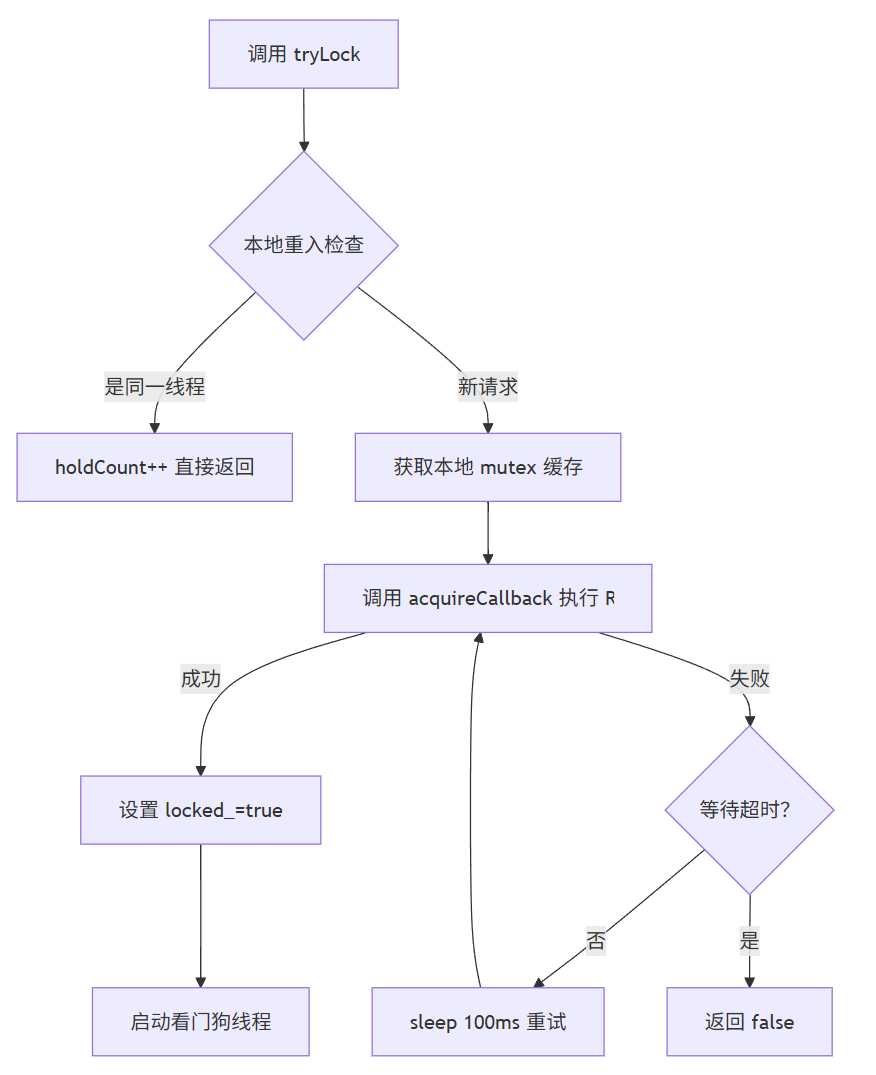

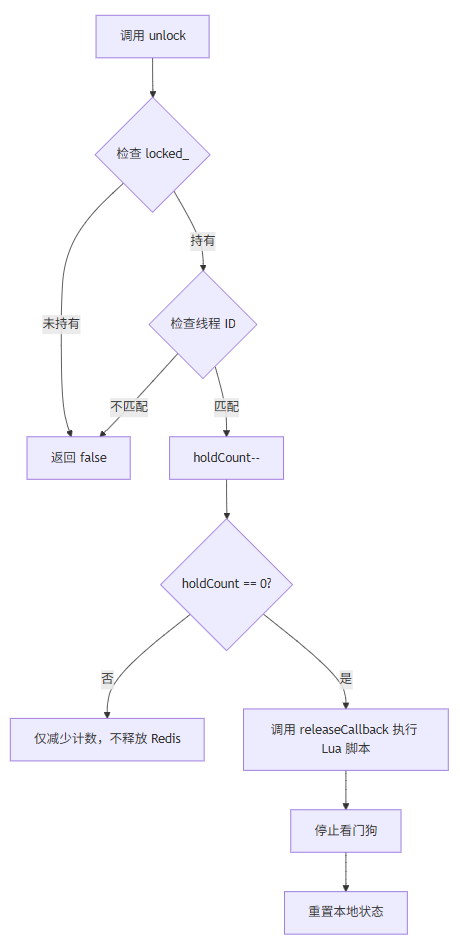

3.2.1 加锁流程

3.2.2 解锁流程

3.3 源码

3.3.1 distributed_lock.h

cpp

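/**
 * @file distributed_lock.h
 * @brief Distributed lock module header (simplified version)
 *
 * [Module overview]
 * A Redis-based distributed lock supporting four lock types:
 * - REENTRANT: reentrant lock (the same thread may acquire it repeatedly)
 * - READ:      read lock (shared; multiple readers run concurrently)
 * - WRITE:     write lock (exclusive; excludes readers and writers)
 * - FAIR:      fair lock (acquired in FIFO order)
 *
 * [Core mechanisms]
 * 1. Lock identifier (lockId): prevents releasing a lock held by someone else
 * 2. Watchdog: a background thread renews the lease periodically so that a
 *    slow business operation does not lose the lock
 * 3. Local cache: a per-key std::mutex inside the process reduces Redis round trips
 * 4. Callback injection: Redis operations are supplied as callbacks, which
 *    simplifies testing and adapting to different clients
 *
 * [Usage example]
 * @code
 * // Basic usage
 * DistributedLock lock("order:123");
 * if (lock.lock()) {
 *     try {
 *         // Critical section
 *         processOrder();
 *     } catch (...) {
 *         lock.unlock();  // make sure the lock is released on exceptions
 *         throw;
 *     }
 *     lock.unlock();
 * }
 *
 * // Bounded wait + custom lease
 * if (lock.tryLock(5000, 20000)) {  // wait up to 5 s, lease for 20 s
 *     // Business logic...
 *     lock.unlock();
 * }
 *
 * // Inject the Redis operations (bridge to a real client)
 * lock.setRedisCallbacks(
 *     [](const std::string& k, const std::string& v, long ttl) {
 *         return redis_setnx_px(k, v, ttl);         // atomic set
 *     },
 *     [](const std::string& k, const std::string& v) {
 *         return redis_del_if_match(k, v);          // Lua script: check, then delete
 *     },
 *     [](const std::string& k, const std::string& v, long ttl) {
 *         return redis_pexpire_if_match(k, v, ttl); // renewal
 *     }
 * );
 * @endcode
 *
 * @author DFS Team
 * @version 1.0
 */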

#ifndef DISTRIBUTED_LOCK_H

#define DISTRIBUTED_LOCK_H

#include <string>

#include <memory>

#include <mutex>

#include <condition_variable>

#include <atomic>

#include <thread>

#include <chrono>

#include <functional>

#include <unordered_map>

namespace dfs {

namespace redis {

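/**
 * @enum LockType
 * @brief Lock type: selects the concurrency semantics of the lock
 * [Selection guide]
 * - REENTRANT is enough for most use cases
 * - Read/write separation: READ for read-heavy paths, WRITE for writers
 * - Strong fairness requirements: FAIR (slightly slower)
 */
enum class LockType {
    REENTRANT,  // Reentrant lock: the same thread may acquire repeatedly; counted internally
    READ,       // Read lock: shared mode, concurrent readers, excludes writers
    WRITE,      // Write lock: exclusive mode, excludes readers and other writers
    FAIR        // Fair lock: requests queue in order, avoiding thread starvation
};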

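/**
 * @class DistributedLock
 * @brief Core distributed lock class
 *
 * [Thread safety]
 * - Public methods are intended to be callable from multiple threads
 * - State flags are std::atomic; note that ownerThreadId_ is a plain member,
 *   so concurrent lock/unlock on one object from different threads is not
 *   fully synchronized
 * - mutex + condition_variable implement blocking and wakeup
 * - The static caches are protected by cacheMutex_
 *
 * [State transitions]
 * Not held -> tryAcquireLock() succeeds -> locked_=true, holdCount_=1 -> business logic runs
 * Held     -> unlock() -> holdCount_-- -> the Redis lock is released when the count reaches zero
 *
 * [Error handling]
 * - Every method reports success or failure through a bool; callers must
 *   check it and decide whether to retry or degrade
 */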

class DistributedLock {

public:

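    // ==================== Default constants ====================
    // Note: before C++17, odr-using these constants (for example binding one
    // to a const reference) also requires an out-of-class definition.

    /// Redis key prefix; keeps lock names of different businesses from colliding
    static constexpr const char* DEFAULT_LOCK_PREFIX = "distributed:lock:";

    /// Default wait time: 0 ms = return immediately
    static constexpr long DEFAULT_WAIT_TIME_MS = 0L;

    /// Default lease: 30 seconds, released automatically on expiry
    static constexpr long DEFAULT_LEASE_TIME_MS = 30000L;

    /// Retry interval: avoids busy waiting and reduces load on Redis
    static constexpr long DEFAULT_RETRY_INTERVAL_MS = 100L;

    /**
     * @brief Constructor
     * @param lockName    Business lock name without the prefix, e.g. "order:123"
     * @param lockType    Lock type, REENTRANT by default
     * @param lockPrefix  Redis key prefix, "distributed:lock:" by default
     * @param waitTimeMs  Maximum time to wait for the lock (ms); 0 = return immediately, -1 = wait indefinitely
     * @param leaseTimeMs Lock lease (ms); the lock expires automatically afterwards, so it should be >= the expected business time
     *
     * @note A unique lockId is generated at construction and used to verify ownership on release
     */
    DistributedLock(const std::string& lockName,
                    LockType lockType = LockType::REENTRANT,
                    const std::string& lockPrefix = DEFAULT_LOCK_PREFIX,
                    long waitTimeMs = DEFAULT_WAIT_TIME_MS,
                    long leaseTimeMs = DEFAULT_LEASE_TIME_MS);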

    // Destructor
    ~DistributedLock();

    // Copying is disabled
    DistributedLock(const DistributedLock&) = delete;
    DistributedLock& operator=(const DistributedLock&) = delete;

    // Moving is allowed
    DistributedLock(DistributedLock&& other) noexcept;
    DistributedLock& operator=(DistributedLock&& other) noexcept;

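    /**
     * @brief 1. Acquire the lock, blocking indefinitely
     * @return true on success, false on failure (e.g. a Redis error)
     * @note Calls tryLock(-1) internally and starts the watchdog on success
     */
    bool lock();

    /**
     * @brief 2. Try to acquire the lock within a time limit (uses the lease configured at construction)
     * @param waitTimeMs Maximum wait (ms); 0 = return immediately, -1 = wait indefinitely
     * @return true on success, false on timeout or lost competition
     */
    bool tryLock(long waitTimeMs = 0);

    /**
     * @brief 3. Try to acquire the lock within a time limit, with an explicit lease
     * @param waitTimeMs  Wait time (ms)
     * @param leaseTimeMs Lease time (ms), overriding the constructor setting
     * @return true on success, false on failure
     * @note With a lease <= 0 no watchdog is started; the business logic must finish within the lease
     */
    bool tryLock(long waitTimeMs, long leaseTimeMs);

    /**
     * @brief 4. Release the lock
     * @return true on success, false if the lock is not held or is held by another thread
     * @note A reentrant lock releases the Redis lock only when holdCount reaches zero
     */
    bool unlock();

    /**
     * @brief 5. Force-release the lock (skips the ownership check)
     * @note Use with care! This may release a lock held by someone else; intended for error recovery only
     */
    void forceUnlock();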

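    // 6. Is the lock held by the current thread?
    bool isHeldByCurrentThread() const;

    // 7. Is the lock held? (local state only, not the live state in Redis)
    bool isLocked() const;

    // 8. Reentrancy count; returns 0 when the lock is not held
    int getHoldCount() const;

    // 9. Full Redis key name of the lock (including the prefix)
    const std::string& getLockName() const;

    // 10. Lock type
    LockType getLockType() const;

    // 11. Unique lock identifier (the value stored in Redis, used to prevent wrong deletes)
    const std::string& getLockId() const;

    /**
     * @brief 12. How long the lock has been held
     * @return Milliseconds; 0 when the lock is not held
     * @note Based on steady_clock, so unaffected by system clock adjustments
     */
    long getHoldTime() const;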

    /**
     * @brief 13. Set the Redis operation callbacks (the dependency injection point)
     * @param acquireFunc Acquire: (key, value, ttl_ms) -> bool
     * @param releaseFunc Release: (key, value) -> bool
     * @param renewFunc   Renew:   (key, value, ttl_ms) -> bool
     *
     * @note
     * - Callbacks should be thread-safe and atomic (Lua scripts are recommended)
     * - Returning false signals failure; the lock will retry or report failure
     * - Without callbacks, doAcquire and friends run in a local test mode that does not contact Redis
     */
    void setRedisCallbacks(
        std::function<bool(const std::string&, const std::string&, long)> acquireFunc,
        std::function<bool(const std::string&, const std::string&)> releaseFunc,
        std::function<bool(const std::string&, const std::string&, long)> renewFunc
    );

private:

    // ==================== Member variables ====================

    // --- Configuration and identity ---
    std::string lockName_;    // Full Redis key: prefix + businessName
    std::string lockId_;      // Unique identifier: 32 random hex characters, prevents wrong release
    LockType lockType_;       // Lock type: selects the acquire/release logic
    std::string lockPrefix_;  // Redis key prefix
    long waitTimeMs_;         // Default wait time (ms)
    long leaseTimeMs_;        // Default lease (ms)

    // --- State (atomics, read without locking) ---
    std::atomic<bool> locked_;       // Whether this object currently holds the lock
    std::atomic<int> holdCount_;     // Reentrancy count
    std::atomic<long> acquireTime_;  // steady_clock timestamp of acquisition (ms)
    std::thread::id ownerThreadId_;  // ID of the thread holding the lock (not atomic)

    // --- Thread synchronization ---
    std::mutex mutex_;            // Used together with the condition_variable
    std::condition_variable cv_;  // Wait/wakeup mechanism, avoids busy waiting

    // --- Watchdog ---
    std::unique_ptr<std::thread> watchdogThread_;  // Background renewal thread
    std::atomic<bool> watchdogRunning_;            // Watchdog running flag

    // --- Redis callbacks (dependency injection) ---
    std::function<bool(const std::string&, const std::string&, long)> acquireCallback_;
    std::function<bool(const std::string&, const std::string&)> releaseCallback_;
    std::function<bool(const std::string&, const std::string&, long)> renewCallback_;

    // --- Static caches (shared within the process, protected by cacheMutex_) ---
    // These maps are process-local: they do not coordinate readers, writers,
    // or queues across different processes.

    /// Local lock cache: lockName -> std::mutex*, reduces Redis contention within one process
    static std::unordered_map<std::string, std::mutex*> localLockCache_;

    /// Mutex protecting the caches
    static std::mutex cacheMutex_;

    /// Fair lock wait queue: lockName -> condition_variable*
    static std::unordered_map<std::string, std::condition_variable*> fairLockQueue_;

    /// Fair lock wait count: lockName -> number of waiting threads
    static std::unordered_map<std::string, std::atomic<int>> fairLockCount_;

    /// Write lock flag: lockName -> whether a write lock is held
    static std::unordered_map<std::string, std::atomic<bool>> writeLockHeld_;

    /// Read lock count: lockName -> number of read locks currently held
    static std::unordered_map<std::string, std::atomic<int>> readLockCount_;

    // ==================== Private methods ====================

    /// @brief Generate a unique lockId: 32 random hexadecimal characters
    std::string generateLockId();

    /// @brief Get the local mutex for this key: cached and reused
    std::mutex* getLocalLock();

    /// @brief Try to acquire: dispatches on lockType
    bool tryAcquireLock();

    /// @brief Release: dispatches on lockType
    bool releaseLock();

    /// @brief Start the watchdog thread, which renews the lease periodically
    void startWatchdog(long leaseTimeMs);

    /// @brief Stop the watchdog: set the flag, wake it up, join
    void stopWatchdog();

    /// @brief Watchdog main loop: sleep -> renew -> check
    void watchdogLoop(long leaseTimeMs);

    // --- Acquire implementations for the four lock types ---
    bool doAcquireReentrantLock();  // Reentrant lock
    bool doAcquireReadLock();       // Read lock: checks that no write lock is held
    bool doAcquireWriteLock();      // Write lock: checks that no read or write lock is held
    bool doAcquireFairLock();       // Fair lock: queued acquisition

    // --- Release implementations for the four lock types ---
    bool doReleaseReentrantLock();  // Reentrant lock
    bool doReleaseReadLock();       // Read lock: decrements the read count
    bool doReleaseWriteLock();      // Write lock: wakes waiters
    bool doReleaseFairLock();       // Fair lock: wakes the next waiter

    /// @brief Renew the lease by calling renewCallback
    bool doRenewLock(long leaseTimeMs);
};

} // namespace redis
} // namespace dfs

#endif // DISTRIBUTED_LOCK_H

3.3.2 distributed_lock.cpp

cpp

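/**
 * @file distributed_lock.cpp
 * @brief Distributed lock module implementation
 *
 * [Implementation notes]
 * 1. All Redis operations are injected as callbacks; this file contains no Redis client code
 * 2. Thread safety: atomics combined with mutex + condition_variable
 * 3. Error handling: methods return bool and do not throw (simplified design)
 * 4. Cleanup: the destructor stops the watchdog so it never touches a destroyed object
 */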

#include "distributed_lock.h"

#include <sstream>  // string stream (building the lockId)
#include <random>   // random number generation
#include <iomanip>  // hex formatting
#include <utility>  // std::move

namespace dfs {

namespace redis {

// ============================================================================
// Static member definitions (initialized outside the class)
// ============================================================================

std::unordered_map<std::string, std::mutex*> DistributedLock::localLockCache_;

std::mutex DistributedLock::cacheMutex_;

std::unordered_map<std::string, std::condition_variable*> DistributedLock::fairLockQueue_;

std::unordered_map<std::string, std::atomic<int>> DistributedLock::fairLockCount_;

std::unordered_map<std::string, std::atomic<bool>> DistributedLock::writeLockHeld_;

std::unordered_map<std::string, std::atomic<int>> DistributedLock::readLockCount_;

// ============================================================================
// Constructor / destructor / move semantics
// ============================================================================

/**
 * @brief Constructor
 *
 * [Initialization steps]
 * 1. The member initializer list sets the basic parameters
 * 2. Build the full Redis key: prefix + lockName
 * 3. Generate the unique lockId (32 random hex characters)
 * 4. Initialize the atomic state: locked_=false, holdCount_=0, and so on
 */
DistributedLock::DistributedLock(const std::string& lockName,
                                 LockType lockType,
                                 const std::string& lockPrefix,
                                 long waitTimeMs,
                                 long leaseTimeMs)
    : lockType_(lockType),
      lockPrefix_(lockPrefix),
      waitTimeMs_(waitTimeMs),
      leaseTimeMs_(leaseTimeMs),
      locked_(false),             // lock not held initially
      holdCount_(0),              // reentrancy count is 0
      acquireTime_(0),            // no timestamp yet
      watchdogRunning_(false) {   // watchdog not started
    // Build the Redis key, e.g. "distributed:lock:order:123"
    lockName_ = lockPrefix_ + lockName;

    // Generate the unique identifier used to verify ownership on release
    lockId_ = generateLockId();

    // Reset the owner (a default-constructed id means "no thread")
    ownerThreadId_ = std::thread::id();
}

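/**
 * @brief Destructor
 *
 * [Cleanup order]
 * 1. Stop the watchdog thread first, so it cannot touch destroyed members
 * 2. If the lock is still held, force-release it (guards against a forgotten unlock)
 *
 * @note In production, consider logging a warning for locks that were not released normally
 */
DistributedLock::~DistributedLock() {
    stopWatchdog();      // 1. stop the background thread
    if (locked_) {       // 2. still holding the lock
        forceUnlock();   //    force-release to avoid leaking it
    }
}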

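/**
 * @brief Move constructor
 * @note Transfers ownership and leaves the source in a safe, unlocked state.
 *       The source's watchdog thread was started with the source object as
 *       its `this`, so it is stopped here and a fresh one is started on the
 *       new object; moving the std::thread alone would leave it running
 *       against the moved-from object. The restarted watchdog uses
 *       leaseTimeMs_, since a per-call lease is not stored.
 */
DistributedLock::DistributedLock(DistributedLock&& other) noexcept
    : lockType_(other.lockType_),
      waitTimeMs_(other.waitTimeMs_),
      leaseTimeMs_(other.leaseTimeMs_),
      locked_(false),
      holdCount_(0),
      acquireTime_(0),
      watchdogRunning_(false) {
    // Stop the source's watchdog before touching any state it reads
    const bool hadWatchdog = other.watchdogRunning_.load();
    other.stopWatchdog();

    lockName_ = std::move(other.lockName_);
    lockId_ = std::move(other.lockId_);
    lockPrefix_ = std::move(other.lockPrefix_);
    locked_ = other.locked_.load();
    holdCount_ = other.holdCount_.load();
    acquireTime_ = other.acquireTime_.load();
    ownerThreadId_ = other.ownerThreadId_;
    acquireCallback_ = std::move(other.acquireCallback_);
    releaseCallback_ = std::move(other.releaseCallback_);
    renewCallback_ = std::move(other.renewCallback_);

    // Put the source into a "moved-from" state so its destructor releases nothing
    other.locked_ = false;
    other.holdCount_ = 0;

    if (locked_ && hadWatchdog) {
        startWatchdog(leaseTimeMs_);  // watchdog now bound to this object
    }
}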

/**
 * @brief Move assignment
 * @note Cleans up this object's resources first, then takes over the
 *       source's state. As in the move constructor, the source's watchdog
 *       is stopped and restarted here instead of moving the thread object.
 */
DistributedLock& DistributedLock::operator=(DistributedLock&& other) noexcept {
    if (this != &other) {  // self-assignment check
        // 1. Clean up current resources
        stopWatchdog();
        if (locked_) {
            forceUnlock();
        }

        // 2. Stop the source's watchdog, then take over its state
        const bool hadWatchdog = other.watchdogRunning_.load();
        other.stopWatchdog();

        lockName_ = std::move(other.lockName_);
        lockId_ = std::move(other.lockId_);
        lockType_ = other.lockType_;
        lockPrefix_ = std::move(other.lockPrefix_);
        waitTimeMs_ = other.waitTimeMs_;
        leaseTimeMs_ = other.leaseTimeMs_;
        locked_ = other.locked_.load();
        holdCount_ = other.holdCount_.load();
        acquireTime_ = other.acquireTime_.load();
        ownerThreadId_ = other.ownerThreadId_;
        acquireCallback_ = std::move(other.acquireCallback_);
        releaseCallback_ = std::move(other.releaseCallback_);
        renewCallback_ = std::move(other.renewCallback_);

        // 3. Reset the source so its destructor does not release the lock again
        other.locked_ = false;
        other.holdCount_ = 0;

        // 4. Resume renewal on this object if the lock came with a watchdog
        if (locked_ && hadWatchdog) {
            startWatchdog(leaseTimeMs_);
        }
    }
    return *this;
}

// ============================================================================
// Core lock operations
// ============================================================================

/**
 * @brief Blocking acquire: delegates to tryLock(-1)
 */
bool DistributedLock::lock() {
    return tryLock(-1);  // -1 means wait indefinitely
}

/**
 * @brief Timed acquire (uses the configured lease)
 * @note Delegates to the two-argument overload to share the logic
 */
bool DistributedLock::tryLock(long waitTimeMs) {
    return tryLock(waitTimeMs, leaseTimeMs_);
}

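/**
 * @brief Core acquire logic: time limit + explicit lease
 *
 * [Algorithm]
 * 1. Compute the deadline: set only when waitTime > 0
 * 2. Take the local mutex: threads in the same process compete locally first
 * 3. Loop:
 *    a. Call tryAcquireLock() (includes the reentrancy check)
 *    b. On success, start the watchdog and return true
 *    c. On failure with waitTime == 0, return false immediately
 *    d. Before the deadline, wait_for and retry, avoiding a busy loop
 *
 * [Thread safety]
 * - The local mutex serializes acquisition attempts within the process; it is
 *   released when this function returns, so it does not guard the critical section
 * - Correctness across processes comes from the atomic Redis operation behind
 *   acquireCallback (SET ... NX PX), not from the local atomics
 * - condition_variable avoids spinning
 *
 * @note The doAcquire* helpers pass leaseTimeMs_ (the constructor value) to
 *       the acquire callback; the leaseTimeMs argument only reaches the watchdog.
 */
bool DistributedLock::tryLock(long waitTimeMs, long leaseTimeMs) {
    // 1. Compute the deadline (steady_clock is immune to wall-clock changes)
    auto startTime = std::chrono::steady_clock::now();
    auto deadline = (waitTimeMs > 0)
        ? startTime + std::chrono::milliseconds(waitTimeMs)
        : std::chrono::steady_clock::time_point::max();  // wait indefinitely

    // 2. Fetch the process-local mutex for this key
    std::mutex* localLock = getLocalLock();
    std::unique_lock<std::mutex> localLockGuard(*localLock, std::defer_lock);

    // 3. Try the local mutex; failure means another thread in this process
    //    is already competing for the same key
    if (!localLockGuard.try_lock()) {
        // Immediate mode: do not wait
        if (waitTimeMs == 0) return false;

        // Already past the deadline?
        auto now = std::chrono::steady_clock::now();
        if (now >= deadline) return false;

        // Otherwise fall through and let the loop handle the waiting. In that
        // case this thread proceeds without owning the local mutex.
    }

    // 4. Main loop: try to acquire the distributed lock
    while (true) {
        // 4.1 Attempt (reentrancy check + competition in Redis)
        if (tryAcquireLock()) {
            // 4.2 Success: start the watchdog for automatic renewal
            startWatchdog(leaseTimeMs);
            return true;
        }

        // 4.3 Failure: return at once in immediate mode
        if (waitTimeMs == 0) {
            return false;
        }

        // 4.4 Deadline check
        auto now = std::chrono::steady_clock::now();
        if (now >= deadline) {
            return false;
        }

        // 4.5 Not timed out: wait, then retry (no busy loop)
        std::unique_lock<std::mutex> lock(mutex_);
        // wait_for returns on timeout or when cv_ is notified
        cv_.wait_for(lock, std::chrono::milliseconds(DEFAULT_RETRY_INTERVAL_MS));
        // Loop again and retry
    }
}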

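/**
 * @brief Release the lock
 *
 * [Checks]
 * 1. locked_: return false if the lock is not held
 * 2. Thread ID: return false if a thread other than the owner calls this
 * 3. Decrement the reentrancy count; while it is above zero only the count changes
 * 4. At zero: releaseLock() + stop the watchdog + reset state
 *
 * @return true on success, false if not held or called from a non-owner thread
 */
bool DistributedLock::unlock() {
    // 1. Is the lock held at all?
    if (!locked_) {
        return false;
    }

    // 2. Ownership check: thread A must not release thread B's lock
    if (std::this_thread::get_id() != ownerThreadId_) {
        return false;
    }

    // 3. Decrement the reentrancy count
    --holdCount_;

    // 4. Release the Redis lock only when the count reaches zero
    if (holdCount_ == 0) {
        releaseLock();   // Redis release logic
        stopWatchdog();  // stop the renewal thread

        // Reset local state
        locked_ = false;
        ownerThreadId_ = std::thread::id();
        acquireTime_ = 0;
    }

    return true;
}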

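/**
 * @brief Force-release the lock (skips the ownership check)
 * @note Use with care! This may release a lock held by someone else; intended for error recovery only
 */
void DistributedLock::forceUnlock() {
    releaseLock();   // Redis release
    stopWatchdog();  // stop the watchdog

    // Reset all state, whatever it was before
    locked_ = false;
    holdCount_ = 0;
    ownerThreadId_ = std::thread::id();
    acquireTime_ = 0;
}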

// ============================================================================
// State queries (read-only)
// ============================================================================

bool DistributedLock::isHeldByCurrentThread() const {
    // Both conditions must hold: locked_ is true and the thread ID matches
    return locked_ && std::this_thread::get_id() == ownerThreadId_;
}

bool DistributedLock::isLocked() const {
    // Local state only, not the live state in Redis.
    // An exact answer needs a Redis GET on the key (one network round trip).
    return locked_;
}

int DistributedLock::getHoldCount() const {
    return holdCount_;  // atomic load, no locking
}

const std::string& DistributedLock::getLockName() const {
    return lockName_;  // const reference, no copy
}

LockType DistributedLock::getLockType() const {
    return lockType_;
}

const std::string& DistributedLock::getLockId() const {
    return lockId_;
}

/**
 * @brief Compute how long the lock has been held
 * @note Uses steady_clock, so clock rollbacks or jumps have no effect
 */
long DistributedLock::getHoldTime() const {
    if (!locked_ || acquireTime_ == 0) {
        return 0;  // not held: duration is 0
    }

    auto now = std::chrono::steady_clock::now();
    // Current steady_clock timestamp (ms)
    auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(
        now.time_since_epoch()).count();

    return duration - acquireTime_;  // the difference is the hold time
}

/**
 * @brief Set the Redis callbacks (the dependency injection point)
 * @note std::move avoids copying the function objects
 */
void DistributedLock::setRedisCallbacks(
    std::function<bool(const std::string&, const std::string&, long)> acquireFunc,
    std::function<bool(const std::string&, const std::string&)> releaseFunc,
    std::function<bool(const std::string&, const std::string&, long)> renewFunc) {
    acquireCallback_ = std::move(acquireFunc);
    releaseCallback_ = std::move(releaseFunc);
    renewCallback_ = std::move(renewFunc);
}

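// ============================================================================
// Private helpers
// ============================================================================

/**
 * @brief Generate a unique lockId
 * @return 32 random hexadecimal characters, e.g. "a1b2c3d4e5f6..."
 *
 * [Purpose]
 * - On release, the value stored in Redis is compared against the lockId
 * - Prevents client A from deleting a lock that client B now holds
 *   (for example after A's lease expired during a network delay)
 * - The 128 bits are drawn directly from std::random_device. Seeding a
 *   std::mt19937 with a single rd() value would limit the ID space to 2^32.
 */
std::string DistributedLock::generateLockId() {
    std::random_device rd;  // non-deterministic where the platform provides it

    std::ostringstream oss;
    oss << std::hex << std::setfill('0');  // zero-padded hexadecimal output

    for (int i = 0; i < 4; ++i) {  // 4 x 32 bits = 128 bits = 32 hex characters
        oss << std::setw(8) << (rd() & 0xFFFFFFFFu);
    }

    return oss.str();
}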

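/**
 * @brief Get the local mutex for this key (cached and reused)
 *
 * [Cache layout]
 * - key: lockName_ (the full Redis key)
 * - value: std::mutex* (heap-allocated)
 * - protected by: the global cacheMutex_
 *
 * [Notes]
 * - The local mutex only reduces contention within one process; across
 *   processes Redis is still required
 * - Entries are never evicted, so a long-running process with many distinct
 *   keys should watch memory (an LRU could be added in production)
 */
std::mutex* DistributedLock::getLocalLock() {
    std::lock_guard<std::mutex> guard(cacheMutex_);  // protects the cache

    auto it = localLockCache_.find(lockName_);
    if (it != localLockCache_.end()) {
        return it->second;  // cache hit
    }

    // Cache miss: create a new mutex (heap-allocated, lives until the program exits)
    auto* mutex = new std::mutex();
    localLockCache_[lockName_] = mutex;
    return mutex;
}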

/**
 * @brief Try to acquire the lock: dispatches on lockType
 */
bool DistributedLock::tryAcquireLock() {
    switch (lockType_) {
        case LockType::REENTRANT:
            return doAcquireReentrantLock();
        case LockType::READ:
            return doAcquireReadLock();
        case LockType::WRITE:
            return doAcquireWriteLock();
        case LockType::FAIR:
            return doAcquireFairLock();
        default:
            return doAcquireReentrantLock();  // fall back to the reentrant lock
    }
}

/**
 * @brief Release the lock: dispatches on lockType
 */
bool DistributedLock::releaseLock() {
    switch (lockType_) {
        case LockType::REENTRANT:
            return doReleaseReentrantLock();
        case LockType::READ:
            return doReleaseReadLock();
        case LockType::WRITE:
            return doReleaseWriteLock();
        case LockType::FAIR:
            return doReleaseFairLock();
        default:
            return doReleaseReentrantLock();
    }
}

// ============================================================================
// Acquire implementations for the four lock types
// ============================================================================

/**
 * @brief Acquire a reentrant lock
 *
 * [Reentrancy]
 * - If the current thread already holds the lock: holdCount++ and return true (no Redis call)
 * - Otherwise: compete for the Redis lock through acquireCallback
 *
 * [State update]
 * - On success: locked_=true, holdCount_=1, record the thread ID and timestamp
 */
bool DistributedLock::doAcquireReentrantLock() {
    // 1. Reentrancy check: same thread acquiring again
    if (locked_ && std::this_thread::get_id() == ownerThreadId_) {
        ++holdCount_;  // only bump the count, no Redis call
        return true;
    }

    // 2. Compete for the Redis lock
    bool acquired = false;
    if (acquireCallback_) {
        // Injected Redis operation: SET key value NX PX ttl
        acquired = acquireCallback_(lockName_, lockId_, leaseTimeMs_);
    } else {
        // Test mode: succeed unless this object already holds the lock
        // (a real implementation would ask Redis)
        acquired = !locked_;
    }

    // 3. Success: update local state
    if (acquired) {
        locked_ = true;
        holdCount_ = 1;  // first acquisition
        ownerThreadId_ = std::this_thread::get_id();
        acquireTime_ = std::chrono::duration_cast<std::chrono::milliseconds>(
            std::chrono::steady_clock::now().time_since_epoch()).count();
    }

    return acquired;
}

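/**
 * @brief Acquire a read lock (shared)
 *
 * [Concurrency rules]
 * - Several read locks may be held at once
 * - Mutually exclusive with the write lock: readers wait while a writer holds it
 *
 * [Implementation notes]
 * - Check writeLockHeld_: fail if a write lock is held
 * - On success, readLockCount_++
 * - Simplification: the write flag and read count are process-local, and every
 *   reader uses the same Redis key, so with a real SET NX callback a second
 *   reader on that key fails until the first releases it
 */
bool DistributedLock::doAcquireReadLock() {
    // 1. Reentrancy check
    if (locked_ && std::this_thread::get_id() == ownerThreadId_) {
        ++holdCount_;
        return true;
    }

    // 2. Is a write lock held?
    bool writeHeld = false;
    {
        std::lock_guard<std::mutex> guard(cacheMutex_);

        // Create the static map entries on first access
        if (writeLockHeld_.find(lockName_) == writeLockHeld_.end()) {
            writeLockHeld_[lockName_] = false;
        }
        if (readLockCount_.find(lockName_) == readLockCount_.end()) {
            readLockCount_[lockName_] = 0;
        }

        writeHeld = writeLockHeld_[lockName_].load();
    }

    // 3. A held write lock means this attempt fails
    if (writeHeld) {
        return false;
    }

    // 4. Compete in Redis (a full implementation would check for a writer
    //    and increment the reader count atomically, e.g. in a Lua script)
    bool acquired = false;
    if (acquireCallback_) {
        acquired = acquireCallback_(lockName_, lockId_, leaseTimeMs_);
    } else {
        acquired = true;  // test mode
    }

    // 5. Success: update local state and bump the read count
    if (acquired) {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        readLockCount_[lockName_]++;  // read count + 1

        locked_ = true;
        holdCount_ = 1;
        ownerThreadId_ = std::this_thread::get_id();
        acquireTime_ = std::chrono::duration_cast<std::chrono::milliseconds>(
            std::chrono::steady_clock::now().time_since_epoch()).count();
    }

    return acquired;
}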

/**
 * @brief Acquire a write lock (exclusive)
 *
 * [Concurrency rules]
 * - Mutually exclusive with both read and write locks
 * - Acquisition requires that no read lock and no write lock is held
 *
 * [Implementation notes]
 * - Check writeLockHeld_ and readLockCount_
 * - On success, writeLockHeld_=true
 * - The check in step 2 and the update in step 5 take cacheMutex_ separately,
 *   so two threads can both pass the check; the Redis call in between is what
 *   actually arbitrates
 */
bool DistributedLock::doAcquireWriteLock() {
    // 1. Reentrancy check
    if (locked_ && std::this_thread::get_id() == ownerThreadId_) {
        ++holdCount_;
        return true;
    }

    // 2. Is any other lock held?
    bool writeHeld = false;
    int readCount = 0;
    {
        std::lock_guard<std::mutex> guard(cacheMutex_);

        if (writeLockHeld_.find(lockName_) == writeLockHeld_.end()) {
            writeLockHeld_[lockName_] = false;
        }
        if (readLockCount_.find(lockName_) == readLockCount_.end()) {
            readLockCount_[lockName_] = 0;
        }

        writeHeld = writeLockHeld_[lockName_].load();
        readCount = readLockCount_[lockName_].load();
    }

    // 3. Any read or write lock means this attempt fails
    if (writeHeld || readCount > 0) {
        return false;
    }

    // 4. Compete in Redis
    bool acquired = false;
    if (acquireCallback_) {
        acquired = acquireCallback_(lockName_, lockId_, leaseTimeMs_);
    } else {
        acquired = true;  // test mode
    }

    // 5. Success: update state and mark the write lock as held
    if (acquired) {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        writeLockHeld_[lockName_] = true;  // write lock now held

        locked_ = true;
        holdCount_ = 1;
        ownerThreadId_ = std::this_thread::get_id();
        acquireTime_ = std::chrono::duration_cast<std::chrono::milliseconds>(
            std::chrono::steady_clock::now().time_since_epoch()).count();
    }

    return acquired;
}

/**
 * @brief Acquire a fair lock (FIFO)
 *
 * [About this implementation]
 * - Simplified: fairLockCount_ only records the number of waiters; there is no strict queue
 * - For production, a Redis LIST plus a Lua script is the suggested route to real FIFO ordering
 *
 * [Current logic]
 * - waiter count++ -> attempt -> count-- on success and on failure alike
 * - On release, notify_one wakes one waiter
 */
bool DistributedLock::doAcquireFairLock() {
    // 1. Reentrancy check
    if (locked_ && std::this_thread::get_id() == ownerThreadId_) {
        ++holdCount_;
        return true;
    }

    // 2. Create or fetch the condition variable
    //    (fetched but never waited on in this simplified version)
    std::condition_variable* cv = nullptr;
    {
        std::lock_guard<std::mutex> guard(cacheMutex_);

        if (fairLockQueue_.find(lockName_) == fairLockQueue_.end()) {
            fairLockQueue_[lockName_] = new std::condition_variable();
        }
        if (fairLockCount_.find(lockName_) == fairLockCount_.end()) {
            fairLockCount_[lockName_] = 0;
        }

        fairLockCount_[lockName_]++;  // waiter count + 1
        cv = fairLockQueue_[lockName_];
    }
    (void)cv;  // silence the unused-variable warning

    // 3. Compete in Redis (a real queue would first check that we are at its head)
    bool acquired = false;
    if (acquireCallback_) {
        acquired = acquireCallback_(lockName_, lockId_, leaseTimeMs_);
    } else {
        acquired = !locked_;  // simplified check
    }

    // 4. Update the waiter count and the state
    if (acquired) {
        locked_ = true;
        holdCount_ = 1;
        ownerThreadId_ = std::this_thread::get_id();
        acquireTime_ = std::chrono::duration_cast<std::chrono::milliseconds>(
            std::chrono::steady_clock::now().time_since_epoch()).count();

        std::lock_guard<std::mutex> guard(cacheMutex_);
        fairLockCount_[lockName_]--;  // acquired: waiter count - 1
    } else {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        fairLockCount_[lockName_]--;  // failed: waiter count - 1 as well
    }

    return acquired;
}

// ============================================================================
// Release implementations for the four lock types
// ============================================================================

bool DistributedLock::doReleaseReentrantLock() {
    bool released = false;
    if (releaseCallback_) {
        // Lua script: if redis.call("GET", key) == value then return redis.call("DEL", key) end
        released = releaseCallback_(lockName_, lockId_);
    } else {
        released = true;  // test mode
    }
    return released;
}

bool DistributedLock::doReleaseReadLock() {
    bool released = false;
    if (releaseCallback_) {
        released = releaseCallback_(lockName_, lockId_);
    } else {
        released = true;  // test mode
    }

    // Released: decrement the read count
    if (released) {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        readLockCount_[lockName_]--;
        // Note: releasing a read lock does not wake writers; they have to poll
    }

    return released;
}

bool DistributedLock::doReleaseWriteLock() {
    bool released = false;
    if (releaseCallback_) {
        released = releaseCallback_(lockName_, lockId_);
    } else {
        released = true;  // test mode
    }

    if (released) {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        writeLockHeld_[lockName_] = false;  // clear the write flag

        // Wake one waiting fair-lock thread (simplified)
        if (fairLockQueue_.find(lockName_) != fairLockQueue_.end()) {
            fairLockQueue_[lockName_]->notify_one();
        }
    }

    return released;
}

bool DistributedLock::doReleaseFairLock() {
    bool released = false;
    if (releaseCallback_) {
        released = releaseCallback_(lockName_, lockId_);
    } else {
        released = true;  // test mode
    }

    if (released) {
        std::lock_guard<std::mutex> guard(cacheMutex_);
        // Wake the next waiter (true FIFO needs a Redis-side queue; simplified here)
        if (fairLockQueue_.find(lockName_) != fairLockQueue_.end()) {
            fairLockQueue_[lockName_]->notify_one();
        }
    }

    return released;
}

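// ============================================================================
// Watchdog
// ============================================================================

/**
 * @brief Start the watchdog thread
 * @param leaseTimeMs Lease time (ms); the renewal interval is derived from it as leaseTimeMs / 3
 *
 * [Threading model]
 * - A dedicated std::thread runs watchdogLoop
 * - The atomic flag watchdogRunning_ controls start and stop
 * - A condition_variable allows a prompt, clean exit
 */
void DistributedLock::startWatchdog(long leaseTimeMs) {
    if (leaseTimeMs <= 0) {
        return;  // no lease to renew; also avoids a zero-length wait loop
    }
    if (watchdogRunning_) {
        return;  // already running
    }

    watchdogRunning_ = true;
    // Create the background thread bound to the member function
    watchdogThread_ = std::make_unique<std::thread>(&DistributedLock::watchdogLoop, this, leaseTimeMs);
}

/**
 * @brief Stop the watchdog
 *
 * [Shutdown steps]
 * 1. Set watchdogRunning_=false while holding mutex_
 * 2. notify_all to wake any waiting thread
 * 3. join() until the thread has really exited, so it never outlives the object
 */
void DistributedLock::stopWatchdog() {
    {
        // 1. Flip the flag under mutex_. Without the lock, the watchdog could
        //    check its predicate, miss this notification, and sleep for a
        //    full renewal interval before noticing.
        std::lock_guard<std::mutex> guard(mutex_);
        watchdogRunning_ = false;
    }
    cv_.notify_all();  // 2. wake waiting threads

    // 3. Wait for the thread to exit (if joinable)
    if (watchdogThread_ && watchdogThread_->joinable()) {
        watchdogThread_->join();  // blocks until the thread ends
    }
}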

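/**
 * @brief Watchdog main loop
 * @param leaseTimeMs Lease time (ms) to re-apply on each renewal
 *
 * [Loop logic]
 * 1. renewalInterval = leaseTimeMs / 3 (renew early, leaving a buffer)
 * 2. while (running and lock held):
 *    a. wait_for(renewalInterval): sleep, or wake early on notify
 *    b. If the exit condition holds: break
 *    c. Otherwise call doRenewLock()
 *
 * [Error handling]
 * - A failed renewal does not throw, so the watchdog itself never crashes
 * - The business side is expected to monitor via isLocked() or log alerts
 */
void DistributedLock::watchdogLoop(long leaseTimeMs) {
    long renewalInterval = leaseTimeMs / 3;  // renew at 1/3 of the lease, 2/3 as buffer
    if (renewalInterval < 1) {
        renewalInterval = 1;  // never spin with a zero-length wait
    }

    while (watchdogRunning_ && locked_) {
        std::unique_lock<std::mutex> lock(mutex_);

        // The predicate overload of wait_for returns the predicate's value:
        // true means the exit condition holds, false means the interval elapsed
        if (cv_.wait_for(lock, std::chrono::milliseconds(renewalInterval),
                         [this] { return !watchdogRunning_ || !locked_; })) {
            break;  // stop requested or lock already released
        }

        // Still holding the lock and still running: renew
        if (locked_ && watchdogRunning_) {
            doRenewLock(leaseTimeMs);  // renew through the callback
        }
    }
}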

/**
 * @brief Renew the lease by calling renewCallback
 * @note A failed renewal does not throw; the caller is responsible for monitoring
 */
bool DistributedLock::doRenewLock(long leaseTimeMs) {
    if (renewCallback_) {
        // PEXPIRE key ttl (after checking that the value is still ours)
        return renewCallback_(lockName_, lockId_, leaseTimeMs);
    }
    return true;  // test mode
}

} // namespace redis
} // namespace dfs

4. Usage example

4.1 Directory layout

text

.
├── bloom_filter.cpp
├── bloom_filter.h
├── distributed_lock.cpp
├── distributed_lock.h
├── local_cache.cpp
├── local_cache.h
├── redis_cache.cpp
├── redis_cache.h
├── redis_connection.cpp
├── redis_connection.h
├── redis_connection_pool.cpp
└── redis_connection_pool.h

4.2 Source code

cpp

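/**
 * @file distributed_lock_example.cpp
 * @brief Complete usage example for the distributed lock module
 * @author DFS Team
 * @date 2025-02-18
 *
 * [Contents]
 * 1. Basic distributed lock usage (reentrant lock)
 * 2. Read/write lock scenario
 * 3. Fair lock example
 * 4. Practical use together with RedisCache
 * 5. Multi-level caching together with LocalCache
 * 6. Guarding against cache penetration with BloomFilter
 * 7. Multi-threaded concurrency test
 */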

#include "distributed_lock.h"
#include "redis_cache.h"
#include "redis_connection_pool.h"
#include "local_cache.h"
#include "bloom_filter.h"

#include <cstdint>   // int64_t
#include <iostream>
#include <string>
#include <thread>
#include <vector>
#include <chrono>
#include <memory>
#include <sstream>
#include <iomanip>

using namespace dfs::redis;

// Shared handles used by all examples; they stay null if initialization fails
std::shared_ptr<RedisConnectionPool> g_redisPool;
std::shared_ptr<RedisCache> g_redisCache;
std::shared_ptr<LocalCache> g_localCache;
std::shared_ptr<BloomFilter> g_bloomFilter;

void initRedisEnvironment() {
    RedisConfig config;
    config.host = "127.0.0.1";
    config.port = 6379;
    config.password = "";
    config.database = 0;
    config.connectionTimeout = 5000;
    config.socketTimeout = 3000;

    g_redisPool = std::make_shared<RedisConnectionPool>(config, 10, 2);
    if (!g_redisPool->init()) {
        std::cerr << "[ERROR] Failed to initialize the Redis connection pool" << std::endl;
        return;
    }

    g_redisCache = std::make_shared<RedisCache>(g_redisPool);
    g_localCache = std::make_shared<LocalCache>(10000, 300);

    BloomFilterConfig bfConfig;
    bfConfig.expectedItems = 1000000;
    bfConfig.falsePositiveRate = 0.01;
    g_bloomFilter = std::make_shared<BloomFilter>(bfConfig);

    std::cout << "[INFO] Redis environment initialized" << std::endl;
}

void example1_basicReentrantLock() {
    std::cout << "\n========== Example 1: basic reentrant lock ==========" << std::endl;

    DistributedLock lock("order:12345", LockType::REENTRANT);

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            // ttl is in milliseconds and is divided down to whole seconds here,
            // so a lease below 1000 ms becomes 0
            return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
        }
        return true;  // no Redis available: behave as a local test
    };

    auto releaseCallback = [](const std::string& key, const std::string& value) -> bool {
        if (g_redisCache) {
            // Delete the key only if it still holds our lock id
            std::string script = R"(
                if redis.call("GET", KEYS[1]) == ARGV[1] then
                    return redis.call("DEL", KEYS[1])
                else
                    return 0
                end
            )";
            int64_t result = 0;
            std::vector<std::string> keys = {key};
            std::vector<std::string> args = {value};
            g_redisCache->executeLuaScript(script, keys, args, result);
            return result == 1;
        }
        return true;
    };

    auto renewCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            // Extend the TTL only if the key still holds our lock id
            std::string script = R"(
                if redis.call("GET", KEYS[1]) == ARGV[1] then
                    return redis.call("PEXPIRE", KEYS[1], ARGV[2])
                else
                    return 0
                end
            )";
            int64_t result = 0;
            std::vector<std::string> keys = {key};
            std::vector<std::string> args = {value, std::to_string(ttl)};
            g_redisCache->executeLuaScript(script, keys, args, result);
            return result == 1;
        }
        return true;
    };

    lock.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);

    if (lock.tryLock(5000, 30000)) {
        std::cout << "[SUCCESS] Lock acquired: " << lock.getLockName() << std::endl;
        std::cout << "  - Lock ID: " << lock.getLockId() << std::endl;
        std::cout << "  - Lock Type: REENTRANT" << std::endl;
        std::cout << "  - Hold Count: " << lock.getHoldCount() << std::endl;

        // Same thread locks again: only the hold count changes
        if (lock.lock()) {
            std::cout << "[SUCCESS] Re-entered the lock, Hold Count: " << lock.getHoldCount() << std::endl;
        }

        std::this_thread::sleep_for(std::chrono::milliseconds(100));

        lock.unlock();
        std::cout << "[INFO] First release, Hold Count: " << lock.getHoldCount() << std::endl;

        lock.unlock();
        std::cout << "[INFO] Second release, lock fully released" << std::endl;
    } else {
        std::cout << "[FAILED] Could not acquire the lock" << std::endl;
    }
}

void example2_readWriteLock() {
    std::cout << "\n========== Example 2: Read/write lock ==========" << std::endl;

    DistributedLock readLock1("resource:data", LockType::READ);
    DistributedLock readLock2("resource:data", LockType::READ);
    DistributedLock writeLock("resource:data", LockType::WRITE);

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
        }
        return true;  // no Redis connection: report success so the demo still runs
    };

    // Simplified for the demo: a plain DEL skips the ownership check from
    // section 2.4, so it can remove a key that another client now holds.
    auto releaseCallback = [](const std::string& key, const std::string& /*value*/) -> bool {
        if (g_redisCache) {
            return g_redisCache->del(key);
        }
        return true;
    };

    // No-op renewal: the lease is never extended in this example.
    auto renewCallback = [](const std::string& /*key*/, const std::string& /*value*/, long /*ttl*/) -> bool {
        return true;
    };

    readLock1.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);
    readLock2.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);
    writeLock.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);

    if (readLock1.tryLock(1000, 30000)) {
        std::cout << "[SUCCESS] Read lock 1 acquired" << std::endl;

        if (readLock2.tryLock(1000, 30000)) {
            std::cout << "[SUCCESS] Read lock 2 acquired (read locks can be held concurrently)" << std::endl;
            readLock2.unlock();
        }

        if (!writeLock.tryLock(100)) {
            std::cout << "[EXPECTED] Write lock not acquired (a read lock is held, so the writer must wait)" << std::endl;
        } else {
            // Do not leave the write lock held if the attempt unexpectedly succeeds.
            std::cout << "[UNEXPECTED] Write lock acquired while a read lock is held" << std::endl;
            writeLock.unlock();
        }

        readLock1.unlock();
        std::cout << "[INFO] Read lock released" << std::endl;
    }

    if (writeLock.tryLock(1000, 30000)) {
        std::cout << "[SUCCESS] Write lock acquired" << std::endl;
        writeLock.unlock();
    }
}

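void example3_fairLock() {
    std::cout << "\n========== Example 3: Fair lock ==========" << std::endl;

    DistributedLock fairLock("queue:task", LockType::FAIR);

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
        }
        return true;  // no Redis connection: report success so the demo still runs
    };

    // Release with the ownership check from section 2.4 instead of a plain DEL,
    // so an expired lock taken over by another client is not deleted.
    auto releaseCallback = [](const std::string& key, const std::string& value) -> bool {
        if (g_redisCache) {
            std::string script = R"(
                if redis.call("GET", KEYS[1]) == ARGV[1] then
                    return redis.call("DEL", KEYS[1])
                else
                    return 0
                end
            )";
            int64_t result = 0;
            std::vector<std::string> keys = {key};
            std::vector<std::string> args = {value};
            g_redisCache->executeLuaScript(script, keys, args, result);
            return result == 1;
        }
        return true;
    };

    // The third callback is a no-op renewal.
    fairLock.setRedisCallbacks(acquireCallback, releaseCallback, [](auto, auto, auto) { return true; });

    if (fairLock.tryLock(5000, 30000)) {
        std::cout << "[SUCCESS] Fair lock acquired: " << fairLock.getLockName() << std::endl;
        std::cout << " - Lock ID: " << fairLock.getLockId() << std::endl;

        std::this_thread::sleep_for(std::chrono::milliseconds(50));

        fairLock.unlock();
        std::cout << "[INFO] Fair lock released" << std::endl;
    }
}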

void example4_withRedisCache() {
    std::cout << "\n========== Example 4: Stock deduction with RedisCache ==========" << std::endl;

    if (!g_redisCache) {
        std::cout << "[WARN] Redis is not connected, skipping this example" << std::endl;
        return;
    }

    std::string stockKey = "stock:product:1001";
    g_redisCache->set(stockKey, "100");

    // The lock name is used as the Redis key (section 3.1), so it must differ
    // from the data key: SET NX on the already existing stock key would fail.
    DistributedLock lock("lock:stock:product:1001", LockType::REENTRANT);

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
    };

    // Release: delete the key only if it still holds our lock ID.
    auto releaseCallback = [](const std::string& key, const std::string& value) -> bool {
        std::string script = R"(
            if redis.call("GET", KEYS[1]) == ARGV[1] then
                return redis.call("DEL", KEYS[1])
            else
                return 0
            end
        )";
        int64_t result = 0;
        std::vector<std::string> keys = {key};
        std::vector<std::string> args = {value};
        g_redisCache->executeLuaScript(script, keys, args, result);
        return result == 1;
    };

    // No-op renewal: the read-modify-write below is expected to finish well
    // within the 10000 ms lease.
    auto renewCallback = [](const std::string& /*key*/, const std::string& /*value*/, long /*ttl*/) -> bool {
        return true;
    };

    lock.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);

    if (lock.tryLock(3000, 10000)) {
        std::cout << "[SUCCESS] Stock lock acquired, deducting stock" << std::endl;

        // Read, check, and write back while holding the lock.
        auto stockValue = g_redisCache->get(stockKey);
        if (stockValue.has_value()) {
            int stock = std::stoi(stockValue.value());
            if (stock > 0) {
                stock--;
                g_redisCache->set(stockKey, std::to_string(stock));
                std::cout << "[INFO] Stock deducted, remaining: " << stock << std::endl;
            } else {
                std::cout << "[WARN] Out of stock" << std::endl;
            }
        }

        lock.unlock();
        std::cout << "[INFO] Stock lock released" << std::endl;
    } else {
        std::cout << "[FAILED] Could not acquire the stock lock" << std::endl;
    }
}

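void example5_multiLevelCache() {
    std::cout << "\n========== Example 5: Multi-level cache + distributed lock ==========" << std::endl;

    if (!g_bloomFilter || !g_localCache) {
        std::cout << "[WARN] Local cache or Bloom filter not initialized, skipping this example" << std::endl;
        return;
    }

    std::string cacheKey = "user:info:10086";

    g_bloomFilter->add(cacheKey);
    std::cout << "[INFO] Added to Bloom filter: " << cacheKey << std::endl;

    // Step 1: the Bloom filter rejects keys that definitely do not exist.
    if (!g_bloomFilter->mightContain(cacheKey)) {
        std::cout << "[INFO] Bloom filter reports the key as absent, returning" << std::endl;
        return;
    }

    // Step 2: L1 local cache.
    auto localValue = g_localCache->get(cacheKey);
    if (localValue.has_value()) {
        std::cout << "[INFO] L1 local cache hit: " << localValue.value() << std::endl;
        return;
    }

    // Step 3: on a miss, take a lock so that only one caller rebuilds the cache.
    DistributedLock lock("cache:user:10086", LockType::REENTRANT);

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
        }
        return true;  // no Redis connection: report success so the demo still runs
    };

    // Release with the ownership check from section 2.4 instead of a plain DEL.
    auto releaseCallback = [](const std::string& key, const std::string& value) -> bool {
        if (g_redisCache) {
            std::string script = R"(
                if redis.call("GET", KEYS[1]) == ARGV[1] then
                    return redis.call("DEL", KEYS[1])
                else
                    return 0
                end
            )";
            int64_t result = 0;
            std::vector<std::string> keys = {key};
            std::vector<std::string> args = {value};
            g_redisCache->executeLuaScript(script, keys, args, result);
            return result == 1;
        }
        return true;
    };

    lock.setRedisCallbacks(acquireCallback, releaseCallback, [](auto, auto, auto) { return true; });

    if (lock.tryLock(3000, 10000)) {
        std::cout << "[SUCCESS] Cache rebuild lock acquired" << std::endl;

        // Stand-in for a database query.
        std::string userData = R"({"id":10086,"name":"张三","age":25})";

        g_localCache->set(cacheKey, userData, 300);
        std::cout << "[INFO] Data written to the L1 local cache" << std::endl;

        if (g_redisCache) {
            g_redisCache->set("redis:" + cacheKey, userData, 600);
            std::cout << "[INFO] Data written to the L2 Redis cache" << std::endl;
        }

        lock.unlock();
        std::cout << "[INFO] Cache rebuild lock released" << std::endl;
    }

    auto finalValue = g_localCache->get(cacheKey);
    if (finalValue.has_value()) {
        std::cout << "[SUCCESS] Final read from the local cache: " << finalValue.value() << std::endl;
    }
}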

void example6_concurrentTest() {
    std::cout << "\n========== Example 6: Multithreaded contention test ==========" << std::endl;

    const int threadCount = 5;
    std::vector<std::thread> threads;
    std::atomic<int> successCount{0};
    std::atomic<int> failCount{0};

    auto acquireCallback = [](const std::string& key, const std::string& value, long ttl) -> bool {
        if (g_redisCache) {
            return g_redisCache->setNx(key, value, static_cast<int>(ttl / 1000));
        }
        return true;  // no Redis connection: report success so the demo still runs
    };

    // Release: delete the key only if it still holds this lock's ID.
    auto releaseCallback = [](const std::string& key, const std::string& value) -> bool {
        if (g_redisCache) {
            std::string script = R"(
                if redis.call("GET", KEYS[1]) == ARGV[1] then
                    return redis.call("DEL", KEYS[1])
                else
                    return 0
                end
            )";
            int64_t result = 0;
            std::vector<std::string> keys = {key};
            std::vector<std::string> args = {value};
            g_redisCache->executeLuaScript(script, keys, args, result);
            return result == 1;
        }
        return true;
    };

    // No-op renewal.
    auto renewCallback = [](const std::string& /*key*/, const std::string& /*value*/, long /*ttl*/) -> bool {
        return true;
    };

    // Each thread creates its own DistributedLock object for the same lock
    // name, so the threads compete like separate clients would.
    auto worker = [&](int threadId) {
        DistributedLock lock("concurrent:test", LockType::REENTRANT);
        lock.setRedisCallbacks(acquireCallback, releaseCallback, renewCallback);

        if (lock.tryLock(2000, 5000)) {
            successCount++;
            std::cout << "[Thread-" << threadId << "] Lock acquired" << std::endl;

            std::this_thread::sleep_for(std::chrono::milliseconds(100));

            lock.unlock();
            std::cout << "[Thread-" << threadId << "] Lock released" << std::endl;
        } else {
            failCount++;
            std::cout << "[Thread-" << threadId << "] Could not acquire the lock" << std::endl;
        }
    };

    for (int i = 0; i < threadCount; ++i) {
        threads.emplace_back(worker, i);
    }

    for (auto& t : threads) {
        t.join();
    }

    std::cout << "\n[Summary] Succeeded: " << successCount << ", failed: " << failCount << std::endl;
}

void example7_lockStatus() {
    std::cout << "\n========== Example 7: Lock status monitoring ==========" << std::endl;

    DistributedLock lock("monitor:test", LockType::REENTRANT);

    // This example never touches Redis: every callback simply reports success,
    // so only the lock's local bookkeeping is exercised.
    auto acquireCallback = [](const std::string& /*key*/, const std::string& /*value*/, long /*ttl*/) -> bool {
        return true;
    };

    auto releaseCallback = [](const std::string& /*key*/, const std::string& /*value*/) -> bool {
        return true;
    };

    lock.setRedisCallbacks(acquireCallback, releaseCallback, [](auto, auto, auto) { return true; });

    std::cout << "[Status] Before acquiring:" << std::endl;
    std::cout << " - isLocked: " << (lock.isLocked() ? "true" : "false") << std::endl;
    std::cout << " - isHeldByCurrentThread: " << (lock.isHeldByCurrentThread() ? "true" : "false") << std::endl;
    std::cout << " - holdCount: " << lock.getHoldCount() << std::endl;
    std::cout << " - holdTime: " << lock.getHoldTime() << "ms" << std::endl;

    if (lock.lock()) {
        std::cout << "\n[Status] After acquiring:" << std::endl;
        std::cout << " - isLocked: " << (lock.isLocked() ? "true" : "false") << std::endl;
        std::cout << " - isHeldByCurrentThread: " << (lock.isHeldByCurrentThread() ? "true" : "false") << std::endl;
        std::cout << " - holdCount: " << lock.getHoldCount() << std::endl;
        std::cout << " - holdTime: " << lock.getHoldTime() << "ms" << std::endl;
        std::cout << " - lockName: " << lock.getLockName() << std::endl;
        std::cout << " - lockId: " << lock.getLockId() << std::endl;

        std::this_thread::sleep_for(std::chrono::milliseconds(100));

        std::cout << "\n[Status] After 100 ms:" << std::endl;
        std::cout << " - holdTime: " << lock.getHoldTime() << "ms" << std::endl;

        lock.unlock();
    }
}

void example8_bloomFilterIntegration() {
    std::cout << "\n========== Example 8: Bloom filter integration ==========" << std::endl;

    if (!g_bloomFilter) {
        std::cout << "[WARN] Bloom filter not initialized, skipping this example" << std::endl;
        return;
    }

    std::vector<std::string> validIds = {"user:1001", "user:1002", "user:1003"};
    for (const auto& id : validIds) {
        g_bloomFilter->add(id);
    }
    std::cout << "[INFO] Added valid user IDs to the Bloom filter" << std::endl;

    // Check the Bloom filter first; take a lock only for IDs that may exist.
    auto checkAndLock = [](const std::string& userId) -> bool {
        if (!g_bloomFilter->mightContain(userId)) {
            std::cout << "[BLOOM] " << userId << " definitely does not exist, skipping the query" << std::endl;
            return false;
        }

        std::cout << "[BLOOM] " << userId << " may exist, continuing" << std::endl;

        DistributedLock lock("user:query:" + userId, LockType::REENTRANT);

        // Callbacks that always succeed: this example does not touch Redis.
        auto acquireCallback = [](const std::string& /*key*/, const std::string& /*value*/, long /*ttl*/) -> bool {
            return true;
        };

        auto releaseCallback = [](const std::string& /*key*/, const std::string& /*value*/) -> bool {
            return true;
        };

        lock.setRedisCallbacks(acquireCallback, releaseCallback, [](auto, auto, auto) { return true; });

        if (lock.tryLock(1000, 5000)) {
            std::cout << "[LOCK] " << userId << " query lock acquired" << std::endl;
            lock.unlock();
            return true;
        }

        return false;
    };

    checkAndLock("user:1001");  // was added above, so the filter lets it through
    checkAndLock("user:9999");  // never added; rejected unless the filter gives a false positive
}

int main() {
    std::cout << "========================================" << std::endl;
    std::cout << " Distributed lock module: usage examples" << std::endl;
    std::cout << " DFS Redis Module Examples" << std::endl;
    std::cout << "========================================" << std::endl;

    initRedisEnvironment();

    example1_basicReentrantLock();
    example2_readWriteLock();
    example3_fairLock();
    example4_withRedisCache();
    example5_multiLevelCache();
    example6_concurrentTest();
    example7_lockStatus();
    example8_bloomFilterIntegration();

    std::cout << "\n========================================" << std::endl;
    std::cout << " All examples finished" << std::endl;
    std::cout << "========================================" << std::endl;

    if (g_redisPool) {
        g_redisPool->close();
    }

    return 0;

}

4.3 Structure

text

┌──────────────────────────────────────────────────────────────────┐
│                   distributed_lock_example.cpp                   │
├──────────────────────────────────────────────────────────────────┤
│ [Globals]                                                        │
│   g_redisPool, g_redisCache, g_localCache, g_bloomFilter         │
├──────────────────────────────────────────────────────────────────┤
│ [Initialization]                                                 │
│   initRedisEnvironment() -> connect to Redis, set up components  │
├──────────────────────────────────────────────────────────────────┤
│ [8 example scenarios]                                            │
│   1. Basic lock (hello world)                                    │
│   2. Read/write lock (concurrency control)                       │
│   3. Fair lock (queueing)                                        │
│   4. Business lock (stock deduction)                             │
│   5. Performance (multi-level cache)                             │
│   6. Stress test (multithreaded)                                 │
│   7. Monitoring (status queries)                                 │
│   8. Safety (Bloom filter)                                       │
├──────────────────────────────────────────────────────────────────┤
│ [Entry point]                                                    │
│   main() -> runs the functions above in order                    │
└──────────────────────────────────────────────────────────────────┘
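
Every example scenario listed in this structure releases its lock by calling unlock() by hand, which is easy to miss on an early return or an exception. One possible way to make the release automatic is a small scope guard. The sketch below is illustrative only: `ScopedLock`, `FakeLock`, and `runGuardedSection` are hypothetical names that are not part of the module, and `FakeLock` merely stands in for `DistributedLock`, mirroring only the `tryLock(waitMs, leaseMs)` and `unlock()` calls that the examples use.

```cpp
#include <cassert>

// Hypothetical stand-in for DistributedLock so that the sketch compiles on
// its own. It only mimics the tryLock/unlock calls used by the examples.
struct FakeLock {
    bool held = false;
    int unlockCalls = 0;

    bool tryLock(long /*waitMs*/, long /*leaseMs*/) {
        if (held) return false;  // someone else holds it
        held = true;
        return true;
    }

    void unlock() {
        held = false;
        ++unlockCalls;
    }
};

// Scope guard: tries to acquire in the constructor and releases in the
// destructor, but only if the acquisition succeeded.
template <typename Lock>
class ScopedLock {
public:
    ScopedLock(Lock& lock, long waitMs, long leaseMs)
        : lock_(lock), owns_(lock.tryLock(waitMs, leaseMs)) {}

    ~ScopedLock() {
        if (owns_) lock_.unlock();
    }

    // Copying would lead to a double release.
    ScopedLock(const ScopedLock&) = delete;
    ScopedLock& operator=(const ScopedLock&) = delete;

    bool ownsLock() const { return owns_; }

private:
    Lock& lock_;
    bool owns_;
};

// Runs one guarded critical section and returns how many times unlock()
// has been called on the lock so far.
int runGuardedSection(FakeLock& lock) {
    {
        ScopedLock<FakeLock> guard(lock, 1000, 5000);
        if (!guard.ownsLock()) {
            return lock.unlockCalls;  // not acquired: nothing to release
        }
        assert(lock.held);  // critical section would go here
    }  // guard leaves scope here and releases the lock
    return lock.unlockCalls;
}
```

With a guard like this, a scenario body would reduce to constructing the guard, checking `ownsLock()`, and doing the work. Whether it fits the real `DistributedLock` depends on that class's actual `tryLock` and `unlock` signatures, which this section does not show.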