Preface

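Sealos is a cloud operating system that uses Kubernetes as its kernel. Its goal is simple: make cloud infrastructure as easy to use as a personal computer, while hiding the complex technical details underneath.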

1: Installation on two machines (one master at 192.168.5.30 and one worker node at 192.168.5.31)

OS: Ubuntu 22.04 LTS, user: ubuntu, password: 123

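Start from a clean environment and do not install Docker separately. Use the same initial password on both machines. Hostnames must be unique and must not contain underscores. You can change them with the commands below:

Master: sudo hostnamectl set-hostname master-node

Worker: sudo hostnamectl set-hostname worker-node-01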
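You can check a planned hostname before running hostnamectl. The sketch below applies a conservative rule and assumes the name should be a lowercase RFC 1123 label: 1 to 63 characters drawn from a-z, 0-9 and "-", starting and ending with a letter or digit. Kubernetes itself accepts the slightly looser RFC 1123 subdomain form for node names, so this check is stricter than required. The function name valid_hostname is hypothetical.

```bash
# valid_hostname (hypothetical helper): succeed if $1 is a lowercase RFC 1123 label,
# i.e. 1-63 chars of [a-z0-9-], starting and ending with a letter or digit.
# Underscores, uppercase letters and dots are rejected (a conservative rule).
valid_hostname() {
  local name=$1
  # Length check: 1..63 characters
  if [ "${#name}" -lt 1 ] || [ "${#name}" -gt 63 ]; then
    return 1
  fi
  # Shape check: grep -Eq succeeds only if the whole line matches the pattern
  printf '%s\n' "$name" | grep -Eq '^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'
}
```

Usage: `valid_hostname worker-node-01 && sudo hostnamectl set-hostname worker-node-01`. A name such as worker_node_01 fails the check because of the underscores.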

Official documentation: https://sealos.run/docs/k8s/quick-start/install-cli

Update the system

sudo apt update && sudo apt upgrade -y

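Install the required tools, skipping any that are already present. jq is also needed, because both the version query and the installation script pipe GitHub's API response through it:

sudo apt install -y curl wget git jq

Check that they are installed: whereis curl wget git jq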

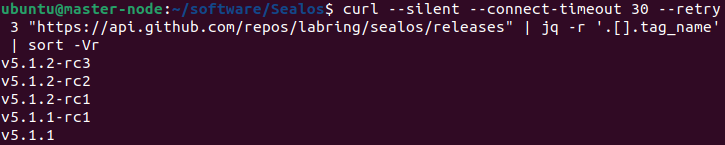

List the available Sealos versions (newest first):

curl --silent --connect-timeout 30 --retry 3 "https://api.github.com/repos/labring/sealos/releases" | jq -r '.[].tag_name' | sort -Vr

安装sh脚本

powershell

#!/bin/bash

set -e # 遇到错误立即退出

# 1. 定义代理前缀

export PROXY_PREFIX="https://ghfast.top"

# 2. 获取最新版本号(过滤出正式版,优先选择不带rc的版本)

echo "正在获取sealos最新版本号..."

VERSION=$(curl --silent --connect-timeout 30 --retry 3 "https://api.github.com/repos/labring/sealos/releases" | \

jq -r '.[].tag_name' | \

sort -Vr | \

grep -v "rc" | # 过滤掉预发布版本(rc版本),如果需要rc版本可删除这行

head -n 1)

# 检查版本是否获取成功

if [ -z "$VERSION" ]; then

echo "错误:未能获取到sealos版本号"

exit 1

fi

echo "获取到最新版本:$VERSION"

# 3. 拼接下载链接并下载安装包

# 去除版本号前缀的v(例如v5.1.1转为5.1.1)

VERSION_WITHOUT_V=${VERSION#v}

DOWNLOAD_URL="${PROXY_PREFIX}/https://github.com/labring/sealos/releases/download/${VERSION}/sealos_${VERSION_WITHOUT_V}_linux_amd64.tar.gz"

echo "开始下载安装包:$DOWNLOAD_URL"

wget --timeout=60 --tries=3 "$DOWNLOAD_URL" -O "sealos_${VERSION_WITHOUT_V}_linux_amd64.tar.gz"

# 4. 解压并安装sealos

echo "解压并安装sealos..."

tar zxvf "sealos_${VERSION_WITHOUT_V}_linux_amd64.tar.gz" sealos

chmod +x sealos

sudo mv sealos /usr/bin/

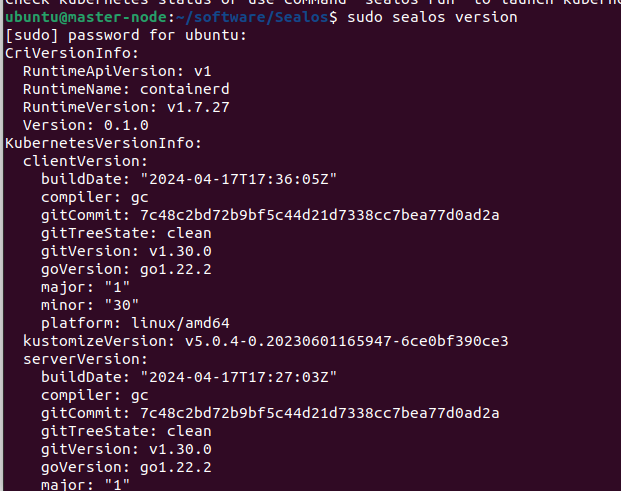

# 5. 验证安装

if command -v sealos &>/dev/null; then

echo "sealos安装成功!当前版本:"

sealos version

else

echo "错误:sealos安装失败"

exit 1

fi

# 清理临时文件

#rm -f "sealos_${VERSION_WITHOUT_V}_linux_amd64.tar.gz"

echo "安装完成,临时文件已清理"
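The script above always downloads the linux_amd64 package, which will not run on an ARM64 machine. The helpers below are a minimal sketch of choosing the package from the output of uname -m. They assume the release assets follow the sealos_<version>_linux_<arch>.tar.gz naming used in the script and that amd64 and arm64 are the published architectures. Check the release page before relying on either assumption. Both function names are hypothetical.

```bash
# sealos_arch (hypothetical helper): map a `uname -m` value to the arch suffix
# assumed in the release asset names. With no argument, inspects the current machine.
sealos_arch() {
  local machine=${1:-$(uname -m)}
  case "$machine" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
}

# sealos_tarball (hypothetical helper): build the asset file name from a tag like v5.0.1
#   $1: release tag, $2: optional `uname -m` value (defaults to this machine)
sealos_tarball() {
  local version=${1#v}   # strip the leading "v", as the install script does
  local arch
  arch=$(sealos_arch "$2") || return 1
  echo "sealos_${version}_linux_${arch}.tar.gz"
}
```

Inside the script, `TARBALL=$(sealos_tarball "$VERSION")` would replace the hard-coded amd64 file name.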

2: Deploy Kubernetes

First, use sealos to pull three images: kubernetes, helm and cilium.

Note: the commands here are run with sudo.

sudo sealos pull registry.cn-shanghai.aliyuncs.com/labring/kubernetes:v1.30.0

sudo sealos pull registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4

sudo sealos pull registry.cn-shanghai.aliyuncs.com/labring/cilium:v1.13.4

sudo sealos images  # check the pulled images

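Install the cluster from the master node. The three images, --masters, --nodes and the SSH credentials all belong to a single sealos run command:

```bash
sudo sealos run registry.cn-shanghai.aliyuncs.com/labring/kubernetes:v1.30.0 \
  registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4 \
  registry.cn-shanghai.aliyuncs.com/labring/cilium:v1.13.4 \
  --masters 192.168.5.30 \
  --nodes 192.168.5.31 --user ubuntu --passwd 123
```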

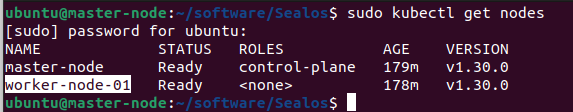

Check the nodes and confirm that all of them are in the Ready state:

kubectl get nodes

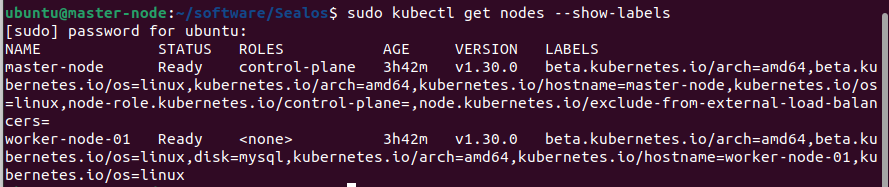
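On larger clusters, reading the whole table is slow. You can filter the default `kubectl get nodes` output so that only problem nodes are listed. This sketch assumes the default column layout (NAME STATUS ROLES AGE VERSION), with STATUS in the second column. It treats a cordoned node (Ready,SchedulingDisabled) as Ready. The name not_ready_nodes is hypothetical.

```bash
# not_ready_nodes (hypothetical helper): read `kubectl get nodes` output on stdin
# and print the name of every node whose STATUS is not Ready.
# "Ready,SchedulingDisabled" (a cordoned node) still counts as Ready here.
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready(,|$)/ { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. No output means every node is Ready.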

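Add a node label

Add the label disk=ssd to worker-node-01 to mark it as having an SSD and being suitable for a database. The value must match the nodeSelector (disk: ssd) in mysql.yaml, otherwise the MySQL Pod stays Pending:

kubectl label nodes worker-node-01 disk=ssd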

Verify that the label was added:

kubectl get nodes --show-labels

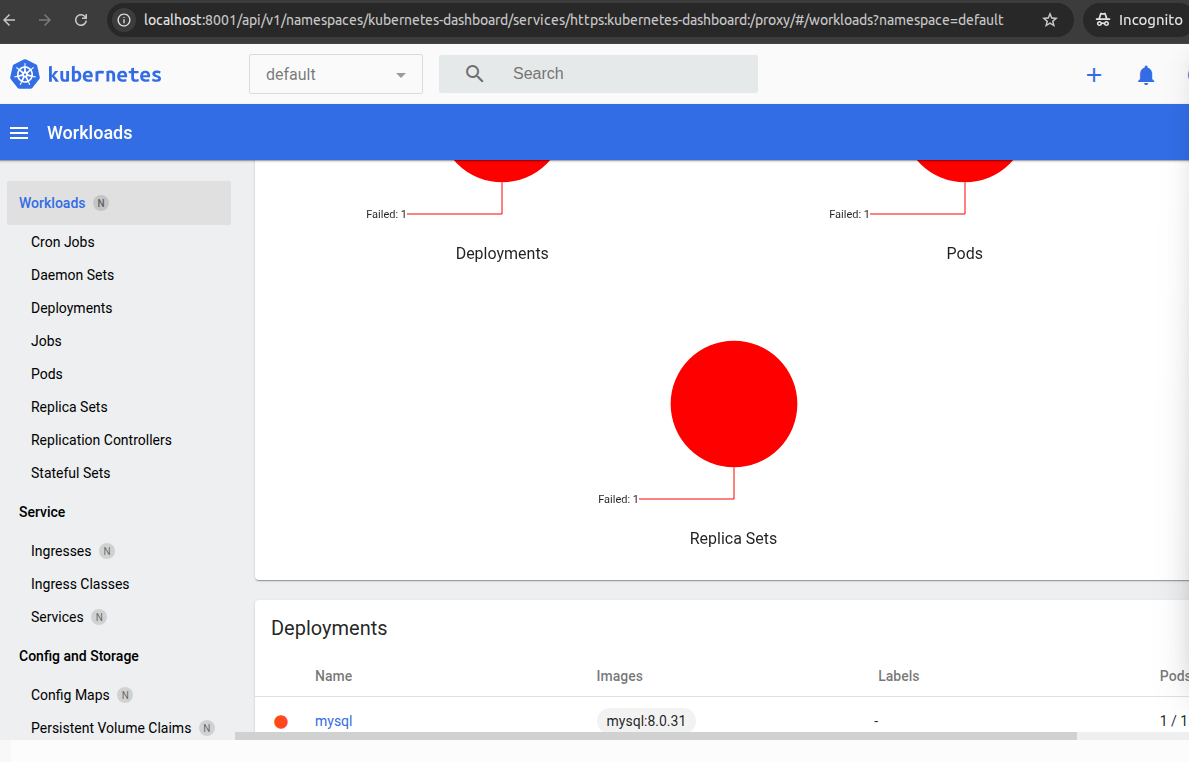

3: Deploy MySQL and pin it to a specific node

Create mysql.yaml:

yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: mysql

template:

metadata:

labels:

app: mysql

spec:

# 节点选择器:指定调度到含有 disk=ssd 标签的节点

nodeSelector:

disk: ssd

containers:

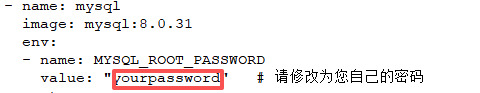

- name: mysql

image: mysql:8.0.31

env:

- name: MYSQL_ROOT_PASSWORD

value: "yourpassword" # 请修改为您自己的密码

ports:

- containerPort: 3306

volumeMounts:

- name: mysql-data

mountPath: /var/lib/mysql

volumes:

- name: mysql-data

emptyDir: {} # 临时存储,Pod删除后数据丢失。如需持久化请改用 PersistentVolumeClaim

---

apiVersion: v1

kind: Service

metadata:

name: mysql

spec:

ports:

- port: 3306

targetPort: 3306

selector:

app: mysql然后应用该文件:

kubectl apply -f mysql.yaml

Verify that MySQL is running on the intended node:

kubectl get pods -o wide | grep mysql
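`grep mysql` matches any line that contains the string, including other Pods whose names happen to include it. A more targeted check reads the node name straight from the Pod spec, selecting Pods by the app=mysql label from mysql.yaml. The sketch below wraps this in two functions. The names mysql_node and check_mysql_node are hypothetical, and the expected node (worker-node-01, the node labelled earlier) is passed in as an argument.

```bash
# mysql_node (hypothetical helper): print the node(s) running Pods labelled app=mysql.
# jsonpath output does not depend on column positions in the table view.
# Pods that are still Pending have no nodeName and print nothing.
mysql_node() {
  kubectl get pods -l app=mysql -o jsonpath='{.items[*].spec.nodeName}'
}

# check_mysql_node (hypothetical helper): succeed only if every scheduled MySQL Pod runs on $1
check_mysql_node() {
  local expected=$1
  local nodes n
  nodes=$(mysql_node) || return 1
  [ -n "$nodes" ] || { echo "no MySQL Pod scheduled yet" >&2; return 1; }
  for n in $nodes; do
    [ "$n" = "$expected" ] || { echo "MySQL Pod is on $n, expected $expected" >&2; return 1; }
  done
  echo "MySQL is running on $expected"
}
```

Usage (on a machine where kubectl can reach the cluster): `check_mysql_node worker-node-01`.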

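Troubleshooting: image pull failures

If the deployment fails because the network cannot reach the image registry, you can apply the fix below or use another method.

In this case, the deployment failed on the worker node: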

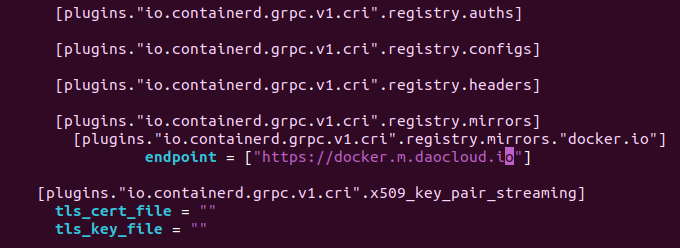

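On the worker node, edit the containerd configuration: sudo vim /etc/containerd/config.toml

Under the [plugins."io.containerd.grpc.v1.cri".registry.mirrors] section, set the endpoint list of the docker.io mirror (the [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"] table) to the DaoCloud address, replacing the existing entries:

endpoint = ["https://docker.m.daocloud.io"]

Restart containerd so the change takes effect: sudo systemctl restart containerd

Pull the official MySQL image again: sudo crictl pull mysql:8.0.31. This time the pull succeeds.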

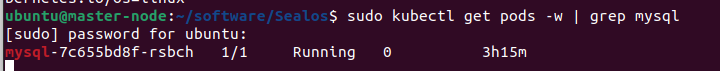

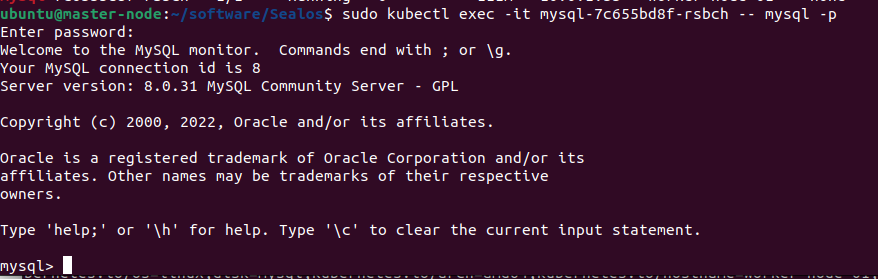
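Some containerd setups do not use the inline mirrors table. Instead, they set config_path (typically /etc/containerd/certs.d) in the CRI registry section and read mirrors from a hosts.toml file for each registry. In that setup, the file below is where the mirror belongs. Whether your nodes use this layout is an assumption you should check first, for example with `grep -n config_path /etc/containerd/config.toml`. The sketch writes a docker.io hosts.toml that points at the same DaoCloud mirror and falls back to Docker Hub. The function name and its parameters are hypothetical. The root-directory parameter exists so you can write the file to a scratch directory and inspect it first.

```bash
# write_docker_mirror (hypothetical helper): write a containerd hosts.toml for docker.io
# that tries the mirror first and falls back to the upstream server.
#   $1: root of the certs.d tree (default /etc/containerd/certs.d)
#   $2: mirror URL (default https://docker.m.daocloud.io)
write_docker_mirror() {
  local root=${1:-/etc/containerd/certs.d}
  local mirror=${2:-https://docker.m.daocloud.io}
  mkdir -p "$root/docker.io" || return 1
  cat > "$root/docker.io/hosts.toml" <<EOF
server = "https://registry-1.docker.io"

[host."$mirror"]
  capabilities = ["pull", "resolve"]
EOF
}
```

Run it as root (for example from `sudo -i`), restart containerd, then retry `crictl pull mysql:8.0.31`.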

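Verify that MySQL is working

Open a MySQL shell inside the Pod of the mysql Deployment: sudo kubectl exec -it deploy/mysql -- mysql -uroot -p

The password is the value you set for MYSQL_ROOT_PASSWORD in mysql.yaml.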

4: Deploy the Kubernetes Dashboard

1> Create /tmp/dashboard.yaml (for example with sudo vim /tmp/dashboard.yaml) with the following content:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/dashboard:v2.7.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      tolerations:
        # Kubernetes v1.30 taints control-plane nodes with node-role.kubernetes.io/control-plane;
        # the older node-role.kubernetes.io/master taint is no longer applied
        - key: node-role.kubernetes.io/control-plane
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-scraper:v1.0.8
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      tolerations:
        - key: node-role.kubernetes.io/control-plane
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
```

2> Apply the manifest:

sudo bash -c 'kubectl apply -f /tmp/dashboard.yaml --kubeconfig=/etc/kubernetes/admin.conf'

3> Check the Dashboard deployment status:

sudo bash -c 'kubectl get pods -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf'

4> Create an admin account:

```bash
sudo bash -c 'cat <<EOF | kubectl apply -f - --validate=false --kubeconfig=/etc/kubernetes/admin.conf
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
EOF'
```

5> Generate a login token

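Copy the token string printed by the command below (a long string of characters) and keep it somewhere safe. With --duration=86400s, the token is requested for 24 hours.

```bash
sudo bash -c 'kubectl -n kubernetes-dashboard create token admin-user --duration=86400s --kubeconfig=/etc/kubernetes/admin.conf'
```

6> Start the proxy and open the Dashboard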

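Start the proxy and keep this terminal window open:

```bash
sudo bash -c 'kubectl proxy --kubeconfig=/etc/kubernetes/admin.conf'
```

Then open the following address in a browser: http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

By default, kubectl proxy listens only on 127.0.0.1:8001 of the machine where it runs. Either use a browser on the master itself or forward the port first, for example with ssh -L 8001:127.0.0.1:8001 ubuntu@192.168.5.30.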

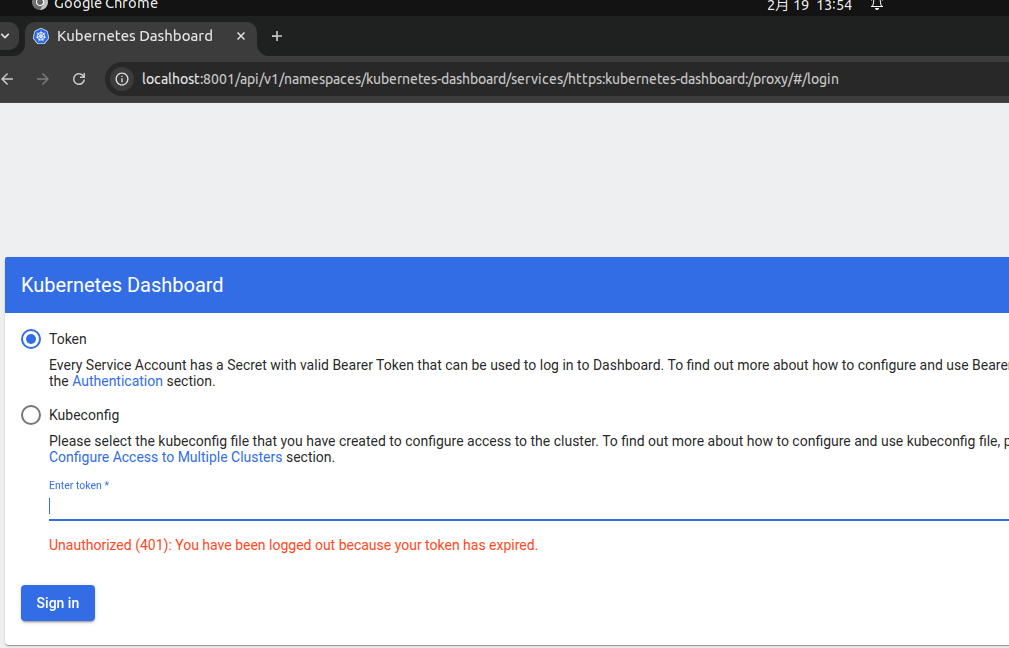

Select the "Token" option, paste the token you copied in step 5 and click "Sign in".
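The Dashboard address used in this guide (http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/) follows the general pattern for reaching a Service through the API server proxy: /api/v1/namespaces/<namespace>/services/<scheme>:<service>:<port>/proxy/. The port part can be left empty when the Service has a single unnamed port. The helper below only assembles that string, which is handy for other Services. Its name, proxy_url, and the default base address http://localhost:8001 are assumptions for illustration.

```bash
# proxy_url (hypothetical helper): build the kubectl-proxy URL for a Service.
#   $1 namespace, $2 service name, $3 scheme (http or https, default https),
#   $4 port name or number (empty for a single unnamed port),
#   $5 proxy base address (default http://localhost:8001)
proxy_url() {
  local ns=$1 svc=$2
  local scheme=${3:-https}
  local port=${4:-}
  local base=${5:-http://localhost:8001}
  echo "${base}/api/v1/namespaces/${ns}/services/${scheme}:${svc}:${port}/proxy/"
}
```

For example, `proxy_url kubernetes-dashboard kubernetes-dashboard` reproduces the Dashboard address above.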

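7> Troubleshooting: Dashboard Pods stuck in Pending

If both Dashboard Pods stay in the Pending state, the scheduler cannot place them on any node. The usual causes are node taints that the Pods do not tolerate, or insufficient resources.

(1) Find out why the Pods are Pending: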

```bash
# Show why the dashboard-metrics-scraper Pod cannot be scheduled.
# Select by label, because the generated Pod name differs in every cluster.
sudo bash -c 'kubectl describe pod -l k8s-app=dashboard-metrics-scraper -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf'

# Show why the kubernetes-dashboard Pod cannot be scheduled
sudo bash -c 'kubectl describe pod -l k8s-app=kubernetes-dashboard -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf'
```

If the Events section shows an error like the following, run steps 1 and 2 below:

```
Warning FailedScheduling 3m41s (x2 over 8m41s) default-scheduler 0/2 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }. preemption: 0/2 nodes are available: 2 Preemption is not helpful for scheduling.
```

```bash
# The JSON patch appends to the existing tolerations list ("/-"), so that list must already
# exist in the Deployment, as it does for both Deployments in dashboard.yaml.

# 1. Let dashboard-metrics-scraper tolerate the control-plane taint
sudo bash -c 'kubectl patch deployment dashboard-metrics-scraper -n kubernetes-dashboard --type=json -p '\''[{"op":"add","path":"/spec/template/spec/tolerations/-","value":{"key":"node-role.kubernetes.io/control-plane","effect":"NoSchedule"}}]'\'' --kubeconfig=/etc/kubernetes/admin.conf'

# 2. Let kubernetes-dashboard tolerate the control-plane taint
sudo bash -c 'kubectl patch deployment kubernetes-dashboard -n kubernetes-dashboard --type=json -p '\''[{"op":"add","path":"/spec/template/spec/tolerations/-","value":{"key":"node-role.kubernetes.io/control-plane","effect":"NoSchedule"}}]'\'' --kubeconfig=/etc/kubernetes/admin.conf'
```
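When the Events section is long, you can pull the taint keys that blocked scheduling out of the FailedScheduling message with a short filter. This sketch assumes the message wording shown above ("untolerated taint {key: value}"), which may change between Kubernetes versions. The function name untolerated_taints is hypothetical.

```bash
# untolerated_taints (hypothetical helper): read `kubectl describe pod` output (or any text)
# on stdin and print each distinct taint key reported as "untolerated taint {key: ...}".
# LC_ALL=C makes the sort order independent of the current locale.
untolerated_taints() {
  grep -o 'untolerated taint {[^:}]*' | sed 's/^untolerated taint {//' | LC_ALL=C sort -u
}
```

Piping the describe output through it for the message above prints node-role.kubernetes.io/control-plane (fixed by steps 1 and 2) and node.kubernetes.io/unreachable (handled in the next step).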

(2) Check and repair an unreachable (worker) node:

sudo bash -c 'kubectl get nodes --kubeconfig=/etc/kubernetes/admin.conf'

If any node shows NotReady or Unknown (the state behind the unreachable taint), recover it as follows:

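```bash
# 1. Inspect the node in detail (replace <node-name> with the unreachable node's name)
sudo bash -c 'kubectl describe node <node-name> --kubeconfig=/etc/kubernetes/admin.conf'

# 2. On the affected node itself (e.g. after ssh ubuntu@192.168.5.31),
#    restart the container runtime and the kubelet
sudo systemctl restart containerd kubelet

# 3. Back on the master, restart the Cilium agents.
#    Cilium runs as a DaemonSet in kube-system, not as a systemd service.
sudo bash -c 'kubectl -n kube-system rollout restart daemonset/cilium --kubeconfig=/etc/kubernetes/admin.conf'

# 4. Wait for the node to recover (about one minute), then check again
sleep 60
sudo bash -c 'kubectl get nodes --kubeconfig=/etc/kubernetes/admin.conf'
```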

(3) Delete the old Pending Pods so that the Deployments recreate them:

sudo bash -c 'kubectl delete pods -n kubernetes-dashboard --all --kubeconfig=/etc/kubernetes/admin.conf'

(4) Verify that the Pods are scheduled

Wait 1 to 2 minutes, then check whether the Pods are Running:

sudo bash -c 'kubectl get pods -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf'

```
NAME                                        READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-5f99bcc478-xxxx   1/1     Running   0          1m
kubernetes-dashboard-5998dd5fb6-xxxx        1/1     Running   0          1m
```
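A table like the one above is considered healthy when every Pod is Running and the two numbers in READY are equal. The filter below is a sketch that prints only the Pods that fail that test. It assumes the default `kubectl get pods` columns (NAME READY STATUS ...). The name pods_not_running is hypothetical.

```bash
# pods_not_running (hypothetical helper): read `kubectl get pods` output on stdin and
# print every Pod that is not fully ready (READY x/y with x != y) or not in Running status.
pods_not_running() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1 }'
}
```

Usage: `sudo bash -c 'kubectl get pods -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf' | pods_not_running`. No output means both Dashboard Pods are up.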

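(5) Check the endpoints and open the Dashboard

```bash
# 1. Check that the Service has endpoints (the Dashboard Pod IP and port 8443 should be listed)
sudo bash -c 'kubectl get endpoints kubernetes-dashboard -n kubernetes-dashboard --kubeconfig=/etc/kubernetes/admin.conf'

# 2. Restart kubectl proxy (stop the old one with Ctrl+C first)
sudo bash -c 'kubectl proxy --kubeconfig=/etc/kubernetes/admin.conf'
```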

Open http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/ again. The login page should now appear.

Select Token and enter the token generated in step 5.

5: If you found this useful, please give it a like and add it to your favorites.