文章目录

深度学习Week17------优化器对比实验

一、前言

二、我的环境

三、前期工作

1、配置环境

2、导入数据

2.1 加载数据

2.2 检查数据

2.3 配置数据集

2.4 数据可视化

四、构建模型

五、训练模型

1、将其嵌入model中

2、在Dataset数据集中进行数据增强

六、模型评估

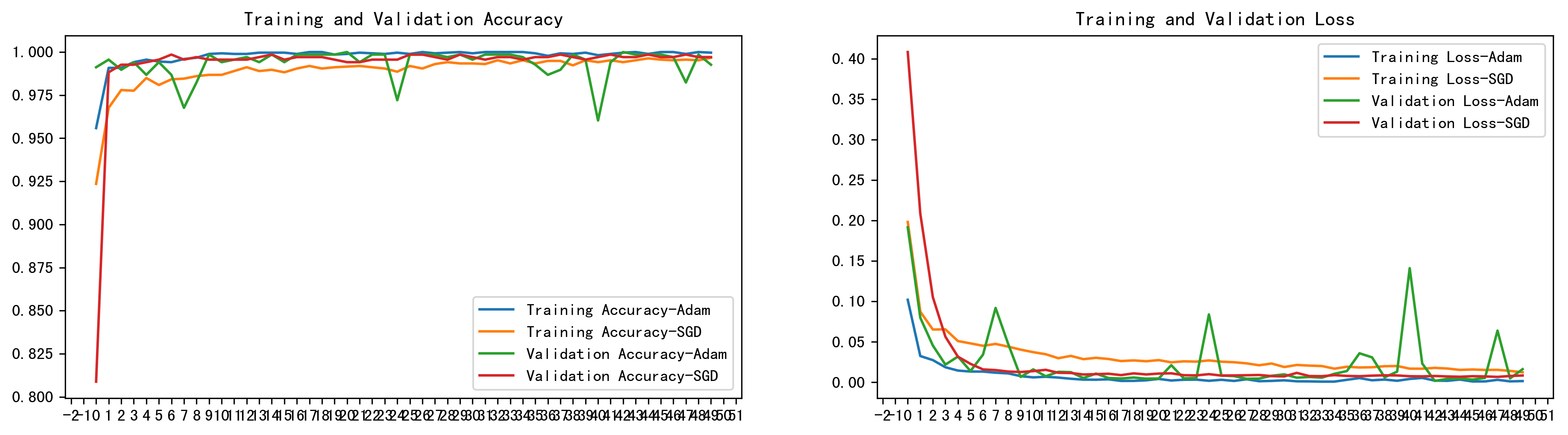

1、Accuracy与Loss图

2、模型评估

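I. Preface

- 🍨 This post is a learning-log entry from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊 | tutoring and custom projects available

The fundamentals series of check-ins is finally more or less complete. I have learned a lot of deep learning basics and can now use the relevant functions fairly fluently. This last post explores how different optimizers, and different parameter settings, affect a model. An optimizer comparison can also be included in a paper to add to the amount of work it reports.

In April this year a senior labmate and I published an EI conference paper in which the optimizer comparison took up a fair amount of space, so today I want to work out for myself how such a comparison is done. Because finals week is close, I only covered the basics here without much extension; I will set aside a week later to fill in the rest.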

II. My Environment

- Operating system: Windows 10

- Language: Python 3.8.0

- IDE: PyCharm 2023.2.3

- Deep learning framework: TensorFlow

- GPU and VRAM: RTX 3060 8G

III. Preliminary Work

1. Configuring the Environment

python

import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    gpu0 = gpus[0]  # If there are several GPUs, use only the first one
    tf.config.experimental.set_memory_growth(gpu0, True)  # Allocate GPU memory on demand
    tf.config.set_visible_devices([gpu0], "GPU")

from tensorflow import keras
import matplotlib.pyplot as plt
import pandas as pd
import numpy as np
import warnings, os, PIL, pathlib

warnings.filterwarnings("ignore")  # Suppress warnings
plt.rcParams['font.sans-serif'] = ['SimHei']  # Font that can render Chinese labels
plt.rcParams['axes.unicode_minus'] = False  # Render minus signs correctly

2. Importing the Data

Import all the cat and dog image data: in order, the training images (train_images), training labels (train_labels), test images (test_images), and test labels (test_labels). The dataset comes from K同学啊.

2.1 Loading the Data

py

data_dir = "/home/mw/input/dogcat3675/365-7-data"
data_dir = pathlib.Path(data_dir)

image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)

Output:

Total number of images: 3400

py

img_height = 256

img_width = 256

batch_size = 32

py

"""
For a detailed introduction to image_dataset_from_directory(), see: https://mtyjkh.blog.csdn.net/article/details/117018789
"""
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Output:

Found 3400 files belonging to 2 classes.
Using 2720 files for training.
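
The 2720 figure reported above follows directly from `validation_split=0.2`: one fifth of the 3400 files is held out and the rest is used for training. The sketch below redoes that arithmetic in plain Python. The helper name `split_sizes` is hypothetical, and the truncation rule is an assumption chosen because it reproduces the reported counts; it is not taken from the Keras source.

```python
import math

def split_sizes(total_files, validation_split):
    """Return (num_train, num_val) for a fractional hold-out split."""
    # Assumption: the validation share is truncated to an integer and
    # the remainder goes to training.
    num_val = int(total_files * validation_split)
    num_train = total_files - num_val
    return num_train, num_val

num_train, num_val = split_sizes(3400, 0.2)

# With batch_size = 32, a partial last batch still counts as one step.
steps_per_epoch = math.ceil(num_train / 32)

print(num_train, num_val, steps_per_epoch)
```

The 85 steps per epoch computed here are the `85/85` shown in the training progress bars later in the post.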

py

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Output:

Found 3400 files belonging to 2 classes.
Using 680 files for validation.

We can print the dataset's labels through class_names. The labels correspond to the directory names in alphabetical order.

py

class_names = train_ds.class_names

print(class_names)

Output:

['cat', 'dog']
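
To make the "alphabetical order of directory names" rule concrete, here is a minimal standard-library sketch that derives class names and integer labels from a folder layout. It only imitates the documented behavior; `infer_class_names` and the temporary directory are hypothetical stand-ins, not Keras internals.

```python
import pathlib
import tempfile

def infer_class_names(data_dir):
    """Return the sub-directory names in sorted order, one per class."""
    return sorted(p.name for p in pathlib.Path(data_dir).iterdir() if p.is_dir())

# Hypothetical throwaway layout; "dog" is created first on purpose to
# show that creation order does not affect the result.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("dog", "cat"):
        (pathlib.Path(tmp) / name).mkdir()
    demo_class_names = infer_class_names(tmp)

# The integer label of a class is its position in the sorted list.
label_of = {name: index for index, name in enumerate(demo_class_names)}
print(demo_class_names, label_of)
```

Under this rule `cat` maps to label 0 and `dog` to label 1, which is the mapping the sparse labels in this post rely on.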

2.2 Inspecting the Data

py

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

Output:

(32, 256, 256, 3)
(32,)

2.3 Configuring the Dataset

py

AUTOTUNE = tf.data.AUTOTUNE

def train_preprocessing(image, label):
    # Scale pixel values from [0, 255] to [0, 1]
    return (image / 255.0, label)

train_ds = (
    train_ds.cache()
    .shuffle(1000)
    .map(train_preprocessing)  # A preprocessing function can be set here
    # .batch(batch_size)  # batch_size was already set in image_dataset_from_directory
    .prefetch(buffer_size=AUTOTUNE)
)

val_ds = (
    val_ds.cache()
    .shuffle(1000)
    .map(train_preprocessing)  # A preprocessing function can be set here
    # .batch(batch_size)  # batch_size was already set in image_dataset_from_directory
    .prefetch(buffer_size=AUTOTUNE)
)

2.4 Visualizing the Data

py

plt.figure(figsize=(10, 8))  # Figure is 10 wide and 8 high
plt.suptitle("Data samples")

for images, labels in train_ds.take(1):
    for i in range(15):
        plt.subplot(4, 5, i + 1)
        plt.xticks([])
        plt.yticks([])
        plt.grid(False)

        # Show the image
        plt.imshow(images[i])
        # Show the label
        plt.xlabel(class_names[labels[i]])

plt.show()

IV. Building the Model

py

from tensorflow.keras.layers import Dropout, Dense, BatchNormalization
from tensorflow.keras.models import Model

def create_model(optimizer='adam'):
    # Load the pretrained model
    vgg16_base_model = tf.keras.applications.vgg16.VGG16(weights='imagenet',
                                                          include_top=False,
                                                          input_shape=(img_height, img_width, 3),
                                                          pooling='avg')
    # Freeze the pretrained convolutional base
    for layer in vgg16_base_model.layers:
        layer.trainable = False

    # New classification head on top of the pooled VGG16 features
    X = vgg16_base_model.output
    X = Dense(170, activation='relu')(X)
    X = BatchNormalization()(X)
    X = Dropout(0.5)(X)

    output = Dense(len(class_names), activation='softmax')(X)
    vgg16_model = Model(inputs=vgg16_base_model.input, outputs=output)

    vgg16_model.compile(optimizer=optimizer,
                        loss='sparse_categorical_crossentropy',
                        metrics=['accuracy'])
    return vgg16_model

model1 = create_model(optimizer=tf.keras.optimizers.Adam())
model2 = create_model(optimizer=tf.keras.optimizers.SGD())
model2.summary()

Output:

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/vgg16/vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5

58892288/58889256 [==============================] - 3s 0us/step

Model: "model_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_2 (InputLayer)         [(None, 256, 256, 3)]     0
_________________________________________________________________
block1_conv1 (Conv2D)        (None, 256, 256, 64)      1792
_________________________________________________________________
block1_conv2 (Conv2D)        (None, 256, 256, 64)      36928
_________________________________________________________________
block1_pool (MaxPooling2D)   (None, 128, 128, 64)      0
_________________________________________________________________
block2_conv1 (Conv2D)        (None, 128, 128, 128)     73856
_________________________________________________________________
block2_conv2 (Conv2D)        (None, 128, 128, 128)     147584
_________________________________________________________________
block2_pool (MaxPooling2D)   (None, 64, 64, 128)       0
_________________________________________________________________
block3_conv1 (Conv2D)        (None, 64, 64, 256)       295168
_________________________________________________________________
block3_conv2 (Conv2D)        (None, 64, 64, 256)       590080
_________________________________________________________________
block3_conv3 (Conv2D)        (None, 64, 64, 256)       590080
_________________________________________________________________
block3_pool (MaxPooling2D)   (None, 32, 32, 256)       0
_________________________________________________________________
block4_conv1 (Conv2D)        (None, 32, 32, 512)       1180160
_________________________________________________________________
block4_conv2 (Conv2D)        (None, 32, 32, 512)       2359808
_________________________________________________________________
block4_conv3 (Conv2D)        (None, 32, 32, 512)       2359808
_________________________________________________________________
block4_pool (MaxPooling2D)   (None, 16, 16, 512)       0
_________________________________________________________________
block5_conv1 (Conv2D)        (None, 16, 16, 512)       2359808
_________________________________________________________________
block5_conv2 (Conv2D)        (None, 16, 16, 512)       2359808
_________________________________________________________________
block5_conv3 (Conv2D)        (None, 16, 16, 512)       2359808
_________________________________________________________________
block5_pool (MaxPooling2D)   (None, 8, 8, 512)         0
_________________________________________________________________
global_average_pooling2d_1 ( (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 170)               87210
_________________________________________________________________
batch_normalization_1 (Batch (None, 170)               680
_________________________________________________________________
dropout_1 (Dropout)          (None, 170)               0
_________________________________________________________________
dense_3 (Dense)              (None, 2)                 342
=================================================================
Total params: 14,802,920
Trainable params: 87,892
Non-trainable params: 14,715,028

_________________________________________________________________

V. Training the Model
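
Before fitting, it is worth checking where the 87,892 trainable parameters in the summary above come from, since they are the only weights either optimizer will update. The sketch below recomputes the counts of the three parameterized head layers by hand. The helper names are hypothetical, and the batch-normalization split (two trained vectors, two moving statistics) is the standard layout, stated here as an assumption rather than read from the model object.

```python
def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units

def batchnorm_params(features):
    """Return (trainable, non_trainable) counts for batch normalization."""
    # gamma and beta are trained; moving mean and variance are not.
    return 2 * features, 2 * features

hidden = dense_params(512, 170)               # dense_2: 512 pooled features in
bn_train, bn_frozen = batchnorm_params(170)   # batch_normalization_1
output = dense_params(170, 2)                 # dense_3: two classes out

trainable = hidden + bn_train + output
total = 14_802_920                            # "Total params" from the summary
non_trainable = total - trainable

print(hidden, bn_train + bn_frozen, output, trainable, non_trainable)
```

The frozen VGG16 base therefore accounts for almost all of the non-trainable parameters, and the Adam versus SGD comparison in this post is really a comparison of how each optimizer trains a small dense head.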

py

NO_EPOCHS = 50

history_model1 = model1.fit(train_ds, epochs=NO_EPOCHS, verbose=1, validation_data=val_ds)

history_model2 = model2.fit(train_ds, epochs=NO_EPOCHS, verbose=1, validation_data=val_ds)

Output:

Epoch 1/50

85/85 [==============================] - 40s 230ms/step - loss: 0.2183 - accuracy: 0.8948 - val_loss: 0.1916 - val_accuracy: 0.9912

Epoch 2/50

85/85 [==============================] - 15s 182ms/step - loss: 0.0372 - accuracy: 0.9901 - val_loss: 0.0799 - val_accuracy: 0.9956

Epoch 3/50

85/85 [==============================] - 16s 184ms/step - loss: 0.0213 - accuracy: 0.9941 - val_loss: 0.0456 - val_accuracy: 0.9897

Epoch 4/50

85/85 [==============================] - 16s 185ms/step - loss: 0.0191 - accuracy: 0.9916 - val_loss: 0.0220 - val_accuracy: 0.9941

Epoch 5/50

85/85 [==============================] - 16s 187ms/step - loss: 0.0177 - accuracy: 0.9938 - val_loss: 0.0319 - val_accuracy: 0.9868

Epoch 6/50

85/85 [==============================] - 16s 188ms/step - loss: 0.0135 - accuracy: 0.9952 - val_loss: 0.0139 - val_accuracy: 0.9941

Epoch 7/50

85/85 [==============================] - 16s 189ms/step - loss: 0.0108 - accuracy: 0.9965 - val_loss: 0.0343 - val_accuracy: 0.9868

Epoch 8/50

85/85 [==============================] - 16s 190ms/step - loss: 0.0088 - accuracy: 0.9979 - val_loss: 0.0919 - val_accuracy: 0.9676

Epoch 9/50

85/85 [==============================] - 16s 191ms/step - loss: 0.0141 - accuracy: 0.9958 - val_loss: 0.0476 - val_accuracy: 0.9824

Epoch 10/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0093 - accuracy: 0.9985 - val_loss: 0.0067 - val_accuracy: 0.9985

Epoch 11/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0055 - accuracy: 0.9995 - val_loss: 0.0160 - val_accuracy: 0.9941

Epoch 12/50

85/85 [==============================] - 16s 194ms/step - loss: 0.0085 - accuracy: 0.9986 - val_loss: 0.0076 - val_accuracy: 0.9956

Epoch 13/50

85/85 [==============================] - 17s 195ms/step - loss: 0.0073 - accuracy: 0.9982 - val_loss: 0.0131 - val_accuracy: 0.9971

Epoch 14/50

85/85 [==============================] - 17s 195ms/step - loss: 0.0060 - accuracy: 0.9999 - val_loss: 0.0126 - val_accuracy: 0.9941

Epoch 15/50

85/85 [==============================] - 17s 196ms/step - loss: 0.0031 - accuracy: 0.9993 - val_loss: 0.0053 - val_accuracy: 0.9985

Epoch 16/50

85/85 [==============================] - 17s 196ms/step - loss: 0.0031 - accuracy: 0.9999 - val_loss: 0.0108 - val_accuracy: 0.9941

Epoch 17/50

85/85 [==============================] - 17s 196ms/step - loss: 0.0038 - accuracy: 0.9989 - val_loss: 0.0054 - val_accuracy: 0.9985

Epoch 18/50

85/85 [==============================] - 17s 195ms/step - loss: 0.0014 - accuracy: 1.0000 - val_loss: 0.0048 - val_accuracy: 0.9985

Epoch 19/50

85/85 [==============================] - 17s 195ms/step - loss: 0.0017 - accuracy: 1.0000 - val_loss: 0.0060 - val_accuracy: 0.9985

Epoch 20/50

85/85 [==============================] - 17s 195ms/step - loss: 0.0023 - accuracy: 0.9988 - val_loss: 0.0045 - val_accuracy: 0.9985

Epoch 21/50

85/85 [==============================] - 16s 194ms/step - loss: 0.0066 - accuracy: 0.9980 - val_loss: 0.0048 - val_accuracy: 1.0000

Epoch 22/50

85/85 [==============================] - 16s 194ms/step - loss: 0.0019 - accuracy: 0.9999 - val_loss: 0.0212 - val_accuracy: 0.9941

Epoch 23/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0038 - accuracy: 0.9990 - val_loss: 0.0049 - val_accuracy: 0.9985

Epoch 24/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0037 - accuracy: 0.9987 - val_loss: 0.0054 - val_accuracy: 0.9985

Epoch 25/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0017 - accuracy: 1.0000 - val_loss: 0.0839 - val_accuracy: 0.9721

Epoch 26/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0037 - accuracy: 0.9983 - val_loss: 0.0084 - val_accuracy: 0.9985

Epoch 27/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0022 - accuracy: 1.0000 - val_loss: 0.0078 - val_accuracy: 0.9985

Epoch 28/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0030 - accuracy: 0.9992 - val_loss: 0.0044 - val_accuracy: 0.9985

Epoch 29/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0011 - accuracy: 0.9999 - val_loss: 0.0047 - val_accuracy: 0.9971

Epoch 30/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0023 - accuracy: 1.0000 - val_loss: 0.0082 - val_accuracy: 0.9985

Epoch 31/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0015 - accuracy: 0.9998 - val_loss: 0.0099 - val_accuracy: 0.9956

Epoch 32/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0014 - accuracy: 1.0000 - val_loss: 0.0058 - val_accuracy: 0.9985

Epoch 33/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.0067 - val_accuracy: 0.9985

Epoch 34/50

85/85 [==============================] - 16s 193ms/step - loss: 9.7591e-04 - accuracy: 1.0000 - val_loss: 0.0058 - val_accuracy: 0.9985

Epoch 35/50

85/85 [==============================] - 16s 193ms/step - loss: 6.5250e-04 - accuracy: 1.0000 - val_loss: 0.0106 - val_accuracy: 0.9971

Epoch 36/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0016 - accuracy: 0.9997 - val_loss: 0.0140 - val_accuracy: 0.9926

Epoch 37/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0070 - accuracy: 0.9960 - val_loss: 0.0359 - val_accuracy: 0.9868

Epoch 38/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0023 - accuracy: 0.9996 - val_loss: 0.0309 - val_accuracy: 0.9897

Epoch 39/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0023 - accuracy: 0.9991 - val_loss: 0.0062 - val_accuracy: 0.9985

Epoch 40/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0014 - accuracy: 0.9999 - val_loss: 0.0133 - val_accuracy: 0.9956

Epoch 41/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0028 - accuracy: 0.9986 - val_loss: 0.1411 - val_accuracy: 0.9603

Epoch 42/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0036 - accuracy: 0.9991 - val_loss: 0.0233 - val_accuracy: 0.9941

Epoch 43/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0025 - accuracy: 0.9995 - val_loss: 0.0018 - val_accuracy: 1.0000

Epoch 44/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0015 - accuracy: 1.0000 - val_loss: 0.0043 - val_accuracy: 0.9985

Epoch 45/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0040 - accuracy: 0.9988 - val_loss: 0.0049 - val_accuracy: 0.9985

Epoch 46/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0011 - accuracy: 1.0000 - val_loss: 0.0034 - val_accuracy: 0.9985

Epoch 47/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.0055 - val_accuracy: 0.9971

Epoch 48/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0023 - accuracy: 0.9989 - val_loss: 0.0639 - val_accuracy: 0.9824

Epoch 49/50

85/85 [==============================] - 16s 193ms/step - loss: 8.3466e-04 - accuracy: 1.0000 - val_loss: 0.0049 - val_accuracy: 0.9985

Epoch 50/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0021 - accuracy: 0.9993 - val_loss: 0.0163 - val_accuracy: 0.9926

Epoch 1/50

85/85 [==============================] - 17s 194ms/step - loss: 0.3235 - accuracy: 0.8649 - val_loss: 0.4082 - val_accuracy: 0.8088

Epoch 2/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0971 - accuracy: 0.9641 - val_loss: 0.2085 - val_accuracy: 0.9882

Epoch 3/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0705 - accuracy: 0.9773 - val_loss: 0.1053 - val_accuracy: 0.9926

Epoch 4/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0675 - accuracy: 0.9780 - val_loss: 0.0565 - val_accuracy: 0.9926

Epoch 5/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0510 - accuracy: 0.9841 - val_loss: 0.0317 - val_accuracy: 0.9941

Epoch 6/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0466 - accuracy: 0.9802 - val_loss: 0.0229 - val_accuracy: 0.9956

Epoch 7/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0424 - accuracy: 0.9869 - val_loss: 0.0160 - val_accuracy: 0.9985

Epoch 8/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0488 - accuracy: 0.9843 - val_loss: 0.0152 - val_accuracy: 0.9956

Epoch 9/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0405 - accuracy: 0.9892 - val_loss: 0.0134 - val_accuracy: 0.9971

Epoch 10/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0398 - accuracy: 0.9875 - val_loss: 0.0128 - val_accuracy: 0.9956

Epoch 11/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0387 - accuracy: 0.9856 - val_loss: 0.0139 - val_accuracy: 0.9956

Epoch 12/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0334 - accuracy: 0.9900 - val_loss: 0.0155 - val_accuracy: 0.9956

Epoch 13/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0321 - accuracy: 0.9915 - val_loss: 0.0119 - val_accuracy: 0.9956

Epoch 14/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0358 - accuracy: 0.9878 - val_loss: 0.0116 - val_accuracy: 0.9971

Epoch 15/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0270 - accuracy: 0.9898 - val_loss: 0.0098 - val_accuracy: 0.9985

Epoch 16/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0233 - accuracy: 0.9936 - val_loss: 0.0102 - val_accuracy: 0.9956

Epoch 17/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0274 - accuracy: 0.9915 - val_loss: 0.0106 - val_accuracy: 0.9971

Epoch 18/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0233 - accuracy: 0.9942 - val_loss: 0.0090 - val_accuracy: 0.9971

Epoch 19/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0284 - accuracy: 0.9894 - val_loss: 0.0111 - val_accuracy: 0.9971

Epoch 20/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0232 - accuracy: 0.9934 - val_loss: 0.0098 - val_accuracy: 0.9956

Epoch 21/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0262 - accuracy: 0.9928 - val_loss: 0.0108 - val_accuracy: 0.9941

Epoch 22/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0239 - accuracy: 0.9940 - val_loss: 0.0112 - val_accuracy: 0.9941

Epoch 23/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0251 - accuracy: 0.9927 - val_loss: 0.0089 - val_accuracy: 0.9956

Epoch 24/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0251 - accuracy: 0.9910 - val_loss: 0.0086 - val_accuracy: 0.9956

Epoch 25/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0254 - accuracy: 0.9904 - val_loss: 0.0102 - val_accuracy: 0.9956

Epoch 26/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0245 - accuracy: 0.9916 - val_loss: 0.0084 - val_accuracy: 0.9985

Epoch 27/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0226 - accuracy: 0.9932 - val_loss: 0.0086 - val_accuracy: 0.9985

Epoch 28/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0289 - accuracy: 0.9905 - val_loss: 0.0088 - val_accuracy: 0.9971

Epoch 29/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0219 - accuracy: 0.9928 - val_loss: 0.0091 - val_accuracy: 0.9956

Epoch 30/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0215 - accuracy: 0.9943 - val_loss: 0.0077 - val_accuracy: 0.9985

Epoch 31/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0178 - accuracy: 0.9952 - val_loss: 0.0074 - val_accuracy: 0.9971

Epoch 32/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0231 - accuracy: 0.9929 - val_loss: 0.0117 - val_accuracy: 0.9956

Epoch 33/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0183 - accuracy: 0.9962 - val_loss: 0.0078 - val_accuracy: 0.9971

Epoch 34/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0186 - accuracy: 0.9950 - val_loss: 0.0076 - val_accuracy: 0.9971

Epoch 35/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0185 - accuracy: 0.9949 - val_loss: 0.0089 - val_accuracy: 0.9956

Epoch 36/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0199 - accuracy: 0.9924 - val_loss: 0.0080 - val_accuracy: 0.9971

Epoch 37/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0165 - accuracy: 0.9960 - val_loss: 0.0076 - val_accuracy: 0.9971

Epoch 38/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0180 - accuracy: 0.9945 - val_loss: 0.0083 - val_accuracy: 0.9985

Epoch 39/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0219 - accuracy: 0.9904 - val_loss: 0.0087 - val_accuracy: 0.9971

Epoch 40/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0179 - accuracy: 0.9971 - val_loss: 0.0086 - val_accuracy: 0.9956

Epoch 41/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0166 - accuracy: 0.9937 - val_loss: 0.0076 - val_accuracy: 0.9971

Epoch 42/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0159 - accuracy: 0.9946 - val_loss: 0.0074 - val_accuracy: 0.9985

Epoch 43/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0161 - accuracy: 0.9942 - val_loss: 0.0079 - val_accuracy: 0.9971

Epoch 44/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0173 - accuracy: 0.9957 - val_loss: 0.0073 - val_accuracy: 0.9971

Epoch 45/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0149 - accuracy: 0.9965 - val_loss: 0.0069 - val_accuracy: 0.9985

Epoch 46/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0157 - accuracy: 0.9963 - val_loss: 0.0076 - val_accuracy: 0.9971

Epoch 47/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0153 - accuracy: 0.9959 - val_loss: 0.0074 - val_accuracy: 0.9971

Epoch 48/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0162 - accuracy: 0.9957 - val_loss: 0.0068 - val_accuracy: 0.9985

Epoch 49/50

85/85 [==============================] - 16s 193ms/step - loss: 0.0132 - accuracy: 0.9955 - val_loss: 0.0079 - val_accuracy: 0.9971

Epoch 50/50

85/85 [==============================] - 16s 192ms/step - loss: 0.0131 - accuracy: 0.9954 - val_loss: 0.0085 - val_accuracy: 0.9971

VI. Model Evaluation

1. Accuracy and Loss Plots

py

from matplotlib.ticker import MultipleLocator

plt.rcParams['savefig.dpi'] = 300  # Resolution of saved figures
plt.rcParams['figure.dpi'] = 300  # Resolution of displayed figures

acc1 = history_model1.history['accuracy']
acc2 = history_model2.history['accuracy']
val_acc1 = history_model1.history['val_accuracy']
val_acc2 = history_model2.history['val_accuracy']

loss1 = history_model1.history['loss']
loss2 = history_model2.history['loss']
val_loss1 = history_model1.history['val_loss']
val_loss2 = history_model2.history['val_loss']

epochs_range = range(len(acc1))

plt.figure(figsize=(16, 4))
plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc1, label='Training Accuracy-Adam')
plt.plot(epochs_range, acc2, label='Training Accuracy-SGD')
plt.plot(epochs_range, val_acc1, label='Validation Accuracy-Adam')
plt.plot(epochs_range, val_acc2, label='Validation Accuracy-SGD')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')
# Set the tick spacing: one major tick per epoch on the x-axis
ax = plt.gca()
ax.xaxis.set_major_locator(MultipleLocator(1))

plt.subplot(1, 2, 2)
plt.plot(epochs_range, loss1, label='Training Loss-Adam')
plt.plot(epochs_range, loss2, label='Training Loss-SGD')
plt.plot(epochs_range, val_loss1, label='Validation Loss-Adam')
plt.plot(epochs_range, val_loss2, label='Validation Loss-SGD')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
# Set the tick spacing: one major tick per epoch on the x-axis
ax = plt.gca()
ax.xaxis.set_major_locator(MultipleLocator(1))

plt.show()
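
The curves plotted above compare Adam and SGD. As a reminder of how the two update rules differ, here is a minimal pure-Python sketch of a single step of each on a scalar parameter. The hyperparameter values are assumed to match the Keras defaults used when the optimizers are created without arguments (SGD: learning rate 0.01, no momentum; Adam: learning rate 0.001, beta_1 0.9, beta_2 0.999, epsilon 1e-7). The toy objective and the function names are illustrative only and are not part of the original experiment.

```python
import math

def sgd_step(w, grad, lr=0.01):
    """Plain SGD without momentum: w <- w - lr * grad."""
    return w - lr * grad

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam step for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# Toy objective f(w) = w**2 with gradient 2*w, starting from w = 1.0
grad = 2.0 * 1.0
w_sgd = sgd_step(1.0, grad)
w_adam, m, v = adam_step(1.0, grad, m=0.0, v=0.0, t=1)

print(w_sgd, w_adam)
```

SGD moves by `lr * grad`, so its step scales with the gradient. Adam's bias-corrected first step is roughly `lr` in size whatever the gradient magnitude, and later steps are rescaled per parameter. That difference is one plausible reason the two training curves look different in the early epochs, although this toy example does not prove it for this model.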

2. Model Evaluation

py

def test_accuracy_report(model):
    score = model.evaluate(val_ds, verbose=0)
    print('Loss function: %s, accuracy:' % score[0], score[1])

test_accuracy_report(model2)

Output:

Loss function: 0.008488086983561516, accuracy: 0.9970588088035583
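
The report above covers only model2 (SGD) at its final epoch. To compare the two runs over their whole history, one option is to look for the epoch with the highest validation accuracy. The helper below is a hypothetical sketch, not part of the original post; to keep it self-contained it runs on the first five `val_accuracy` values copied from each training log above rather than on the real `History` objects.

```python
def best_epoch(val_accuracy):
    """Return (1-based epoch, value) of the highest validation accuracy.

    Ties are resolved in favor of the earliest epoch.
    """
    best_index = max(range(len(val_accuracy)), key=lambda i: val_accuracy[i])
    return best_index + 1, val_accuracy[best_index]

# First five val_accuracy values from the training logs above
val_acc_adam = [0.9912, 0.9956, 0.9897, 0.9941, 0.9868]
val_acc_sgd = [0.8088, 0.9882, 0.9926, 0.9926, 0.9941]

for name, values in (("Adam", val_acc_adam), ("SGD", val_acc_sgd)):
    epoch, value = best_epoch(values)
    print(f"{name}: best val_accuracy {value:.4f} at epoch {epoch}")
```

With the real runs, the same function could be called as `best_epoch(history_model1.history['val_accuracy'])` and `best_epoch(history_model2.history['val_accuracy'])`.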