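Overview: collecting logs with Logstash

Environment:

Physical host: 192.168.31.151

1. Start two test instances, each generating one random order log entry every 5-10 seconds

Instance 1

JAR location: /home/logtest/one/log-test-0.0.1-SNAPSHOT.jar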

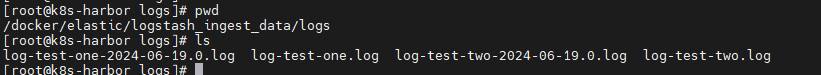

Log location: /docker/elastic/logstash_ingest_data/logs/log-test-one.log

Instance 2

JAR location: /home/logtest/two/log-test-0.0.1-SNAPSHOT.jar

Log location: /docker/elastic/logstash_ingest_data/logs/log-test-two.log
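The test JARs themselves are not shown here, so the following is only a minimal Python sketch of what such an instance might do: append one random order line to a log file, then wait 5-10 seconds. The product list, the field names, and the line layout are all assumptions made for illustration, not the real application's format.

```python
import random
import time
from datetime import datetime

# Hypothetical product names; the real application's data is not shown in the source.
PRODUCTS = ["book", "phone", "laptop"]


def make_order_line(now: datetime, rng: random.Random) -> str:
    """Build one order log line. The layout is an assumption for illustration."""
    order_id = rng.randint(100000, 999999)
    product = rng.choice(PRODUCTS)
    amount = rng.randint(1, 500)
    return (
        f"{now:%Y-%m-%d %H:%M:%S} INFO order created "
        f"id={order_id} product={product} amount={amount}"
    )


def run(log_path: str, count: int, min_wait: float = 5.0, max_wait: float = 10.0) -> None:
    """Append `count` order lines to log_path, pausing 5-10 s after each by default."""
    rng = random.Random()
    with open(log_path, "a", encoding="utf-8") as log_file:
        for _ in range(count):
            log_file.write(make_order_line(datetime.now(), rng) + "\n")
            log_file.flush()  # make the line visible to the collector immediately
            time.sleep(rng.uniform(min_wait, max_wait))
```

Calling `run(path, count=3)` with a path under /docker/elastic/logstash_ingest_data/logs/ whose name matches log-test-*.log would, under these assumptions, produce entries that the collector described in the next section can pick up.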

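2. Configure /docker/elastic/logstash.conf

Notes:

- The path /usr/share/logstash/ingest_data/logs read in the configuration file is a path inside the Logstash container; it is bind-mounted from the host log directory /docker/elastic/logstash_ingest_data/logs.

- Logs are written to the Elasticsearch index log-test-%{+YYYY.MM.dd}, that is, one index per day.

- During collection, Logstash automatically adds metadata such as the timestamp (@timestamp) and the host name to each log event, so entries can be filtered and sorted by these fields in Kibana.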
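To make the daily index naming concrete, here is a small Python sketch that mimics how log-test-%{+YYYY.MM.dd} resolves for a given event time. It assumes, as is the usual Logstash behavior, that the date part is taken from the event's @timestamp in UTC; the function name and the +08:00 offset in the usage note are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(event_time: datetime, prefix: str = "log-test-") -> str:
    """Mimic log-test-%{+YYYY.MM.dd}: format the event time, converted to UTC, as YYYY.MM.dd.

    event_time must be timezone-aware; a naive datetime would be read as local time.
    """
    utc_time = event_time.astimezone(timezone.utc)
    return prefix + utc_time.strftime("%Y.%m.%d")
```

For example, an event stamped 2024-06-20 07:30 at a +08:00 offset is 2024-06-19 23:30 in UTC, so under this assumption it would land in log-test-2024.06.19 rather than log-test-2024.06.20. This is worth keeping in mind when a day's index seems to be missing its early-morning entries.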

Example configuration (skeleton):

    input {
      # Input plugin settings, e.g. file, beats
    }

    filter {
      # Filter plugin settings, e.g. grok, date, mutate
    }

    output {
      # Output plugin settings, e.g. elasticsearch, stdout
    }

Actual configuration:

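    input {
      file {
        # Container-side path (bind-mounted from the host log directory)
        path => "/usr/share/logstash/ingest_data/logs/log-test-*.log"
        # Read newly discovered files from the start instead of only tailing them
        start_position => "beginning"
      }
    }

    filter {
    }

    output {
      elasticsearch {
        index => "log-test-%{+YYYY.MM.dd}"
        hosts => "${ELASTIC_HOSTS}"
        user => "${ELASTIC_USER}"
        password => "${ELASTIC_PASSWORD}"
        cacert => "certs/ca/ca.crt"
      }
      # Also write a copy of each event to a file inside the container
      file {
        path => "/opt/mytest-%{+YYYY.MM.dd}.log"
      }
    }

Restart Logstash, and log collection begins.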

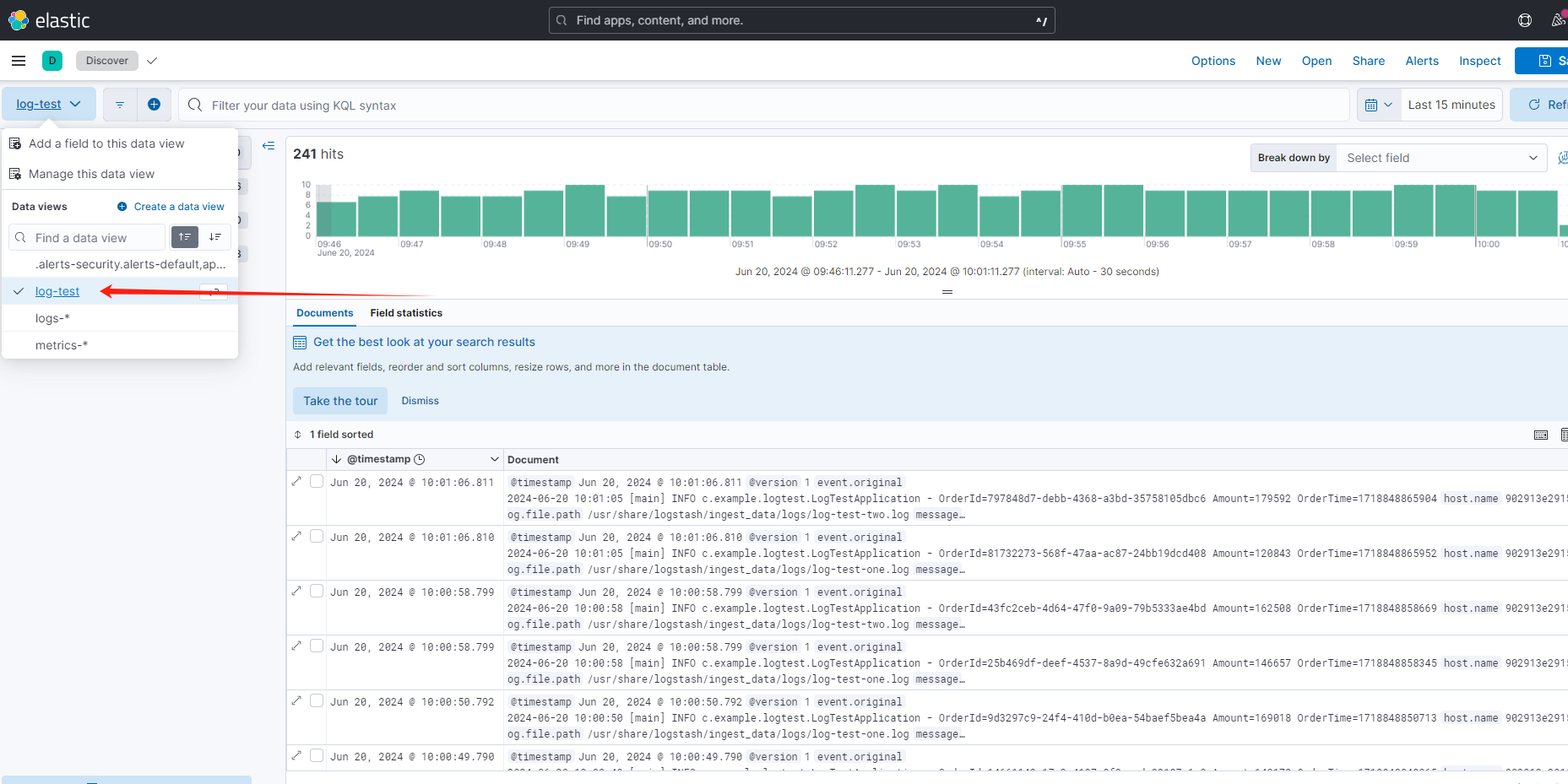

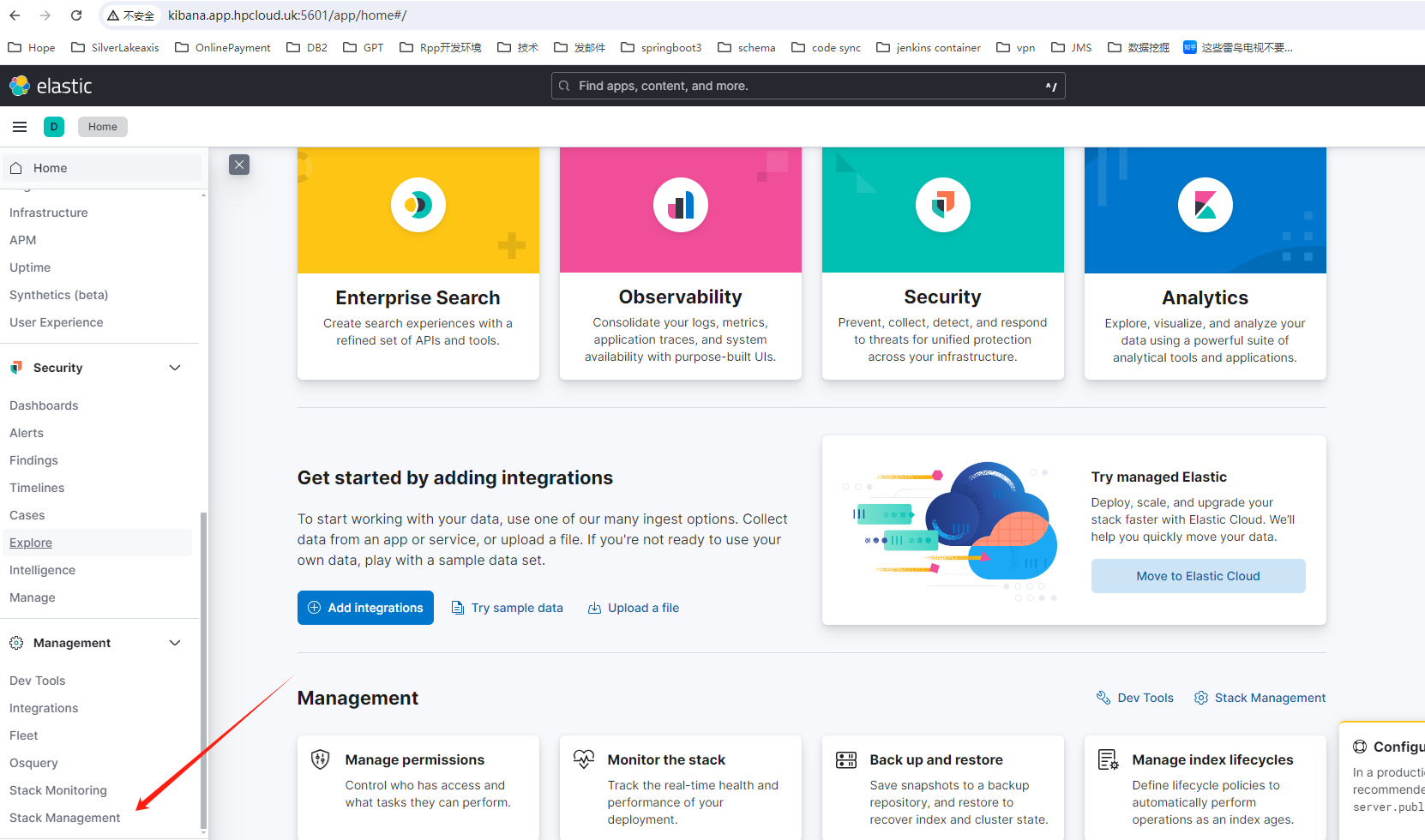

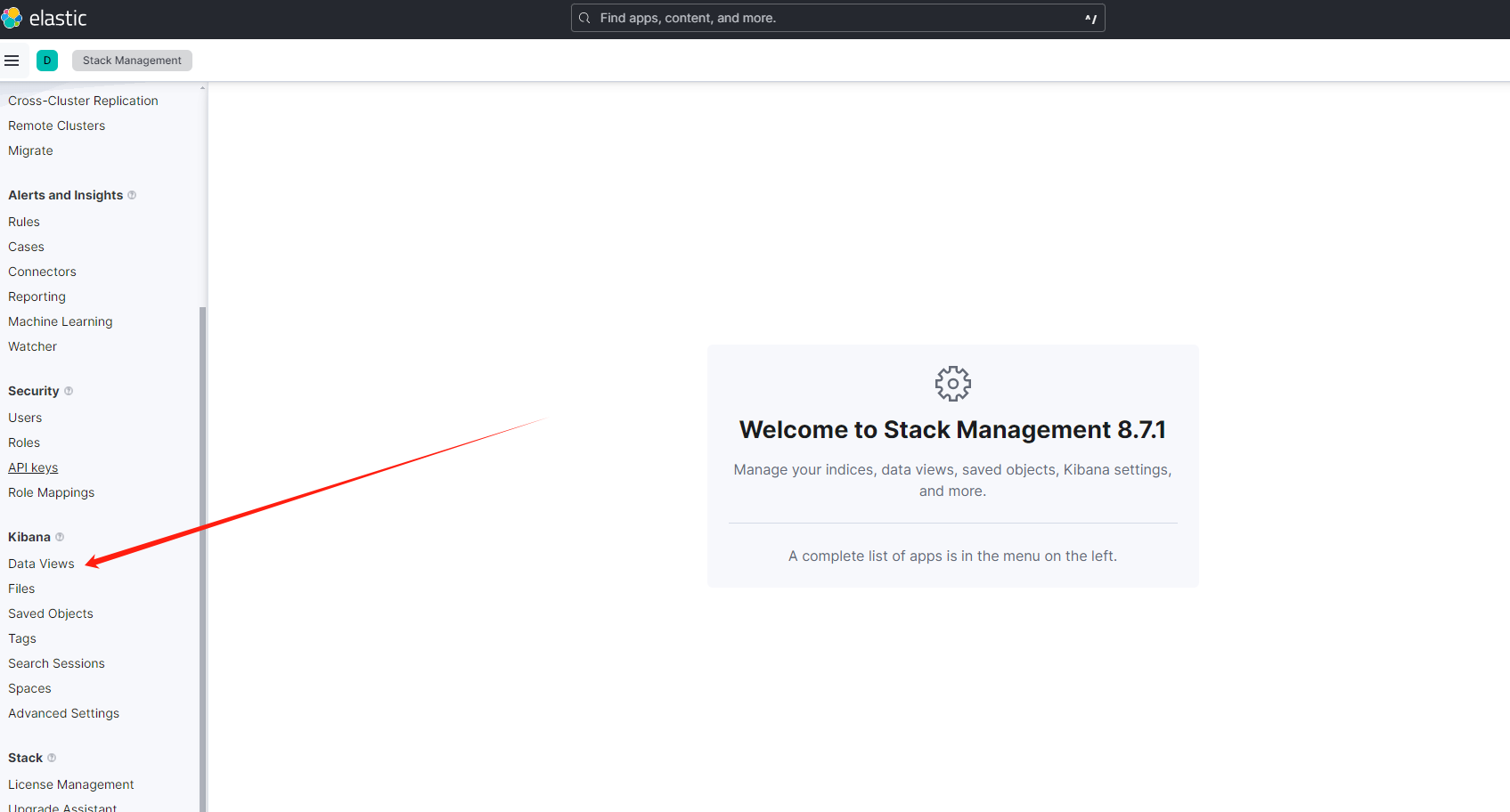

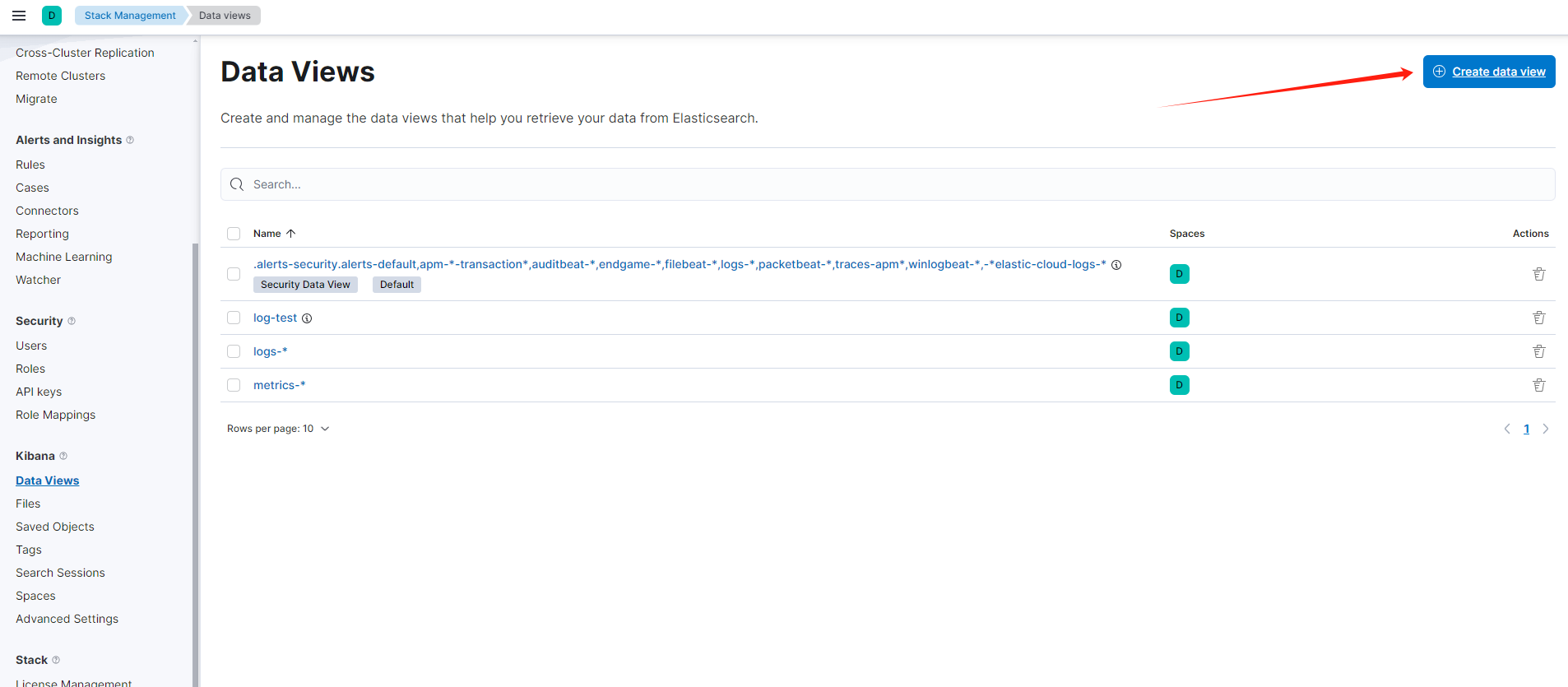

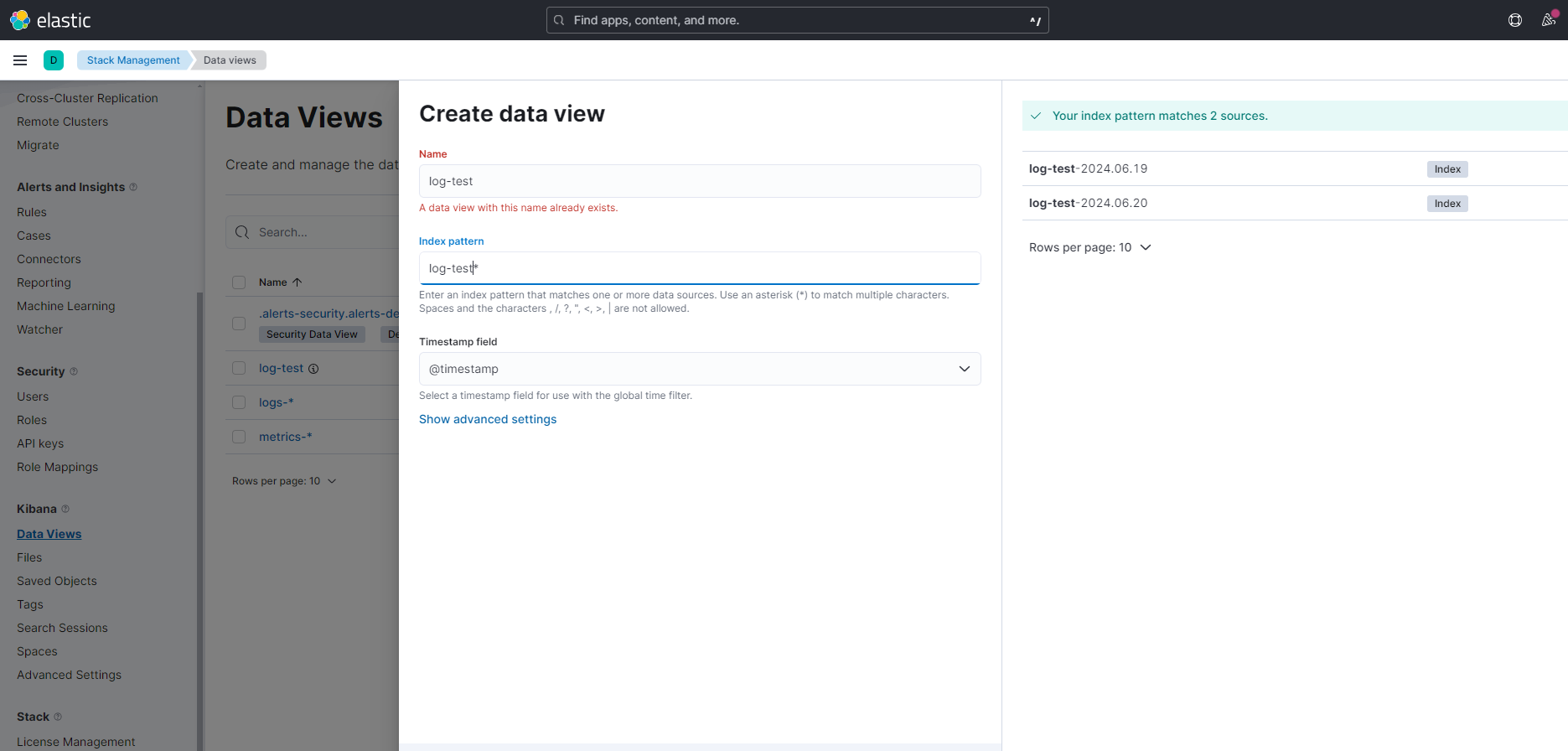
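The elasticsearch output relies on ${ELASTIC_HOSTS}, ${ELASTIC_USER}, and ${ELASTIC_PASSWORD} being resolvable when Logstash starts. The sketch below is one possible pre-restart check, not part of Logstash itself: it scans a config text for ${NAME} and ${NAME:default} references and lists those with no default and no matching environment variable. The function name is hypothetical, and the check ignores values stored in the Logstash keystore, so a name it reports may still resolve there.

```python
import os
import re

# Matches ${NAME} and ${NAME:default}; %{...} field references are not matched.
ENV_REF = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(:[^}]*)?\}")


def missing_env_vars(config_text: str, environ=None) -> list:
    """Return referenced variable names that have no default and are absent from environ."""
    env = os.environ if environ is None else environ
    missing = []
    for match in ENV_REF.finditer(config_text):
        name, default = match.group(1), match.group(2)
        if default is None and name not in env and name not in missing:
            missing.append(name)
    return missing
```

To be meaningful, the check has to run in the same environment as Logstash (here, inside the container), passing it the contents of logstash.conf; an empty list means every reference found has either a value or a default.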

3. Create a Data View in Kibana (set the index pattern to log-test*, which matches daily indices such as log-test-2024.06.20 and log-test-2024.06.19)

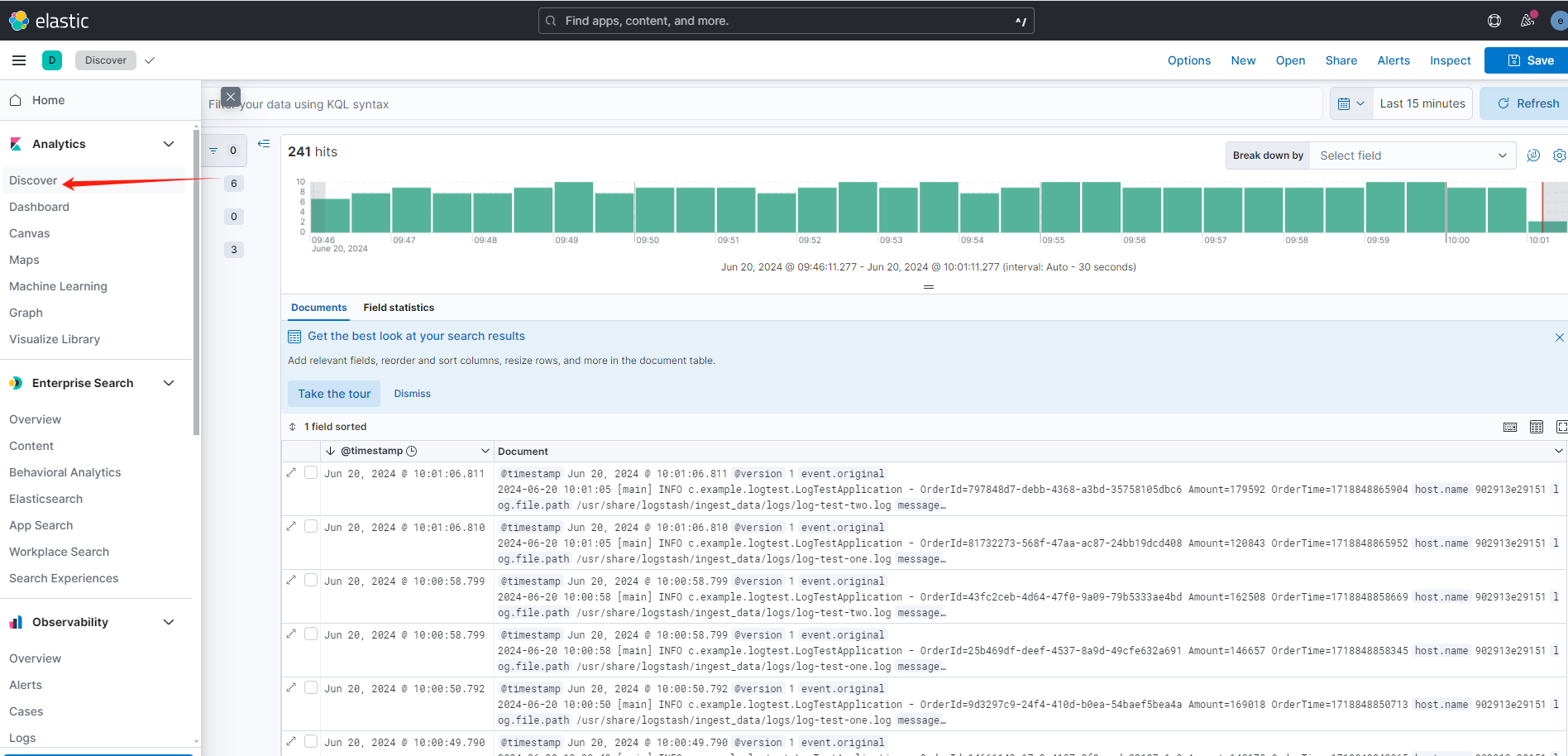
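As a rough illustration of what the log-test* pattern selects, the following Python sketch applies the same trailing-wildcard match to a list of index names. It only approximates Kibana's matching (Python's fnmatch also gives special meaning to `?` and `[...]`, which is not relied on here), and the function name and the non-matching index name in the usage note are made up for the example.

```python
from fnmatch import fnmatchcase


def matching_indices(pattern: str, index_names: list) -> list:
    """Return the index names that a wildcard pattern such as log-test* would select."""
    return [name for name in index_names if fnmatchcase(name, pattern)]
```

Given the names log-test-2024.06.19, log-test-2024.06.20, and a hypothetical other-2024.06.20, the pattern log-test* keeps the first two and drops the third, which is why each new daily index shows up in the Data View without further configuration.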

4. View the logs