1. Differences between NumPy arrays and PyTorch tensors

Sources:

1.Comparison between Pytorch Tensor and Numpy Array

4.Tensors for Neural Networks, Clearly Explained!!!

5.What is a Tensor in Machine Learning?

1.1 NumPy Array

A NumPy array can only hold elements of a single data type.
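As a minimal sketch of what the single-type rule means in practice (the variable names `ints` and `mixed` are illustrative, not from the sources): when the inputs mix integers and a float, NumPy promotes every element to one common dtype rather than storing mixed types.

```python
import numpy as np

# Integers only: NumPy picks one integer dtype for every element.
ints = np.array([1, 2, 3])

# Mixing ints with a float: all elements are promoted to float64.
mixed = np.array([1, 2.5, 3])

print(ints.dtype)   # a platform-dependent integer type
print(mixed.dtype)  # float64
```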

Create NumPy ndarray (1D array)

```python
import numpy as np

# Build a 1D array from a flat Python list.
arr_1D = np.array([1, 2, 3])
print(arr_1D)
```

Create NumPy ndarray (2D array)

```python
import numpy as np

# Build a 2D array from a list of equal-length rows.
arr_2D = np.array([[1, 2, 3], [1, 2, 3], [1, 2, 3]])
print(arr_2D)
```

Create NumPy ndarray (3D array)

```python
import numpy as np

# Build a 3D array from a list of 2D blocks (three 3x3 blocks).
arr_3D = np.array([
    [[1, 2, 3], [1, 2, 3], [1, 2, 3]],
    [[1, 2, 3], [1, 2, 3], [1, 2, 3]],
    [[1, 2, 3], [1, 2, 3], [1, 2, 3]],
])
print(arr_3D)
```
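To check how many dimensions each of these arrays has, one option is to inspect the `ndim` and `shape` attributes. The sketch below rebuilds the three arrays so that it runs on its own. Building `arr_3D` by stacking three copies of `arr_2D` is a shortcut chosen here for brevity, not something taken from the sources.

```python
import numpy as np

arr_1D = np.array([1, 2, 3])
arr_2D = np.array([[1, 2, 3], [1, 2, 3], [1, 2, 3]])
# Three identical 3x3 blocks give a 3x3x3 array.
arr_3D = np.array([arr_2D, arr_2D, arr_2D])

for name, arr in [("arr_1D", arr_1D), ("arr_2D", arr_2D), ("arr_3D", arr_3D)]:
    # ndim is the number of axes; shape is the length along each axis.
    print(name, arr.ndim, arr.shape)
```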

1.2 PyTorch Tensor

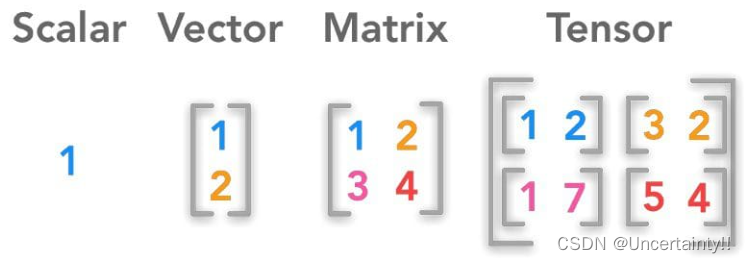

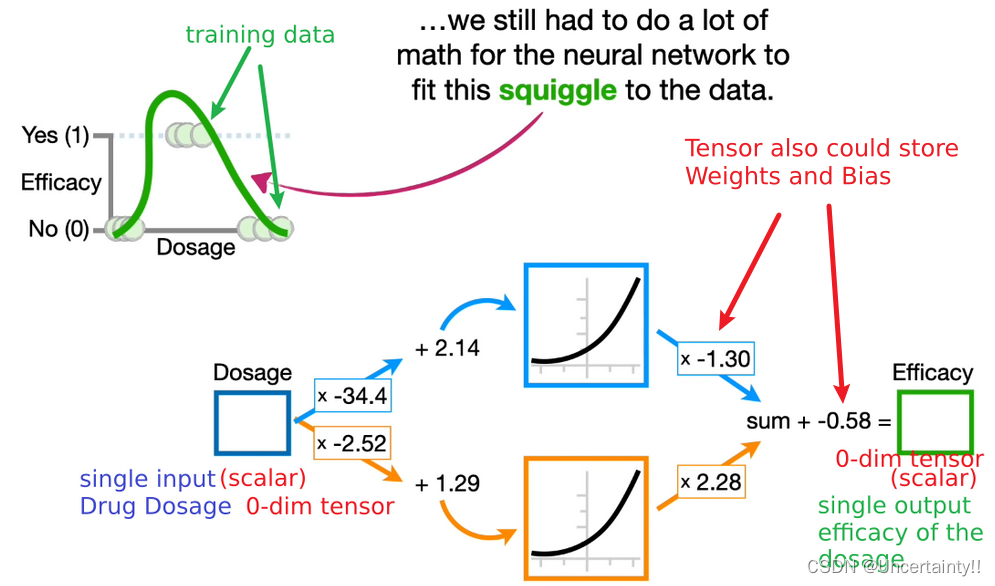

A torch.Tensor is a multi-dimensional matrix containing elements of a single data type.

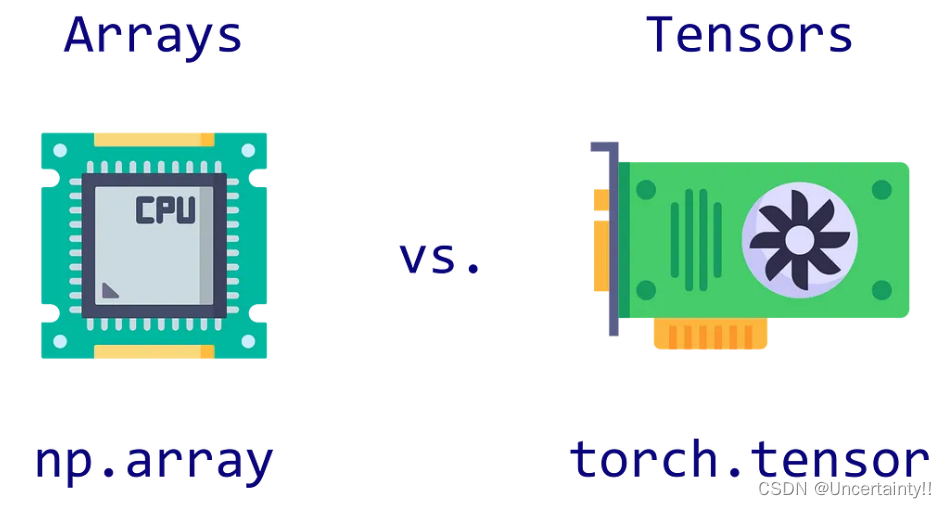

PyTorch tensors are similar to NumPy arrays, but they can also run on a CUDA-capable NVIDIA GPU.
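A minimal sketch of moving data between the two libraries, assuming PyTorch is installed. It falls back to the CPU when no CUDA device is found, so it should run on any machine. The names `arr`, `t_dev`, and `back` are illustrative.

```python
import numpy as np
import torch

arr = np.array([[1.0, 2.0], [3.0, 4.0]])

# from_numpy wraps the array as a CPU tensor that shares its memory.
t = torch.from_numpy(arr)

# Use the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
t_dev = t.to(device)

# The same matrix product runs on whichever device holds the tensor.
result = t_dev @ t_dev

# NumPy only works with CPU memory, so move the result back first.
back = result.cpu().numpy()
print(back)
```

The explicit `.cpu()` call matters only when the tensor is on a GPU. On a CPU-only machine it returns the tensor unchanged.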

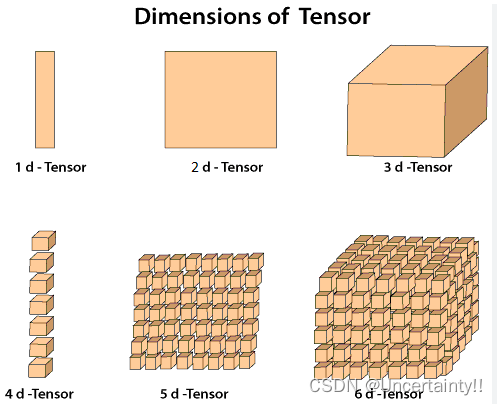

0-dimensional Tensor

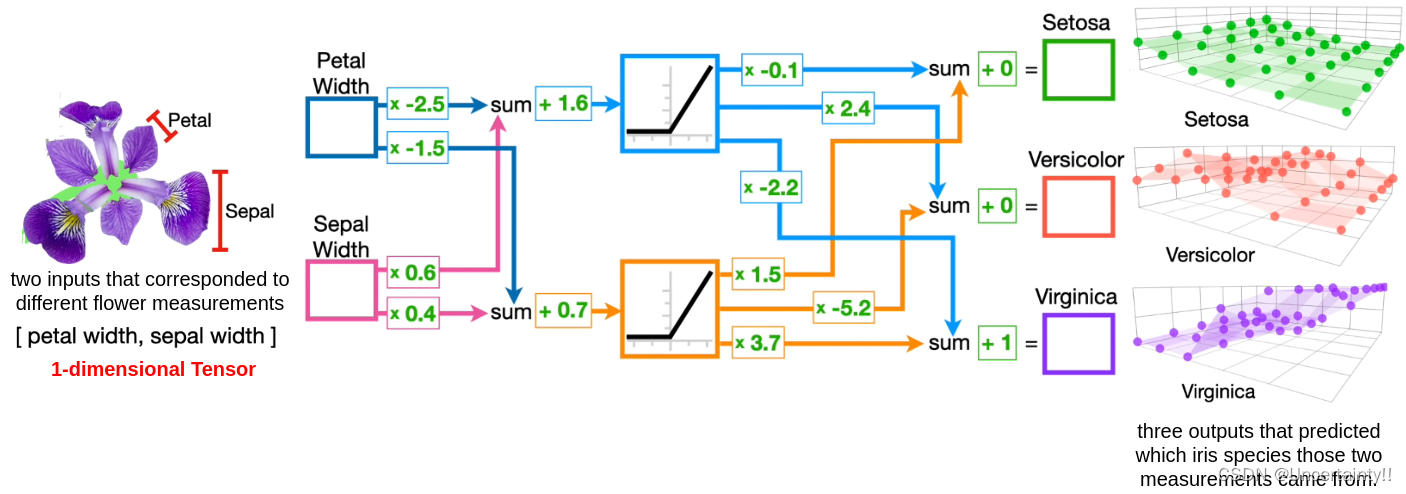

1-dimensional Tensor

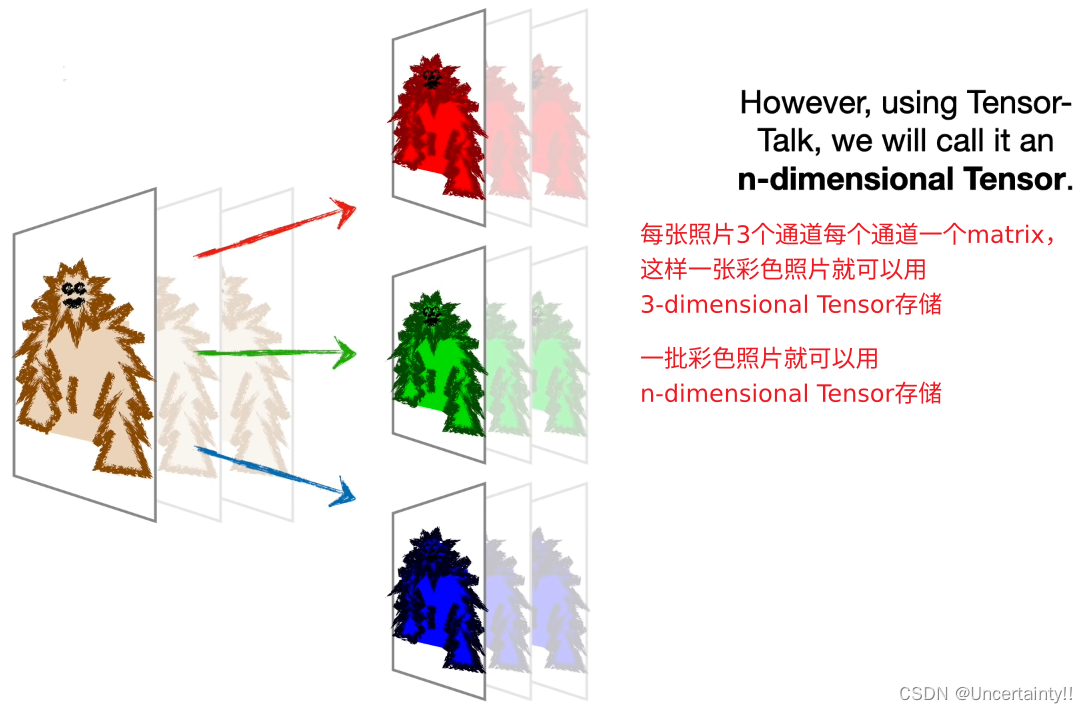

2-dimensional Tensor

n-dimensional Tensor

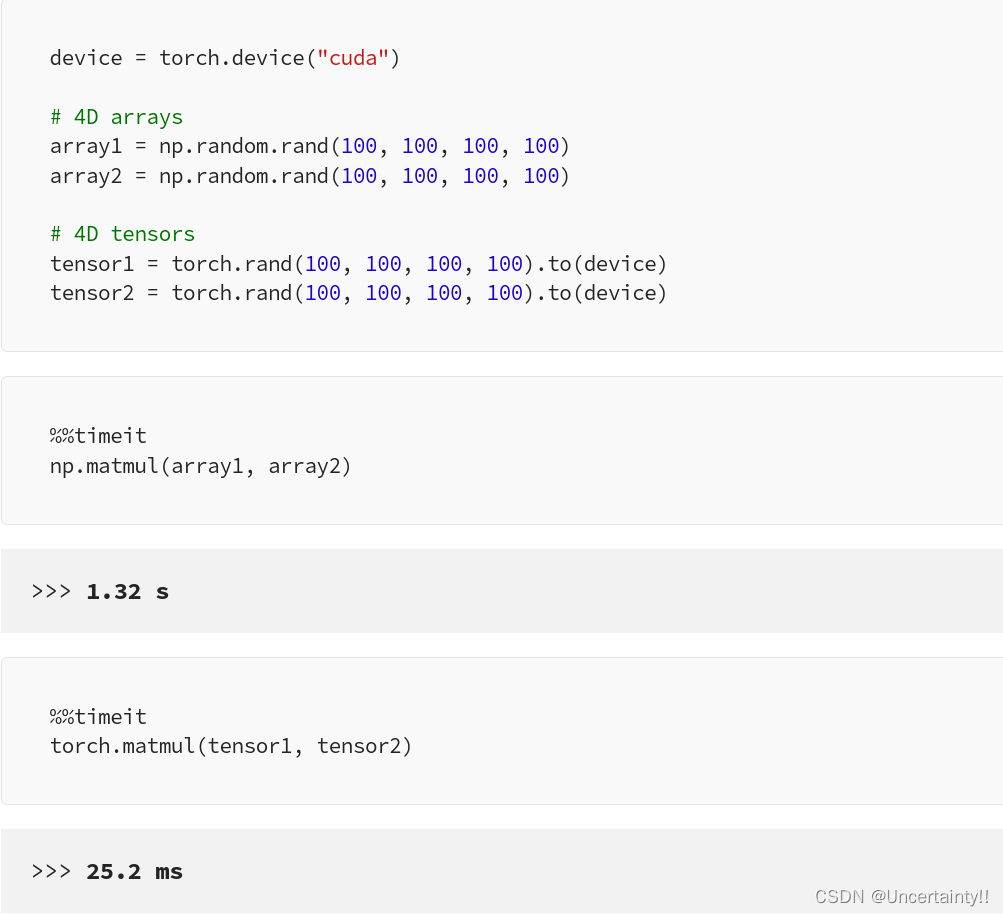
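The dimension levels above can be sketched in code as follows. The values and the names `scalar`, `vector`, `matrix`, and `cube` are illustrative, and the n-dimensional case is shown with n = 3.

```python
import torch

scalar = torch.tensor(7)                        # 0-dimensional: a single value
vector = torch.tensor([1, 2, 3])                # 1-dimensional: a list of values
matrix = torch.tensor([[1, 2, 3], [4, 5, 6]])   # 2-dimensional: rows and columns
cube = torch.zeros(2, 3, 4)                     # 3-dimensional: a stack of matrices

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # ndim counts the axes; shape gives the size along each axis.
    print(name, t.ndim, tuple(t.shape))
```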

1.3 Difference

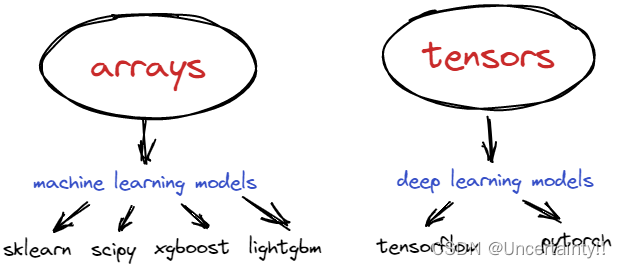

1. NumPy arrays are mainly used in classical machine learning algorithms (such as k-means or decision trees in scikit-learn), whereas PyTorch tensors are mainly used in deep learning, which requires heavy matrix computation.

2. The array is the core data structure of the NumPy package and is designed for fast mathematical operations. Unlike Python's built-in list, a NumPy array can only hold elements of a single data type. Libraries such as pandas, which is used for data preprocessing, are built around the NumPy array. PyTorch tensors are similar to NumPy arrays; the biggest difference is that a PyTorch tensor can run on either a CPU or a CUDA-capable NVIDIA GPU.

3. Unlike NumPy array creation, PyTorch tensor creation also accepts two additional arguments: device (whether the tensor is stored, and its computation happens, on the CPU or the GPU) and requires_grad (whether autograd should record operations on the tensor so that derivatives can be computed).
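The third point can be sketched as follows, assuming PyTorch is installed. In `torch.tensor` the placement argument is named `device`, and `requires_grad=True` asks autograd to track operations on the tensor. The function y = sum(x²) is an arbitrary example chosen here so that the gradient is easy to check by hand.

```python
import torch

# Use the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# device chooses where the tensor lives; requires_grad enables gradient tracking.
x = torch.tensor([1.0, 2.0, 3.0], device=device, requires_grad=True)

# y = x1^2 + x2^2 + x3^2, so dy/dx = 2x.
y = (x ** 2).sum()
y.backward()

print(x.device)
print(x.grad)  # the gradient 2x, i.e. the values 2, 4, 6
```

`np.array` has no counterpart to either argument: NumPy arrays always live in CPU memory and carry no gradient information.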