识别框架还是沿用之前的了MediaPipe Hand。

背景知识不摘重复,参见之前的:亚博microros小车-原生ubuntu支持系列:10-画笔-CSDN博客

手指控制

src/yahboom_esp32_mediapipe/yahboom_esp32_mediapipe/目录下新建文件10_HandCtrl.py,代码如下:

python

#!/usr/bin/env python3

# encoding: utf-8

import math

import time

import cv2 as cv

import numpy as np

import mediapipe as mp

import rclpy

from rclpy.node import Node

from cv_bridge import CvBridge

from sensor_msgs.msg import Image, CompressedImage

from rclpy.time import Time

import datetime

volPer = value = index = 0

effect = ["color", "thresh", "blur", "hue", "enhance"]

volBar = 400

class handDetector:

def __init__(self, mode=False, maxHands=2, detectorCon=0.5, trackCon=0.5):

self.tipIds = [4, 8, 12, 16, 20]

self.mpHand = mp.solutions.hands

self.mpDraw = mp.solutions.drawing_utils

self.hands = self.mpHand.Hands(#模型初始化

static_image_mode=mode,

max_num_hands=maxHands,

min_detection_confidence=detectorCon,

min_tracking_confidence=trackCon

)

self.lmDrawSpec = mp.solutions.drawing_utils.DrawingSpec(color=(0, 0, 255), thickness=-1, circle_radius=15)

self.drawSpec = mp.solutions.drawing_utils.DrawingSpec(color=(0, 255, 0), thickness=10, circle_radius=10)

#距离计算

def get_dist(self, point1, point2):

x1, y1 = point1

x2, y2 = point2

return abs(math.sqrt(math.pow(abs(y1 - y2), 2) + math.pow(abs(x1 - x2), 2)))

#计算角度

def calc_angle(self, pt1, pt2, pt3):

point1 = self.lmList[pt1][1], self.lmList[pt1][2]

point2 = self.lmList[pt2][1], self.lmList[pt2][2]

point3 = self.lmList[pt3][1], self.lmList[pt3][2]

a = self.get_dist(point1, point2)

b = self.get_dist(point2, point3)

c = self.get_dist(point1, point3)

try:#余弦定理

radian = math.acos((math.pow(a, 2) + math.pow(b, 2) - math.pow(c, 2)) / (2 * a * b))

angle = radian / math.pi * 180#弧度转角度

except:

angle = 0

return abs(angle)

def findHands(self, frame, draw=True):

img = np.zeros(frame.shape, np.uint8)

img_RGB = cv.cvtColor(frame, cv.COLOR_BGR2RGB)#图像格式转换

self.results = self.hands.process(img_RGB)#检测

if self.results.multi_hand_landmarks:

for handLms in self.results.multi_hand_landmarks:#输出关键点

if draw: self.mpDraw.draw_landmarks(img, handLms, self.mpHand.HAND_CONNECTIONS)

return img

def findPosition(self, frame, draw=True):

self.lmList = []

if self.results.multi_hand_landmarks:

for id, lm in enumerate(self.results.multi_hand_landmarks[0].landmark):

# print(id,lm)

h, w, c = frame.shape

cx, cy = int(lm.x * w), int(lm.y * h)

# print(id, lm.x, lm.y, lm.z)

self.lmList.append([id, cx, cy])#记录指点序号与坐标

if draw: cv.circle(frame, (cx, cy), 15, (0, 0, 255), cv.FILLED)

return self.lmList

def frame_combine(slef,frame, src):

if len(frame.shape) == 3:

frameH, frameW = frame.shape[:2]

srcH, srcW = src.shape[:2]

dst = np.zeros((max(frameH, srcH), frameW + srcW, 3), np.uint8)

dst[:, :frameW] = frame[:, :]

dst[:, frameW:] = src[:, :]

else:

src = cv.cvtColor(src, cv.COLOR_BGR2GRAY)

frameH, frameW = frame.shape[:2]

imgH, imgW = src.shape[:2]

dst = np.zeros((frameH, frameW + imgW), np.uint8)

dst[:, :frameW] = frame[:, :]

dst[:, frameW:] = src[:, :]

return dst

class MY_Picture(Node):

def __init__(self, name):

super().__init__(name)

self.bridge = CvBridge()

self.sub_img = self.create_subscription(

CompressedImage, '/espRos/esp32camera', self.handleTopic, 1) #获取esp32传来的图像

self.hand_detector = handDetector()

self.volPer = self.value = self.index = 0

self.effect = ["color", "thresh", "blur", "hue", "enhance"]

self.volBar = 400

self.last_stamp = None

self.new_seconds = 0

self.fps_seconds = 1

def handleTopic(self, msg):

self.last_stamp = msg.header.stamp

if self.last_stamp:

total_secs = Time(nanoseconds=self.last_stamp.nanosec, seconds=self.last_stamp.sec).nanoseconds

delta = datetime.timedelta(seconds=total_secs * 1e-9)

seconds = delta.total_seconds()*100

if self.new_seconds != 0:

self.fps_seconds = seconds - self.new_seconds

self.new_seconds = seconds#保留这次的值

start = time.time()

frame = self.bridge.compressed_imgmsg_to_cv2(msg)

frame = cv.resize(frame, (640, 480))

action = cv.waitKey(1) & 0xFF

img = self.hand_detector.findHands(frame)

lmList = self.hand_detector.findPosition(frame, draw=False)

if len(lmList) != 0:

angle = self.hand_detector.calc_angle(4, 0, 8)#计算拇指,0点,食指尖的角度

x1, y1 = lmList[4][1], lmList[4][2]

x2, y2 = lmList[8][1], lmList[8][2]

cx, cy = (x1 + x2) // 2, (y1 + y2) // 2

cv.circle(img, (x1, y1), 15, (255, 0, 255), cv.FILLED)

cv.circle(img, (x2, y2), 15, (255, 0, 255), cv.FILLED)

cv.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)

cv.circle(img, (cx, cy), 15, (255, 0, 255), cv.FILLED)

if angle <= 10: cv.circle(img, (cx, cy), 15, (0, 255, 0), cv.FILLED)

self.volBar = np.interp(angle, [0, 70], [400, 150])

self.volPer = np.interp(angle, [0, 70], [0, 100])

self.value = np.interp(angle, [0, 70], [0, 255])

# print("angle: {},self.value: {}".format(angle, self.value))

print(f'mode:{self.effect[self.index]}')

# 进行阈值二值化操作,大于阈值value的,使用255表示,小于阈值value的,使用0表示

if self.effect[self.index]=="thresh":

gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

frame = cv.threshold(gray, self.value, 255, cv.THRESH_BINARY)[1]

# 进行高斯滤波,(21, 21)表示高斯矩阵的长与宽都是21,标准差取value

elif self.effect[self.index]=="blur":

frame = cv.GaussianBlur(frame, (21, 21), np.interp(self.value, [0, 255], [0, 11]))

# 色彩空间的转化,HSV转换为BGR

elif self.effect[self.index]=="hue":

frame = cv.cvtColor(frame, cv.COLOR_BGR2HSV)

frame[:, :, 0] += int(self.value)

frame = cv.cvtColor(frame, cv.COLOR_HSV2BGR)

# 调节对比度

elif self.effect[self.index]=="enhance":

enh_val = self.value / 40

clahe = cv.createCLAHE(clipLimit=enh_val, tileGridSize=(8, 8))

lab = cv.cvtColor(frame, cv.COLOR_BGR2LAB)

lab[:, :, 0] = clahe.apply(lab[:, :, 0])

frame = cv.cvtColor(lab, cv.COLOR_LAB2BGR)

if action == ord('f'):

self.index += 1

if self.index >= len(self.effect): self.index = 0

end = time.time()

fps = 1 / ((end - start)+self.fps_seconds)

text = "FPS : " + str(int(fps))

cv.putText(frame, text, (20, 30), cv.FONT_HERSHEY_SIMPLEX, 0.9, (0, 0, 255), 1)

cv.rectangle(img, (50, 150), (85, 400), (255, 0, 0), 3)

cv.rectangle(img, (50, int(self.volBar)), (85, 400), (0, 255, 0), cv.FILLED)

cv.putText(img, f'{int(self.volPer)}%', (40, 450), cv.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 3)

dst = self.hand_detector.frame_combine(frame, img)

cv.imshow('dst', dst)

def main():

print("start it")

rclpy.init()

esp_img = MY_Picture("My_Picture")

try:

rclpy.spin(esp_img)

except KeyboardInterrupt:

pass

finally:

esp_img.destroy_node()

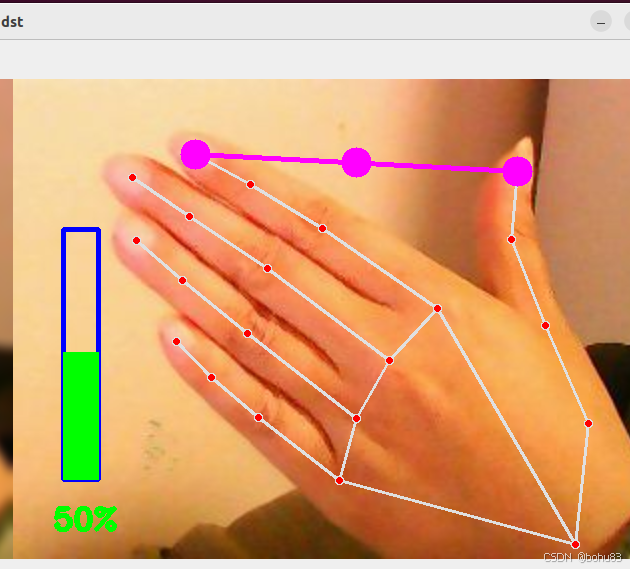

rclpy.shutdown()订阅esp32传出来的图像后,通过MediaPipe去做相关的识别后,再通过记录手指的点坐标,计算角4-0-8之间度数。本节与之前不同,增加了opencv输出的格式,"color", "thresh", "blur", "hue", "enhance"。默认是color,还有阈值化输出,高斯模糊等其他效果。按F键切换

构建后运行:

ros2 run yahboom_esp32_mediapipe HandCtrl

效果如下:

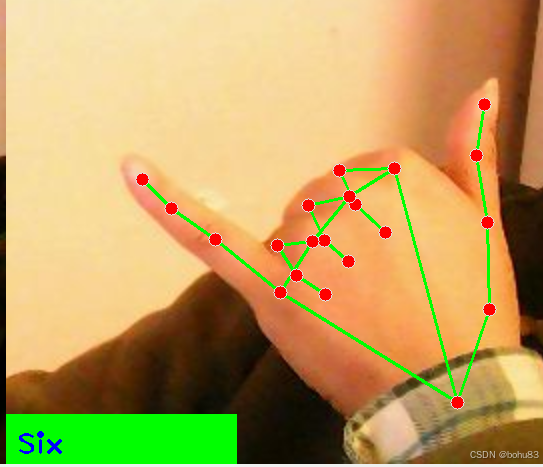

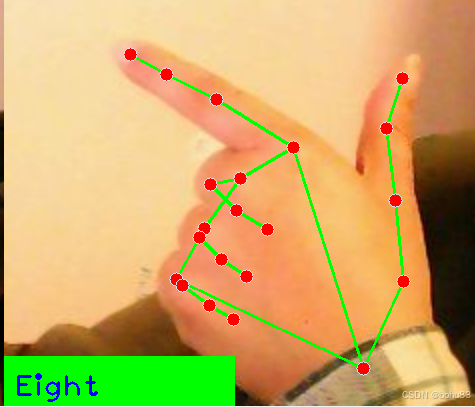

手势识别

src/yahboom_esp32_mediapipe/yahboom_esp32_mediapipe/目录下新建文件11_GestureRecognition.py,代码如下

python

#!/usr/bin/env python3

# encoding: utf-8

import math

import time

import cv2 as cv

import numpy as np

import mediapipe as mp

import rclpy

from rclpy.node import Node

from cv_bridge import CvBridge

from sensor_msgs.msg import Image, CompressedImage

from rclpy.time import Time

import datetime

class handDetector:

def __init__(self, mode=False, maxHands=2, detectorCon=0.5, trackCon=0.5):

self.tipIds = [4, 8, 12, 16, 20]

self.mpHand = mp.solutions.hands

self.mpDraw = mp.solutions.drawing_utils

self.hands = self.mpHand.Hands(

static_image_mode=mode,

max_num_hands=maxHands,

min_detection_confidence=detectorCon,

min_tracking_confidence=trackCon

)

self.lmList = []

self.lmDrawSpec = mp.solutions.drawing_utils.DrawingSpec(color=(0, 0, 255), thickness=-1, circle_radius=6)

self.drawSpec = mp.solutions.drawing_utils.DrawingSpec(color=(0, 255, 0), thickness=2, circle_radius=2)

def get_dist(self, point1, point2):

x1, y1 = point1

x2, y2 = point2

return abs(math.sqrt(math.pow(abs(y1 - y2), 2) + math.pow(abs(x1 - x2), 2)))

def calc_angle(self, pt1, pt2, pt3):

point1 = self.lmList[pt1][1], self.lmList[pt1][2]

point2 = self.lmList[pt2][1], self.lmList[pt2][2]

point3 = self.lmList[pt3][1], self.lmList[pt3][2]

a = self.get_dist(point1, point2)

b = self.get_dist(point2, point3)

c = self.get_dist(point1, point3)

try:

radian = math.acos((math.pow(a, 2) + math.pow(b, 2) - math.pow(c, 2)) / (2 * a * b))

angle = radian / math.pi * 180

except:

angle = 0

return abs(angle)

def findHands(self, frame, draw=True):

self.lmList = []

img = np.zeros(frame.shape, np.uint8)

img_RGB = cv.cvtColor(frame, cv.COLOR_BGR2RGB)

self.results = self.hands.process(img_RGB)

if self.results.multi_hand_landmarks:

for i in range(len(self.results.multi_hand_landmarks)):

if draw: self.mpDraw.draw_landmarks(frame, self.results.multi_hand_landmarks[i], self.mpHand.HAND_CONNECTIONS, self.lmDrawSpec, self.drawSpec)

self.mpDraw.draw_landmarks(img, self.results.multi_hand_landmarks[i], self.mpHand.HAND_CONNECTIONS, self.lmDrawSpec, self.drawSpec)

for id, lm in enumerate(self.results.multi_hand_landmarks[i].landmark):

h, w, c = frame.shape

cx, cy = int(lm.x * w), int(lm.y * h)

self.lmList.append([id, cx, cy])

return frame, img

def frame_combine(slef,frame, src):

if len(frame.shape) == 3:

frameH, frameW = frame.shape[:2]

srcH, srcW = src.shape[:2]

dst = np.zeros((max(frameH, srcH), frameW + srcW, 3), np.uint8)

dst[:, :frameW] = frame[:, :]

dst[:, frameW:] = src[:, :]

else:

src = cv.cvtColor(src, cv.COLOR_BGR2GRAY)

frameH, frameW = frame.shape[:2]

imgH, imgW = src.shape[:2]

dst = np.zeros((frameH, frameW + imgW), np.uint8)

dst[:, :frameW] = frame[:, :]

dst[:, frameW:] = src[:, :]

return dst

def fingersUp(self):

fingers=[]

# Thumb

if (self.calc_angle(self.tipIds[0],

self.tipIds[0] - 1,

self.tipIds[0] - 2) > 150.0) and (

self.calc_angle(

self.tipIds[0] - 1,

self.tipIds[0] - 2,

self.tipIds[0] - 3) > 150.0): fingers.append(1)

else:

fingers.append(0)

# 4 finger

for id in range(1, 5):

if self.lmList[self.tipIds[id]][2] < self.lmList[self.tipIds[id] - 2][2]:

fingers.append(1)

else:

fingers.append(0)

return fingers

def get_gesture(self):

gesture = ""

fingers = self.fingersUp()

if self.lmList[self.tipIds[0]][2] > self.lmList[self.tipIds[1]][2] and \

self.lmList[self.tipIds[0]][2] > self.lmList[self.tipIds[2]][2] and \

self.lmList[self.tipIds[0]][2] > self.lmList[self.tipIds[3]][2] and \

self.lmList[self.tipIds[0]][2] > self.lmList[self.tipIds[4]][2] : gesture = "Thumb_down"

elif self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[1]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[2]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[3]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[4]][2] and \

self.calc_angle(self.tipIds[1] - 1, self.tipIds[1] - 2, self.tipIds[1] - 3) < 150.0 : gesture = "Thumb_up"

if fingers.count(1) == 3 or fingers.count(1) == 4:

if fingers[0] == 1 and (

self.get_dist(self.lmList[4][1:], self.lmList[8][1:])<self.get_dist(self.lmList[4][1:], self.lmList[5][1:])

): gesture = "OK"

elif fingers[2] == fingers[3] == 0: gesture = "Rock"

elif fingers.count(1) == 3: gesture = "Three"

else: gesture = "Four"

elif fingers.count(1) == 0: gesture = "Zero"

elif fingers.count(1) == 1: gesture = "One"

elif fingers.count(1) == 2:

if fingers[0] == 1 and fingers[4] == 1: gesture = "Six"

elif fingers[0] == 1 and self.calc_angle(4, 5, 8) > 90: gesture = "Eight"

elif fingers[0] == fingers[1] == 1 and self.get_dist(self.lmList[4][1:], self.lmList[8][1:]) < 50: gesture = "Heart_single"

else: gesture = "Two"

elif fingers.count(1)==5:gesture = "Five"

if self.get_dist(self.lmList[4][1:], self.lmList[8][1:]) < 60 and \

self.get_dist(self.lmList[4][1:], self.lmList[12][1:]) < 60 and \

self.get_dist(self.lmList[4][1:], self.lmList[16][1:]) < 60 and \

self.get_dist(self.lmList[4][1:], self.lmList[20][1:]) < 60 : gesture = "Seven"

if self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[1]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[2]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[3]][2] and \

self.lmList[self.tipIds[0]][2] < self.lmList[self.tipIds[4]][2] and \

self.calc_angle(self.tipIds[1] - 1, self.tipIds[1] - 2, self.tipIds[1] - 3) > 150.0 : gesture = "Eight"

return gesture

class MY_Picture(Node):

def __init__(self, name):

super().__init__(name)

self.bridge = CvBridge()

self.sub_img = self.create_subscription(

CompressedImage, '/espRos/esp32camera', self.handleTopic, 1) #获取esp32传来的图像

self.hand_detector = handDetector(detectorCon=0.75)

self.last_stamp = None

self.new_seconds = 0

self.fps_seconds = 1

def handleTopic(self, msg):

self.last_stamp = msg.header.stamp

if self.last_stamp:

total_secs = Time(nanoseconds=self.last_stamp.nanosec, seconds=self.last_stamp.sec).nanoseconds

delta = datetime.timedelta(seconds=total_secs * 1e-9)

seconds = delta.total_seconds()*100

if self.new_seconds != 0:

self.fps_seconds = seconds - self.new_seconds

self.new_seconds = seconds#保留这次的值

start = time.time()

frame = self.bridge.compressed_imgmsg_to_cv2(msg)

frame = cv.resize(frame, (640, 480))

cv.waitKey(1)

frame, img = self.hand_detector.findHands(frame, draw=False)

if len(self.hand_detector.lmList) != 0:

totalFingers = self.hand_detector.get_gesture()

cv.rectangle(frame, (0, 430), (230, 480), (0, 255, 0), cv.FILLED)

cv.putText(frame, str(totalFingers), (10, 470), cv.FONT_HERSHEY_PLAIN, 2, (255, 0, 0), 2)

end = time.time()

fps = 1 / ((end - start)+self.fps_seconds)

text = "FPS : " + str(int(fps))

cv.putText(frame, text, (20, 30), cv.FONT_HERSHEY_SIMPLEX, 0.9, (0, 0, 255), 1)

dist = self.hand_detector.frame_combine(frame, img)

cv.imshow('dist', dist)

'''

Zero One Two Three Four Five Six Seven Eight

Ok: OK

Rock: rock

Thumb_up : 点赞

Thumb_down: 拇指向下

Heart_single: 单手比心

'''

def main():

print("start it")

rclpy.init()

esp_img = MY_Picture("My_Picture")

try:

rclpy.spin(esp_img)

except KeyboardInterrupt:

pass

finally:

esp_img.destroy_node()

rclpy.shutdown()网上有不少这个例子,差异点可能在手势识别哪里,前面的hand模型都是一样的。

根据你预计的指点判断角度或者漏出的手指组合判断含义。有的也不太准确,大部分能识别。

构建后运行:ros2 run yahboom_esp32_mediapipe GestureRecognition