Hi everyone, I'm Edison.

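Microsoft released its new core AI library, Microsoft.Extensions.AI, back in November 2024. It is still in preview, but it can greatly simplify AI integration and development work.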

Introducing Microsoft.Extensions.AI

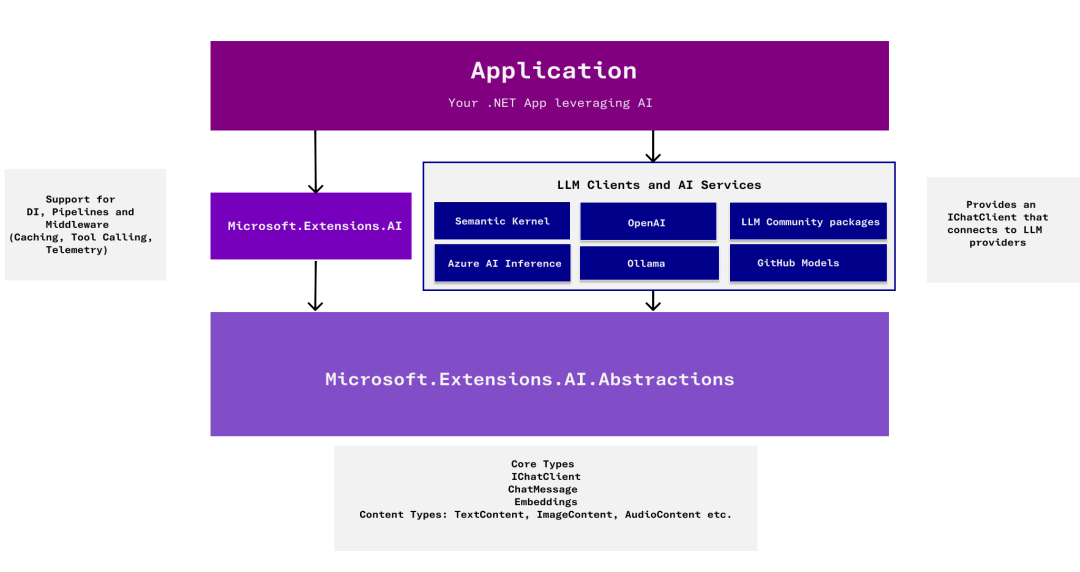

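Microsoft.Extensions.AI is a set of core .NET libraries created in collaboration with developers across the .NET ecosystem, including Semantic Kernel. These libraries provide a unified C# abstraction layer for interacting with AI services such as small and large language models (SLMs and LLMs), embeddings, and middleware.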

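Microsoft.Extensions.AI provides abstractions that can be implemented by various services, all following the same core concepts. The library does not aim to provide APIs tailored to any specific provider's services.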

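The goal of Microsoft.Extensions.AI is to act as a unifying layer within the .NET ecosystem, letting developers choose their preferred frameworks and libraries while ensuring seamless integration and collaboration across the whole ecosystem.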
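To make the idea of a unifying layer concrete, here is a minimal, dependency-free sketch. It does not use Microsoft.Extensions.AI at all: it models a chat client as a hypothetical delegate `Func<string, Task<string>>` (the real library uses the `IChatClient` interface) and plugs in two fake providers, to show that code written against one abstraction can switch providers without changing.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical abstraction: a chat client is "prompt in, reply out".
// (Microsoft.Extensions.AI uses the IChatClient interface; this delegate is only a stand-in.)
Func<string, Task<string>> fakeOpenAI = prompt => Task.FromResult($"[openai] {prompt}");
Func<string, Task<string>> fakeOllama = prompt => Task.FromResult($"[ollama] {prompt}");

// Application code depends only on the abstraction, never on a concrete provider.
static Task<string> AskAsync(Func<string, Task<string>> chat, string question) => chat(question);

// Which provider is used becomes a configuration choice rather than a code change.
var providers = new Dictionary<string, Func<string, Task<string>>>
{
    ["openai"] = fakeOpenAI,
    ["ollama"] = fakeOllama,
};

foreach (var (name, provider) in providers)
{
    var reply = await AskAsync(provider, "Who are you?");
    Console.WriteLine($"{name}: {reply}");
}
```

The application logic (`AskAsync`) never mentions a provider; only the dictionary does, which is the same separation the library aims for.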

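Aside: developers can save time and focus on implementing their application's business logic instead of spending too much time integrating and debugging AI services. A big thumbs-up!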

Which service implementations can I use?

Microsoft.Extensions.AI provides implementations for the following services via NuGet packages:

- OpenAI

- Azure OpenAI

- Azure AI Inference

- Ollama

In the future, the implementations of these abstractions will all become part of the respective client libraries.

Basic usage

Install the NuGet packages:

Microsoft.Extensions.AI (9.1.0-preview)

Microsoft.Extensions.AI.OpenAI (9.1.0-preview)

Here we use the DeepSeek-R1-Distill-Llama-8B model provided by SiliconCloud, a distilled model based on Llama-3.1-8B and built using DeepSeek-R1. It is free and works well.

Sign up for SiliconCloud: https://cloud.siliconflow.cn/i/DomqCefW

A simple conversation:

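using System.ClientModel;
using Microsoft.Extensions.AI;
using OpenAI;

// SiliconCloud exposes an OpenAI-compatible endpoint
var openAIClientOptions = new OpenAIClientOptions();
openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

var client = new OpenAIClient(new ApiKeyCredential("sk-xxxxxxxxxx"), openAIClientOptions);

// Wrap the OpenAI client as a provider-agnostic IChatClient
var chatClient = client.AsChatClient("deepseek-ai/DeepSeek-R1-Distill-Llama-8B");

var response = await chatClient.CompleteAsync("Who are you?");
Console.WriteLine(response.Message);

The wrapped IChatClient object hides the differences between providers, which makes it very convenient to use.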
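Because every provider sits behind the same abstraction, cross-cutting behavior can be layered around any client without touching provider code; Microsoft.Extensions.AI's `ChatClientBuilder` offers steps such as `UseDistributedCache` and `UseOpenTelemetry` for this. The sketch below only illustrates the idea: it reuses a hypothetical delegate shape instead of the real `IChatClient`, and `UseCache` is a made-up helper with an in-memory cache.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical inner client that counts how often the "backend" is actually hit.
int backendCalls = 0;
Func<string, Task<string>> inner = prompt =>
{
    backendCalls++;
    return Task.FromResult($"answer to: {prompt}");
};

// Hypothetical middleware: returns a client of the same shape that serves
// repeated prompts from an in-memory cache instead of calling the next client.
static Func<string, Task<string>> UseCache(Func<string, Task<string>> next)
{
    var cache = new ConcurrentDictionary<string, string>();
    return async prompt =>
    {
        if (cache.TryGetValue(prompt, out var cached))
            return cached;
        var reply = await next(prompt);
        cache[prompt] = reply;
        return reply;
    };
}

// Callers use the wrapped client exactly like the original one.
var chat = UseCache(inner);
Console.WriteLine(await chat("Who are you?"));
Console.WriteLine(await chat("Who are you?")); // served from the cache
Console.WriteLine($"backend calls: {backendCalls}"); // backend calls: 1
```

Each wrapper only needs to know the shared shape, so several such steps can be stacked in any order, which is the design the builder pipeline relies on.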

Function calling

To implement function calling, a few adjustments are needed:

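using System.ClientModel;
using System.ComponentModel;
using Microsoft.Extensions.AI;
using OpenAI;

var openAIClientOptions = new OpenAIClientOptions();
openAIClientOptions.Endpoint = new Uri("https://api.siliconflow.cn/v1");

// The [Description] text tells the model what this tool does
[Description("Get the current time")]
string GetCurrentTime() => DateTime.Now.ToString();

var innerClient = new OpenAIClient(new ApiKeyCredential("sk-xxxxxxxxx"), openAIClientOptions)
    .AsChatClient("deepseek-ai/DeepSeek-R1-Distill-Llama-8B");

// Wrap the provider client in a pipeline that automatically invokes requested tools
var client = new ChatClientBuilder(innerClient)
    .UseFunctionInvocation()
    .Build();

// Register the local function as a tool for this request
var response = await client.CompleteAsync(
    "What's the time now?",
    new() { Tools = [AIFunctionFactory.Create(GetCurrentTime)] });

Console.Write(response);

As you can see, all we need to do is explicitly call UseFunctionInvocation and supply the Tools list, and the model can then use the tools we have wrapped.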
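Conceptually, the function-invocation step runs a loop on the caller's behalf: send the messages together with the available tools; if the model replies with a tool call, run the matching .NET method, append its result, and ask again until a plain answer comes back. The sketch below imitates that loop with a scripted fake model. The `CALL:` reply format, `FakeModel`, and the tool registry are all hypothetical; real providers return structured tool-call content, and the library's own implementation differs in detail.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Reflection;

// A tool declared the way the article does it: a method plus a [Description].
[Description("Get the current time")]
static string GetCurrentTime() => DateTime.Now.ToString();

// Hypothetical tool registry: name -> (description for the model, delegate to run).
Func<string> getTime = GetCurrentTime;
var tools = new Dictionary<string, (string Description, Func<string> Run)>
{
    ["GetCurrentTime"] = (getTime.Method.GetCustomAttribute<DescriptionAttribute>()?.Description ?? "", getTime),
};

// Hypothetical scripted model: requests the tool if it was offered, then answers from its result.
// Real providers return structured tool-call content, not a "CALL:" string.
static string FakeModel(List<(string Role, string Text)> messages, ICollection<string> offeredTools)
{
    var last = messages[^1];
    if (last.Role == "tool")
        return $"It is {last.Text}.";
    return offeredTools.Contains("GetCurrentTime") ? "CALL:GetCurrentTime" : "I can't tell the time.";
}

// The loop a function-invocation step runs for the caller.
static string CompleteWithTools(
    List<(string Role, string Text)> messages,
    Dictionary<string, (string Description, Func<string> Run)> tools,
    int maxRounds = 5)
{
    for (var round = 0; round < maxRounds; round++)
    {
        var reply = FakeModel(messages, tools.Keys);
        if (!reply.StartsWith("CALL:"))
            return reply; // a plain answer ends the loop

        var toolName = reply["CALL:".Length..];
        messages.Add(("assistant", reply));
        // Run the requested .NET method and feed its result back as a "tool" message.
        messages.Add(("tool", tools[toolName].Run()));
    }
    throw new InvalidOperationException("Too many tool-call rounds.");
}

var history = new List<(string Role, string Text)> { ("user", "What's the time now?") };
Console.WriteLine(CompleteWithTools(history, tools));
```

The `maxRounds` cap is a design choice worth keeping in any such loop: it stops a model that keeps requesting tools from looping forever.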

Using multiple models

Often we want the chat entry point to use one model and the business processing to use another, and we can configure each of them independently.
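The article reads each model's settings through `IConfiguration` (for example `config["MainChatModel:ModelId"]`). As a dependency-free sketch of the same idea, the snippet below parses a configuration with the same section names using System.Text.Json and maps each role to its own model. The role names `chat` and `summary` and the `ReadModel` helper are hypothetical, and the keys are placeholders. Note that appsettings-style `//` comments must be skipped explicitly when parsing with System.Text.Json.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Same shape as the article's appsettings.json, including // comments (keys are placeholders).
var json = """
    {
      // For Paper Summary
      "PaperSummaryModel": {
        "ModelId": "deepseek-ai/DeepSeek-R1-Distill-Llama-8B",
        "ApiKey": "sk-xxxxxxxxxx",
        "EndPoint": "https://api.siliconflow.cn/v1"
      },
      // For Main Chat
      "MainChatModel": {
        "ModelId": "Qwen/Qwen2.5-7B-Instruct",
        "ApiKey": "sk-xxxxxxxxxx",
        "EndPoint": "https://api.siliconflow.cn/v1"
      }
    }
    """;

// System.Text.Json rejects comments unless told to skip them.
var options = new JsonDocumentOptions { CommentHandling = JsonCommentHandling.Skip };
using var doc = JsonDocument.Parse(json, options);

// Hypothetical helper: read one model section into a (ModelId, Endpoint) pair.
static (string ModelId, Uri Endpoint) ReadModel(JsonElement root, string section)
{
    var s = root.GetProperty(section);
    return (s.GetProperty("ModelId").GetString()!, new Uri(s.GetProperty("EndPoint").GetString()!));
}

// Each role gets its own, independently configured model.
var models = new Dictionary<string, (string ModelId, Uri Endpoint)>
{
    ["chat"] = ReadModel(doc.RootElement, "MainChatModel"),
    ["summary"] = ReadModel(doc.RootElement, "PaperSummaryModel"),
};

foreach (var (role, model) in models)
    Console.WriteLine($"{role}: {model.ModelId} @ {model.Endpoint}");
```

Swapping the summary model then only means editing one section of the file; the code that picks a model by role stays the same.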

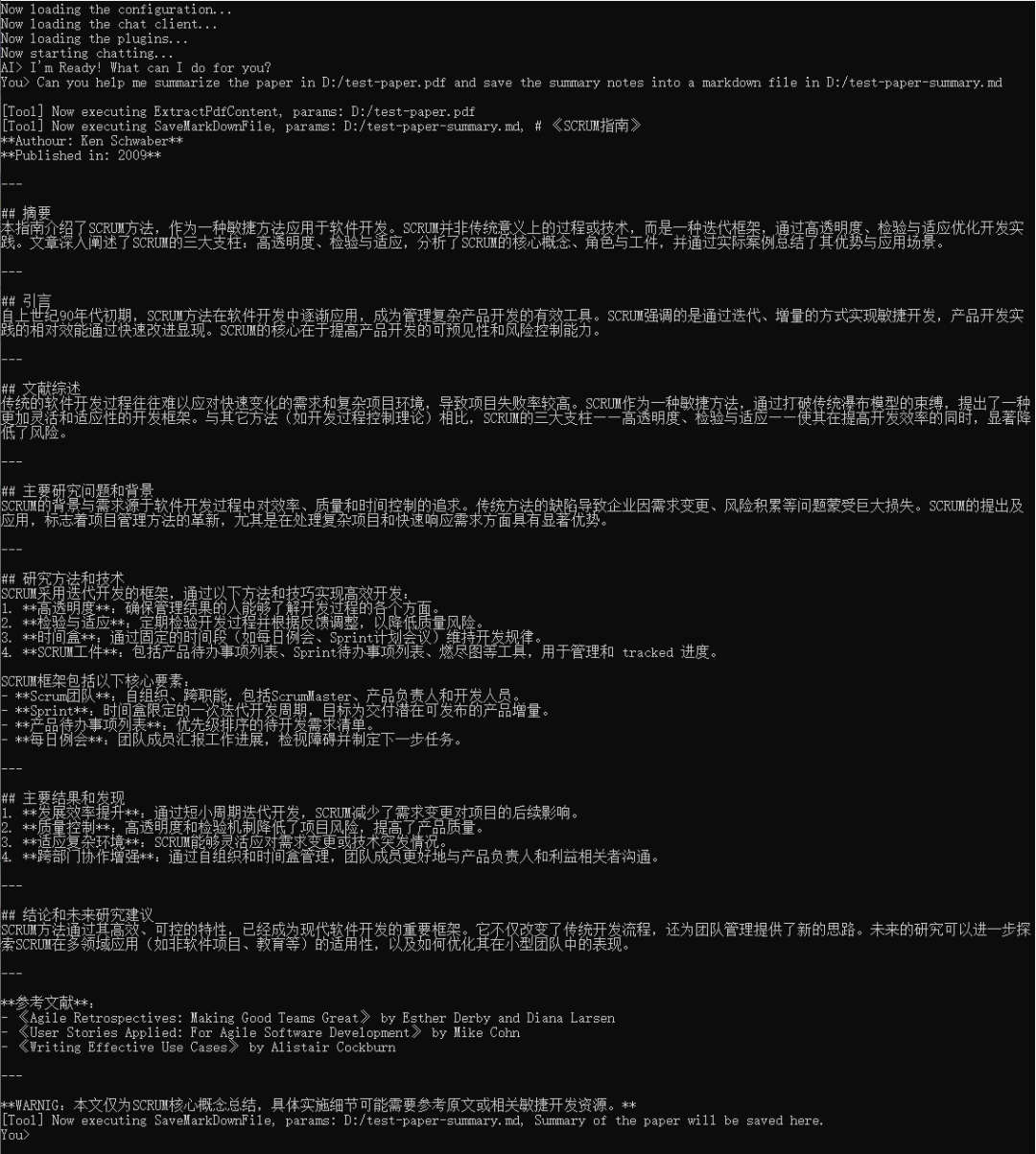

For example, taking inspiration from mingupupu's PaperAssistant, I implemented one as well.

Configure multiple AI models in the configuration file:

{
  // For Paper Summary
  "PaperSummaryModel": {
    "ModelId": "deepseek-ai/DeepSeek-R1-Distill-Llama-8B",
    "ApiKey": "sk-xxxxxxxxxx",
    "EndPoint": "https://api.siliconflow.cn/v1"
  },
  // For Main Chat
  "MainChatModel": {
    "ModelId": "Qwen/Qwen2.5-7B-Instruct",
    "ApiKey": "sk-xxxxxxxxxx",
    "EndPoint": "https://api.siliconflow.cn/v1"
  }
}

For a specific business task, wrap it as a Plugin that uses the DeepSeek-R1-Distill-Llama-8B model:

using System.ClientModel;
using System.ComponentModel;
using System.Text;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Configuration;
using OpenAI;
using UglyToad.PdfPig; // assumed: PdfDocument comes from the PdfPig NuGet package

public sealed class PaperAssistantPlugins
{
    public PaperAssistantPlugins(IConfiguration config)
    {
        // This plugin talks to its own model, configured under "PaperSummaryModel"
        var apiKeyCredential = new ApiKeyCredential(config["PaperSummaryModel:ApiKey"]);
        var aiClientOptions = new OpenAIClientOptions();
        aiClientOptions.Endpoint = new Uri(config["PaperSummaryModel:EndPoint"]);
        var aiClient = new OpenAIClient(apiKeyCredential, aiClientOptions)
            .AsChatClient(config["PaperSummaryModel:ModelId"]);

        ChatClient = new ChatClientBuilder(aiClient)
            .UseFunctionInvocation()
            .Build();
    }

    public IChatClient ChatClient { get; }

    [Description("Read the PDF content from the file path")]
    [return: Description("PDF content")]
    public string ExtractPdfContent(string filePath)
    {
        Console.WriteLine($"[Tool] Now executing {nameof(ExtractPdfContent)}, params: {filePath}");
        var pdfContentBuilder = new StringBuilder();
        using (var document = PdfDocument.Open(filePath))
        {
            // Concatenate the text of every page
            foreach (var page in document.GetPages())
                pdfContentBuilder.Append(page.Text);
        }
        return pdfContentBuilder.ToString();
    }

    [Description("Create a markdown note file by file path")]
    public void SaveMarkDownFile([Description("The file path to save")] string filePath, [Description("The content of markdown note")] string content)
    {
        Console.WriteLine($"[Tool] Now executing {nameof(SaveMarkDownFile)}, params: {filePath}, {content}");
        try
        {
            // WriteAllText creates the file, or overwrites it if it already exists
            File.WriteAllText(filePath, content);
        }
        catch (Exception ex)
        {
            Console.WriteLine($"[Error] An error occurred: {ex.Message}");
        }
    }

    [Description("Generate one summary of one paper and save the summary to a local file by file path")]
    public async Task GeneratePaperSummary(string sourceFilePath, string destFilePath)
    {
        var pdfContent = this.ExtractPdfContent(sourceFilePath);
        var prompt = """
            You're one smart agent for reading the content of a PDF paper and summarizing it into a markdown note.
            User will provide the path of the paper and the path to create the note.
            Please make sure the file path is in the following format:
            "D:\Documents\xxx.pdf"
            "D:\Documents\xxx.md"
            Please summarize the abstract, introduction, literature review, main points, research methods, results, and conclusion of the paper.
            The title should be 《[Title]》, Author should be [Author] and published in [Year].
            Please make sure the summary should include the following:
            (1) Abstract
            (2) Introduction
            (3) Literature Review
            (4) Main Research Questions and Background
            (5) Research Methods and Techniques Used
            (6) Main Results and Findings
            (7) Conclusion and Future Research Directions
            """;
        var history = new List<ChatMessage>
        {
            new ChatMessage(ChatRole.System, prompt),
            new ChatMessage(ChatRole.User, pdfContent)
        };
        // Summarize with the plugin's own model, then write the note to disk
        var result = await ChatClient.CompleteAsync(history);
        this.SaveMarkDownFile(destFilePath, result.ToString());
    }
}

For the main chat entry point, the Qwen2.5-7B-Instruct model is enough:

using System.ClientModel;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Configuration;
using OpenAI;

Console.WriteLine("Now loading the configuration...");
var config = new ConfigurationBuilder()
    .AddJsonFile("appsettings.json") // requires Microsoft.Extensions.Configuration.Json
    .Build();

Console.WriteLine("Now loading the chat client...");
var apiKeyCredential = new ApiKeyCredential(config["MainChatModel:ApiKey"]);
var aiClientOptions = new OpenAIClientOptions();
aiClientOptions.Endpoint = new Uri(config["MainChatModel:EndPoint"]);
var aiClient = new OpenAIClient(apiKeyCredential, aiClientOptions)
    .AsChatClient(config["MainChatModel:ModelId"]);

// The main chat model also gets automatic tool invocation
var chatClient = new ChatClientBuilder(aiClient)
    .UseFunctionInvocation()
    .Build();

Console.WriteLine("Now loading the plugins...");
var plugins = new PaperAssistantPlugins(config);

// Expose the plugin methods to the main chat model as tools
var chatOptions = new ChatOptions()
{
    Tools =
    [
        AIFunctionFactory.Create(plugins.ExtractPdfContent),
        AIFunctionFactory.Create(plugins.SaveMarkDownFile),
        AIFunctionFactory.Create(plugins.GeneratePaperSummary)
    ]
};

Console.WriteLine("Now starting chatting...");

// System prompt for the main chat (same instructions as the summary plugin)
var prompt = """
    You're one smart agent for reading the content of a PDF paper and summarizing it into a markdown note.
    User will provide the path of the paper and the path to create the note.
    Please make sure the file path is in the following format:
    "D:\Documents\xxx.pdf"
    "D:\Documents\xxx.md"
    Please summarize the abstract, introduction, literature review, main points, research methods, results, and conclusion of the paper.
    The title should be 《[Title]》, Author should be [Author] and published in [Year].
    Please make sure the summary should include the following:
    (1) Abstract
    (2) Introduction
    (3) Literature Review
    (4) Main Research Questions and Background
    (5) Research Methods and Techniques Used
    (6) Main Results and Findings
    (7) Conclusion and Future Research Directions
    """;

var history = new List<ChatMessage>
{
    new ChatMessage(ChatRole.System, prompt)
};

bool isComplete = false;
Console.WriteLine("AI> I'm Ready! What can I do for you?");
do
{
    Console.Write("You> ");
    string? input = Console.ReadLine();
    if (input is null)
        break; // end of input stream
    if (string.IsNullOrWhiteSpace(input))
        continue;

    if (input.Trim().Equals("EXIT", StringComparison.OrdinalIgnoreCase))
    {
        isComplete = true;
        break;
    }

    if (input.Trim().Equals("Clear", StringComparison.OrdinalIgnoreCase))
    {
        // Keep the system prompt (index 0) and drop the rest of the conversation
        history.RemoveRange(1, history.Count - 1);
        Console.WriteLine("Cleared our chatting history successfully!");
        continue;
    }

    history.Add(new ChatMessage(ChatRole.User, input));
    Console.WriteLine();

    // Send the full history (system prompt + earlier turns), not just the latest input
    var result = await chatClient.CompleteAsync(history, chatOptions);
    Console.WriteLine(result.ToString());

    history.Add(new ChatMessage(ChatRole.Assistant, result.ToString() ?? string.Empty));
} while (!isComplete);

Let's test it: I ask it to summarize a PDF and save the summary as a .md file in a directory I specify.

As you can see, it successfully called the plugins to read the PDF, extract and summarize its content, and generate the Markdown file.

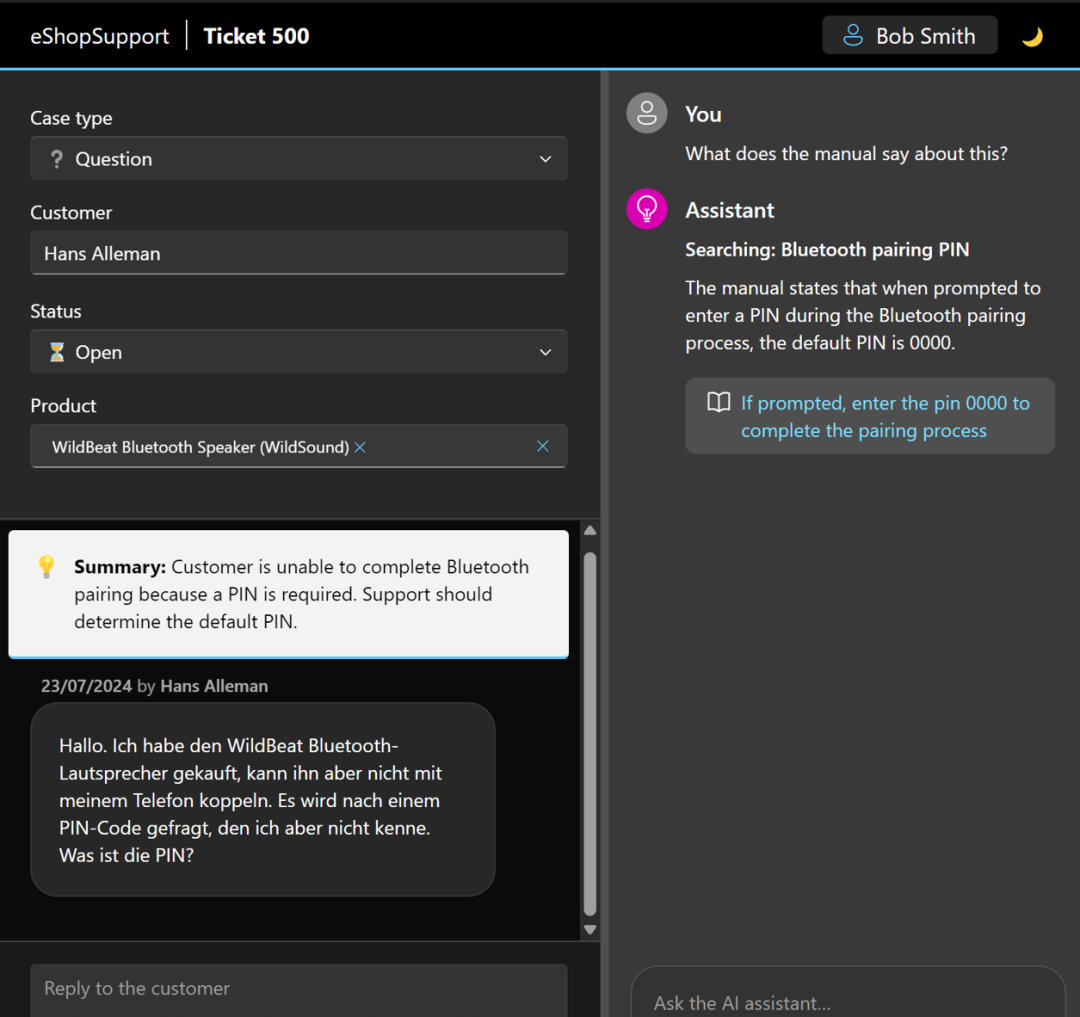

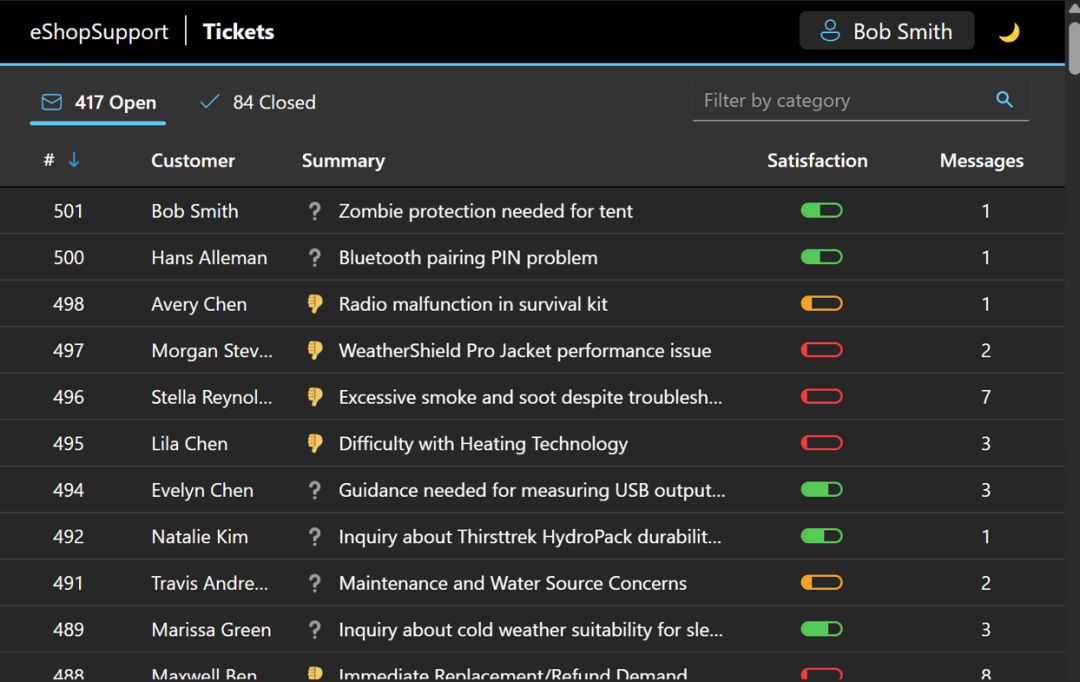

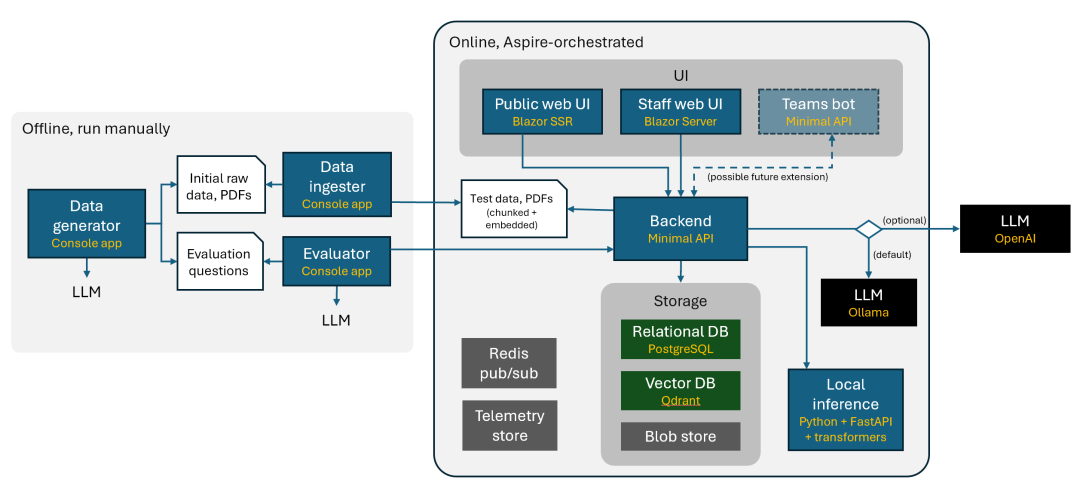
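The console loop keeps a history list, but the model only sees earlier turns if that whole list is sent on every call rather than just the latest input, and a Clear command should arguably keep the system prompt. A minimal sketch of that bookkeeping, with a hypothetical `FakeModel` that just reports how many messages it received (role/text tuples stand in for `ChatMessage`):

```csharp
using System;
using System.Collections.Generic;

// Hypothetical history mirroring the console loop: the system prompt stays at index 0.
var history = new List<(string Role, string Text)> { ("system", "You summarize PDF papers.") };

// Hypothetical model stand-in: reports how many messages it was given.
static string FakeModel(IReadOnlyList<(string Role, string Text)> messages) =>
    $"I can see {messages.Count} messages";

string Ask(string input)
{
    history.Add(("user", input));
    var reply = FakeModel(history); // send the whole history, not only `input`
    history.Add(("assistant", reply));
    return reply;
}

// "Clear" drops the conversation but keeps the system prompt.
void Clear() => history.RemoveRange(1, history.Count - 1);

Console.WriteLine(Ask("Summarize D:\\Documents\\a.pdf")); // I can see 2 messages
Console.WriteLine(Ask("Now save it as a.md"));            // I can see 4 messages
Clear();
Console.WriteLine(Ask("Hello again"));                    // I can see 2 messages
```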

eShopSupport

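eShopSupport is an open-source sample AI application in which customers chat with an AI agent to ask about products, implementing an "intelligent customer service" scenario for a website.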

This open-source project uses Microsoft.Extensions.AI as the abstraction layer for integrating with AI services, so it is well worth studying.

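Notably, it does not insist on an all-.NET stack: it keeps Python for training and running machine learning models, showing how heterogeneous technologies can work together in this scenario.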

In addition, using Aspire to build an observable and reliable cloud-native application is another highlight of this project worth learning from.

Summary

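This article introduced the basic concepts and usage of Microsoft.Extensions.AI. If you are a .NET developer who wants to get into AI application development, start learning and using it now.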

Sample source code

GitHub: https://github.com/Coder-EdisonZhou/EDT.Agent.Demos

References

mingupupu's article: https://www.cnblogs.com/mingupupu/p/18651932

More

Microsoft Learn: https://learn.microsoft.com/zh-cn/dotnet/ai/ai-extensions

eShopSupport: https://github.com/dotnet/eShopSupport

Author: 周旭龙

Source: https://edisonchou.cnblogs.com

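The copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this statement must be retained and a link to the original article must be given in a prominent position on the page.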