This note covers the Literature Review example from the Examples chapter of the AutoGen documentation. The example searches Arxiv for papers on a given topic.

- Official link: https://microsoft.github.io/autogen/stable/user-guide/agentchat-user-guide/examples/literature-review.html

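Getting the API keys

Earlier posts in this series already explain in detail how to obtain the keys, so refer to the following:

- OPENAI_API_KEY: available through Taobao; $5 of credit is enough to run every demo in this series of notes;
- GOOGLE_API_KEY: see the "Use LangChain tools" part of the post "smolagents学习笔记系列(五)Tools-in-depth-guide";
- GOOGLE_SEARCH_ENGINE_ID: see the "申请Google Search API" (applying for the Google Search API) part of the post "AutoGen学习笔记系列(十六)Examples - Company Research".

[Note]: all three API keys are required.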
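Since the demo fails without any one of the three keys, a quick check before running can save a wasted run. The following is a minimal sketch, assuming the keys are supplied as environment variables under the names used in this post; `missing_keys` is a hypothetical helper and not part of AutoGen.

```python
import os

# The three variable names come from the script in this post.
REQUIRED_KEYS = ("OPENAI_API_KEY", "GOOGLE_API_KEY", "GOOGLE_SEARCH_ENGINE_ID")


def missing_keys(env=None):
    """Return the required variable names that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All three keys are set.")
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to try out without touching the real environment.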

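Literature Review

This demo uses a Team made up of the following agents to summarize the papers in a field and produce a literature review:

- Arxiv Search Agent: uses the Arxiv API to search for papers related to the given topic and returns them;
- Google Search Agent: uses the Google Search API to search for papers related to the given topic and returns them;
- Report Agent: consolidates the results found by the two agents above into a report.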
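The script in this post puts these three agents into a RoundRobinGroupChat that stops when the text "TERMINATE" appears. To make that turn-taking concrete, here is a plain-Python sketch of the idea. It is an illustration under simplifying assumptions, not AutoGen's implementation: `run_round_robin`, the stub agents, and the `max_turns` guard are all hypothetical.

```python
from itertools import cycle


def run_round_robin(agents, task, stop_word="TERMINATE", max_turns=12):
    """Call agents in a fixed rotation until one reply contains stop_word.

    `agents` is a list of (name, reply_fn) pairs; reply_fn receives the
    transcript so far and returns a string. These are stand-ins, not
    AutoGen objects.
    """
    transcript = [("user", task)]
    for turn, (name, reply_fn) in enumerate(cycle(agents)):
        if turn >= max_turns:
            break  # safety guard so a team that never terminates still stops
        reply = reply_fn(transcript)
        transcript.append((name, reply))
        if stop_word in reply:
            break
    return transcript


# Stub agents that mimic the three roles in this demo.
agents = [
    ("Google_Search_Agent", lambda t: "google results for: " + t[0][1]),
    ("Arxiv_Search_Agent", lambda t: "arxiv results for: " + t[0][1]),
    ("Report_Agent", lambda t: f"Report built from {len(t) - 1} messages. TERMINATE"),
]

transcript = run_round_robin(agents, "no-code multi-agent tools")
for speaker, text in transcript:
    print(f"{speaker}: {text}")
```

Because the order is fixed, the report agent only speaks after both search agents have contributed, which is why it is listed last.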

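First, install the dependency:

bash

$ pip install arxiv

The Google search tool below also imports requests, bs4 (the beautifulsoup4 package), and dotenv (the python-dotenv package), so install those as well if they are missing.

Defining Tools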

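[Note]: the official page appears to have two inconsistencies here. The two tool functions are defined as google_search and arxiv_search, but the agents further down are given google_search_tool and arxiv_search_tool. I use the _tool suffix for both function names throughout: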

- Tool used by the Google Search Agent, google_search_tool:

python

def google_search_tool(query: str, num_results: int = 2, max_chars: int = 500) -> list:  # type: ignore[type-arg]
    import os
    import time

    import requests
    from bs4 import BeautifulSoup
    from dotenv import load_dotenv

    load_dotenv()

    api_key = os.getenv("GOOGLE_API_KEY")
    search_engine_id = os.getenv("GOOGLE_SEARCH_ENGINE_ID")

    if not api_key or not search_engine_id:
        raise ValueError("API key or Search Engine ID not found in environment variables")

    # Query the Google Custom Search JSON API
    url = "https://www.googleapis.com/customsearch/v1"
    params = {"key": api_key, "cx": search_engine_id, "q": query, "num": num_results}

    response = requests.get(url, params=params)  # type: ignore[arg-type]

    if response.status_code != 200:
        print(response.json())
        raise Exception(f"Error in API request: {response.status_code}")

    results = response.json().get("items", [])

    def get_page_content(url: str) -> str:
        # Fetch a page and keep whole words until max_chars would be exceeded
        try:
            response = requests.get(url, timeout=10)
            soup = BeautifulSoup(response.content, "html.parser")
            text = soup.get_text(separator=" ", strip=True)
            words = text.split()
            content = ""
            for word in words:
                if len(content) + len(word) + 1 > max_chars:
                    break
                content += " " + word
            return content.strip()
        except Exception as e:
            print(f"Error fetching {url}: {str(e)}")
            return ""

    # Attach the truncated page body to each search hit
    enriched_results = []
    for item in results:
        body = get_page_content(item["link"])
        enriched_results.append(
            {"title": item["title"], "link": item["link"], "snippet": item["snippet"], "body": body}
        )
        time.sleep(1)  # Be respectful to the servers

    return enriched_results

- Tool used by the Arxiv Search Agent, arxiv_search_tool:

python

def arxiv_search_tool(query: str, max_results: int = 2) -> list:  # type: ignore[type-arg]
    """
    Search Arxiv for papers and return the results including abstracts.
    """
    import json  # needed for the file dump below

    import arxiv

    client = arxiv.Client()
    search = arxiv.Search(query=query, max_results=max_results, sort_by=arxiv.SortCriterion.Relevance)

    results = []
    for paper in client.results(search):
        results.append(
            {
                "title": paper.title,
                "authors": [author.name for author in paper.authors],
                "published": paper.published.strftime("%Y-%m-%d"),
                "abstract": paper.summary,
                "pdf_url": paper.pdf_url,
            }
        )

    # Write results to a file
    with open('arxiv_search_results.json', 'w') as f:
        json.dump(results, f, indent=2)

    return results

Defining Agents

Define the three agents:

Google Search Agent:

python

google_search_agent = AssistantAgent(
    name="Google_Search_Agent",
    tools=[google_search_tool],
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="An agent that can search Google for information, returns results with a snippet and body content",
    system_message="You are a helpful AI assistant. Solve tasks using your tools.",
)

Arxiv Search Agent:

python

arxiv_search_agent = AssistantAgent(
    name="Arxiv_Search_Agent",
    tools=[arxiv_search_tool],
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="An agent that can search Arxiv for papers related to a given topic, including abstracts",
    system_message="You are a helpful AI assistant. Solve tasks using your tools. Specifically, you can take into consideration the user's request and craft a search query that is most likely to return relevant academic papers.",
)

Report Agent:

python

report_agent = AssistantAgent(
    name="Report_Agent",
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="Generate a report based on a given topic",
    system_message="You are a helpful assistant. Your task is to synthesize data extracted into a high quality literature review including CORRECT references. You MUST write a final report that is formatted as a literature review with CORRECT references. Your response should end with the word 'TERMINATE'",
)

Creating the Team

Create the Team and its termination condition; the task itself is supplied when the team is run:

python

termination = TextMentionTermination("TERMINATE")
team = RoundRobinGroupChat(
    participants=[google_search_agent, arxiv_search_agent, report_agent], termination_condition=termination
)

Complete code

python

import asyncio
import json
import os

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.conditions import TextMentionTermination
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.ui import Console
from autogen_core.tools import FunctionTool  # not used in this script
from autogen_ext.models.openai import OpenAIChatCompletionClient

os.environ["GOOGLE_SEARCH_ENGINE_ID"] = "your GOOGLE_SEARCH_ENGINE_ID"  # Google
os.environ["GOOGLE_API_KEY"] = "your GOOGLE_API_KEY"  # Google
os.environ["OPENAI_API_KEY"] = "your OPENAI_API_KEY"  # OpenAI

#-----------------------------------------------------------#
# Step 1. Define the tools used by the two search agents

def google_search_tool(query: str, num_results: int = 2, max_chars: int = 500) -> list:  # type: ignore[type-arg]
    import os
    import time

    import requests
    from bs4 import BeautifulSoup
    from dotenv import load_dotenv

    load_dotenv()

    api_key = os.getenv("GOOGLE_API_KEY")
    search_engine_id = os.getenv("GOOGLE_SEARCH_ENGINE_ID")

    if not api_key or not search_engine_id:
        raise ValueError("API key or Search Engine ID not found in environment variables")

    url = "https://www.googleapis.com/customsearch/v1"
    params = {"key": api_key, "cx": search_engine_id, "q": query, "num": num_results}

    response = requests.get(url, params=params)  # type: ignore[arg-type]

    if response.status_code != 200:
        print(response.json())
        raise Exception(f"Error in API request: {response.status_code}")

    results = response.json().get("items", [])

    def get_page_content(url: str) -> str:
        try:
            response = requests.get(url, timeout=10)
            soup = BeautifulSoup(response.content, "html.parser")
            text = soup.get_text(separator=" ", strip=True)
            words = text.split()
            content = ""
            for word in words:
                if len(content) + len(word) + 1 > max_chars:
                    break
                content += " " + word
            return content.strip()
        except Exception as e:
            print(f"Error fetching {url}: {str(e)}")
            return ""

    enriched_results = []
    for item in results:
        body = get_page_content(item["link"])
        enriched_results.append(
            {"title": item["title"], "link": item["link"], "snippet": item["snippet"], "body": body}
        )
        time.sleep(1)  # Be respectful to the servers

    return enriched_results

def arxiv_search_tool(query: str, max_results: int = 2) -> list:  # type: ignore[type-arg]
    """
    Search Arxiv for papers and return the results including abstracts.
    """
    import arxiv

    client = arxiv.Client()
    search = arxiv.Search(query=query, max_results=max_results, sort_by=arxiv.SortCriterion.Relevance)

    results = []
    for paper in client.results(search):
        results.append(
            {
                "title": paper.title,
                "authors": [author.name for author in paper.authors],
                "published": paper.published.strftime("%Y-%m-%d"),
                "abstract": paper.summary,
                "pdf_url": paper.pdf_url,
            }
        )

    # Write results to a file
    with open('arxiv_search_results.json', 'w') as f:
        json.dump(results, f, indent=2)

    return results

#-----------------------------------------------------------#
# Step 2. Define the three agents
google_search_agent = AssistantAgent(
    name="Google_Search_Agent",
    tools=[google_search_tool],
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="An agent that can search Google for information, returns results with a snippet and body content",
    system_message="You are a helpful AI assistant. Solve tasks using your tools.",
)

arxiv_search_agent = AssistantAgent(
    name="Arxiv_Search_Agent",
    tools=[arxiv_search_tool],
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="An agent that can search Arxiv for papers related to a given topic, including abstracts",
    system_message="You are a helpful AI assistant. Solve tasks using your tools. Specifically, you can take into consideration the user's request and craft a search query that is most likely to return relevant academic papers.",
)

report_agent = AssistantAgent(
    name="Report_Agent",
    model_client=OpenAIChatCompletionClient(model="gpt-4o-mini"),
    description="Generate a report based on a given topic",
    system_message="You are a helpful assistant. Your task is to synthesize data extracted into a high quality literature review including CORRECT references. You MUST write a final report that is formatted as a literature review with CORRECT references. Your response should end with the word 'TERMINATE'",
)

#-----------------------------------------------------------#

# Step3. 定义终止条件与Team

termination = TextMentionTermination("TERMINATE")

team = RoundRobinGroupChat(

participants=[google_search_agent, arxiv_search_agent, report_agent], termination_condition=termination

)

#-----------------------------------------------------------#

# Step4. 运行Task

asyncio.run(

Console(

team.run_stream(

task="Write a literature review on no code tools for building multi agent ai systems",

)

)

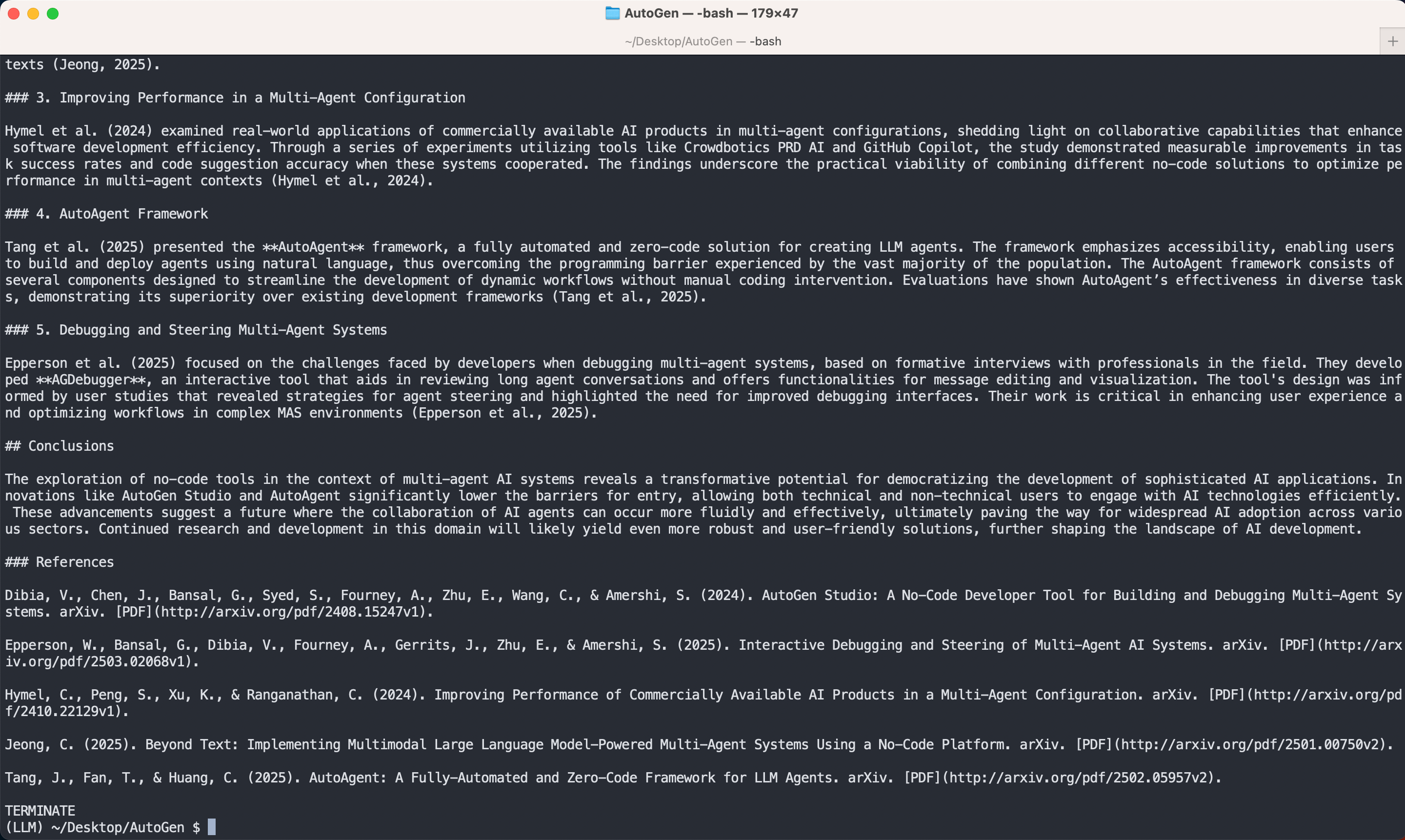

)运行结果如下:

bash

$ python demo.py

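[Note]: in the complete code above I uncommented the lines in arxiv_search_tool that the official example leaves commented out, so the script writes an arxiv_search_results.json file to the same directory. The Arxiv API returns real, unambiguous data: what the search returns matches the Arxiv database and is not an LLM hallucination. That makes this tool a very handy function in its own right, and calling it on its own requires neither an LLM nor an Agent.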
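Because the tool returns plain dictionaries, its results can be post-processed with ordinary Python and no model call. The sketch below turns one record into a one-line reference. It assumes the keys the tool produces (title, authors, published, pdf_url); `format_reference` and its "et al." rule are hypothetical choices for illustration, not part of the official example.

```python
def format_reference(paper):
    """Build a one-line reference from a record with the tool's keys."""
    authors = paper["authors"]
    # Abbreviate long author lists to "First Author et al."
    if len(authors) > 2:
        author_text = f"{authors[0]} et al."
    else:
        author_text = " and ".join(authors)
    year = paper["published"][:4]  # "YYYY-MM-DD" -> "YYYY"
    return f'{author_text} ({year}). {paper["title"]}. {paper["pdf_url"]}'


# One record copied from the arxiv_search_results.json listing in this post,
# with the abstract left out for brevity.
sample = {
    "title": "AutoAgent: A Fully-Automated and Zero-Code Framework for LLM Agents",
    "authors": ["Jiabin Tang", "Tianyu Fan", "Chao Huang"],
    "published": "2025-02-09",
    "pdf_url": "http://arxiv.org/pdf/2502.05957v2",
}

print(format_reference(sample))
```

The same function could be mapped over the list loaded with `json.load` from the saved file to build a complete reference section.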

The contents of arxiv_search_results.json are as follows:

json

[

{

"title": "AutoGen Studio: A No-Code Developer Tool for Building and Debugging Multi-Agent Systems",

"authors": [

"Victor Dibia",

"Jingya Chen",

"Gagan Bansal",

"Suff Syed",

"Adam Fourney",

"Erkang Zhu",

"Chi Wang",

"Saleema Amershi"

],

"published": "2024-08-09",

"abstract": "Multi-agent systems, where multiple agents (generative AI models + tools)\ncollaborate, are emerging as an effective pattern for solving long-running,\ncomplex tasks in numerous domains. However, specifying their parameters (such\nas models, tools, and orchestration mechanisms etc,.) and debugging them\nremains challenging for most developers. To address this challenge, we present\nAUTOGEN STUDIO, a no-code developer tool for rapidly prototyping, debugging,\nand evaluating multi-agent workflows built upon the AUTOGEN framework. AUTOGEN\nSTUDIO offers a web interface and a Python API for representing LLM-enabled\nagents using a declarative (JSON-based) specification. It provides an intuitive\ndrag-and-drop UI for agent workflow specification, interactive evaluation and\ndebugging of workflows, and a gallery of reusable agent components. We\nhighlight four design principles for no-code multi-agent developer tools and\ncontribute an open-source implementation at\nhttps://github.com/microsoft/autogen/tree/main/samples/apps/autogen-studio",

"pdf_url": "http://arxiv.org/pdf/2408.15247v1"

},

{

"title": "Beyond Text: Implementing Multimodal Large Language Model-Powered Multi-Agent Systems Using a No-Code Platform",

"authors": [

"Cheonsu Jeong"

],

"published": "2025-01-01",

"abstract": "This study proposes the design and implementation of a multimodal LLM-based\nMulti-Agent System (MAS) leveraging a No-Code platform to address the practical\nconstraints and significant entry barriers associated with AI adoption in\nenterprises. Advanced AI technologies, such as Large Language Models (LLMs),\noften pose challenges due to their technical complexity and high implementation\ncosts, making them difficult for many organizations to adopt. To overcome these\nlimitations, this research develops a No-Code-based Multi-Agent System designed\nto enable users without programming knowledge to easily build and manage AI\nsystems. The study examines various use cases to validate the applicability of\nAI in business processes, including code generation from image-based notes,\nAdvanced RAG-based question-answering systems, text-based image generation, and\nvideo generation using images and prompts. These systems lower the barriers to\nAI adoption, empowering not only professional developers but also general users\nto harness AI for significantly improved productivity and efficiency. By\ndemonstrating the scalability and accessibility of No-Code platforms, this\nstudy advances the democratization of AI technologies within enterprises and\nvalidates the practical applicability of Multi-Agent Systems, ultimately\ncontributing to the widespread adoption of AI across various industries.",

"pdf_url": "http://arxiv.org/pdf/2501.00750v2"

},

{

"title": "Improving Performance of Commercially Available AI Products in a Multi-Agent Configuration",

"authors": [

"Cory Hymel",

"Sida Peng",

"Kevin Xu",

"Charath Ranganathan"

],

"published": "2024-10-29",

"abstract": "In recent years, with the rapid advancement of large language models (LLMs),\nmulti-agent systems have become increasingly more capable of practical\napplication. At the same time, the software development industry has had a\nnumber of new AI-powered tools developed that improve the software development\nlifecycle (SDLC). Academically, much attention has been paid to the role of\nmulti-agent systems to the SDLC. And, while single-agent systems have\nfrequently been examined in real-world applications, we have seen comparatively\nfew real-world examples of publicly available commercial tools working together\nin a multi-agent system with measurable improvements. In this experiment we\ntest context sharing between Crowdbotics PRD AI, a tool for generating software\nrequirements using AI, and GitHub Copilot, an AI pair-programming tool. By\nsharing business requirements from PRD AI, we improve the code suggestion\ncapabilities of GitHub Copilot by 13.8% and developer task success rate by\n24.5% -- demonstrating a real-world example of commercially-available AI\nsystems working together with improved outcomes.",

"pdf_url": "http://arxiv.org/pdf/2410.22129v1"

},

{

"title": "AutoAgent: A Fully-Automated and Zero-Code Framework for LLM Agents",

"authors": [

"Jiabin Tang",

"Tianyu Fan",

"Chao Huang"

],

"published": "2025-02-09",

"abstract": "Large Language Model (LLM) Agents have demonstrated remarkable capabilities\nin task automation and intelligent decision-making, driving the widespread\nadoption of agent development frameworks such as LangChain and AutoGen.\nHowever, these frameworks predominantly serve developers with extensive\ntechnical expertise - a significant limitation considering that only 0.03 % of\nthe global population possesses the necessary programming skills. This stark\naccessibility gap raises a fundamental question: Can we enable everyone,\nregardless of technical background, to build their own LLM agents using natural\nlanguage alone? To address this challenge, we introduce AutoAgent-a\nFully-Automated and highly Self-Developing framework that enables users to\ncreate and deploy LLM agents through Natural Language Alone. Operating as an\nautonomous Agent Operating System, AutoAgent comprises four key components: i)\nAgentic System Utilities, ii) LLM-powered Actionable Engine, iii) Self-Managing\nFile System, and iv) Self-Play Agent Customization module. This lightweight yet\npowerful system enables efficient and dynamic creation and modification of\ntools, agents, and workflows without coding requirements or manual\nintervention. Beyond its code-free agent development capabilities, AutoAgent\nalso serves as a versatile multi-agent system for General AI Assistants.\nComprehensive evaluations on the GAIA benchmark demonstrate AutoAgent's\neffectiveness in generalist multi-agent tasks, surpassing existing\nstate-of-the-art methods. Furthermore, AutoAgent's Retrieval-Augmented\nGeneration (RAG)-related capabilities have shown consistently superior\nperformance compared to many alternative LLM-based solutions.",

"pdf_url": "http://arxiv.org/pdf/2502.05957v2"

},

{

"title": "Interactive Debugging and Steering of Multi-Agent AI Systems",

"authors": [

"Will Epperson",

"Gagan Bansal",

"Victor Dibia",

"Adam Fourney",

"Jack Gerrits",

"Erkang Zhu",

"Saleema Amershi"

],

"published": "2025-03-03",

"abstract": "Fully autonomous teams of LLM-powered AI agents are emerging that collaborate\nto perform complex tasks for users. What challenges do developers face when\ntrying to build and debug these AI agent teams? In formative interviews with\nfive AI agent developers, we identify core challenges: difficulty reviewing\nlong agent conversations to localize errors, lack of support in current tools\nfor interactive debugging, and the need for tool support to iterate on agent\nconfiguration. Based on these needs, we developed an interactive multi-agent\ndebugging tool, AGDebugger, with a UI for browsing and sending messages, the\nability to edit and reset prior agent messages, and an overview visualization\nfor navigating complex message histories. In a two-part user study with 14\nparticipants, we identify common user strategies for steering agents and\nhighlight the importance of interactive message resets for debugging. Our\nstudies deepen understanding of interfaces for debugging increasingly important\nagentic workflows.",

"pdf_url": "http://arxiv.org/pdf/2503.02068v1"

}

]

Take a breath

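Congratulations: reaching this point means you have completed another stage of learning. You have now worked through the three major modules of the official AutoGen tutorial (Tutorial, Advanced, and Examples), so we have gone through the whole tutorial on the official site. Next I will start a new chapter that focuses on the examples under samples in the source code. The difficulty there goes up a notch, but don't worry: I will step on all the landmines first and clear the way for you.

The revolution has not yet succeeded; comrades, we must keep working.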