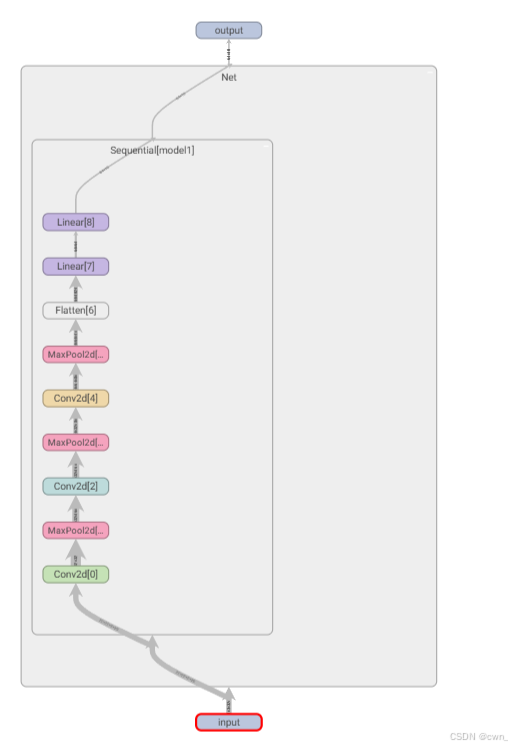

Sequential & example1

It feels a lot like `Compose()`.

It chains the layers together so they are easy to call in one go.
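Here is a minimal sketch of what "chaining" means, using only plain `torch`. Calling a `Sequential` should give the same result as calling its layers one after another by hand, much like `Compose()` applies transforms in order. The layer sizes are arbitrary example values, not the ones used in the network below.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Three layers used on their own (sizes are arbitrary example values)
conv = nn.Conv2d(3, 8, 3, padding=1)
pool = nn.MaxPool2d(2)
flat = nn.Flatten()

# The same three layer objects chained with Sequential
seq = nn.Sequential(conv, pool, flat)

x = torch.randn(2, 3, 8, 8)

# Calling the layers one after another by hand...
manual = flat(pool(conv(x)))
# ...should match a single call to the Sequential container
chained = seq(x)

print(chained.shape)                     # torch.Size([2, 128])
print(torch.allclose(manual, chained))   # True
```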

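```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # The layers run in the order they are listed
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x
```

You can write out the graph and view it: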

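```python
from torch.utils.tensorboard import SummaryWriter

# net and input are the model and dummy input from the full code below
writer = SummaryWriter('../logs')
writer.add_graph(net, input)  # record the model's computation graph
writer.close()
```

Now I finally get where a lot of the diagrams in papers come from. They look so official!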
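Where does the `1024` in `Linear(1024, 64)` come from? One quick check is to step through `model1` layer by layer and print the shape after each one. Iterating over a `Sequential` yields its submodules in order. With kernel size 5 and `padding=2`, each `Conv2d` keeps the height and width unchanged. Each `MaxPool2d(2)` halves them (32 → 16 → 8 → 4), so `Flatten` produces 64 × 4 × 4 = 1024 features per image.

```python
import torch
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

# Same layer stack as model1 above
model1 = Sequential(
    Conv2d(3, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2),
    MaxPool2d(2),
    Flatten(),
    Linear(1024, 64),
    Linear(64, 10),
)

x = torch.ones((64, 3, 32, 32))
shapes = []
# Iterating over a Sequential yields its layers in order
for layer in model1:
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(f"{layer.__class__.__name__:<10} -> {tuple(x.shape)}")
```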

Full code:

```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.tensorboard import SummaryWriter

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

net = Net()
print(net)

# Dummy batch: 64 RGB images of size 32x32
input = torch.ones((64, 3, 32, 32))
output = net(input)
print(output.shape)  # torch.Size([64, 10])

writer = SummaryWriter('../logs')
writer.add_graph(net, input)
writer.close()
```

Loss functions and backpropagation
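For reference, here is a sketch that recomputes the three losses below by hand and compares them with PyTorch. It assumes the default `reduction='mean'`. L1 works out to (0 + 0 + 2) / 3 = 2/3 and MSE to (0 + 0 + 4) / 3 = 4/3. `CrossEntropyLoss` takes raw scores (logits) plus a class index y, and for a single sample it equals `-x[y] + log(sum_j exp(x[j]))`.

```python
import torch
from torch import nn

inputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([1.0, 2.0, 5.0])

# L1Loss with the default reduction='mean': mean of |input - target|
l1_manual = (inputs - targets).abs().mean()
# MSELoss with the default reduction='mean': mean of (input - target)^2
mse_manual = ((inputs - targets) ** 2).mean()

# CrossEntropyLoss for one sample with logits x and class index y:
# loss = -x[y] + log(sum_j exp(x[j]))
x = torch.tensor([[0.1, 0.2, 0.3]])
y = torch.tensor([1])
ce_manual = -x[0, y[0]] + torch.log(torch.exp(x[0]).sum())

print(l1_manual, nn.L1Loss()(inputs, targets))
print(mse_manual, nn.MSELoss()(inputs, targets))
print(ce_manual, nn.CrossEntropyLoss()(x, y))
```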

Loss functions:

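```python
import torch
from torch import float32
from torch import nn
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=float32)
targets = torch.tensor([1, 2, 5], dtype=float32)

# Reshape to (batch=1, channels=1, height=1, width=3)
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

# L1Loss: mean absolute error, (0 + 0 + 2) / 3
loss = L1Loss()
result = loss(inputs, targets)
print(result)  # tensor(0.6667)

# MSELoss: mean squared error, (0 + 0 + 4) / 3
loss = nn.MSELoss()
result = loss(inputs, targets)
print(result)  # tensor(1.3333)

# CrossEntropyLoss expects raw scores of shape (N, C) and class indices of shape (N,)
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
x = torch.reshape(x, (1, 3))
loss_cross = nn.CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)
```

Loss function example + backpropagation (updating the parameters)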

python

import torch

from torch import nn

from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from torchvision import transforms

from torchvision.datasets import ImageFolder

#数据预处理

transform = transforms.Compose([

transforms.Resize((32,32)),

transforms.ToTensor(),

transforms.Normalize(

mean = [0.5,0.5,0.5],

std = [0.5,0.5,0.5]

)

])

#加载数据集

folder_path = '../images'

dataset = ImageFolder(folder_path,transform=transform)

dataloader = DataLoader(dataset,batch_size=1)

class Net(nn.Module):

def __init__(self):

super(Net,self).__init__()

self.model1 = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self,x):

x = self.model1(x)

return x

net = Net()

loss = nn.CrossEntropyLoss()

for data in dataloader:

img,label = data

print(img.shape)

output = net(img)

result_loss = loss(output,label)

print(result_loss)

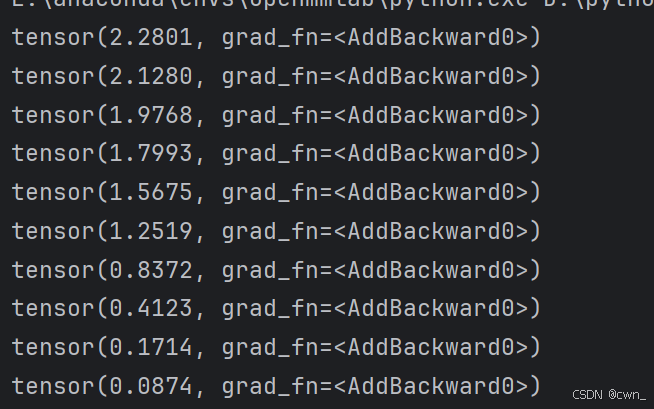

result_loss.backward()优化器
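Why an optimizer is needed at all: `backward()` only computes gradients and stores them in each parameter's `.grad`. It does not change any weights. The small sketch below uses a toy `Linear` layer and random data, purely for illustration. It also shows that gradients *accumulate* across `backward()` calls, which is why the training loop below calls `optim.zero_grad()` before each `backward()`.

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Linear(3, 2)            # toy model, for illustration only
loss_fn = nn.CrossEntropyLoss()
x = torch.randn(4, 3)
y = torch.tensor([0, 1, 1, 0])

weight_before = layer.weight.detach().clone()

# First backward pass: gradients are stored in .grad
loss_fn(layer(x), y).backward()
grad_once = layer.weight.grad.clone()

# backward() by itself leaves the weights unchanged
print(torch.equal(layer.weight, weight_before))           # True

# A second backward pass ADDS to .grad instead of overwriting it
loss_fn(layer(x), y).backward()
print(torch.allclose(layer.weight.grad, 2 * grad_once))    # True
```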

Stochastic gradient descent (SGD)
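With the defaults of `torch.optim.SGD` (no momentum, no weight decay), each parameter is updated as `p = p - lr * p.grad`. The sketch below checks that `optim.step()` does exactly that, using a toy `Linear` layer and random data.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(3, 2)                      # toy model, for illustration only
lr = 0.01
optim = torch.optim.SGD(model.parameters(), lr=lr)

x = torch.randn(4, 3)
y = torch.tensor([0, 1, 1, 0])
loss = nn.CrossEntropyLoss()(model(x), y)

optim.zero_grad()
loss.backward()

# What plain SGD should produce: p - lr * grad (computed before stepping)
expected = [p.detach() - lr * p.grad for p in model.parameters()]

optim.step()

# Compare the updated weight and bias with the hand-computed values
matches = [torch.allclose(p.detach(), e) for p, e in zip(model.parameters(), expected)]
print(matches)  # [True, True]
```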

```python
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms
from torchvision.datasets import ImageFolder

# Data preprocessing
transform = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.5, 0.5, 0.5],
        std=[0.5, 0.5, 0.5]
    )
])

# Load the dataset
folder_path = '../images'
dataset = ImageFolder(folder_path, transform=transform)
dataloader = DataLoader(dataset, batch_size=1)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

net = Net()
loss = nn.CrossEntropyLoss()
optim = torch.optim.SGD(net.parameters(), lr=0.01)

for epoch in range(10):
    running_loss = 0.0
    for data in dataloader:
        img, label = data
        output = net(img)
        result_loss = loss(output, label)
        optim.zero_grad()       # clear the gradients from the previous step
        result_loss.backward()  # compute new gradients
        optim.step()            # update the parameters
        # Sum of the losses over all training samples in this epoch
        # (.item() gives a plain float, so each step's autograd graph is not kept alive)
        running_loss += result_loss.item()
    print(running_loss)
```
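The loop above needs images under `../images`. To smoke-test it without that folder, the sketch below swaps in random tensors through `TensorDataset`. This is a hypothetical stand-in: 16 fake images with random labels, and `batch_size=4` only to make it run faster. It also writes the same network as a flat `Sequential`. Because the labels are random, the numbers say nothing about accuracy. The only point is that the loop runs and produces a finite loss for each epoch.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical stand-in for ImageFolder: 16 random "images" with random labels 0-9
images = torch.randn(16, 3, 32, 32)
labels = torch.randint(0, 10, (16,))
dataloader = DataLoader(TensorDataset(images, labels), batch_size=4)

# Same layers as Net.model1
net = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
)
loss = nn.CrossEntropyLoss()
optim = torch.optim.SGD(net.parameters(), lr=0.01)

epoch_losses = []
for epoch in range(10):
    running_loss = 0.0
    for img, label in dataloader:
        result_loss = loss(net(img), label)
        optim.zero_grad()
        result_loss.backward()
        optim.step()
        running_loss += result_loss.item()  # plain float, no graph kept
    epoch_losses.append(running_loss)
    print(epoch, running_loss)
```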

Using and modifying existing models

Maybe this could be called transfer learning???
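Transfer learning usually goes one step further than just swapping layers: the pretrained layers are frozen, so only the new head gets trained. The minimal sketch below shows that idea. It uses a small hypothetical `Sequential` as a stand-in for `vgg16`, so nothing needs to be downloaded. Its last layer outputs 1000 classes, just like vgg16's classifier.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pretrained network whose last layer outputs 1000 classes
model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(12, 32),
    nn.ReLU(),
    nn.Linear(32, 1000),
)

# Freeze every existing parameter so training will not update it
for p in model.parameters():
    p.requires_grad = False

# Replace the last layer for a 10-class task; a newly created layer is trainable
model[3] = nn.Linear(32, 10)

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['3.weight', '3.bias']

# Only hand the trainable parameters to the optimizer
optim = torch.optim.SGD([p for p in model.parameters() if p.requires_grad], lr=0.01)

out = model(torch.randn(2, 3, 2, 2))  # 3*2*2 = 12 features after Flatten
print(out.shape)  # torch.Size([2, 10])
```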

example net: vgg16

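```python
import torchvision
from torch import nn

# Note: torchvision >= 0.13 deprecates pretrained= in favor of weights=
# (e.g. weights=None or weights="IMAGENET1K_V1")

# Not pretrained: randomly initialized weights
vgg16_false = torchvision.models.vgg16(pretrained=False)
# Pretrained: downloads the ImageNet weights
vgg16_true = torchvision.models.vgg16(pretrained=True)
print(vgg16_true)

# Add a module: append one more layer inside vgg16's classifier
vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

# Modify a module: replace the last layer of the classifier
vgg16_false.classifier[6] = nn.Linear(4096, 10)
```

Saving and loading models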

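- Existing model: vgg16

  ```python
  import torch
  import torchvision

  vgg16 = torchvision.models.vgg16(pretrained=False)
  ```

  ```python
  # Save method 1: model structure + parameters
  torch.save(vgg16, "vgg16_method1.pth")
  # Load method 1 (PyTorch >= 2.6 defaults to weights_only=True, which rejects whole pickled models)
  model = torch.load("vgg16_method1.pth", weights_only=False)
  ```

  ```python
  # Save method 2: parameters only, stored as a dict (officially recommended)
  torch.save(vgg16.state_dict(), "vgg16_method2.pth")
  # Load method 2: rebuild the structure first, then load the parameters into it
  vgg16 = torchvision.models.vgg16(pretrained=False)
  vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
  ```

- Custom model

  ```python
  import torch
  from torch import nn

  # Save
  class Net(nn.Module):
      ...

  net = Net()
  torch.save(net, "net.pth")
  ```

  ```python
  import torch
  from torch import nn

  # Load: the class definition must be available in the loading script
  class Net(nn.Module):
      ...

  model = torch.load("net.pth", weights_only=False)
  ```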
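Here is a quick sanity check for method 2. It uses a small hypothetical model and an in-memory `io.BytesIO` buffer in place of a `.pth` file. After `load_state_dict`, the rebuilt model should produce exactly the same outputs as the original. The keys it prints also show what the "dict" in method 2 holds: one entry per parameter, named by layer index.

```python
import io
import torch
from torch import nn

torch.manual_seed(0)
# Hypothetical small model standing in for vgg16 / Net
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

# Save only the parameters (an in-memory buffer stands in for a .pth file)
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# Loading requires building the same architecture first
restored = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
restored.load_state_dict(torch.load(buffer))

x = torch.randn(3, 4)
same_output = torch.equal(model(x), restored(x))
keys = list(model.state_dict().keys())
print(same_output)  # True
print(keys)         # ['0.weight', '0.bias', '2.weight', '2.bias']
```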