- 🍨 This post is a learning-record blog from the 🔗365-Day Deep Learning Training Camp

- 🍖 Original author: K同学啊

I. Preparation

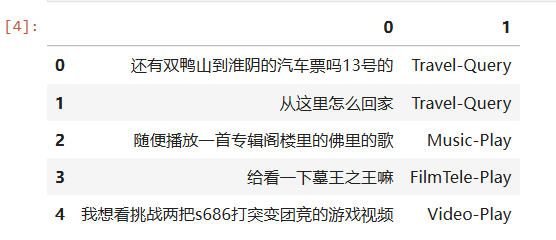

Data format:

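import torch
from torch import nn
import torchvision
from torchvision import transforms, datasets
import os, PIL, pathlib, warnings

warnings.filterwarnings("ignore")  # suppress warnings to keep the output clean

# is_available must be called: the bare function object is always truthy
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")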

import pandas as pd

# The CSV is read as headerless (header=None) and tab-separated (sep='\t')
train_data = pd.read_csv('./data/train.csv', sep='\t', header=None)
train_data.head()

# Build the dataset iterator
def custom_data_iter(texts, labels):
    for x, y in zip(texts, labels):
        yield x, y

train_iter = custom_data_iter(train_data[0].values[:], train_data[1].values[:])

II. Data preprocessing

from torchtext.data.utils import get_tokenizer
from torchtext.vocab import build_vocab_from_iterator
import jieba

# Chinese word segmentation
tokenizer = jieba.lcut

def yield_tokens(data_iter):
    for text, _ in data_iter:
        yield tokenizer(text)

vocab = build_vocab_from_iterator(yield_tokens(train_iter), specials=["<unk>"])
vocab.set_default_index(vocab["<unk>"])  # out-of-vocabulary tokens map to <unk>
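To make the vocabulary step more concrete, here is a small pure-Python sketch of the idea: count tokens, assign each an integer index with the special token first, and fall back to `<unk>` for anything unseen. `build_toy_vocab` and `encode` are hypothetical helpers written for illustration, not torchtext APIs, and the ordering rule (most frequent first) is an assumption of this sketch.

```python
from collections import Counter

def build_toy_vocab(token_lists, specials=("<unk>",)):
    """Map each token to an integer index, with special tokens first."""
    counts = Counter(tok for toks in token_lists for tok in toks)
    # Most frequent tokens first; ties are broken alphabetically
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    itos = list(specials) + [tok for tok, _ in ordered]
    return {tok: idx for idx, tok in enumerate(itos)}

def encode(tokens, stoi, unk="<unk>"):
    """Convert tokens to indices; unknown tokens fall back to the <unk> index."""
    return [stoi.get(tok, stoi[unk]) for tok in tokens]

# Pre-tokenized toy corpus (in the post, jieba.lcut produces the tokens)
corpus = [["播放", "音乐"], ["播放", "天气"]]
stoi = build_toy_vocab(corpus)
ids = encode(["播放", "电影"], stoi)  # "电影" is not in the toy vocabulary
```

Here `ids` pairs the index of "播放" with the `<unk>` index, which is the same fallback that `vocab.set_default_index(vocab["<unk>"])` sets up.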

# Sort the label set so that label indices stay the same across runs
label_name = sorted(set(train_data[1].values[:]))

text_pipeline = lambda x: vocab(tokenizer(x))
label_pipeline = lambda x: label_name.index(x)

III. Model building

from torch import nn

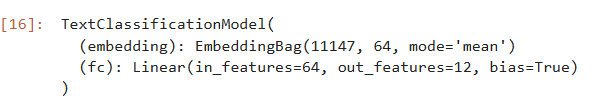

class TextClassificationModel(nn.Module):

    def __init__(self, vocab_size, embed_dim, num_class):
        super(TextClassificationModel, self).__init__()
        self.embedding = nn.EmbeddingBag(vocab_size, embed_dim)
        self.fc = nn.Linear(embed_dim, num_class)
        self.init_weights()

    def init_weights(self):
        initrange = 0.5
        self.embedding.weight.data.uniform_(-initrange, initrange)
        self.fc.weight.data.uniform_(-initrange, initrange)
        self.fc.bias.data.zero_()

    def forward(self, text, offsets):
        embedded = self.embedding(text, offsets)
        return self.fc(embedded)

num_class = len(label_name)
vocab_size = len(vocab)
em_size = 64
model = TextClassificationModel(vocab_size, em_size, num_class).to(device)
model
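The model's `forward` takes a flat `text` tensor plus `offsets`. In its default `mean` mode, `nn.EmbeddingBag` looks up one embedding row per token index and averages the rows of each sample, where `offsets` marks the position at which each sample starts in the flat sequence. The pure-Python sketch below illustrates that pooling on plain lists; `embedding_bag_mean` is a hypothetical helper for illustration, and it assumes every sample has at least one token.

```python
def embedding_bag_mean(weight, text, offsets):
    """Average the embedding rows of each bag.

    weight  : list of embedding rows, one per vocabulary index
    text    : flat list of token indices for all samples, concatenated
    offsets : start position of each sample inside `text`
    """
    bounds = list(offsets) + [len(text)]
    dim = len(weight[0])
    pooled = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        rows = [weight[i] for i in text[start:end]]
        # Mean over the bag, one embedding dimension at a time
        pooled.append([sum(r[d] for r in rows) / len(rows) for d in range(dim)])
    return pooled

# Toy embedding table: 4 vocabulary entries, embedding dimension 2
weight = [[0, 0], [1, 2], [3, 4], [5, 6]]
# Two samples: tokens [1, 2] and tokens [3, 3]
pooled = embedding_bag_mean(weight, text=[1, 2, 3, 3], offsets=[0, 2])
print(pooled)  # [[2.0, 3.0], [5.0, 6.0]]
```

Each sample ends up as one fixed-size vector regardless of its length, which is why the linear layer can follow directly.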

import time

def train(dataloader):
    model.train()  # switch to training mode
    total_acc, train_loss, total_count = 0, 0, 0
    log_interval = 50
    start_time = time.time()

    for idx, (text, label, offsets) in enumerate(dataloader):
        predicted_label = model(text, offsets)

        optimizer.zero_grad()
        loss = criterion(predicted_label, label)
        loss.backward()
        torch.nn.utils.clip_grad_norm_(model.parameters(), 0.1)  # gradient clipping
        optimizer.step()

        # Accumulate accuracy and loss over the current logging window
        total_acc += (predicted_label.argmax(1) == label).sum().item()
        train_loss += loss.item() * label.size(0)
        total_count += label.size(0)

        if idx % log_interval == 0 and idx > 0:
            elapsed = time.time() - start_time
            # `epoch` is the global variable set by the training loop
            print('| epoch {:1d} | {:4d}/{:4d} batches '
                  '| train_acc {:4.3f} train_loss {:4.5f}'.format(
                      epoch, idx, len(dataloader),
                      total_acc / total_count, train_loss / total_count))
            total_acc, train_loss, total_count = 0, 0, 0
            start_time = time.time()

def evaluate(dataloader):
    model.eval()  # switch to evaluation mode
    total_acc, test_loss, total_count = 0, 0, 0

    with torch.no_grad():
        for idx, (text, label, offsets) in enumerate(dataloader):
            predicted_label = model(text, offsets)
            loss = criterion(predicted_label, label)

            total_acc += (predicted_label.argmax(1) == label).sum().item()
            test_loss += loss.item() * label.size(0)
            total_count += label.size(0)

    return total_acc / total_count, test_loss / total_count

IV. Training the model

from torch.utils.data import DataLoader
from torch.utils.data.dataset import random_split
from torchtext.data.functional import to_map_style_dataset
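The `DataLoader` calls in this section pass `collate_fn=collate_batch`, but that function does not appear in this post. The following is a minimal sketch of what it might look like, assuming it follows the common `EmbeddingBag` pattern of concatenating all token indices into one tensor and recording where each sample starts. The `device`, `toy_vocab`, `label_name`, `text_pipeline`, and `label_pipeline` defined at the top are toy stand-ins (with made-up label names and character-level lookup) so the sketch runs on its own; with the real pipelines from section II, only the function itself is needed.

```python
import torch

# Toy stand-ins (assumptions for illustration only)
device = torch.device("cpu")
toy_vocab = {"<unk>": 0, "我": 1, "想": 2, "听": 3, "歌": 4}
label_name = ["Music-Play", "Travel-Query"]  # hypothetical label names
text_pipeline = lambda s: [toy_vocab.get(ch, 0) for ch in s]  # character-level lookup
label_pipeline = lambda x: label_name.index(x)

def collate_batch(batch):
    """Turn a list of (text, label) pairs into (text, label, offsets) tensors."""
    label_list, text_list, offsets = [], [], [0]
    for _text, _label in batch:
        label_list.append(label_pipeline(_label))
        processed = torch.tensor(text_pipeline(_text), dtype=torch.int64)
        text_list.append(processed)
        offsets.append(processed.size(0))  # length of this sample
    labels = torch.tensor(label_list, dtype=torch.int64)
    text = torch.cat(text_list)  # all samples as one flat tensor
    # Cumulative sum of lengths gives the start index of each sample
    offsets = torch.tensor(offsets[:-1]).cumsum(dim=0)
    # Return order matches the (text, label, offsets) unpacking in train/evaluate
    return text.to(device), labels.to(device), offsets.to(device)

text, labels, offsets = collate_batch([("我想听歌", "Music-Play"), ("听歌", "Travel-Query")])
```

With the two toy samples above, `offsets` holds the start positions 0 and 4, and `text` holds the six token indices of both samples back to back.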

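# Hyperparameters
EPOCHS = 10       # number of training epochs
LR = 5            # initial learning rate
BATCH_SIZE = 64   # batch size

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=LR)
# With step_size=1, every scheduler.step() call multiplies the learning rate by gamma
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.1)
total_accu = None

# Rebuild the iterator: the earlier one was consumed while building the vocabulary
train_iter = custom_data_iter(train_data[0].values[:], train_data[1].values[:])
train_dataset = to_map_style_dataset(train_iter)

# 80/20 split into training and validation sets
num_train = int(len(train_dataset) * 0.8)
split_train, split_valid = random_split(train_dataset, [num_train, len(train_dataset) - num_train])

# collate_batch is the batch-collation function; it must return (text, label, offsets)
train_dataloader = DataLoader(split_train, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch)
valid_dataloader = DataLoader(split_valid, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch)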

for epoch in range(1, EPOCHS + 1):
    epoch_start_time = time.time()
    train(train_dataloader)
    val_acc, val_loss = evaluate(valid_dataloader)

    # Learning rate that was in effect during this epoch
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    # Decay the learning rate only when validation accuracy drops
    if total_accu is not None and total_accu > val_acc:
        scheduler.step()
    else:
        total_accu = val_acc

    print('-' * 69)
    print('| epoch {:1d} | time: {:4.2f}s | '
          'valid_acc {:4.3f} valid_loss {:4.3f} | lr {:4.6f}'.format(
              epoch, time.time() - epoch_start_time, val_acc, val_loss, lr))
    print('-' * 69)
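The loop above only calls `scheduler.step()` when validation accuracy falls below the last recorded value, so the learning rate stays constant while accuracy holds or improves. The pure-Python simulation below traces that rule without any training; `simulate_lr` is a hypothetical helper, and the accuracy values are invented purely to exercise the logic.

```python
def simulate_lr(val_accs, lr=5.0, gamma=0.1):
    """Return the learning rate in effect during each epoch."""
    total_accu = None
    history = []
    for val_acc in val_accs:
        history.append(lr)  # rate used for this epoch
        if total_accu is not None and total_accu > val_acc:
            lr *= gamma  # accuracy dropped: decay, as scheduler.step() would
        else:
            total_accu = val_acc  # accuracy held or improved: record it
    return history

# Made-up validation accuracies: the drop in epoch 3 triggers one decay
history = simulate_lr([0.80, 0.85, 0.84, 0.86, 0.86])
```

In this run the first three epochs use the initial rate and the last two use the rate after one decay, since only the third epoch's accuracy is lower than the recorded one.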

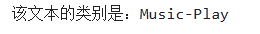

def predict(text):
    with torch.no_grad():
        text = torch.tensor(text_pipeline(text)).to(device)
        # A single sample, so its only offset is 0
        output = model(text, torch.tensor([0]).to(device))
        return output.argmax(1).item()

# ex_text_str = "还有南昌到哈尔滨西的火车票吗?"
ex_text_str = "我想听TWICE的新曲"

print("The category of this text is: %s" % label_name[predict(ex_text_str)])

Summary

In this exercise I implemented classification of Chinese text; the main code is largely the same as in week N1.