🌟Deploying prometheus-operator to monitor a K8S cluster

Download the source code

bash

wget -O kube-prometheus-0.11.0.tar.gz https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.11.0.tar.gz

Extract the archive

bash

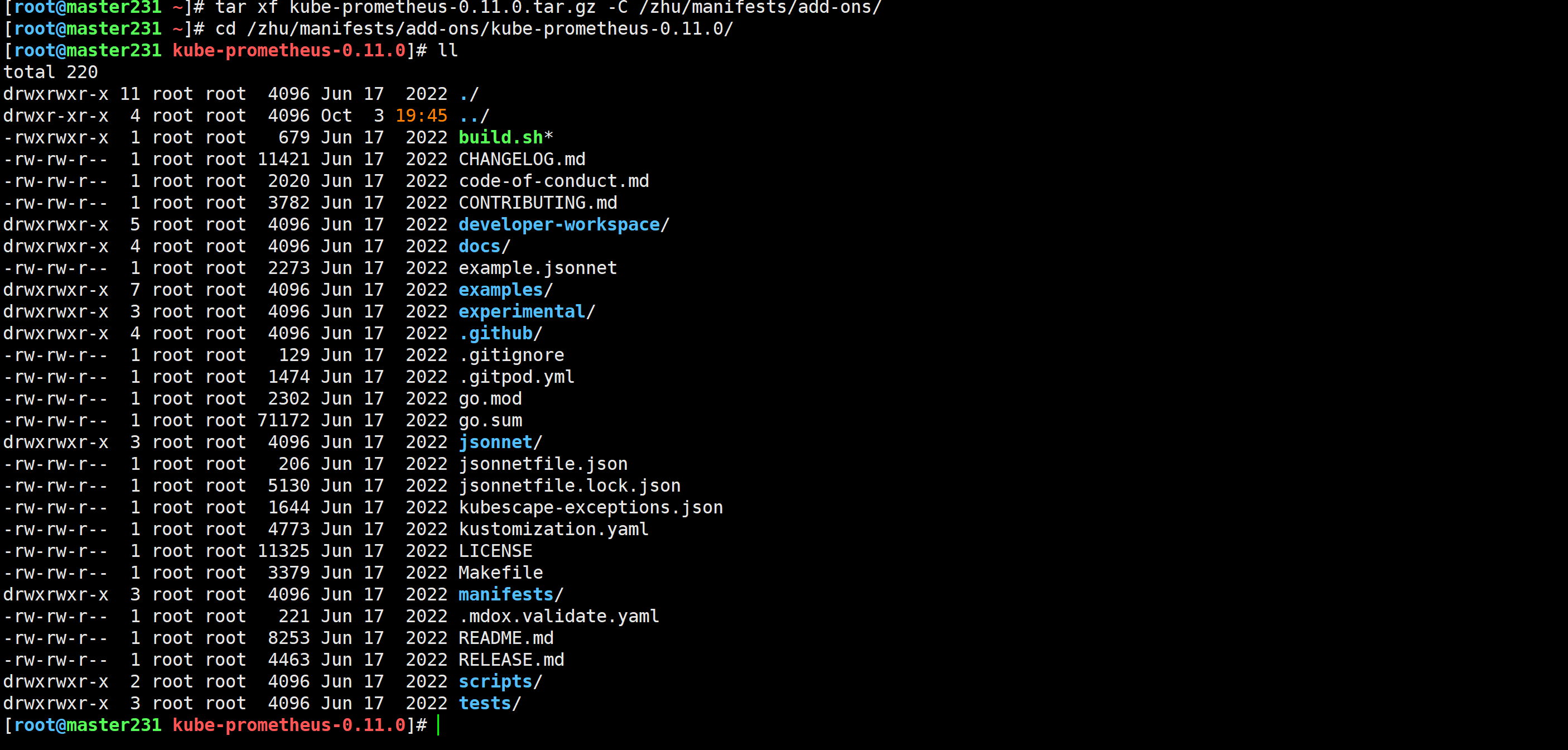

[root@master231 ~]# tar xf kube-prometheus-0.11.0.tar.gz -C /zhu/manifests/add-ons/

[root@master231 ~]# cd /zhu/manifests/add-ons/kube-prometheus-0.11.0/

[root@master231 kube-prometheus-0.11.0]#

Import the images

bash

alertmanager-v0.24.0.tar.gz

blackbox-exporter-v0.21.0.tar.gz

configmap-reload-v0.5.0.tar.gz

grafana-v8.5.5.tar.gz

kube-rbac-proxy-v0.12.0.tar.gz

kube-state-metrics-v2.5.0.tar.gz

node-exporter-v1.3.1.tar.gz

prometheus-adapter-v0.9.1.tar.gz

prometheus-config-reloader-v0.57.0.tar.gz

prometheus-operator-v0.57.0.tar.gz

prometheus-v2.36.1.tar.gz

Install Prometheus-Operator

bash

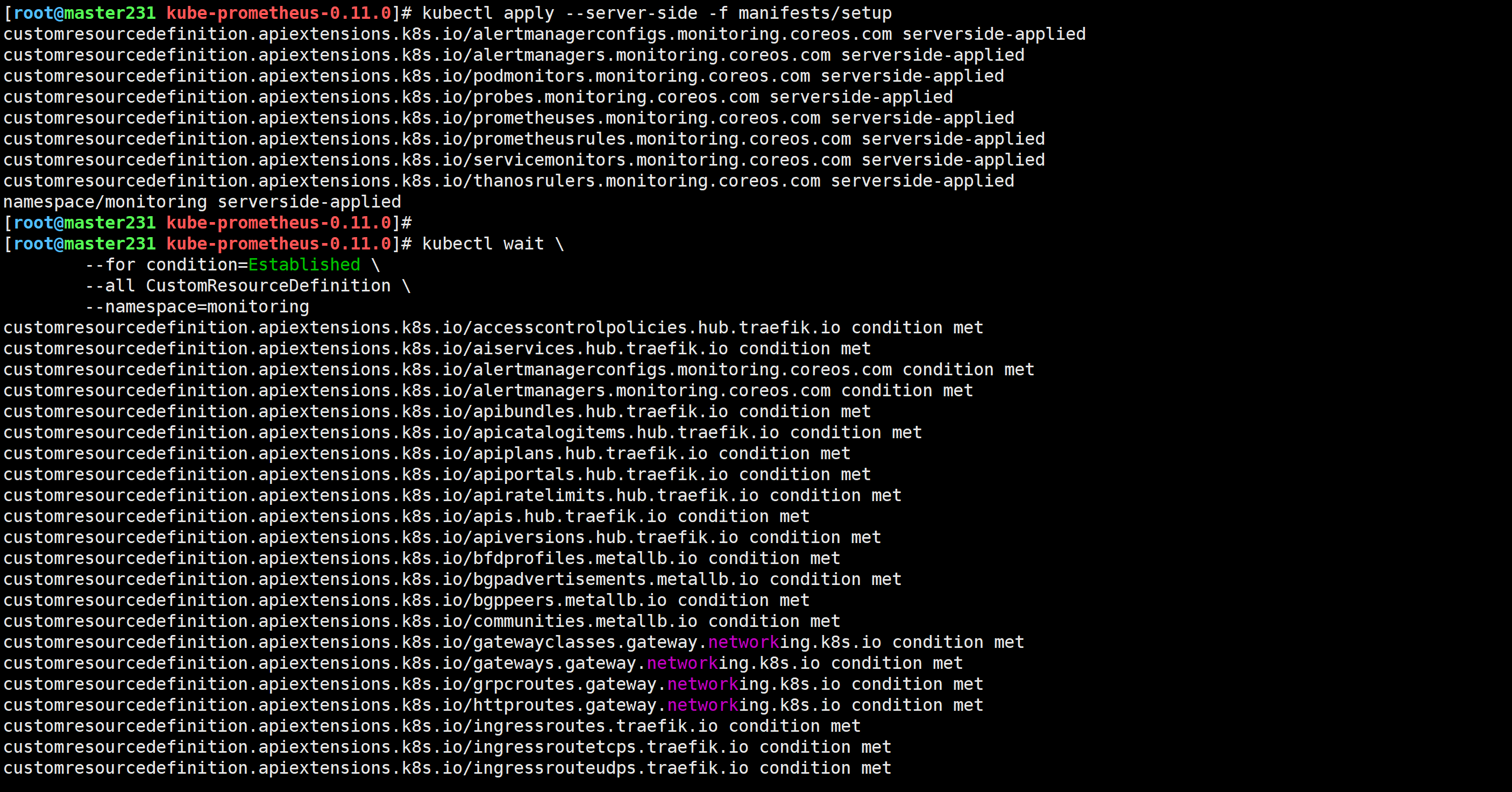

[root@master231 kube-prometheus-0.11.0]# kubectl apply --server-side -f manifests/setup

[root@master231 kube-prometheus-0.11.0]# kubectl wait --for condition=Established --all CustomResourceDefinition --namespace=monitoring

[root@master231 kube-prometheus-0.11.0]# kubectl apply -f manifests/

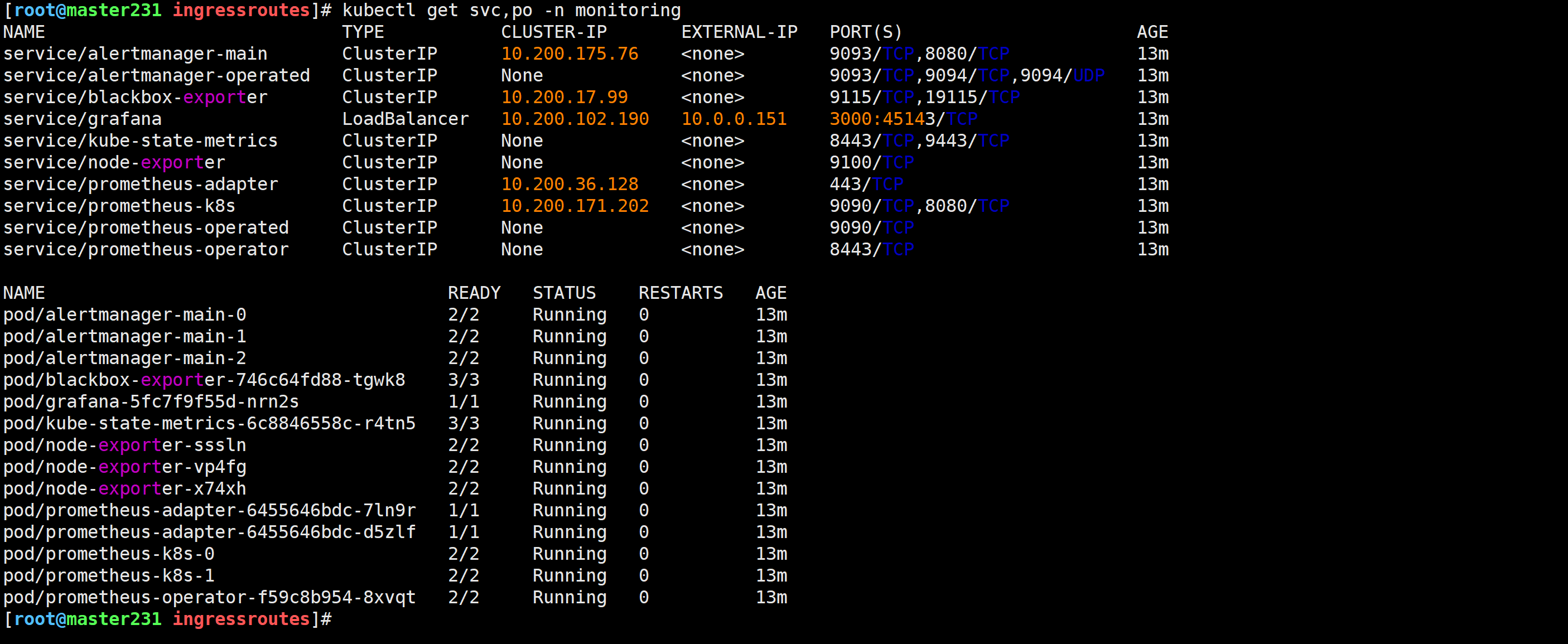

Check that Prometheus was deployed successfully

bash

[root@master231 kube-prometheus-0.11.0]# kubectl get pods -n monitoring -o wide

Change the type of the Grafana svc

bash

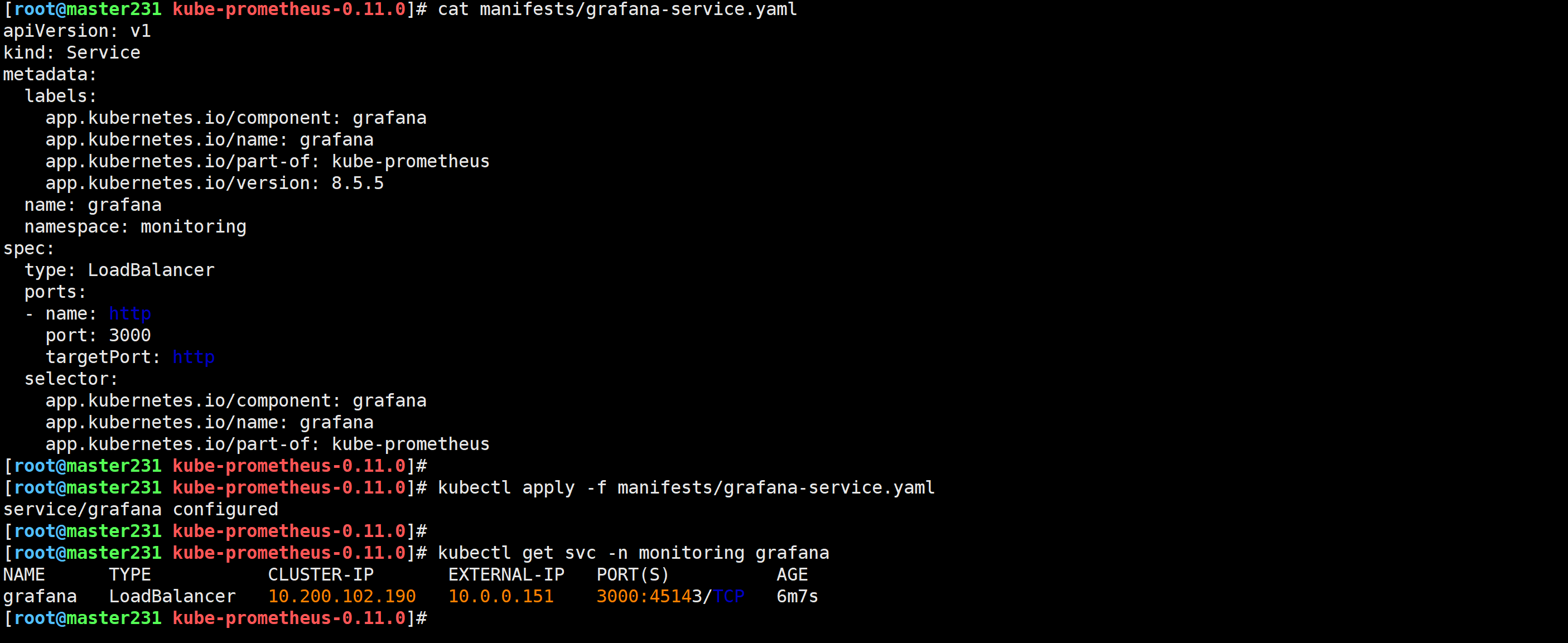

[root@master231 kube-prometheus-0.11.0]# cat manifests/grafana-service.yaml

apiVersion: v1

kind: Service

metadata:

...

name: grafana

namespace: monitoring

spec:

type: LoadBalancer

...

[root@master231 kube-prometheus-0.11.0]#

[root@master231 kube-prometheus-0.11.0]# kubectl apply -f manifests/grafana-service.yaml

service/grafana configured

[root@master231 kube-prometheus-0.11.0]#

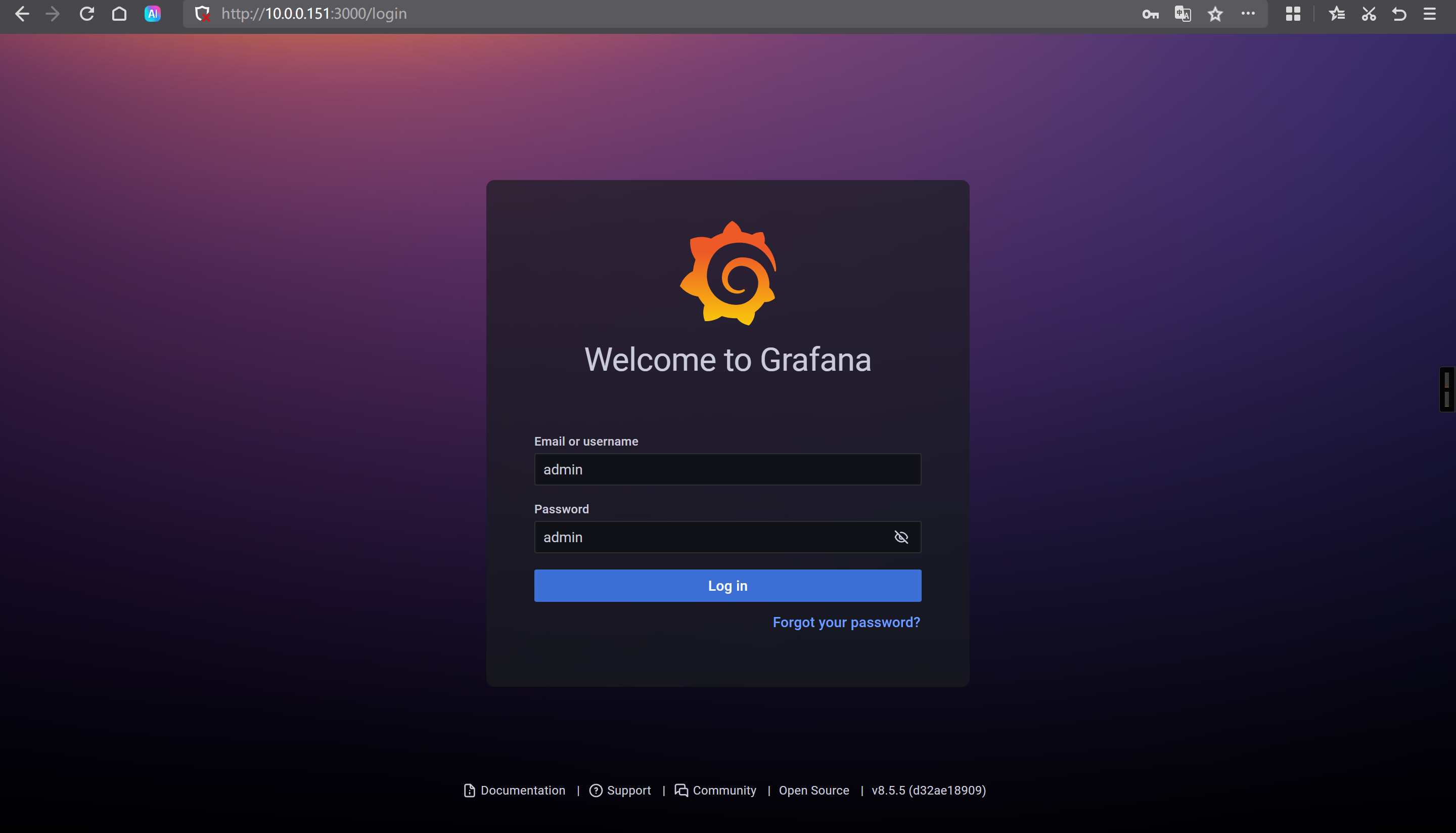

Access the Grafana WebUI

bash

http://10.0.0.151:3000/

Default username and password: admin/admin

🌟Exposing the Prometheus WebUI outside the K8S cluster with traefik

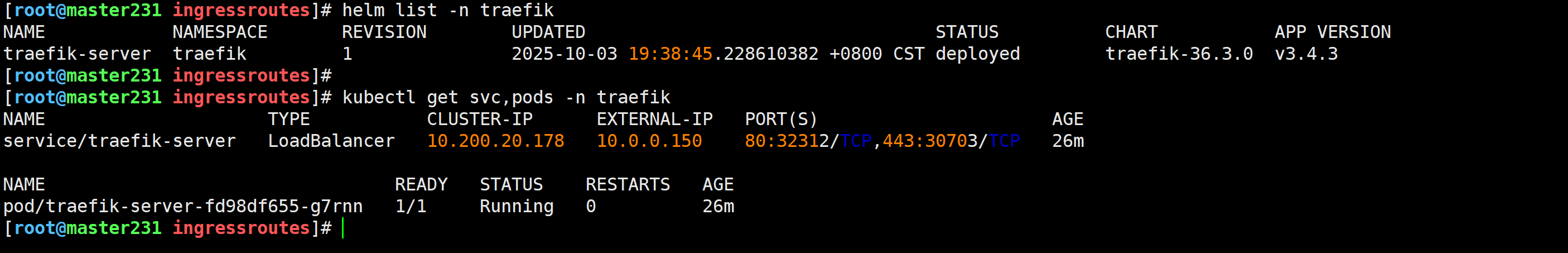

Check that the traefik component is deployed

bash

[root@master231 ingressroutes]# helm list -n traefik

[root@master231 ingressroutes]# kubectl get svc,pods -n traefik

Write the resource manifest

yaml

[root@master231 ingressroutes]# cat 19-ingressRoute-prometheus.yaml

apiVersion: traefik.io/v1alpha1

kind: IngressRoute

metadata:

name: ingressroute-prometheus

namespace: monitoring

spec:

entryPoints:

- web

routes:

- match: Host(`prom.zhubaolin.com`) && PathPrefix(`/`)

kind: Rule

services:

- name: prometheus-k8s

port: 9090

[root@master231 ingressroutes]# kubectl apply -f 19-ingressRoute-prometheus.yaml

ingressroute.traefik.io/ingressroute-prometheus created

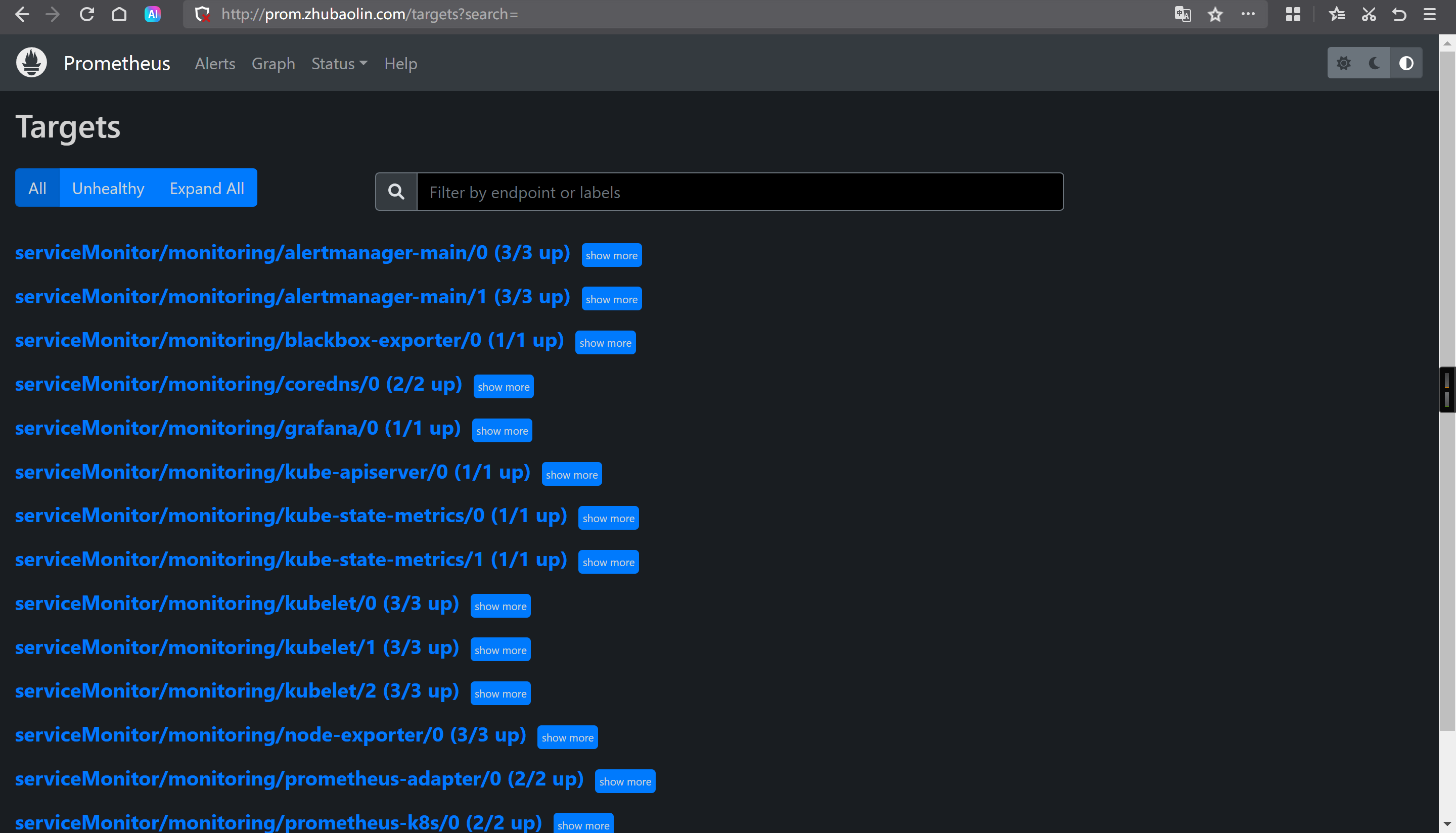

[root@master231 ingressroutes]#

Test access from a browser

bash

http://prom.zhubaolin.com/targets?search=

Other options

bash

hostNetwork

hostPort

port-forward

NodePort

LoadBalancer

Ingress

IngressRoute

🌟Case study: monitoring etcd, a cloud-native application, with Prometheus

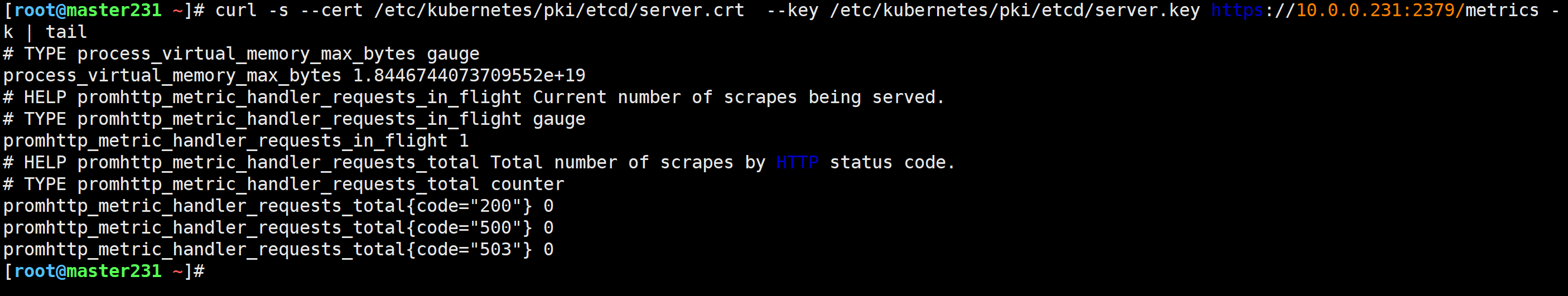

Test the etcd metrics endpoint

Find where the etcd certificates are stored

bash

[root@master231 ~]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --key-file=/etc/kubernetes/pki/etcd/server.key

- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

Query the metrics endpoint using the etcd certificates

bash

[root@master231 ~]# curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.0.0.231:2379/metrics -k | tail

Create a Secret from the etcd certificates and mount it into the Prometheus server

Find the paths of the etcd certificate files that need to be mounted

bash

[root@master231 ~]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --key-file=/etc/kubernetes/pki/etcd/server.key

- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

Create the Secret from the actual etcd certificate paths

bash

[root@master231 ~]# kubectl create secret generic etcd-tls --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n monitoring

secret/etcd-tls created

[root@master231 ~]#

[root@master231 ~]# kubectl -n monitoring get secrets etcd-tls

NAME TYPE DATA AGE

etcd-tls Opaque 3 12s

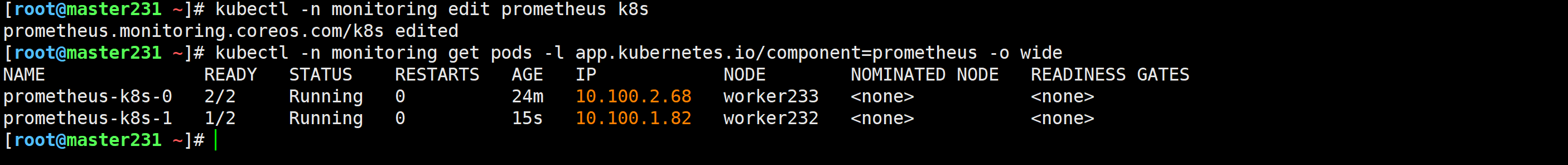

[root@master231 ~]#

Edit the Prometheus resource; the Pods restart automatically after the change

bash

[root@master231 ~]# kubectl -n monitoring edit prometheus k8s

...

spec:

secrets:

- etcd-tls

...

[root@master231 ~]# kubectl -n monitoring get pods -l app.kubernetes.io/component=prometheus -o wide

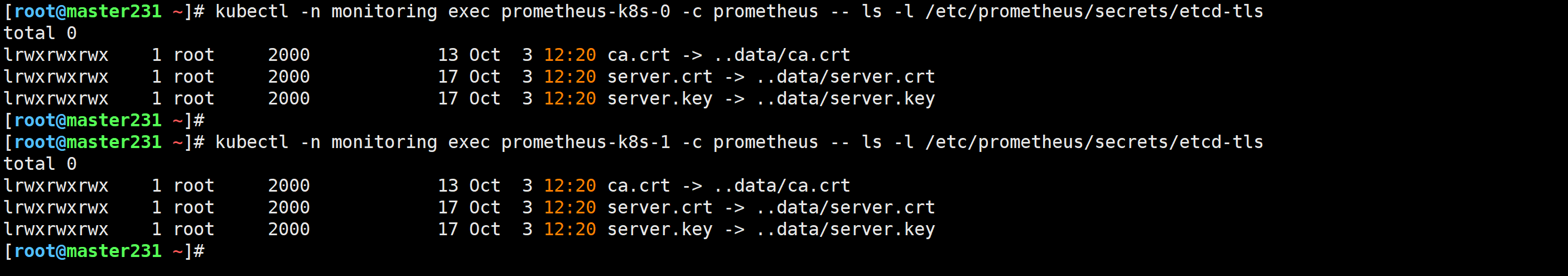

Check that the certificates were mounted successfully

bash

[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-0 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls

[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-1 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls
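The connection between the Secret created above and the file paths listed here follows a simple naming rule: with `--from-file=<path>` and no explicit key, kubectl uses the file's basename as the Secret key, and prometheus-operator mounts every Secret listed under `spec.secrets` at `/etc/prometheus/secrets/<secret-name>/`. The sketch below only illustrates that rule; the helper name `secret_mount_path` is hypothetical and the script does not talk to the cluster.

```shell
#!/bin/sh
# Hypothetical helper (not part of kubectl or prometheus-operator):
# predict where a file added with `--from-file=PATH` to the Secret NAME
# appears inside the Prometheus container.
secret_mount_path() {
  secret_name=$1
  source_file=$2
  # Secret key = basename of the source file; mount dir = /etc/prometheus/secrets/<name>
  printf '/etc/prometheus/secrets/%s/%s\n' "$secret_name" "$(basename "$source_file")"
}

# The three files used for the etcd-tls Secret in this walkthrough.
for f in /etc/kubernetes/pki/etcd/server.crt \
         /etc/kubernetes/pki/etcd/server.key \
         /etc/kubernetes/pki/etcd/ca.crt; do
  secret_mount_path etcd-tls "$f"
done
```

The printed paths are the ones a scrape configuration would reference for its CA, certificate, and key files.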

Write the resource manifest

yaml

[root@master231 servicemonitors]# cat 01-smon-svc-etcd.yaml

apiVersion: v1

kind: Service

metadata:

name: etcd-k8s

namespace: kube-system

labels:

apps: etcd

spec:

selector:

component: etcd

ports:

- name: https-metrics

port: 2379

targetPort: 2379

type: ClusterIP

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: smon-etcd

namespace: monitoring

spec:

# Label on the Service whose value is used as the job name; optional.

jobLabel: kubeadm-etcd-k8s

# How the backend targets are scraped

endpoints:

# Scrape interval for the metrics

- interval: 3s

# Metrics port; this refers to the Service's spec.ports[].name

port: https-metrics

# Path of the metrics endpoint

path: /metrics

# Scheme of the metrics endpoint

scheme: https

# TLS settings used to connect to etcd

tlsConfig:

# CA certificate file for etcd

caFile: /etc/prometheus/secrets/etcd-tls/ca.crt

# Certificate file for etcd

certFile: /etc/prometheus/secrets/etcd-tls/server.crt

# Private key file for etcd

keyFile: /etc/prometheus/secrets/etcd-tls/server.key

# Skip certificate verification, since these are self-signed certificates rather than ones issued by a public CA.

insecureSkipVerify: true

# Namespace of the target Service

namespaceSelector:

matchNames:

- kube-system

# Labels of the target Service.

selector:

# Note: these labels must match the labels on the etcd Service

matchLabels:

apps: etcd

[root@master231 servicemonitors]#

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl apply -f 01-smon-svc-etcd.yaml

service/etcd-k8s created

servicemonitor.monitoring.coreos.com/smon-etcd created

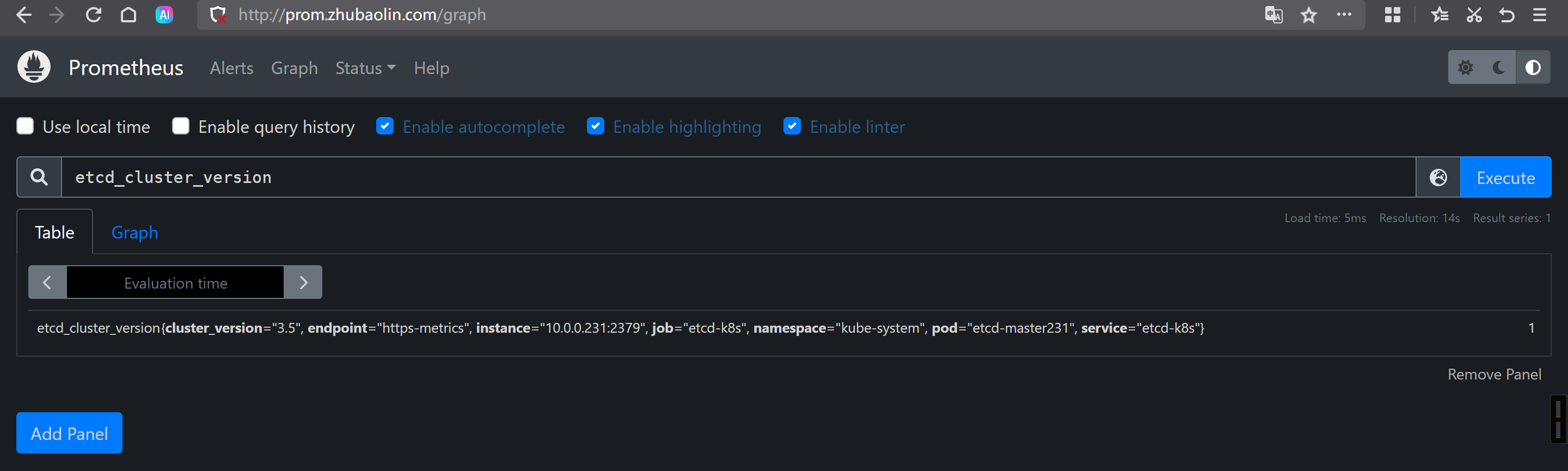

[root@master231 servicemonitors]#

View the data in Prometheus

bash

etcd_cluster_version

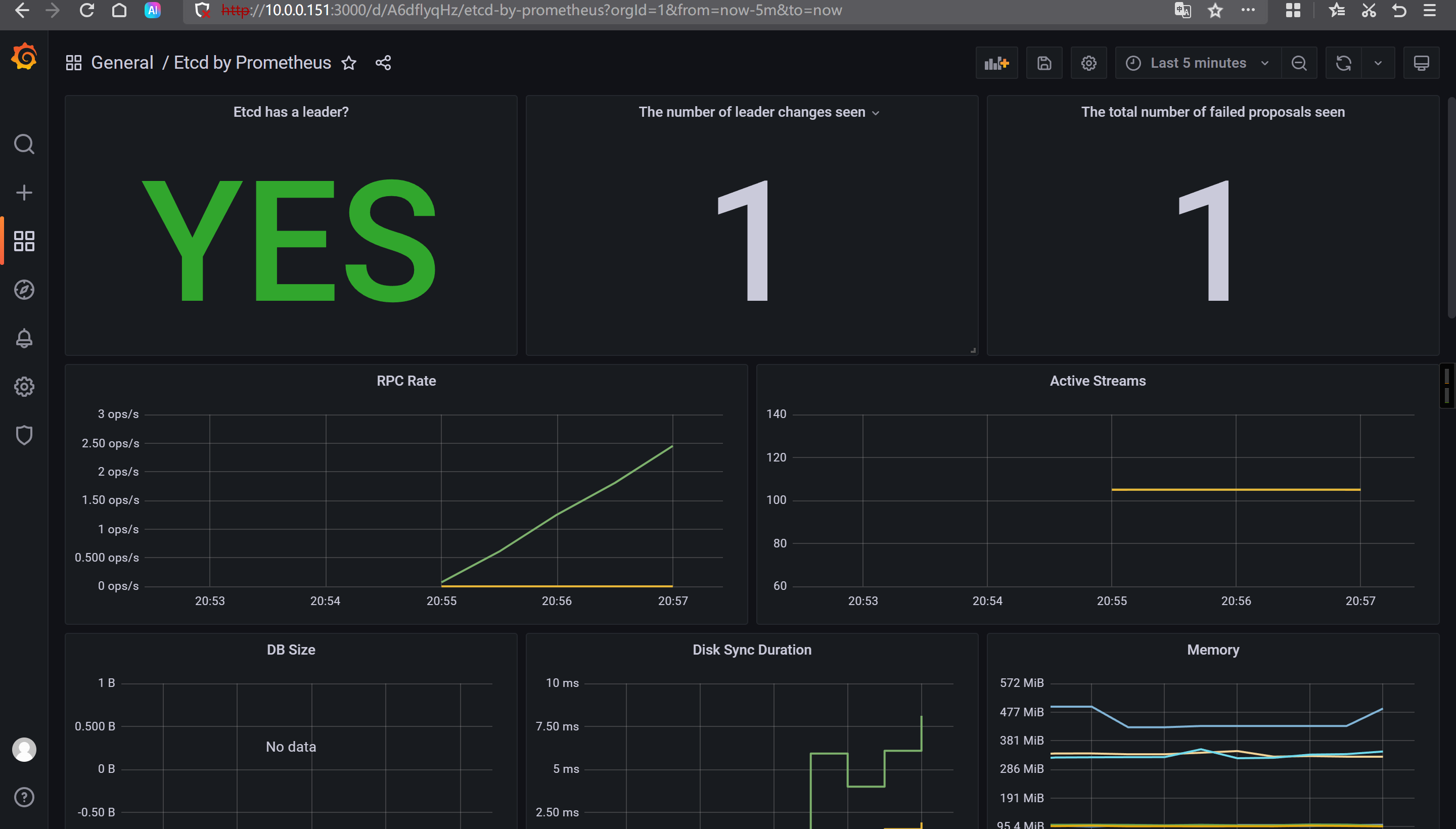
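The ServiceMonitor above finds the etcd Service through `selector.matchLabels`: a Service is selected only if every key/value pair in the selector is present among its labels. The following is a minimal sketch of that matching rule in plain shell; `labels_match` is a hypothetical helper written for illustration, not a kubectl or operator command, and labels are passed as comma-separated `key=value` lists.

```shell
#!/bin/sh
# labels_match SELECTOR LABELS
# Returns 0 when every key=value pair of SELECTOR also appears in LABELS.
labels_match() {
  selector=$1
  labels=$2
  old_ifs=$IFS
  IFS=,
  for want in $selector; do
    found=no
    for have in $labels; do
      if [ "$want" = "$have" ]; then
        found=yes
      fi
    done
    if [ "$found" = no ]; then
      IFS=$old_ifs
      return 1
    fi
  done
  IFS=$old_ifs
  return 0
}

# The etcd Service carries apps=etcd, so the selector apps=etcd selects it.
if labels_match "apps=etcd" "apps=etcd"; then echo "etcd-k8s: selected"; fi
# A Service labelled apps=mysqld is not selected by the same selector.
if ! labels_match "apps=etcd" "apps=mysqld"; then echo "other service: not selected"; fi
```

If a target does not show up in Prometheus, comparing the Service labels with the selector in this way is usually the first thing to check.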

Import the Grafana dashboard template

bash

3070

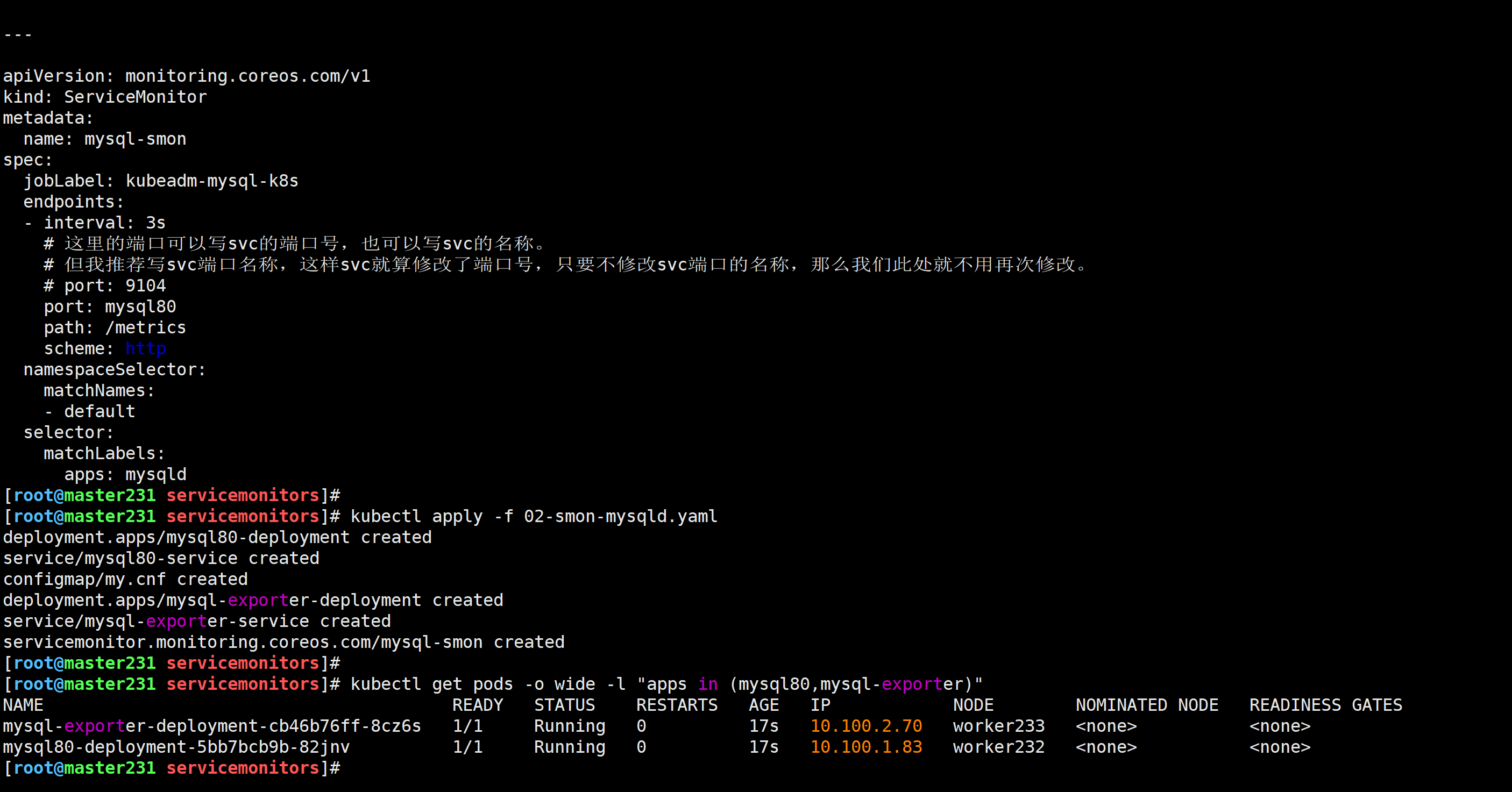

🌟Monitoring MySQL, a non-cloud-native application, with Prometheus

Write the resource manifest

yaml

[root@master231 servicemonitors]# cat > 02-smon-mysqld.yaml <<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql80-deployment

spec:

replicas: 1

selector:

matchLabels:

apps: mysql80

template:

metadata:

labels:

apps: mysql80

spec:

containers:

- name: mysql

image: harbor250.zhubl.xyz/zhubl-db/mysql:8.0.36-oracle

ports:

- containerPort: 3306

env:

- name: MYSQL_ROOT_PASSWORD

value: zhubaolin

- name: MYSQL_USER

value: zhu

- name: MYSQL_PASSWORD

value: "zhu"

---

apiVersion: v1

kind: Service

metadata:

name: mysql80-service

spec:

selector:

apps: mysql80

ports:

- protocol: TCP

port: 3306

targetPort: 3306

---

apiVersion: v1

kind: ConfigMap

metadata:

name: my.cnf

data:

.my.cnf: |-

[client]

user = zhu

password = zhu

[client.servers]

user = zhu

password = zhu

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql-exporter-deployment

spec:

replicas: 1

selector:

matchLabels:

apps: mysql-exporter

template:

metadata:

labels:

apps: mysql-exporter

spec:

volumes:

- name: data

configMap:

name: my.cnf

items:

- key: .my.cnf

path: .my.cnf

containers:

- name: mysql-exporter

image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/mysqld-exporter:v0.15.1

command:

- mysqld_exporter

- --config.my-cnf=/root/my.cnf

- --mysqld.address=mysql80-service.default.svc.zhubl.xyz:3306

securityContext:

runAsUser: 0

ports:

- containerPort: 9104

#env:

#- name: DATA_SOURCE_NAME

# value: mysql_exporter:zhubaolin@(mysql80-service.default.svc.zhubl.xyz:3306)

volumeMounts:

- name: data

mountPath: /root/my.cnf

subPath: .my.cnf

---

apiVersion: v1

kind: Service

metadata:

name: mysql-exporter-service

labels:

apps: mysqld

spec:

selector:

apps: mysql-exporter

ports:

- protocol: TCP

port: 9104

targetPort: 9104

name: mysql80

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: mysql-smon

spec:

jobLabel: kubeadm-mysql-k8s

endpoints:

- interval: 3s

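# The port field refers to the Service port by name (spec.ports[].name); a port number belongs in the separate targetPort field.

# Using the port name is recommended: if the Service's port number changes, nothing here needs to change as long as the port name stays the same.

# targetPort: 9104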

port: mysql80

path: /metrics

scheme: http

namespaceSelector:

matchNames:

- default

selector:

matchLabels:

apps: mysqld

EOF

[root@master231 servicemonitors]# kubectl apply -f 02-smon-mysqld.yaml

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl get pods -o wide -l "apps in (mysql80,mysql-exporter)"

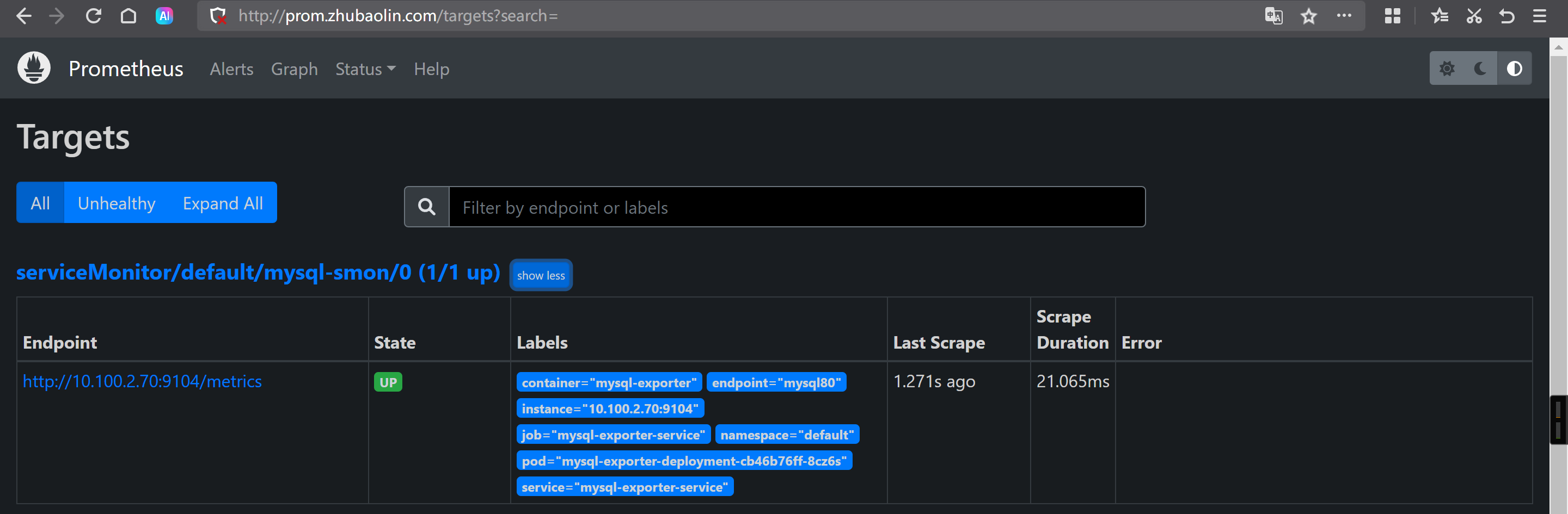

Test the query in Prometheus

bash

mysql_up[30s]

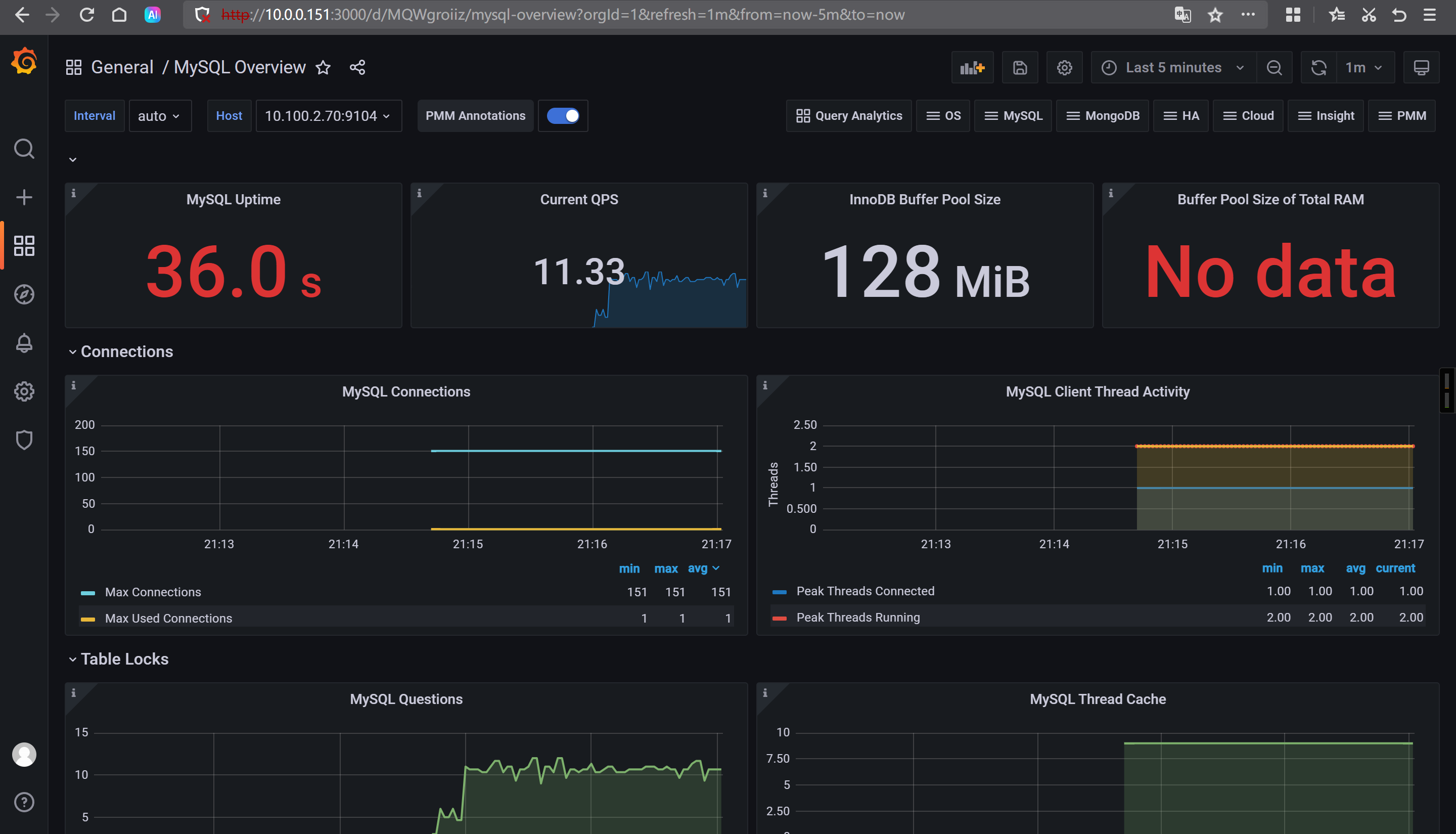

Import the Grafana dashboard templates

bash

7362

14057

17320

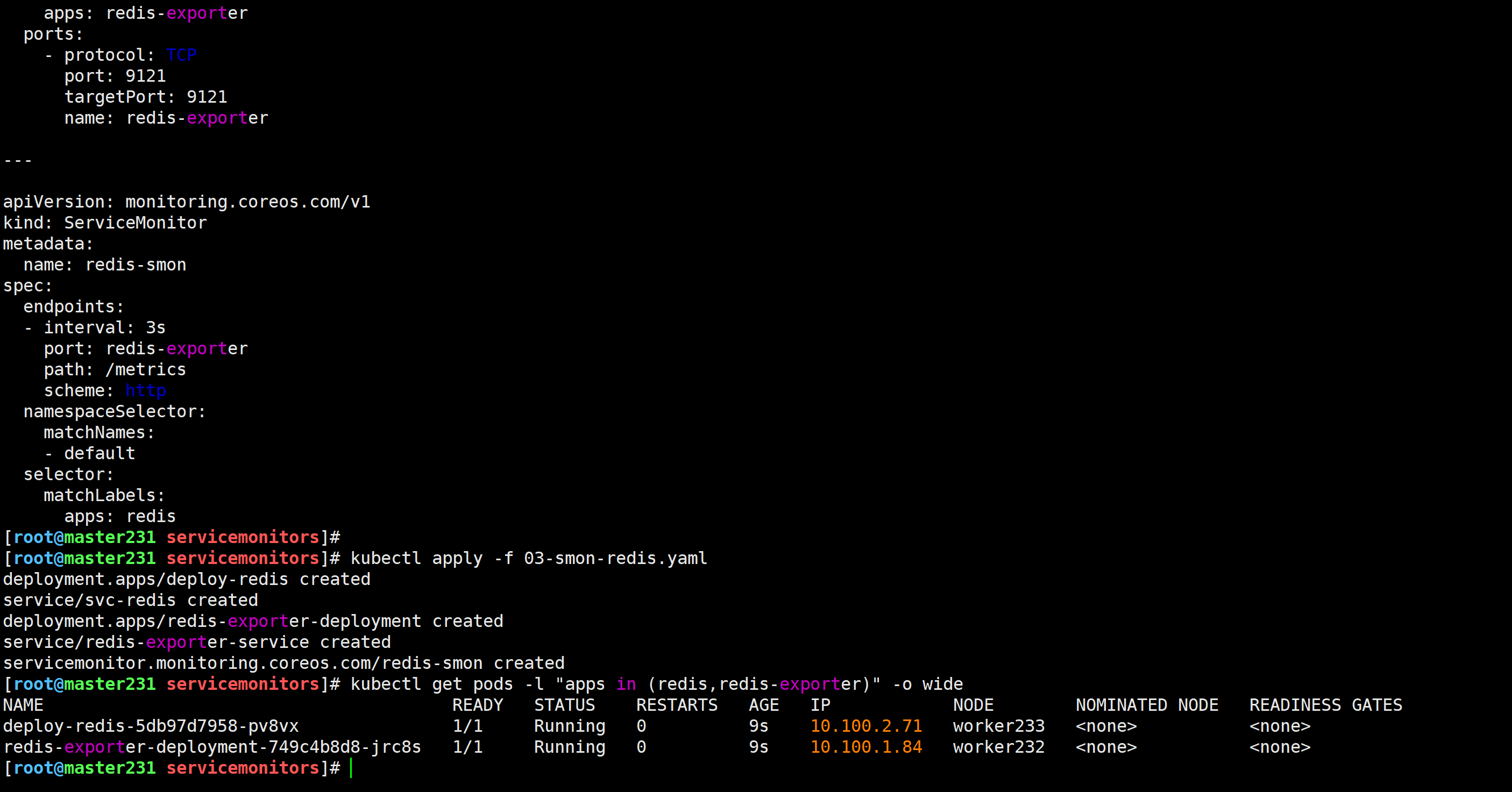

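🌟Hands-on: monitoring redis with a ServiceMonitor (smon)

- Deploy the redis service in the k8s cluster

- Monitor the Redis service with a smon resource

- Visualize the data in Grafana

Load the redis-exporter image and push it to the harbor registry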

bash

docker load -i redis_exporter-v1.74.0-alpine.tar.gz

docker tag oliver006/redis_exporter:v1.74.0-alpine harbor250.zhubl.xyz/redis/redis_exporter:v1.74.0-alpine

docker push harbor250.zhubl.xyz/redis/redis_exporter:v1.74.0-alpine

Monitor the redis service with a ServiceMonitor

yaml

[root@master231 servicemonitors]# cat 03-smon-redis.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-redis

spec:

replicas: 1

selector:

matchLabels:

apps: redis

template:

metadata:

labels:

apps: redis

spec:

containers:

- image: harbor250.zhubl.xyz/redis/redis:6.0.5

name: db

ports:

- containerPort: 6379

---

apiVersion: v1

kind: Service

metadata:

name: svc-redis

spec:

ports:

- port: 6379

selector:

apps: redis

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: redis-exporter-deployment

spec:

replicas: 1

selector:

matchLabels:

apps: redis-exporter

template:

metadata:

labels:

apps: redis-exporter

spec:

containers:

- name: redis-exporter

image: harbor250.zhubl.xyz/redis/redis_exporter:v1.74.0-alpine

env:

- name: REDIS_ADDR

value: redis://svc-redis.default.svc:6379

- name: REDIS_EXPORTER_WEB_TELEMETRY_PATH

value: /metrics

- name: REDIS_EXPORTER_WEB_LISTEN_ADDRESS

value: :9121

#command:

#- redis_exporter

#args:

#- -redis.addr redis://svc-redis.default.svc:6379

#- -web.telemetry-path /metrics

#- -web.listen-address :9121

ports:

- containerPort: 9121

---

apiVersion: v1

kind: Service

metadata:

name: redis-exporter-service

labels:

apps: redis

spec:

selector:

apps: redis-exporter

ports:

- protocol: TCP

port: 9121

targetPort: 9121

name: redis-exporter

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: redis-smon

spec:

endpoints:

- interval: 3s

port: redis-exporter

path: /metrics

scheme: http

namespaceSelector:

matchNames:

- default

selector:

matchLabels:

apps: redis

[root@master231 servicemonitors]# kubectl apply -f 03-smon-redis.yaml

deployment.apps/deploy-redis created

service/svc-redis created

deployment.apps/redis-exporter-deployment created

service/redis-exporter-service created

servicemonitor.monitoring.coreos.com/redis-smon created

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl get pods -l "apps in (redis,redis-exporter)" -o wide

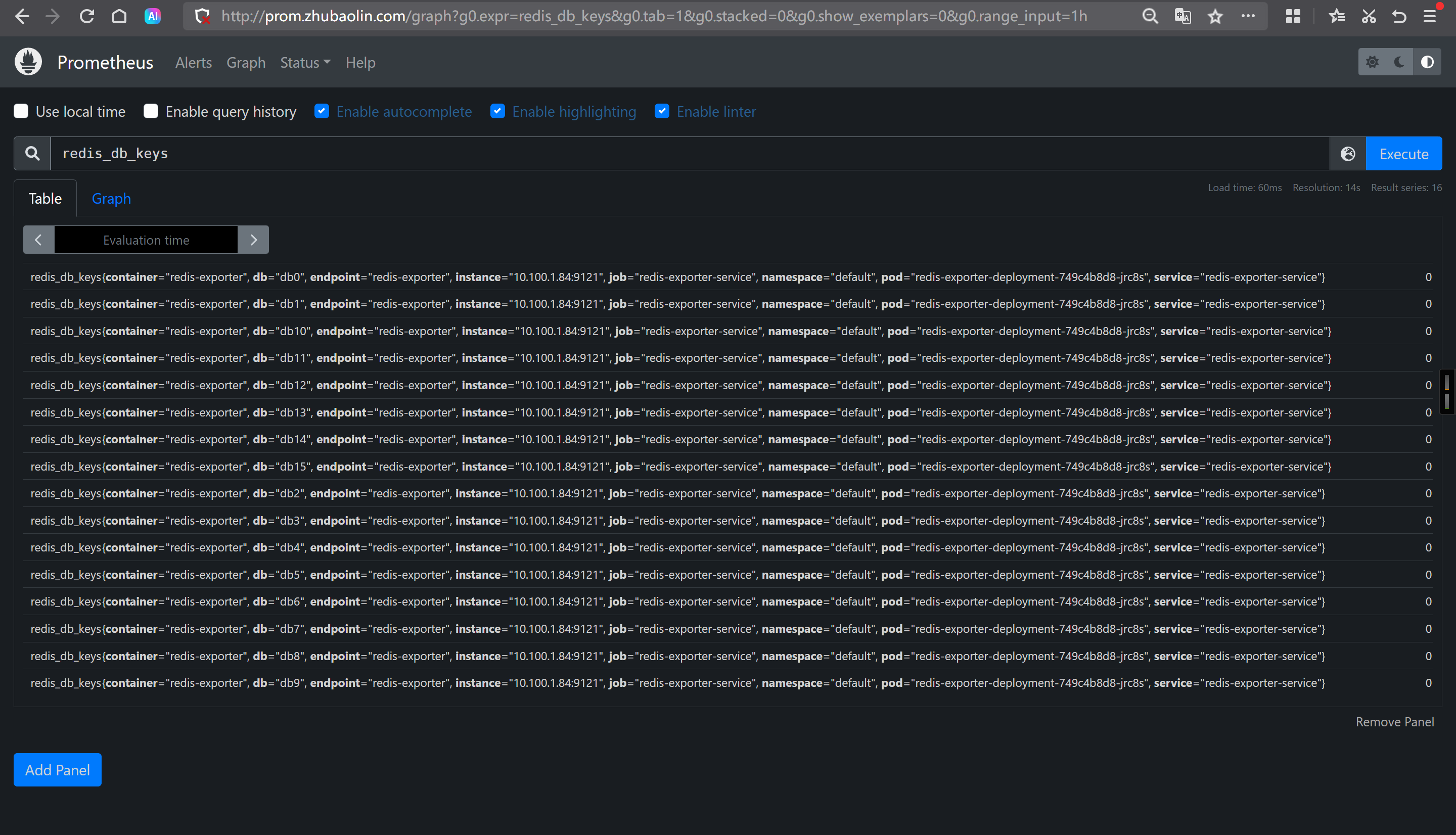

Access the Prometheus WebUI

bash

http://prom.zhubl.xyz/targets?search=

Test metric: redis_db_keys

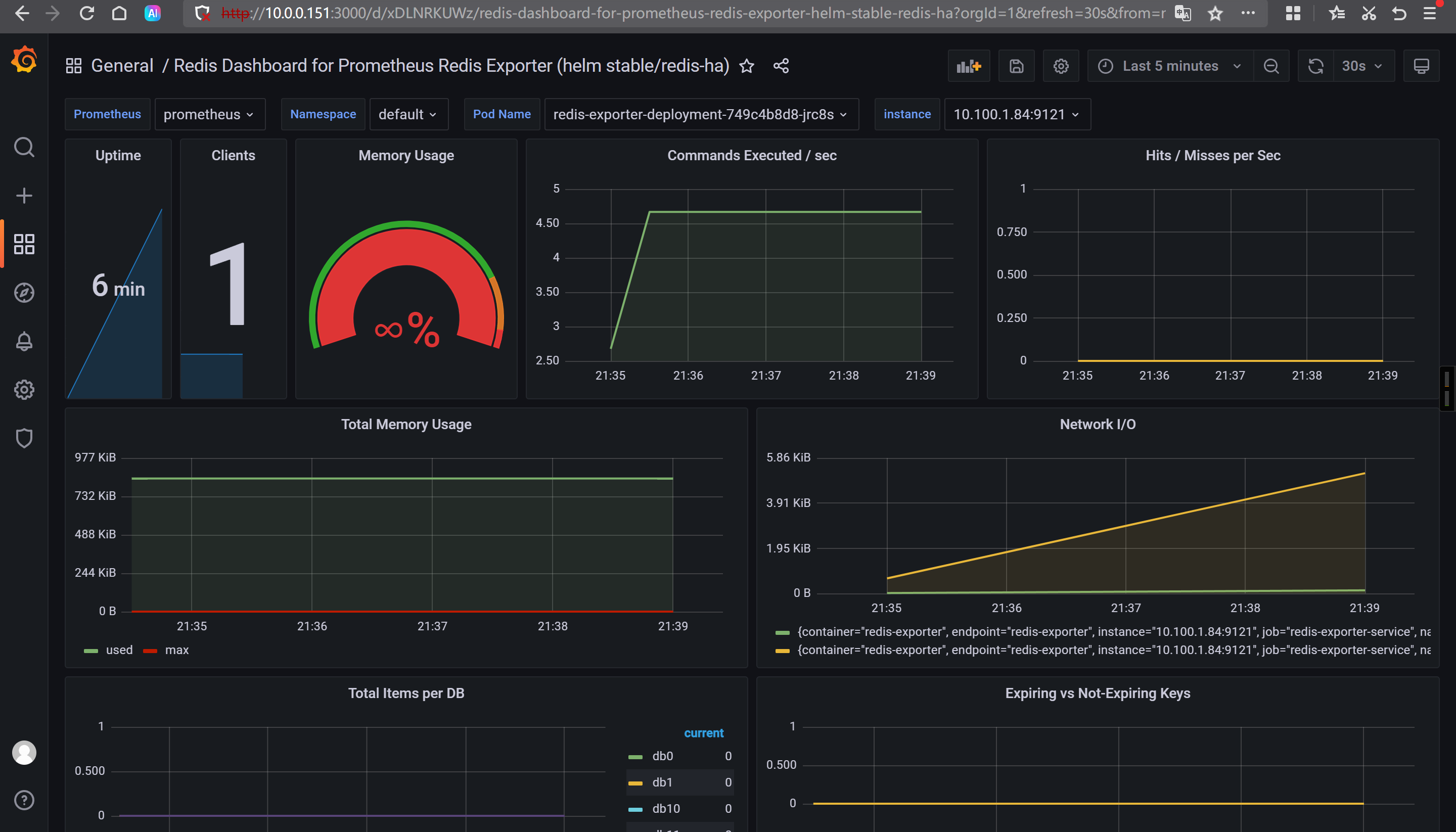

Grafana dashboard IDs to import

bash

11835

14091

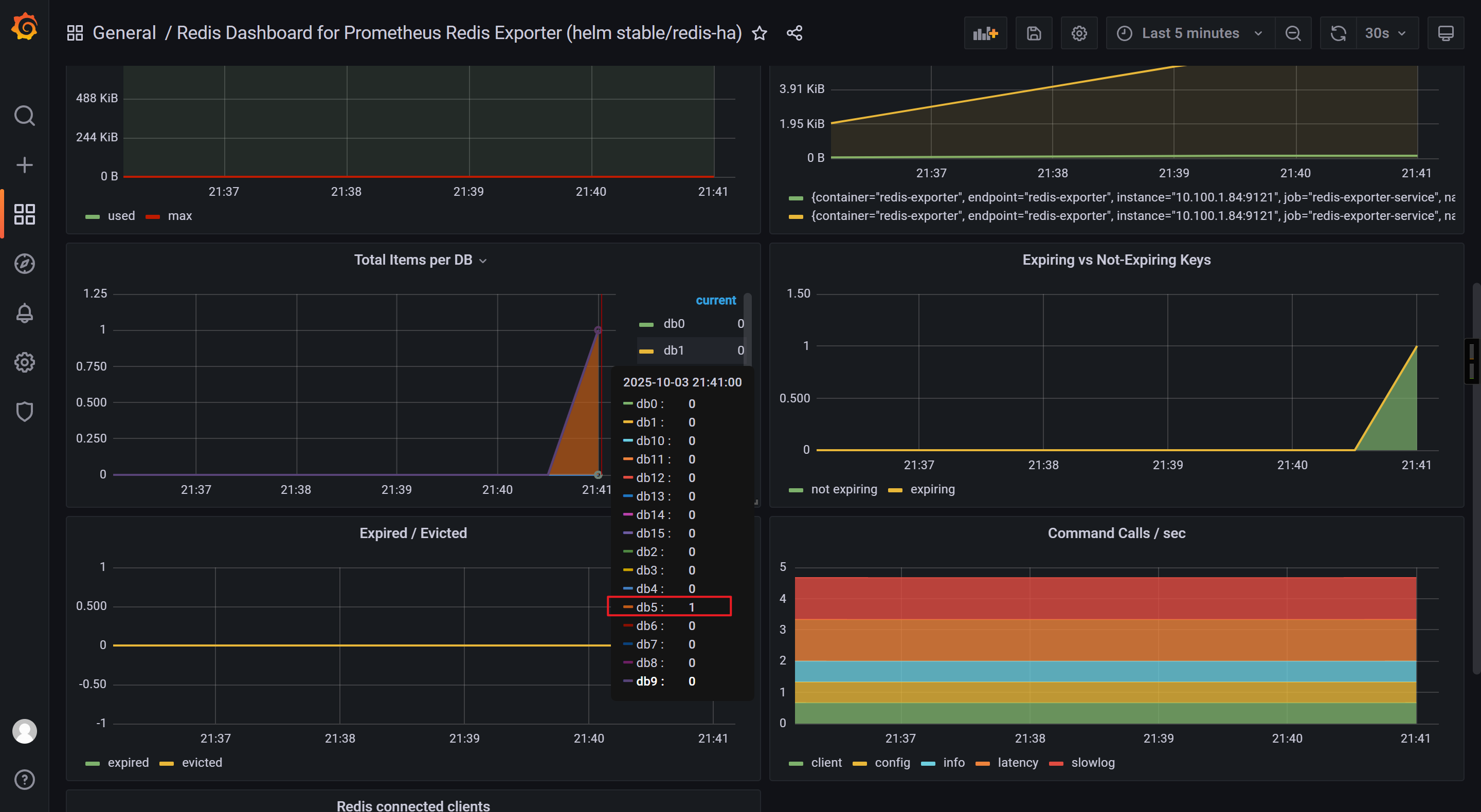

Verify the accuracy of the data

bash

[root@master231 servicemonitors]# kubectl exec -it deploy-redis-5db97d7958-pv8vx -- redis-cli -n 5 --raw

127.0.0.1:6379[5]> KEYS *

127.0.0.1:6379[5]> set zhu xixi

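OK

After the write, check Grafana again to confirm that the data is accurate.

🌟Using custom templates and configuration files with Alertmanager

Reference directory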

bash

[root@master231 kube-prometheus-0.11.0]# pwd

/zhu/manifests/add-ons/kube-prometheus-0.11.0

Reference file

bash

[root@master231 kube-prometheus-0.11.0]# ll manifests/alertmanager-secret.yaml

-rw-rw-r-- 1 root root 1443 Jun 17 2022 manifests/alertmanager-secret.yaml

Alertmanager referencing a ConfigMap (cm) resource

Partial excerpt for reference

bash

[root@master231 kube-prometheus-0.11.0]# cat manifests/alertmanager-alertmanager.yaml

apiVersion: monitoring.coreos.com/v1

kind: Alertmanager

metadata:

...

name: main

namespace: monitoring

spec:

volumes:

- name: data

configMap:

name: cm-alertmanager

items:

- key: zhu.tmpl

path: zhu.tmpl

volumeMounts:

- name: data

mountPath: /zhu/softwares/alertmanager/tmpl

image: quay.io/prometheus/alertmanager:v0.24.0

...

The Prometheus configuration file

bash

[root@master231 kube-prometheus-0.11.0]# ll manifests/prometheus-prometheus.yaml

-rw-rw-r-- 1 root root 1238 Jun 17 2022 manifests/prometheus-prometheus.yaml

🌟Monitoring a custom application with Prometheus

Write the resource manifest

yaml

[root@master231 servicemonitors]# cat 04-smon-golang-login.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-login

spec:

replicas: 1

selector:

matchLabels:

apps: login

template:

metadata:

labels:

apps: login

spec:

containers:

- name: c1

image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:login

resources:

requests:

memory: 100Mi

cpu: 100m

limits:

cpu: 200m

memory: 200Mi

ports:

- containerPort: 8080

name: login-api

---

apiVersion: v1

kind: Service

metadata:

name: svc-login

labels:

apps: login

spec:

ports:

- port: 8080

targetPort: login-api

name: login

selector:

apps: login

type: ClusterIP

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: smon-login

spec:

endpoints:

- interval: 3s

port: "login"

namespaceSelector:

matchNames:

- default

selector:

matchLabels:

apps: login

Test and verify

bash

[root@master231 servicemonitors]# kubectl apply -f 04-smon-golang-login.yaml

deployment.apps/deploy-login created

service/svc-login created

servicemonitor.monitoring.coreos.com/smon-login created

[root@master231 servicemonitors]#

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl get svc svc-login

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-login ClusterIP 10.200.42.196 <none> 8080/TCP 6m41s

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# curl 10.200.35.71:8080/login

https://www.zhubl.xyz/zhubaolin

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# for i in `seq 10`; do curl 10.200.35.71:8080/login;done

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

https://www.zhubl.xyz/zhubaolin

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# curl -s 10.200.35.71:8080/metrics | grep application_login_api

# HELP application_login_api Count the number of visits to the /login interface

# TYPE application_login_api counter

yinzhengjie_application_login_api 11

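[root@master231 servicemonitors]#

Visualize the data in Grafana

Relevant queries:

application_login_api

Total number of requests to the app.

increase(application_login_api[1m])

Number of requests per minute (the increase of the counter over a 1-minute window).

irate(application_login_api[1m])

Instantaneous per-second request rate (QPS), calculated from the last two samples in the 1-minute window.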
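The difference between `increase()` and `irate()` is easier to see on concrete numbers. The sketch below is a deliberately simplified model, not Prometheus' actual implementation: real `increase()` also extrapolates to the window boundaries and both functions handle counter resets, which is ignored here. The sample data and the helper names `simple_increase` and `simple_irate` are made up for illustration.

```shell
#!/bin/sh
# Counter samples inside a 1-minute window: "<unix_seconds> <counter_value>",
# oldest first. Made-up data, scraped every 15 seconds.
samples='0 100
15 103
30 109
45 112
60 118'

# Simplified increase(): last value minus first value in the window.
simple_increase() {
  awk 'NR == 1 { first = $2 } { last = $2 } END { print last - first }'
}

# Simplified irate(): per-second rate computed from the last two samples only.
simple_irate() {
  awk '{ pt = t; pv = v; t = $1; v = $2 } END { print (v - pv) / (t - pt) }'
}

printf '%s\n' "$samples" | simple_increase   # prints 18  (requests in the window)
printf '%s\n' "$samples" | simple_irate      # prints 0.4 (requests per second)
```

Because `irate()` looks only at the two most recent samples, it reacts quickly to spikes, whereas `increase()` summarizes the whole window.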

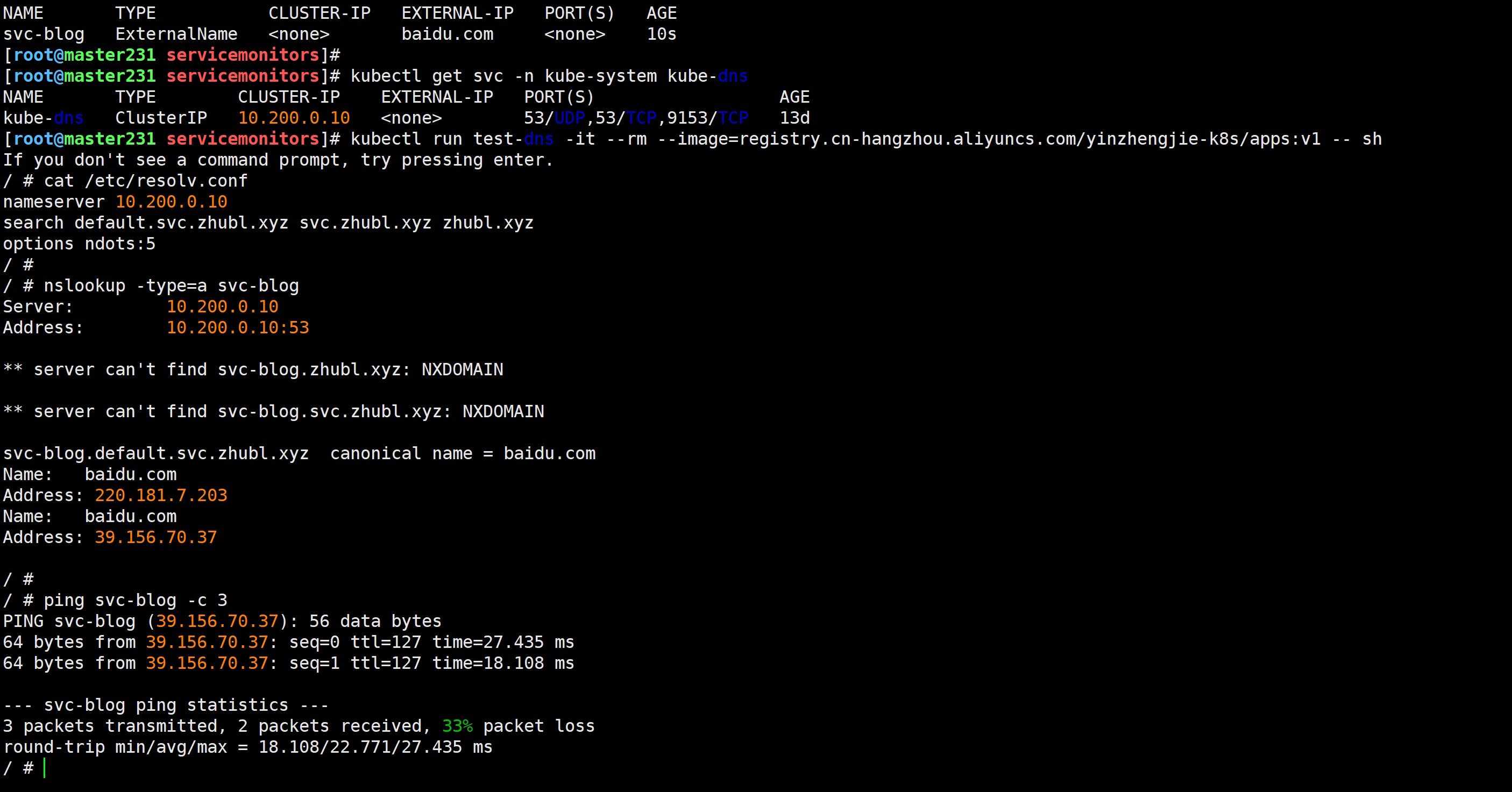

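🌟The ExternalName Service type

Introduction to ExternalName

The main purpose of ExternalName is to map a service outside the K8S cluster to a name inside the cluster.

An ExternalName Service has no CLUSTER-IP; the cluster DNS answers queries for it with a CNAME record pointing at the external name.

Create the svc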

bash

[root@master231 servicemonitors]# cat 05-svc-ExternalName.yaml

apiVersion: v1

kind: Service

metadata:

name: svc-blog

spec:

type: ExternalName

externalName: baidu.com

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl apply -f 05-svc-ExternalName.yaml

service/svc-blog created

[root@master231 servicemonitors]# kubectl get svc svc-blog

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-blog ExternalName <none> baidu.com <none> 10s

[root@master231 servicemonitors]#

Test and verify

bash

[root@master231 services]# kubectl get svc -n kube-system kube-dns

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.200.0.10 <none> 53/UDP,53/TCP,9153/TCP 14d

[root@master231 services]#

[root@master231 services]# kubectl run test-dns -it --rm --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 -- sh

If you don't see a command prompt, try pressing enter.

/ #

/ # cat /etc/resolv.conf

nameserver 10.200.0.10

/ # nslookup -type=a svc-blog

/ # ping svc-blog -c 3

Session ended, resume using 'kubectl attach test-dns -c test-dns -i -t' command when the pod is running

pod "test-dns" deleted

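Tip: if the service is outside the K8S cluster and is not on the public internet but inside the company network, we need to add or modify the corresponding A record in CoreDNS.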
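Inside a Pod, the short name `svc-blog` is expanded through the search domains in `/etc/resolv.conf`; the fully qualified form of any Service name follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. A minimal sketch of that naming rule, assuming the cluster domain is `zhubl.xyz` (suggested by the `mysql80-service.default.svc.zhubl.xyz` address used earlier; the Kubernetes default is `cluster.local`). The helper name `svc_fqdn` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build the fully qualified DNS name of a Service.
# Arguments: service name, namespace (default: default),
# cluster domain (default: cluster.local).
svc_fqdn() {
  service=$1
  namespace=${2:-default}
  cluster_domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$service" "$namespace" "$cluster_domain"
}

svc_fqdn svc-blog default zhubl.xyz   # prints svc-blog.default.svc.zhubl.xyz
svc_fqdn kube-dns kube-system         # prints kube-dns.kube-system.svc.cluster.local
```

Querying the fully qualified name with `nslookup` avoids any ambiguity introduced by the search list.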

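🌟Mapping MySQL with endpoints

What are endpoints

endpoints, abbreviated ep: for every svc type other than ExternalName, each svc is associated with an ep resource.

When a Service is deleted, the endpoints resource with the same name is deleted automatically.

To map a service outside the k8s cluster, define an ep resource first and then create a svc with the same name (and without a selector).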

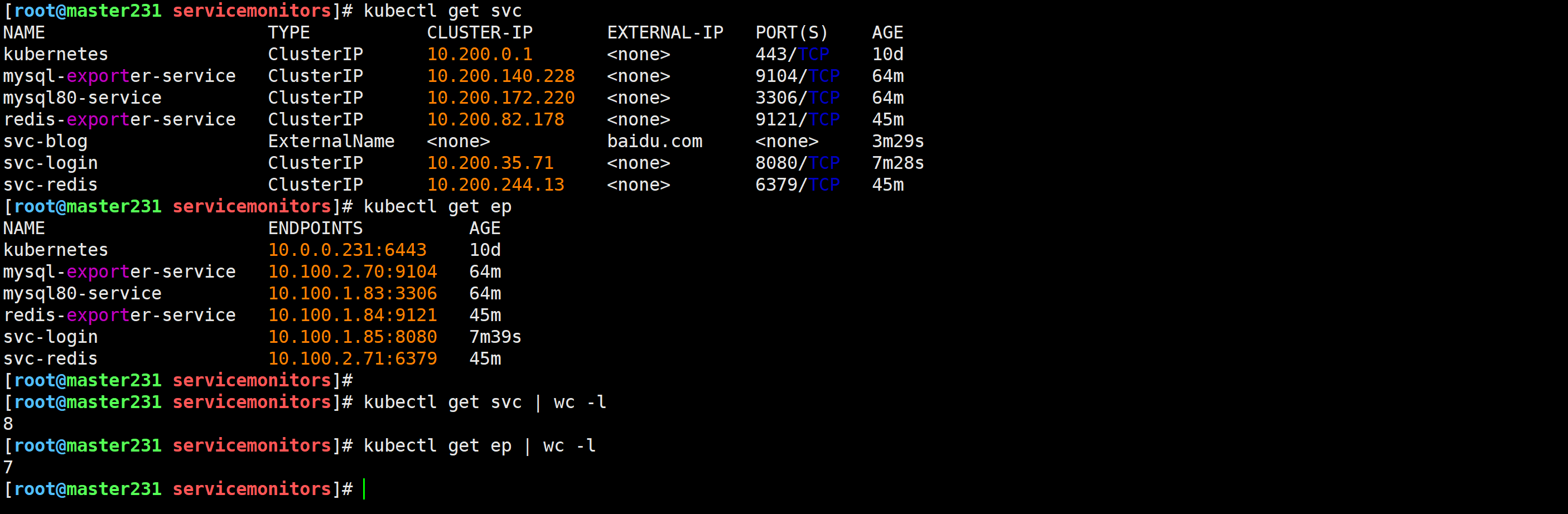

Verify that each svc is associated with its ep resource

View the association between svc and ep

bash

[root@master231 servicemonitors]# kubectl get svc

[root@master231 servicemonitors]#

[root@master231 servicemonitors]# kubectl get ep

[root@master231 servicemonitors]# kubectl get svc | wc -l

8

[root@master231 servicemonitors]# kubectl get ep | wc -l

7

[root@master231 servicemonitors]#

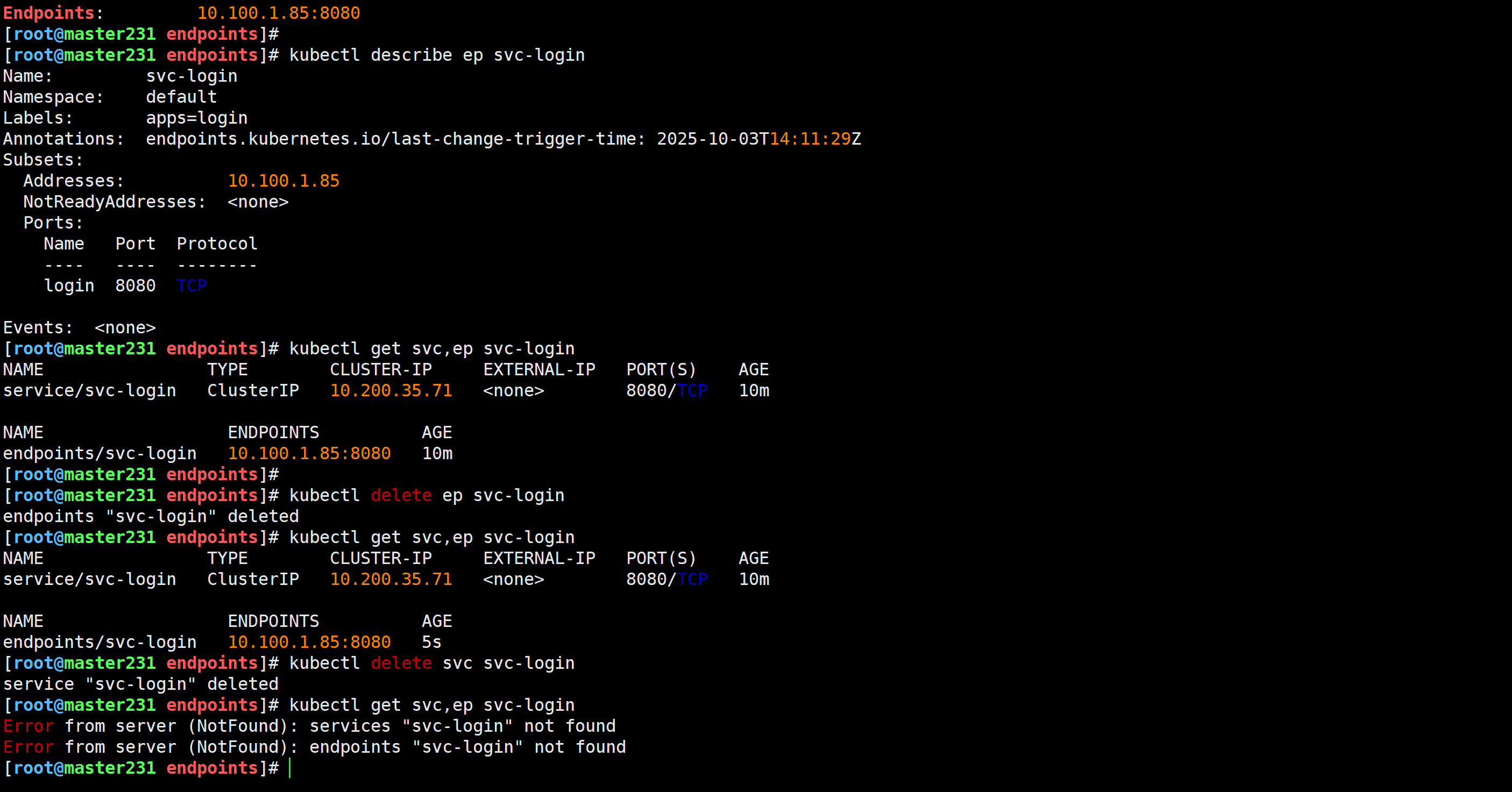
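The one-line difference between the two counts above is consistent with the ExternalName Service `svc-blog`, which has no endpoints object. A sketch for listing Services that have no same-named endpoints follows; the name lists are made-up sample data, and `services_without_endpoints` is a hypothetical helper. On a real cluster the two lists could be produced with `kubectl get svc` and `kubectl get ep` using `-o custom-columns=NAME:.metadata.name --no-headers`.

```shell
#!/bin/sh
# services_without_endpoints SVC_NAMES EP_NAMES
# Both arguments are newline-separated name lists; prints every Service
# name that has no endpoints object with the same name.
services_without_endpoints() {
  svc_names=$1
  ep_names=$2
  printf '%s\n' "$svc_names" | while IFS= read -r svc; do
    # -F fixed string, -x whole-line match, -q quiet
    if ! printf '%s\n' "$ep_names" | grep -Fxq -- "$svc"; then
      printf '%s\n' "$svc"
    fi
  done
}

# Made-up sample data resembling the default namespace in this walkthrough.
svc_names='kubernetes
svc-blog
svc-login'
ep_names='kubernetes
svc-login'

services_without_endpoints "$svc_names" "$ep_names"   # prints svc-blog
```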

Deleting a svc automatically deletes the ep resource with the same name

bash

[root@master231 endpoints]# kubectl describe svc svc-login | grep Endpoints

Endpoints: 10.100.2.156:8080

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl describe ep svc-login

Name: svc-login

Namespace: default

Labels: apps=login

Annotations: endpoints.kubernetes.io/last-change-trigger-time: 2025-10-03T04:21:37Z

Subsets:

Addresses: 10.100.2.156

NotReadyAddresses: <none>

Ports:

Name Port Protocol

---- ---- --------

login 8080 TCP

Events: <none>

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl get svc,ep svc-login

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/svc-login ClusterIP 10.200.205.119 <none> 8080/TCP 153m

NAME ENDPOINTS AGE

endpoints/svc-login 10.100.2.156:8080 153m

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl delete ep svc-login

endpoints "svc-login" deleted

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl get svc,ep svc-login

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/svc-login ClusterIP 10.200.205.119 <none> 8080/TCP 153m

NAME ENDPOINTS AGE

endpoints/svc-login 10.100.2.156:8080 5s

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl delete svc svc-login

service "svc-login" deleted

[root@master231 endpoints]#

[root@master231 endpoints]# kubectl get svc,ep svc-login

Error from server (NotFound): services "svc-login" not found

Error from server (NotFound): endpoints "svc-login" not found

[root@master231 endpoints]#

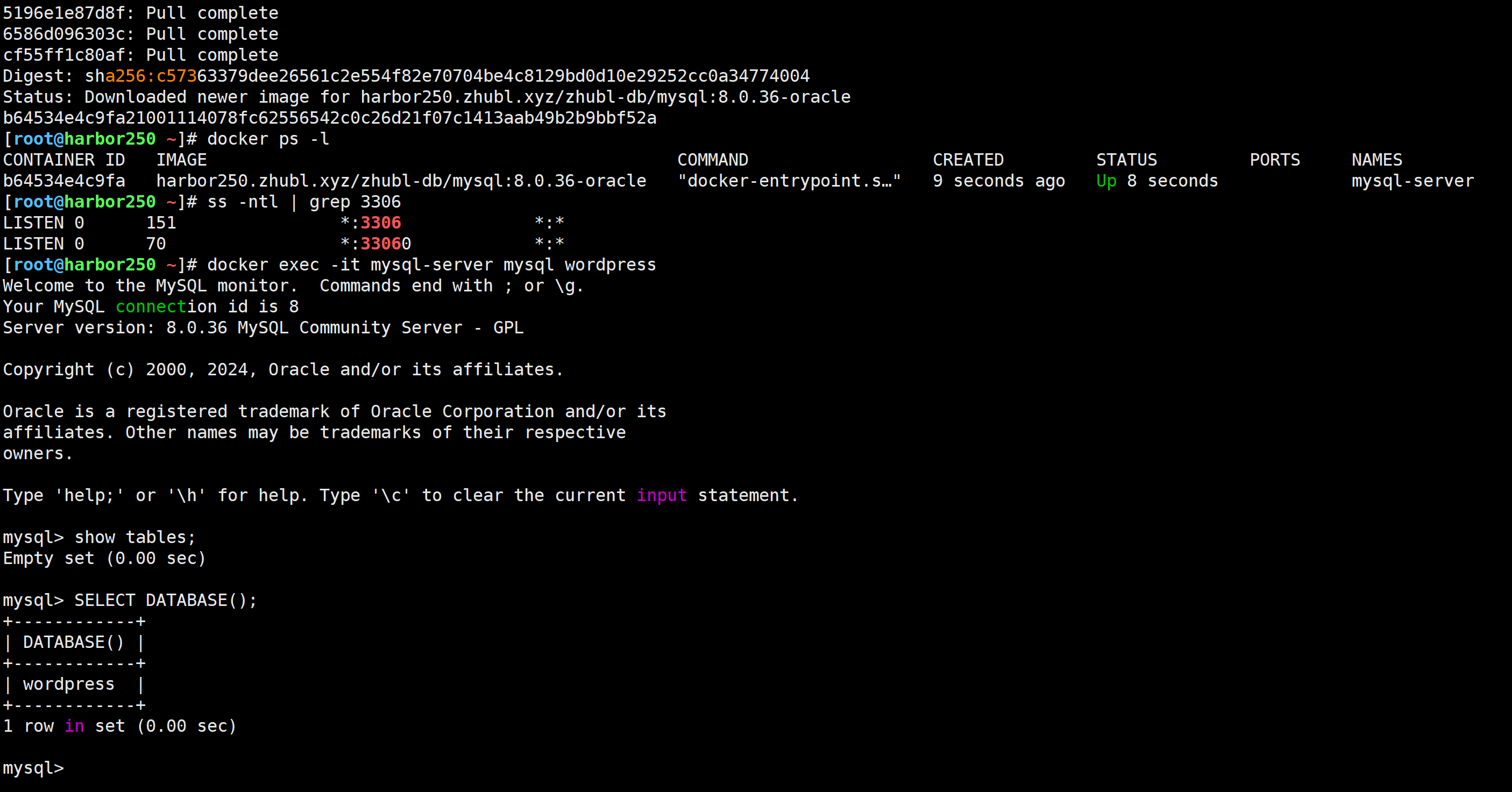

Hands-on with endpoints

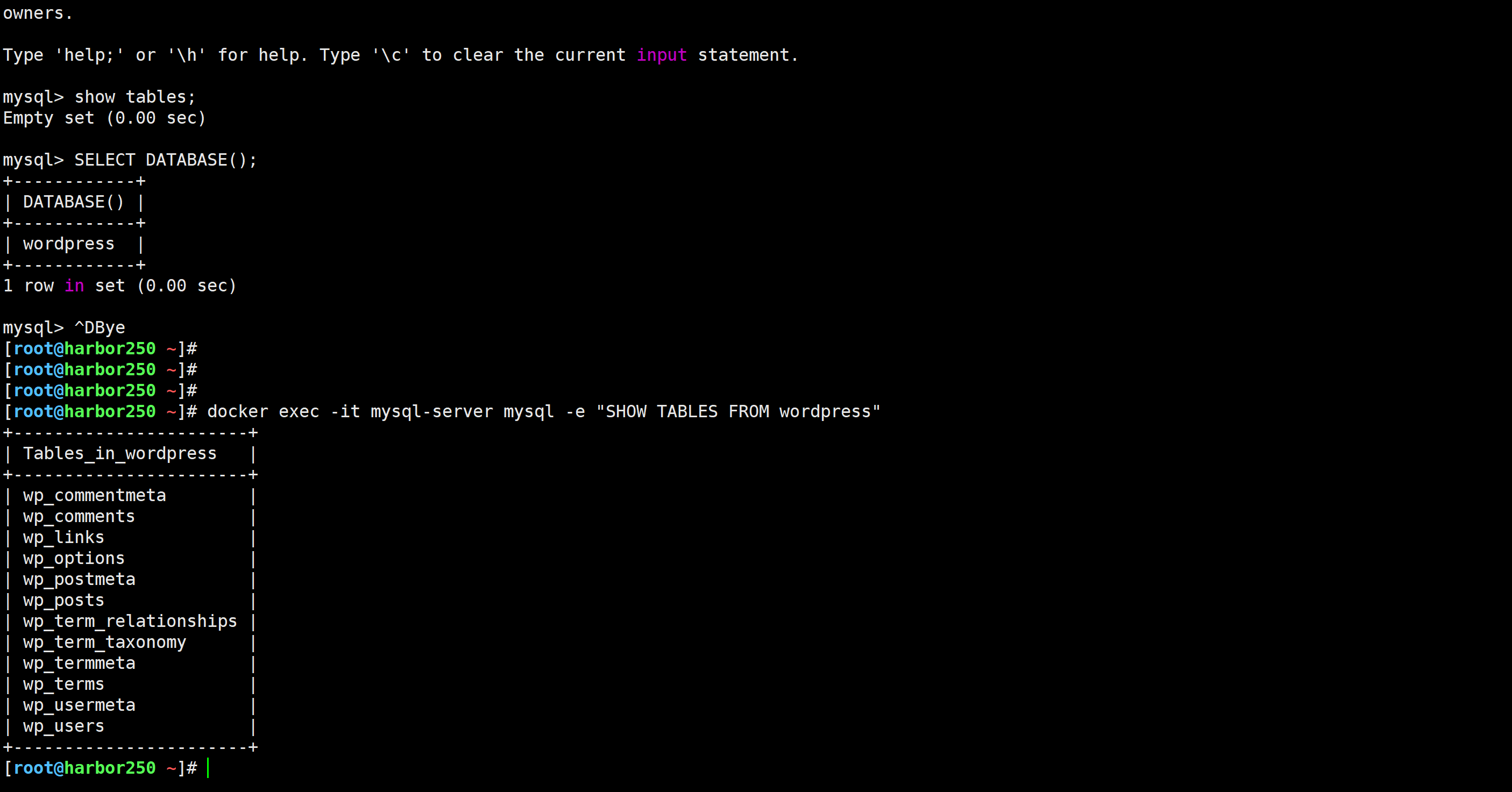

Deploy a MySQL database outside the K8S cluster

bash

[root@harbor250 ~]# docker run --network host -d --name mysql-server -e MYSQL_ALLOW_EMPTY_PASSWORD="yes" -e MYSQL_DATABASE=wordpress -e MYSQL_USER=wordpress -e MYSQL_PASSWORD=wordpress harbor250.zhubl.xyz/zhubl-db/mysql:8.0.36-oracle

[root@harbor250 ~]# docker exec -it mysql-server mysql wordpress

mysql> SHOW TABLES;

Empty set (0.00 sec)

mysql> SELECT DATABASE();

+------------+

| DATABASE() |

+------------+

| wordpress |

+------------+

1 row in set (0.00 sec)

mysql>

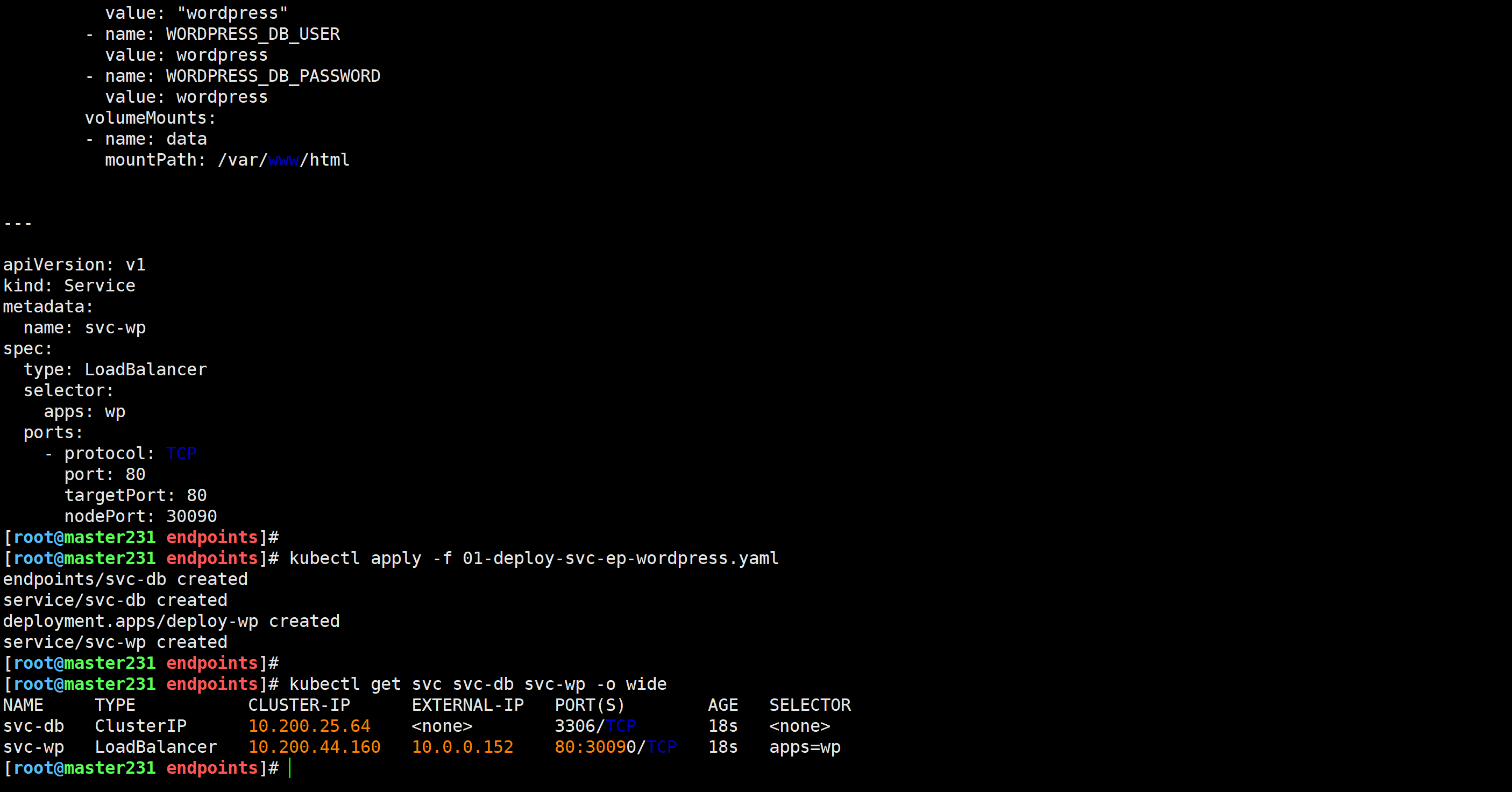

Deploy wordpress inside the k8s cluster

yaml

[root@master231 endpoints]# cat 01-deploy-svc-ep-wordpress.yaml

apiVersion: v1

kind: Endpoints

metadata:

name: svc-db

subsets:

- addresses:

- ip: 10.0.0.250

ports:

- port: 3306

---

apiVersion: v1

kind: Service

metadata:

name: svc-db

spec:

type: ClusterIP

ports:

- protocol: TCP

port: 3306

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-wp

spec:

replicas: 1

selector:

matchLabels:

apps: wp

template:

metadata:

labels:

apps: wp

spec:

volumes:

- name: data

nfs:

server: 10.0.0.231

path: /zhu/data/nfs-server/casedemo/wordpress/wp

containers:

- name: wp

image: harbor250.zhubl.xyz/zhubl-wordpress/wordpress:6.7.1-php8.1-apache

env:

- name: WORDPRESS_DB_HOST

value: "svc-db"

- name: WORDPRESS_DB_NAME

value: "wordpress"

- name: WORDPRESS_DB_USER

value: wordpress

- name: WORDPRESS_DB_PASSWORD

value: wordpress

volumeMounts:

- name: data

mountPath: /var/www/html

---

apiVersion: v1

kind: Service

metadata:

name: svc-wp

spec:

type: LoadBalancer

selector:

apps: wp

ports:

- protocol: TCP

port: 80

targetPort: 80

nodePort: 30090

Access test

bash

http://10.0.0.231:30090/

http://10.0.0.152/

Initialize wordpress

Verify that the database now contains data

bash

[root@harbor250 ~]# docker exec -it mysql-server mysql -e "SHOW TABLES FROM wordpress"

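🌟Analyzing k8s cluster logs with the EFK stack

k8s log collection approaches

Sidecar mode:

A new container, called a sidecar container, is added alongside the existing container. It can take care of log collection, monitoring, traffic proxying, and similar tasks.

Advantage: new functionality can be added without changing the existing architecture.

Drawbacks:

- 1. Relatively resource-hungry;

- 2. Obtaining Pod metadata from the K8S cluster is relatively cumbersome and requires developing extra functionality;

DaemonSet (ds)

Each worker node runs exactly one collector pod.

Advantage: uses fewer resources than the sidecar mode.

Drawback: requires learning the K8S RBAC authorization system.

Built into the product

Put simply, whatever functionality is missing is implemented directly by the developers.

Advantage: less work for operations staff, with no components to install.

Drawback: slow to push forward, because most developers work on business features; otherwise dedicated ops-development engineers are needed.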

🌟Connecting ElasticStack to the K8S cluster

Prepare the ES cluster environment

bash

[root@elk91 ~]# curl -k -u elastic:123456 https://10.0.0.91:9200/_cat/nodes

10.0.0.92 81 50 1 0.19 0.14 0.12 cdfhilmrstw - elk92

10.0.0.91 83 66 4 0.04 0.13 0.17 cdfhilmrstw - elk91

10.0.0.93 78 53 1 0.34 0.18 0.15 cdfhilmrstw * elk93

[root@elk91 ~]#

Verify the kibana environment

bash

http://10.0.0.91:5601/

Start the zookeeper cluster

bash

[root@elk91 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@elk91 ~]#

[root@elk91 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

[root@elk91 ~]#

[root@elk92 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@elk92 ~]#

[root@elk92 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@elk92 ~]#

[root@elk93 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@elk93 ~]#

[root@elk93 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.8.4-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@elk93 ~]#

Start the kafka cluster

bash

[root@elk91 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

[root@elk91 ~]#

[root@elk91 ~]# ss -ntl |grep 9092

LISTEN 0 50 [::ffff:10.0.0.91]:9092 *:*

[root@elk91 ~]#

[root@elk92 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

[root@elk92 ~]#

[root@elk92 ~]# ss -ntl | grep 9092

LISTEN 0 50 [::ffff:10.0.0.92]:9092 *:*

[root@elk92 ~]#

[root@elk93 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

[root@elk93 ~]#

[root@elk93 ~]# ss -ntl | grep 9092

LISTEN 0 50 [::ffff:10.0.0.93]:9092 *:*

[root@elk93 ~]#

Check the broker information in zookeeper

bash

[root@elk93 ~]# zkCli.sh -server 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181

Connecting to 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181

...

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181(CONNECTED) 0] ls /kafka391/brokers/ids

[91, 92, 93]

[zk: 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181(CONNECTED) 1]

Write a resource manifest that ships Pod log data to the kafka cluster

yaml

[root@master231 elasticstack]# cat 01-sidecar-cm-ep-filebeat.yaml

apiVersion: v1

kind: Endpoints

metadata:

name: svc-kafka

subsets:

- addresses:

- ip: 10.0.0.91

- ip: 10.0.0.92

- ip: 10.0.0.93

ports:

- port: 9092

---

apiVersion: v1

kind: Service

metadata:

name: svc-kafka

spec:

type: ClusterIP

ports:

- protocol: TCP

port: 9092

---

apiVersion: v1

kind: ConfigMap

metadata:

name: cm-filebeat

data:

main: |

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: true

output.kafka:

hosts:

- svc-kafka:9092

topic: "linux99-k8s-external-kafka"

nginx.yml: |

- module: nginx

access:

enabled: true

var.paths: ["/data/access.log"]

error:

enabled: false

var.paths: ["/data/error.log"]

ingress_controller:

enabled: false

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-xiuxian

spec:

replicas: 3

selector:

matchLabels:

apps: elasticstack

version: v3

template:

metadata:

labels:

apps: elasticstack

version: v3

spec:

volumes:

- name: dt

hostPath:

path: /etc/localtime

- name: data

emptyDir: {}

- name: main

configMap:

name: cm-filebeat

items:

- key: main

path: modules-to-es.yaml

- key: nginx.yml

path: nginx.yml

containers:

- name: c1

image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1

volumeMounts:

- name: data

mountPath: /var/log/nginx

- name: dt

mountPath: /etc/localtime

- name: c2

image: harbor250.zhubl.xyz/elasticstack/filebeat:7.17.25

volumeMounts:

- name: dt

mountPath: /etc/localtime

- name: data

mountPath: /data

- name: main

mountPath: /config/modules-to-es.yaml

subPath: modules-to-es.yaml

- name: main

mountPath: /usr/share/filebeat/modules.d/nginx.yml

subPath: nginx.yml

command:

- /bin/bash

- -c

- "filebeat -e -c /config/modules-to-es.yaml --path.data /tmp/xixi"

[root@master231 elasticstack]#

[root@master231 elasticstack]# kubectl apply -f 01-sidecar-cm-ep-filebeat.yaml

endpoints/svc-kafka created

service/svc-kafka created

configmap/cm-filebeat created

deployment.apps/deploy-xiuxian created

[root@master231 elasticstack]#

[root@master231 elasticstack]# kubectl get pods -o wide -l version=v3

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-xiuxian-59c585c878-hd9lm 2/2 Running 0 8s 10.100.2.163 worker233 <none> <none>

deploy-xiuxian-59c585c878-pdnc6 2/2 Running 0 8s 10.100.2.162 worker233 <none> <none>

deploy-xiuxian-59c585c878-wp9r7 2/2 Running 0 8s 10.100.1.19 worker232 <none> <none>

[root@master231 elasticstack]#

Access the application Pods to generate logs

bash

[root@master231 services]# curl 10.100.2.163

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 services]#

[root@master231 services]# curl 10.100.2.163/zhubaolin.html

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master231 services]#

Verify the data

bash

[root@elk92 ~]# kafka-topics.sh --bootstrap-server 10.0.0.93:9092 --list | grep kafka

linux99-k8s-external-kafka

[root@elk92 ~]#

[root@elk92 ~]# kafka-console-consumer.sh --bootstrap-server 10.0.0.93:9092 --topic linux99-k8s-external-kafka --from-beginning

{"@timestamp":"2025-10-03T08:31:46.489Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.17.25","pipeline":"filebeat-7.17.25-nginx-access-pipeline"},"event":{"dataset":"nginx.access","module":"nginx","timezone":"+00:00"},"agent":{"hostname":"deploy-xiuxian-59c585c878-hd9lm","ephemeral_id":"427bd79b-a24f-4eaf-bea4-e9b3630cba8e","id":"cfe013d3-a60a-4dd6-a766-5b5a4f13711c","name":"deploy-xiuxian-59c585c878-hd9lm","type":"filebeat","version":"7.17.25"},"fileset":{"name":"access"},"ecs":{"version":"1.12.0"},"host":{"name":"deploy-xiuxian-59c585c878-hd9lm"},"message":"10.100.0.0 - - [03/Oct/2025:08:31:37 +0000] \"GET / HTTP/1.1\" 200 357 \"-\" \"curl/7.81.0\" \"-\"","log":{"offset":0,"file":{"path":"/data/access.log"}},"service":{"type":"nginx"},"input":{"type":"log"}}

{"@timestamp":"2025-10-03T08:33:41.495Z","@metadata":{"beat":"filebeat","type":"_doc","version":"7.17.25","pipeline":"filebeat-7.17.25-nginx-access-pipeline"},"host":{"name":"deploy-xiuxian-59c585c878-hd9lm"},"agent":{"name":"deploy-xiuxian-59c585c878-hd9lm","type":"filebeat","version":"7.17.25","hostname":"deploy-xiuxian-59c585c878-hd9lm","ephemeral_id":"427bd79b-a24f-4eaf-bea4-e9b3630cba8e","id":"cfe013d3-a60a-4dd6-a766-5b5a4f13711c"},"log":{"file":{"path":"/data/access.log"},"offset":91},"message":"10.100.0.0 - - [03/Oct/2025:08:33:38 +0000] \"GET /zhubl.html HTTP/1.1\" 404 153 \"-\" \"curl/7.81.0\" \"-\"","service":{"type":"nginx"},"ecs":{"version":"1.12.0"},"input":{"type":"log"},"event":{"dataset":"nginx.access","module":"nginx","timezone":"+00:00"},"fileset":{"name":"access"}}

Logstash consumes and parses the data, then writes it to the ES cluster

bash

[root@elk93 ~]# cat /etc/logstash/conf.d/11-kafka_k8s-to-es.conf

input {

kafka {

bootstrap_servers => "10.0.0.91:9092,10.0.0.92:9092,10.0.0.93:9092"

group_id => "k8s-006"

topics => ["linux99-k8s-external-kafka"]

auto_offset_reset => "earliest"

}

}

filter {

json {

source => "message"

}

mutate {

remove_field => [ "tags","input","agent","@version","ecs" , "log", "host"]

}

grok {

match => {

"message" => "%{HTTPD_COMMONLOG}"

}

}

geoip {

source => "clientip"

database => "/root/GeoLite2-City_20250311/GeoLite2-City.mmdb"

default_database_type => "City"

}

date {

match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]

}

useragent {

source => "message"

target => "useragent"

}

}

output {

# stdout {

# codec => rubydebug

# }

elasticsearch {

hosts => ["https://10.0.0.91:9200","https://10.0.0.92:9200","https://10.0.0.93:9200"]

index => "logstash-kafka-k8s-%{+YYYY.MM.dd}"

api_key => "a-g-qZkB4BpGEtwMU0Mu:Wy9ivXwfQgKbUSPLY-YUhg"

ssl => true

ssl_certificate_verification => false

}

}

[root@elk93 ~]# logstash -rf /etc/logstash/conf.d/11-kafka_k8s-to-es.conf

Visualize the data in kibana

🌟Collecting k8s Pod logs in ds (DaemonSet) mode

Remove the environment from the previous step

bash

[root@master231 elasticstack]# kubectl delete -f 01-sidecar-cm-ep-filebeat.yaml

endpoints "svc-kafka" deleted

service "svc-kafka" deleted

configmap "cm-filebeat" deleted

deployment.apps "deploy-xiuxian" deleted

Write the resource manifest

yaml

[root@master231 elasticstack]# cat 02-ds-cm-ep-filebeat.yaml

apiVersion: v1

kind: Endpoints

metadata:

name: svc-kafka

subsets:

- addresses:

- ip: 10.0.0.91

- ip: 10.0.0.92

- ip: 10.0.0.93

ports:

- port: 9092

---

apiVersion: v1

kind: Service

metadata:

name: svc-kafka

spec:

type: ClusterIP

ports:

- protocol: TCP

port: 9092

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-xiuxian

spec:

replicas: 3

selector:

matchLabels:

apps: elasticstack-xiuxian

template:

metadata:

labels:

apps: elasticstack-xiuxian

spec:

volumes:

- name: dt

hostPath:

path: /etc/localtime

containers:

- name: c1

image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1

volumeMounts:

- name: dt

mountPath: /etc/localtime

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: filebeat

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: filebeat

subjects:

- kind: ServiceAccount

name: filebeat

namespace: default

roleRef:

kind: ClusterRole

name: filebeat

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: filebeat

labels:

k8s-app: filebeat

rules:

- apiGroups: [""]

resources:

- namespaces

- pods

- nodes

verbs:

- get

- watch

- list

---

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-config

data:

filebeat.yml: |-

filebeat.config:

inputs:

path: ${path.config}/inputs.d/*.yml

reload.enabled: true

modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: true

output.kafka:

hosts:

- svc-kafka:9092

topic: "linux99-k8s-external-kafka-ds"

---

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-inputs

data:

kubernetes.yml: |-

- type: docker

containers.ids:

- "*"

processors:

- add_kubernetes_metadata:

in_cluster: true

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: filebeat

spec:

selector:

matchLabels:

k8s-app: filebeat

template:

metadata:

labels:

k8s-app: filebeat

spec:

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

operator: Exists

serviceAccountName: filebeat

terminationGracePeriodSeconds: 30

containers:

- name: filebeat

image: harbor250.zhubl.xyz/elasticstack/filebeat:7.17.25

args: [

"-c", "/etc/filebeat.yml",

"-e",

]

securityContext:

runAsUser: 0

resources:

limits:

memory: 200Mi

requests:

cpu: 100m

memory: 100Mi

volumeMounts:

- name: config

mountPath: /etc/filebeat.yml

readOnly: true

subPath: filebeat.yml

- name: inputs

mountPath: /usr/share/filebeat/inputs.d

readOnly: true

- name: varlibdockercontainers

mountPath: /var/lib/docker/containers

readOnly: true

volumes:

- name: config

configMap:

defaultMode: 0600

name: filebeat-config

- name: varlibdockercontainers

hostPath:

path: /var/lib/docker/containers

- name: inputs

configMap:

defaultMode: 0600

name: filebeat-inputs

[root@master231 elasticstack]#

Create the resources

bash

[root@master231 elasticstack]# kubectl apply -f 02-ds-cm-ep-filebeat.yaml

[root@master231 elasticstack]# kubectl get pods -o wide -l k8s-app=filebeat

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

filebeat-8dnxs 1/1 Running 0 56s 10.100.0.4 master231 <none> <none>

filebeat-mdcd2 1/1 Running 0 56s 10.100.2.166 worker233 <none> <none>

filebeat-z4jdr 1/1 Running 0 56s 10.100.1.21 worker232 <none> <none>

[root@master231 elasticstack]#

[root@master231 elasticstack]#

[root@master231 elasticstack]# kubectl get pods -o wide -l apps=elasticstack-xiuxian

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-xiuxian-74d9748b99-2rp8v 1/1 Running 0 83s 10.100.1.20 worker232 <none> <none>

deploy-xiuxian-74d9748b99-mdpnf 1/1 Running 0 83s 10.100.2.165 worker233 <none> <none>

deploy-xiuxian-74d9748b99-psnk7 1/1 Running 0 83s 10.100.2.164 worker233 <none> <none>

[root@master231 elasticstack]#

[root@master231 elasticstack]#

Verify in kafka

bash

[root@elk92 ~]# kafka-topics.sh --bootstrap-server 10.0.0.93:9092 --list | grep ds

linux99-k8s-external-kafka-ds

[root@elk92 ~]#

[root@elk92 ~]# kafka-console-consumer.sh --bootstrap-server 10.0.0.93:9092 --topic linux99-k8s-external-kafka-ds --from-beginning

Logstash writes the data to the ES cluster

bash

[root@elk93 ~]# cat /etc/logstash/conf.d/12-kafka_k8s_ds-to-es.conf

input {

kafka {

bootstrap_servers => "10.0.0.91:9092,10.0.0.92:9092,10.0.0.93:9092"

group_id => "k8s-001"

topics => ["linux99-k8s-external-kafka-ds"]

auto_offset_reset => "earliest"

}

}

filter {

json {

source => "message"

}

mutate {

remove_field => [ "tags","input","agent","@version","ecs" , "log", "host"]

}

grok {

match => {

"message" => "%{HTTPD_COMMONLOG}"

}

}

geoip {

source => "clientip"

database => "/root/GeoLite2-City_20250311/GeoLite2-City.mmdb"

default_database_type => "City"

}

date {

match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]

}

useragent {

source => "message"

target => "linux99-useragent"

}

}

output {

# stdout {

# codec => rubydebug

# }

elasticsearch {

hosts => ["https://10.0.0.91:9200","https://10.0.0.92:9200","https://10.0.0.93:9200"]

index => "logstash-kafka-k8s-ds-%{+YYYY.MM.dd}"

api_key => "a-g-qZkB4BpGEtwMU0Mu:Wy9ivXwfQgKbUSPLY-YUhg"

ssl => true

ssl_certificate_verification => false

}

}

[root@elk93 ~]#

[root@elk93 ~]# logstash -rf /etc/logstash/conf.d/12-kafka_k8s_ds-to-es.conf

Query the data in kibana and verify

Access test

bash

[root@master231 elasticstack]# curl 10.100.1.20

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 elasticstack]#

[root@master231 elasticstack]# curl 10.100.1.20/zhu.html

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master231 elasticstack]#

Query in kibana with KQL

bash

kubernetes.pod.name : "deploy-xiuxian-74d9748b99-2rp8v"