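The visual interface for medical-AI drug information processing integrates data processing, intelligent retrieval, and classification in a single tool, closing the loop on the drug information workflow. Its novelty lies in removing the fragmentation of traditional tools, where each function lives in a separate program. This markedly lowers the technical barrier for medical staff who want to use AI and improves both the efficiency and the accuracy of drug information processing.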

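In terms of applications, the interface can be extended to component analysis of traditional Chinese medicine compound formulas, construction of ethnic-medicine databases, and real-time monitoring of adverse drug reactions, with a modular design that adapts to each scenario. On ethics and security, a distributed deployment architecture based on federated learning should be established, so that multi-center collaborative analysis of drug information can proceed while patient privacy data stay protected, offering a technical paradigm for the compliant use of medical AI systems. Through technical innovation, this study addresses the need for efficient and easy-to-use drug information processing in clinical settings; future work should advance technical depth, application breadth, and ethical norms together.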
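The federated-learning architecture mentioned above is proposed as future work and is not part of the app in this paper. As a rough illustration of the idea only, the following sketch shows federated averaging (FedAvg) with NumPy: each site trains on its own data and shares only model weights, which a coordinator averages in proportion to local sample counts. The function names, the logistic-regression model, and the synthetic site data are all assumptions made for this example.

```python
import numpy as np


def local_update(weights, X, y, lr=0.1, epochs=5):
    """Run a few gradient-descent epochs of logistic regression on one site's data."""
    w = weights.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))   # predicted probabilities
        grad = X.T @ (p - y) / len(y)        # mean log-loss gradient
        w -= lr * grad
    return w


def federated_average(site_weights, site_sizes):
    """Average site models, weighting each by its local sample count."""
    sizes = np.asarray(site_sizes, dtype=float)
    stacked = np.stack(site_weights)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()


def run_rounds(sites, dim, rounds=20):
    """Coordinator loop: broadcast weights, collect local updates, average them."""
    w = np.zeros(dim)
    for _ in range(rounds):
        updates = [local_update(w, X, y) for X, y in sites]
        w = federated_average(updates, [len(y) for _, y in sites])
    return w


# Synthetic demo: three hypothetical sites whose raw rows never leave the site.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0, 0.5])
sites = []
for n in (200, 120, 80):
    X = rng.normal(size=(n, 3))
    y = (X @ true_w + rng.normal(scale=0.1, size=n) > 0).astype(float)
    sites.append((X, y))

global_w = run_rounds(sites, dim=3)
print("global weights:", np.round(global_w, 3))
```

Only the weight vectors cross site boundaries here. A real multi-center deployment would likely also need secure aggregation, access control, and an audit trail, none of which this sketch attempts.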

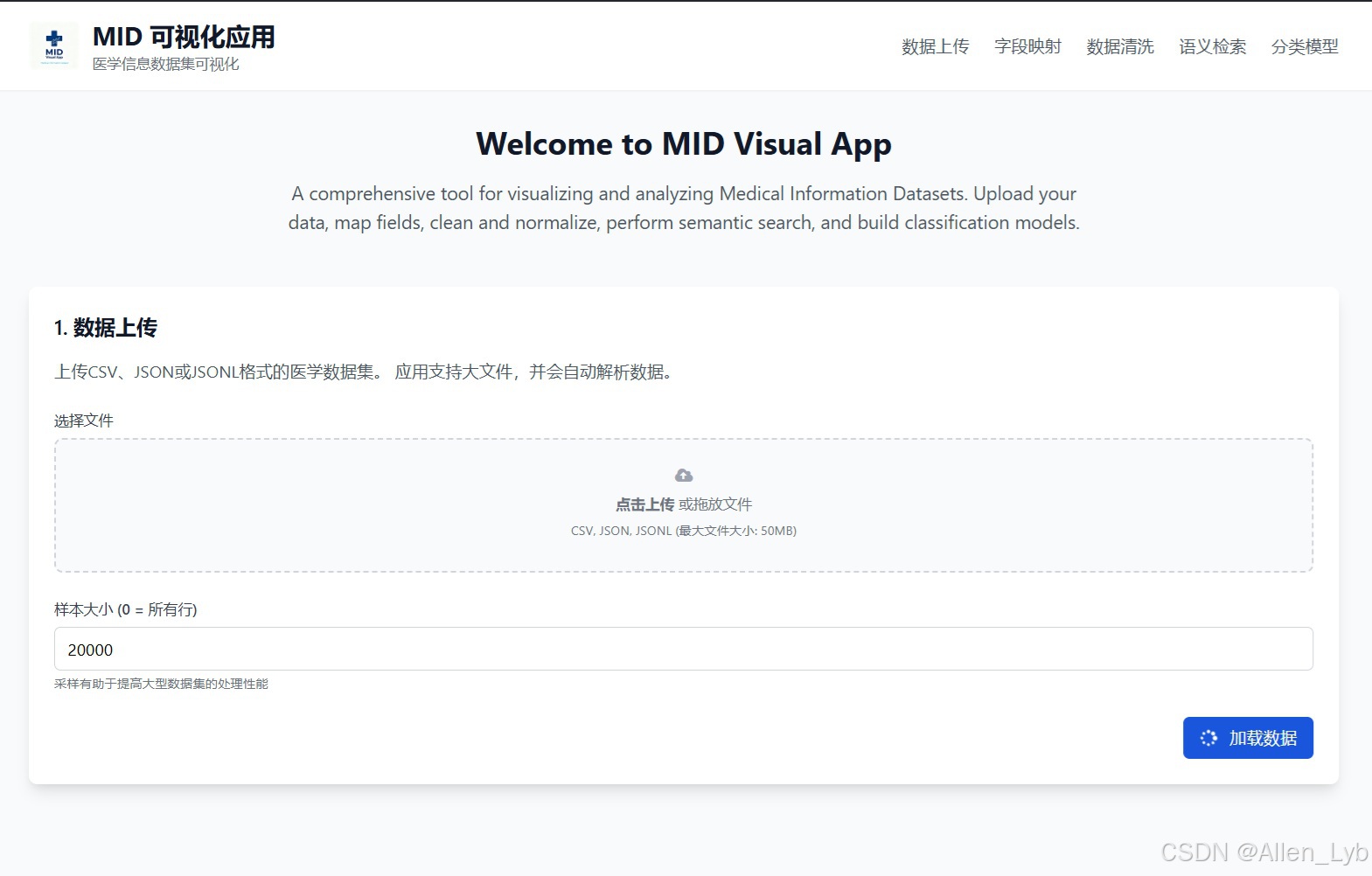

12) Graphical interface

python

# MID Visual App (Streamlit)
# -------------------------------------------------------------
# Usage:
# 1) Install the dependencies from a terminal:
#    pip install streamlit pandas pyarrow polars numpy scikit-learn imbalanced-learn rapidfuzz sentence-transformers faiss-cpu matplotlib python-dotenv
# 2) Run:
#    streamlit run mid_streamlit_app.py
# -------------------------------------------------------------

import os
import io
import re
import json
from typing import List, Tuple, Optional

import numpy as np
import pandas as pd
import streamlit as st
from dotenv import load_dotenv

# Optional dependencies (features degrade gracefully if they are missing)
try:
    from sentence_transformers import SentenceTransformer
    import faiss  # type: ignore
    _HAS_EMB = True
except Exception:
    _HAS_EMB = False

try:
    from imblearn.over_sampling import RandomOverSampler
    _HAS_IMB = True
except Exception:
    _HAS_IMB = False

from rapidfuzz import process, fuzz
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MultiLabelBinarizer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier
from sklearn.pipeline import Pipeline
from sklearn.metrics import f1_score, classification_report, average_precision_score
import matplotlib.pyplot as plt

# ---------------------- Configuration and caching ---------------------------
load_dotenv()
st.set_page_config(page_title="MID Visual App", layout="wide")

@st.cache_data(show_spinner=False)
def _read_table(upload: bytes, name: str) -> pd.DataFrame:
    """Parse the uploaded bytes into a DataFrame based on the file extension."""
    name = name.lower()
    bio = io.BytesIO(upload)
    if name.endswith((".parquet", ".pq")):
        return pd.read_parquet(bio)
    if name.endswith(".csv"):
        return pd.read_csv(bio)
    if name.endswith(".jsonl"):
        return pd.read_json(bio, lines=True)
    if name.endswith(".json"):
        return pd.read_json(bio)
    # Fallback: try CSV
    return pd.read_csv(io.BytesIO(upload))

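# ---------------------- Text utilities -----------------------------
def clean_text(s: Optional[str]) -> str:
    """Collapse whitespace; map None/NaN to an empty string."""
    if s is None or (isinstance(s, float) and np.isnan(s)):
        return ""
    s = str(s).replace("\u00A0", " ").strip()
    s = re.sub(r"\s+", " ", s)
    return s

def normalize_multilabel(x) -> List[str]:
    """Split a delimited string (; , | /) into sorted, unique, lowercase labels."""
    if x is None or (isinstance(x, float) and np.isnan(x)):
        return []
    parts = re.split(r"[;,|/]+", str(x))
    return sorted({p.strip().lower() for p in parts if p and p.strip()})

@st.cache_data(show_spinner=False)
def fuzz_align(values: List[str], score_cutoff: int = 92) -> Tuple[dict, dict]:
    """Return {raw -> std} and {std -> count} mappings for inspecting the alignment."""
    names = [v.strip().lower() for v in values if isinstance(v, str) and v.strip()]
    std_map, freq = {}, {}
    canon: List[str] = []  # canonical names chosen so far
    for v in sorted(set(names)):
        # Match only against earlier canonical names. Matching against the full
        # list, as before, always returned v itself with a score of 100.
        best = process.extractOne(v, canon, scorer=fuzz.WRatio, score_cutoff=score_cutoff) if canon else None
        if best:
            std_map[v] = best[0]
        else:
            canon.append(v)
            std_map[v] = v
    # Count every raw occurrence under its canonical name
    for v in names:
        freq[std_map[v]] = freq.get(std_map[v], 0) + 1
    return std_map, freq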

# ---------------------- Training and evaluation ---------------------------
def build_clf(ngram=(1, 2), min_df=3, max_df=0.9, C=1.0):
    """Build a fresh TF-IDF + one-vs-rest logistic regression pipeline."""
    # Deliberately not wrapped in st.cache_resource: a cached pipeline would be
    # one shared object that every rerun refits in place.
    pipe = Pipeline([
        ("tfidf", TfidfVectorizer(ngram_range=ngram, min_df=min_df, max_df=max_df, sublinear_tf=True)),
        # Parallelize across labels; each per-label problem is binary.
        ("ovr", OneVsRestClassifier(LogisticRegression(max_iter=200, class_weight="balanced", C=C), n_jobs=-1)),
    ])
    return pipe

def evaluate_model(y_true: np.ndarray, y_prob: np.ndarray, classes: List[str], threshold: float = 0.5):
    """Return micro-F1, macro-F1, mean AP, thresholded predictions, and per-class AP."""
    y_pred = (y_prob >= threshold).astype(int)
    micro = f1_score(y_true, y_pred, average="micro", zero_division=0)
    macro = f1_score(y_true, y_pred, average="macro", zero_division=0)
    aps = [average_precision_score(y_true[:, i], y_prob[:, i]) for i in range(y_true.shape[1])]
    return micro, macro, float(np.nanmean(aps)), y_pred, aps

def tune_threshold(y_true: np.ndarray, y_prob: np.ndarray, average="macro") -> float:
    """Grid-search one global threshold in [0.1, 0.9] that maximizes the F1 score."""
    ths = np.linspace(0.1, 0.9, 17)
    best_t, best = 0.5, -1
    for t in ths:
        pred = (y_prob >= t).astype(int)
        score = f1_score(y_true, pred, average=average, zero_division=0)
        if score > best:
            best, best_t = score, t
    return float(best_t)

# ---------------------- Sidebar ------------------------------
st.sidebar.title("MID Visual Console")
st.sidebar.caption("Upload data → map fields → clean → train/search → evaluate")
uploaded = st.sidebar.file_uploader("Upload a data file (CSV / Parquet / JSON / JSONL)", type=["csv", "parquet", "pq", "json", "jsonl"], accept_multiple_files=False)
sample_n = st.sidebar.number_input("Sampling rows for speed (0=All)", min_value=0, value=20000, step=1000)
st.sidebar.markdown("---")
use_emb = st.sidebar.checkbox("Enable semantic search (Sentence-Transformers + FAISS)", value=False, help="Requires sentence-transformers and faiss-cpu")
topk = st.sidebar.slider("TopK (search)", 3, 50, 10)
st.sidebar.markdown("---")
train_enable = st.sidebar.checkbox("Enable multi-label classification training", value=True)
train_size = st.sidebar.slider("Train size (%)", 50, 95, 80)
class_balance = st.sidebar.checkbox("Oversample the long tail (RandomOverSampler)", value=False, help="Requires imbalanced-learn")

# ---------------------- Main: data loading -----------------------
st.title("🧪 MID Visual App")
st.write("A visual interface for the MID (Medical Information Dataset) workflow: load data, clean it, train models, and run semantic search.")
if not uploaded:
    st.info("Upload a data file in the sidebar (example fields: drug_id, drug_name, indications, description, atc_codes, therapeutic_class/labels)")
    st.stop()
with st.spinner("Reading data..."):
    # getvalue() returns the full buffer regardless of the current read position
    df = _read_table(uploaded.getvalue(), uploaded.name)
if sample_n and sample_n > 0 and len(df) > sample_n:
    df = df.sample(int(sample_n), random_state=42).reset_index(drop=True)
st.success(f"Data loaded: {df.shape[0]} rows × {df.shape[1]} columns")
st.dataframe(df.head(20))

# ---------------------- Field mapping -----------------------------
st.header("1) Field Mapping")
cols = df.columns.tolist()
col_id = st.selectbox("Unique drug ID column (optional)", [None] + cols, index=0)
col_name = st.selectbox("Drug name column", cols, index=min(1, len(cols)-1))
col_ind = st.selectbox("Indications column (indications)", [None] + cols, index=0)
col_desc = st.selectbox("Description column (description)", [None] + cols, index=0)
col_atc = st.selectbox("ATC code column (multi-valued)", [None] + cols, index=0)
col_lbl = st.selectbox("Label column (multi-label) or therapeutic class column", [None] + cols, index=0)

# ---------------------- Cleaning and standardization -------------------------
st.header("2) Cleaning")
work = df.copy()
if col_name:
    work[col_name] = work[col_name].apply(clean_text)
if col_ind:
    work[col_ind] = work[col_ind].apply(clean_text)
if col_desc:
    work[col_desc] = work[col_desc].apply(clean_text)
if col_atc:
    work["atc_codes_list"] = work[col_atc].apply(normalize_multilabel)
if col_lbl:
    work["labels_list"] = work[col_lbl].apply(normalize_multilabel)
st.write("Preview of cleaned rows:")
st.dataframe(work.head(20))

# Name normalization (optional)
with st.expander("Name normalization (fuzzy)"):
    cutoff = st.slider("Match threshold (92 = strict)", 70, 100, 92)
    if st.button("Run normalization", type="primary"):
        mapping, freq = fuzz_align(work[col_name].astype(str).tolist(), score_cutoff=cutoff)
        st.write("Sample mappings:", dict(list(mapping.items())[:20]))
        st.write("Top name buckets:")
        topk_names = sorted(freq.items(), key=lambda x: x[1], reverse=True)[:20]
        fig, ax = plt.subplots()
        ax.bar([k for k, _ in topk_names], [v for _, v in topk_names])
        ax.set_title("Top Name Buckets (Count)")
        ax.set_ylabel("Count")
        ax.set_xlabel("Name (bucket)")
        plt.xticks(rotation=60, ha="right")
        st.pyplot(fig, clear_figure=True)

# ---------------------- Semantic search -----------------------------
st.header("3) Semantic Search")
if use_emb and not _HAS_EMB:
    st.warning("sentence-transformers/faiss not detected; semantic search has been disabled. Install them and retry.")
    use_emb = False
if use_emb:
    with st.spinner("Building the vector index (may take tens of seconds)..."):
        text_source = []
        if col_name: text_source.append(work[col_name].fillna(""))
        if col_ind: text_source.append(work[col_ind].fillna(""))
        if col_desc: text_source.append(work[col_desc].fillna(""))
        if not text_source:
            st.error("Select at least one text column for search")
            st.stop()  # without this, text_source[0] below raises IndexError
        corpus = (text_source[0] if len(text_source)==1 else pd.concat(text_source, axis=1).agg(" | ".join, axis=1)).tolist()
        model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")
        emb = model.encode(corpus, batch_size=256, show_progress_bar=True, convert_to_numpy=True, normalize_embeddings=True)
        # Inner product on L2-normalized vectors equals cosine similarity
        index = faiss.IndexFlatIP(emb.shape[1])
        index.add(emb.astype(np.float32))
    q = st.text_input("Query (English works best)", "type 2 diabetes oral therapy")
    if st.button("Search", type="primary"):
        qv = model.encode([q], normalize_embeddings=True)
        # Cap k at the corpus size: FAISS pads missing hits with index -1
        k = min(int(topk), len(corpus))
        D, I = index.search(np.asarray(qv, dtype=np.float32), k)
        res = work.iloc[I[0]].copy()
        res.insert(0, "score", D[0])
        # Drop duplicates in case one column was mapped to several fields
        keep_cols = list(dict.fromkeys(c for c in [col_id, col_name, col_ind, col_desc, col_atc, col_lbl] if c))
        st.dataframe(res[["score"] + keep_cols].head(k))

# ---------------------- Multi-label classification ---------------------------
st.header("4) Multi-label Classification")
if not train_enable:
    st.info("Training is disabled; enable it in the sidebar.")
else:
    # Prepare text and labels
    text_cols = [c for c in [col_ind, col_desc] if c]
    if not text_cols:
        st.warning("Map at least one text column (indications/description).")
    else:
        work["text_join"] = work[text_cols].fillna("").agg(" \n ".join, axis=1)
        # Resolve the label column
        label_col = "labels_list" if "labels_list" in work.columns else None
        if not label_col and col_lbl:
            label_col = col_lbl
            # Fallback: if the column holds strings, try parsing it as multi-label
            if work[label_col].dtype == object:
                work["labels_list"] = work[label_col].apply(normalize_multilabel)
                label_col = "labels_list"
        if not label_col:
            st.error("No label column found. Select a label or therapeutic class column in the field mapping.")
        else:
            # Drop rows with empty labels
            filt = work[label_col].apply(lambda x: len(x) if isinstance(x, list) else (1 if pd.notna(x) else 0)) > 0
            sub = work.loc[filt, ["text_join", label_col]].copy()
            if len(sub) < 50:
                st.warning("Too few labeled rows (<50). Check the label column or enlarge the sample.")
            else:
                # Binarize
                if label_col != "labels_list":
                    labels = [[str(v).strip().lower()] for v in sub[label_col]]
                else:
                    labels = sub[label_col].tolist()
                mlb = MultiLabelBinarizer()
                Y = mlb.fit_transform(labels)
                # No stratify argument: every remaining row has at least one label,
                # so the former stratify=(Y.sum(axis=1) > 0) was a constant no-op.
                X_train, X_test, y_train, y_test = train_test_split(
                    sub["text_join"], Y, test_size=(100-train_size)/100.0, random_state=42,
                )
                # Oversampling (optional)
                if class_balance and _HAS_IMB:
                    # RandomOverSampler rejects multi-label indicator targets, so
                    # resample row indices keyed by each row's label combination.
                    combo = np.array(["".join(map(str, row)) for row in y_train])
                    if len(set(combo)) > 1:
                        ros = RandomOverSampler(random_state=42)
                        idx = np.arange(len(y_train)).reshape(-1, 1)
                        idx_res, _ = ros.fit_resample(idx, combo)
                        idx_res = idx_res.ravel()
                        X_train = np.asarray(X_train)[idx_res]
                        y_train = y_train[idx_res]
                elif class_balance and not _HAS_IMB:
                    st.warning("Oversampling was selected but imbalanced-learn is not installed; skipped.")
                with st.spinner("Training model (TF-IDF + LogisticRegression)..."):
                    clf = build_clf()
                    clf.fit(X_train, y_train)
                    proba = clf.predict_proba(X_test)
                # Threshold and evaluation. The threshold is tuned on the same held-out
                # split it is scored on, so the reported metrics are optimistic.
                best_t = tune_threshold(y_test, proba, average="macro")
                micro, macro, mAP, y_pred, aps = evaluate_model(y_test, proba, list(mlb.classes_), threshold=best_t)
                st.subheader("Evaluation (English labels)")
                st.write({"Best threshold": best_t, "Micro-F1": micro, "Macro-F1": macro, "Mean AP": mAP})
                # AP bar chart for the first 20 classes (by class index, not ranked)
                fig, ax = plt.subplots()
                show_k = min(20, len(aps))
                ax.bar(np.arange(show_k), aps[:show_k])
                ax.set_title("Per-class Average Precision (First 20 Classes)")
                ax.set_xlabel("Class Index")
                ax.set_ylabel("Average Precision")
                st.pyplot(fig, clear_figure=True)
                # Inference demo
                st.subheader("Inference")
                demo_text = st.text_area("Input text (English preferred)", "oral therapy for type 2 diabetes with fewer gastrointestinal side effects")
                if st.button("Predict", type="primary"):
                    prob = clf.predict_proba([demo_text])[0]
                    pred_labels = [mlb.classes_[i] for i, p in enumerate(prob) if p >= best_t]
                    st.write({"labels": pred_labels, "proba": {mlb.classes_[i]: float(p) for i, p in enumerate(prob)}})

# ---------------------- Export artifacts -----------------------------
st.header("5) Export")
if st.button("Export the cleaned data (CSV)"):
    out = work.copy()
    csv = out.to_csv(index=False).encode("utf-8")
    st.download_button(
        label="Download cleaned_data.csv",
        data=csv,
        file_name="cleaned_data.csv",
        mime="text/csv",
    )
st.caption("Charts use English labels by default. © 2025")

Run locally

Install the dependencies (a fresh virtual environment is recommended):

bash

pip install streamlit pandas pyarrow polars numpy scikit-learn imbalanced-learn rapidfuzz sentence-transformers faiss-cpu matplotlib python-dotenv

Launch:

bash

streamlit run mid_streamlit_app.py
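The app shows nothing until a data file is uploaded. For a quick smoke test, the sketch below writes a small synthetic CSV using the example column names from the upload hint (`drug_id`, `drug_name`, `indications`, `description`, `atc_codes`, `labels`). The file name `mid_sample.csv`, the helper `write_sample`, and the rows themselves are assumptions made for this example; the rows are illustrative placeholders, not a clinical reference or part of the MID dataset.

```python
import csv
import random

# Illustrative placeholder rows: (name, indications, description, ATC code, labels).
# Labels use ';' so the app's multi-label parser splits them.
TEMPLATES = [
    ("metformin", "type 2 diabetes mellitus",
     "oral biguanide that lowers blood glucose", "A10BA02", "antidiabetic;oral"),
    ("atorvastatin", "hypercholesterolemia",
     "statin that lowers LDL cholesterol", "C10AA05", "lipid-lowering;oral"),
    ("amoxicillin", "bacterial infections",
     "penicillin-class beta-lactam antibiotic", "J01CA04", "antibiotic;oral"),
    ("ibuprofen", "pain and inflammation",
     "nonsteroidal anti-inflammatory drug", "M01AE01", "analgesic;anti-inflammatory"),
    ("omeprazole", "gastroesophageal reflux disease",
     "proton pump inhibitor that reduces gastric acid", "A02BC01", "acid-suppressant;oral"),
]

HEADER = ["drug_id", "drug_name", "indications", "description", "atc_codes", "labels"]


def write_sample(path="mid_sample.csv", n_rows=100, seed=42):
    """Write n_rows synthetic rows sampled from TEMPLATES and return the path."""
    rng = random.Random(seed)
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(HEADER)
        for i in range(n_rows):
            name, ind, desc, atc, labels = rng.choice(TEMPLATES)
            writer.writerow([f"D{i:04d}", name, ind, desc, atc, labels])
    return path


if __name__ == "__main__":
    print("wrote", write_sample())
```

Because the rows repeat a handful of templates, identical texts land in both the training and the test split, so any scores obtained on this file are meaningless. It only confirms that upload, field mapping, cleaning, and training run end to end; the default of 100 rows clears the app's 50-labeled-row minimum.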
