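The OpenCV model zoo includes a hand gesture recognition example written in Python, located at opencv_zoo-main\models\handpose_estimation_mediapipe. This post ports that example to OpenCvSharp. It depends on no other library, so installing OpenCvSharp is enough. The models can be downloaded from the official OpenCV model zoo on GitHub.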

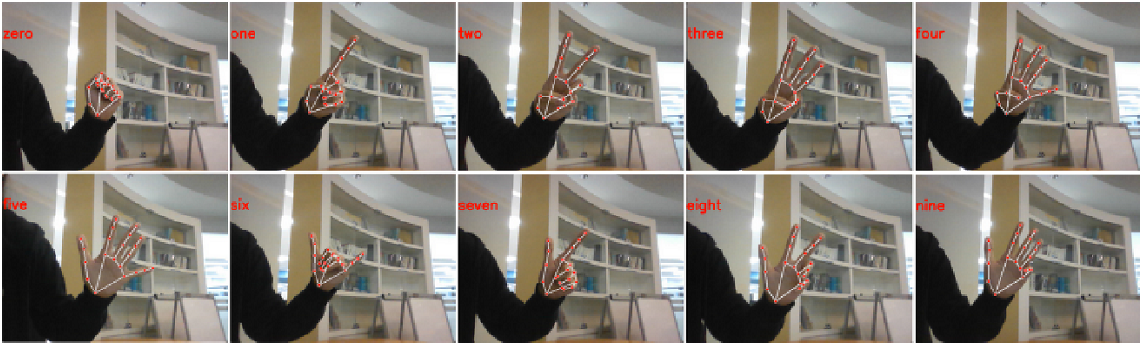

Official demo results:

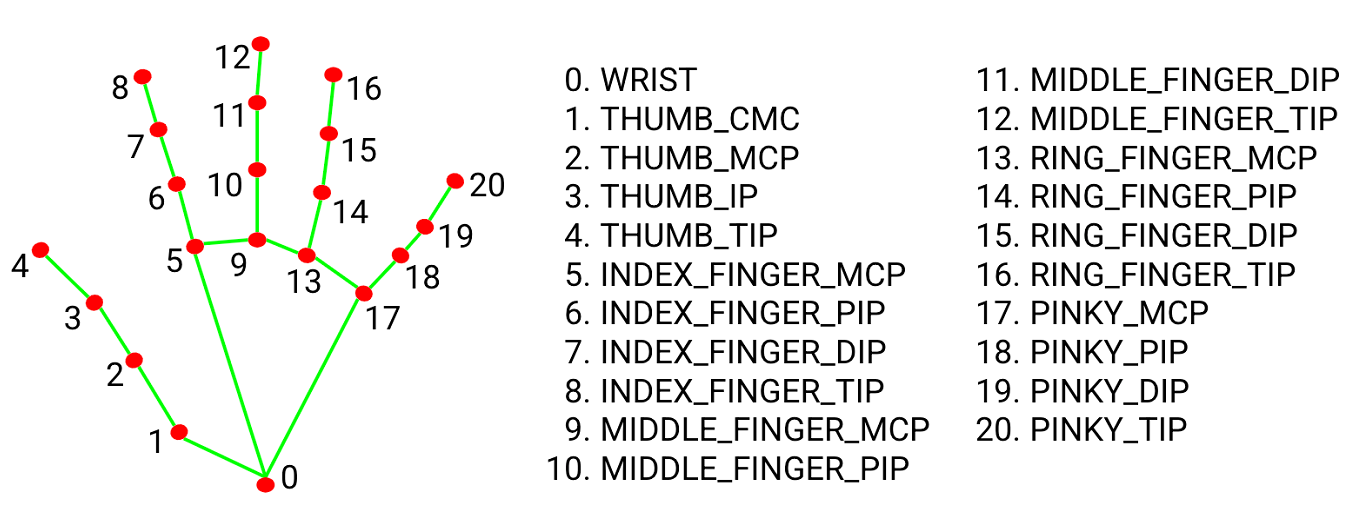

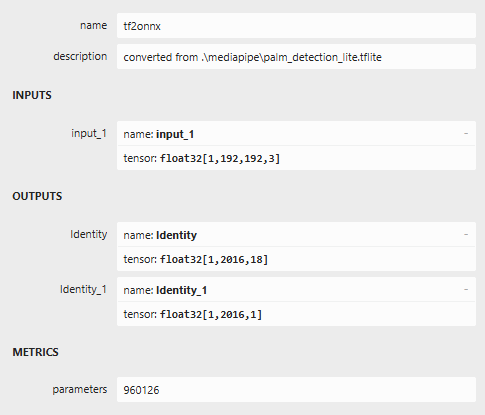

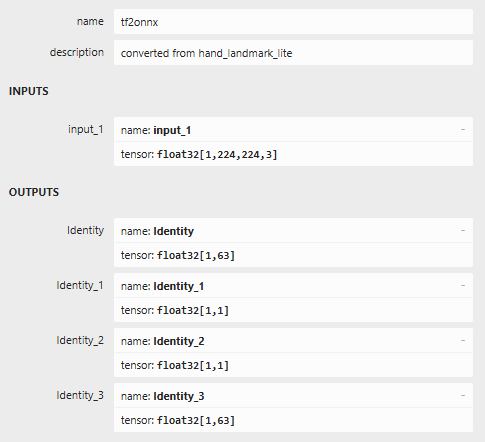

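Two models are needed. The first is a palm detector, which locates the palm and determines the hand's orientation so that the crop can be rotated upright. The second is a hand keypoint (landmark) detector that runs on that upright crop.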

The code is as follows:

///PalmDetector.cs

using OpenCvSharp;

using OpenCvSharp.Dnn;

using System;

using System.Collections.Generic;

using System.Linq;

using System.Runtime.InteropServices;

using Point = OpenCvSharp.Point;

using Scalar = OpenCvSharp.Scalar;

using Size = OpenCvSharp.Size;

namespace yolo_world_opencvsharp_net4._8

{

public class PalmDetector : IDisposable

{

private string model_path;

private float nms_threshold;

private float score_threshold;

private int topK;

private int backend_id;

private int target_id;

private Size input_size;

private Net model;

private List<Point2f> anchors;

private bool _disposed = false;

public PalmDetector(string modelPath, float nmsThreshold = 0.3f, float scoreThreshold = 0.5f,

int topK = 5000, Backend backend = Backend.OPENCV, Target target = Target.CPU)

{

model_path = modelPath;

nms_threshold = nmsThreshold;

score_threshold = scoreThreshold;

this.topK = topK;

backend_id = (int)backend;

target_id = (int)target;

input_size = new Size(192, 192);

// Load the model

model = CvDnn.ReadNetFromOnnx(model_path);

if (model.Empty())

{

throw new ArgumentException($"Failed to load model from {modelPath}");

}

model.SetPreferableBackend(backend);

model.SetPreferableTarget(target);

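// MPPalmAnchors is not listed in this post. It must return one normalized (x, y) anchor

// center per model output row (the Python demo hard-codes 2016 of them in mp_palmdet.py).

anchors = MPPalmAnchors.LoadAnchors();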

Console.WriteLine($"PalmDetector initialized with {anchors?.Count ?? 0} anchors");

}

public List<List<float>> Infer(Mat image)

{

if (image == null || image.Empty())

throw new ArgumentException("Input image is null or empty");

var (preprocessed_image, pad_bias) = Preprocess(image);

try

{

model.SetInput(preprocessed_image);

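var outputNames = model.GetUnconnectedOutLayersNames();

Mat[] outputs = outputNames.Select(_ => new Mat()).ToArray();

try

{

model.Forward(outputs, outputNames);

return Postprocess(outputs, image.Size(), pad_bias);

}

finally

{

// Release the raw network outputs; Postprocess only disposes its reshaped views

foreach (var output in outputs)

{

output.Dispose();

}

}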

}

finally

{

preprocessed_image?.Dispose();

}

}

public Mat Visualize(Mat image, List<List<float>> results, bool print_results = false, float fps = 0.0f)

{

Mat output = image.Clone();

if (fps > 0)

{

Cv2.PutText(output, $"FPS: {fps:F1}", new Point(10, 30),

HersheyFonts.HersheySimplex, 0.7, new Scalar(0, 0, 255), 2);

}

for (int i = 0; i < results.Count; i++)

{

List<float> result = results[i];

if (result.Count < 19) continue; // 4 box + 14 landmarks + 1 score

float score = result[result.Count - 1];

// Draw bounding box

Cv2.Rectangle(

output,

new Point((int)result[0], (int)result[1]),

new Point((int)result[2], (int)result[3]),

new Scalar(0, 255, 0), 2);

// Draw confidence score

Cv2.PutText(output, $"{score:F3}",

new Point((int)result[0], (int)result[1] - 10),

HersheyFonts.HersheySimplex, 0.5, new Scalar(0, 255, 0), 1);

// Draw landmarks with different colors

Scalar[] landmarkColors = new Scalar[]

{

new Scalar(255, 0, 0), // Blue

new Scalar(0, 255, 255), // Yellow

new Scalar(255, 0, 255), // Magenta

new Scalar(0, 255, 0), // Green

new Scalar(255, 255, 0), // Cyan

new Scalar(128, 0, 128), // Purple

new Scalar(0, 165, 255) // Orange

};

for (int j = 0; j < 7; j++)

{

Point point = new Point((int)result[4 + j * 2], (int)result[4 + j * 2 + 1]);

Cv2.Circle(output, point, 4, landmarkColors[j], -1);

Cv2.Circle(output, point, 2, new Scalar(255, 255, 255), -1);

// Draw landmark number

Cv2.PutText(output, $"{j + 1}",

new Point(point.X + 6, point.Y - 6),

HersheyFonts.HersheySimplex, 0.4, new Scalar(255, 255, 255), 1);

}

if (print_results)

{

Console.WriteLine($"----------- Palm {i + 1} -----------");

Console.WriteLine($"Score: {score:F4}");

Console.WriteLine($"Box: [{result[0]:F0}, {result[1]:F0}, {result[2]:F0}, {result[3]:F0}]");

Console.WriteLine($"Box Size: {result[2] - result[0]:F0} x {result[3] - result[1]:F0}");

Console.WriteLine("Landmarks:");

for (int j = 0; j < 7; j++)

{

Console.WriteLine($" Point {j + 1}: ({result[4 + j * 2]:F0}, {result[4 + j * 2 + 1]:F0})");

}

Console.WriteLine();

}

}

return output;

}

private (Mat, Point) Preprocess(Mat image)

{

Point pad_bias = new Point(0, 0);

float ratio = Math.Min((float)input_size.Width / image.Width, (float)input_size.Height / image.Height);

Mat processed_image = new Mat();

if (image.Height != input_size.Height || image.Width != input_size.Width)

{

Size ratio_size = new Size((int)(image.Width * ratio), (int)(image.Height * ratio));

Cv2.Resize(image, processed_image, ratio_size, 0, 0, InterpolationFlags.Linear);

int pad_h = input_size.Height - ratio_size.Height;

int pad_w = input_size.Width - ratio_size.Width;

pad_bias.X = pad_w / 2;

pad_bias.Y = pad_h / 2;

Cv2.CopyMakeBorder(processed_image, processed_image, pad_bias.Y, pad_h - pad_bias.Y,

pad_bias.X, pad_w - pad_bias.X, BorderTypes.Constant, new Scalar(0, 0, 0));

}

else

{

processed_image = image.Clone();

}

// Create NHWC blob

processed_image.ConvertTo(processed_image, MatType.CV_32FC3, 1.0 / 255.0);

Cv2.CvtColor(processed_image, processed_image, ColorConversionCodes.BGR2RGB);

float[] imgData = new float[processed_image.Total() * processed_image.Channels()];

Marshal.Copy(processed_image.Data, imgData, 0, imgData.Length);

int[] dims = new int[] { 1, input_size.Height, input_size.Width, 3 };

Mat blob = new Mat(dims, MatType.CV_32FC1, imgData);

processed_image.Dispose();

// Adjust pad bias for original image coordinates

pad_bias.X = (int)(pad_bias.X / ratio);

pad_bias.Y = (int)(pad_bias.Y / ratio);

return (blob, pad_bias);

}

private List<List<float>> Postprocess(Mat[] output_blobs, Size original_size, Point pad_bias)

{

if (output_blobs.Length < 2)

return new List<List<float>>();

Mat scores = output_blobs[1].Reshape(1, (int)(output_blobs[1].Total()));

Mat boxes = output_blobs[0].Reshape(1, (int)(output_blobs[0].Total() / 18));

List<float> score_vec = new List<float>();

List<Rect2f> boxes_vec = new List<Rect2f>();

List<List<Point2f>> landmarks_vec = new List<List<Point2f>>();

float scale = Math.Max(original_size.Height, original_size.Width);

// Process all detections

for (int i = 0; i < scores.Rows; i++)

{

float score = 1.0f / (1.0f + (float)Math.Exp(-scores.At<float>(i, 0)));

if (score < score_threshold)

continue;

// Extract box and landmark deltas

var box_delta = boxes.Row(i).ColRange(0, 4);

var landmark_delta = boxes.Row(i).ColRange(4, 18);

var anchor = anchors[i];

// Decode bounding box coordinates

Point2f cxy_delta = new Point2f(

box_delta.At<float>(0, 0) / input_size.Width,

box_delta.At<float>(0, 1) / input_size.Height);

Point2f wh_delta = new Point2f(

box_delta.At<float>(0, 2) / input_size.Width,

box_delta.At<float>(0, 3) / input_size.Height);

// Calculate box coordinates

Point2f xy1 = new Point2f(

(cxy_delta.X - wh_delta.X / 2 + anchor.X) * scale - pad_bias.X,

(cxy_delta.Y - wh_delta.Y / 2 + anchor.Y) * scale - pad_bias.Y);

Point2f xy2 = new Point2f(

(cxy_delta.X + wh_delta.X / 2 + anchor.X) * scale - pad_bias.X,

(cxy_delta.Y + wh_delta.Y / 2 + anchor.Y) * scale - pad_bias.Y);

// Clip to image boundaries

xy1.X = Math.Max(0, Math.Min(original_size.Width - 1, xy1.X));

xy1.Y = Math.Max(0, Math.Min(original_size.Height - 1, xy1.Y));

xy2.X = Math.Max(0, Math.Min(original_size.Width - 1, xy2.X));

xy2.Y = Math.Max(0, Math.Min(original_size.Height - 1, xy2.Y));

// Only add valid detections

if (xy2.X > xy1.X && xy2.Y > xy1.Y && (xy2.X - xy1.X) > 20 && (xy2.Y - xy1.Y) > 20)

{

score_vec.Add(score);

boxes_vec.Add(new Rect2f(xy1.X, xy1.Y, xy2.X - xy1.X, xy2.Y - xy1.Y));

// Process landmarks

List<Point2f> landmarks = new List<Point2f>();

for (int j = 0; j < 7; j++)

{

float dx = landmark_delta.At<float>(0, j * 2) / input_size.Width + anchor.X;

float dy = landmark_delta.At<float>(0, j * 2 + 1) / input_size.Height + anchor.Y;

// Convert to original image coordinates

dx = dx * scale - pad_bias.X;

dy = dy * scale - pad_bias.Y;

// Clip to image boundaries

dx = Math.Max(0, Math.Min(original_size.Width - 1, dx));

dy = Math.Max(0, Math.Min(original_size.Height - 1, dy));

landmarks.Add(new Point2f(dx, dy));

}

landmarks_vec.Add(landmarks);

}

}

// Perform NMS

List<Rect> boxes_int = boxes_vec.Select(box =>

new Rect((int)box.X, (int)box.Y, (int)box.Width, (int)box.Height)).ToList();

// Pass topK so the constructor parameter actually limits the number of candidates

CvDnn.NMSBoxes(boxes_int, score_vec, score_threshold, nms_threshold, out int[] indices, 1.0f, topK);

// Prepare results

List<List<float>> results = new List<List<float>>();

foreach (int idx in indices)

{

List<float> result = new List<float>();

Rect2f box = boxes_vec[idx];

// Bounding box coordinates

result.Add(box.X);

result.Add(box.Y);

result.Add(box.X + box.Width);

result.Add(box.Y + box.Height);

// Landmark coordinates

foreach (Point2f point in landmarks_vec[idx])

{

result.Add(point.X);

result.Add(point.Y);

}

// Confidence score

result.Add(score_vec[idx]);

results.Add(result);

}

// Clean up

scores.Dispose();

boxes.Dispose();

return results;

}

public void Dispose()

{

Dispose(true);

GC.SuppressFinalize(this);

}

protected virtual void Dispose(bool disposing)

{

if (!_disposed)

{

if (disposing)

{

model?.Dispose();

}

_disposed = true;

}

}

~PalmDetector()

{

Dispose(false);

}

}

}
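The letterbox and decoding arithmetic used by PalmDetector above can be checked in isolation. The following is a minimal sketch, not part of the original port: the class name `PalmDecodeSketch` and its method names are hypothetical, and it needs only `System`, not OpenCvSharp. It mirrors the same formulas: the resize ratio and padding from `Preprocess`, and the sigmoid and anchor decoding from `Postprocess`.

```csharp
using System;

// Hypothetical helper that mirrors the arithmetic in PalmDetector; illustration only.
public static class PalmDecodeSketch
{
    // Resize ratio and top-left padding needed to fit a (width x height) image
    // into a square network input while keeping the aspect ratio.
    public static (float ratio, int padX, int padY) Letterbox(int width, int height, int inputSize)
    {
        float ratio = Math.Min((float)inputSize / width, (float)inputSize / height);
        int padX = (inputSize - (int)(width * ratio)) / 2;
        int padY = (inputSize - (int)(height * ratio)) / 2;
        return (ratio, padX, padY);
    }

    // Logistic function that turns the raw network score into a probability.
    public static float Sigmoid(float x)
    {
        return 1.0f / (1.0f + (float)Math.Exp(-x));
    }

    // Decode one coordinate. delta is in input pixels, anchor is normalized to [0, 1],
    // scale is max(original width, original height), and padBias is the padding
    // already converted to original-image pixels.
    public static float Decode(float delta, float anchor, int inputSize, float scale, int padBias)
    {
        return (delta / inputSize + anchor) * scale - padBias;
    }
}
```

For a 640x480 frame and a 192x192 input, `Letterbox(640, 480, 192)` pads only vertically, and `Decode(0f, 0.5f, 192, 640f, 80)` maps the vertical center of the padded square back to row 240 of the frame.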

///MPHandPose.cs

using OpenCvSharp;

using OpenCvSharp.Dnn;

using System;

using System.Collections.Generic;

using System.Linq;

namespace yolo_world_opencvsharp_net4._8

{

public class MPHandPose : IDisposable

{

private string model_path;

private float conf_threshold;

private int backend_id;

private int target_id;

private Size input_size;

private Net model;

private bool _disposed = false;

// Constants from the Python version

private readonly int[] PALM_LANDMARK_IDS = { 0, 5, 9, 13, 17, 1, 2 };

private const int PALM_LANDMARKS_INDEX_OF_PALM_BASE = 0;

private const int PALM_LANDMARKS_INDEX_OF_MIDDLE_FINGER_BASE = 2;

private readonly double[] PALM_BOX_PRE_SHIFT_VECTOR = { 0, 0 };

private const double PALM_BOX_PRE_ENLARGE_FACTOR = 4;

private readonly double[] PALM_BOX_SHIFT_VECTOR = { 0, -0.4 };

private const double PALM_BOX_ENLARGE_FACTOR = 3;

private readonly double[] HAND_BOX_SHIFT_VECTOR = { 0, -0.1 };

private const double HAND_BOX_ENLARGE_FACTOR = 1.65;

public MPHandPose(string modelPath, float confThreshold = 0.8f, Backend backend = Backend.OPENCV, Target target = Target.CPU)

{

model_path = modelPath;

conf_threshold = confThreshold;

backend_id = (int)backend;

target_id = (int)target;

input_size = new Size(224, 224);

model = CvDnn.ReadNetFromOnnx(model_path);

if (model.Empty())

{

throw new ArgumentException($"Failed to load hand pose model from {modelPath}");

}

model.SetPreferableBackend(backend);

model.SetPreferableTarget(target);

Console.WriteLine($"MPHandPose initialized with input size: {input_size}");

}

public void SetBackendAndTarget(Backend backend, Target target)

{

backend_id = (int)backend;

target_id = (int)target;

model.SetPreferableBackend(backend);

model.SetPreferableTarget(target);

}

private (Mat, Rect, Point) CropAndPadFromPalm(Mat image, Rect palmBbox, bool forRotation = false)

{

// Shift bounding box

var whPalmBbox = new Point(palmBbox.Width, palmBbox.Height);

double[] shiftVector = forRotation ? PALM_BOX_PRE_SHIFT_VECTOR : PALM_BOX_SHIFT_VECTOR;

var shift = new Point(whPalmBbox.X * shiftVector[0], whPalmBbox.Y * shiftVector[1]);

var shiftedBbox = new Rect(

palmBbox.X + (int)shift.X,

palmBbox.Y + (int)shift.Y,

palmBbox.Width,

palmBbox.Height

);

// Enlarge bounding box

var centerPalmBbox = new Point(

shiftedBbox.X + shiftedBbox.Width / 2,

shiftedBbox.Y + shiftedBbox.Height / 2

);

double enlargeScale = forRotation ? PALM_BOX_PRE_ENLARGE_FACTOR : PALM_BOX_ENLARGE_FACTOR;

var newHalfSize = new Size(

(int)(whPalmBbox.X * enlargeScale / 2),

(int)(whPalmBbox.Y * enlargeScale / 2)

);

var enlargedBbox = new Rect(

centerPalmBbox.X - newHalfSize.Width,

centerPalmBbox.Y - newHalfSize.Height,

newHalfSize.Width * 2,

newHalfSize.Height * 2

);

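// Clip both corners to the image, as the Python version does. Clamping only the

// top-left corner and keeping the width would push the right/bottom edge outward.

int clipX1 = Math.Max(0, Math.Min(image.Width, enlargedBbox.X));

int clipY1 = Math.Max(0, Math.Min(image.Height, enlargedBbox.Y));

int clipX2 = Math.Max(0, Math.Min(image.Width, enlargedBbox.X + enlargedBbox.Width));

int clipY2 = Math.Max(0, Math.Min(image.Height, enlargedBbox.Y + enlargedBbox.Height));

enlargedBbox = new Rect(clipX1, clipY1, clipX2 - clipX1, clipY2 - clipY1);

// Crop to the region of interest. Clone so the crop owns its pixels: on a ROI view,

// CopyMakeBorder takes border pixels from the parent image instead of black, and the

// in-place BGR2RGB conversion in Preprocess could write into the caller's frame.

Mat croppedImage;

using (var roi = new Mat(image, enlargedBbox))

{

croppedImage = roi.Clone();

}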

// Pad to ensure corner pixels won't be cropped

int sideLen = forRotation ?

(int)Math.Sqrt(croppedImage.Width * croppedImage.Width + croppedImage.Height * croppedImage.Height) :

Math.Max(croppedImage.Width, croppedImage.Height);

int padH = sideLen - croppedImage.Height;

int padW = sideLen - croppedImage.Width;

int left = padW / 2;

int top = padH / 2;

int right = padW - left;

int bottom = padH - top;

Cv2.CopyMakeBorder(croppedImage, croppedImage, top, bottom, left, right, BorderTypes.Constant, Scalar.Black);

var bias = new Point(enlargedBbox.X - left, enlargedBbox.Y - top);

return (croppedImage, enlargedBbox, bias);

}

private (Mat, Rect, double, Mat, Point) Preprocess(Mat image, List<float> palm)

{

if (palm.Count < 18)

throw new ArgumentException("Palm data must contain at least 18 elements");

// Extract palm bounding box and landmarks

var palmBbox = new Rect(

(int)palm[0], (int)palm[1],

(int)(palm[2] - palm[0]),

(int)(palm[3] - palm[1])

);

// Crop and pad image to interest range

var (croppedImage, rotatedPalmBbox, bias) = CropAndPadFromPalm(image, palmBbox, true);

// Convert to RGB

Cv2.CvtColor(croppedImage, croppedImage, ColorConversionCodes.BGR2RGB);

// Extract palm landmarks

var palmLandmarks = new List<Point2f>();

for (int i = 0; i < 7; i++)

{

palmLandmarks.Add(new Point2f(

palm[4 + i * 2] - bias.X,

palm[4 + i * 2 + 1] - bias.Y

));

}

// Rotate input to have vertically oriented hand image

var p1 = palmLandmarks[PALM_LANDMARKS_INDEX_OF_PALM_BASE];

var p2 = palmLandmarks[PALM_LANDMARKS_INDEX_OF_MIDDLE_FINGER_BASE];

double radians = Math.PI / 2 - Math.Atan2(-(p2.Y - p1.Y), p2.X - p1.X);

radians = radians - 2 * Math.PI * Math.Floor((radians + Math.PI) / (2 * Math.PI));

double angle = radians * (180.0 / Math.PI);

// Get bbox center

var centerPalmBbox = new Point2f(

rotatedPalmBbox.X + rotatedPalmBbox.Width / 2.0f - bias.X,

rotatedPalmBbox.Y + rotatedPalmBbox.Height / 2.0f - bias.Y

);

// Get rotation matrix (note: the returned Mat is of type CV_64FC1)

var rotationMatrix = Cv2.GetRotationMatrix2D(centerPalmBbox, angle, 1.0);

// Get rotated image

Mat rotatedImage = new Mat();

Cv2.WarpAffine(croppedImage, rotatedImage, rotationMatrix, new Size(croppedImage.Width, croppedImage.Height));

// Get rotated palm landmarks by applying the 2x3 affine matrix to each point

var rotatedPalmLandmarks = new List<Point2f>();

foreach (var landmark in palmLandmarks)

{

// The rotation matrix stores doubles, so read its elements as double

double r00 = rotationMatrix.At<double>(0, 0);

double r01 = rotationMatrix.At<double>(0, 1);

double r02 = rotationMatrix.At<double>(0, 2);

double r10 = rotationMatrix.At<double>(1, 0);

double r11 = rotationMatrix.At<double>(1, 1);

double r12 = rotationMatrix.At<double>(1, 2);

double rotatedX = r00 * landmark.X + r01 * landmark.Y + r02;

double rotatedY = r10 * landmark.X + r11 * landmark.Y + r12;

rotatedPalmLandmarks.Add(new Point2f((float)rotatedX, (float)rotatedY));

}

// Get landmark bounding box

float minX = rotatedPalmLandmarks.Min(p => p.X);

float minY = rotatedPalmLandmarks.Min(p => p.Y);

float maxX = rotatedPalmLandmarks.Max(p => p.X);

float maxY = rotatedPalmLandmarks.Max(p => p.Y);

var rotatedPalmBboxFinal = new Rect((int)minX, (int)minY, (int)(maxX - minX), (int)(maxY - minY));

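// Final crop

var (finalCrop, finalBbox, _) = CropAndPadFromPalm(rotatedImage, rotatedPalmBboxFinal);

// Resize to model input size and scale pixel values to [0, 1]

Mat resized = new Mat();

Cv2.Resize(finalCrop, resized, input_size, 0, 0, InterpolationFlags.Area);

resized.ConvertTo(resized, MatType.CV_32FC3, 1.0 / 255.0);

// Add batch dimension (NHWC). The reshaped header shares the reference-counted buffer,

// so the blob stays valid after the temporaries below are released.

Mat blob = resized.Reshape(1, new int[] { 1, input_size.Height, input_size.Width, 3 });

// Release intermediate images; this method runs once per palm per frame

resized.Dispose();

finalCrop.Dispose();

rotatedImage.Dispose();

croppedImage.Dispose();

return (blob, finalBbox, angle, rotationMatrix, bias);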

}

public List<float> Infer(Mat image, List<float> palm)

{

// Preprocess

var (inputBlob, rotatedPalmBbox, angle, rotationMatrix, padBias) = Preprocess(image, palm);

Mat[] outputs = null;

try

{

// Forward

model.SetInput(inputBlob);

var outputNames = model.GetUnconnectedOutLayersNames();

outputs = outputNames.Select(_ => new Mat()).ToArray();

model.Forward(outputs, outputNames);

// Postprocess

return Postprocess(outputs, rotatedPalmBbox, angle, rotationMatrix, padBias);

}

finally

{

// Clean up, including when Forward or Postprocess throws

inputBlob.Dispose();

rotationMatrix.Dispose();

if (outputs != null)

{

foreach (var output in outputs)

{

output.Dispose();

}

}

}

}

private List<float> Postprocess(Mat[] outputs, Rect rotatedPalmBbox, double angle, Mat rotationMatrix, Point padBias)

{

if (outputs.Length < 4)

return null;

// Extract outputs

var landmarks = outputs[0].Reshape(1, 21, 3); // 21 landmarks, 3 coordinates each

var conf = outputs[1].At<float>(0, 0);

var handedness = outputs[2].At<float>(0, 0);

var landmarksWorld = outputs[3].Reshape(1, 21, 3);

if (conf < conf_threshold)

return null;

List<float> result = new List<float>();

// Transform coords back to the input coords

var whRotatedPalmBbox = new Point(rotatedPalmBbox.Width, rotatedPalmBbox.Height);

var scaleFactor = new Point2f(

whRotatedPalmBbox.X / (float)input_size.Width,

whRotatedPalmBbox.Y / (float)input_size.Height

);

float maxScale = Math.Max(scaleFactor.X, scaleFactor.Y);

// Scale landmarks

for (int i = 0; i < 21; i++)

{

// Use Set<T> for writes: assigning through At<T> only compiles on OpenCvSharp

// versions where At<T> returns a reference.

landmarks.Set<float>(i, 0, (landmarks.At<float>(i, 0) - input_size.Width / 2.0f) * maxScale);

landmarks.Set<float>(i, 1, (landmarks.At<float>(i, 1) - input_size.Height / 2.0f) * maxScale);

landmarks.Set<float>(i, 2, landmarks.At<float>(i, 2) * maxScale); // depth scaling

}

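// Rotate landmarks back by the preprocessing angle

double cr00, cr01, cr10, cr11;

using (var coordsRotationMatrix = Cv2.GetRotationMatrix2D(new Point2f(0, 0), -angle, 1.0))

{

// The matrix is CV_64FC1, so read its elements as double

cr00 = coordsRotationMatrix.At<double>(0, 0);

cr01 = coordsRotationMatrix.At<double>(0, 1);

cr10 = coordsRotationMatrix.At<double>(1, 0);

cr11 = coordsRotationMatrix.At<double>(1, 1);

}

for (int i = 0; i < 21; i++)

{

float x = landmarks.At<float>(i, 0);

float y = landmarks.At<float>(i, 1);

landmarks.Set<float>(i, 0, (float)(cr00 * x + cr01 * y));

landmarks.Set<float>(i, 1, (float)(cr10 * x + cr11 * y));

// The Python version applies the same rotation to the world landmarks

float wx = landmarksWorld.At<float>(i, 0);

float wy = landmarksWorld.At<float>(i, 1);

landmarksWorld.Set<float>(i, 0, (float)(cr00 * wx + cr01 * wy));

landmarksWorld.Set<float>(i, 1, (float)(cr10 * wx + cr11 * wy));

}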

// Invert rotation: read the double-precision elements of the original rotation matrix

double r00 = rotationMatrix.At<double>(0, 0);

double r01 = rotationMatrix.At<double>(0, 1);

double r02 = rotationMatrix.At<double>(0, 2);

double r10 = rotationMatrix.At<double>(1, 0);

double r11 = rotationMatrix.At<double>(1, 1);

double r12 = rotationMatrix.At<double>(1, 2);

var rotationComponent = new double[2, 2] {

{ r00, r10 }, // note: this is the transpose of the 2x2 rotation part

{ r01, r11 }

};

var translationComponent = new double[] { r02, r12 };

var invertedTranslation = new double[] {

-(rotationComponent[0, 0] * translationComponent[0] + rotationComponent[0, 1] * translationComponent[1]),

-(rotationComponent[1, 0] * translationComponent[0] + rotationComponent[1, 1] * translationComponent[1])

};

var inverseRotationMatrix = new Mat(2, 3, MatType.CV_64FC1);

inverseRotationMatrix.Set<double>(0, 0, rotationComponent[0, 0]);

inverseRotationMatrix.Set<double>(0, 1, rotationComponent[0, 1]);

inverseRotationMatrix.Set<double>(0, 2, invertedTranslation[0]);

inverseRotationMatrix.Set<double>(1, 0, rotationComponent[1, 0]);

inverseRotationMatrix.Set<double>(1, 1, rotationComponent[1, 1]);

inverseRotationMatrix.Set<double>(1, 2, invertedTranslation[1]);

// Get box center

var center = new Point2f(

rotatedPalmBbox.X + rotatedPalmBbox.Width / 2.0f,

rotatedPalmBbox.Y + rotatedPalmBbox.Height / 2.0f

);

// Map the box center back with the inverse rotation matrix

double ir00 = inverseRotationMatrix.At<double>(0, 0);

double ir01 = inverseRotationMatrix.At<double>(0, 1);

double ir02 = inverseRotationMatrix.At<double>(0, 2);

double ir10 = inverseRotationMatrix.At<double>(1, 0);

double ir11 = inverseRotationMatrix.At<double>(1, 1);

double ir12 = inverseRotationMatrix.At<double>(1, 2);

var originalCenter = new Point2f(

(float)(ir00 * center.X + ir01 * center.Y + ir02),

(float)(ir10 * center.X + ir11 * center.Y + ir12)

);

// Transform landmarks back to original coordinates

for (int i = 0; i < 21; i++)

{

landmarks.Set<float>(i, 0, landmarks.At<float>(i, 0) + originalCenter.X + padBias.X);

landmarks.Set<float>(i, 1, landmarks.At<float>(i, 1) + originalCenter.Y + padBias.Y);

}

// Get bounding box from landmarks

float minX = float.MaxValue, minY = float.MaxValue, maxX = float.MinValue, maxY = float.MinValue;

for (int i = 0; i < 21; i++)

{

minX = Math.Min(minX, landmarks.At<float>(i, 0));

minY = Math.Min(minY, landmarks.At<float>(i, 1));

maxX = Math.Max(maxX, landmarks.At<float>(i, 0));

maxY = Math.Max(maxY, landmarks.At<float>(i, 1));

}

var bbox = new Rect((int)minX, (int)minY, (int)(maxX - minX), (int)(maxY - minY));

// Shift bounding box

var shiftVector = new Point(

(int)(bbox.Width * HAND_BOX_SHIFT_VECTOR[0]),

(int)(bbox.Height * HAND_BOX_SHIFT_VECTOR[1])

);

bbox.X += shiftVector.X;

bbox.Y += shiftVector.Y;

// Enlarge bounding box

var centerBbox = new Point(bbox.X + bbox.Width / 2, bbox.Y + bbox.Height / 2);

var newHalfSize = new Size(

(int)(bbox.Width * HAND_BOX_ENLARGE_FACTOR / 2),

(int)(bbox.Height * HAND_BOX_ENLARGE_FACTOR / 2)

);

bbox = new Rect(

centerBbox.X - newHalfSize.Width,

centerBbox.Y - newHalfSize.Height,

newHalfSize.Width * 2,

newHalfSize.Height * 2

);

// Prepare final result

// [0-3]: hand bounding box

result.Add(bbox.X);

result.Add(bbox.Y);

result.Add(bbox.X + bbox.Width);

result.Add(bbox.Y + bbox.Height);

// [4-66]: screen landmarks (21 landmarks * 3 coordinates)

for (int i = 0; i < 21; i++)

{

result.Add(landmarks.At<float>(i, 0));

result.Add(landmarks.At<float>(i, 1));

result.Add(landmarks.At<float>(i, 2));

}

// [67-129]: world landmarks (21 landmarks * 3 coordinates)

for (int i = 0; i < 21; i++)

{

result.Add(landmarksWorld.At<float>(i, 0));

result.Add(landmarksWorld.At<float>(i, 1));

result.Add(landmarksWorld.At<float>(i, 2));

}

// [130]: handedness

result.Add(handedness);

// [131]: confidence

result.Add(conf);

return result;

}

public void Dispose()

{

Dispose(true);

GC.SuppressFinalize(this);

}

protected virtual void Dispose(bool disposing)

{

if (!_disposed)

{

if (disposing)

{

model?.Dispose();

}

_disposed = true;

}

}

~MPHandPose()

{

Dispose(false);

}

}

}
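The angle computation in `Preprocess` and the matrix inversion in `Postprocess` above are plain 2D geometry, so they can be verified without a model. The sketch below is an illustration only: `HandRotationSketch` and its methods are hypothetical names, and `RotationMatrix` rebuilds the 2x3 layout documented for `cv::getRotationMatrix2D` with scale 1 rather than calling OpenCV.

```csharp
using System;

// Hypothetical helper reproducing the rotation math of MPHandPose; illustration only.
public static class HandRotationSketch
{
    // Rotation angle in degrees that turns the vector from the palm base (x1, y1)
    // to the middle-finger base (x2, y2) so that it points up in image coordinates.
    public static double UprightAngleDegrees(double x1, double y1, double x2, double y2)
    {
        double radians = Math.PI / 2 - Math.Atan2(-(y2 - y1), x2 - x1);
        // Wrap the angle into [-pi, pi)
        radians -= 2 * Math.PI * Math.Floor((radians + Math.PI) / (2 * Math.PI));
        return radians * 180.0 / Math.PI;
    }

    // 2x3 affine matrix for a rotation about (cx, cy), same layout as getRotationMatrix2D.
    public static double[,] RotationMatrix(double cx, double cy, double angleDegrees)
    {
        double a = Math.Cos(angleDegrees * Math.PI / 180.0);
        double b = Math.Sin(angleDegrees * Math.PI / 180.0);
        return new double[,]
        {
            { a, b, (1 - a) * cx - b * cy },
            { -b, a, b * cx + (1 - a) * cy }
        };
    }

    // Inverse of a rotation plus translation: transpose the 2x2 part,
    // then map the negated translation through that transpose.
    public static double[,] Invert(double[,] m)
    {
        double tx = -(m[0, 0] * m[0, 2] + m[1, 0] * m[1, 2]);
        double ty = -(m[0, 1] * m[0, 2] + m[1, 1] * m[1, 2]);
        return new double[,]
        {
            { m[0, 0], m[1, 0], tx },
            { m[0, 1], m[1, 1], ty }
        };
    }

    // Apply a 2x3 affine matrix to a point.
    public static (double x, double y) Apply(double[,] m, double x, double y)
    {
        return (m[0, 0] * x + m[0, 1] * y + m[0, 2],
                m[1, 0] * x + m[1, 1] * y + m[1, 2]);
    }
}
```

A hand that already points up (middle-finger base directly above the palm base) needs no rotation, and applying `Invert(m)` after `m` should return the starting point, which is the property `Postprocess` relies on when it maps the box center back.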

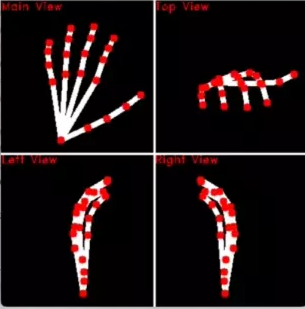

///HandPoseVisualizer.cs

using OpenCvSharp;

using System;

using System.Collections.Generic;

using System.Linq;

namespace yolo_world_opencvsharp_net4._8

{

public static class HandPoseVisualizer

{

public static (Mat, Mat) Visualize(Mat image, List<List<float>> hands, bool printResult = false)

{

Mat displayScreen = image.Clone();

Mat display3d = new Mat(400, 400, MatType.CV_8UC3, Scalar.Black);

// Draw 3D view axes

Cv2.Line(display3d, new Point(200, 0), new Point(200, 400), new Scalar(255, 255, 255), 2);

Cv2.Line(display3d, new Point(0, 200), new Point(400, 200), new Scalar(255, 255, 255), 2);

Cv2.PutText(display3d, "Main View", new Point(0, 12), HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

Cv2.PutText(display3d, "Top View", new Point(200, 12), HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

Cv2.PutText(display3d, "Left View", new Point(0, 212), HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

Cv2.PutText(display3d, "Right View", new Point(200, 212), HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

bool isDraw = false; // ensure only one hand is drawn

// Gesture classification

var gestureClassifier = new GestureClassification();

for (int idx = 0; idx < hands.Count; idx++)

{

var handpose = hands[idx];

if (handpose.Count < 132) continue;

float conf = handpose[131];

var bbox = new Rect(

(int)handpose[0], (int)handpose[1],

(int)(handpose[2] - handpose[0]),

(int)(handpose[3] - handpose[1])

);

float handedness = handpose[130];

string handednessText = handedness <= 0.5f ? "Left" : "Right";

// Extract landmarks

var landmarksScreen = new List<Point>();

for (int i = 0; i < 21; i++)

{

landmarksScreen.Add(new Point(

(int)handpose[4 + i * 3],

(int)handpose[4 + i * 3 + 1]

));

}

var landmarksWorld = new List<Point3f>();

for (int i = 0; i < 21; i++)

{

landmarksWorld.Add(new Point3f(

handpose[67 + i * 3],

handpose[67 + i * 3 + 1],

handpose[67 + i * 3 + 2]

));

}

// Classify gesture

string gesture = gestureClassifier.Classify(landmarksScreen);

// Print results

if (printResult)

{

Console.WriteLine($"-----------hand {idx + 1}-----------");

Console.WriteLine($"conf: {conf:F2}");

Console.WriteLine($"handedness: {handednessText}");

Console.WriteLine($"gesture: {gesture}");

Console.WriteLine($"hand box: [{bbox.X}, {bbox.Y}, {bbox.X + bbox.Width}, {bbox.Y + bbox.Height}]");

Console.WriteLine("hand landmarks: ");

for (int i = 0; i < landmarksScreen.Count; i++)

{

Console.WriteLine($"\t[{landmarksScreen[i].X}, {landmarksScreen[i].Y}, {handpose[4 + i * 3 + 2]:F2}]");

}

}

// Draw bounding box

Cv2.Rectangle(displayScreen, bbox, new Scalar(0, 255, 0), 2);

// Draw handedness and gesture

Cv2.PutText(displayScreen, handednessText, new Point(bbox.X, bbox.Y + 12),

HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

Cv2.PutText(displayScreen, gesture, new Point(bbox.X, bbox.Y + 30),

HersheyFonts.HersheyDuplex, 0.5, new Scalar(0, 0, 255));

// Draw hand skeleton

DrawHandSkeleton(displayScreen, landmarksScreen, false);

// Draw landmarks with depth-based size

for (int i = 0; i < landmarksScreen.Count; i++)

{

float depth = handpose[4 + i * 3 + 2];

int radius = Math.Max(5 - (int)(depth / 5), 0);

radius = Math.Min(radius, 14);

Cv2.Circle(displayScreen, landmarksScreen[i], radius, new Scalar(0, 0, 255), -1);

}

// Draw 3D views (only for first hand)

if (!isDraw)

{

isDraw = true;

Draw3DViews(display3d, landmarksWorld);

}

}

return (displayScreen, display3d);

}

private static void DrawHandSkeleton(Mat image, List<Point> landmarks, bool drawPoints = true, int thickness = 2)

{

// Define connections between landmarks

var connections = new List<(int, int)>

{

(0, 1), (1, 2), (2, 3), (3, 4), // Thumb

(0, 5), (5, 6), (6, 7), (7, 8), // Index finger

(0, 9), (9, 10), (10, 11), (11, 12), // Middle finger

(0, 13), (13, 14), (14, 15), (15, 16), // Ring finger

(0, 17), (17, 18), (18, 19), (19, 20) // Pinky finger

};

// Draw connections

foreach (var (start, end) in connections)

{

if (start < landmarks.Count && end < landmarks.Count)

{

Cv2.Line(image, landmarks[start], landmarks[end], new Scalar(255, 255, 255), thickness);

}

}

// Draw points

if (drawPoints)

{

foreach (var point in landmarks)

{

Cv2.Circle(image, point, thickness, new Scalar(0, 0, 255), -1);

}

}

}

private static void Draw3DViews(Mat display3d, List<Point3f> landmarksWorld)

{

// Main view (XY plane)

var landmarksXY = landmarksWorld.Select(p => new Point(

(int)(p.X * 1000 + 100),

(int)(p.Y * 1000 + 100)

)).ToList();

DrawHandSkeleton(display3d, landmarksXY, false, 5);

// Top view (XZ plane)

var landmarksXZ = landmarksWorld.Select(p => new Point(

(int)(p.X * 1000 + 300),

(int)(-p.Z * 1000 + 100)

)).ToList();

DrawHandSkeleton(display3d, landmarksXZ, false, 5);

// Left view (ZY plane)

var landmarksZY = landmarksWorld.Select(p => new Point(

(int)(-p.Z * 1000 + 100),

(int)(p.Y * 1000 + 300)

)).ToList();

DrawHandSkeleton(display3d, landmarksZY, false, 5);

// Right view (YZ plane)

var landmarksYZ = landmarksWorld.Select(p => new Point(

(int)(p.Z * 1000 + 300),

(int)(p.Y * 1000 + 300)

)).ToList();

DrawHandSkeleton(display3d, landmarksYZ, false, 5);

}

}

}
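The visualizer above reads the hand result by fixed offsets into a flat list of 132 floats. The layout, as written by `MPHandPose.Postprocess`, can be captured in a small accessor. This is a sketch only: `HandResultLayoutSketch` and its member names are hypothetical and not part of the original port.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical accessor for the 132-float hand result; illustration only.
public static class HandResultLayoutSketch
{
    public const int ScreenOffset = 4;       // [4..66]: 21 screen landmarks as (x, y, z)
    public const int WorldOffset = 67;       // [67..129]: 21 world landmarks as (x, y, z)
    public const int HandednessIndex = 130;  // <= 0.5 is labeled "Left" by the visualizer
    public const int ConfidenceIndex = 131;
    public const int Length = 132;

    // Screen landmark i in image pixels (z is the scaled relative depth).
    public static (float x, float y, float z) ScreenLandmark(IReadOnlyList<float> hand, int i)
    {
        int o = ScreenOffset + i * 3;
        return (hand[o], hand[o + 1], hand[o + 2]);
    }

    // World landmark i as produced by the model.
    public static (float x, float y, float z) WorldLandmark(IReadOnlyList<float> hand, int i)
    {
        int o = WorldOffset + i * 3;
        return (hand[o], hand[o + 1], hand[o + 2]);
    }

    // Same thresholding rule as HandPoseVisualizer.
    public static string Handedness(IReadOnlyList<float> hand)
    {
        return hand[HandednessIndex] <= 0.5f ? "Left" : "Right";
    }
}
```

Keeping the offsets in one place avoids repeating the literals 4, 67, 130 and 131 across the visualizer and the calling code.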

///GestureClassification.cs

using OpenCvSharp;

using System;

using System.Collections.Generic;

using System.Linq;

namespace yolo_world_opencvsharp_net4._8

{

public class GestureClassification

{

private double Vector2Angle(Point v1, Point v2)

{

double normV1 = Math.Sqrt(v1.X * v1.X + v1.Y * v1.Y);

double normV2 = Math.Sqrt(v2.X * v2.X + v2.Y * v2.Y);

if (normV1 == 0 || normV2 == 0) return 0;

double dotProduct = v1.X * v2.X + v1.Y * v2.Y;

double cosAngle = dotProduct / (normV1 * normV2);

cosAngle = Math.Max(-1, Math.Min(1, cosAngle)); // Clamp to avoid floating point errors

double angle = Math.Acos(cosAngle) * (180.0 / Math.PI);

return angle;

}

private List<double> HandAngle(List<Point> hand)

{

var angleList = new List<double>();

// thumb

var angle1 = Vector2Angle(

new Point(hand[0].X - hand[2].X, hand[0].Y - hand[2].Y),

new Point(hand[3].X - hand[4].X, hand[3].Y - hand[4].Y)

);

angleList.Add(angle1);

// index

var angle2 = Vector2Angle(

new Point(hand[0].X - hand[6].X, hand[0].Y - hand[6].Y),

new Point(hand[7].X - hand[8].X, hand[7].Y - hand[8].Y)

);

angleList.Add(angle2);

// middle

var angle3 = Vector2Angle(

new Point(hand[0].X - hand[10].X, hand[0].Y - hand[10].Y),

new Point(hand[11].X - hand[12].X, hand[11].Y - hand[12].Y)

);

angleList.Add(angle3);

// ring

var angle4 = Vector2Angle(

new Point(hand[0].X - hand[14].X, hand[0].Y - hand[14].Y),

new Point(hand[15].X - hand[16].X, hand[15].Y - hand[16].Y)

);

angleList.Add(angle4);

// pinky

var angle5 = Vector2Angle(

new Point(hand[0].X - hand[18].X, hand[0].Y - hand[18].Y),

new Point(hand[19].X - hand[20].X, hand[19].Y - hand[20].Y)

);

angleList.Add(angle5);

return angleList;

}

private List<bool> FingerStatus(List<Point> landmarks)

{

var fingerList = new List<bool>();

var origin = landmarks[0];

var keypointList = new List<(int, int)> { (5, 4), (6, 8), (10, 12), (14, 16), (18, 20) };

foreach (var (point1, point2) in keypointList)

{

var p1 = landmarks[point1];

var p2 = landmarks[point2];

double dist1 = Math.Sqrt(Math.Pow(p1.X - origin.X, 2) + Math.Pow(p1.Y - origin.Y, 2));

double dist2 = Math.Sqrt(Math.Pow(p2.X - origin.X, 2) + Math.Pow(p2.Y - origin.Y, 2));

fingerList.Add(dist2 > dist1);

}

return fingerList;

}

private string ClassifyGesture(List<Point> hand)

{

double thrAngle = 65.0;

double thrAngleThumb = 30.0;

double thrAngleS = 49.0;

string gestureStr = "Undefined";

var angleList = HandAngle(hand);

var fingerStatus = FingerStatus(hand);

bool thumbOpen = fingerStatus[0];

bool firstOpen = fingerStatus[1];

bool secondOpen = fingerStatus[2];

bool thirdOpen = fingerStatus[3];

bool fourthOpen = fingerStatus[4];

// Number gestures

if (angleList[0] > thrAngleThumb && angleList[1] > thrAngle && angleList[2] > thrAngle &&

angleList[3] > thrAngle && angleList[4] > thrAngle &&

!firstOpen && !secondOpen && !thirdOpen && !fourthOpen)

{

gestureStr = "Zero";

}

else if (angleList[0] > thrAngleThumb && angleList[1] < thrAngleS && angleList[2] > thrAngle &&

angleList[3] > thrAngle && angleList[4] > thrAngle &&

firstOpen && !secondOpen && !thirdOpen && !fourthOpen)

{

gestureStr = "One";

}

else if (angleList[0] > thrAngleThumb && angleList[1] < thrAngleS && angleList[2] < thrAngleS &&

angleList[3] > thrAngle && angleList[4] > thrAngle &&

!thumbOpen && firstOpen && secondOpen && !thirdOpen && !fourthOpen)

{

gestureStr = "Two";

}

else if (angleList[0] > thrAngleThumb && angleList[1] < thrAngleS && angleList[2] < thrAngleS &&

angleList[3] < thrAngleS && angleList[4] > thrAngle &&

!thumbOpen && firstOpen && secondOpen && thirdOpen && !fourthOpen)

{

gestureStr = "Three";

}

else if (angleList[0] > thrAngleThumb && angleList[1] < thrAngleS && angleList[2] < thrAngleS &&

angleList[3] < thrAngleS && angleList[4] < thrAngle &&

firstOpen && secondOpen && thirdOpen && fourthOpen)

{

gestureStr = "Four";

}

else if (angleList[0] < thrAngleS && angleList[1] < thrAngleS && angleList[2] < thrAngleS &&

angleList[3] < thrAngleS && angleList[4] < thrAngleS &&

thumbOpen && firstOpen && secondOpen && thirdOpen && fourthOpen)

{

gestureStr = "Five";

}

// Add more gesture classifications as needed...

return gestureStr;

}

public string Classify(List<Point> landmarks)

{

if (landmarks.Count < 21) return "Undefined";

return ClassifyGesture(landmarks);

}

}

}
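The classifier above combines two per-finger measurements: the angle between the vector from the lower joint to the wrist and the vector from the fingertip to the joint below it, and a check of whether the fingertip is farther from the wrist than a reference joint. A minimal standalone sketch of both follows, assuming hypothetical names (`GestureAngleSketch`, `AngleDegrees`, `IsOpen`) and plain doubles instead of OpenCvSharp points.

```csharp
using System;

// Hypothetical helper reproducing the two measurements used by GestureClassification.
public static class GestureAngleSketch
{
    // Angle in degrees between vectors (x1, y1) and (x2, y2); 0 if either has zero length.
    public static double AngleDegrees(double x1, double y1, double x2, double y2)
    {
        double n1 = Math.Sqrt(x1 * x1 + y1 * y1);
        double n2 = Math.Sqrt(x2 * x2 + y2 * y2);
        if (n1 == 0 || n2 == 0) return 0;
        double cos = (x1 * x2 + y1 * y2) / (n1 * n2);
        cos = Math.Max(-1, Math.Min(1, cos)); // guard Acos against rounding error
        return Math.Acos(cos) * (180.0 / Math.PI);
    }

    // A finger counts as open when its tip is farther from the wrist than the reference joint.
    public static bool IsOpen(double wristX, double wristY,
                              double jointX, double jointY,
                              double tipX, double tipY)
    {
        double jointDist = Math.Sqrt(Math.Pow(jointX - wristX, 2) + Math.Pow(jointY - wristY, 2));
        double tipDist = Math.Sqrt(Math.Pow(tipX - wristX, 2) + Math.Pow(tipY - wristY, 2));
        return tipDist > jointDist;
    }
}
```

For a straight finger the two vectors are parallel, so the angle is close to 0 and falls under the 49 degree threshold (`thrAngleS`). A curled finger gives a large angle, above the 65 degree threshold (`thrAngle`).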

Usage example:

private void button3_Click(object sender, EventArgs e)

{

// Full hand pose pipeline: palm detection, then landmark estimation per palm

string palmModelPath = @"D:\opencvsharp\1\opencv_zoo-main\opencv_zoo-main\models\palm_detection_mediapipe\palm_detection_mediapipe_2023feb.onnx";

string handPoseModelPath = @"D:\opencvsharp\1\opencv_zoo-main\opencv_zoo-main\models\handpose_estimation_mediapipe\handpose_estimation_mediapipe_2023feb.onnx";

using (var palmDetector = new PalmDetector(palmModelPath, 0.3f, 0.5f))

using (var handPoseDetector = new MPHandPose(handPoseModelPath, 0.7f))

using (var capture = new VideoCapture(0))

{

while (true)

{

using (var frame = new Mat())

{

capture.Read(frame);

if (frame.Empty()) break;

// Palm detection

var palms = palmDetector.Infer(frame);

var hands = new List<List<float>>();

// Run pose estimation on each detected palm

foreach (var palm in palms)

{

var handPose = handPoseDetector.Infer(frame, palm);

if (handPose != null && handPose.Count >= 132)

{

hands.Add(handPose);

}

}

// Visualize the results

var (displayScreen, display3d) = HandPoseVisualizer.Visualize(frame, hands);

Cv2.ImShow("Hand Pose Detection", displayScreen);

Cv2.ImShow("3D Hand Pose", display3d);

displayScreen.Dispose();

display3d.Dispose();

if (Cv2.WaitKey(1) >= 0) break;

}

}

// Close the preview windows once the loop ends

Cv2.DestroyAllWindows();

}

}