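Preface

In modern microservice architectures, asynchronous communication is not just an option; it is the backbone of scalable, resilient systems. But let's face it: working directly with message brokers such as RabbitMQ or Kafka often means drowning in boilerplate code, broker-specific configuration, and operational complexity.

Spring Cloud Stream is a game-changing framework that turns messaging from a technical hurdle into a strategic advantage. With its elegant abstraction layer, you can implement robust event-driven communication between services without tying your code to a specific broker.

In this article, I will show how Spring Cloud Stream let me:

1. Build broker-agnostic producers/consumers using the same code for RabbitMQ and Kafka.

2. Implement enterprise-grade messaging patterns (retries, DLQ) in 3 lines of configuration.

3. Switch messaging technology by changing a single environment variable.

4. Stay focused on business logic while the framework handles the messaging plumbing.

Whether you are handling payment-processing events, real-time analytics, or IoT data streams, Spring Cloud Stream turns asynchronous communication from a challenge into a superpower for your system. Let's dive in!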

Demo Flow

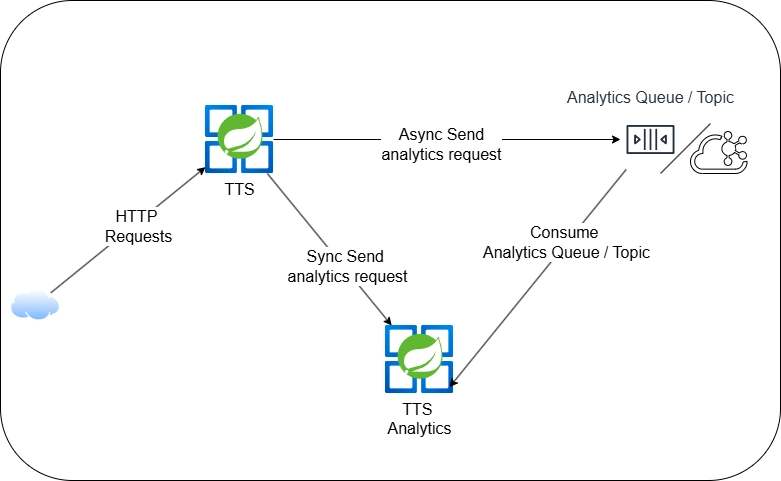

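To demonstrate the points above, we will use two Spring Boot applications, both able to communicate asynchronously and synchronously.

1. TTS, which exposes an endpoint that converts user-supplied text to speech using the FreeTTS Java library.

2. TTS Analytics, a microservice that analyzes the user's IP address and user agent to provide device and country information (in this lab we only simulate this behavior). It also receives Post TTS Analytics messages through a queue (with RabbitMQ) or a topic (with Kafka) for post-processing scenarios that a real application might encounter.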

Key Components

    ┌──────────────────────────────┐
    │        Business Logic        │
    └──────────────┬───────────────┘
                   │
    ┌──────────────▼───────────────┐
    │     Spring Cloud Stream      │
    │  ┌────────────────────────┐  │
    │  │      StreamBridge      │◄───SEND
    │  └────────────────────────┘  │
    │  ┌────────────────────────┐  │
    │  │    @Bean Supplier/     │◄───POLL
    │  │        Consumer        │  │
    │  └────────────────────────┘  │
    └──────────────┬───────────────┘
                   │
    ┌──────────────▼───────────────┐
    │           Binders            │
    │  ┌───────┐    ┌───────┐      │
    │  │Rabbit │    │ Kafka │ ...  │
    │  │MQ     │    │       │      │
    │  └───────┘    └───────┘      │
    └──────────────┬───────────────┘
                   │
    ┌──────────────▼───────────────┐
    │        Message Broker        │
    │   (RabbitMQ/Kafka/PubSub)    │
    └──────────────────────────────┘

Key terms in the Spring Cloud Stream world include, but are not limited to:

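1. Binder: a pluggable connector for a specific broker (RabbitMQ/Kafka).

2. Binding: the configuration that links your code to a broker destination.

3. Destination: the logical address of messages (exchange/topic).

4. Message channel: a virtual pipe (input/output).

5. StreamBridge: a utility for sending messages imperatively.

6. Supplier/Consumer: the functional interfaces used for streams.

7. Consumer group: the scaling mechanism for parallel processing.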

Message flow (producer → consumer)

    PRODUCER SIDE
    ┌───────────┐       ┌───────────┐       ┌───────────┐
    │ Business  │       │  Stream   │       │  Message  │
    │  Logic    ├──────►│  Bridge   ├──────►│  Broker   │
    │           │       │           │       │           │
    └───────────┘       └───────────┘       └─────┬─────┘
                                                  │
    CONSUMER SIDE                                 ▼
    ┌───────────┐       ┌───────────┐       ┌───────────┐
    │  @Bean    │       │  Spring   │       │  Message  │
    │ Consumer  │◄──────┤  Cloud    │◄──────┤  Broker   │
    │           │       │  Stream   │       │           │
    └───────────┘       └───────────┘       └───────────┘

The business logic may include several methods or services that need to send messages to our exchange/topic, which is a perfect use case for StreamBridge. The consumer application then receives messages from the channel and processes them through a method that returns a Consumer, which handles each message/event.

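Scaling with Consumer Groups

               ┌───────────────────────┐
               │    Message Broker     │
               │ (post-tts-analytics)  │
               └───────────┬───────────┘
                           │
               ┌───────────▼───────────┐
               │    Consumer Group     │
               │   "analytics-group"   │
               └───────────┬───────────┘
             ┌─────────────┼─────────────┐
    ┌────────▼───┐   ┌─────▼─────┐   ┌───▼───────┐
    │ Consumer   │   │ Consumer  │   │ Consumer  │
    │ Instance 1 │   │ Instance2 │   │ Instance3 │
    └────────────┘   └───────────┘   └───────────┘

In real-world scenarios we may run multiple instances of the consumer application. These instances end up as competing consumers, and we expect each message to be processed by only one of them. This is where consumer groups matter. Each consumer binding can specify a group name; every group subscribed to a given destination receives a copy of each message/event, and only one instance/member within that group receives it.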
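The group semantics described above can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: `ConsumerGroupSketch`, the `audit-group` name, and the round-robin choice of instance are hypothetical and are not Spring Cloud Stream code; a real broker decides which group member receives a message (for example by queue dispatch in RabbitMQ or by partition in Kafka).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of one destination with consumer groups.
class ConsumerGroupSketch {
    // group name -> inboxes of the instances subscribed under that group
    private final Map<String, List<List<String>>> groups = new LinkedHashMap<>();
    // group name -> number of messages delivered so far (drives round-robin)
    private final Map<String, Integer> delivered = new LinkedHashMap<>();

    // Registers one instance in a group and returns the inbox that records what it receives.
    List<String> subscribe(String group) {
        List<String> inbox = new ArrayList<>();
        groups.computeIfAbsent(group, g -> new ArrayList<>()).add(inbox);
        delivered.putIfAbsent(group, 0);
        return inbox;
    }

    // Every group gets a copy; inside a group exactly one instance receives it.
    void publish(String message) {
        for (Map.Entry<String, List<List<String>>> entry : groups.entrySet()) {
            List<List<String>> instances = entry.getValue();
            int count = delivered.get(entry.getKey());
            instances.get(count % instances.size()).add(message); // assumed round-robin
            delivered.put(entry.getKey(), count + 1);
        }
    }

    public static void main(String[] args) {
        ConsumerGroupSketch destination = new ConsumerGroupSketch();
        List<String> instance1 = destination.subscribe("analytics-group");
        List<String> instance2 = destination.subscribe("analytics-group");
        List<String> audit = destination.subscribe("audit-group"); // hypothetical second group
        destination.publish("event-1");
        destination.publish("event-2");
        // prints "[event-1] [event-2] [event-1, event-2]"
        System.out.println(instance1 + " " + instance2 + " " + audit);
    }
}
```

The two instances of analytics-group split the events between them, while the second group still sees every event.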

Binder Abstraction Layer

    ┌───────────────────────────────────┐
    │       Your Application Code       │
    │  ┌──────────────────────────────┐ │
    │  │     Spring Cloud Stream      │ │
    │  │  ┌───────────────────────┐   │ │
    │  │  │       Bindings        │   │ │
    │  │  │ (Logical Destinations)│   │ │
    │  │  └───────────┬───────────┘   │ │
    │  └──────────────│───────────────┘ │
    │                 ▼                 │
    │  ┌──────────────────────────────┐ │
    │  │        Binder Bridge         │ │
    │  └──────────────┬───────────────┘ │
    └─────────────────│─────────────────┘
                      │
           ┌──────────┴─────────────┐
    ┌──────▼─────────┐    ┌─────────▼───────┐
    │    RabbitMQ    │    │      Kafka      │
    │ Implementation │    │ Implementation  │
    └────────────────┘    └─────────────────┘

The Binder Service Provider Interface (SPI) is the foundation of Spring Cloud Stream's broker independence. It defines a contract that connects application logic to the messaging system while abstracting away broker-specific details.

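    // Simplified view of org.springframework.cloud.stream.binder.Binder; the real
    // interface is also parameterized by the consumer and producer property types.
    public interface Binder<T> {

        Binding<T> bindProducer(String name, T outboundTarget,
                                ProducerProperties producerProperties);

        Binding<T> bindConsumer(String name, String group,
                                T inboundTarget,
                                ConsumerProperties consumerProperties);
    }

1. bindProducer(): connects an output channel to a broker destination.

2. bindConsumer(): links an input channel to a broker queue/topic.

The Kafka binder implementation maps each destination to a Kafka topic and each consumer group to a Kafka consumer group. The RabbitMQ binder implementation maps each destination to a TopicExchange and, for each consumer group, binds a queue to that TopicExchange.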

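Producer Application

    import org.springframework.cloud.stream.function.StreamBridge;
    import org.springframework.stereotype.Service;

    @Service
    public class PostTtsAnalyticsPublisherService {

        private final StreamBridge streamBridge;

        // Constructor injection; without it the final field is never initialized.
        public PostTtsAnalyticsPublisherService(StreamBridge streamBridge) {
            this.streamBridge = streamBridge;
        }

        public void sendAnalytics(PostTtsAnalyticsRequest request) {
            // The binding name is resolved to a broker destination through configuration.
            streamBridge.send("postTtsAnalytics-out-0", request);
        }
    }

1. Imperative sending: StreamBridge allows ad hoc messages to be sent from any business logic.

2. Binding abstraction: postTtsAnalytics-out-0 is mapped to a broker destination through configuration.

3. Zero broker-specific code: no dependency on the RabbitMQ/Kafka APIs.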

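    spring:
      cloud:
        stream:
          bindings:
            postTtsAnalytics-out-0:
              destination: post-tts-analytics # Exchange/topic name
              content-type: application/json
          default-binder: ${BINDER_TYPE:rabbit} # Magic switch

1. Binding name: postTtsAnalytics-out-0 defines the output channel used to send messages and follows the usual <functionName>-out-<index> convention.

2. destination: post-tts-analytics specifies the target destination in the messaging system. For RabbitMQ this is an exchange named post-tts-analytics; for Kafka, a topic with the same name.

3. content-type: application/json indicates that messages sent through this binding are serialized as JSON. Spring Cloud Stream uses it to handle message conversion appropriately.

4. default-binder: ${BINDER_TYPE:rabbit} sets the default binder used for messaging. If the BINDER_TYPE environment variable is set, its value is used; otherwise the default is rabbit. This flexibility makes it easy to switch between messaging systems (such as RabbitMQ or Kafka) without changing the code base.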
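To make the `${BINDER_TYPE:rabbit}` placeholder concrete, here is a minimal sketch of the "use the variable if present, otherwise fall back" rule. `BinderTypeSketch` and `resolveBinder` are hypothetical names; Spring actually resolves placeholders against its whole Environment (system properties, environment variables, configuration files), so this only models the environment-variable case.

```java
import java.util.Map;

// Hypothetical helper that models ${BINDER_TYPE:rabbit} for environment variables only.
class BinderTypeSketch {

    // Returns the value of BINDER_TYPE when it is defined, otherwise the default "rabbit".
    static String resolveBinder(Map<String, String> env) {
        String value = env.get("BINDER_TYPE");
        return value == null ? "rabbit" : value;
    }

    public static void main(String[] args) {
        // Uses the real process environment; the result depends on how the JVM was started.
        System.out.println("default binder: " + resolveBinder(System.getenv()));
    }
}
```

Starting the process with BINDER_TYPE=kafka makes the sketch return kafka, which mirrors how the same build can target either broker.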

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-stream-binder-rabbit</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-stream-binder-kafka</artifactId>
    </dependency>

With the binder dependencies for both Kafka and RabbitMQ on the classpath, we can switch between Kafka and RabbitMQ without changing any code.

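Consumer Application

    import java.util.function.Consumer;
    import org.springframework.context.annotation.Bean;
    import org.springframework.stereotype.Service;

    @Service
    public class PostTtsAnalyticsConsumerService {

        // The bean name (postTtsAnalytics) is what the postTtsAnalytics-in-0 binding refers to.
        @Bean
        public Consumer<PostTtsAnalyticsRequest> postTtsAnalytics() {
            return this::processAnalytics;
        }

        public void processAnalytics(PostTtsAnalyticsRequest request) {
            // Business logic for the received event goes here (not shown in the original).
        }
    }

1. Declarative consumption: a functional Consumer bean handles incoming messages.

2. Automatic binding: the method name postTtsAnalytics matches the binding configuration.

3. Retry/DLQ support: configured through simple YAML.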

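    spring:
      cloud:
        stream:
          bindings:
            postTtsAnalytics-in-0:
              destination: post-tts-analytics
              content-type: application/json
              group: analytics-group
              consumer:
                max-attempts: 3 # Total attempts, including the first one
                back-off-initial-interval: 1000 # Initial retry delay in ms
          default-binder: ${BINDER_TYPE:rabbit} # Magic switch

1. Binding name: postTtsAnalytics-in-0 defines an input binding, meaning this application consumes messages. The naming convention is usually <functionName>-in-<index>.

2. destination: post-tts-analytics specifies the target destination in the messaging system. For RabbitMQ this corresponds to an exchange named post-tts-analytics; for Kafka, a topic with the same name.

3. group: analytics-group defines a consumer group. In Kafka, this ensures that messages are distributed among the consumers in the same group. In RabbitMQ, it results in a shared queue for the group.

4. max-attempts: 3 means the application tries to process a message at most three times before treating it as failed (the initial attempt plus two retries).

5. back-off-initial-interval: 1000 sets the initial delay between retry attempts, in milliseconds. Here there is a 1-second delay before the first retry.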
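The retry settings above translate into a concrete waiting schedule. The sketch below computes it under stated assumptions: an exponential back-off with a multiplier of 2.0 and a cap of 10000 ms, which is my understanding of the framework defaults for back-off-multiplier and back-off-max-interval (neither is set in the configuration shown). `RetryScheduleSketch` is a hypothetical helper, not part of Spring Cloud Stream.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that lists the delays between attempts for an exponential back-off.
class RetryScheduleSketch {

    // maxAttempts counts the first try, so there are maxAttempts - 1 delays.
    static List<Long> delays(int maxAttempts, long initialMs, double multiplier, long maxMs) {
        List<Long> result = new ArrayList<>();
        long current = initialMs;
        for (int retry = 1; retry < maxAttempts; retry++) {
            result.add(Math.min(current, maxMs)); // never wait longer than the cap
            current = (long) (current * multiplier);
        }
        return result;
    }

    public static void main(String[] args) {
        // max-attempts: 3, back-off-initial-interval: 1000, assumed multiplier 2.0 and cap 10000
        System.out.println(delays(3, 1000, 2.0, 10000)); // prints [1000, 2000]
    }
}
```

Under these assumptions a message that keeps failing is given up on roughly three seconds after the first attempt.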

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-stream-binder-rabbit</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-stream-binder-kafka</artifactId>
    </dependency>

Testing Strategy

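    import java.time.LocalDateTime;
    import java.util.Objects;
    import org.junit.jupiter.api.Test;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.cloud.stream.binder.test.OutputDestination;
    import org.springframework.cloud.stream.binder.test.TestChannelBinderConfiguration;
    import org.springframework.context.annotation.Import;
    import org.springframework.messaging.converter.CompositeMessageConverter;

    @SpringBootTest
    @Import(TestChannelBinderConfiguration.class)
    class PostTtsAnalyticsPublisherServiceTest {

        @Autowired
        private OutputDestination outputDestination;

        @Autowired
        private CompositeMessageConverter converter;

        @Autowired
        private PostTtsAnalyticsPublisherService analyticsService;

        @Test
        void testSendMessage() {
            PostTtsAnalyticsRequest request = new PostTtsAnalyticsRequest(
                    "123", LocalDateTime.now(), 1500
            );

            analyticsService.sendAnalytics(request);

            // Read what the service published to the in-memory test binder.
            var message = outputDestination.receive(1000, "post-tts-analytics");
            assert message != null;

            PostTtsAnalyticsRequest received = (PostTtsAnalyticsRequest)
                    converter.fromMessage(message, PostTtsAnalyticsRequest.class);
            assert Objects.requireNonNull(received).id().equals(request.id());
        }
    }

This test verifies that PostTtsAnalyticsPublisherService publishes messages as expected, checking both the send mechanism and the message content.

1. @Import(TestChannelBinderConfiguration.class): imports TestChannelBinderConfiguration, which sets up the in-memory test binder provided by Spring Cloud Stream. This binder simulates message-broker interaction inside the JVM, so no real broker is needed during tests.

2. OutputDestination: an abstraction provided by the test binder that captures the messages sent by the application. It lets the test retrieve messages that would otherwise have gone to an external message broker.

3. CompositeMessageConverter: a composition of several MessageConverter instances. It converts message payloads between formats based on the content type, for example JSON to POJO.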

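    import static org.mockito.Mockito.doThrow;
    import static org.mockito.Mockito.timeout;
    import static org.mockito.Mockito.verify;

    import java.time.LocalDateTime;
    import org.junit.jupiter.api.Test;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.cloud.stream.binder.test.InputDestination;
    import org.springframework.cloud.stream.binder.test.TestChannelBinderConfiguration;
    import org.springframework.context.annotation.Import;
    import org.springframework.messaging.support.GenericMessage;
    import org.springframework.test.context.bean.override.mockito.MockitoSpyBean;

    @SpringBootTest
    @Import(TestChannelBinderConfiguration.class)
    class PostTtsAnalyticsConsumerServiceTest {

        @Autowired
        private InputDestination inputDestination;

        @MockitoSpyBean
        private PostTtsAnalyticsConsumerService consumerService;

        @Test
        void testReceiveMessage() {
            PostTtsAnalyticsRequest request = new PostTtsAnalyticsRequest(
                    "456", LocalDateTime.now(), 2000
            );

            inputDestination.send(new GenericMessage<>(request), "post-tts-analytics");

            // Verify the handler was called
            verify(consumerService, timeout(1000)).processAnalytics(request);
        }

        @Test
        void testRetryMechanism() throws Exception {
            PostTtsAnalyticsRequest request = new PostTtsAnalyticsRequest(
                    "456", LocalDateTime.now(), 2000
            );

            // Mock failure scenario
            doThrow(new RuntimeException("Simulated processing failure"))
                    .when(consumerService).processAnalytics(request);

            // Send test message
            inputDestination.send(new GenericMessage<>(request), "post-tts-analytics");

            // Verify retry attempts (max-attempts: 3)
            verify(consumerService, timeout(4000).times(3)).processAnalytics(request);
        }
    }

1. testReceiveMessage() verifies that the consumer handles an incoming message correctly. testRetryMechanism() tests the consumer's retry mechanism when message processing fails.

2. InputDestination: an abstraction provided by the test binder that sends messages to the application, simulating incoming messages from a message broker.

3. @MockitoSpyBean: creates a spy of the PostTtsAnalyticsConsumerService bean, so the test can verify interactions with its methods while keeping the original behavior.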

RabbitMQ Demo

We run both applications without setting the BINDER_TYPE environment variable.


. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v3.4.5)

2025-05-25T21:20:41.345+01:00 INFO 15156 --- [tts] [ main] com.example.tts.TtsApplication : No active profile set, falling back to 1 default profile: "default"

2025-05-25T21:20:42.657+01:00 INFO 15156 --- [tts] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'errorChannel' has been explicitly defined. Therefore, a default PublishSubscribeChannel will be created.

2025-05-25T21:20:42.666+01:00 INFO 15156 --- [tts] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'integrationHeaderChannelRegistry' has been explicitly defined. Therefore, a default DefaultHeaderChannelRegistry will be created.

2025-05-25T21:20:43.297+01:00 INFO 15156 --- [tts] [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port 8080 (http)

2025-05-25T21:20:43.312+01:00 INFO 15156 --- [tts] [ main] o.apache.catalina.core.StandardService : Starting service [Tomcat]

2025-05-25T21:20:43.313+01:00 INFO 15156 --- [tts] [ main] o.apache.catalina.core.StandardEngine : Starting Servlet engine: [Apache Tomcat/10.1.40]

2025-05-25T21:20:43.387+01:00 INFO 15156 --- [tts] [ main] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring embedded WebApplicationContext

2025-05-25T21:20:43.387+01:00 INFO 15156 --- [tts] [ main] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 1977 ms

2025-05-25T21:20:45.676+01:00 INFO 15156 --- [tts] [ main] o.s.i.endpoint.EventDrivenConsumer : Adding {logging-channel-adapter:_org.springframework.integration.errorLogger} as a subscriber to the 'errorChannel' channel

2025-05-25T21:20:45.676+01:00 INFO 15156 --- [tts] [ main] o.s.i.channel.PublishSubscribeChannel : Channel 'tts.errorChannel' has 1 subscriber(s).

2025-05-25T21:20:45.676+01:00 INFO 15156 --- [tts] [ main] o.s.i.endpoint.EventDrivenConsumer : started bean '_org.springframework.integration.errorLogger'

2025-05-25T21:20:45.767+01:00 INFO 15156 --- [tts] [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port 8080 (http) with context path '/'

2025-05-25T21:20:45.794+01:00 INFO 15156 --- [tts] [ main] com.example.tts.TtsApplication : Started TtsApplication in 5.064 seconds (process running for 5.525)

Above is the startup log of the producer application with the default rabbit binder. We can see the following:

1. Spring Integration channels are auto-configured (errorChannel, integrationHeaderChannelRegistry).

2. There are no explicit RabbitMQ connection logs (the binder is initialized lazily).

3. An implicit errorChannel subscriber is registered for logging.


. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v3.4.5)

2025-05-25T21:20:46.613+01:00 INFO 23932 --- [tts-analytics] [ main] c.e.t.TtsAnalyticsApplication : No active profile set, falling back to 1 default profile: "default"

2025-05-25T21:20:47.894+01:00 INFO 23932 --- [tts-analytics] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'errorChannel' has been explicitly defined. Therefore, a default PublishSubscribeChannel will be created.

2025-05-25T21:20:47.903+01:00 INFO 23932 --- [tts-analytics] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'integrationHeaderChannelRegistry' has been explicitly defined. Therefore, a default DefaultHeaderChannelRegistry will be created.

2025-05-25T21:20:48.520+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port 8090 (http)

2025-05-25T21:20:48.535+01:00 INFO 23932 --- [tts-analytics] [ main] o.apache.catalina.core.StandardService : Starting service [Tomcat]

2025-05-25T21:20:48.535+01:00 INFO 23932 --- [tts-analytics] [ main] o.apache.catalina.core.StandardEngine : Starting Servlet engine: [Apache Tomcat/10.1.40]

2025-05-25T21:20:48.599+01:00 INFO 23932 --- [tts-analytics] [ main] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring embedded WebApplicationContext

2025-05-25T21:20:48.599+01:00 INFO 23932 --- [tts-analytics] [ main] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 1913 ms

2025-05-25T21:20:50.354+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.s.m.DirectWithAttributesChannel : Channel 'tts-analytics.postTtsAnalytics-in-0' has 1 subscriber(s).

2025-05-25T21:20:50.479+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.i.endpoint.EventDrivenConsumer : Adding {logging-channel-adapter:_org.springframework.integration.errorLogger} as a subscriber to the 'errorChannel' channel

2025-05-25T21:20:50.479+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.i.channel.PublishSubscribeChannel : Channel 'tts-analytics.errorChannel' has 1 subscriber(s).

2025-05-25T21:20:50.483+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.i.endpoint.EventDrivenConsumer : started bean '_org.springframework.integration.errorLogger'

2025-05-25T21:20:50.558+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Creating binder: rabbit

2025-05-25T21:20:50.558+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Constructing binder child context for rabbit

2025-05-25T21:20:50.760+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Caching the binder: rabbit

2025-05-25T21:20:50.790+01:00 INFO 23932 --- [tts-analytics] [ main] c.s.b.r.p.RabbitExchangeQueueProvisioner : declaring queue for inbound: post-tts-analytics.analytics-group, bound to: post-tts-analytics

2025-05-25T21:20:50.804+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.a.r.c.CachingConnectionFactory : Attempting to connect to: [localhost:5672]

2025-05-25T21:20:50.876+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.a.r.c.CachingConnectionFactory : Created new connection: rabbitConnectionFactory#2055833f:0/SimpleConnection@1ff463bb [delegate=amqp://guest@127.0.0.1:5672/, localPort=54933]

2025-05-25T21:20:50.964+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.stream.binder.BinderErrorChannel : Channel 'rabbit-230456842.postTtsAnalytics-in-0.errors' has 1 subscriber(s).

2025-05-25T21:20:50.966+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.c.stream.binder.BinderErrorChannel : Channel 'rabbit-230456842.postTtsAnalytics-in-0.errors' has 2 subscriber(s).

2025-05-25T21:20:50.997+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.i.a.i.AmqpInboundChannelAdapter : started bean 'inbound.post-tts-analytics.analytics-group'

2025-05-25T21:20:51.020+01:00 INFO 23932 --- [tts-analytics] [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port 8090 (http) with context path '/'

2025-05-25T21:20:51.047+01:00 INFO 23932 --- [tts-analytics] [ main] c.e.t.TtsAnalyticsApplication : Started TtsAnalyticsApplication in 5.096 seconds (process running for 5.797)

For the consumer application, the startup log shows:

1. A binding to the RabbitMQ queue post-tts-analytics.analytics-group.

2. A connection created to localhost:5672.

3. AmqpInboundChannelAdapter: the message listener has started (bean 'inbound.post-tts-analytics.analytics-group').

4. A BinderErrorChannel with 2 subscribers (retry + DLQ handling).


/ # rabbitmqctl list_connections name user state protocol

Listing connections ...

name user state protocol

172.17.0.1:43720 -> 172.17.0.2:5672 guest running {0,9,1}

/ # rabbitmqctl list_channels name connection user confirm consumer_count

Listing channels ...

name connection user confirm consumer_count

172.17.0.1:43720 -> 172.17.0.2:5672 (1) <rabbit@6332dec50309.1748204432.670.0> guest false 1

/ # rabbitmqctl list_exchanges name type durable auto_delete

Listing exchanges for vhost / ...

name type durable auto_delete

post-tts-analytics topic true false

amq.match headers true false

amq.fanout fanout true false

amq.rabbitmq.trace topic true false

amq.headers headers true false

direct true false

amq.topic topic true false

amq.direct direct true false

/ # rabbitmqctl list_queues name durable auto_delete consumers state

Timeout: 60.0 seconds ...

Listing queues for vhost / ...

name durable auto_delete consumers state

post-tts-analytics.analytics-group true false 1 running

/ # rabbitmqctl list_bindings source_name routing_key destination_name

Listing bindings for vhost /...

source_name routing_key destination_name

post-tts-analytics.analytics-group post-tts-analytics.analytics-group

post-tts-analytics # post-tts-analytics.analytics-group

We can see that all the RabbitMQ resources were created correctly by Spring Cloud Stream.


2025-05-25T21:38:52.121+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] c.e.t.controller.TextToSpeechController : textToSpeech request: TtsRequest[text=Hi this is a complete test. From RabbitMQ]

2025-05-25T21:38:52.809+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] c.e.tts.service.TextToSpeechService : do Something with analytics response: TtsAnalyticsResponse[device=Bot, countryIso=ZA]

Wrote synthesized speech to \tts\output\14449f58-7140-45a5-b024-26021229dfb3.wav

2025-05-25T21:38:53.063+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] c.e.t.m.PostTtsAnalyticsPublisherService : sendAnalytics: PostTtsAnalyticsRequest[id=14449f58-7140-45a5-b024-26021229dfb3, creationDate=2025-05-25T21:38:53.063039900, processTimeMs=937]

2025-05-25T21:38:53.068+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.c.s.binder.DefaultBinderFactory : Creating binder: rabbit

2025-05-25T21:38:53.068+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.c.s.binder.DefaultBinderFactory : Constructing binder child context for rabbit

2025-05-25T21:38:53.188+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.c.s.binder.DefaultBinderFactory : Caching the binder: rabbit

2025-05-25T21:38:53.207+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.a.r.c.CachingConnectionFactory : Attempting to connect to: [localhost:5672]

2025-05-25T21:38:53.255+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.a.r.c.CachingConnectionFactory : Created new connection: rabbitConnectionFactory#54463380:0/SimpleConnection@18b2124b [delegate=amqp://guest@127.0.0.1:5672/, localPort=55076]

2025-05-25T21:38:53.293+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.c.s.m.DirectWithAttributesChannel : Channel 'tts.postTtsAnalytics-out-0' has 1 subscriber(s).

2025-05-25T21:38:53.311+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.a.r.c.CachingConnectionFactory : Attempting to connect to: [localhost:5672]

2025-05-25T21:38:53.319+01:00 INFO 15156 --- [tts] [nio-8080-exec-1] o.s.a.r.c.CachingConnectionFactory : Created new connection: rabbitConnectionFactory.publisher#105dbd15:0/SimpleConnection@7243df50 [delegate=amqp://guest@127.0.0.1:5672/, localPort=55077]

2025-05-25T21:38:55.309+01:00 INFO 15156 --- [tts] [nio-8080-exec-3] c.e.t.controller.TextToSpeechController : textToSpeech request: TtsRequest[text=Hi this is a complete test. From RabbitMQ]

2025-05-25T21:38:55.315+01:00 INFO 15156 --- [tts] [nio-8080-exec-3] c.e.tts.service.TextToSpeechService : do Something with analytics response: TtsAnalyticsResponse[device=Tablet, countryIso=AU]

Wrote synthesized speech to tts\output\a4021436-fd12-486b-9d65-cff25c5611c5.wav

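2025-05-25T21:38:55.400+01:00 INFO 15156 --- [tts] [nio-8080-exec-3] c.e.t.m.PostTtsAnalyticsPublisherService : sendAnalytics: PostTtsAnalyticsRequest[id=a4021436-fd12-486b-9d65-cff25c5611c5, creationDate=2025-05-25T21:38:55.400422300, processTimeMs=91]

By calling the "/tts" endpoint of the TTS application, we can see the following:

1. The application handles the text-to-speech request, generates the audio file, and publishes the analytics data to a RabbitMQ exchange through Spring Cloud Stream.

2. On the first request, the application initializes the RabbitMQ binder, builds the required context, and caches it for later use.

3. The application establishes a connection to RabbitMQ at localhost:5672, confirming that communication with the message broker works.

4. The application confirms that the output channel has an active subscriber, so messages are routed correctly.

5. Subsequent requests are handled efficiently: the application reuses the established binder and connections, which shows effective resource management. Compare processTimeMs=91 for the second request with processTimeMs=937 for the first.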
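The "create on first use, then reuse" behavior visible in these logs can be sketched with a small cache. This is an illustration only: `LazyBinderCacheSketch` is a hypothetical class and the plain `Object` stands in for a real binder and its child context; it is not how DefaultBinderFactory is implemented.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical cache that creates a binder stand-in lazily and reuses it afterwards.
class LazyBinderCacheSketch {
    private final Map<String, Object> cache = new ConcurrentHashMap<>();
    final AtomicInteger creations = new AtomicInteger(); // counts the expensive creations

    // The first call for a name pays the creation cost; later calls return the cached instance.
    Object getBinder(String name) {
        return cache.computeIfAbsent(name, n -> {
            creations.incrementAndGet();
            return new Object(); // stand-in for building the binder child context
        });
    }

    public static void main(String[] args) {
        LazyBinderCacheSketch factory = new LazyBinderCacheSketch();
        Object first = factory.getBinder("rabbit");  // created here, like the first /tts request
        Object second = factory.getBinder("rabbit"); // reused, like the second request
        System.out.println((first == second) + " " + factory.creations.get()); // prints "true 1"
    }
}
```

This is why only the first request pays the setup cost in the timings quoted above.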


2025-05-25T21:38:52.567+01:00 INFO 23932 --- [tts-analytics] [nio-8090-exec-1] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring DispatcherServlet 'dispatcherServlet'

2025-05-25T21:38:52.567+01:00 INFO 23932 --- [tts-analytics] [nio-8090-exec-1] o.s.web.servlet.DispatcherServlet : Initializing Servlet 'dispatcherServlet'

2025-05-25T21:38:52.567+01:00 INFO 23932 --- [tts-analytics] [nio-8090-exec-1] o.s.web.servlet.DispatcherServlet : Completed initialization in 0 ms

2025-05-25T21:38:52.664+01:00 INFO 23932 --- [tts-analytics] [nio-8090-exec-1] c.e.t.controller.AnalyticsController : doAnalytics request: TtsAnalyticsRequest[clientIp=0:0:0:0:0:0:0:1, userAgent=PostmanRuntime/7.44.0]

2025-05-25T21:38:53.381+01:00 INFO 23932 --- [tts-analytics] [alytics-group-1] c.e.t.m.PostTtsAnalyticsConsumerService : processAnalytics: PostTtsAnalyticsRequest[id=14449f58-7140-45a5-b024-26021229dfb3, creationDate=2025-05-25T21:38:53.063039900, processTimeMs=937]

2025-05-25T21:38:55.313+01:00 INFO 23932 --- [tts-analytics] [nio-8090-exec-2] c.e.t.controller.AnalyticsController : doAnalytics request: TtsAnalyticsRequest[clientIp=0:0:0:0:0:0:0:1, userAgent=PostmanRuntime/7.44.0]

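2025-05-25T21:38:55.408+01:00 INFO 23932 --- [tts-analytics] [alytics-group-1] c.e.t.m.PostTtsAnalyticsConsumerService : processAnalytics: PostTtsAnalyticsRequest[id=a4021436-fd12-486b-9d65-cff25c5611c5, creationDate=2025-05-25T21:38:55.400422300, processTimeMs=91]

From the corresponding TTS Analytics logs above, based on the messages sent by the TTS application, we see:

1. The application acts as the consumer in the Spring Cloud Stream setup, receiving messages from the RabbitMQ exchange named post-tts-analytics.

2. Once a PostTtsAnalyticsRequest is received, PostTtsAnalyticsConsumerService processes the message, which may involve business logic such as storing analytics data or triggering further operations.

3. The presence of AnalyticsController handling TtsAnalyticsRequest shows that the application also exposes a REST endpoint, possibly for manual testing or other data ingestion.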

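Kafka Demo

We now run both applications with the environment variable BINDER_TYPE=kafka set. The producer application, TTS, starts exactly as in the previous example, with no differences.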


. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v3.4.5)

2025-05-26T23:31:21.842+01:00 INFO 17204 --- [tts-analytics] [ main] c.e.t.TtsAnalyticsApplication : No active profile set, falling back to 1 default profile: "default"

2025-05-26T23:31:24.238+01:00 INFO 17204 --- [tts-analytics] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'errorChannel' has been explicitly defined. Therefore, a default PublishSubscribeChannel will be created.

2025-05-26T23:31:24.260+01:00 INFO 17204 --- [tts-analytics] [ main] faultConfiguringBeanFactoryPostProcessor : No bean named 'integrationHeaderChannelRegistry' has been explicitly defined. Therefore, a default DefaultHeaderChannelRegistry will be created.

2025-05-26T23:31:25.529+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port 8090 (http)

2025-05-26T23:31:25.556+01:00 INFO 17204 --- [tts-analytics] [ main] o.apache.catalina.core.StandardService : Starting service [Tomcat]

2025-05-26T23:31:25.556+01:00 INFO 17204 --- [tts-analytics] [ main] o.apache.catalina.core.StandardEngine : Starting Servlet engine: [Apache Tomcat/10.1.40]

2025-05-26T23:31:25.673+01:00 INFO 17204 --- [tts-analytics] [ main] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring embedded WebApplicationContext

2025-05-26T23:31:25.676+01:00 INFO 17204 --- [tts-analytics] [ main] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 3734 ms

2025-05-26T23:31:29.799+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.c.s.m.DirectWithAttributesChannel : Channel 'tts-analytics.postTtsAnalytics-in-0' has 1 subscriber(s).

2025-05-26T23:31:30.167+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.i.endpoint.EventDrivenConsumer : Adding {logging-channel-adapter:_org.springframework.integration.errorLogger} as a subscriber to the 'errorChannel' channel

2025-05-26T23:31:30.167+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.i.channel.PublishSubscribeChannel : Channel 'tts-analytics.errorChannel' has 1 subscriber(s).

2025-05-26T23:31:30.167+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.i.endpoint.EventDrivenConsumer : started bean '_org.springframework.integration.errorLogger'

2025-05-26T23:31:30.373+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Creating binder: kafka

2025-05-26T23:31:30.376+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Constructing binder child context for kafka

2025-05-26T23:31:30.980+01:00 INFO 17204 --- [tts-analytics] [ main] o.s.c.s.binder.DefaultBinderFactory : Caching the binder: kafka

2025-05-26T23:31:31.055+01:00 INFO 17204 --- [tts-analytics] [ main] o.a.k.clients.admin.AdminClientConfig : AdminClientConfig values:

............

2025-05-26T23:31:37.664+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Successfully joined group with generation Generation{generationId=1, memberId='consumer-analytics-group-2-2e0c644b-239a-444d-bedb-24be9d40df31', protocol='range'}

2025-05-26T23:31:37.674+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Finished assignment for group at generation 1: {consumer-analytics-group-2-2e0c644b-239a-444d-bedb-24be9d40df31=Assignment(partitions=[post-tts-analytics-0])}

2025-05-26T23:31:37.691+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Successfully synced group in generation Generation{generationId=1, memberId='consumer-analytics-group-2-2e0c644b-239a-444d-bedb-24be9d40df31', protocol='range'}

2025-05-26T23:31:37.692+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Notifying assignor about the new Assignment(partitions=[post-tts-analytics-0])

2025-05-26T23:31:37.696+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] k.c.c.i.ConsumerRebalanceListenerInvoker : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Adding newly assigned partitions: post-tts-analytics-0

2025-05-26T23:31:37.713+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Found no committed offset for partition post-tts-analytics-0

2025-05-26T23:31:37.736+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.a.k.c.c.internals.SubscriptionState : [Consumer clientId=consumer-analytics-group-2, groupId=analytics-group] Resetting offset for partition post-tts-analytics-0 to position FetchPosition{offset=0, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[localhost:9092 (id: 1 rack: null)], epoch=0}}.

2025-05-26T23:31:37.738+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] o.s.c.s.b.k.KafkaMessageChannelBinder$2 : analytics-group: partitions assigned: [post-tts-analytics-0]

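...........

From the logs above we can see the following:

1. Spring Cloud Stream wires up the input binding channel named tts-analytics.postTtsAnalytics-in-0 and confirms it has one subscriber (the consumer application).

2. Immediately afterwards, the error-logging endpoint is attached to errorChannel, so any exception during message processing is logged rather than silently dropped.

3. The Kafka binder is created, its child context is constructed, and it is cached.

4. The Kafka consumer (clientId=consumer-analytics-group-2) successfully joins analytics-group, receives its partition assignment (post-tts-analytics-0), syncs the group, and notifies the internal assignor.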
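The consumer log reports protocol='range' and a single partition, post-tts-analytics-0. The sketch below shows, in simplified form, how a range-style assignment splits the partitions of one topic across the members of a group. It is a hypothetical illustration (`RangeAssignmentSketch` and the member ids are made up), not Kafka's own assignor code, and it assumes a non-empty member list.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified range-style assignment for a single topic.
class RangeAssignmentSketch {

    // Members are sorted; each gets a contiguous range, and the first ones absorb the remainder.
    static Map<String, List<Integer>> assign(List<String> members, int partitionCount) {
        List<String> sorted = new ArrayList<>(members);
        Collections.sort(sorted);
        int perMember = partitionCount / sorted.size();
        int extra = partitionCount % sorted.size();
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            int count = perMember + (i < extra ? 1 : 0);
            List<Integer> partitions = new ArrayList<>();
            for (int p = 0; p < count; p++) {
                partitions.add(next++);
            }
            result.put(sorted.get(i), partitions);
        }
        return result;
    }

    public static void main(String[] args) {
        // One partition and three hypothetical members: only the first member gets work.
        System.out.println(assign(List.of("member-1", "member-2", "member-3"), 1));
        // prints {member-1=[0], member-2=[], member-3=[]}
    }
}
```

With a single partition, as in this demo, adding more instances to analytics-group would leave the extra instances idle; scaling out on Kafka also requires more partitions.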

Next, the following Kafka commands verify that all the Kafka resources were created correctly:


/ $ /opt/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

__consumer_offsets

post-tts-analytics

/ $ /opt/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic post-tts-analytics

Topic: post-tts-analytics TopicId: bq8s8k8mTJCexcXcG_s-3A PartitionCount: 1 ReplicationFactor: 1 Configs: segment.bytes=1073741824

Topic: post-tts-analytics Partition: 0 Leader: 1 Replicas: 1 Isr: 1 Elr: LastKnownElr:

/ $ /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --list

analytics-group

/ $ /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --describe --group analytics-group

GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID

analytics-group post-tts-analytics 0 2 2 0 consumer-analytics-group-2-2e0c644b-239a-444d-bedb-24be9d40df31 /172.17.0.1 consumer-analytics-group-2

As before, we call the same endpoint and test the whole flow.

2025-05-26T23:59:25.601+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring DispatcherServlet 'dispatcherServlet'

2025-05-26T23:59:25.602+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : Initializing Servlet 'dispatcherServlet'

2025-05-26T23:59:25.604+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.web.servlet.DispatcherServlet : Completed initialization in 1 ms

2025-05-26T23:59:25.785+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] c.e.t.controller.TextToSpeechController : textToSpeech request: TtsRequest[text=Hi this is a complete test. From Kafka]

2025-05-26T23:59:26.992+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] c.e.tts.service.TextToSpeechService : do Something with analytics response: TtsAnalyticsResponse[device=Mobile, countryIso=ZA]

Wrote synthesized speech to tts\output\7e82f0d0-050f-4e05-8f50-f39fc8549ae2.wav

2025-05-26T23:59:27.492+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] c.e.t.m.PostTtsAnalyticsPublisherService : sendAnalytics: PostTtsAnalyticsRequest[id=7e82f0d0-050f-4e05-8f50-f39fc8549ae2, creationDate=2025-05-26T23:59:27.491530100, processTimeMs=1701]

2025-05-26T23:59:27.504+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.c.s.binder.DefaultBinderFactory : Creating binder: kafka

2025-05-26T23:59:27.504+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.c.s.binder.DefaultBinderFactory : Constructing binder child context for kafka

2025-05-26T23:59:27.793+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.c.s.binder.DefaultBinderFactory : Caching the binder: kafka

2025-05-26T23:59:27.815+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.c.s.b.k.p.KafkaTopicProvisioner : Using kafka topic for outbound: post-tts-analytics

2025-05-26T23:59:27.826+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.k.clients.admin.AdminClientConfig : AdminClientConfig values:

..........

2025-05-26T23:59:28.970+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.k.c.t.i.KafkaMetricsCollector : initializing Kafka metrics collector

2025-05-26T23:59:29.023+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka version: 3.8.1

2025-05-26T23:59:29.023+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka commitId: 70d6ff42debf7e17

2025-05-26T23:59:29.023+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka startTimeMs: 1748300369023

2025-05-26T23:59:29.044+01:00 INFO 19336 --- [tts] [ad | producer-1] org.apache.kafka.clients.Metadata : [Producer clientId=producer-1] Cluster ID: 5L6g3nShT-eMCtK--X86sw

2025-05-26T23:59:29.066+01:00 INFO 19336 --- [tts] [nio-8080-exec-2] o.s.c.s.m.DirectWithAttributesChannel : Channel 'tts.postTtsAnalytics-out-0' has 1 subscriber(s).

2025-05-26T23:59:36.998+01:00 INFO 19336 --- [tts] [nio-8080-exec-3] c.e.t.controller.TextToSpeechController : textToSpeech request: TtsRequest[text=Hi this is a complete test. From Kafka]

2025-05-26T23:59:37.007+01:00 INFO 19336 --- [tts] [nio-8080-exec-3] c.e.tts.service.TextToSpeechService : do Something with analytics response: TtsAnalyticsResponse[device=SmartTV, countryIso=US]

Wrote synthesized speech to tts\output\fe504b51-5149-4f6b-a65f-86eecf6daa66.wav

2025-05-26T23:59:37.158+01:00 INFO 19336 --- [tts] [nio-8080-exec-3] c.e.t.m.PostTtsAnalyticsPublisherService : sendAnalytics: PostTtsAnalyticsRequest[id=fe504b51-5149-4f6b-a65f-86eecf6daa66, creationDate=2025-05-26T23:59:37.158350700, processTimeMs=159]

From the logs above we can see:

1. DefaultBinderFactory creates the kafka binder, constructs its child context, and then caches the binder instance; this is the "magic switch" that selects Kafka.

2. KafkaTopicProvisioner confirms that it will use the post-tts-analytics topic, making sure the topic exists.

The message is created and sent to the topic to be processed by the consumer, as shown below.


2025-05-26T23:59:26.739+01:00 INFO 17204 --- [tts-analytics] [nio-8090-exec-1] c.e.t.controller.AnalyticsController : doAnalytics request: TtsAnalyticsRequest[clientIp=0:0:0:0:0:0:0:1, userAgent=PostmanRuntime/7.44.0]

2025-05-26T23:59:29.272+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] c.e.t.m.PostTtsAnalyticsConsumerService : processAnalytics: PostTtsAnalyticsRequest[id=7e82f0d0-050f-4e05-8f50-f39fc8549ae2, creationDate=2025-05-26T23:59:27.491530100, processTimeMs=1701]

2025-05-26T23:59:37.004+01:00 INFO 17204 --- [tts-analytics] [nio-8090-exec-2] c.e.t.controller.AnalyticsController : doAnalytics request: TtsAnalyticsRequest[clientIp=0:0:0:0:0:0:0:1, userAgent=PostmanRuntime/7.44.0]

2025-05-26T23:59:37.168+01:00 INFO 17204 --- [tts-analytics] [container-0-C-1] c.e.t.m.PostTtsAnalyticsConsumerService : processAnalytics: PostTtsAnalyticsRequest[id=fe504b51-5149-4f6b-a65f-86eecf6daa66, creationDate=2025-05-26T23:59:37.158350700, processTimeMs=159]

For these tests, the RabbitMQ and Kafka instances ran locally in Docker, started with:

    docker run -d --name=kafka -p 9092:9092 apache/kafka
    docker run -d --name=rabbitmq -p 5672:5672 -p 15672:15672 rabbitmq:4-management

Summary

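1. Broker-agnostic messaging: Spring Cloud Stream provides a thin abstraction over messaging systems (RabbitMQ, Kafka, and others), so you write the producer and consumer logic once and switch brokers by changing an environment variable.

2. Key components: it introduces Binders (broker-specific plugins), Bindings (logical links to destinations), and the StreamBridge API for imperative sending, all wired together through Spring Boot auto-configuration.

3. Lightweight configuration: patterns such as retries and dead-letter queues need only a few lines of YAML (max-attempts, back-off-initial-interval), giving enterprise-grade messaging with minimal boilerplate.

4. Testing strategy: the demo uses TestChannelBinderConfiguration together with InputDestination/OutputDestination and CompositeMessageConverter for in-JVM tests that verify end-to-end message publishing and consumption without a real broker.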