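3. Business monitoring

Implementation options:

- Use a ServiceMonitor or PodMonitor

- Add monitoring targets through auto-discovery rules

Note: plan the labels for your monitoring targets in advance, because the alert templates and alerting rules described later all depend on these labels.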

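Notes on writing ServiceMonitor and PodMonitor resources:

- The labels on a ServiceMonitor must match the `serviceMonitorSelector` defined in the Prometheus resource.
- `port` under a ServiceMonitor's `endpoints` refers to the port name of the Kubernetes Service, not the port number.
- A ServiceMonitor's `selector.matchLabels` must match the labels on the Kubernetes Service.
- Create the ServiceMonitor in the Prometheus namespace and use `namespaceSelector` to match the namespace of the Service to be monitored. (In this setup, the Operator-managed Prometheus only recognizes `default` and `monitoring`.)
- The labels on a PodMonitor must match the `podMonitorSelector` defined in the Prometheus resource.
- A PodMonitor's `spec.podMetricsEndpoints.port` must be the port name defined in the pod, not the port number.
- A PodMonitor's `selector.matchLabels` must match the labels on the Kubernetes pod.
- Create the PodMonitor in the Prometheus namespace and use `namespaceSelector` to match the namespace of the pods to be monitored. (In this setup, the Operator-managed Prometheus only recognizes `default` and `monitoring`.)

3.1 Adding monitoring targets with a ServiceMonitor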

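Because the alert template uses the `env` and `object_name` labels, every monitored target must carry both labels when you write the monitoring rules.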

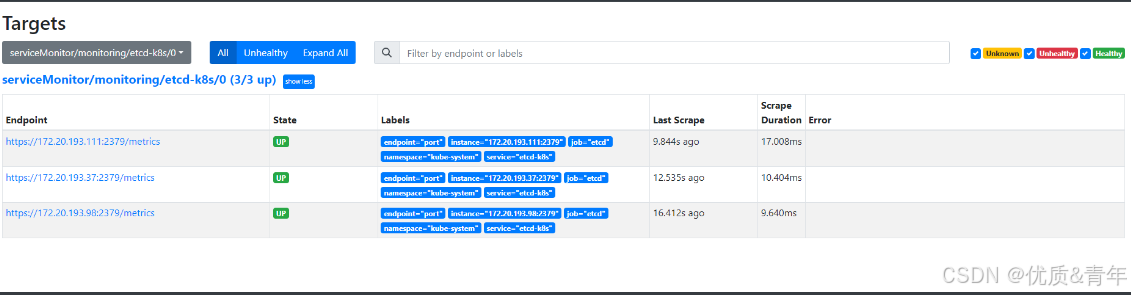
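The PodMonitor rules listed earlier, combined with the two required labels, might look roughly like the following. This is a minimal sketch, not a manifest from the original setup: the names `demo-app`, `demo`, and `metrics` are hypothetical placeholders, and using the pod name as `object_name` is an assumption.

```yaml
# Hypothetical example: demo-app, demo and metrics are placeholder names.
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: demo-app
  namespace: monitoring          # same namespace as Prometheus
  labels:
    k8s-app: demo-app            # must satisfy the Prometheus podMonitorSelector
spec:
  selector:
    matchLabels:
      app: demo-app              # labels on the target pods
  namespaceSelector:
    matchNames:
    - demo                       # namespace of the target pods
  podMetricsEndpoints:
  - port: metrics                # port name from the pod spec, not a number
    interval: 15s
    relabelings:
    - sourceLabels: [__meta_kubernetes_pod_name]
      targetLabel: object_name   # assumption: pod name used as object_name
    - targetLabel: env
      replacement: "hangyan-prod"
```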

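3.1 Etcd component monitoring

- Create a Service for etcd

- Create a ServiceMonitor for etcd

- Create an Endpoints object for etcd

As the architecture diagram shows, the Prometheus Operator attaches monitoring targets through ServiceMonitors. My Kubernetes cluster was installed from binaries, so base components such as etcd and kube-proxy have no Service. The Service, Endpoints, and ServiceMonitor therefore have to be created by hand.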

```bash
root@kcsmaster1:~/operator-prometheus# cat etcd-servicemonitor.yaml
# Service for etcd
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd   # must match selector.matchLabels in the ServiceMonitor
spec:
  type: ClusterIP
  clusterIP: None   # clusterIP must be set to None
  ports:
  - name: etcd
    port: 2379
# Endpoints for etcd
---
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
subsets:
- addresses:
  - ip: 172.20.193.111   # etcd node address; for a cluster, keep adding members below
    nodeName: kcsmaster1.4457.safe-hy4
  - ip: 172.20.193.37
    nodeName: kcsmaster2.4457.safe-hy4
  - ip: 172.20.193.98
    nodeName: kcsmaster3.4457.safe-hy4
  ports:
  - name: etcd
    port: 2379
# ServiceMonitor for etcd
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: etcd-k8s
  namespace: monitoring
  labels:
    k8s-app: etcd-k8s
spec:
  jobLabel: k8s-app
  endpoints:
  - port: etcd   # port name defined in the Service above, not the port number
    interval: 15s
    scheme: https
    tlsConfig:   # paths as mounted inside the Prometheus pod
      caFile: /etc/prometheus/secrets/etcd-client-certs/etcd-ca.crt
      certFile: /etc/prometheus/secrets/etcd-client-certs/etcd-client.crt
      keyFile: /etc/prometheus/secrets/etcd-client-certs/etcd-client.key
    relabelings:   # labels attached to the scraped metrics
    - sourceLabels: [__meta_kubernetes_endpoint_node_name]
      targetLabel: object_name
    - targetLabel: env
      replacement: "hangyan-prod"
  selector:
    matchLabels:
      k8s-app: etcd   # must match the label on the etcd Service
  namespaceSelector:
    matchNames:
    - kube-system
```
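The `tlsConfig` paths under `/etc/prometheus/secrets/etcd-client-certs/` only exist if the etcd client certificates are mounted into the Prometheus pod. One way to get them there is the `secrets` field of the Prometheus custom resource, which mounts each listed Secret at `/etc/prometheus/secrets/<secret-name>`. The fragment below is a sketch under two assumptions: the Prometheus resource is named `k8s`, and a Secret called `etcd-client-certs` holding the three files already exists in the `monitoring` namespace.

```yaml
# Fragment of the Prometheus custom resource; only the relevant fields are shown.
# Assumptions: the resource is named "k8s", and a Secret "etcd-client-certs"
# containing etcd-ca.crt, etcd-client.crt and etcd-client.key exists in "monitoring".
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: k8s
  namespace: monitoring
spec:
  secrets:
  - etcd-client-certs   # mounted at /etc/prometheus/secrets/etcd-client-certs/
```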

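3.2 Configuring auto-discovery of monitoring targets

The previous section created ServiceMonitors by hand, which becomes tedious as the number of services grows. Instead, `kubernetes_sd_configs` can discover targets automatically and add them to the Prometheus server. This relies on the `additionalScrapeConfigs` parameter.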

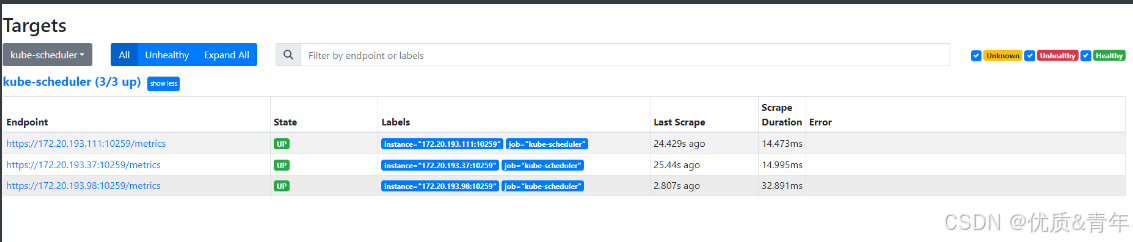
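The `additionalScrapeConfigs` parameter belongs to the Prometheus custom resource and points at one key of a Secret. The fragment below is a minimal sketch, assuming the Prometheus resource is named `k8s` and that the Secret is the `additional-configs` Secret built from `prometheus-additional.yaml` in the steps that follow.

```yaml
# Fragment of the Prometheus custom resource; only the relevant fields are shown.
# Assumption: the resource is named "k8s".
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: k8s
  namespace: monitoring
spec:
  additionalScrapeConfigs:
    name: additional-configs          # Secret name
    key: prometheus-additional.yaml   # key inside the Secret (the file name)
```

The Secret has to live in the same namespace as the Prometheus resource for this reference to resolve.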

3.2 kube-scheduler component monitoring

3.2.1 Create the prometheus-additional.yaml file

```bash
# Write prometheus-additional.yaml
vim prometheus-additional.yaml
- job_name: 'kube-scheduler'
  tls_config:
    insecure_skip_verify: true
  scheme: https
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  static_configs:
  - targets: ['172.20.193.111:10259','172.20.193.37:10259','172.20.193.98:10259']
```
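The job above authenticates with the service account token of the Prometheus pod, so that service account needs permission to `get` the `/metrics` non-resource URL. kube-prometheus normally ships an equivalent rule already. If it is missing, something like the following could be applied. This is a sketch: the name `prometheus-metrics-reader` is hypothetical, and `prometheus-k8s` is assumed to be the service account of the Prometheus pod.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-metrics-reader   # hypothetical name
rules:
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus-metrics-reader   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-metrics-reader
subjects:
- kind: ServiceAccount
  name: prometheus-k8s              # assumed service account of the Prometheus pod
  namespace: monitoring
```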

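```bash
# Create the additional-configs secret (in the same namespace as Prometheus)
kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
# Verify that the configuration has been synced
root@kcsmaster1:~/operator-prometheus# kubectl get secret prometheus-k8s -n monitoring -o jsonpath='{.data.prometheus\.yaml\.gz}' | base64 -d | gunzip | grep -A 10 kube-scheduler
```

3.2.2 Hot-reload the Prometheus server to apply the configuration

```bash
curl -X POST http://10.10.165.214:9090/-/reload   # address of the Prometheus Service; run it several times, a single call may not take effect
```

Note: if the configuration still does not take effect after the hot reload, delete the pod so that it is recreated.

3.2.3 Check in the Prometheus web UI that kube-scheduler is being monitored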

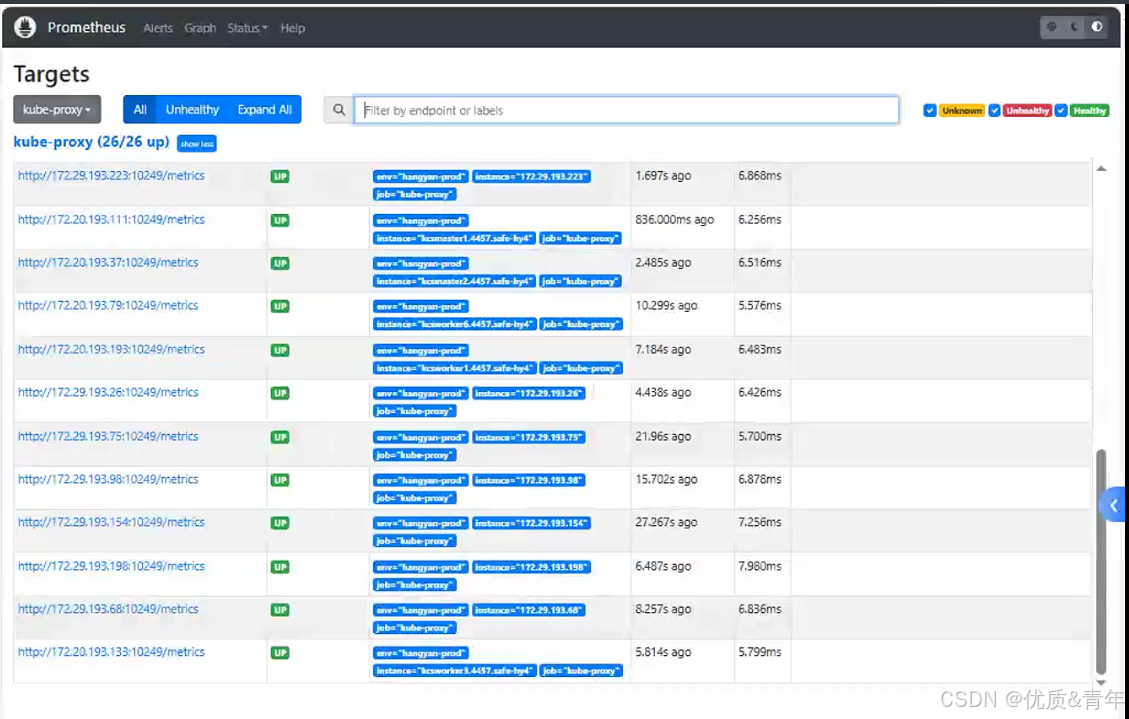

3.3 kube-proxy component monitoring

3.3.1 Modify the prometheus-additional.yaml file

```yaml
- job_name: 'kube-proxy'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - source_labels: [__address__]
    regex: '(.*):.*'
    target_label: __address__
    replacement: '${1}:10249'
  - action: replace
    target_label: "env"
    replacement: "hangyan-prod"
```
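The relabel rule rewrites every node address to `<node-ip>:10249`, which is only reachable if kube-proxy serves metrics on a non-loopback address. By default kube-proxy binds its metrics endpoint to `127.0.0.1:10249`. A sketch of the relevant setting, assuming kube-proxy is started with a configuration file (with a flag-based setup, `--metrics-bind-address` plays the same role):

```yaml
# Fragment of the kube-proxy configuration file; other fields are omitted.
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
metricsBindAddress: 0.0.0.0:10249   # listen on all interfaces instead of loopback only
```

kube-proxy has to be restarted on each node for the change to take effect.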

```bash
kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml --dry-run=client -o yaml -n monitoring | kubectl apply -f -
# Hot reload
curl -X POST http://10.10.165.214:9090/-/reload   # address of the Prometheus Service; run it several times, a single call may not take effect
```

3.3.2 Check in the Prometheus web UI that kube-proxy is being monitored

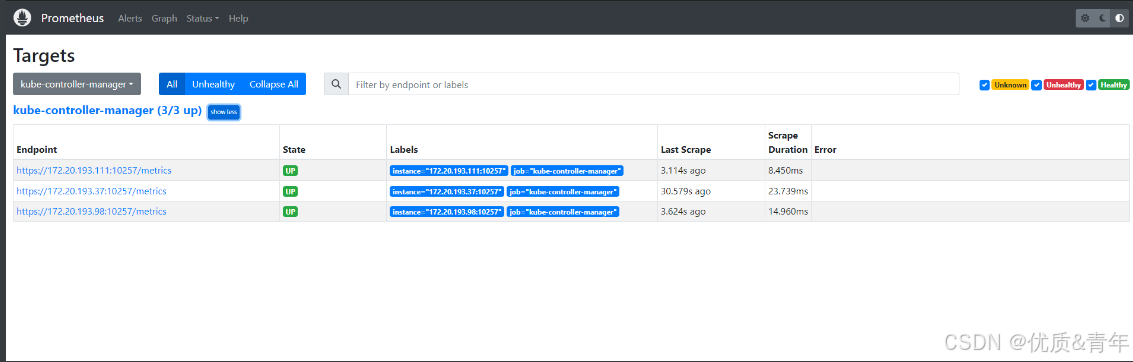

3.4 kube-controller-manager component monitoring

3.4.1 Modify the kube-controller-manager.service file

```bash
vim /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
[Service]
# The --authorization-always-allow-paths=/metrics flag below is the line to add
ExecStart=/usr/local/bin/kube-controller-manager \
  --allocate-node-cidrs=true \
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --bind-address=0.0.0.0 \
  --cluster-cidr=10.20.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --authorization-always-allow-paths=/metrics \
  --leader-elect=true \
  --node-cidr-mask-size=24 \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-cluster-ip-range=10.10.0.0/16 \
  --use-service-account-credentials=true \
  --v=2
Restart=always
RestartSec=5

# Reload systemd and restart the service so that the new flag takes effect
systemctl daemon-reload && systemctl restart kube-controller-manager
```

3.4.2 Modify the prometheus-additional.yaml file

```bash
vim prometheus-additional.yaml
# Add the following content
- job_name: 'kube-controller-manager'
  tls_config:
    insecure_skip_verify: true
  scheme: https
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  static_configs:
  - targets: ['172.20.193.111:10257','172.20.193.37:10257','172.20.193.98:10257']

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml --dry-run=client -o yaml -n monitoring | kubectl apply -f -
# Hot reload
curl -X POST http://10.10.165.214:9090/-/reload   # address of the Prometheus Service; run it several times, a single call may not take effect
```

3.4.3 Check in the Prometheus web UI that kube-controller-manager is being monitored

3.5 Backend service monitoring

3.5.1 Modify the prometheus-additional.yaml file

```bash
vim prometheus-additional.yaml
# Add the following content
- job_name: hy-appsotre
  kubernetes_sd_configs:
  - role: endpoints
    namespaces:
      names:
      - hy-pcas
  scrape_interval: 15s
  scrape_timeout: 10s
  metrics_path: /api/v1/metrics
  scheme: http
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name]
    regex: "(hy-pcas-app-mgmt-web)"   # front-end service that exposes no metrics endpoint, so it is not monitored
    action: drop
  # Build the target address. The rule only rewrites __address__ when both
  # source labels are non-empty; otherwise the discovered address is kept.
  - source_labels: [__meta_kubernetes_pod_ip, __meta_kubernetes_endpoint_port_number]
    regex: "(.+);(.+)"
    target_label: __address__
    replacement: "${1}:${2}"
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
  - source_labels: [__meta_kubernetes_pod_label_app]
    target_label: appstore
```
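The job above does not yet set the `env` and `object_name` labels that the alert template expects. Two more entries appended to its `relabel_configs` list could supply them. This is a sketch only: using the pod name as `object_name` is an assumption, and any other stable identifier would work just as well.

```yaml
# Extra entries for the relabel_configs list of the job above.
# Assumption: the pod name is a suitable value for object_name.
- source_labels: [__meta_kubernetes_pod_name]
  target_label: object_name
- target_label: env
  replacement: "hangyan-prod"
```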

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml --dry-run=client -o yaml -n monitoring | kubectl apply -f -

curl -X POST http://10.10.165.214:9090/-/reload #Prometheus Service的地址,多执行几次,执行一次可能没有生效

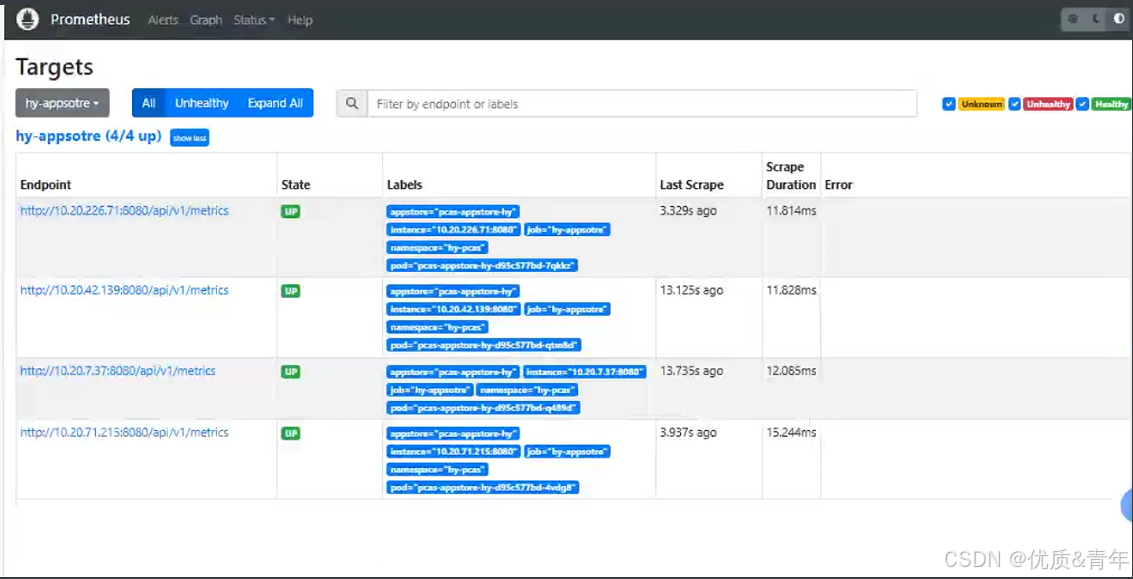

#注意:如果热加载后还不生效,删除pod重建3.5.2 Prometheus web界面查看后端服务是否被监控

3.6 Redis monitoring

3.6.1 Deploy redis-exporter

```bash
vim redis-export.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: redis-exporter
  name: redis-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: redis-exporter
  template:
    metadata:
      labels:
        k8s-app: redis-exporter
    spec:
      containers:
      - image: harbor.cloudtrust.com.cn:30381/hangyan/redis_exporter:latest
        imagePullPolicy: IfNotPresent
        name: redis-exporter
        args: ["-redis.addr","redis-headless.redis.svc.cluster.local:6379","-redis.password","Pcas123_"]
        ports:
        - containerPort: 9121   # default exporter port
          name: http
---
apiVersion: v1
kind: Service
metadata:
  name: redis-exporter
  namespace: monitoring
  labels:
    k8s-app: redis-exporter
spec:
  ports:
  - name: http
    port: 9121
    protocol: TCP
  selector:
    k8s-app: redis-exporter
  type: ClusterIP
```
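Deploying the exporter does not by itself make Prometheus scrape it. Following the ServiceMonitor rules from the start of this chapter, a ServiceMonitor for the `redis-exporter` Service might look like this. It is a sketch: the `k8s-app` label on the ServiceMonitor is assumed to satisfy the cluster's `serviceMonitorSelector`, and the `object_name` value `redis` is an illustrative choice.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: redis-exporter
  namespace: monitoring
  labels:
    k8s-app: redis-exporter     # assumed to satisfy the Prometheus serviceMonitorSelector
spec:
  selector:
    matchLabels:
      k8s-app: redis-exporter   # label on the redis-exporter Service
  namespaceSelector:
    matchNames:
    - monitoring
  endpoints:
  - port: http                  # port name of the Service, not the number
    interval: 15s
    relabelings:
    - targetLabel: object_name
      replacement: "redis"      # illustrative value
    - targetLabel: env
      replacement: "hangyan-prod"
```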

3.6.2 Check the pod

```bash
root@kcsmaster1:~/operator-prometheus# kubectl get pod -n monitoring | grep redis
redis-exporter-5bff57549-fkkgl   1/1   Running   0   4m40s
```

3.6.3 Verify that metrics can be retrieved

```bash
curl -s 10.10.29.201:9121/metrics | tail -5
# TYPE redis_uptime_in_seconds gauge
redis_uptime_in_seconds 767741
# HELP redis_watching_clients watching_clients metric
# TYPE redis_watching_clients gauge
redis_watching_clients 0
```

3.8 MongoDB monitoring

3.8.1 Deploy mongodb-exporter

```bash
root@kcsmaster1:~/operator-prometheus# vim mongodb-export.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb-exporter
  namespace: monitoring
  labels:
    app: mongodb-exporter
spec:
  ports:
  - port: 9216
    protocol: TCP
    targetPort: 9216
  selector:
    app: mongodb-exporter   # must match the pod labels in the Deployment below
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongodb-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongodb-exporter
  template:
    metadata:
      labels:
        app: mongodb-exporter
    spec:
      containers:
      - name: mongodb-exporter
        image: harbor.cloudtrust.com.cn:30381/prometheus/mongodb-exporter:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9216
        env:
        - name: MONGODB_URI
          value: "mongodb://root:Hqs87op9HYt@mongo-mongodb-headless.mongo-prod.svc.cluster.local:27017/admin"
        - name: MONGODB_DIRECT_CONNECT
          value: "false"   # may need to be false for a replica set
```

3.8.2 Check the pod

```bash
root@kcsmaster1:~/operator-prometheus# kubectl get pod -n monitoring | grep mongo
mongodb-exporter-6c9f75888f-rlbpg   1/1   Running   0   15d
```

3.8.3 Verify that metrics can be retrieved

```bash
root@kcsmaster1:~/operator-prometheus# curl -s http://10.10.77.26:9216/metrics | tail -n 5
# TYPE process_virtual_memory_bytes gauge
process_virtual_memory_bytes 2.415161344e+09
# HELP process_virtual_memory_max_bytes Maximum amount of virtual memory available in bytes.
# TYPE process_virtual_memory_max_bytes gauge
process_virtual_memory_max_bytes 1.8446744073709552e+19
```
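Once the exporter returns metrics, it still has to be registered with Prometheus. A ServiceMonitor is one option, but it references the Service port by name, and the `mongodb-exporter` Service above declares its port without one. The sketch below therefore assumes that the Service port has been given the hypothetical name `metrics`. The `k8s-app` label and the `object_name` value are likewise illustrative.

```yaml
# Assumption: the Service port in mongodb-export.yaml has been given a name, e.g.
#   ports:
#   - name: metrics
#     port: 9216
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: mongodb-exporter
  namespace: monitoring
  labels:
    k8s-app: mongodb-exporter   # assumed to satisfy the Prometheus serviceMonitorSelector
spec:
  selector:
    matchLabels:
      app: mongodb-exporter     # label on the mongodb-exporter Service
  namespaceSelector:
    matchNames:
    - monitoring
  endpoints:
  - port: metrics               # hypothetical port name added to the Service
    interval: 15s
    relabelings:
    - targetLabel: object_name
      replacement: "mongodb"    # illustrative value
    - targetLabel: env
      replacement: "hangyan-prod"
```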