Defining an out-of-tree backend with PrivateUse1

PyTorch tutorial: Facilitating New Backend Integration by PrivateUse1

Related APIs:

torch.utils.rename_privateuse1_backend

Distributed backend

PyTorch tutorial: Customize Process Group Backends Using Cpp Extensions

Related APIs:

torch.distributed.Backend.register_backend

torch.distributed.init_process_group

ProcessGroup::allreduce
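Here is roughly how these APIs fit together. A backend is registered under a name with `torch.distributed.Backend.register_backend` and then selected by that name in `init_process_group`. After that, each collective reaches the backend's C++ `allreduce`. A custom backend requires a compiled C++ extension, so this sketch uses the built-in `gloo` backend as a stand-in and runs a single-rank (`world_size=1`) all-reduce. With a custom backend, you would pass its registered name instead of `"gloo"`.

```python
import os
import tempfile

import torch
import torch.distributed as dist


def single_rank_allreduce() -> torch.Tensor:
    """Run one all_reduce on a 1-process group using the built-in gloo backend."""
    # A FileStore avoids picking a TCP port; world_size=1 needs no other process.
    fd, store_path = tempfile.mkstemp()
    os.close(fd)
    store = dist.FileStore(store_path, 1)
    # A backend registered via Backend.register_backend would be named here instead of "gloo".
    dist.init_process_group(backend="gloo", store=store, rank=0, world_size=1)
    try:
        t = torch.arange(4, dtype=torch.float32)
        # In-place collective; with a single rank the values are unchanged.
        dist.all_reduce(t, op=dist.ReduceOp.SUM)
        return t
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    print(single_rank_allreduce())  # tensor([0., 1., 2., 3.])
```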

Fallback example 1

Fallback example 2

Fallback handling for distributed operators

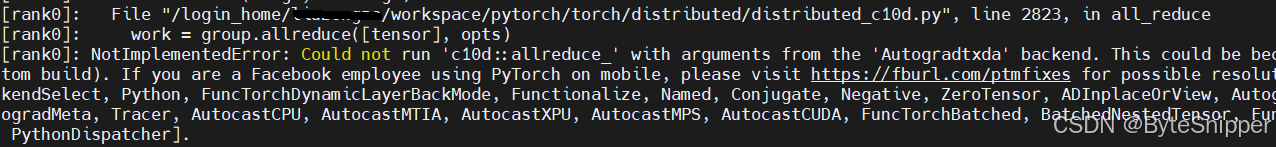

Error:

NotImplementedError: Could not run 'c10d::allreduce_' with arguments from the 'Autogradtxda' backend.

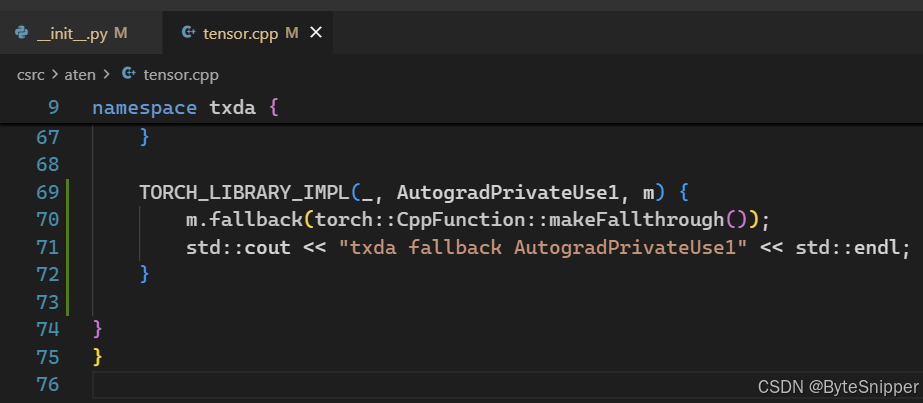
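A simplified toy model of dispatcher lookup, written in plain Python, shows why the call fails and why a fallback fixes it. This is not PyTorch's implementation. `ToyDispatcher` and its `impl`/`fallback`/`fallthrough` methods are hypothetical, alias keys are ignored, and PyTorch's real Autograd fallback does more than skip the key. The model assumes one rule. Walk the tensor's dispatch keys from highest priority down. For each key, use an operator-specific kernel if there is one. Otherwise use that key's backend fallback, where a fallthrough moves on to the next key. If neither exists, raise the "Could not run ... backend" error.

```python
from typing import Callable, Dict, List, Set, Tuple

Kernel = Callable[[str], str]


class ToyDispatcher:
    """Simplified model of dispatcher lookup; not PyTorch's real implementation."""

    def __init__(self) -> None:
        self.kernels: Dict[Tuple[str, str], Kernel] = {}  # (op name, key) -> kernel
        self.fallbacks: Dict[str, Kernel] = {}  # key -> fallback shared by all ops
        self.fallthrough_keys: Set[str] = set()  # keys that simply skip to the next key

    def impl(self, op: str, key: str, fn: Kernel) -> None:
        self.kernels[(op, key)] = fn

    def fallback(self, key: str, fn: Kernel) -> None:
        # Simplification: the latest registration for a key replaces the previous one.
        self.fallthrough_keys.discard(key)
        self.fallbacks[key] = fn

    def fallthrough(self, key: str) -> None:
        self.fallbacks.pop(key, None)
        self.fallthrough_keys.add(key)

    def call(self, op: str, keyset: List[str], arg: str) -> str:
        # keyset is ordered from highest to lowest priority.
        for key in keyset:
            kernel = self.kernels.get((op, key))
            if kernel is not None:
                return kernel(arg)  # an operator-specific kernel wins
            if key in self.fallthrough_keys:
                continue  # skip this key and try the next one
            fb = self.fallbacks.get(key)
            if fb is not None:
                return fb(arg)  # the backend fallback handles every op on this key
            raise NotImplementedError(
                f"Could not run '{op}' with arguments from the '{key}' backend."
            )
        raise NotImplementedError(f"No dispatch key left for '{op}'")


if __name__ == "__main__":
    d = ToyDispatcher()
    d.impl("c10d::allreduce_", "CPU", lambda t: f"cpu_allreduce({t})")
    d.impl("c10d::allreduce_", "PrivateUse1", lambda t: f"txda_allreduce({t})")
    d.fallthrough("AutogradCPU")  # stand-in for the fallback PyTorch has on AutogradCPU

    # CPU tensor: no AutogradCPU kernel, but the fallback lets it reach the CPU kernel.
    print(d.call("c10d::allreduce_", ["AutogradCPU", "CPU"], "x"))

    # PrivateUse1 tensor: no kernel and no fallback on AutogradPrivateUse1 -> error.
    try:
        d.call("c10d::allreduce_", ["AutogradPrivateUse1", "PrivateUse1"], "y")
    except NotImplementedError as err:
        print(err)

    # Registering a fallback on the autograd key lets the call reach the PrivateUse1 kernel.
    d.fallthrough("AutogradPrivateUse1")
    print(d.call("c10d::allreduce_", ["AutogradPrivateUse1", "PrivateUse1"], "y"))
```

In the real error message, the key appears as `Autogradtxda` rather than `AutogradPrivateUse1` because the PrivateUse1 backend has been renamed.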

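Solution

Using CPU as a reference, register a fallback for DispatchKey AutogradCPU. The `_` here is the wildcard namespace, so the fallback applies to operators from every namespace rather than to a single module: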

With the change above, this call returns True:

```python
import torch

print("AutogradCPU backend fallback registered:", torch._C._dispatch_has_backend_fallback(
    torch._C.DispatchKey.AutogradCPU
))
```

Debugging snippet:

```python
import os

import torch

# Assumed: MY_RANK comes from the RANK environment variable set by torchrun.
MY_RANK = int(os.environ.get("RANK", "0"))

print(f"! rank {MY_RANK} privateuse1_backend_name: {torch._C._get_privateuse1_backend_name()}")

# Check whether c10d::allreduce_ has a kernel registered on each dispatch key
print("AutogradPrivateUse1 kernel registered:", torch._C._dispatch_has_kernel_for_dispatch_key(
    "c10d::allreduce_", torch._C.DispatchKey.AutogradPrivateUse1))  # should be False
print("AutogradCPU kernel registered:", torch._C._dispatch_has_kernel_for_dispatch_key(
    "c10d::allreduce_", torch._C.DispatchKey.AutogradCPU))  # should be False
print("CPU kernel registered:", torch._C._dispatch_has_kernel_for_dispatch_key(
    "c10d::allreduce_", torch._C.DispatchKey.CPU))  # should be True
print("PrivateUse1 kernel registered:", torch._C._dispatch_has_kernel_for_dispatch_key(
    "c10d::allreduce_", torch._C.DispatchKey.PrivateUse1))  # should be True

# Check whether AutogradPrivateUse1 and AutogradCPU have a backend fallback
print("AutogradPrivateUse1 backend fallback registered:", torch._C._dispatch_has_backend_fallback(
    torch._C.DispatchKey.AutogradPrivateUse1))  # should be True
print("AutogradCPU backend fallback registered:", torch._C._dispatch_has_backend_fallback(
    torch._C.DispatchKey.AutogradCPU))  # should be True

# export TORCH_LOGS=all IS NEEDED
print(torch._C._dispatch_dump("c10d::allreduce_"))
```