GAN Image Generation

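Dataset Overview

The MNIST handwritten digit dataset is a subset of the NIST dataset. It contains 70,000 images of handwritten digits: 60,000 training samples and 10,000 test samples. The images are stored as binary files; each one is a single-channel (grayscale) image of 28×28 pixels that has already been size-normalized and centered.

Dataset website: http://yann.lecun.com/exdb/mnist/

This example trains a generative adversarial network (GAN) on the MNIST dataset and uses the trained network to generate images that imitate handwritten digits.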
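To make the "binary file" description concrete, the sketch below parses the header of an IDX-format image buffer, the layout used by the original MNIST files: four big-endian 32-bit integers (a magic number, 2051 for image files, then the image count, row count, and column count) followed by one unsigned byte per pixel. The function name `parse_idx_images` and the tiny synthetic buffer are illustrative assumptions; this tutorial itself reads the files through `MnistDataset` rather than parsing them by hand.

```python
import struct

def parse_idx_images(buf: bytes):
    """Parse an IDX image buffer into (count, rows, cols, list of flat images)."""
    # Header: four big-endian unsigned 32-bit integers
    magic, count, rows, cols = struct.unpack(">IIII", buf[:16])
    if magic != 2051:
        raise ValueError(f"unexpected magic number: {magic}")
    size = rows * cols
    # Each image is rows * cols unsigned bytes, stored back to back
    images = [list(buf[16 + i * size: 16 + (i + 1) * size]) for i in range(count)]
    return count, rows, cols, images

# Synthetic stand-in for a real file: two blank 28x28 images (hypothetical data)
header = struct.pack(">IIII", 2051, 2, 28, 28)
payload = bytes(2 * 28 * 28)
count, rows, cols, images = parse_idx_images(header + payload)
print(count, rows, cols, len(images[0]))  # 2 28 28 784
```

Each 28×28 image therefore occupies 784 bytes, which is the input width the discriminator later expects.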

Dataset Download

```python
# Download and extract the dataset
from download import download

url = "https://mindspore-website.obs.cn-north-4.myhuaweicloud.com/notebook/datasets/MNIST_Data.zip"
download(url, ".", kind="zip", replace=True)
```

Data Loading

```python
import numpy as np
import mindspore.dataset as ds

batch_size = 128
latent_size = 100  # length of the latent code

train_dataset = ds.MnistDataset(dataset_dir='./MNIST_Data/train')
test_dataset = ds.MnistDataset(dataset_dir='./MNIST_Data/test')

def data_load(dataset):
    dataset1 = ds.GeneratorDataset(dataset, ["image", "label"], shuffle=True, python_multiprocessing=False)
    # Cast each image to float32 and pair it with a freshly sampled latent code
    mnist_ds = dataset1.map(
        operations=lambda x: (x.astype("float32"), np.random.normal(size=latent_size).astype("float32")),
        output_columns=["image", "latent_code"])
    mnist_ds = mnist_ds.project(["image", "latent_code"])

    # Batch the data; the second argument drops the last incomplete batch
    mnist_ds = mnist_ds.batch(batch_size, True)

    return mnist_ds

mnist_ds = data_load(train_dataset)

iter_size = mnist_ds.get_dataset_size()
print('Iter size: %d' % iter_size)
```

Output

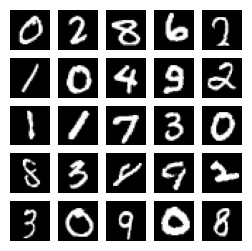

Iter size: 468

Dataset Visualization

```python
import matplotlib.pyplot as plt

# Take one batch and show 25 of its images in a 5x5 grid
data_iter = next(mnist_ds.create_dict_iterator(output_numpy=True))
figure = plt.figure(figsize=(3, 3))
cols, rows = 5, 5
for idx in range(1, cols * rows + 1):
    image = data_iter['image'][idx]
    figure.add_subplot(rows, cols, idx)
    plt.axis("off")
    plt.imshow(image.squeeze(), cmap="gray")
plt.show()
```
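As a quick check on the data pipeline, the reported iteration count can be reproduced by hand. Because `batch(batch_size, True)` drops the last incomplete batch, 60,000 training images with a batch size of 128 give 468 full batches, and 96 images are skipped in each epoch. The variable names below are illustrative.

```python
num_train = 60000   # training images in MNIST
batch_size = 128    # same value as in the data-loading code

# Full batches per epoch and the number of images in the dropped partial batch
full_batches, leftover = divmod(num_train, batch_size)
print(full_batches, leftover)  # 468 96
```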

Latent Code Construction

```python
import random
import numpy as np
from mindspore import Tensor, dtype

# Create a fixed batch of latent codes from a seeded random generator
np.random.seed(2323)
test_noise = Tensor(np.random.normal(size=(25, 100)), dtype.float32)
random.shuffle(test_noise)
```

Model Construction

Generator

```python
from mindspore import nn
import mindspore.ops as ops

img_size = 28  # height (and width) of the training images

class Generator(nn.Cell):
    def __init__(self, latent_size, auto_prefix=True):
        super(Generator, self).__init__(auto_prefix=auto_prefix)
        self.model = nn.SequentialCell()
        # [N, 100] -> [N, 128]
        # The input is a 100-dimensional vector sampled from a standard normal
        # distribution; the first linear layer maps it to 128 dimensions
        self.model.append(nn.Dense(latent_size, 128))
        self.model.append(nn.ReLU())
        # [N, 128] -> [N, 256]
        self.model.append(nn.Dense(128, 256))
        self.model.append(nn.BatchNorm1d(256))
        self.model.append(nn.ReLU())
        # [N, 256] -> [N, 512]
        self.model.append(nn.Dense(256, 512))
        self.model.append(nn.BatchNorm1d(512))
        self.model.append(nn.ReLU())
        # [N, 512] -> [N, 1024]
        self.model.append(nn.Dense(512, 1024))
        self.model.append(nn.BatchNorm1d(1024))
        self.model.append(nn.ReLU())
        # [N, 1024] -> [N, 784]
        # A final linear layer maps the features to 784 dimensions
        self.model.append(nn.Dense(1024, img_size * img_size))
        # Tanh keeps the generated pixel values within [-1, 1]
        self.model.append(nn.Tanh())

    def construct(self, x):
        img = self.model(x)
        return ops.reshape(img, (-1, 1, img_size, img_size))

net_g = Generator(latent_size)
net_g.update_parameters_name('generator')
```

Discriminator

```python
# Discriminator
class Discriminator(nn.Cell):
    def __init__(self, auto_prefix=True):
        super().__init__(auto_prefix=auto_prefix)
        self.model = nn.SequentialCell()
        # [N, 784] -> [N, 512]
        self.model.append(nn.Dense(img_size * img_size, 512))  # 784 input features, 512 output features
        self.model.append(nn.LeakyReLU())  # nonlinear activation with a default negative slope of 0.2
        # [N, 512] -> [N, 256]
        self.model.append(nn.Dense(512, 256))  # linear mapping
        self.model.append(nn.LeakyReLU())
        # [N, 256] -> [N, 1]
        self.model.append(nn.Dense(256, 1))
        self.model.append(nn.Sigmoid())  # binary-classification activation, maps real numbers into [0, 1]

    def construct(self, x):
        x_flat = ops.reshape(x, (-1, img_size * img_size))
        return self.model(x_flat)

net_d = Discriminator()
net_d.update_parameters_name('discriminator')
```

Loss Function and Optimizer
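It may help to see what binary cross-entropy evaluates to in the adversarial setting. The pure-Python sketch below illustrates the formula only and is not MindSpore's implementation; the helper `bce` and the sample probabilities are assumptions. It mirrors how the training code pairs discriminator outputs with targets: real images with 1 and generated images with 0 for the discriminator loss, generated images with 1 for the generator loss.

```python
import math

def bce(probs, targets):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(probs, targets):
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(probs)

# Hypothetical discriminator outputs for two real and two generated images
real_out = [0.9, 0.8]
fake_out = [0.2, 0.1]

loss_d = bce(real_out, [1, 1]) + bce(fake_out, [0, 0])  # discriminator loss
loss_g = bce(fake_out, [1, 1])                           # generator loss

# A discriminator that cannot tell the two apart outputs 0.5 everywhere
undecided = [0.5, 0.5]
loss_d_eq = bce(undecided, [1, 1]) + bce(undecided, [0, 0])
loss_g_eq = bce(undecided, [1, 1])
print(round(loss_d, 4), round(loss_g, 4), round(loss_d_eq, 4), round(loss_g_eq, 4))
```

When the discriminator outputs 0.5 for every input, the generator loss equals ln 2 (about 0.693) and the discriminator loss equals 2 ln 2 (about 1.386), which gives a rough reference point when reading the loss values logged during training.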

```python
lr = 0.0002  # learning rate

# Loss function
adversarial_loss = nn.BCELoss(reduction='mean')

# Optimizers
optimizer_d = nn.Adam(net_d.trainable_params(), learning_rate=lr, beta1=0.5, beta2=0.999)
optimizer_g = nn.Adam(net_g.trainable_params(), learning_rate=lr, beta1=0.5, beta2=0.999)
optimizer_g.update_parameters_name('optim_g')
optimizer_d.update_parameters_name('optim_d')
```

Model Training

```python
import os
import time
import matplotlib.pyplot as plt
import mindspore as ms
from mindspore import Tensor, save_checkpoint

total_epoch = 200  # number of training epochs
batch_size = 128  # batch size of the training set

# Settings for loading pretrained model parameters
pred_trained = False
pred_trained_g = './result/checkpoints/Generator99.ckpt'
pred_trained_d = './result/checkpoints/Discriminator99.ckpt'

checkpoints_path = "./result/checkpoints"  # where checkpoints are saved
image_path = "./result/images"  # where generated test images are saved

# Forward pass that computes the generator loss
def generator_forward(test_noises):
    fake_data = net_g(test_noises)
    fake_out = net_d(fake_data)
    loss_g = adversarial_loss(fake_out, ops.ones_like(fake_out))
    return loss_g

# Forward pass that computes the discriminator loss
def discriminator_forward(real_data, test_noises):
    fake_data = net_g(test_noises)
    fake_out = net_d(fake_data)
    real_out = net_d(real_data)
    real_loss = adversarial_loss(real_out, ops.ones_like(real_out))
    fake_loss = adversarial_loss(fake_out, ops.zeros_like(fake_out))
    loss_d = real_loss + fake_loss
    return loss_d

# Gradient functions
grad_g = ms.value_and_grad(generator_forward, None, net_g.trainable_params())
grad_d = ms.value_and_grad(discriminator_forward, None, net_d.trainable_params())

def train_step(real_data, latent_code):
    # Compute the discriminator loss and gradients, then update the discriminator
    loss_d, grads_d = grad_d(real_data, latent_code)
    optimizer_d(grads_d)
    # Compute the generator loss and gradients, then update the generator
    loss_g, grads_g = grad_g(latent_code)
    optimizer_g(grads_g)

    return loss_d, loss_g

# Save the images generated from the test latent codes
def save_imgs(gen_imgs1, idx):
    for i3 in range(gen_imgs1.shape[0]):
        plt.subplot(5, 5, i3 + 1)
        plt.imshow(gen_imgs1[i3, 0, :, :] / 2 + 0.5, cmap="gray")
        plt.axis("off")
    plt.savefig(image_path + "/test_{}.png".format(idx))

# Create the directory for checkpoints
os.makedirs(checkpoints_path, exist_ok=True)
# Create the directory for images generated during training
os.makedirs(image_path, exist_ok=True)

net_g.set_train()
net_d.set_train()

# Generator and discriminator losses, one value per epoch
losses_g, losses_d = [], []

for epoch in range(total_epoch):
    start = time.time()
    for (step, data) in enumerate(mnist_ds):
        start1 = time.time()
        image, latent_code = data
        image = (image - 127.5) / 127.5  # [0, 255] -> [-1, 1]
        image = image.reshape(image.shape[0], 1, image.shape[1], image.shape[2])
        d_loss, g_loss = train_step(image, latent_code)
        end1 = time.time()
        if step % 10 == 0:
            print(f"Epoch:[{int(epoch):>3d}/{int(total_epoch):>3d}], "
                  f"step:[{int(step):>4d}/{int(iter_size):>4d}], "
                  f"loss_d:{d_loss.asnumpy():>4f} , "
                  f"loss_g:{g_loss.asnumpy():>4f} , "
                  f"time:{(end1 - start1):>3f}s, "
                  f"lr:{lr:>6f}")

    end = time.time()
    print("time of epoch {} is {:.2f}s".format(epoch + 1, end - start))

    losses_d.append(d_loss.asnumpy())
    losses_g.append(g_loss.asnumpy())

    # At the end of each epoch, generate a batch of images with the generator
    gen_imgs = net_g(test_noise)
    save_imgs(gen_imgs.asnumpy(), epoch)

    # Save the model weights (here after every epoch)
    if epoch % 1 == 0:
        save_checkpoint(net_g, checkpoints_path + "/Generator%d.ckpt" % (epoch))
        save_checkpoint(net_d, checkpoints_path + "/Discriminator%d.ckpt" % (epoch))
```

Training Process

.........time of epoch 1 is 108.85s

time of epoch 2 is 7.42s

time of epoch 3 is 7.23s

time of epoch 4 is 7.34s

time of epoch 5 is 7.63s

time of epoch 6 is 7.31s

time of epoch 7 is 7.75s

time of epoch 8 is 7.15s

time of epoch 9 is 7.41s

time of epoch 10 is 7.41s

time of epoch 11 is 7.67s

time of epoch 12 is 7.28s

CPU times: user 5min 52s, sys: 43 s, total: 6min 35s

Wall time: 3min 19s

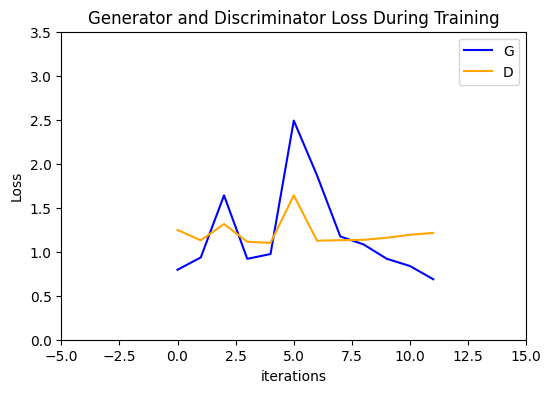
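The training loop rescales pixel values with `(image - 127.5) / 127.5` so that real images share the [-1, 1] range of the generator's Tanh output, and `save_imgs` maps generated values back to [0, 1] with `x / 2 + 0.5` for display. A minimal sketch of the two mappings, using hypothetical helper names:

```python
def to_model_range(pixel):
    """Map a raw pixel value in [0, 255] to [-1, 1], as in the training loop."""
    return (pixel - 127.5) / 127.5

def to_display_range(value):
    """Map a generator output in [-1, 1] to [0, 1], as in save_imgs."""
    return value / 2 + 0.5

# Check the two endpoints and the midpoint of the pixel range
results = []
for pixel in (0, 127.5, 255):
    x = to_model_range(pixel)
    results.append((pixel, x, to_display_range(x)))
    print(pixel, x, to_display_range(x))
```

Black (0) maps to -1 and back to 0, mid-gray (127.5) maps to 0 and back to 0.5, and white (255) maps to 1 and back to 1.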

效果展示

python

plt.figure(figsize=(6, 4))

plt.title("Generator and Discriminator Loss During Training")

plt.plot(losses_g, label="G", color='blue')

plt.plot(losses_d, label="D", color='orange')

plt.xlim(-20, 220)

plt.ylim(0, 3.5)

plt.xlabel("iterations")

plt.ylabel("Loss")

plt.legend()

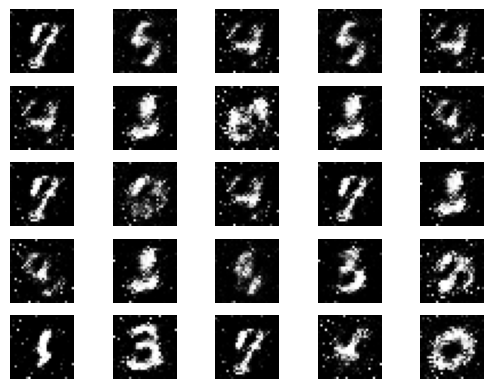

plt.show()可视化训练过程中的图像

```python
import cv2
import matplotlib.animation as animation

# Turn the test images saved during training into an animated GIF
image_list = []
for i in range(total_epoch):
    image_list.append(cv2.imread(image_path + "/test_{}.png".format(i), cv2.IMREAD_GRAYSCALE))
show_list = []
fig = plt.figure(dpi=70)
# Use every fifth epoch as one frame
for epoch in range(0, len(image_list), 5):
    plt.axis("off")
    show_list.append([plt.imshow(image_list[epoch], cmap='gray')])

ani = animation.ArtistAnimation(fig, show_list, interval=1000, repeat_delay=1000, blit=True)
ani.save('train_test.gif', writer='pillow', fps=1)
```

Model Inference

```python
import mindspore as ms

# Load the generator weights saved after the last epoch
test_ckpt = './result/checkpoints/Generator199.ckpt'
parameter = ms.load_checkpoint(test_ckpt)
ms.load_param_into_net(net_g, parameter)

# Generate images from freshly sampled latent codes
test_data = Tensor(np.random.normal(0, 1, (25, 100)).astype(np.float32))
images = net_g(test_data).transpose(0, 2, 3, 1).asnumpy()

# Display the results in a 5x5 grid
fig = plt.figure(figsize=(3, 3), dpi=120)
for i in range(25):
    fig.add_subplot(5, 5, i + 1)
    plt.axis("off")
    plt.imshow(images[i].squeeze(), cmap="gray")
plt.show()
```