I. Introduction

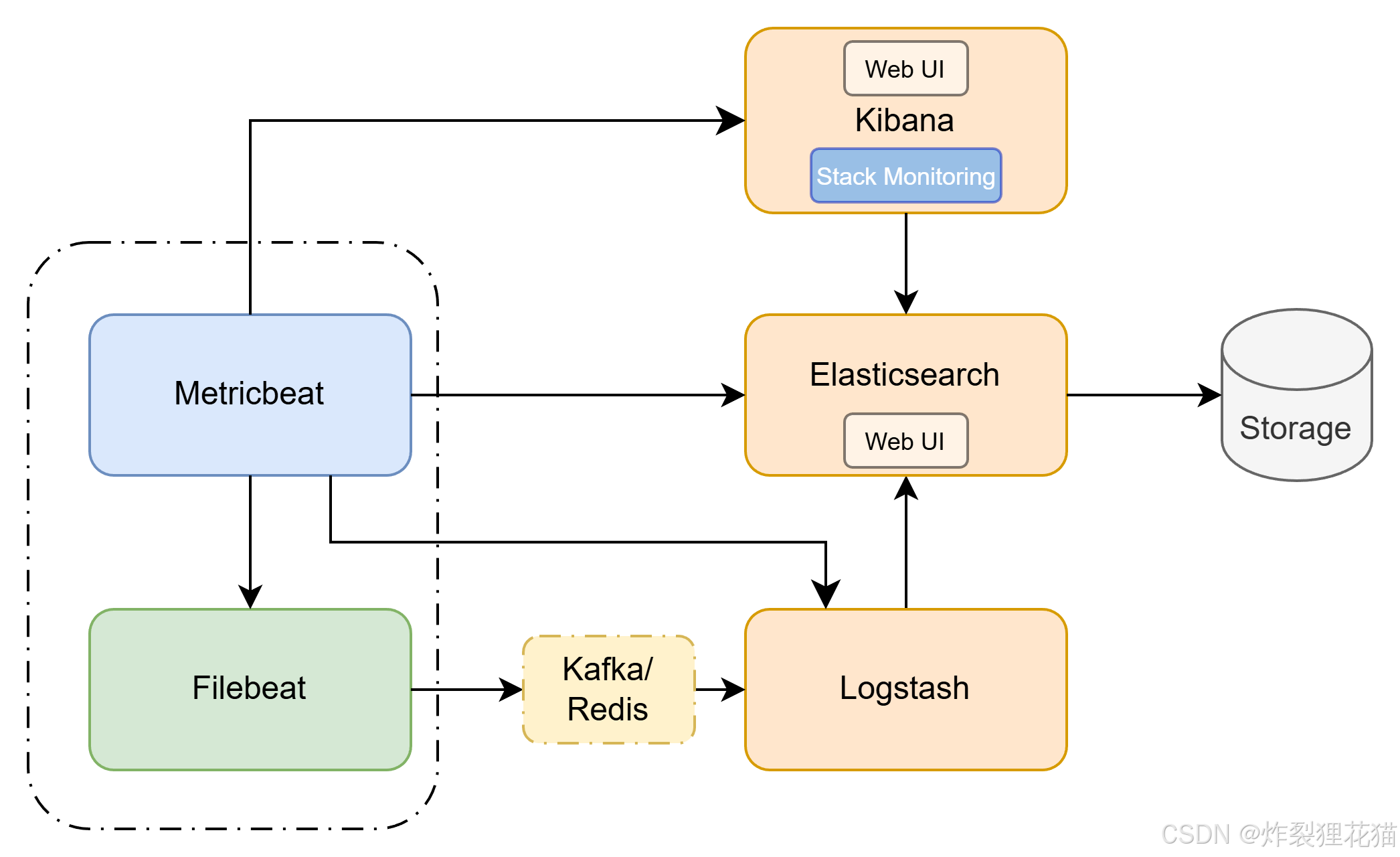

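In modern enterprise IT systems, applications keep growing and architectures keep evolving, so the dependencies between systems become increasingly complex. To keep systems available, performant and stable, a solid log collection and analysis platform has become essential.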

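ELK (Elasticsearch, Logstash, Kibana) is one of the most widely adopted open-source logging solutions. Combined with Beats components such as Filebeat, Heartbeat and Metricbeat, it collects, processes and visualizes data from many sources (application logs, system metrics, availability probes) in one place. This helps operations and development teams locate problems quickly, monitor system health and respond to incidents faster.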

II. Components

A complete log collection system usually consists of the following core components:

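1. Elasticsearch (ES)

Elasticsearch is a distributed search and analytics engine that stores and queries the log data. Its core features include:

- Strong horizontal scalability for very large data volumes;
- Efficient full-text search and aggregations;
- High availability through multi-node clusters.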

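2. Logstash

Logstash is a powerful data-processing pipeline responsible for parsing, filtering and transforming logs. Its characteristics include:

- A plugin architecture covering the input, filter and output stages;
- Filters such as grok, geoip and mutate;
- A good fit for scenarios that need complex ETL or handle logs in several formats.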

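3. Kibana

Kibana is the visualization and management interface for exploring and analyzing the data stored in Elasticsearch. Its features include:

- Building dashboards;
- Searching logs;
- Monitoring the stack;
- Managing indices and editing visualizations.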

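4. Beats (Filebeat / Metricbeat, etc.)

Beats are lightweight data shippers. The main ones are:

- Filebeat: collects log files;
- Metricbeat: collects system and application metrics;
- Heartbeat: collects availability data (uptime probes);
- Auditbeat: collects audit data.

Beats are usually deployed on the application servers and forward the raw data they collect to Logstash or Elasticsearch.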

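5. Kafka (optional)

In enterprise architectures, Kafka is often placed in the pipeline as a buffer that decouples log producers from consumers, improving reliability and throughput.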

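III. Deployment and Configuration

This section uses a common architecture as an example and walks through deployment and configuration in a Kubernetes environment.

Assume we have a frontend service and a backend service that write two different log formats, shown below. Every entry starts with eight exclamation marks (`!!!!!!!!`): Filebeat's multiline settings use this prefix to detect where a new event begins, and the Logstash grok patterns anchor on it.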

Frontend service `iwt-demo-site` log:

```text
!!!!!!!! ::: 10.3.3.220 ::: - ::: - ::: [14/Nov/2025:09:21:17 +0000] ::: "GET /assets/intro.8e730512.png HTTP/1.1" ::: 200 ::: 6320762 ::: "https://www.demo.com/assets/index.4fb9a4ac.css" ::: "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/142.0.0.0 Safari/537.36" ::: "10.3.2.154"
```

Backend service `iwt-demo-service` log:

```text
!!!!!!!! 2025:12:12 14:49:44.512 | IcerCarbonRegistryService | [main] | INFO | c.a.n.client.config.impl.CacheData | [fixed-project-demo-10.3.3.23_8848] [add-listener] ok, tenant=project-demo, dataId=project-demo.yaml, group=DEFAULT_GROUP, cnt=1
```

1. Elasticsearch

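Using a single-node Elasticsearch as an example, create an es.yaml file with the following content and apply it to Kubernetes (for example with `kubectl apply -f es.yaml`). All manifests in this article assume the `log` namespace already exists; `kubectl create namespace log` creates it.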

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: iwt-ctx-elk-es-config
  namespace: log
data:
  elasticsearch.yml: |-
    cluster.name: "docker-cluster"
    network.host: 0.0.0.0
    xpack.security.enabled: true
    xpack.security.http.ssl.enabled: false
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: iwt-ctx-elk-es
  name: iwt-ctx-elk-es
  namespace: log
spec:
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: iwt-ctx-elk-es
  strategy:
    type: Recreate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: iwt-ctx-elk-es
    spec:
      affinity:
        nodeAffinity: {}
      containers:
      - env:
        - name: discovery.type
          value: single-node
        - name: ES_JAVA_OPTS
          # Elastic recommends equal -Xms/-Xmx, at most half of the memory available to the container
          value: -Xms8g -Xmx8g
        - name: MINIMUM_MASTER_NODES
          value: "1"
        image: elastic/elasticsearch:8.19.8
        imagePullPolicy: IfNotPresent
        name: iwt-ctx-elk-es
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        resources:
          limits:
            cpu: 4000m
            memory: 16384Mi
          requests:
            cpu: 1000m
            memory: 4096Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: data
        - name: iwt-ctx-elk-es-config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          subPath: elasticsearch.yml
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: oci-container-registry
      initContainers:
      # Elasticsearch requires vm.max_map_count >= 262144 on the host
      - command:
        - /sbin/sysctl
        - -w
        - vm.max_map_count=262144
        image: alpine:3.6
        imagePullPolicy: IfNotPresent
        name: iwt-ctx-elk-es-init
        securityContext:
          privileged: true
          procMount: Default
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      # The host directory must be writable by the elasticsearch user (UID 1000) inside the container
      - hostPath:
          path: /data/elk/elasticsearch/data
          type: ""
        name: data
      - name: iwt-ctx-elk-es-config
        configMap:
          name: iwt-ctx-elk-es-config
---
apiVersion: v1
kind: Service
metadata:
  namespace: log
  name: iwt-ctx-elk-es
  labels:
    app: iwt-ctx-elk-es
spec:
  ports:
  - port: 9200
    targetPort: 9200
    name: db
  - port: 9300
    targetPort: 9300
    name: transport
  selector:
    app: iwt-ctx-elk-es
  type: NodePort
```

Initialize the passwords of the built-in users:

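```shell
# deploy/<name> lets kubectl pick the Elasticsearch pod, so you don't need the generated pod name
$ kubectl -n log exec -it deploy/iwt-ctx-elk-es -- /bin/sh
$ cd bin
$ ./elasticsearch-setup-passwords interactive
```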

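In this example we follow the script's prompts and set the passwords of all built-in users to admin123 to keep the configuration demo simple; use strong passwords in production. Note that `elasticsearch-setup-passwords` is deprecated in 8.x; its replacement, `elasticsearch-reset-password`, resets one user at a time (for example `bin/elasticsearch-reset-password -u kibana_system -i`).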
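Once the passwords are set, one way to confirm that security is active is to call the cluster health API with HTTP basic authentication. The following is a minimal Python sketch using only the standard library; the base URL (the `iwt-ctx-elk-es` Service name from the manifest above, reachable only from inside the cluster) and the demo credentials are assumptions to adapt.

```python
import base64
import json
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Build the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def cluster_health(base_url: str, user: str, password: str, timeout: float = 10.0) -> dict:
    """GET <base_url>/_cluster/health and return the decoded JSON body.

    urllib raises urllib.error.HTTPError (for example 401) when the
    credentials are rejected, which also confirms that security is on.
    """
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/_cluster/health",
        headers={"Authorization": basic_auth_header(user, password)},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

For example, from a pod inside the cluster, `cluster_health("http://iwt-ctx-elk-es:9200", "elastic", "admin123")["status"]` reports the cluster status (`green`, `yellow` or `red`); from outside, use the NodePort or Ingress address instead.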

2. Kibana
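The Kibana ConfigMap that follows expects you to replace `xpack.encryptedSavedObjects.encryptionKey` with your own value, and Kibana requires that key to be at least 32 characters long. Kibana should ship a `bin/kibana-encryption-keys generate` helper for this; as an alternative, here is a minimal Python sketch that produces a random hex key with the standard `secrets` module (the function names are illustrative):

```python
import secrets

MIN_KEY_LENGTH = 32  # Kibana refuses encryption keys shorter than 32 characters


def generate_encryption_key(num_bytes: int = 32) -> str:
    """Return a random hex string (2 characters per byte) for use as an encryption key."""
    key = secrets.token_hex(num_bytes)
    if len(key) < MIN_KEY_LENGTH:
        raise ValueError(f"key too short: {len(key)} < {MIN_KEY_LENGTH}")
    return key


def is_valid_key(key: str) -> bool:
    """Check only the length requirement; Kibana does not require hex characters."""
    return len(key) >= MIN_KEY_LENGTH
```

Paste the printed value of `generate_encryption_key()` into `kibana.yml`, and keep it stable across restarts, since objects encrypted with one key cannot be decrypted with another.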

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  namespace: log
data:
  kibana.yml: |
    server.name: iwt-ctx-elk-kibana
    i18n.locale: "en"
    server.host: "0.0.0.0"
    # Replace encryptionKey with your own value (at least 32 characters)
    xpack.encryptedSavedObjects.encryptionKey: "b8f3e1a2d4c9b6f1a7d3e2c8f0b1a5d7"
    elasticsearch.hosts: [ "http://iwt-ctx-elk-es:9200" ]
    elasticsearch.username: "kibana_system"
    elasticsearch.password: "admin123"
    server.publicBaseUrl: "https://kibana.iwt.devops.com"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iwt-ctx-elk-kibana
  namespace: log
  labels:
    app: iwt-ctx-elk-kibana
spec:
  replicas: 1
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
  selector:
    matchLabels:
      app: iwt-ctx-elk-kibana
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  template:
    metadata:
      labels:
        app: iwt-ctx-elk-kibana
      name: iwt-ctx-elk-kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:8.19.8
        volumeMounts:
        - name: kibana-config-volume
          mountPath: /usr/share/kibana/config/kibana.yml
          subPath: kibana.yml
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "/usr/share/kibana/bin/kibana"]
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        - name: ELASTICSEARCH_URL
          value: http://iwt-ctx-elk-es:9200
        - name: ELASTICSEARCH_HOSTS
          value: http://iwt-ctx-elk-es:9200
        - name: NODE_OPTIONS
          value: --max-old-space-size=6144
        resources:
          requests:
            cpu: "1"
            memory: 2Gi
          limits:
            cpu: "4"
            memory: 8Gi
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      imagePullSecrets:
      - name: oci-container-registry
      dnsPolicy: ClusterFirst
      schedulerName: default-scheduler
      volumes:
      - name: kibana-config-volume
        configMap:
          name: kibana-config
---
apiVersion: v1
kind: Service
metadata:
  name: iwt-ctx-elk-kibana
  namespace: log
  labels:
    app: iwt-ctx-elk-kibana
spec:
  ports:
  - name: http
    port: 5601
    protocol: TCP
    targetPort: 5601
  selector:
    app: iwt-ctx-elk-kibana
  type: NodePort
```

3. Logstash

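Logstash receives the log events sent by Filebeat, processes them according to a set of rules (in this example, frontend and backend logs go through different filters) and then writes them to Elasticsearch.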
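Grok patterns are easy to get subtly wrong, so it can pay to check them against the sample lines before deploying. The sketch below translates the two grok expressions from `change.conf` (in the ConfigMap that follows) into Python regular expressions and mimics the `[logtype]` routing. It is an approximation, not Logstash itself: `%{DATA}`, `%{WORD}` and `%{GREEDYDATA}` are expanded as in the standard grok pattern set (`.*?`, `\b\w+\b`, `.*`), while `%{IP}`, `%{HTTPDATE}` and `%{NUMBER}` are replaced by simplified regexes. For authoritative results, Kibana's Grok Debugger (Dev Tools) runs the real grok engine.

```python
import re
from typing import Dict, Optional

# Backend (-service) pattern from change.conf, with grok macros expanded:
# %{DATA} -> .*?   %{WORD} -> \b\w+\b   %{GREEDYDATA} -> .*
BACKEND = re.compile(
    r"^!{8}\s+(?P<timestamp>\d{4}[:\-]\d{2}[:\-]\d{2} \d{2}:\d{2}:\d{2}(?:[.,]\d{3})?)"
    r"\s*\|\s*(?P<contextName>.*?)\s*\|\s*(?P<thread>.*?)\s*\|"
    r"\s*(?:\[(?P<traceId>[^\]]+)\]\s*\|)?"
    r"\s*(?P<level>\b\w+\b)\s*\|\s*(?P<logger>.*?)\s*\|\s*(?P<msg>.*)$"
)

# Frontend (-site) pattern. Simplified stand-ins, NOT the real grok definitions:
# %{IP} -> IPv4 only, %{HTTPDATE} -> dd/Mon/yyyy:HH:mm:ss +zzzz, %{NUMBER} -> unsigned int/decimal
FRONTEND = re.compile(
    r'^!{8} ::: (?P<remote_addr>\d{1,3}(?:\.\d{1,3}){3}) ::: (?P<ident>.*?) ::: (?P<remote_user>.*?) ::: '
    r'\[(?P<time_local>\d{2}/[A-Za-z]{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4})\] ::: '
    r'"(?P<method>\b\w+\b) (?P<request>.*?) HTTP/(?P<http_version>\d+(?:\.\d+)?)" ::: '
    r'(?P<status>\d+(?:\.\d+)?) ::: (?P<body_bytes_sent>\d+(?:\.\d+)?) ::: '
    r'"(?P<http_referer>.*?)" ::: "(?P<http_user_agent>.*?)" ::: "(?P<http_x_forwarded_for>.*?)"$'
)


def parse_event(logtype: str, message: str) -> Optional[Dict[str, Optional[str]]]:
    """Mimic the two `if [logtype] =~ ...` branches of the filter section.

    Returns the extracted fields, or None when no branch applies or the
    pattern does not match (Logstash would tag the latter _grokparsefailure).
    """
    if logtype.endswith("-service"):  # equivalent to =~ /-service$/
        pattern = BACKEND
    elif logtype.endswith("-site"):  # equivalent to =~ /-site$/
        pattern = FRONTEND
    else:
        return None
    match = pattern.match(message)
    return match.groupdict() if match else None
```

Calling `parse_event("iwt-demo-service", line)` on the backend sample line should yield `level == "INFO"` and `thread == "[main]"`, with `traceId` left empty because that optional `[...] |` segment is absent.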

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: iwt-ctx-elk-logstash-config
  namespace: log
data:
  logstash.yml: |-
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/conf.d/*.conf
  change.conf: |-
    input {
      beats {
        port => 5044
      }
    }
    filter {
      # Backend logs
      if [logtype] =~ /-service$/ {
        grok {
          match => {"message" => "^!{8}\s+(?<timestamp>\d{4}[:\-]\d{2}[:\-]\d{2} \d{2}:\d{2}:\d{2}(?:[.,]\d{3})?)\s*\|\s*%{DATA:contextName}\s*\|\s*%{DATA:thread}\s*\|\s*(?:\[(?<traceId>[^\]]+)\]\s*\|)?\s*%{WORD:level}\s*\|\s*%{DATA:logger}\s*\|\s*%{GREEDYDATA:msg}$"}
        }
        mutate {
          remove_field => ["agent","ecs","host","log","input"]
        }
        date {
          match => ["timestamp", "yyyy:MM:dd HH:mm:ss.SSS", "yyyy-MM-dd HH:mm:ss.SSS"]
          target => "@timestamp"
          timezone => "Asia/Shanghai"
        }
      }
      # Frontend logs
      if [logtype] =~ /-site$/ {
        grok {
          match => { "message" => '^!{8} ::: %{IP:remote_addr} ::: %{DATA:ident} ::: %{DATA:remote_user} ::: \[%{HTTPDATE:time_local}\] ::: "%{WORD:method} %{DATA:request} HTTP/%{NUMBER:http_version}" ::: %{NUMBER:status} ::: %{NUMBER:body_bytes_sent} ::: "%{DATA:http_referer}" ::: "%{DATA:http_user_agent}" ::: "%{DATA:http_x_forwarded_for}"$' }
        }
        mutate {
          remove_field => ["agent", "ecs", "host", "log", "input"]
        }
        date {
          match => ["time_local", "dd/MMM/yyyy:HH:mm:ss Z"]
          target => "@timestamp"
        }
      }
    }
    # Output to Elasticsearch
    output {
      elasticsearch {
        hosts => ["iwt-ctx-elk-es:9200"]
        index => "iwt-%{[logtype]}-%{+YYYY-MM}"
        user => "elastic"
        password => "admin123"
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iwt-ctx-elk-logstash
  namespace: log
  labels:
    app: iwt-ctx-elk-logstash
spec:
  replicas: 1
  selector:
    matchLabels:
      app: iwt-ctx-elk-logstash
  template:
    metadata:
      labels:
        app: iwt-ctx-elk-logstash
      name: iwt-ctx-elk-logstash
    spec:
      imagePullSecrets:
      - name: oci-container-registry
      containers:
      - name: iwt-ctx-elk-logstash
        image: docker.elastic.co/logstash/logstash:8.19.8
        ports:
        - containerPort: 5044
          protocol: TCP
          name: transfer
        - containerPort: 9600
          protocol: TCP
          name: config
        resources:
          limits:
            cpu: 2000m
            memory: 16Gi
          requests:
            cpu: 418m
            memory: 1Gi
        volumeMounts:
        - name: iwt-ctx-elk-logstash-config
          mountPath: /usr/share/logstash/conf.d/change.conf
          subPath: change.conf
        - name: iwt-ctx-elk-logstash-config
          mountPath: /usr/share/logstash/config/logstash.yml
          subPath: logstash.yml
      volumes:
      - name: iwt-ctx-elk-logstash-config
        configMap:
          name: iwt-ctx-elk-logstash-config
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: iwt-ctx-elk-logstash
  name: iwt-ctx-elk-logstash
  namespace: log
spec:
  ports:
  - name: transfer
    port: 5044
    protocol: TCP
    targetPort: 5044
  - name: http
    port: 9600
    protocol: TCP
    targetPort: 9600
  selector:
    app: iwt-ctx-elk-logstash
  type: NodePort
```

4. Filebeat

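Filebeat collects the raw logs and ships them to Logstash. Each input adds a `logtype` field so that Logstash can route events to the right filter. Note that Filebeat's HTTP endpoint (port 5066) must be enabled so that Metricbeat can collect Filebeat's monitoring data. Because the Deployment below reads `/data/logs` through a hostPath volume, it only sees the logs on the node where its pod is scheduled; to collect logs from every node, Filebeat is usually run as a DaemonSet instead.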
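The `multiline` settings in the inputs ConfigMap that follows (`pattern: '^!{8}'`, `negate: true`, `match: after`) mean that any line not starting with eight `!` characters is appended to the preceding line that does, so a multi-line Java stack trace stays in a single event. The Python sketch below approximates that grouping rule purely for illustration; it is not how Filebeat is implemented and it ignores Filebeat's `max_lines` and `timeout` limits.

```python
import re
from typing import Iterable, Iterator, List

EVENT_START = re.compile(r"^!{8}")  # same regex as multiline.pattern


def group_events(lines: Iterable[str]) -> Iterator[str]:
    """Approximate multiline with negate: true, match: after."""
    buffer: List[str] = []
    for line in lines:
        if EVENT_START.match(line) and buffer:
            # A line carrying the marker starts a new event: flush the previous one.
            yield "\n".join(buffer)
            buffer = []
        # Marker lines open a new buffer; continuation lines join the current one.
        buffer.append(line)
    if buffer:
        yield "\n".join(buffer)
```

Feeding it a log line, two stack-trace lines and another log line yields two events, the first of which contains all three lines of the exception.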

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: iwt-ctx-elk-filebeat-config
  namespace: log
  labels:
    app: iwt-ctx-elk-filebeat
data:
  filebeat.yml: |-
    http:
      enabled: true
      host: 0.0.0.0
      port: 5066
    filebeat.config:
      inputs:
        # Mounted `iwt-ctx-elk-filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: true
        reload.period: 60s
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false
    # Ship events to Logstash for processing
    output.logstash:
      hosts: ["iwt-ctx-elk-logstash:5044"]
      bulk_max_size: 4096
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: iwt-ctx-elk-filebeat-inputs
  namespace: log
  labels:
    app: iwt-ctx-elk-filebeat
data:
  filebeat-inputs.yml: |-
    - type: log
      enabled: true
      backoff: "1s"
      tail_files: true
      multiline:
        pattern: '^!{8}'
        negate: true
        match: after
      paths:
        - /data/logs/iwt-demo-site/access.log
      fields:
        logtype: iwt-demo-site
      fields_under_root: true
    - type: log
      enabled: true
      backoff: "1s"
      tail_files: true
      multiline:
        pattern: '^!{8}'
        negate: true
        match: after
      paths:
        - /data/logs/iwt-demo-service/*.log
      fields:
        logtype: iwt-demo-service
      fields_under_root: true
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iwt-ctx-elk-filebeat
  namespace: log
  labels:
    app: iwt-ctx-elk-filebeat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: iwt-ctx-elk-filebeat
  template:
    metadata:
      labels:
        app: iwt-ctx-elk-filebeat
    spec:
      containers:
      - name: iwt-ctx-elk-filebeat
        image: docker.elastic.co/beats/filebeat:8.19.8
        ports:
        - containerPort: 5066
          name: http-metrics
          protocol: TCP
        args: ["-c", "/etc/filebeat.yml", "-e"]
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            cpu: 2000m
            memory: 8Gi
          requests:
            cpu: 512m
            memory: 1Gi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: app-logs
          mountPath: /data/logs
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: iwt-ctx-elk-filebeat-config
      - name: app-logs
        hostPath:
          path: /data/logs
      - name: inputs
        configMap:
          defaultMode: 0600
          name: iwt-ctx-elk-filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /data/elk/filebeat
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: iwt-ctx-elk-filebeat
  name: iwt-ctx-elk-filebeat
  namespace: log
spec:
  ports:
  - name: transfer
    port: 5066
    protocol: TCP
    targetPort: 5066
  selector:
    app: iwt-ctx-elk-filebeat
  type: NodePort
```

5. Metricbeat

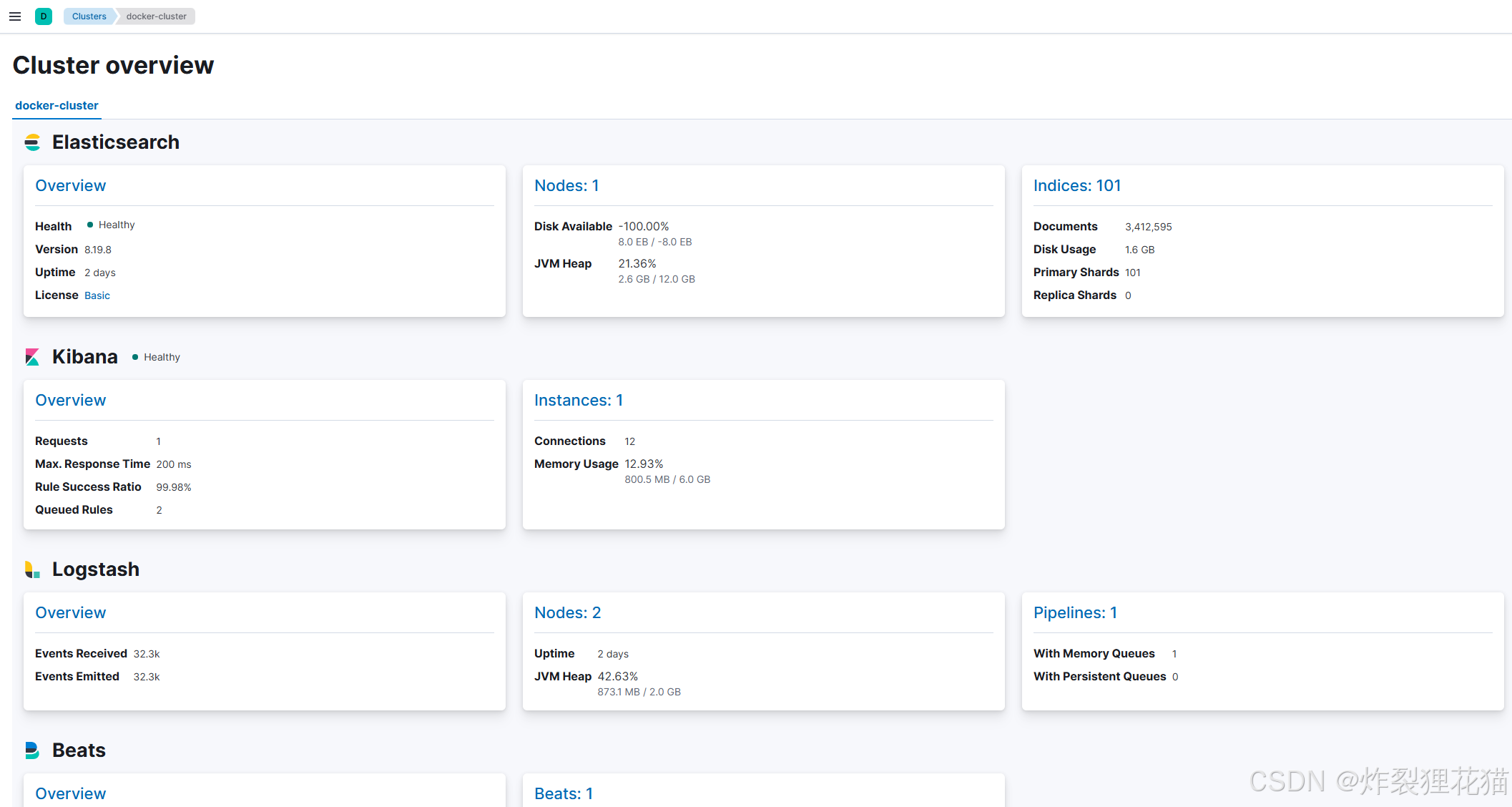

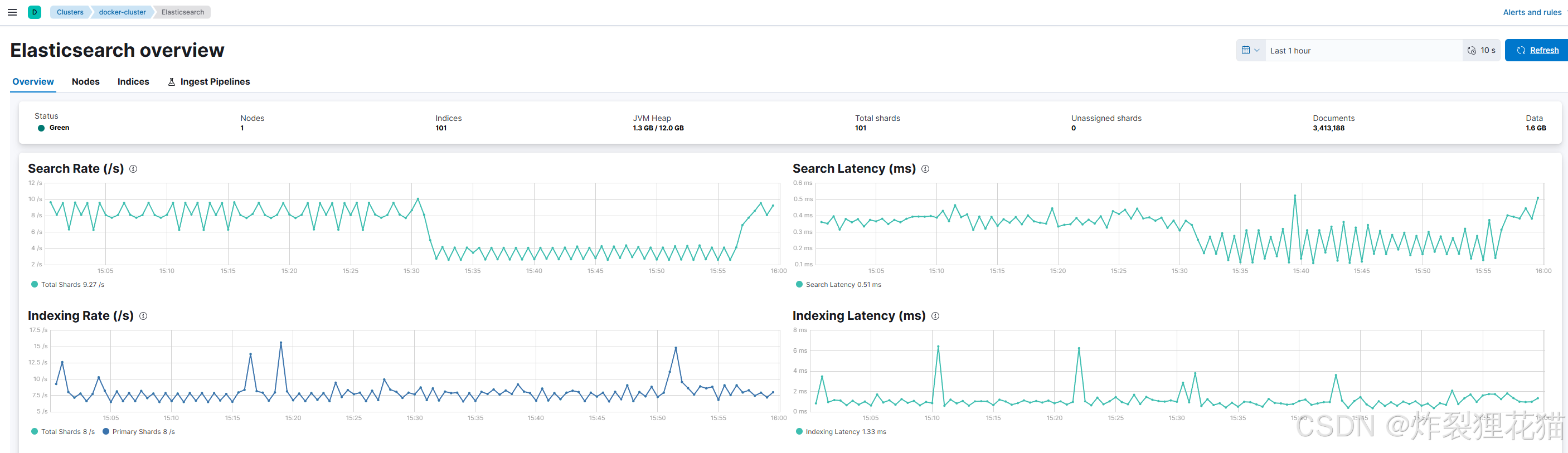

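In this example, Metricbeat collects monitoring data from the components above and sends it to Elasticsearch, and Kibana's Stack Monitoring app builds the monitoring dashboards from it automatically.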

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: metricbeat-elasticsearch
  namespace: log
data:
  # Main configuration file
  metricbeat.yml: |-
    metricbeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false
    queue.mem:
      events: 4096
    setup.kibana:
      host: "http://iwt-ctx-elk-kibana:5601"
      username: "elastic"
      password: "admin123"
    output.elasticsearch:
      hosts: ["http://iwt-ctx-elk-es:9200"]
      username: "elastic"
      password: "admin123"
      bulk_max_size: 2048
    monitoring:
      enabled: true
      elasticsearch:
        hosts: ["http://iwt-ctx-elk-es:9200"]
        username: "elastic"
        password: "admin123"
        pipeline: "xpack-monitoring-8"
  # Elasticsearch module
  elasticsearch.yml: |-
    - module: elasticsearch
      xpack.enabled: true
      period: 30s
      hosts: ["http://iwt-ctx-elk-es:9200"]
      username: "elastic"
      password: "admin123"
      cluster_stats: true
      node: true
      enabled: true
  # Kibana module
  kibana.yml: |-
    - module: kibana
      xpack.enabled: true
      period: 30s
      hosts: ["http://iwt-ctx-elk-kibana:5601"]
      username: "elastic"
      password: "admin123"
      enabled: true
  # Logstash module
  logstash.yml: |-
    - module: logstash
      xpack.enabled: true
      period: 30s
      hosts: ["http://iwt-ctx-elk-logstash:9600"]
      username: "elastic"
      password: "admin123"
      enabled: true
  # Filebeat (beat module)
  beat.yml: |-
    - module: beat
      xpack.enabled: true
      period: 30s
      hosts: ["http://iwt-ctx-elk-filebeat:5066"]
      username: "elastic"
      password: "admin123"
      enabled: true
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metricbeat-es-monitor
  namespace: log
  labels:
    app: metricbeat-es
spec:
  replicas: 1
  selector:
    matchLabels:
      app: metricbeat-es
  template:
    metadata:
      labels:
        app: metricbeat-es
    spec:
      containers:
      - name: metricbeat
        # Same version as the rest of the stack
        image: docker.elastic.co/beats/metricbeat:8.19.8
        args: ["-e"]
        volumeMounts:
        - name: config
          mountPath: /usr/share/metricbeat/metricbeat.yml
          subPath: metricbeat.yml
        - name: modules
          mountPath: /usr/share/metricbeat/modules.d
      volumes:
      - name: config
        configMap:
          name: metricbeat-elasticsearch
          items:
          - key: metricbeat.yml
            path: metricbeat.yml
      - name: modules
        configMap:
          name: metricbeat-elasticsearch
          items:
          - key: elasticsearch.yml
            path: elasticsearch.yml
          - key: kibana.yml
            path: kibana.yml
          - key: logstash.yml
            path: logstash.yml
          - key: beat.yml
            path: beat.yml
```

IV. Web UI

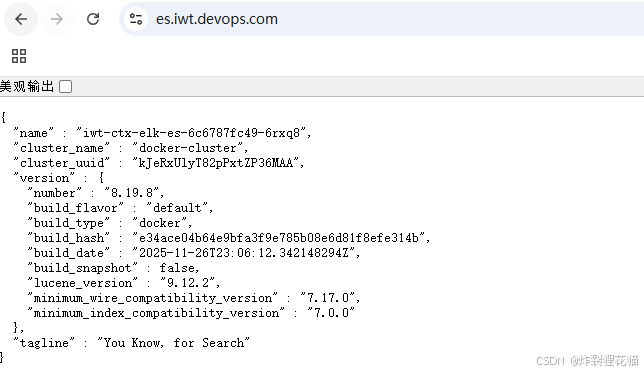

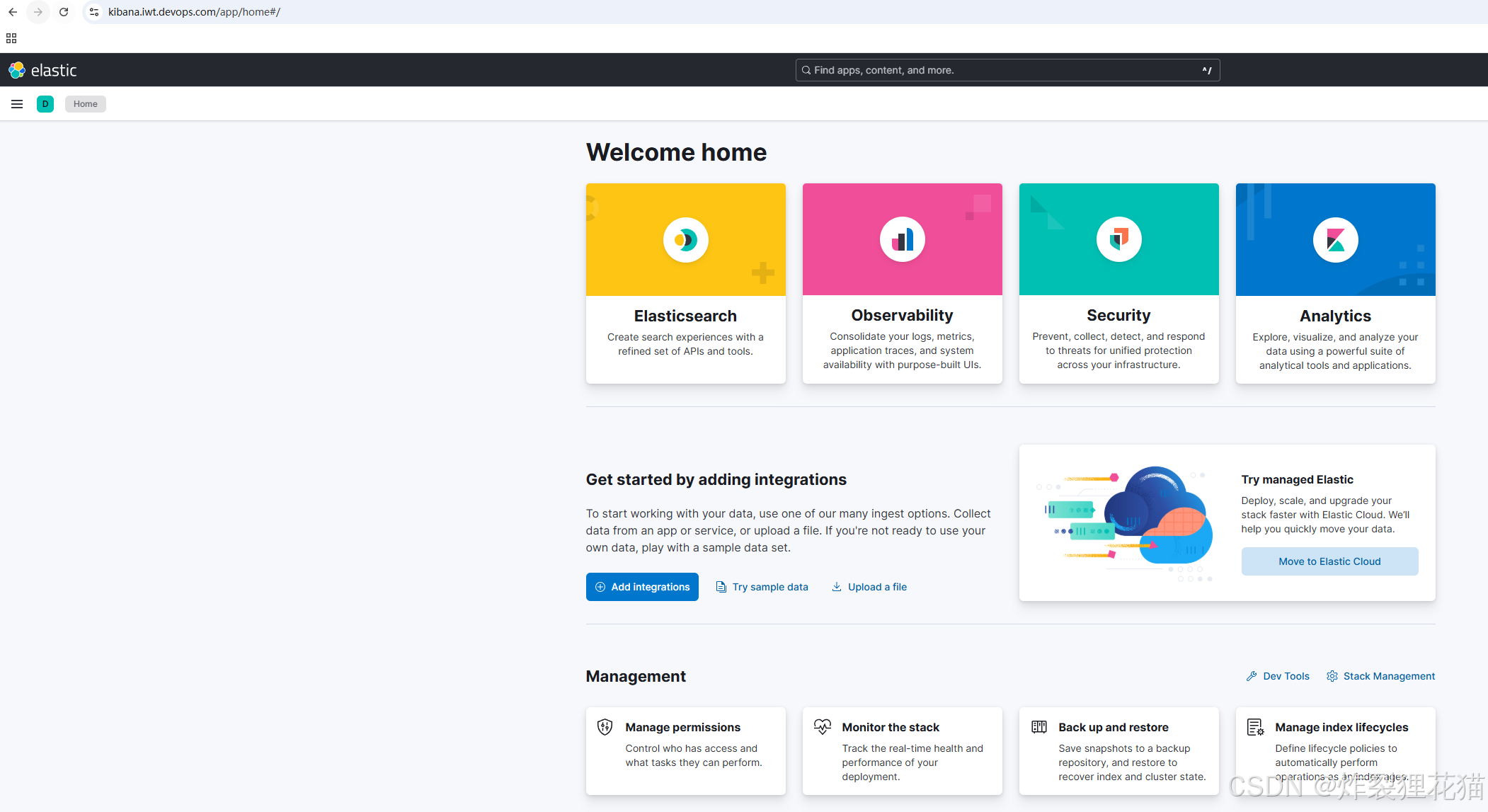

Expose the Elasticsearch and Kibana Services under domain names with an Ingress (or a similar component) so they can be reached from a browser.

1. Elasticsearch

2. Kibana

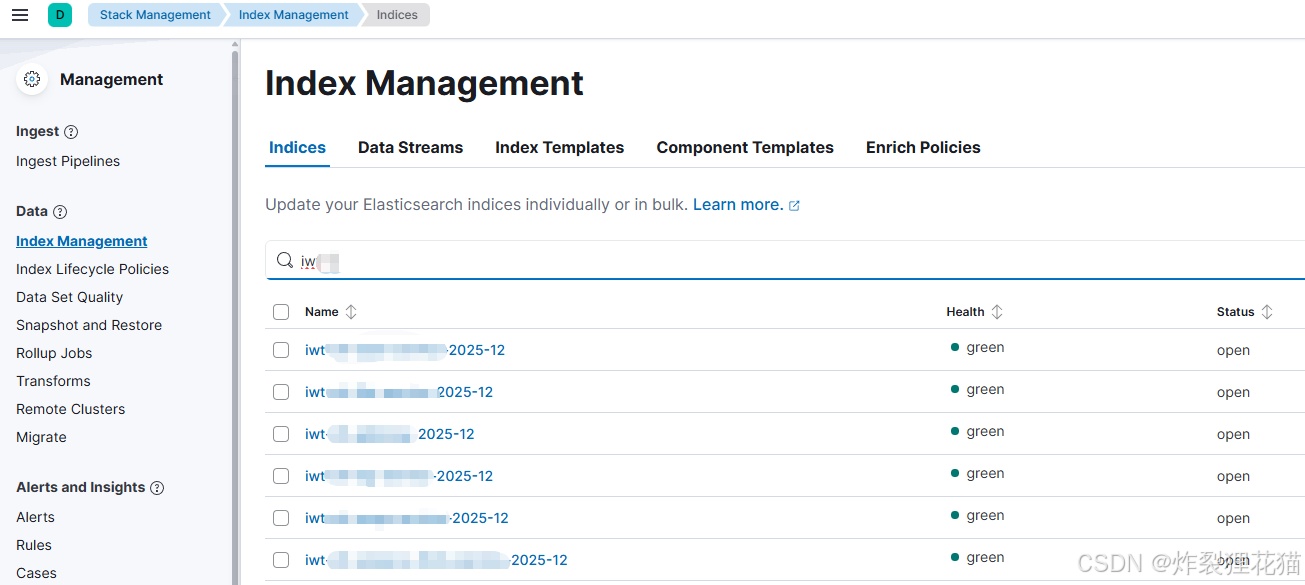

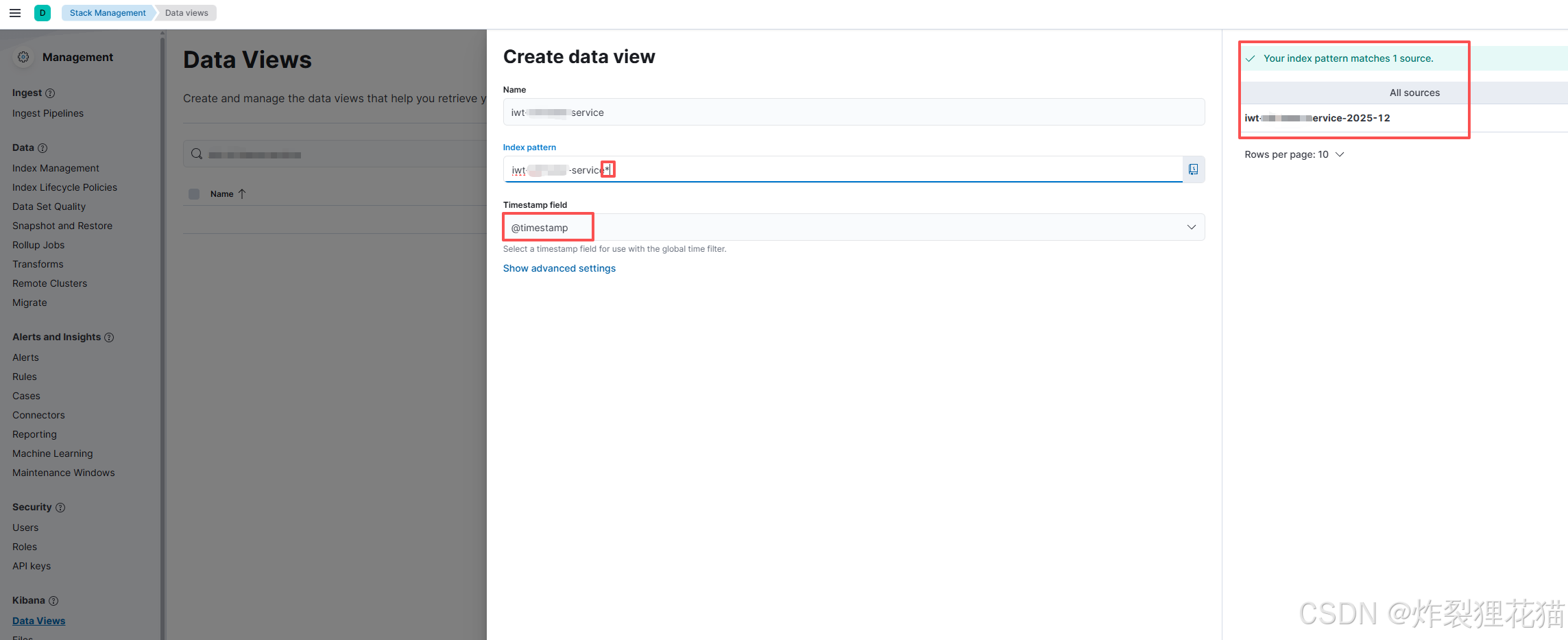

Data Views

Go to Management >> Stack Management >> Index Management to see the indices that hold each service's logs:

Go to Management >> Stack Management >> Data Views and create data views that regular users can use to search the logs:

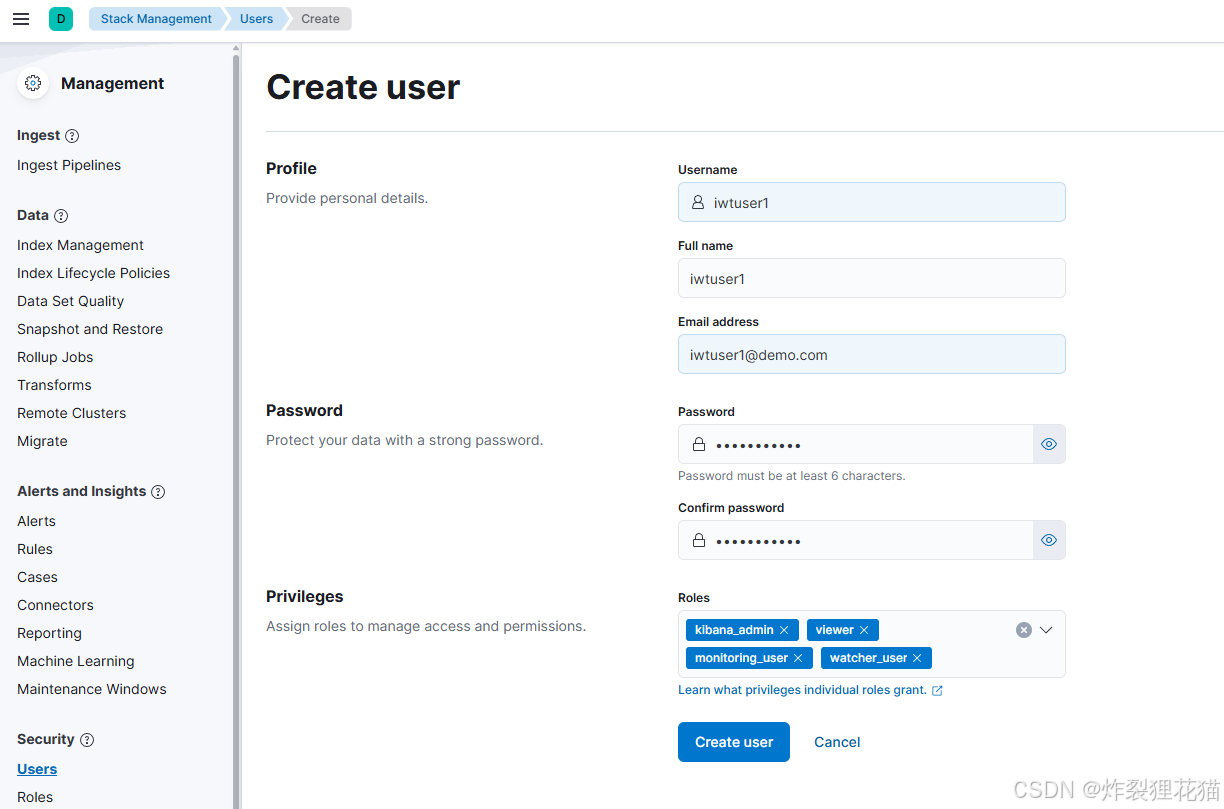
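The index names shown under Index Management come from the Logstash output setting `index => "iwt-%{[logtype]}-%{+YYYY-MM}"`. Because `logtype` already starts with `iwt-`, the indices are named `iwt-iwt-demo-site-YYYY-MM` and so on, so a data view pattern such as `iwt-iwt-demo-site-*` covers every month of one service. Also, `%{+YYYY-MM}` formats `@timestamp` in UTC, while the backend `date` filter reads timestamps as Asia/Shanghai time, so a backend event logged shortly after midnight on the 1st lands in the previous month's index. The Python sketch below reproduces this naming for illustration; it assumes a fixed UTC+8 offset as a stand-in for Asia/Shanghai (which currently has no daylight saving time) and uses `fnmatch` as a rough approximation of Kibana's `*` wildcard matching.

```python
from datetime import datetime, timedelta, timezone
from fnmatch import fnmatchcase

SHANGHAI = timezone(timedelta(hours=8))  # assumption: fixed stand-in for Asia/Shanghai


def backend_timestamp(raw: str) -> datetime:
    """Parse 'yyyy:MM:dd HH:mm:ss.SSS' as Shanghai local time, like the backend date filter."""
    return datetime.strptime(raw, "%Y:%m:%d %H:%M:%S.%f").replace(tzinfo=SHANGHAI)


def frontend_timestamp(raw: str) -> datetime:
    """Parse 'dd/MMM/yyyy:HH:mm:ss Z'; the offset comes from the line (%b assumes the C locale)."""
    return datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z")


def index_name(logtype: str, ts: datetime) -> str:
    """Mirror index => "iwt-%{[logtype]}-%{+YYYY-MM}", with the month taken in UTC."""
    return f"iwt-{logtype}-{ts.astimezone(timezone.utc):%Y-%m}"


def matches_data_view(index: str, pattern: str) -> bool:
    """Rough approximation of a data view index pattern that uses '*' wildcards."""
    return fnmatchcase(index, pattern)
```

For instance, a backend line stamped `2025:12:01 05:00:00.000` corresponds to 2025-11-30 21:00 UTC and therefore goes into `iwt-iwt-demo-service-2025-11`.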

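Go to Management >> Stack Management >> Security >> Users and create accounts for the people who will use the platform (in production, create Roles and grant fine-grained permissions through them):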

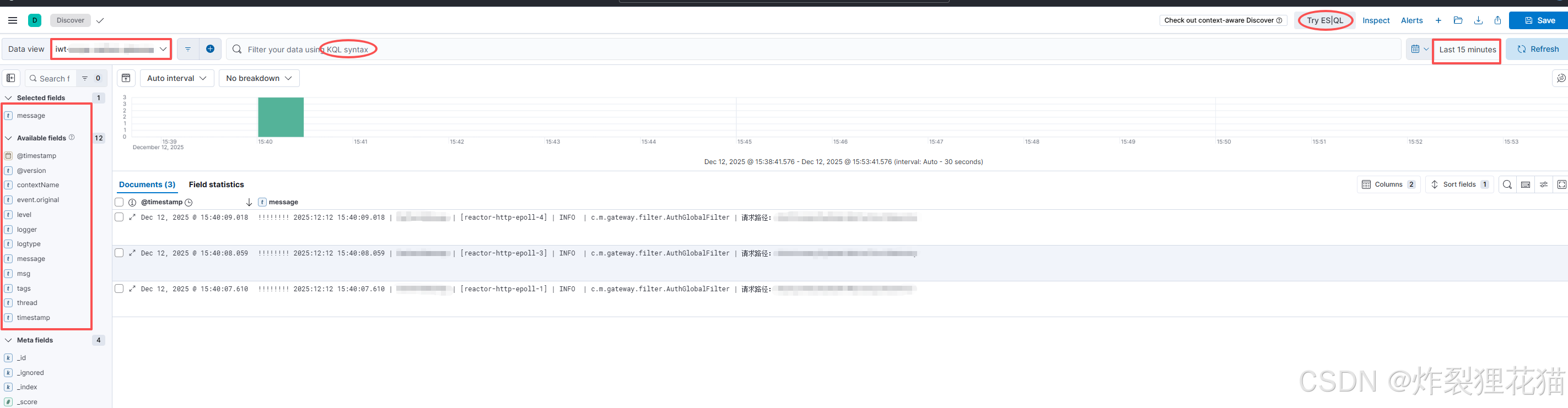
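Roles and users can also be created through the Elasticsearch security REST API (`PUT /_security/role/<name>` and `PUT /_security/user/<username>`), which is convenient for scripting. The sketch below only builds the request specifications (method, path, JSON body) and renders them as curl commands; the role name `iwt-log-reader`, the user `dev-reader`, its password and the `iwt-*` index pattern are hypothetical examples. A role like this grants read access to indices only; Kibana feature privileges (such as access to Discover) still have to be granted separately, for example in Kibana's role management UI.

```python
import json
from typing import List, Tuple

Request = Tuple[str, str, dict]  # (HTTP method, path, JSON body)


def read_only_role(role: str, index_patterns: List[str]) -> Request:
    """Role that may search and inspect the given indices and nothing else."""
    body = {
        "cluster": [],
        "indices": [
            {"names": index_patterns, "privileges": ["read", "view_index_metadata"]}
        ],
    }
    return ("PUT", f"/_security/role/{role}", body)


def user_with_roles(username: str, password: str, roles: List[str], full_name: str = "") -> Request:
    """Native-realm user that is assigned the given roles."""
    body = {"password": password, "roles": roles, "full_name": full_name}
    return ("PUT", f"/_security/user/{username}", body)


def as_curl(req: Request, base_url: str = "http://iwt-ctx-elk-es:9200") -> str:
    """Render a request as a curl command; curl prompts for the elastic password."""
    method, path, body = req
    return (f"curl -u elastic -X {method} '{base_url}{path}' "
            f"-H 'Content-Type: application/json' -d '{json.dumps(body)}'")
```

Printing `as_curl(read_only_role("iwt-log-reader", ["iwt-*"]))` followed by `as_curl(user_with_roles("dev-reader", "<password>", ["iwt-log-reader"]))` gives two commands that can be run from any host that reaches Elasticsearch.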

After logging in to Kibana, the new user can open Analytics >> Discover, select the relevant data view and search the logs:

Stack Monitoring

Go to Management >> Stack Monitoring to check the status of each component:

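That covers the basic use of ELK. Today's enterprise observability platforms often bring in additional components to unify logs, metrics, distributed tracing, security and storage, for example Elastic APM Agent, Fluent Bit and Loki. These will be covered in detail in a later comparison of observability platform solutions.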