Title

Introduction

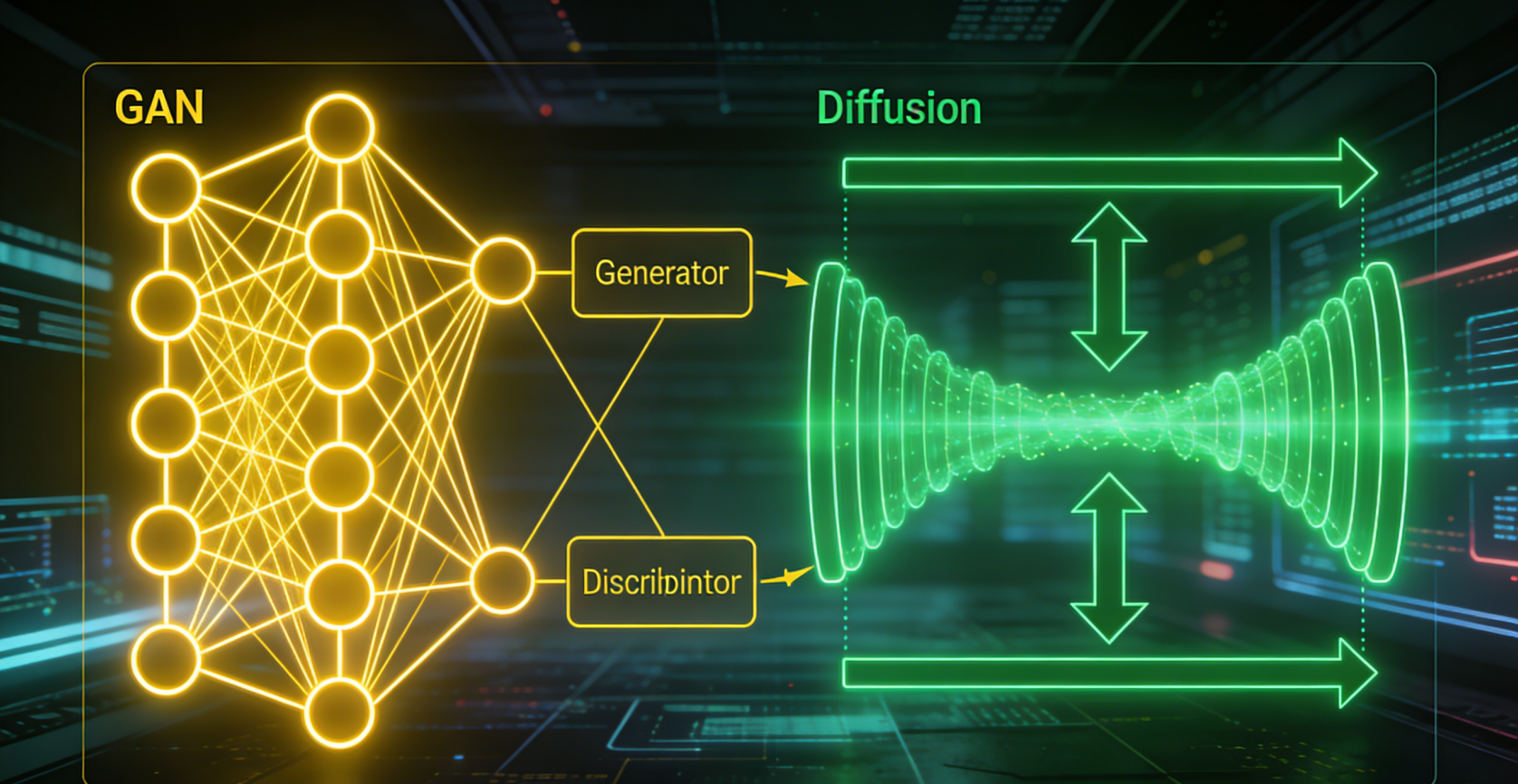

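Generative AI is one of the most striking AI technologies of recent years: it can create new content that did not exist before. From image generation to text writing, and from music synthesis to video generation, generative AI is changing how we think about creativity. This article takes an in-depth look at two of the most important families of generative models: Generative Adversarial Networks (GANs) and diffusion models.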

Generative AI Fundamentals

What Is a Generative Model?

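The goal of a generative model is to learn the underlying distribution of the data, so that it can produce new samples that resemble the training data without simply copying it. Discriminative models (used for classification or regression) learn to predict a label y from an input x, that is p(y | x); generative models instead model the data distribution p(x) itself and sample from it. The example below defines a known two-component Gaussian mixture that serves as the "real" data distribution for the rest of the article.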

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.stats import multivariate_normal


class SimpleGenerator:
    """A simple 2D data generator used as the "real" data distribution."""

    def __init__(self):
        # Define two different 2D Gaussian distributions
        self.distribution1 = multivariate_normal([2, 2], [[1, 0.5], [0.5, 1]])
        self.distribution2 = multivariate_normal([-2, -2], [[1, -0.5], [-0.5, 1]])

    def generate_samples(self, n_samples, mode='mixed'):
        """Draw samples from distribution 1, distribution 2, or an equal mixture of both."""
        if mode == 'mixed':
            # Assign each sample to one of the two components with probability 0.5
            mask = np.random.rand(n_samples) > 0.5
            samples1 = self.distribution1.rvs(np.sum(mask))
            samples2 = self.distribution2.rvs(n_samples - np.sum(mask))
            samples = np.zeros((n_samples, 2))
            samples[mask] = samples1
            samples[~mask] = samples2
        elif mode == 'dist1':
            samples = self.distribution1.rvs(n_samples)
        else:
            samples = self.distribution2.rvs(n_samples)
        return samples

    def visualize_distributions(self):
        """Plot the density contours of both distributions together with samples."""
        x = np.linspace(-5, 5, 100)
        y = np.linspace(-5, 5, 100)
        X, Y = np.meshgrid(x, y)
        pos = np.dstack((X, Y))

        plt.figure(figsize=(12, 5))

        # Distribution 1
        plt.subplot(1, 2, 1)
        Z1 = self.distribution1.pdf(pos)
        plt.contour(X, Y, Z1, levels=10, alpha=0.8)
        samples1 = self.generate_samples(500, 'dist1')
        plt.scatter(samples1[:, 0], samples1[:, 1], alpha=0.5, s=10)
        plt.title("Distribution 1")
        plt.xlabel("X")
        plt.ylabel("Y")
        plt.grid(True, alpha=0.3)

        # Distribution 2
        plt.subplot(1, 2, 2)
        Z2 = self.distribution2.pdf(pos)
        plt.contour(X, Y, Z2, levels=10, alpha=0.8)
        samples2 = self.generate_samples(500, 'dist2')
        plt.scatter(samples2[:, 0], samples2[:, 1], alpha=0.5, s=10)
        plt.title("Distribution 2")
        plt.xlabel("X")
        plt.ylabel("Y")
        plt.grid(True, alpha=0.3)

        plt.tight_layout()
        plt.show()


# Create the generator and visualize its distributions
generator = SimpleGenerator()
generator.visualize_distributions()
```

Generative Adversarial Networks (GAN)

How GANs Work

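Generative adversarial networks were introduced by Ian Goodfellow et al. in 2014. A GAN consists of two neural networks that compete with each other:

- Generator: tries to produce realistic data
- Discriminator: tries to tell real data apart from generated data

The two networks are trained as a two-player game: as the discriminator gets better at spotting fakes, the generator is pushed to produce more convincing samples, and vice versa.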
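In equation form, the game from the 2014 paper is a minimax problem over a value function V(D, G). For a fixed generator the best discriminator has a closed form, and substituting it back shows that the generator is then minimizing the Jensen-Shannon (JS) divergence between the real and generated distributions. In practice, generators are usually trained with the "non-saturating" loss on the last line rather than by minimizing log(1 - D(G(z))); this is what `GAN.train_step` in this section does when it scores generated samples against `real_labels` with `nn.BCELoss`.

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}, \qquad V(D^*, G) = 2\,\mathrm{JS}\left(p_{\text{data}} \,\|\, p_g\right) - \log 4

\mathcal{L}_G = -\,\mathbb{E}_{z \sim p_z}\left[\log D(G(z))\right]
```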

```python
import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
from torch.utils.data import TensorDataset, DataLoader


class Generator(nn.Module):
    """GAN generator: maps a latent vector z to a 2D point."""

    def __init__(self, input_dim=100, output_dim=2, hidden_dim=128):
        super(Generator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.LeakyReLU(0.2, inplace=True),
            nn.BatchNorm1d(hidden_dim),
            nn.Linear(hidden_dim, hidden_dim * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.BatchNorm1d(hidden_dim * 2),
            # Linear output layer: the toy data lies roughly in [-5, 5], so a Tanh
            # (range [-1, 1]) would make most of the real data unreachable
            nn.Linear(hidden_dim * 2, output_dim),
        )

    def forward(self, z):
        return self.model(z)


class Discriminator(nn.Module):
    """GAN discriminator: outputs the probability that a 2D point is real."""

    def __init__(self, input_dim=2, hidden_dim=128):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Dropout(0.3),
            nn.Linear(hidden_dim, hidden_dim * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Dropout(0.3),
            nn.Linear(hidden_dim * 2, 1),
            nn.Sigmoid()  # output a probability
        )

    def forward(self, x):
        return self.model(x)


class GAN:
    """A complete (minimal) GAN."""

    def __init__(self, input_dim=100, output_dim=2, lr=0.0002):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.latent_dim = input_dim  # dimension of the noise vector z

        # Build the generator and the discriminator
        self.generator = Generator(input_dim, output_dim).to(self.device)
        self.discriminator = Discriminator(output_dim).to(self.device)

        # Optimizers
        self.g_optimizer = optim.Adam(self.generator.parameters(), lr=lr, betas=(0.5, 0.999))
        self.d_optimizer = optim.Adam(self.discriminator.parameters(), lr=lr, betas=(0.5, 0.999))

        # Loss function
        self.criterion = nn.BCELoss()

        # Training history
        self.g_losses = []
        self.d_losses = []

    def train_step(self, real_data):
        """One training step: update the discriminator once, then the generator once."""
        real_data = real_data.to(self.device)
        batch_size = real_data.size(0)

        # Labels for real and fake samples
        real_labels = torch.ones(batch_size, 1, device=self.device)
        fake_labels = torch.zeros(batch_size, 1, device=self.device)

        # ---- Train the discriminator ----
        self.d_optimizer.zero_grad()
        real_outputs = self.discriminator(real_data)
        d_loss_real = self.criterion(real_outputs, real_labels)

        # Generate fake data; detach() keeps the D loss from backpropagating into G
        z = torch.randn(batch_size, self.latent_dim, device=self.device)
        fake_data = self.generator(z)
        fake_outputs = self.discriminator(fake_data.detach())
        d_loss_fake = self.criterion(fake_outputs, fake_labels)

        # Total discriminator loss
        d_loss = d_loss_real + d_loss_fake
        d_loss.backward()
        self.d_optimizer.step()

        # ---- Train the generator ----
        self.g_optimizer.zero_grad()
        # The generator wants the discriminator to classify its outputs as real
        z = torch.randn(batch_size, self.latent_dim, device=self.device)
        fake_outputs = self.discriminator(self.generator(z))
        g_loss = self.criterion(fake_outputs, real_labels)
        g_loss.backward()
        self.g_optimizer.step()

        return g_loss.item(), d_loss.item()

    def train(self, real_data_loader, epochs=100):
        """Train the GAN."""
        print("Training GAN...")
        # All real samples, for plotting (a TensorDataset keeps its tensors in .tensors)
        all_real = real_data_loader.dataset.tensors[0].numpy()
        for epoch in range(epochs):
            epoch_g_loss = 0.0
            epoch_d_loss = 0.0
            # A TensorDataset yields a one-element tuple per batch, so unpack it
            for (real_batch,) in real_data_loader:
                g_loss, d_loss = self.train_step(real_batch)
                epoch_g_loss += g_loss
                epoch_d_loss += d_loss

            # Record the average loss per epoch
            self.g_losses.append(epoch_g_loss / len(real_data_loader))
            self.d_losses.append(epoch_d_loss / len(real_data_loader))

            if epoch % 10 == 0:
                print(f"Epoch {epoch}: G_Loss = {self.g_losses[-1]:.4f}, D_Loss = {self.d_losses[-1]:.4f}")

            # Periodically visualize the generated samples
            if epoch % 20 == 0:
                self.visualize_samples(epoch, all_real)

    def generate_samples(self, n_samples=1000):
        """Generate samples with the generator in eval mode."""
        self.generator.eval()
        with torch.no_grad():
            z = torch.randn(n_samples, self.latent_dim, device=self.device)
            samples = self.generator(z).cpu().numpy()
        self.generator.train()
        return samples

    def visualize_samples(self, epoch, real_data):
        """Plot generated samples next to the real data."""
        plt.figure(figsize=(12, 5))

        # Real data
        plt.subplot(1, 2, 1)
        plt.scatter(real_data[:, 0], real_data[:, 1], alpha=0.5, s=10, label='Real Data')
        plt.title("Real Data Distribution")
        plt.xlabel("X")
        plt.ylabel("Y")
        plt.grid(True, alpha=0.3)
        plt.legend()

        # Generated data
        plt.subplot(1, 2, 2)
        generated_samples = self.generate_samples(1000)
        plt.scatter(generated_samples[:, 0], generated_samples[:, 1],
                    alpha=0.5, s=10, c='red', label='Generated Data')
        plt.title(f"Generated Data (Epoch {epoch})")
        plt.xlabel("X")
        plt.ylabel("Y")
        plt.grid(True, alpha=0.3)
        plt.legend()

        plt.tight_layout()
        plt.show()


# Prepare the data (uses the SimpleGenerator instance `generator` created above)
real_data = generator.generate_samples(2000, 'mixed')
real_data = torch.FloatTensor(real_data)

# Create the data loader
dataset = TensorDataset(real_data)
data_loader = DataLoader(dataset, batch_size=64, shuffle=True)

# Create and train the GAN
gan = GAN(input_dim=100, output_dim=2)
gan.train(data_loader, epochs=200)

# Plot the training losses
plt.figure(figsize=(10, 5))
plt.plot(gan.g_losses, label='Generator Loss')
plt.plot(gan.d_losses, label='Discriminator Loss')
plt.title("GAN Training Loss")
plt.xlabel("Epoch")
plt.ylabel("Loss")
plt.legend()
plt.grid(True, alpha=0.3)
plt.show()

# Final visualization
gan.visualize_samples("Final", real_data.numpy())
```

DCGAN: Deep Convolutional GAN

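DCGAN brings convolutional neural networks into the GAN framework for image generation: the generator upsamples a noise vector with transposed convolutions, and the discriminator downsamples images with strided convolutions.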
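The shape comments in the DCGAN code can be checked with the output-size formulas given in the PyTorch `Conv2d` and `ConvTranspose2d` documentation. This sketch assumes `dilation=1` and `output_padding=0` (the defaults used by the layers below); the helper names are made up for illustration:

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of ConvTranspose2d with dilation=1 and output_padding=0."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size, kernel, stride, padding):
    """Output size of Conv2d with dilation=1."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of each ConvTranspose2d layer in DCGANGenerator
gen_layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
size = 1  # the latent vector enters as nz x 1 x 1
gen_sizes = [size]
for k, s, p in gen_layers:
    size = conv_transpose_out(size, k, s, p)
    gen_sizes.append(size)
print("generator spatial sizes:", gen_sizes)  # [1, 4, 8, 16, 32, 64]

# (kernel, stride, padding) of each Conv2d layer in DCGANDiscriminator
disc_layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]
size = 64
disc_sizes = [size]
for k, s, p in disc_layers:
    size = conv_out(size, k, s, p)
    disc_sizes.append(size)
print("discriminator spatial sizes:", disc_sizes)  # [64, 32, 16, 8, 4, 1]
```

With kernel 4, stride 2 and padding 1, each layer exactly doubles (or halves) the spatial size, which is why that combination is repeated throughout both networks.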

```python
import torchvision  # needed for torchvision.utils.make_grid below


class DCGANGenerator(nn.Module):
    """DCGAN generator (for image generation)."""

    def __init__(self, nz=100, ngf=64, nc=3):
        super(DCGANGenerator, self).__init__()
        self.main = nn.Sequential(
            # Input: nz x 1 x 1
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 8),
            nn.ReLU(True),
            # State: (ngf*8) x 4 x 4
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            # State: (ngf*4) x 8 x 8
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            # State: (ngf*2) x 16 x 16
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            # State: ngf x 32 x 32
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh()
            # Output: nc x 64 x 64, values in [-1, 1]
        )

    def forward(self, input):
        return self.main(input)


class DCGANDiscriminator(nn.Module):
    """DCGAN discriminator."""

    def __init__(self, nc=3, ndf=64):
        super(DCGANDiscriminator, self).__init__()
        self.main = nn.Sequential(
            # Input: nc x 64 x 64
            nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # State: ndf x 32 x 32
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # State: (ndf*2) x 16 x 16
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # State: (ndf*4) x 8 x 8
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            # State: (ndf*8) x 4 x 4
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        # Flatten the 1 x 1 x 1 output to one probability per image
        return self.main(input).view(-1, 1).squeeze(1)


def create_sample_images(generator, n_samples=16, nz=100):
    """Generate example images with a DCGAN generator."""
    device = next(generator.parameters()).device
    generator.eval()
    with torch.no_grad():
        # Random noise of shape (n, nz, 1, 1), on the same device as the model
        noise = torch.randn(n_samples, nz, 1, 1, device=device)
        generated_images = generator(noise)
        # Map the images from [-1, 1] to [0, 1]
        generated_images = (generated_images + 1) / 2
    generator.train()
    return generated_images


class DCGAN:
    """Training scaffold for a DCGAN."""

    def __init__(self, nz=100, ngf=64, ndf=64, nc=3):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

        # Build the networks
        self.netG = DCGANGenerator(nz, ngf, nc).to(self.device)
        self.netD = DCGANDiscriminator(nc, ndf).to(self.device)

        # Initialize the weights; apply() visits every submodule recursively
        self.netG.apply(self.weights_init)
        self.netD.apply(self.weights_init)

        # Loss function and optimizers
        self.criterion = nn.BCELoss()
        self.optimizerG = optim.Adam(self.netG.parameters(), lr=0.0002, betas=(0.5, 0.999))
        self.optimizerD = optim.Adam(self.netD.parameters(), lr=0.0002, betas=(0.5, 0.999))

        # Fixed noise, reused to visualize progress across epochs
        self.fixed_noise = torch.randn(64, nz, 1, 1, device=self.device)

    def weights_init(self, m):
        """Custom weight initialization: N(0, 0.02) for conv layers, N(1, 0.02) for BatchNorm."""
        classname = m.__class__.__name__
        if classname.find('Conv') != -1:
            nn.init.normal_(m.weight.data, 0.0, 0.02)
        elif classname.find('BatchNorm') != -1:
            nn.init.normal_(m.weight.data, 1.0, 0.02)
            nn.init.constant_(m.bias.data, 0)

    def visualize_generated(self, epoch):
        """Visualize the images generated from the fixed noise."""
        with torch.no_grad():
            fake = self.netG(self.fixed_noise).detach().cpu()
        # Map the images from [-1, 1] to [0, 1]
        fake = (fake + 1) / 2

        plt.figure(figsize=(8, 8))
        plt.axis("off")
        plt.title(f"Generated Images - Epoch {epoch}")
        # Show the 64 images as an 8x8 grid (make_grid puts 8 images per row by default)
        grid = torchvision.utils.make_grid(fake, padding=2)
        plt.imshow(np.transpose(grid.numpy(), (1, 2, 0)))
        plt.show()


# Note: actually training a DCGAN requires a real image dataset;
# only the framework code is shown here
print("DCGAN framework code is ready; a real image dataset is needed for training")
```

Improved GAN Variants

WGAN (Wasserstein GAN)

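WGAN improves training stability by replacing the Jensen-Shannon (JS) divergence that the original GAN objective implicitly minimizes with the Wasserstein distance (also called the earth mover's distance).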
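Why this helps can be seen in a classic toy case, assumed here for illustration: the real data is a point mass at 0 and the generated data is a point mass at θ. While the two supports do not overlap, the JS divergence stays at log 2 however close θ gets, so it gives the generator no useful signal, whereas the Wasserstein-1 distance equals |θ| and shrinks smoothly. A small sketch using SciPy's `scipy.stats.wasserstein_distance` (the `kl` and `js_divergence` helpers are written out by hand):

```python
import numpy as np
from scipy.stats import wasserstein_distance


def kl(p, q):
    """KL divergence between discrete distributions (terms with p = 0 contribute 0)."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))


def js_divergence(p, q):
    """Jensen-Shannon divergence with the natural logarithm."""
    m = 0.5 * (p + q)
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


results = []
for theta in [0.5, 1.0, 2.0, 4.0]:
    # Real data: point mass at 0; generated data: point mass at theta.
    # Probability vectors over the shared support {0, theta}:
    p_real = np.array([1.0, 0.0])
    p_fake = np.array([0.0, 1.0])
    js = js_divergence(p_real, p_fake)
    w1 = wasserstein_distance([0.0], [theta])
    results.append((theta, js, w1))
    # JS stays at log 2 (about 0.6931) while W1 equals theta
    print(f"theta={theta}: JS={js:.4f}, W1={w1:.4f}")
```

WGAN estimates this distance through its dual form, the supremum of E[f(real)] - E[f(fake)] over 1-Lipschitz functions f. That is why the critic below outputs an unbounded score instead of a probability, and why its weights are clipped: clipping is a crude way to keep f (roughly) Lipschitz.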

```python
class WGANDiscriminator(nn.Module):
    """WGAN discriminator (called the critic)."""

    def __init__(self, input_dim=2, hidden_dim=128):
        super(WGANDiscriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(hidden_dim, hidden_dim * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(hidden_dim * 2, hidden_dim * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(hidden_dim * 2, 1)
            # No Sigmoid: the critic outputs an unbounded real-valued score
        )

    def forward(self, x):
        return self.model(x)


class WGAN:
    """Wasserstein GAN with weight clipping."""

    def __init__(self, input_dim=2, latent_dim=100, hidden_dim=128, lr=0.00005, n_critic=5):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.latent_dim = latent_dim
        self.n_critic = n_critic  # critic updates per generator update (5 is typical)

        # Networks
        self.generator = Generator(latent_dim, input_dim, hidden_dim).to(self.device)
        self.critic = WGANDiscriminator(input_dim, hidden_dim).to(self.device)

        # Optimizers (RMSprop)
        self.g_optimizer = optim.RMSprop(self.generator.parameters(), lr=lr)
        self.c_optimizer = optim.RMSprop(self.critic.parameters(), lr=lr)

        # Weight-clipping range
        self.clip_value = 0.01

        # Training history
        self.g_losses = []
        self.c_losses = []

    def train_step(self, real_data):
        """One WGAN step: n_critic critic updates, then one generator update."""
        real_data = real_data.to(self.device)
        batch_size = real_data.size(0)

        # Train the critic n_critic times (this simplified version reuses the same real batch)
        for _ in range(self.n_critic):
            self.c_optimizer.zero_grad()
            real_output = self.critic(real_data)
            z = torch.randn(batch_size, self.latent_dim, device=self.device)
            fake_output = self.critic(self.generator(z).detach())

            # Wasserstein loss: the critic maximizes E[f(real)] - E[f(fake)]
            c_loss = -torch.mean(real_output) + torch.mean(fake_output)
            c_loss.backward()
            self.c_optimizer.step()

            # Weight clipping
            for p in self.critic.parameters():
                p.data.clamp_(-self.clip_value, self.clip_value)

        # Train the generator
        self.g_optimizer.zero_grad()
        z = torch.randn(batch_size, self.latent_dim, device=self.device)
        fake_output = self.critic(self.generator(z))
        # The generator wants to maximize the critic's score on its samples
        g_loss = -torch.mean(fake_output)
        g_loss.backward()
        self.g_optimizer.step()

        return g_loss.item(), c_loss.item()

    def train(self, data_loader, epochs=100):
        """Train the WGAN."""
        print("Training WGAN...")
        for epoch in range(epochs):
            epoch_g_loss = 0.0
            epoch_c_loss = 0.0
            # A TensorDataset yields a one-element tuple per batch, so unpack it
            for (real_batch,) in data_loader:
                g_loss, c_loss = self.train_step(real_batch)
                epoch_g_loss += g_loss
                epoch_c_loss += c_loss

            self.g_losses.append(epoch_g_loss / len(data_loader))
            self.c_losses.append(epoch_c_loss / len(data_loader))

            if epoch % 10 == 0:
                print(f"Epoch {epoch}: G_Loss = {self.g_losses[-1]:.4f}, C_Loss = {self.c_losses[-1]:.4f}")

    def generate_samples(self, n_samples=1000):
        """Generate samples from the trained generator."""
        self.generator.eval()
        with torch.no_grad():
            z = torch.randn(n_samples, self.latent_dim, device=self.device)
            samples = self.generator(z).cpu().numpy()
        self.generator.train()
        return samples


# Train the WGAN on the same data loader
wgan = WGAN()
wgan.train(data_loader, epochs=200)

# Visualize the results
plt.figure(figsize=(12, 5))
plt.subplot(1, 2, 1)
plt.scatter(real_data.numpy()[:, 0], real_data.numpy()[:, 1], alpha=0.5, s=10, label='Real')
plt.title("Real Data")
plt.legend()

plt.subplot(1, 2, 2)
generated_samples = wgan.generate_samples(1000)
plt.scatter(generated_samples[:, 0], generated_samples[:, 1],
            alpha=0.5, s=10, c='red', label='Generated')
plt.title("WGAN Generated Data")
plt.legend()
plt.show()
```

Diffusion Models

How Diffusion Models Work

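Diffusion models are a newer class of generative models. They gradually add noise to data over many steps (the forward process) and train a neural network to reverse that process one step at a time (the reverse process), so that new data can be generated starting from pure noise.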
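The forward process has a closed form, which is what `q_sample` below relies on: after t steps, x_t ~ N(sqrt(ᾱ_t)·x_0, (1 - ᾱ_t)·I), where ᾱ_t is the running product of (1 - β_s). A quick NumPy check with the same linear schedule compares step-by-step noising against that formula; the starting value, step index and sample count are arbitrary choices for this sketch:

```python
import numpy as np

rng = np.random.default_rng(0)

# Same linear schedule as DiffusionProcess: 1000 steps, beta from 1e-4 to 0.02
betas = np.linspace(1e-4, 0.02, 1000)
alphas_cumprod = np.cumprod(1.0 - betas)

# Noise a fixed starting value x0 one step at a time:
# x_s = sqrt(1 - beta_s) * x_{s-1} + sqrt(beta_s) * eps
x0, t, n = 2.0, 299, 20000
x = np.full(n, x0)
for s in range(t + 1):
    x = np.sqrt(1.0 - betas[s]) * x + np.sqrt(betas[s]) * rng.standard_normal(n)

# Closed form: q(x_t | x_0) = N(sqrt(abar_t) * x0, 1 - abar_t)
expected_mean = np.sqrt(alphas_cumprod[t]) * x0
expected_var = 1.0 - alphas_cumprod[t]
print(f"step by step: mean={x.mean():.3f}, var={x.var():.3f}")
print(f"closed form:  mean={expected_mean:.3f}, var={expected_var:.3f}")
# At the final step almost no signal is left, so x_T is close to pure noise
print(f"abar at the last step: {alphas_cumprod[-1]:.2e}")
```

Because any x_t can be sampled directly from x_0, training can pick a random timestep per example instead of simulating the whole chain.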

```python
import math


class DiffusionProcess:
    """Forward (noising) and reverse (denoising) processes of a DDPM-style diffusion model."""

    def __init__(self, num_timesteps=1000, beta_start=1e-4, beta_end=0.02):
        self.num_timesteps = num_timesteps

        # Linear beta (noise) schedule
        self.betas = torch.linspace(beta_start, beta_end, num_timesteps)
        self.alphas = 1.0 - self.betas
        self.alphas_cumprod = torch.cumprod(self.alphas, dim=0)
        # abar_{t-1}, with the value before the first step defined as 1
        self.alphas_cumprod_prev = F.pad(self.alphas_cumprod[:-1], (1, 0), value=1.0)

        # Precomputed constants
        self.sqrt_alphas_cumprod = torch.sqrt(self.alphas_cumprod)
        self.sqrt_one_minus_alphas_cumprod = torch.sqrt(1.0 - self.alphas_cumprod)
        self.sqrt_recip_alphas = torch.sqrt(1.0 / self.alphas)

        # Variance of the posterior q(x_{t-1} | x_t, x_0)
        self.posterior_variance = (
            self.betas * (1.0 - self.alphas_cumprod_prev) / (1.0 - self.alphas_cumprod)
        )

    def q_sample(self, x_start, t, noise=None):
        """Forward process: sample x_t ~ q(x_t | x_0) in closed form."""
        if noise is None:
            noise = torch.randn_like(x_start)
        sqrt_alphas_cumprod_t = self._extract(self.sqrt_alphas_cumprod, t, x_start.shape)
        sqrt_one_minus_alphas_cumprod_t = self._extract(
            self.sqrt_one_minus_alphas_cumprod, t, x_start.shape
        )
        return sqrt_alphas_cumprod_t * x_start + sqrt_one_minus_alphas_cumprod_t * noise

    def p_sample(self, model, x, t):
        """Reverse process: sample x_{t-1} ~ p(x_{t-1} | x_t). Assumes all entries of t are equal."""
        # Predict the noise contained in x_t
        predicted_noise = model(x, t)

        # Mean of p(x_{t-1} | x_t)
        sqrt_recip_alphas_t = self._extract(self.sqrt_recip_alphas, t, x.shape)
        betas_t = self._extract(self.betas, t, x.shape)
        sqrt_one_minus_alphas_cumprod_t = self._extract(
            self.sqrt_one_minus_alphas_cumprod, t, x.shape
        )
        posterior_variance_t = self._extract(self.posterior_variance, t, x.shape)
        model_mean = sqrt_recip_alphas_t * (
            x - betas_t * predicted_noise / sqrt_one_minus_alphas_cumprod_t
        )

        if t[0] == 0:
            # Final step: return the mean without adding noise
            return model_mean
        else:
            noise = torch.randn_like(x)
            return model_mean + torch.sqrt(posterior_variance_t) * noise

    def _extract(self, a, t, x_shape):
        """Gather a[t] for each batch element and reshape it to broadcast against x_shape."""
        batch_size = t.shape[0]
        out = a.to(t.device).gather(0, t)
        return out.reshape(batch_size, *((1,) * (len(x_shape) - 1)))


class SimpleUNet(nn.Module):
    """Small MLP noise predictor; named "UNet" here, but it has no U-Net skip connections."""

    def __init__(self, input_dim=2, hidden_dim=128):
        super(SimpleUNet, self).__init__()
        # Time embedding
        self.time_embed = nn.Sequential(
            nn.Linear(128, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, hidden_dim)
        )
        # Encoder
        self.encoder = nn.Sequential(
            nn.Linear(input_dim + hidden_dim, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, hidden_dim * 2),
            nn.SiLU(),
            nn.Linear(hidden_dim * 2, hidden_dim * 2)
        )
        # Decoder
        self.decoder = nn.Sequential(
            nn.Linear(hidden_dim * 2, hidden_dim * 2),
            nn.SiLU(),
            nn.Linear(hidden_dim * 2, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, input_dim)
        )

    def timestep_embedding(self, timesteps, dim, max_period=10000):
        """Sinusoidal timestep embedding."""
        half = dim // 2
        freqs = torch.exp(
            -math.log(max_period) * torch.arange(start=0, end=half, dtype=torch.float32) / half
        ).to(timesteps.device)
        args = timesteps[:, None].float() * freqs[None]
        embedding = torch.cat([torch.cos(args), torch.sin(args)], dim=-1)
        if dim % 2:
            embedding = torch.cat([embedding, torch.zeros_like(embedding[:, :1])], dim=-1)
        return embedding

    def forward(self, x, t):
        # Time embedding
        t_emb = self.time_embed(self.timestep_embedding(t, 128))
        # Concatenate the input with the time embedding
        x = torch.cat([x, t_emb], dim=-1)
        # Encode, then decode to a noise prediction
        h = self.encoder(x)
        return self.decoder(h)


class DiffusionModel:
    """A complete (minimal) diffusion model."""

    def __init__(self, input_dim=2, hidden_dim=128, num_timesteps=1000):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.input_dim = input_dim
        # Diffusion process
        self.diffusion = DiffusionProcess(num_timesteps)
        # Noise-prediction network
        self.model = SimpleUNet(input_dim, hidden_dim).to(self.device)
        # Optimizer
        self.optimizer = optim.Adam(self.model.parameters(), lr=0.001)
        # Training history
        self.losses = []

    def train_step(self, x0):
        """One training step: noise x0 to a random timestep and predict that noise."""
        # Sample one timestep per example
        batch_size = x0.shape[0]
        t = torch.randint(0, self.diffusion.num_timesteps, (batch_size,), device=self.device)

        # Add noise
        noise = torch.randn_like(x0)
        xt = self.diffusion.q_sample(x0, t, noise)

        # Predict the noise and compute the loss
        predicted_noise = self.model(xt, t)
        loss = F.mse_loss(predicted_noise, noise)

        # Backpropagation
        self.optimizer.zero_grad()
        loss.backward()
        self.optimizer.step()
        return loss.item()

    def train(self, data_loader, epochs=100):
        """Train the diffusion model."""
        print("Training diffusion model...")
        for epoch in range(epochs):
            epoch_loss = 0.0
            for batch in data_loader:
                batch = batch[0].to(self.device)
                epoch_loss += self.train_step(batch)
            avg_loss = epoch_loss / len(data_loader)
            self.losses.append(avg_loss)
            if epoch % 10 == 0:
                print(f"Epoch {epoch}: Loss = {avg_loss:.4f}")

    def sample(self, n_samples=1000):
        """Sample from the diffusion model by running the full reverse process."""
        self.model.eval()
        with torch.no_grad():
            # Start from pure noise
            x = torch.randn(n_samples, self.input_dim, device=self.device)
            # Reverse diffusion
            for t in reversed(range(self.diffusion.num_timesteps)):
                t_batch = torch.full((n_samples,), t, device=self.device, dtype=torch.long)
                x = self.diffusion.p_sample(self.model, x, t_batch)
        self.model.train()
        return x.cpu().numpy()

    def visualize_diffusion_process(self, data):
        """Visualize the forward diffusion of a single data point."""
        data = data[:1].to(self.device)  # use only one sample
        # A few timesteps to show
        timesteps = [0, 100, 300, 500, 700, 999]

        plt.figure(figsize=(15, 3))
        for i, t in enumerate(timesteps):
            # gather() needs integer indices, hence dtype=torch.long
            t_tensor = torch.full((1,), t, device=self.device, dtype=torch.long)
            xt = self.diffusion.q_sample(data, t_tensor)

            plt.subplot(1, len(timesteps), i + 1)
            if t == 0:
                plt.scatter(data[0, 0].cpu(), data[0, 1].cpu(), s=100, c='blue')
                plt.title("Original (t=0)")
            else:
                plt.scatter(xt[0, 0].cpu(), xt[0, 1].cpu(), s=100, c='red')
                plt.title(f"t={t}")
            plt.xlim(-4, 4)
            plt.ylim(-4, 4)
            plt.grid(True, alpha=0.3)

        plt.suptitle("Forward Diffusion Process")
        plt.show()


# Train the diffusion model
diffusion_model = DiffusionModel(input_dim=2, hidden_dim=128)
diffusion_model.train(data_loader, epochs=200)

# Visualize the forward diffusion process
sample_data = real_data[:1]
diffusion_model.visualize_diffusion_process(sample_data)

# Generate new samples
generated_samples = diffusion_model.sample(1000)

# Visualize the generated samples
plt.figure(figsize=(12, 5))
plt.subplot(1, 2, 1)
plt.scatter(real_data.numpy()[:, 0], real_data.numpy()[:, 1],
            alpha=0.5, s=10, label='Real Data')
plt.title("Real Data Distribution")
plt.legend()

plt.subplot(1, 2, 2)
plt.scatter(generated_samples[:, 0], generated_samples[:, 1],
            alpha=0.5, s=10, c='red', label='Generated')
plt.title("Diffusion Model Generated Data")
plt.legend()
plt.show()

# Plot the training loss
plt.figure(figsize=(10, 5))
plt.plot(diffusion_model.losses)
plt.title("Diffusion Model Training Loss")
plt.xlabel("Epoch")
plt.ylabel("MSE Loss")
plt.grid(True, alpha=0.3)
plt.show()
```

Advanced Diffusion Model Techniques

Conditional Diffusion Models

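A conditional diffusion model generates data according to a given condition, such as a class label: the condition is passed to the noise-prediction network together with the noisy input and the timestep.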
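`ConditionalDiffusionModel.train_step` takes a batch of data together with a matching batch of condition vectors. One simple way to build such pairs for the 2D toy problem, assumed here for illustration, is a one-hot label saying which Gaussian each point came from; that fits the default `condition_dim=2`. The means and covariances below mirror the earlier `SimpleGenerator`:

```python
import numpy as np

rng = np.random.default_rng(0)

# Two labeled Gaussian components, mirroring the earlier toy data (assumed setup)
means = np.array([[2.0, 2.0], [-2.0, -2.0]])
covs = np.array([[[1.0, 0.5], [0.5, 1.0]],
                 [[1.0, -0.5], [-0.5, 1.0]]])

n = 2000
labels = rng.integers(0, 2, size=n)  # which component each point comes from
data = np.stack([rng.multivariate_normal(means[k], covs[k]) for k in labels])

# One-hot condition vectors: [1, 0] for component 0, [0, 1] for component 1
conditions = np.eye(2)[labels]

print(data.shape, conditions.shape)  # (2000, 2) (2000, 2)
for k in range(2):
    print(f"component {k}: mean of data = {data[labels == k].mean(axis=0).round(2)}")
```

After converting both arrays to float tensors, they could be wrapped in `torch.utils.data.TensorDataset(data, conditions)` so that each batch yields an `(x0, condition)` pair; at sampling time, passing `[1, 0]` or `[0, 1]` rows selects which cluster to generate.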

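```python
class ConditionalDiffusionModel(DiffusionModel):
    """Conditional diffusion model: the noise predictor also receives a condition vector."""

    def __init__(self, input_dim=2, condition_dim=2, hidden_dim=128, num_timesteps=1000):
        super().__init__(input_dim, hidden_dim, num_timesteps)
        self.input_dim = input_dim
        self.condition_dim = condition_dim
        # Replace the unconditional network with a conditional one
        self.model = ConditionalUNet(input_dim, condition_dim, hidden_dim).to(self.device)
        # Re-create the optimizer: the parent's optimizer tracks the old model's parameters
        self.optimizer = optim.Adam(self.model.parameters(), lr=0.001)

    def train_step(self, x0, condition):
        """Conditional training step."""
        batch_size = x0.shape[0]
        t = torch.randint(0, self.diffusion.num_timesteps, (batch_size,), device=self.device)

        # Add noise
        noise = torch.randn_like(x0)
        xt = self.diffusion.q_sample(x0, t, noise)

        # Conditional noise prediction
        predicted_noise = self.model(xt, t, condition)
        loss = F.mse_loss(predicted_noise, noise)

        self.optimizer.zero_grad()
        loss.backward()
        self.optimizer.step()
        return loss.item()

    def train(self, data_loader, epochs=100):
        """Train on batches of (x0, condition), e.g. from TensorDataset(data, conditions)."""
        print("Training conditional diffusion model...")
        for epoch in range(epochs):
            epoch_loss = 0.0
            for x0, condition in data_loader:
                epoch_loss += self.train_step(x0.to(self.device), condition.to(self.device))
            avg_loss = epoch_loss / len(data_loader)
            self.losses.append(avg_loss)
            if epoch % 10 == 0:
                print(f"Epoch {epoch}: Loss = {avg_loss:.4f}")

    def sample(self, condition, n_samples=1):
        """Conditional sampling; condition has shape (n_samples, condition_dim)."""
        self.model.eval()
        condition = condition.to(self.device)

        # Bind the condition so that p_sample can call model(x, t) as usual
        def model_fn(x_t, t):
            return self.model(x_t, t, condition)

        with torch.no_grad():
            x = torch.randn(n_samples, self.input_dim, device=self.device)
            for t in reversed(range(self.diffusion.num_timesteps)):
                t_batch = torch.full((n_samples,), t, device=self.device, dtype=torch.long)
                x = self.diffusion.p_sample(model_fn, x, t_batch)
        self.model.train()
        return x.cpu().numpy()


class ConditionalUNet(nn.Module):
    """Conditional noise predictor (an MLP, like SimpleUNet)."""

    def __init__(self, input_dim, condition_dim, hidden_dim):
        super(ConditionalUNet, self).__init__()
        # Time embedding
        self.time_embed = nn.Sequential(
            nn.Linear(128, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, hidden_dim)
        )
        # Condition embedding
        self.condition_embed = nn.Sequential(
            nn.Linear(condition_dim, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, hidden_dim)
        )
        # Main network
        self.network = nn.Sequential(
            nn.Linear(input_dim + hidden_dim * 2, hidden_dim * 2),
            nn.SiLU(),
            nn.Linear(hidden_dim * 2, hidden_dim * 2),
            nn.SiLU(),
            nn.Linear(hidden_dim * 2, hidden_dim),
            nn.SiLU(),
            nn.Linear(hidden_dim, input_dim)
        )

    def timestep_embedding(self, timesteps, dim, max_period=10000):
        """Sinusoidal timestep embedding."""
        half = dim // 2
        freqs = torch.exp(
            -math.log(max_period) * torch.arange(start=0, end=half, dtype=torch.float32) / half
        ).to(timesteps.device)
        args = timesteps[:, None].float() * freqs[None]
        embedding = torch.cat([torch.cos(args), torch.sin(args)], dim=-1)
        if dim % 2:
            embedding = torch.cat([embedding, torch.zeros_like(embedding[:, :1])], dim=-1)
        return embedding

    def forward(self, x, t, condition):
        # Embeddings
        t_emb = self.time_embed(self.timestep_embedding(t, 128))
        c_emb = self.condition_embed(condition)
        # Concatenate input, time and condition information, then predict the noise
        x = torch.cat([x, t_emb, c_emb], dim=-1)
        return self.network(x)


# Conditional generation example
print("\nConditional diffusion model example:")
print("Generates a specific kind of data given a condition (such as a class label)")
```

Hands-On Project: Image Denoising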

In this project, a diffusion model is applied to image denoising.
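Using a trained diffusion model as a denoiser requires deciding which timestep a given noisy image corresponds to. One way to reason about it, sketched here under the assumption of additive Gaussian noise: an image corrupted as y = x_0 + σ·ε, once multiplied by sqrt(ᾱ_t), has the same form as `q_sample`'s output sqrt(ᾱ_t)·x_0 + sqrt(1 - ᾱ_t)·ε when σ = sqrt((1 - ᾱ_t) / ᾱ_t). The helper `matching_timestep` is hypothetical:

```python
import numpy as np

# Same linear schedule as DiffusionProcess (1000 steps, beta from 1e-4 to 0.02)
betas = np.linspace(1e-4, 0.02, 1000)
alphas_cumprod = np.cumprod(1.0 - betas)

# Noise level relative to the signal at each step:
# x_t / sqrt(abar_t) = x_0 + sqrt((1 - abar_t) / abar_t) * eps
relative_sigma = np.sqrt((1.0 - alphas_cumprod) / alphas_cumprod)


def matching_timestep(sigma):
    """Index of the timestep whose relative noise level is closest to sigma."""
    return int(np.argmin(np.abs(relative_sigma - sigma)))


sigma = 0.3  # the noise_level used in this section's example
t_start = matching_timestep(sigma)
print(t_start, round(float(relative_sigma[t_start]), 4))
```

Under this reasoning, the noisy image would be scaled by sqrt(ᾱ_t) at `t_start` and the reverse process run from `t_start` down to 0. The `denoise` method below instead starts at `num_steps - 1` without rescaling, which is a rougher approximation.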

```python
class ImageDenoisingDiffusion:
    """Diffusion model for image denoising."""

    def __init__(self, image_size=28, channels=1):
        self.image_size = image_size
        self.channels = channels
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        # Diffusion process
        self.diffusion = DiffusionProcess(num_timesteps=1000)
        # UNet architecture for images
        self.model = ImageUNet(channels, channels).to(self.device)
        # Optimizer
        self.optimizer = optim.Adam(self.model.parameters(), lr=0.0001)

    def add_noise(self, images, noise_level=0.1):
        """Add Gaussian noise to images (assumed to be scaled to [-1, 1])."""
        noise = torch.randn_like(images) * noise_level
        noisy_images = images + noise
        return noisy_images.clamp(-1, 1), noise

    def train(self, clean_images, epochs=50):
        """Train the noise predictor; clean_images is an iterable of (B, C, H, W) tensors."""
        print("Training the image denoising model...")
        for epoch in range(epochs):
            epoch_loss = 0.0
            for batch in clean_images:
                batch = batch.to(self.device)
                epoch_loss += self.train_step(batch)
            avg_loss = epoch_loss / len(clean_images)
            print(f"Epoch {epoch}: Loss = {avg_loss:.4f}")

    def train_step(self, clean_images):
        """Standard DDPM step: noise clean images to a random timestep t and predict that noise."""
        batch_size = clean_images.shape[0]
        t = torch.randint(0, self.diffusion.num_timesteps, (batch_size,), device=self.device)
        # Forward diffusion to step t with known noise
        noise = torch.randn_like(clean_images)
        xt = self.diffusion.q_sample(clean_images, t, noise)
        # Predict the noise and regress it onto the true noise
        predicted_noise = self.model(xt, t)
        loss = F.mse_loss(predicted_noise, noise)

        self.optimizer.zero_grad()
        loss.backward()
        self.optimizer.step()
        return loss.item()

    def denoise(self, noisy_image, num_steps=50):
        """Denoise a single (C, H, W) image.

        The noisy input is treated as x_t at t = num_steps - 1 and the last num_steps
        reverse steps are run; this is an approximation that works best when
        num_steps roughly matches the input's noise level.
        """
        self.model.eval()
        with torch.no_grad():
            x = noisy_image.unsqueeze(0).to(self.device)
            # Denoise step by step from high noise to low noise
            for t in reversed(range(num_steps)):
                t_batch = torch.full((1,), t, device=self.device, dtype=torch.long)
                x = self.diffusion.p_sample(self.model, x, t_batch)
        self.model.train()
        return x.squeeze(0).cpu()


class ImageUNet(nn.Module):
    """Simplified UNet for images."""

    def __init__(self, in_channels, out_channels):
        super(ImageUNet, self).__init__()
        # Encoder
        self.enc1 = self.conv_block(in_channels, 64)
        self.enc2 = self.conv_block(64, 128)
        self.enc3 = self.conv_block(128, 256)
        # Bottleneck
        self.middle = self.conv_block(256, 512)
        # Decoder (input channels = upsampled features + skip connection)
        self.dec3 = self.conv_block(256 + 512, 256)
        self.dec2 = self.conv_block(128 + 256, 128)
        self.dec1 = self.conv_block(64 + 128, 64)
        # Output layer
        self.out = nn.Conv2d(64, out_channels, kernel_size=1)
        # Time embedding, projected to the bottleneck's 512 channels
        self.time_embed = nn.Sequential(
            nn.Linear(128, 512),
            nn.SiLU(),
            nn.Linear(512, 512)
        )

    def conv_block(self, in_channels, out_channels):
        return nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
            nn.GroupNorm(8, out_channels),
            nn.SiLU(),
            nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1),
            nn.GroupNorm(8, out_channels),
            nn.SiLU()
        )

    def timestep_embedding(self, timesteps, dim, max_period=10000):
        """Sinusoidal timestep embedding."""
        half = dim // 2
        freqs = torch.exp(
            -math.log(max_period) * torch.arange(start=0, end=half, dtype=torch.float32) / half
        ).to(timesteps.device)
        args = timesteps[:, None].float() * freqs[None]
        embedding = torch.cat([torch.cos(args), torch.sin(args)], dim=-1)
        if dim % 2:
            embedding = torch.cat([embedding, torch.zeros_like(embedding[:, :1])], dim=-1)
        return embedding

    def forward(self, x, t):
        # Time embedding, shape (B, 512)
        t_emb = self.time_embed(self.timestep_embedding(t, 128))

        # Encoder
        e1 = self.enc1(x)
        e2 = self.enc2(F.avg_pool2d(e1, 2))
        e3 = self.enc3(F.avg_pool2d(e2, 2))

        # Bottleneck; inject the timestep as a per-channel bias
        middle = self.middle(F.avg_pool2d(e3, 2))
        middle = middle + t_emb[:, :, None, None]

        # Decoder; upsample to each skip connection's exact size so odd sizes (28 -> 7 -> 3) still align
        d3 = self.dec3(torch.cat([F.interpolate(middle, size=e3.shape[-2:]), e3], dim=1))
        d2 = self.dec2(torch.cat([F.interpolate(d3, size=e2.shape[-2:]), e2], dim=1))
        d1 = self.dec1(torch.cat([F.interpolate(d2, size=e1.shape[-2:]), e1], dim=1))

        # Output: predicted noise
        return self.out(d1)


# Simulated image-denoising example
print("\nImage denoising diffusion model example:")
print("The model learns to remove noise from images and recover clean images")


def create_test_images(n_images=10, size=28):
    """Create test images, each containing one filled circle (values 0 or 1)."""
    images = []
    yy, xx = np.mgrid[0:size, 0:size]
    for _ in range(n_images):
        # Random center and radius for a simple geometric shape
        x = np.random.randint(5, size - 5)
        y = np.random.randint(5, size - 5)
        r = np.random.randint(3, 8)
        # Draw the filled circle with NumPy (no OpenCV dependency)
        circle = ((xx - x) ** 2 + (yy - y) ** 2 <= r ** 2).astype(np.float32)
        images.append(torch.from_numpy(circle).unsqueeze(0))  # shape (1, size, size)
    return images


# Generate test images
test_images = create_test_images(5, 64)

# Add noise
noisy_images = []
noise_level = 0.3
for img in test_images:
    noise = torch.randn_like(img) * noise_level
    noisy = img + noise
    noisy_images.append(noisy.clamp(-1, 1))

# Show clean and noisy images side by side
plt.figure(figsize=(15, 5))
for i in range(3):
    plt.subplot(1, 3, i + 1)
    plt.imshow(test_images[i][0], cmap='gray')
    plt.title(f"Clean Image {i + 1}")
    plt.axis('off')
plt.show()

plt.figure(figsize=(15, 5))
for i in range(3):
    plt.subplot(1, 3, i + 1)
    plt.imshow(noisy_images[i][0], cmap='gray')
    plt.title(f"Noisy Image {i + 1}")
    plt.axis('off')
plt.show()

print("A diffusion model can learn to recover clean images from noisy ones")
```

Comparing GANs and Diffusion Models

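```python
def compare_models():
    """Compare qualitative characteristics of GANs and diffusion models."""
    comparison = {
        "Feature": [
            "Training stability",
            "Generation quality",
            "Sampling speed",
            "Training difficulty",
            "Theoretical guarantees",
            "Parallel training",
            "Conditional generation",
            "Mode-collapse risk",
        ],
        "GAN": ["Medium", "High", "Fast", "High", "Weak", "Yes", "Supported", "Present"],
        "Diffusion": ["High", "Very high", "Slow", "Medium", "Strong", "Yes", "Supported", "Very low"],
    }

    # Print the comparison table
    print("\nGAN vs. diffusion models:")
    print("-" * 70)
    for feature, gan_value, diff_value in zip(
        comparison["Feature"], comparison["GAN"], comparison["Diffusion"]
    ):
        print(f"{feature:<24} | GAN: {gan_value:<10} | Diffusion: {diff_value:<10}")

    # Generation-quality trend; the scores are illustrative, not measured benchmark results
    plt.figure(figsize=(10, 5))
    years = [2014, 2015, 2017, 2019, 2021, 2023]
    gan_quality = [20, 40, 60, 75, 85, 90]
    diffusion_quality = [0, 0, 10, 50, 85, 95]
    plt.plot(years, gan_quality, 'b-o', label='GAN', linewidth=2)
    plt.plot(years, diffusion_quality, 'r-s', label='Diffusion Models', linewidth=2)
    plt.title("Generation Quality Trend (illustrative)")
    plt.xlabel("Year")
    plt.ylabel("Quality score (illustrative)")
    plt.grid(True, alpha=0.3)
    plt.legend()
    plt.show()


compare_models()
```

Applications of Generative AI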

1. Text-to-Image Generation

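```python
class TextToImageGenerator:
    """Conceptual outline of a text-to-image generator (prints the steps only)."""

    def __init__(self):
        print("Text-to-image generator framework")
        print("- Needs a CLIP model to encode the text")
        print("- Needs a diffusion model to generate the image")
        print("- Needs a large-scale dataset of paired images and text for training")

    def generate_from_text(self, text_prompt):
        """Generate an image from text (conceptual)."""
        print(f"\nGenerating image for text prompt: '{text_prompt}'")
        print("Step 1: Encode the text with CLIP")
        print("Step 2: Generate the image with a diffusion model")
        print("Step 3: Adjust the result to match the text semantics")
        print("[Image generation complete]")


# Example
t2i = TextToImageGenerator()
t2i.generate_from_text("A cute kitten sitting in a garden")
```

2. Image Editing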

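```python
class ImageEditor:
    """Conceptual image editor based on generative models (prints the steps only)."""

    def __init__(self):
        self.edit_modes = ["inpainting", "outpainting", "style_transfer", "image_to_image"]

    def edit_image(self, image, mode, instruction):
        """Edit an image (conceptual)."""
        print(f"\nEdit mode: {mode}")
        print(f"Edit instruction: {instruction}")
        print("Processing...")
        if mode == "inpainting":
            print("Inpainting: use a generative model to fill in missing regions")
        elif mode == "outpainting":
            print("Outpainting: generate content beyond the original image borders")
        elif mode == "style_transfer":
            print("Style transfer: change the artistic style of the image")
        elif mode == "image_to_image":
            print("Image-to-image translation: change the image content according to the instruction")
        print("Editing complete!")


# Example
editor = ImageEditor()
editor.edit_image("image.jpg", "inpainting", "Repair the damaged regions of the image")
```

Summary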

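This article explored the two main families of generative AI models, GANs and diffusion models, covering:

- Generative model fundamentals: the core concepts behind generative AI
- GANs: from the basic GAN to improved variants such as DCGAN and WGAN
- Diffusion models: a newer and powerful generative technique
- Practical applications: image generation, denoising, editing and related tasks
- Comparison: the strengths and weaknesses of GANs versus diffusion models

Generative AI is evolving quickly. From the early, simple GANs to today's powerful diffusion models, we can now generate high-quality, diverse content, and as the technology matures, generative AI is likely to play an important role in many more fields.

Future Directions

- More efficient sampling: speeding up generation with diffusion models
- Multimodal generation: unified generation of text, images, audio and video
- Controllable generation: finer control over the attributes of generated content
- 3D content generation: generating 3D models and scenes directly
- Real-time generation: generating and editing content in real time

Practical Tips

- Start with simple datasets and models
- Understand the underlying math, especially probability theory and the diffusion process
- Fine-tune pretrained models where possible
- Budget for compute: diffusion models in particular are expensive (the sampler in this article, for example, runs the network once per timestep, 1000 times to produce one batch of samples)
- Pay attention to ethical issues and avoid generating harmful content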