- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊

这是一个简单的英法翻译程序,它使用深度学习技术(RNN)将英语句子自动翻译成法语。就像Google翻译一样,但这是自己训练的小型模型。程序从头到尾展示了如何:

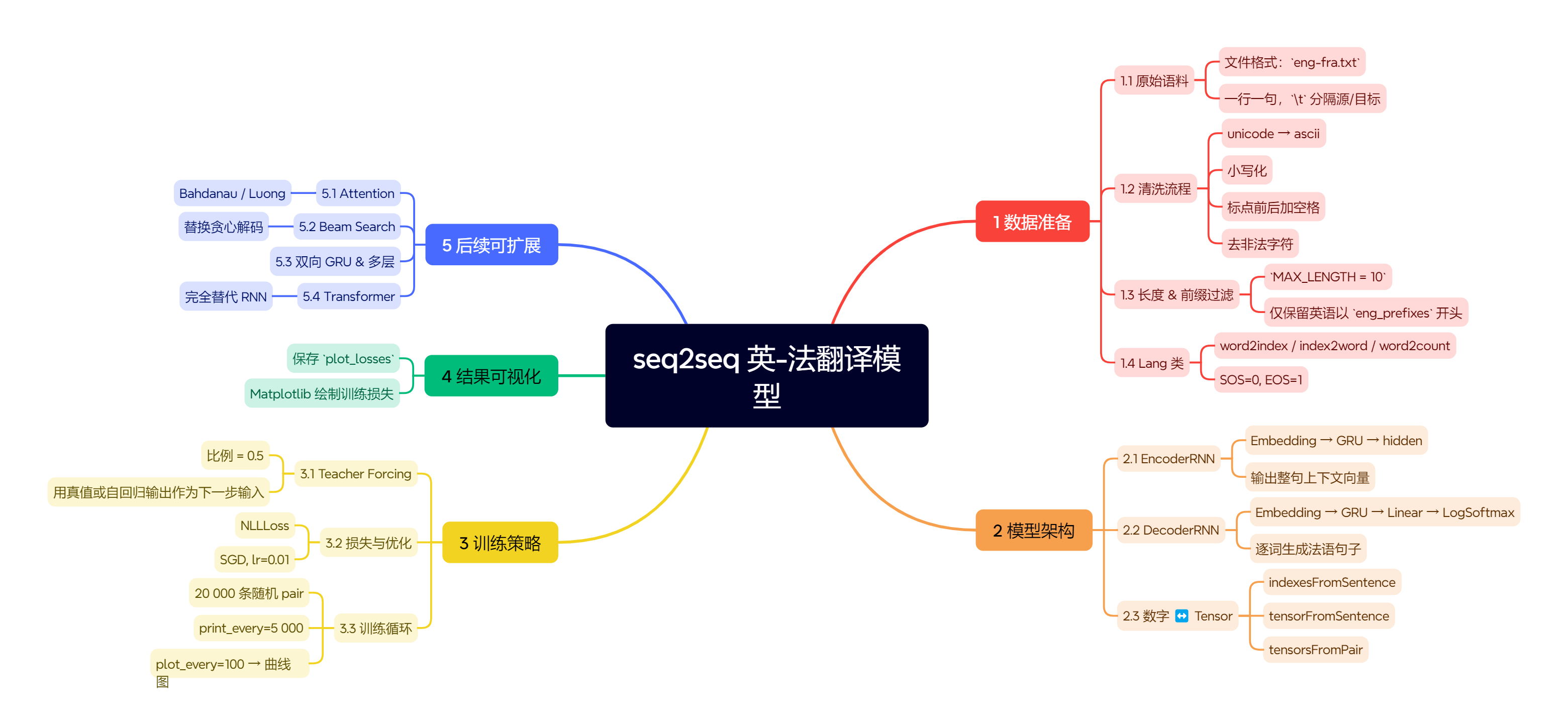

思维导图如下:

bash

# =====================================================

# 0. 基础环境配置(关键!避免编码/显示问题)

# =====================================================

# 确保Python3兼容Unicode处理(防止中文/特殊字符报错)

from __future__ import unicode_literals, print_function, division

# 文件操作模块(用于读取语料库)

from io import open

# 字符处理工具(处理特殊字符如重音符号)

import unicodedata

# 字母数字处理(处理ASCII字符)

import string

# 正则表达式(文本清洗核心工具)

import re

# 随机数生成(数据集划分/打乱)

import random

# =====================================================

# 1. 核心深度学习框架(PyTorch核心组件)

# =====================================================

import torch # 深度学习框架

import torch.nn as nn # 神经网络模块

from torch import optim # 优化器

import torch.nn.functional as F # 功能函数(如激活函数)

# GPU加速配置(关键!避免CPU训练慢)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f"使用设备: {device}") # 输出确认:GPU/CPU

# =====================================================

# 2. 序列标记系统(NLP基础)

# =====================================================

SOS_token = 0 # 序列起始符(Start Of Sequence)

EOS_token = 1 # 序列结束符(End Of Sequence)

# 语言类(处理词表/索引映射的核心数据结构)

class Lang:

def __init__(self, name):

self.name = name # 语言名称(如'eng'/'fra')

self.word2index = {} # 词 -> 索引 (e.g. "hello"->3)

self.word2count = {} # 词 -> 出现次数 (用于过滤低频词)

self.index2word = {0: "SOS", 1: "EOS"} # 索引 -> 词 (反向映射)

self.n_words = 2 # 词表大小(初始包含SOS/EOS)

# 添加整句到词表(自动处理每个词)

def addSentence(self, sentence):

for word in sentence.split(' '): # 按空格分割单词

self.addWord(word) # 单词级处理

# 添加单个词(核心词表构建逻辑)

def addWord(self, word):

if word not in self.word2index:

self.word2index[word] = self.n_words # 新词分配新索引

self.word2count[word] = 1 # 初始计数为1

self.index2word[self.n_words] = word # 反向映射

self.n_words += 1 # 词表大小+1

else:

self.word2count[word] += 1 # 重复词计数+1

# =====================================================

# 3. 文本预处理(关键!影响模型效果)

# =====================================================

# Unicode转ASCII(处理特殊字符如éà)

def unicodeToAscii(s):

# normalize('NFD'): 分离组合字符

# category(c) != 'Mn': 过滤掉非字母符号(如重音符号)

return ''.join(

c for c in unicodedata.normalize('NFD', s)

if unicodedata.category(c) != 'Mn'

)

# 标准化文本(小写化/标点处理/非字母过滤)

def normalizeString(s):

# 1. Unicode转ASCII

s = unicodeToAscii(s.lower().strip()) # 小写+去空格

# 2. 标点处理:将标点前后加空格(如"Hello!" -> "Hello !")

s = re.sub(r"([.!?])", r" \1", s)

# 3. 过滤非字母/标点(保留a-zA-Z.!?)

s = re.sub(r"[^a-zA-Z.!?]+", r" ", s)

return s

# =====================================================

# 4. 语料库读取(核心数据准备)

# =====================================================

MAX_LENGTH = 10 # 最大句子长度(避免长序列计算开销)

# 英语常见前缀(用于过滤训练数据,提升翻译质量)

eng_prefixes = (

"i am ", "i m ",

"he is", "he s ",

"she is", "she s ",

"you are", "you re ",

"we are", "we re ",

"they are", "they re "

)

# 过滤条件(确保句子长度合理且以指定前缀开头)

def filterPair(p):

return len(p[0].split(' ')) < MAX_LENGTH and \

len(p[1].split(' ')) < MAX_LENGTH and p[1].startswith(eng_prefixes)

# 过滤整个语料库

def filterPairs(pairs):

return [pair for pair in pairs if filterPair(pair)]

# 数据准备主函数(整合所有预处理)

def prepareData(lang1, lang2, reverse=False):

print("Reading lines...") # 状态提示

# 读取文件(按行分割,UTF-8编码)

lines = open('%s-%s.txt'%(lang1,lang2), encoding='utf-8').\

read().strip().split('\n')

# 按制表符分割成双语对([源语言, 目标语言])

pairs = [[normalizeString(s) for s in l.split('\t')] for l in lines]

# 创建语言对象并反转顺序(如法语->英语)

if reverse:

pairs = [list(reversed(p)) for p in pairs] # 交换顺序

input_lang = Lang(lang2) # 输入语言 = 目标语言

output_lang = Lang(lang1) # 输出语言 = 源语言

else:

input_lang = Lang(lang1) # 输入语言 = 源语言

output_lang = Lang(lang2) # 输出语言 = 目标语言

# 返回处理后的数据

return input_lang, output_lang, pairs

# =====================================================

# 5. 数据集加载与验证(关键步骤)

# =====================================================

input_lang, output_lang, pairs = prepareData('eng', 'fra', True)

print("随机示例:", random.choice(pairs)) # 验证数据格式

# =====================================================

# 6. 编码器模型(核心组件1)

# =====================================================

class EncoderRNN(nn.Module):

def __init__(self, input_size, hidden_size):

super(EncoderRNN, self).__init__()

self.hidden_size = hidden_size # 隐藏层维度

# 词嵌入层(将词索引转为向量)

self.embedding = nn.Embedding(input_size, hidden_size)

# GRU层(核心循环单元)

self.gru = nn.GRU(hidden_size, hidden_size)

# 前向传播(核心逻辑)

def forward(self, input, hidden):

# 1. 词嵌入:[batch=1, 1] -> [1,1,hidden_size]

embedded = self.embedding(input).view(1, 1, -1)

# 2. GRU处理:输入=嵌入向量,隐藏状态=初始状态

output, hidden = self.gru(embedded, hidden)

return output, hidden

# 初始化隐藏状态(每次新序列开始)

def initHidden(self):

return torch.zeros(1, 1, self.hidden_size, device=device)

# =====================================================

# 7. 解码器模型(核心组件2)

# =====================================================

class DecoderRNN(nn.Module):

def __init__(self, hidden_size, output_size):

super(DecoderRNN, self).__init__()

self.hidden_size = hidden_size

# 词嵌入层

self.embedding = nn.Embedding(output_size, hidden_size)

# GRU层

self.gru = nn.GRU(hidden_size, hidden_size)

# 输出层(将隐藏状态映射到词汇表)

self.out = nn.Linear(hidden_size, output_size)

# Softmax(概率分布)

self.softmax = nn.LogSoftmax(dim=1)

def forward(self, input, hidden):

# 1. 词嵌入

output = self.embedding(input).view(1, 1, -1)

# 2. ReLU激活(增加非线性)

output = F.relu(output)

# 3. GRU处理

output, hidden = self.gru(output, hidden)

# 4. 输出层 + Softmax

output = self.softmax(self.out(output[0]))

return output, hidden

def initHidden(self):

return torch.zeros(1, 1, self.hidden_size, device=device)

# =====================================================

# 8. 数据预处理工具(关键转换)

# =====================================================

# 文本转索引(词 -> 索引)

def indexesFromSentence(lang, sentence):

return [lang.word2index[word] for word in sentence.split(' ')]

# 索引转张量(添加EOS并转为PyTorch张量)

def tensorFromSentence(lang, sentence):

indexes = indexesFromSentence(lang, sentence)

indexes.append(EOS_token) # 添加结束符

return torch.tensor(indexes, dtype=torch.long, device=device).view(-1, 1)

# 双语对转张量(核心数据准备函数)

def tensorsFromPair(pair):

input_tensor = tensorFromSentence(input_lang, pair[0])

target_tensor = tensorFromSentence(output_lang, pair[1])

return (input_tensor, target_tensor)

# =====================================================

# 9. 训练核心(关键算法)

# =====================================================

teacher_forcing_ratio = 0.5 # 教师强制率(训练技巧)

def train(input_tensor, target_tensor, encoder, decoder,

encoder_optimizer, decoder_optimizer, criterion, max_length=MAX_LENGTH):

# 1. 初始化编码器状态

encoder_hidden = encoder.initHidden()

# 2. 清空梯度(避免累积)

encoder_optimizer.zero_grad()

decoder_optimizer.zero_grad()

input_length = input_tensor.size(0) # 输入序列长度

target_length = target_tensor.size(0) # 目标序列长度

# 3. 初始化编码器输出(用于注意力机制)

encoder_outputs = torch.zeros(max_length, encoder.hidden_size, device=device)

loss = 0 # 累计损失

# 4. 编码器处理输入序列

for ei in range(input_length):

# 注意:每次输入一个词

encoder_output, encoder_hidden = encoder(input_tensor[ei], encoder_hidden)

encoder_outputs[ei] = encoder_output[0, 0] # 保存输出

# 5. 解码器初始化

decoder_input = torch.tensor([[SOS_token]], device=device) # SOS开始

decoder_hidden = encoder_hidden # 传递编码器状态

# 6. 教师强制策略(关键技巧)

use_teacher_forcing = True if random.random() < teacher_forcing_ratio else False

# 7. 解码器训练

if use_teacher_forcing:

# 教师强制:用真实目标词作为下一次输入(加速收敛)

for di in range(target_length):

decoder_output, decoder_hidden = decoder(decoder_input, decoder_hidden)

loss += criterion(decoder_output, target_tensor[di])

decoder_input = target_tensor[di] # 下次输入=真实目标词

else:

# 无教师强制:用模型预测词作为下一次输入(更像推理)

for di in range(target_length):

decoder_output, decoder_hidden = decoder(decoder_input, decoder_hidden)

topv, topi = decoder_output.topk(1) # 取最高概率词

decoder_input = topi.squeeze().detach() # 保留计算图但断开

loss += criterion(decoder_output, target_tensor[di])

if decoder_input.item() == EOS_token: # 到达结束符

break

# 8. 反向传播与优化

loss.backward()

encoder_optimizer.step()

decoder_optimizer.step()

return loss.item() / target_length # 返回平均损失

# =====================================================

# 10. 训练监控工具(实用功能)

# =====================================================

def asMinutes(s):

m = math.floor(s / 60)

s -= m * 60

return '%dm %ds' % (m, s)

def timeSince(since, percent):

now = time.time()

s = now - since

es = s / (percent)

rs = es - s

return '%s (- %s)' % (asMinutes(s), asMinutes(rs))

# =====================================================

# 11. 训练主循环(核心训练逻辑)

# =====================================================

def trainIters(encoder, decoder, n_iters, print_every=1000,

plot_every=100, learning_rate=0.01):

start = time.time()

plot_losses = [] # 存储绘图数据

print_loss_total = 0 # 用于打印的累计损失

plot_loss_total = 0 # 用于绘图的累计损失

# 1. 创建优化器(SGD是基础选择,Adam更常用但这里用SGD)

encoder_optimizer = optim.SGD(encoder.parameters(), lr=learning_rate)

decoder_optimizer = optim.SGD(decoder.parameters(), lr=learning_rate)

# 2. 准备训练数据(随机采样n_iters条)

training_pairs = [tensorsFromPair(random.choice(pairs)) for i in range(n_iters)]

criterion = nn.NLLLoss() # 负对数似然损失(适合分类任务)

# 3. 训练主循环

for iter in range(1, n_iters + 1):

training_pair = training_pairs[iter - 1]

input_tensor = training_pair[0]

target_tensor = training_pair[1]

# 4. 执行单次训练迭代

loss = train(input_tensor, target_tensor, encoder,

decoder, encoder_optimizer, decoder_optimizer, criterion)

print_loss_total += loss

plot_loss_total += loss

# 5. 每print_every轮打印进度

if iter % print_every == 0:

print_loss_avg = print_loss_total / print_every

print_loss_total = 0

print('%s (%d %d%%) %.4f' % (timeSince(start, iter / n_iters),

iter, iter / n_iters * 100, print_loss_avg))

# 6. 每plot_every轮保存损失用于绘图

if iter % plot_every == 0:

plot_loss_avg = plot_loss_total / plot_every

plot_losses.append(plot_loss_avg)

plot_loss_total = 0

return plot_losses # 返回损失历史

# =====================================================

# 12. 模型实例化(关键配置)

# =====================================================

hidden_size = 256 # 隐藏层维度(常用值:128/256/512)

encoder1 = EncoderRNN(input_lang.n_words, hidden_size).to(device) # 编码器

attn_decoder1 = DecoderRNN(hidden_size, output_lang.n_words).to(device) # 解码器

# =====================================================

# 13. 训练执行(启动训练)

# =====================================================

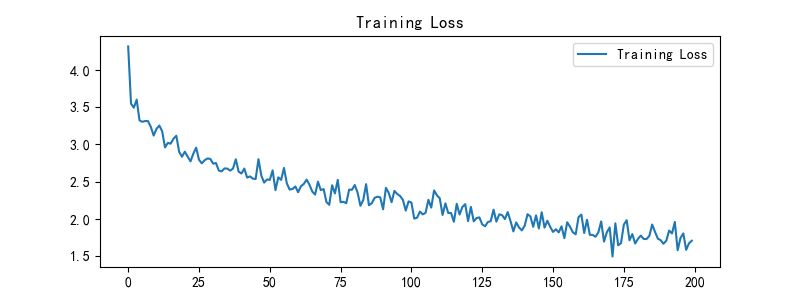

plot_losses = trainIters(encoder1, attn_decoder1, 20000, print_every=5000)

# =====================================================

# 14. 可视化(解决Jupyter图片问题的关键)

# =====================================================

# 注意:必须添加%matplotlib inline才能在Jupyter显示图片!

import os

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE" # 允许重复加载 OpenMP 库,解决 Error #15

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore") # 忽略警告

# 中文支持(解决乱码问题)

plt.rcParams['font.sans-serif'] = ['SimHei'] # 黑体

plt.rcParams['axes.unicode_minus'] = False # 正确显示负号

# 设置图像分辨率(100 DPI是标准值)

plt.rcParams['figure.dpi'] = 100

# 创建绘图

epochs_range = range(len(plot_losses)) # 横坐标:训练轮次

plt.figure(figsize=(8, 3)) # 设置图形尺寸

plt.subplot(1, 1, 1) # 1行1列第1个子图

plt.plot(epochs_range, plot_losses, label='Training Loss') # 绘制损失曲线

plt.legend(loc='upper right') # 图例位置

plt.title('Training Loss') # 标题

plt.show() # 关键!显示图像(Jupyter需配合%matplotlib inline)