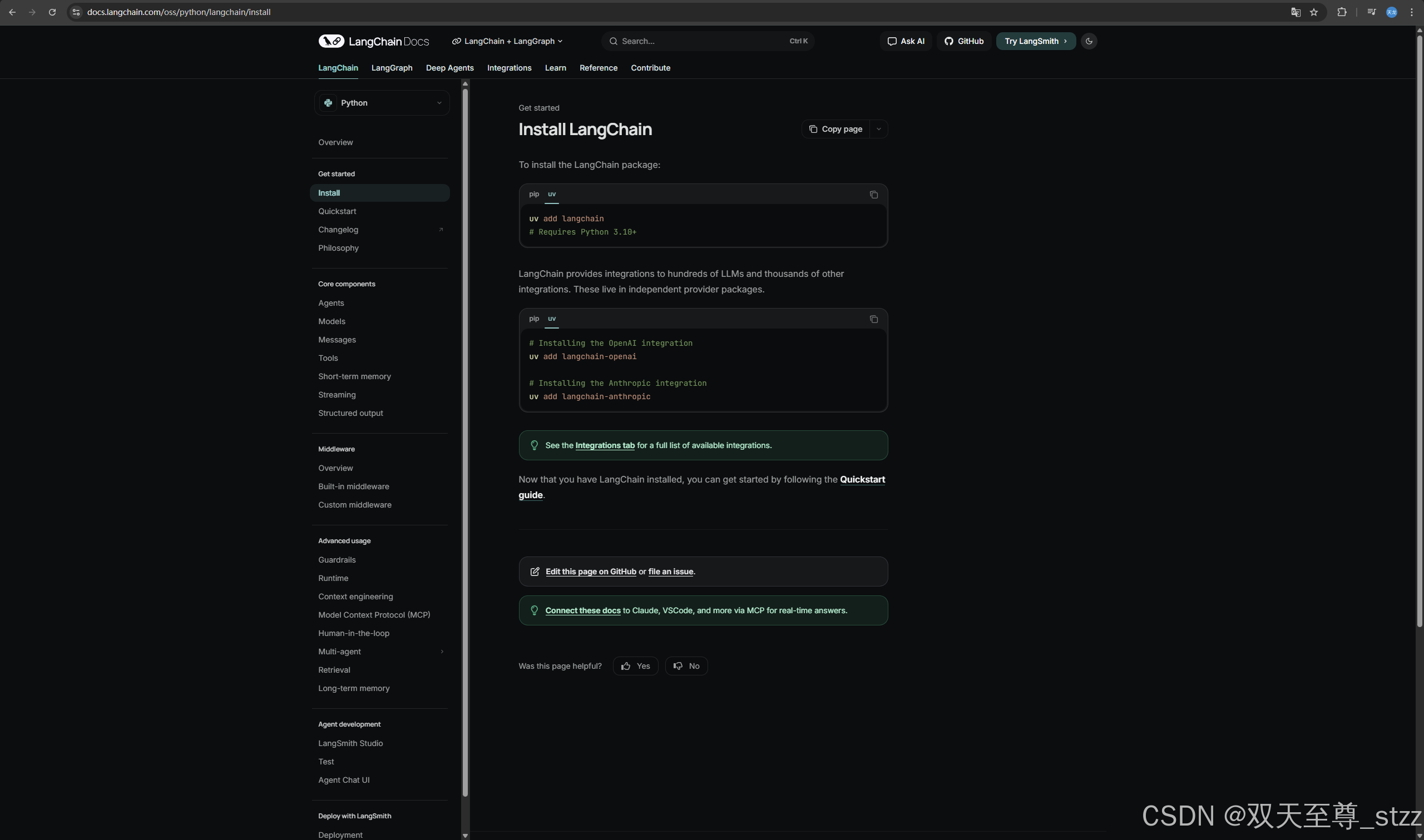

https://docs.langchain.com/oss/python/langchain/install

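```bash
# Quote the extra so shells such as zsh do not treat the brackets as a glob pattern.
uv add "langchain[openai]"
uv add dotenv
```

Integration providers: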

pyproject.toml:

```toml
[project]
name = "langchain_demo"
version = "0.1.0"
description = "langchain demo"
readme = "README.md"
requires-python = ">=3.13, < 3.14"
dependencies = [
    "dotenv>=0.9.9",
    "langchain[openai]>=1.2.0",
]
```

Chat invocation:

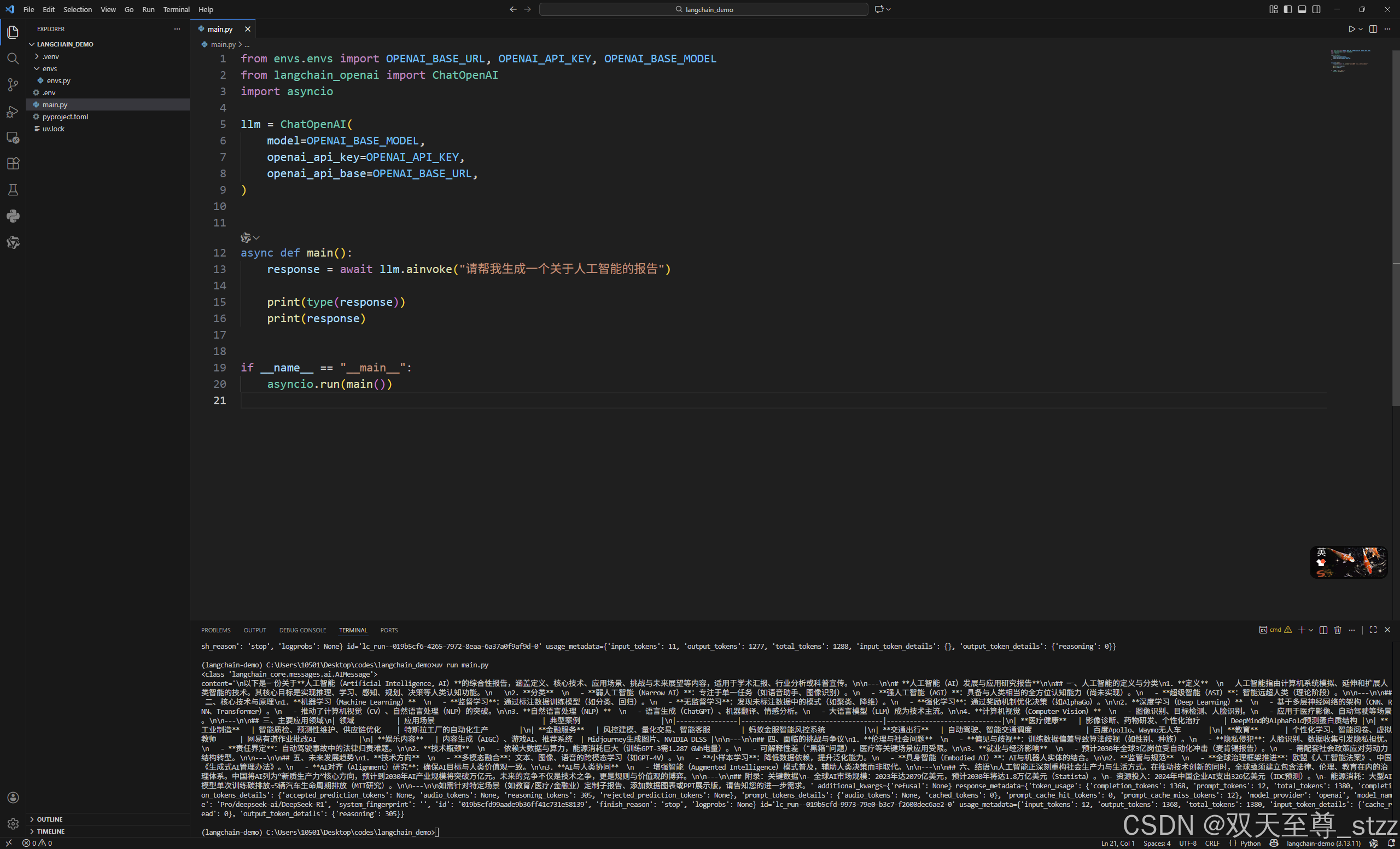

```python
# Connection settings come from the project's local envs module.
from envs.envs import OPENAI_BASE_URL, OPENAI_API_KEY, OPENAI_BASE_MODEL
from langchain_openai import ChatOpenAI
import asyncio

llm = ChatOpenAI(
    model=OPENAI_BASE_MODEL,
    openai_api_key=OPENAI_API_KEY,
    openai_api_base=OPENAI_BASE_URL,
)


async def main():
    # ainvoke waits for the complete reply and returns it as one message object.
    response = await llm.ainvoke("Please write a report about artificial intelligence")
    print(type(response))
    print(response)


if __name__ == "__main__":
    asyncio.run(main())
```

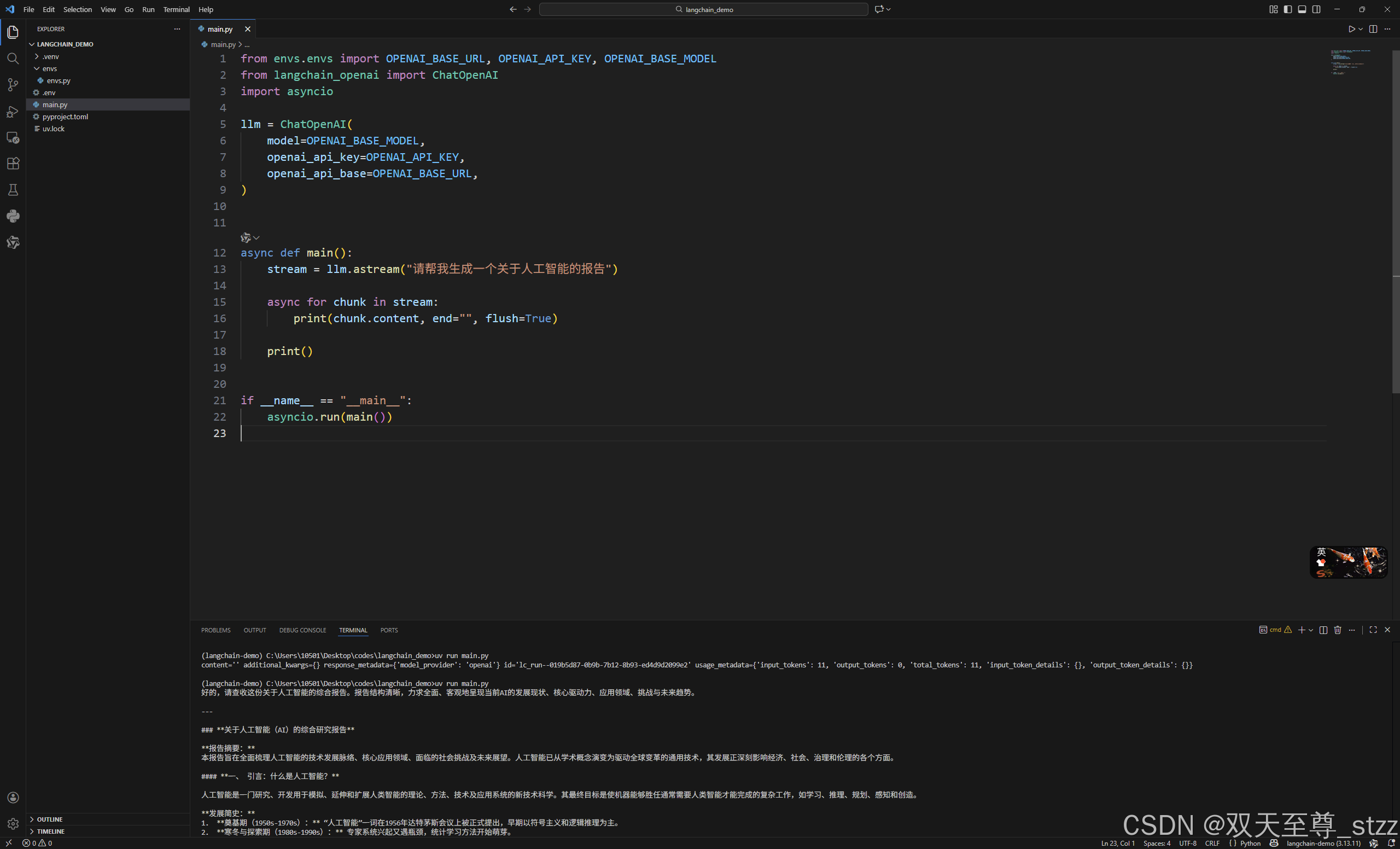
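The example above imports its settings from `envs.envs`, which these notes do not show. A minimal sketch of what such a module might look like follows; the `Settings` class, the `load_settings` function, and the choice to fail on missing variables are all assumptions for illustration, not the original module.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

try:
    from dotenv import load_dotenv  # shipped by python-dotenv
except ImportError:
    load_dotenv = None  # fall back to the plain process environment

# Variable names taken from the imports used in the notes.
REQUIRED = ("OPENAI_BASE_URL", "OPENAI_API_KEY", "OPENAI_BASE_MODEL")


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the three connection settings."""
    base_url: str
    api_key: str
    model: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read the settings from a mapping, defaulting to the process environment."""
    if env is None:
        if load_dotenv is not None:
            load_dotenv()  # copies KEY=VALUE pairs from a .env file, if one exists
        env = os.environ
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return Settings(
        base_url=env["OPENAI_BASE_URL"],
        api_key=env["OPENAI_API_KEY"],
        model=env["OPENAI_BASE_MODEL"],
    )
```

A module built this way could expose the names the examples import with lines such as `OPENAI_API_KEY = load_settings().api_key`, keeping the key itself in a `.env` file outside version control.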

Streaming output:

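```python
# Connection settings come from the project's local envs module.
from envs.envs import OPENAI_BASE_URL, OPENAI_API_KEY, OPENAI_BASE_MODEL
from langchain_openai import ChatOpenAI
import asyncio

llm = ChatOpenAI(
    model=OPENAI_BASE_MODEL,
    openai_api_key=OPENAI_API_KEY,
    openai_api_base=OPENAI_BASE_URL,
)


async def main():
    # astream returns an async iterator that yields message chunks as they arrive.
    stream = llm.astream("Please write a report about artificial intelligence")
    async for chunk in stream:
        # Print each piece immediately, without a newline, so the text appears incrementally.
        print(chunk.content, end="", flush=True)
    print()


if __name__ == "__main__":
    asyncio.run(main())
```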
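The streaming loop prints each piece and then discards it. If the full text is also needed afterwards, the pieces can be collected while they are printed. The sketch below shows that pattern without an API key: `fake_stream` is a hypothetical stand-in async generator, not a LangChain call, and `collect` is an illustrative helper name.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_stream(text: str, size: int = 4) -> AsyncIterator[str]:
    """Stand-in for a model stream: yields the text in pieces of `size` characters."""
    for start in range(0, len(text), size):
        await asyncio.sleep(0)  # yield control to the event loop, as a network read would
        yield text[start:start + size]


async def collect(stream: AsyncIterator[str], echo: bool = True) -> str:
    """Consume the stream, optionally echoing each piece, and return the joined text."""
    parts: List[str] = []
    async for piece in stream:
        if echo:
            print(piece, end="", flush=True)
        parts.append(piece)
    if echo:
        print()
    return "".join(parts)


if __name__ == "__main__":
    full_text = asyncio.run(collect(fake_stream("streamed text arrives in pieces")))
```

With the real stream from the example above, the appended pieces would be the `chunk.content` values instead of plain strings from the stand-in.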