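Author: Han Xiang Choong, Elastic

Learn how to build an agentic knowledge base and test its ability to query information sources based on context, use WebSearch for out-of-scope queries, and refine recommendations based on user intent.

Agent Builder is now available as a tech preview. Get started with an Elastic Cloud Trial, and check out the Agent Builder documentation here.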

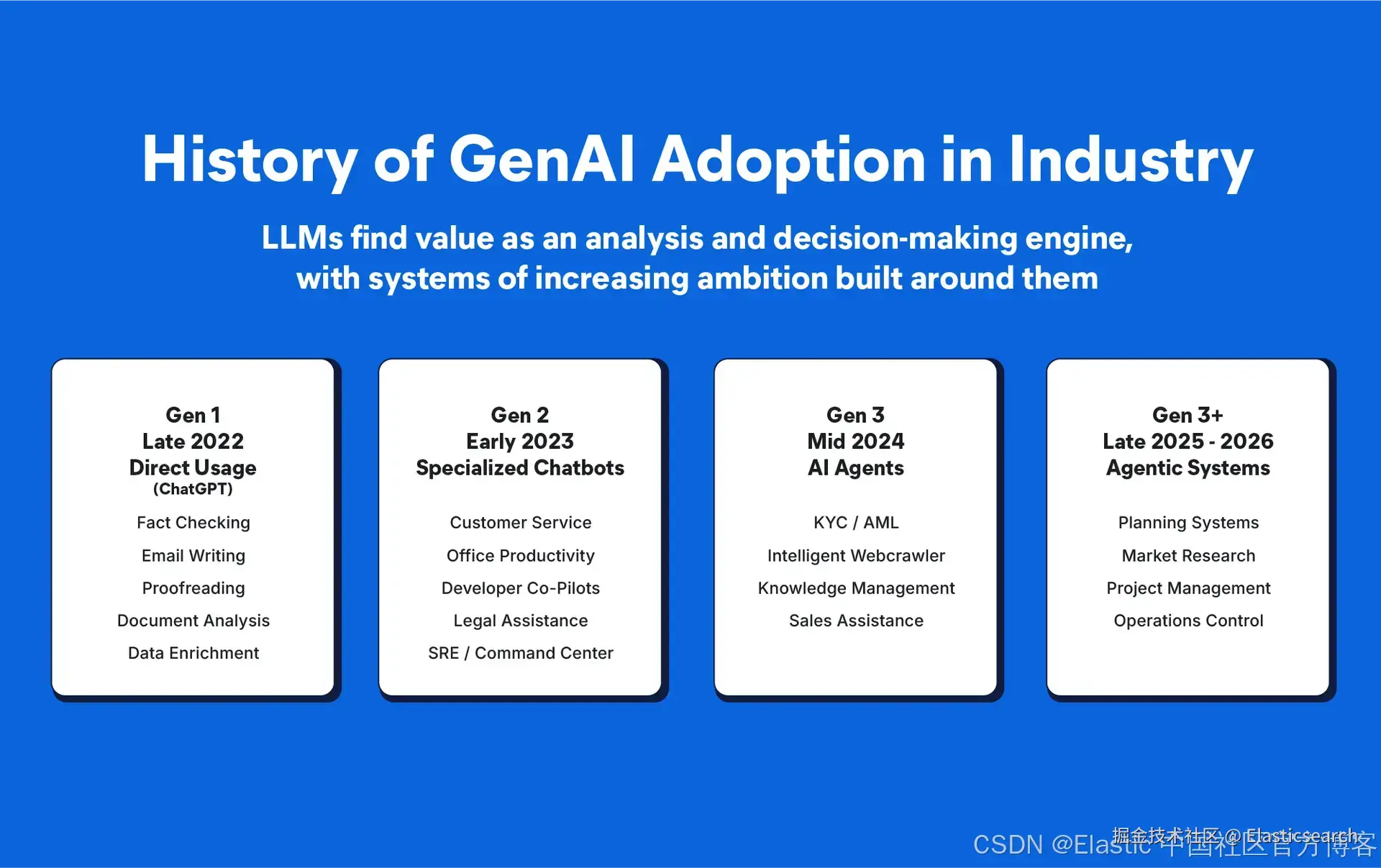

Applications of large language models across industries.

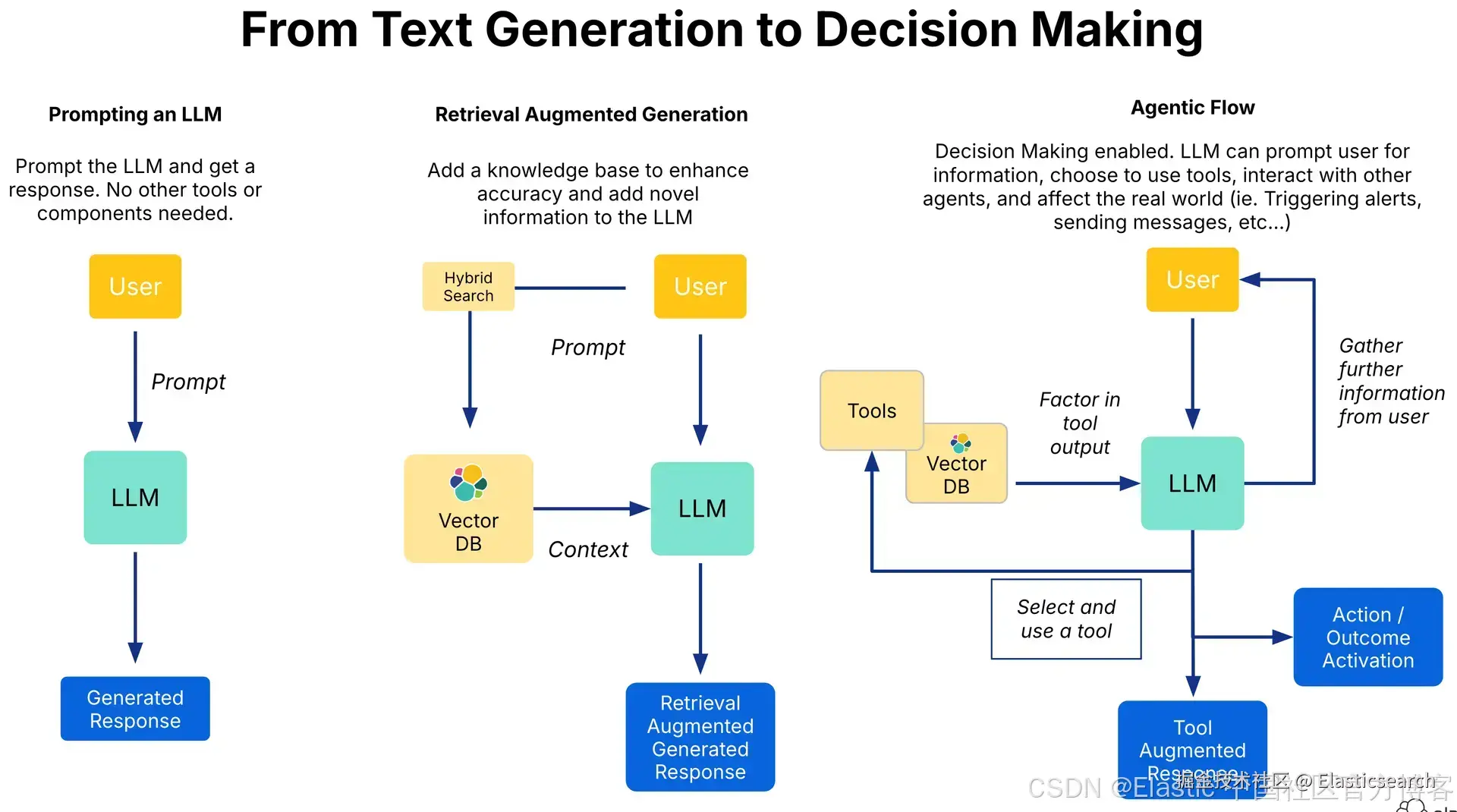

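In industry use cases, there are two main modes of interacting with large language models (LLMs). Direct querying, that is, conversing with an LLM on an ad hoc basis, is useful for getting help with tasks such as summarization, proofreading, information extraction, and non-domain-specific queries.

For specific business applications such as customer relationship management, IT systems maintenance, and investigative work, an LLM alone is not enough. Private, enterprise-specific information, information about niche interests and topics, or information from specific documents and written sources is often missing from an LLM's training data. In addition, real-world data is constantly changing and enterprise environments keep evolving. LLMs also tend to need reinforcement for factual accuracy. All of these factors limit the practical value of using LLMs directly in enterprise use cases, especially those that require up-to-date factual information on specific technical or business topics.

Retrieval Augmented Generation (RAG), which uses a searchable data store to retrieve information sources relevant to the context and intent of a user query, has been popularized as a way to address this shortcoming. A great deal of work has gone into implementing, evaluating, and improving the quality of RAG applications, and RAG has been widely adopted in enterprise use cases to boost productivity and automate workflows. However, RAG does not make use of the decision-making capabilities of large language models.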

Different modes of applying large language models.

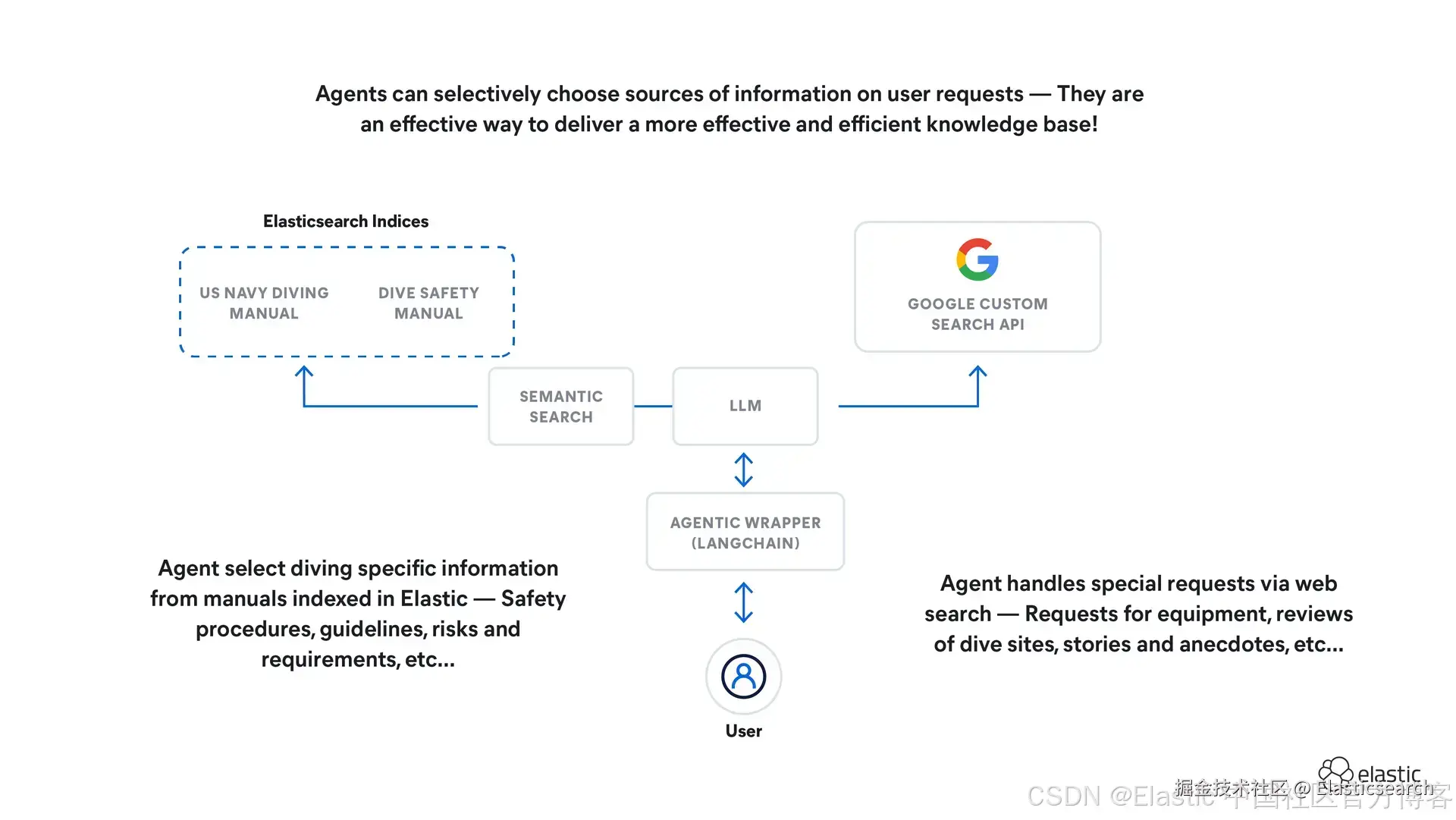

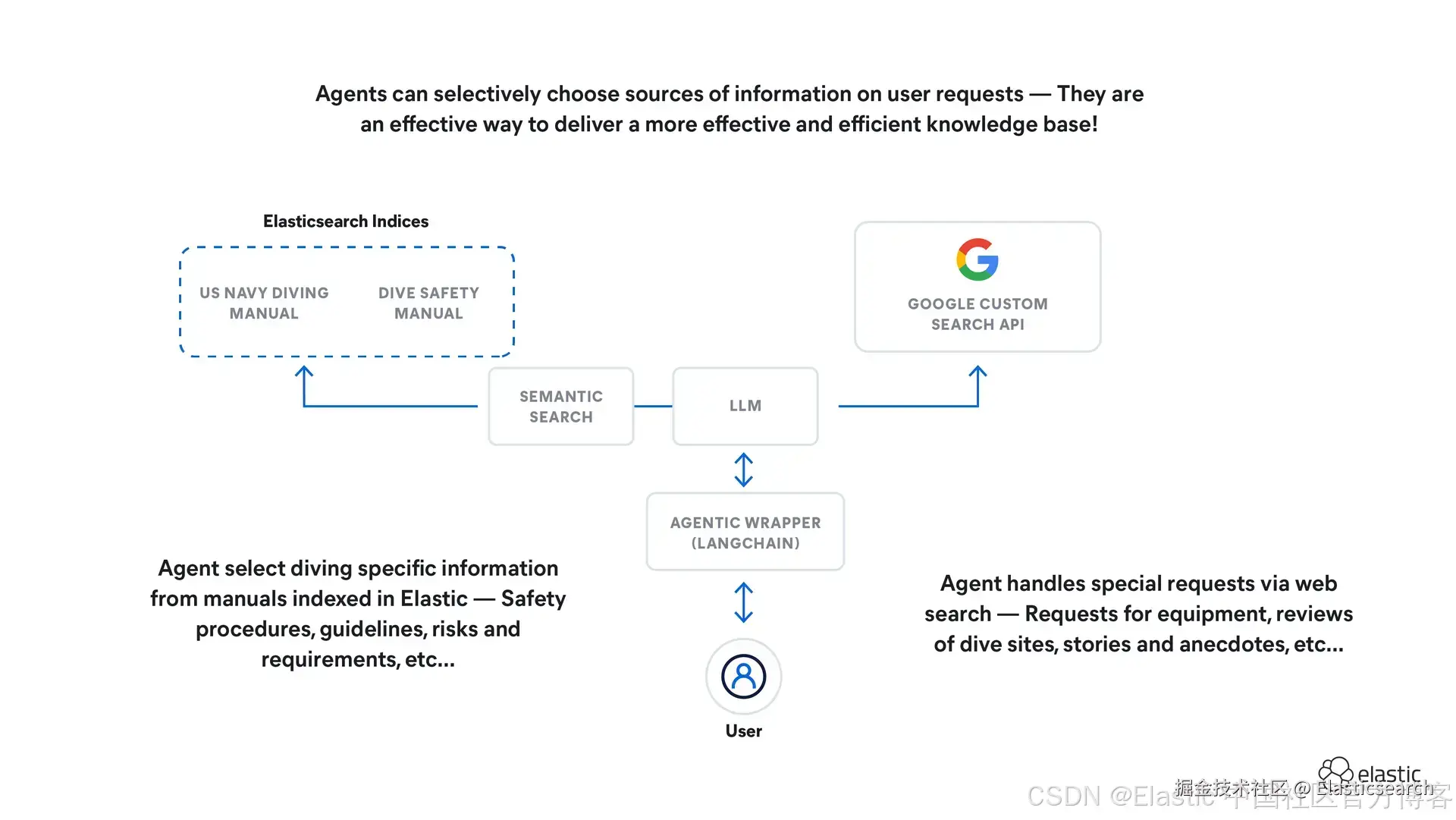

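The core of the agentic model is that the LLM can take specific actions based on user input. These actions may involve using tools to augment the LLM's existing capabilities. In this sense, RAG acts as a long-term memory store that the LLM agent can choose to use to augment and reinforce its answers to user queries. In a traditional RAG model the LLM queries one or more knowledge bases, whereas an agentic implementation lets the LLM choose from a set of knowledge bases. This makes question-answering behavior more flexible and can improve accuracy, because information from irrelevant knowledge bases is left out, reducing potential sources of noise. We can call such a system an "agentic knowledge base". Let's look at how to implement one with Elasticsearch.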
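The difference between querying every knowledge base and choosing one first can be illustrated with a toy sketch. This is not the implementation built later in the article: the `KnowledgeBase` class, its contents, and the keyword-overlap `choose_knowledge_base` function are all hypothetical stand-ins. In a real agent, the LLM makes the choice by reading tool descriptions.

```python
from dataclasses import dataclass


@dataclass
class KnowledgeBase:
    """Hypothetical in-memory knowledge base with a routing description."""
    name: str
    description: str
    passages: list

    def search(self, query):
        # Toy retrieval: return passages sharing at least one word with the query.
        words = set(query.lower().split())
        return [p for p in self.passages if words & set(p.lower().split())]


def traditional_rag(query, knowledge_bases):
    # Traditional RAG: query every knowledge base and concatenate the results.
    results = []
    for kb in knowledge_bases:
        results.extend(kb.search(query))
    return results


def choose_knowledge_base(query, knowledge_bases):
    # Stand-in for the LLM's decision: pick the knowledge base whose
    # description shares the most words with the query.
    words = set(query.lower().split())
    return max(knowledge_bases,
               key=lambda kb: len(words & set(kb.description.lower().split())))


def agentic_rag(query, knowledge_bases):
    # Agentic retrieval: select one knowledge base, then search only that one.
    kb = choose_knowledge_base(query, knowledge_bases)
    return kb.name, kb.search(query)


kbs = [
    KnowledgeBase("operations", "technical equipment and operations planning",
                  ["cold water equipment checklist", "operations planning steps"]),
    KnowledgeBase("safety", "general safety guidelines for recreational divers",
                  ["safety briefing for recreational divers", "cold water safety tips"]),
]

query = "cold water equipment"
print(traditional_rag(query, kbs))  # passages from both knowledge bases
print(agentic_rag(query, kbs))      # passages from the selected one only
```

In this toy run the traditional path also returns a passage from the safety knowledge base that only matches on "cold water", while the agentic path returns just the equipment passage. That is the noise reduction described above, in miniature.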

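Designing an agentic knowledge base

All of the code can be found in the GitHub repo.

The agentic knowledge base implemented in this article.

I recently became interested in diving after trying it and realizing that it could cure my long-standing thalassophobia, so I decided to build an agentic knowledge base specifically for diving. It draws on three sources: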

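- US Navy Dive Manual - contains a wealth of technical detail about diving operations and equipment.

- Diving Safety Manual - contains general guidelines and operating procedures aimed at recreational divers.

- Google Custom Search API - can search the web for any information not contained in the two manuals above.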

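The goal is for this Diving Assistant to be a one-stop tool for diving knowledge, able to answer any query, even ones outside the scope of the ingested knowledge bases. The LLM identifies the motivation behind a user query and selects the information source most likely to be relevant. I decided to use LangChain as the agentic wrapper and built a streamlit UI on top of it.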

Setting up endpoints

I start by creating a .env file and filling in the following variables:

```ini
ELASTIC_ENDPOINT=<ELASTIC CLOUD ENDPOINT>
ELASTIC_API_KEY=<ELASTIC CLOUD API KEY>

# Enable custom search API
# https://developers.google.com/custom-search/v1/introduction/?apix=true
GCP_API_KEY=<GCP API KEY>
GCP_PSE_ID=<GCP PSE ID>

AZURE_OPENAI_SYSTEM_PROMPT="You are a helpful assistant. Be as concise and efficient as possible. Convey maximum meaning in fewest words possible."

AZURE_OPENAI_ENDPOINT=<AZURE ENDPOINT>
AZURE_OPENAI_API_VERSION=<AZURE API VERSION>
AZURE_OPENAI_API_KEY=<AZURE API KEY>
AZURE_OPENAI_MODEL="gpt-4o-mini"
```

This project uses GPT-4o-Mini deployed on Azure OpenAI, along with the Google Custom Search API and an Elastic Cloud deployment to store the data. I also added a custom system prompt that encourages the LLM to avoid being verbose.
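Since several services depend on these variables, a quick check that none are missing can save some debugging. The following is a minimal sketch, not part of the original project: `missing_env_vars` is a hypothetical helper, and it assumes the .env file has already been loaded into the process environment (for example by a loader such as python-dotenv), which the article does not show.

```python
import os

# Variable names taken from the .env file above.
REQUIRED_VARS = [
    "ELASTIC_ENDPOINT", "ELASTIC_API_KEY",
    "GCP_API_KEY", "GCP_PSE_ID",
    "AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_VERSION",
    "AZURE_OPENAI_API_KEY", "AZURE_OPENAI_MODEL",
]


def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# Example with an explicit dict instead of the real environment.
example_env = {"ELASTIC_ENDPOINT": "https://example.invalid", "ELASTIC_API_KEY": ""}
print(missing_env_vars(env=example_env))
```

Calling `missing_env_vars()` with no arguments checks the real environment. An empty list means every variable is set.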

Ingestion and processing

The US Navy Dive Manual and the Diving Safety Manual are both PDFs, so the next step is to ingest them into the Elastic Cloud deployment. I set up this Python script, which uses Elastic's bulk API to upload documents to Elastic Cloud:

```python
import os
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor, as_completed
from elasticsearch import Elasticsearch, helpers  # elasticsearch==8.14.0
from tqdm import tqdm  # tqdm==4.66.4
from llama_index.core import SimpleDirectoryReader


def bulk_upload_to_elasticsearch(data, index_name, es, batch_size=500, max_workers=10):
    '''
    data: [ {document} ]
    document: {
        "id_": str
        "text": str
        ...
    }
    index_name: str
    es: Elasticsearch
    batch_size: int
    max_workers: int
    '''
    total_documents = len(data)
    success_bar = tqdm(total=total_documents, desc="Successful uploads", colour="green")
    failed_bar = tqdm(total=total_documents, desc="Failed uploads", colour="red")

    def create_action(doc):
        '''
        Define upload action from source documents
        '''
        return {
            "_index": index_name,
            "_id": doc["id_"],
            "body": doc["text"]
        }

    def read_and_create_batches(data):
        '''
        Yield document batches
        '''
        batch = []
        for doc in data:
            batch.append(create_action(doc))
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:
            yield batch

    def upload_batch(batch):
        '''
        Make bulk call for batch
        '''
        try:
            success, failed = helpers.bulk(es, batch, raise_on_error=False, request_timeout=45)
            # With raise_on_error=False, `failed` is a list of error items
            if isinstance(failed, list):
                failed = len(failed)
            return success, failed
        except Exception as e:
            print(f"Error during bulk upload: {str(e)}")
            return 0, len(batch)

    # Parallel execution of batch upload; progress bars update as batches complete
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        future_to_batch = {executor.submit(upload_batch, batch): batch for batch in read_and_create_batches(data)}
        for future in as_completed(future_to_batch):
            success, failed = future.result()
            success_bar.update(success)
            failed_bar.update(failed)

    # Read final counts from the progress bars, then close them
    total_uploaded = success_bar.n
    total_failed = failed_bar.n
    success_bar.close()
    failed_bar.close()

    return total_uploaded, total_failed


# This is connecting to ES Cloud via credentials stored in .env
# May have to change this to suit your env.
try:
    es_endpoint = os.environ.get("ELASTIC_ENDPOINT")
    es_client = Elasticsearch(
        es_endpoint,
        api_key=os.environ.get("ELASTIC_API_KEY")
    )
except Exception as e:
    print(f"Could not create Elasticsearch client: {e}")
    es_client = None

# ping() returns True when the cluster is reachable
if es_client is not None:
    print(es_client.ping())
```

After downloading the US Navy Dive Manual PDF and placing it in its own folder, I use LlamaIndex's SimpleDirectoryReader to load the PDF data, then trigger the bulk upload:

```python
reader = SimpleDirectoryReader(input_dir="./data")
documents = reader.load_data()
bulk_upload_to_elasticsearch([i.to_dict() for i in list(documents)],
                             "us_navy_dive_manual_raw",
                             es_client, batch_size=16, max_workers=10)
```

This sends all of the text content to Elastic Cloud, with each PDF page stored as a separate document in an index named us_navy_dive_manual_raw. No further processing is done, so uploading all 991 pages takes less than a second. The next step is semantic embedding in Elastic Cloud.
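The batching done by `read_and_create_batches` in the upload script can be looked at in isolation. The sketch below reproduces only that generator pattern, with stand-in strings instead of real pages. `make_batches` is a hypothetical name used for illustration.

```python
def make_batches(items, batch_size):
    """Yield consecutive lists of at most `batch_size` items."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final, possibly smaller, batch
        yield batch


pages = [f"page-{i}" for i in range(1, 38)]  # 37 stand-in pages
sizes = [len(b) for b in make_batches(pages, batch_size=16)]
print(sizes)  # [16, 16, 5]

# With 991 pages and batch_size=16, as in the call above:
print(len(list(make_batches(range(991), 16))))  # 62
```

Because the generator yields batches lazily, the thread pool can start submitting uploads without first materializing every batch in memory.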

Semantic data embedding and chunking

In my Elastic Cloud DevTools console, I first deploy the ELSER v2 model using the Elastic inference API.

```
PUT _inference/sparse_embedding/elser_v2
{
  "service": "elasticsearch",
  "service_settings": {
    "num_allocations": 1,
    "num_threads": 8,
    "model_id": ".elser_model_2_linux-x86_64"
  },
  "chunking_settings": {
    "strategy": "sentence",
    "max_chunk_size": 250,
    "sentence_overlap": 1
  }
}
```

Then I defined a simple pipeline. Each document stores one page of the dive manual's text in the body field, so I copy the contents of body into a field called semantic_content.

```
PUT _ingest/pipeline/diving_pipeline
{
  "processors": [
    {
      "set": {
        "field": "semantic_content",
        "copy_from": "body",
        "if": "ctx.body != null"
      }
    }
  ]
}
```

Then I created a new index named us_navy_dive_manual and set semantic_content as a semantic_text field:

```
PUT us_navy_dive_manual
{
  "mappings": {
    "properties": {
      "semantic_content": {
        "type": "semantic_text",
        "inference_id": "elser_v2"
      }
    }
  }
}
```

Then I triggered a reindex job. Data now flows from us_navy_dive_manual_raw to ELSER for chunking and embedding, and is reindexed into us_navy_dive_manual, ready for use.

```
POST _reindex?slices=auto&wait_for_completion=false
{
  "source": {
    "index": "us_navy_dive_manual_raw",
    "size": 4
  },
  "dest": {
    "index": "us_navy_dive_manual",
    "pipeline": "diving_pipeline"
  },
  "conflicts": "proceed"
}
```

I repeated this process for the Diving Safety Manual, and with that simple flow, data ingestion was complete.
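To get a feel for what the `chunking_settings` on the inference endpoint ask for, here is a rough Python illustration of sentence-based chunking with a one-sentence overlap. It is only an approximation written for this explanation, not Elastic's actual chunking algorithm. The regex sentence splitter, the word-count limit, and the `chunk_by_sentence` name are all assumptions.

```python
import re


def chunk_by_sentence(text, max_chunk_size=250, sentence_overlap=1):
    """Group sentences into chunks of roughly `max_chunk_size` words.

    Each new chunk starts with the last `sentence_overlap` sentences of the
    previous chunk, so neighbouring chunks share some context.
    """
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current = [], []
    for sentence in sentences:
        candidate = current + [sentence]
        n_words = sum(len(s.split()) for s in candidate)
        if current and n_words > max_chunk_size:
            # Close the current chunk and carry the overlap into the next one.
            chunks.append(" ".join(current))
            overlap = current[-sentence_overlap:] if sentence_overlap else []
            current = overlap + [sentence]
        else:
            current = candidate
    if current:
        chunks.append(" ".join(current))
    return chunks


text = "Check your gear. Plan the dive. Dive the plan. Surface slowly."
for chunk in chunk_by_sentence(text, max_chunk_size=6, sentence_overlap=1):
    print(chunk)
```

With the tiny limit of 6 words used here, each chunk repeats the last sentence of the one before it, which is the effect `sentence_overlap: 1` is meant to have on the manual's pages.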

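Tools for agentic search

This agent is relatively simple, so I use LangChain's AgentExecutor, which creates an agent and bundles it with a set of tools. More complex decision flows can be implemented with LangGraph, which we will cover in a future blog. Here we focus on the agent-related parts. For details of the actual streamlit UI, see the GitHub repo.

I created two tools for my agent. The first is the ElasticSearcher class, which runs a semantic search against an Elastic index and returns the text of the top 10 matching documents.

```python
import os

from elasticsearch import Elasticsearch


class ElasticSearcher:
    def __init__(self):
        self.client = Elasticsearch(
            os.environ.get("ELASTIC_ENDPOINT"),
            api_key=os.environ.get("ELASTIC_API_KEY")
        )

    def search(self, query, index="us_navy_dive_manual", size=10):
        # Semantic query against the semantic_text field defined earlier
        response = self.client.search(
            index=index,
            body={
                "query": {
                    "semantic": {
                        "field": "semantic_content",
                        "query": query
                    }
                }
            },
            size=size
        )
        # Return the raw page text of each hit, one page per line group
        return "\n".join([hit["_source"].get("body", "No Body")
                          for hit in response["hits"]["hits"]])
```

The second tool is the Googler class, which calls the Google Custom Search API to run a general web search.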

```python
import os

import requests
from bs4 import BeautifulSoup
from googleapiclient.discovery import build


class Googler:
    def __init__(self):
        self.service = build('customsearch', 'v1', developerKey=os.getenv("GCP_API_KEY"))

    def scrape(self, url):
        # Fetch a page and return up to 5000 characters of visible text, or None on failure
        try:
            response = requests.get(url, timeout=10)
            if response.status_code == 200:
                soup = BeautifulSoup(response.text, 'html.parser')
                for script in soup(["script", "style"]):
                    script.decompose()
                text = soup.get_text()
                lines = (line.strip() for line in text.splitlines())
                # Split on double spaces (assumed; the usual BeautifulSoup text-cleanup idiom)
                chunks = (phrase.strip() for line in lines for phrase in line.split("  "))
                return '\n'.join(chunk for chunk in chunks if chunk)[:5000]
            return None
        except Exception:
            return None

    def search(self, query, n=5):
        results = self.service.cse().list(q=query, cx=os.getenv("GCP_PSE_ID"), num=n).execute()
        scraped_data = []
        for item in results.get('items', []):
            url = item['link']
            title = item['title']
            # Fall back to the search snippet when the page cannot be scraped
            content = self.scrape(url) or item['snippet']
            scraped_data.append(f"Page: {title}\nURL: {url}\n\n{content}\n")
        return "\n".join(scraped_data)
```

Then I created a set of tools for the agent. Each tool's description is an important part of the prompt engineering, because the agent relies mainly on these descriptions when choosing which tool to use for a user query.

```python
from langchain_core.tools import Tool

googler = Googler()
elastic = ElasticSearcher()

tools = [
    Tool(
        name="WebSearch",
        func=lambda q: googler.search(q, n=3),
        description="Search the web for information. Use for current events or general knowledge or to complement with additional information."
    ),
    Tool(
        name="NavyDiveManual",
        func=lambda q: elastic.search(q, index="us_navy_dive_manual"),
        description="Search the Operations Dive Manual. Use for diving procedures, advanced or technical operational planning, resourcing, and technical information."
    ),
    Tool(
        name="DivingSafetyManual",
        func=lambda q: elastic.search(q, index="diving_safety_manual"),
        description="Search the Diving Safety Manual. Use for generic diving safety protocols and best practices."
    )
]
```

Next, I define an LLM using the AzureChatOpenAI abstraction:

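```python
import os

from langchain_openai import AzureChatOpenAI

llm = AzureChatOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version=os.getenv("AZURE_OPENAI_API_VERSION"),
    # Deployment name; assumed to come from AZURE_OPENAI_MODEL in .env
    deployment_name=os.getenv("AZURE_OPENAI_MODEL"),
    streaming=False
)
```

I also create a custom prompt for the LLM, telling it how to use the tools and their outputs.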

```python
from langchain_core.prompts import PromptTemplate

prompt = PromptTemplate.from_template("""Answer the following questions as best you can. You have access to the following tools:
{tools}
You should use multiple tools in conjunction to promote completeness of information.
Be comprehensive in your answer.
Use the following format:
Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question
Question: {input}
{agent_scratchpad}""")
```

Finally, I define the agent, passing in the LLM, the prompt, and the toolset, and integrate it into the rest of the UI.

```python
from langchain.agents import AgentExecutor, create_react_agent

agent = create_react_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=False)
```

With that, we are ready to test our agentic knowledge base.

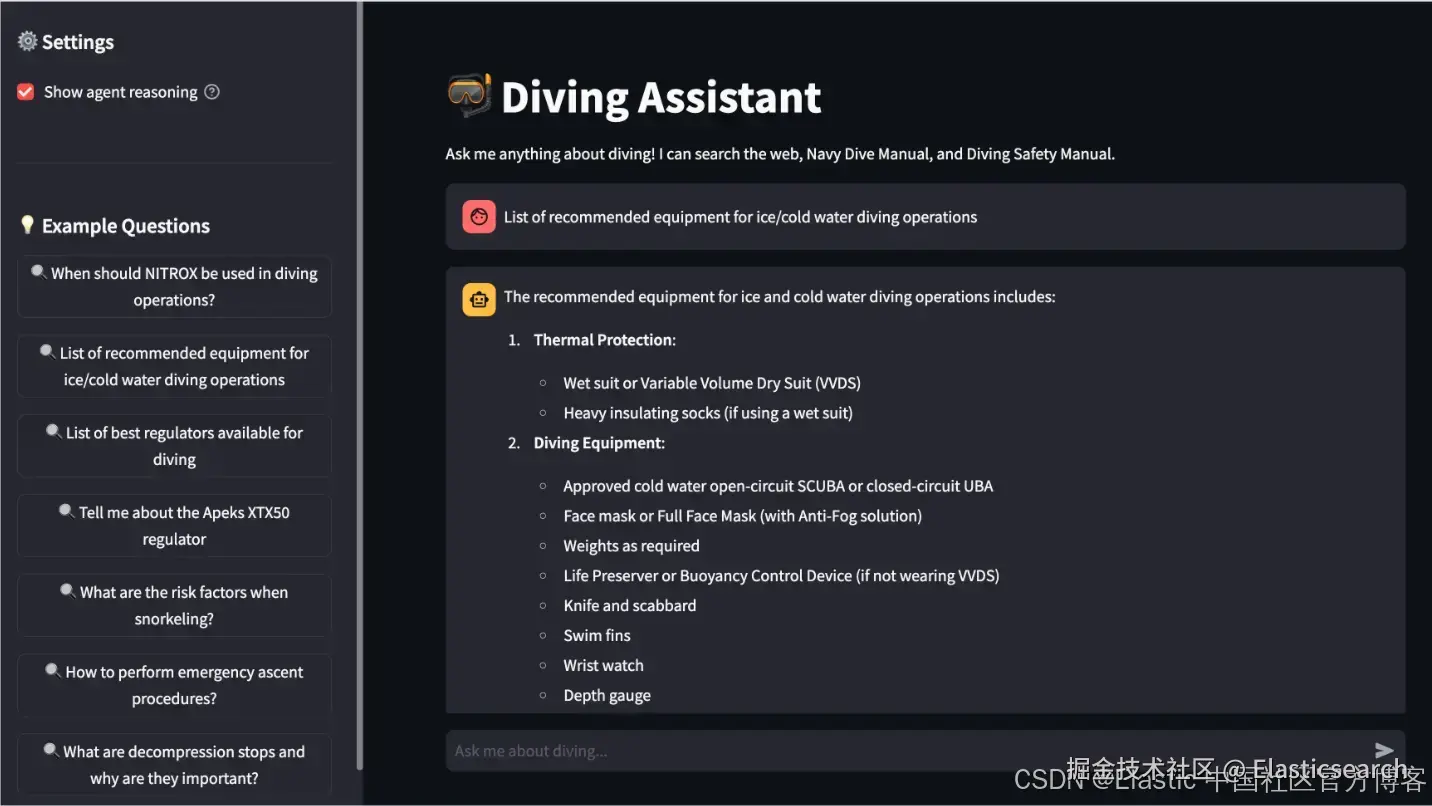

The UI implemented for the agentic knowledge base.
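The Thought/Action/Action Input format in the prompt is what allows the agent's plain-text output to be turned into tool calls. As a rough illustration of that step, the sketch below parses one such output and dispatches it to a tool. It is not LangChain's actual parser: `parse_step`, the regex, and the stub `WebSearch` function are hypothetical and simplified.

```python
import re

# Matches "Action: <tool>" followed by "Action Input: <input>".
ACTION_RE = re.compile(
    r"Action:\s*(?P<tool>.+?)\s*\n+\s*Action Input:\s*(?P<input>.+)",
    re.DOTALL,
)


def parse_step(llm_output):
    """Return ("final", answer) or ("action", tool_name, tool_input)."""
    if "Final Answer:" in llm_output:
        return ("final", llm_output.split("Final Answer:", 1)[1].strip())
    match = ACTION_RE.search(llm_output)
    if match is None:
        raise ValueError("Could not find an Action or a Final Answer")
    tool_input = match.group("input").strip().strip('"')
    return ("action", match.group("tool").strip(), tool_input)


# Stub tool registry standing in for the real tools.
stub_tools = {"WebSearch": lambda q: f"(stub results for: {q})"}

step = (
    "Thought: I need to gather information about the Apeks XTX50 regulator.\n"
    "Action: WebSearch\n"
    'Action Input: "Apeks XTX50 regulator review features specifications"'
)

kind, *rest = parse_step(step)
if kind == "action":
    tool_name, tool_input = rest
    observation = stub_tools[tool_name](tool_input)
    print(f"Observation: {observation}")
```

In the real agent, the observation is appended to the scratchpad and the LLM is called again, repeating until it emits a Final Answer.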

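Test 1: Retrieving knowledge from a specific knowledge base

First, we should test that the knowledge base component works as intended. Let's start with a detailed technical question. The agent should choose the correct knowledge base, retrieve some information, and use it to generate an answer.

```text
List of recommended equipment for ice/cold water diving operations
```

The UI displays the agent's thought process, and its first thought is to consult a specialized diving manual: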

```text
Thought: To provide a comprehensive list of recommended equipment for ice
or cold water diving operations, I should consult specialized diving
manuals that cover operational procedures and safety protocols.
This will ensure that I include all necessary items for safety and
efficiency in such challenging environments.

Action: NavyDiveManual

Action Input: "recommended equipment for ice cold water
diving operations"
```

The agent uses the NavyDiveManual tool to run a semantic search against the us_navy_dive_manual index. The result is the following information:

```text
CHAPTER 11 --- Ice and Cold Water Diving Operations 11-9
A detailed reconnaissance of the dive site will provide the
planner with information that is helpful in deciding what ancillary equipment is
required. Diving under ice will require special accessory equipment such as a line
with lights/strobes for under water navigation, ice-cutting tools, platforms, engine
protection kits, and stainless steel ice screws, quick draw, and carabineers.
The method of cutting the hole through the ice depends on ice thickness and availability
of equipment. Normally, two or more of the following tools are used: hand
ice chipper, ice handsaw, ice auger, chain saw, thermal ice cutter or blasting equipment.
In addition, equipment to lift the ice block, remove the slush, and mark ...
```

The LLM then uses this information to generate an answer:

```text
The recommended equipment for ice and cold water diving operations includes:

Thermal Protection:

* Wet suit or Variable Volume Dry Suit (VVDS)
* Heavy insulating socks (if using a wet suit)
* Diving Equipment:
* Approved cold water open-circuit SCUBA or closed-circuit UBA
```

The answer is exactly what we wanted. Great!

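Test 2: Iteratively refining knowledge collection

The next test is to see whether the agent can select multiple knowledge sources to refine its answer. Let's ask a more open-ended question:

```text
What are the risk factors when snorkeling?
```

The agent's first thought is to search Google for specific information about risk factors and hazards: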

```text
Thought: To provide a comprehensive answer to the risk factors associated with snorkeling,
I should gather information on general safety protocols and specific risks from both diving
and snorkeling perspectives. I will start by searching for general snorkeling risks and
then look into specific safety guidelines related to diving and snorkeling.

Action: WebSearch
Action Input: "risk factors when snorkeling"
```

This returns web results such as the following:

```text
Factors Contributing to Snorkel Drowning in Hawai'i - PMC
URL: https://pmc.ncbi.nlm.nih.gov/articles/PMC8899085/
Causes of the extraordinarily high and increasing incidence of snorkeler drownings in Hawai'i
have remained unexplained for years. Defining the mechanisms and factors predisposing to
drowning while snorkeling is needed to provide recommendations to substantially mitigate
the incidence of this form of preventable death. The mechanisms of drowning are described
and insight into the predisposing factors are explored in this study...
```

The agent then chooses to consult the diving_safety_manual index, taking the corresponding action to retrieve safety protocols and guidelines.

```text
Action: DivingSafetyManual
Action Input: "snorkeling safety protocols"
```

This returns relevant information from the DivingSafetyManual tool:

```text
Pre-Snorkel briefing
Immediately before snorkelling commences, and using site diagrams or
photos as appropriate, the snorkeller supervisor should emphasise
the following points:
• On-site environmental conditions
• Boundaries of the snorkelling area
• Times when snorkelling is being conducted
• Specific site hazards
• Location of lookout
• Emergency and recall signals
• Buddy and guided group procedures
```

Finally, the LLM provides a comprehensive answer covering both risk factors and safety guidelines:

```text
The risk factors when snorkeling include:
Environmental Conditions: Strong currents, waves, and marine life can pose
immediate threats. Snorkelers should be aware of local weather and water
conditions before entering.
Physical Fitness: Snorkeling is a strenuous activity that can be
challenging for individuals with pre-existing medical conditions
such as heart disease, respiratory issues (e.g., asthma), or those
who are not strong swimmers.
...
To mitigate these risks, snorkelers should engage in pre-snorkel briefings,
wear personal flotation devices, ensure proper equipment checks, and
maintain awareness of their environment and physical limits.
```

Test 3: Searching for out-of-scope questions

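The final test is to see what happens when the user asks a question that is clearly outside the scope of the prepared knowledge bases. Let's ask about a specific diving product:

```text
Tell me about the Apeks XTX50 regulator
```

The agent's first thought is to search external web pages for relevant information: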

```text
Thought: I need to gather information about the Apeks XTX50 regulator,
including its features, specifications, and performance in diving conditions.
I will begin with a web search for comprehensive details.
Action: WebSearch
Action Input: "Apeks XTX50 regulator review features specifications"
```

It finds a wealth of information on commercial and diving enthusiast sites, and returns both technical specifications and user feedback:

```text
The Apeks XTX50 is a high-performance scuba diving regulator designed for
divers who seek reliability and advanced features at a moderate price point.
Here are the key features and specifications:
Features:
First Stage:
Utilizes the DST (Diver Adjustable) first stage with an environmentally
sealed design to prevent water ingress. Over-balanced diaphragm technology
provides superior performance as divers descend, with medium pressure gas
increasing faster than ambient pressure.
...
Divers appreciate the XTX50's reliability and comfort, making it a popular
choice for both recreational and technical diving.
The regulator is lauded for its performance in colder conditions, with
many users noting its effectiveness during deep dives.
Some divers have raised concerns about the availability of parts for maintenance,
as Apeks does not sell parts directly to consumers.
...
```

The agent did not choose to search the US Navy Dive Manual, which focuses on operational planning, nor did it use the DivingSafetyManual tool.

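Conclusion

In a traditional RAG implementation, we might choose to force the LLM to search and use information from all three data sources at once, but this would reduce accuracy by introducing noise from irrelevant information. With an agentic knowledge base, we see the LLM make targeted searches of specific knowledge sources based on user intent and context. The agent is able to refine its knowledge collection by building on an initial search with information gathered from other sources.

The agent is also able to handle questions outside the scope of its prepared data, while excluding knowledge bases that are not relevant to the query, a significant enhancement over the traditional RAG model.

This agentic knowledge base concept offers an elegant way to combine many different sources into one coherent and comprehensive system. Next steps could be to expand the range of actions that can be taken and the variety of information that can be referenced. Introducing workflows for fact-checking and cross-referencing would help overall reliability, and tools for specialized capabilities such as calculation would be a very interesting direction to explore.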
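As one possible starting point for a calculation tool, here is a small sketch of a function that safely evaluates basic arithmetic from a string. It is not part of the project described above. The `calculate` name and the choice to walk the syntax tree with Python's `ast` module (rather than calling `eval`) are assumptions made for illustration.

```python
import ast
import operator

# Only these arithmetic operators are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculate(expression):
    """Evaluate a basic arithmetic expression and return the result as text."""
    def _eval(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError("Unsupported expression")

    try:
        return str(_eval(ast.parse(expression, mode="eval").body))
    except (ValueError, SyntaxError, ZeroDivisionError) as exc:
        # Return errors as text so an agent can read them as an observation.
        return f"Error: {exc}"


print(calculate("2 * (3 + 4)"))   # 14
print(calculate("(30 / 10) + 1"))  # 4.0
```

Because it takes a string and returns a string, a function like this could be registered alongside the existing tools with its own name and description, leaving the agent to decide when a query calls for arithmetic.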