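In the previous article, we covered how to call a large language model with human-in-the-loop interaction under the LangChain 1.0 framework. Today we focus on another core hands-on scenario: the workflow for calling MCP (Model Context Protocol) servers, along with fixes for the errors that commonly come up in practice.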

1. Groundwork: the official MCP call example

According to the official documentation, calling MCP looks like this:

python

from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain.agents import create_agent

client = MultiServerMCPClient(
    {
        "math": {
            "transport": "stdio",  # Local subprocess communication
            "command": "python",
            # Absolute path to your math_server.py file
            "args": ["/path/to/math_server.py"],
        },
        "weather": {
            "transport": "http",  # HTTP-based remote server
            # Ensure you start your weather server on port 8000
            "url": "http://localhost:8000/mcp",
        }
    }
)

tools = await client.get_tools()
agent = create_agent(
    "claude-sonnet-4-5-20250929",
    tools
)
math_response = await agent.ainvoke(
    {"messages": [{"role": "user", "content": "what's (3 + 5) x 12?"}]}
)
weather_response = await agent.ainvoke(
    {"messages": [{"role": "user", "content": "what is the weather in nyc?"}]}
)

2. Hands-on adaptation: connecting to the Baidu Maps MCP to get the weather

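Building on the official example, we swap in the Baidu Maps MCP server (which ships with a weather query tool) to ground the example in a concrete business scenario. The full code is as follows: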

python

# Call MCP through a LangChain agent
import asyncio

from langchain.agents import create_agent
from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_openai import ChatOpenAI

model = ChatOpenAI(
    streaming=True,
    model='deepseek-chat',
    openai_api_key="<API KEY>",  # placeholder: your DeepSeek API key
    openai_api_base='https://api.deepseek.com',
    max_tokens=1024,
    temperature=0.1
)

async def mcp_agent():
    # Launch the Baidu Maps MCP server as a local subprocess over stdio
    # ("cmd /c npx ..." is the Windows form of the command)
    client = MultiServerMCPClient(
        {
            "baidu-map": {
                "command": "cmd",
                "args": [
                    "/c",
                    "npx",
                    "-y",
                    "@baidumap/mcp-server-baidu-map"
                ],
                "env": {
                    "BAIDU_MAP_API_KEY": "<BAIDU API KEY>"  # placeholder
                },
                "transport": "stdio",
            },
        }
    )
    tools = await client.get_tools()
    # Print the names of the tools exposed by the server
    for mcp_tool in tools:
        print(mcp_tool.name)
    agent = create_agent(
        model=model,
        tools=tools,
        system_prompt="你是一个友好的助手",  # "You are a friendly assistant"
    )
    return agent

async def use_mcp(messages):
    agent = await mcp_agent()
    response = await agent.ainvoke(messages)
    return response

async def main():
    # "How is the weather in Hangzhou today?"
    messages = {"messages": [{"role": "user", "content": "今天杭州天气怎么样?"}]}
    response = await use_mcp(messages)
    print(response["messages"][-1].content)

if __name__ == "__main__":
    asyncio.run(main())

Key point: MCP tools are invoked asynchronously, so you must call the agent with ainvoke() and await the result, then start the coroutine from the entry point with asyncio.run().
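The calling pattern in that note can be tried without any MCP server or API key. The following is a minimal sketch using only the standard library; `FakeAgent` is a hypothetical stand-in (not a LangChain class) whose only purpose is to mimic the shape of an async `ainvoke()` call.

```python
import asyncio


class FakeAgent:
    """Hypothetical stand-in for an agent; only the async call shape matters."""

    async def ainvoke(self, payload: dict) -> dict:
        await asyncio.sleep(0)  # placeholder for model and tool round trips
        question = payload["messages"][-1]["content"]
        reply = {"role": "assistant", "content": f"echo: {question}"}
        return {"messages": payload["messages"] + [reply]}


async def ask(agent: FakeAgent, question: str) -> str:
    # ainvoke() returns a coroutine, so it has to be awaited
    response = await agent.ainvoke(
        {"messages": [{"role": "user", "content": question}]}
    )
    return response["messages"][-1]["content"]


# asyncio.run() creates the event loop and drives the coroutine to completion
answer = asyncio.run(ask(FakeAgent(), "weather in Hangzhou?"))
print(answer)
```

Calling `agent.ainvoke(...)` without `await` would only create a coroutine object and never run it, which is why the await and the single `asyncio.run()` at the entry point go together.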

三、核心问题:JSON反序列化报错排查与解决

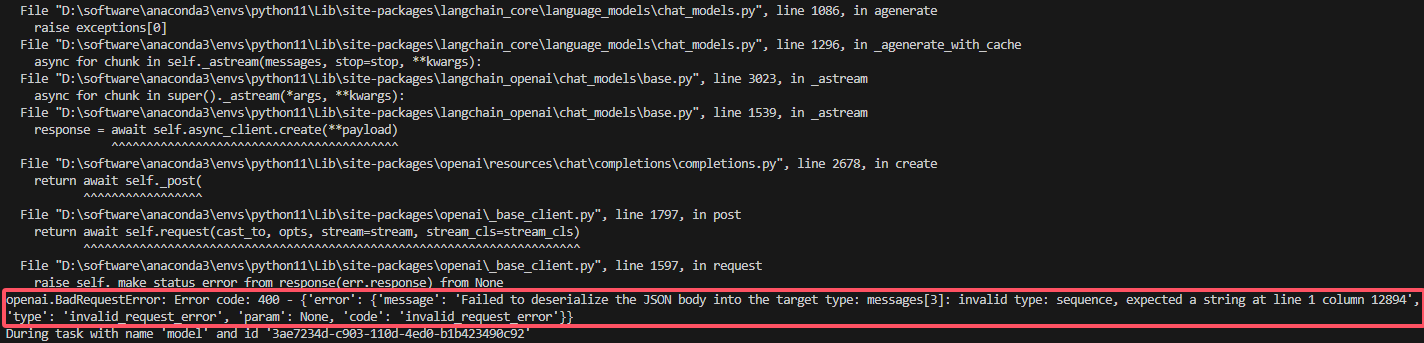

上述代码运行后,出现了关键报错:"Failed to deserialize the JSON body into the target type"(JSON反序列化失败)。

3.1****报错信息核心片段

3.2****问题定位

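Searching suggests that the problem is most likely tied to the DeepSeek model (other models were not tested). Comparing environments narrowed it down further:

- Failing environment: Python 3.11 + langchain-mcp-adapters 0.2.1

- Working environment: Python 3.10 + langchain-mcp-adapters 0.1.11

Root cause: the data returned by MCP, in the form [TextContent(type='text', text='students_scores', annotations=None, meta=None)], cannot be passed to DeepSeek directly; it needs to be wrapped in a ToolMessage.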
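To make the shape of that data concrete, here is a minimal sketch using only the standard library. `FakeTextContent` is a hypothetical stand-in for `mcp.types.TextContent`, and `blocks_to_text` is an illustrative helper that is not part of langchain-mcp-adapters. It only shows what unpacking a list of text blocks into plain text can look like; it is not the fix used in this article.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class FakeTextContent:
    """Hypothetical stand-in for mcp.types.TextContent (illustration only)."""
    type: str
    text: str
    annotations: Optional[Any] = None
    meta: Optional[Any] = None


def blocks_to_text(blocks: list) -> str:
    """Join the text of every text block into one plain string."""
    return "\n".join(b.text for b in blocks if getattr(b, "type", None) == "text")


# Same shape as the MCP result quoted above: a list of content blocks
raw_result = [FakeTextContent(type="text", text="students_scores")]
print(blocks_to_text(raw_result))
```

The point of the sketch is the mismatch itself: the tool returns a list of objects, while the consumer of a tool result typically expects something it can serialize as message content.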

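3.3 Solution: a custom interceptor that repackages the data

Newer versions of the LangChain MCP adapter package (langchain-mcp-adapters) support custom tool interceptors, which can post-process the result of a tool call. We use an interceptor to unpack the data returned by MCP and wrap it in a ToolMessage, which resolves the deserialization problem. The full revised code: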

python

from langchain_openai import ChatOpenAI

from openai import OpenAI

from langchain_mcp_adapters.client import MultiServerMCPClient

from langchain_mcp_adapters.interceptors import MCPToolCallRequest

from mcp.types import TextContent

import json

from langchain.agents import create_agent, AgentState

import asyncio

from langchain.messages import ToolMessage

from langchain.agents.middleware import before_model, after_model

from langgraph.runtime import Runtime

from typing import Any

#from mcp.types import Message

# 配置大模型服务

llm = ChatOpenAI(

streaming=True,

model='deepseek-chat',

openai_api_key=<APK KEY>,

openai_api_base='https://api.deepseek.com',

max_tokens=1024,

temperature=0.1

)

system_prefix = """

请讲中文

当用户提问中涉及天气时,需要使用百度地图的MCP工具进行查询;

当用户提问中涉及电影信息时,需要使用postgresql MCP进行数据查询和操作,仅返回前十条数据,表结构如下:

## 电影表(tb_movie)

"table_name": "tb_movie",

"description": "电影表",

"columns":[

{"column_name": "id","chinese_name": "标识码","data_type": "int"},

{"column_name": "name","chinese_name": "电影名称","data_type": "varchar"},

{"column_name": "actor","chinese_name": "主演","data_type": "varchar"},

{"column_name": "director","chinese_name": "导演","data_type": "varchar"},

{"column_name": "category","chinese_name": "类型","data_type": "varchar"},

{"column_name": "country","chinese_name": "制片国家/地区","data_type": "varchar"},

{"column_name": "language","chinese_name": "语言","data_type": "varchar"},

{"column_name": "release_date","chinese_name": "上映日期","data_type": "varchar"},

{"column_name": "runtime","chinese_name": "片长","data_type": "varchar"},

{"column_name": "abstract","chinese_name": "简介","data_type": "varchar"},

{"column_name": "score","chinese_name": "评分","data_type": "varchar"}

]

当用户提及画图时,返回数据按照如下格式输出,输出图片URL后直接结束,不要输出多余的内容:

1.查询结果:{}

2.图表展示:{图片URL}

否则,直接输出返回结果。

"""

async def append_structured_content(request: MCPToolCallRequest, handler):

"""Append structured content from artifact to tool message."""

result = await handler(request)

runtime = request.runtime

print("========================result.content:", result.content[-1].text)

if result.structuredContent:

result.content += [

TextContent(type="text", text=json.dumps(result.structuredContent)),

]

return ToolMessage(content=result.content, tool_call_id=runtime.tool_call_id)

async def mcp_agent():

client = MultiServerMCPClient(

{

"postgres": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"@modelcontextprotocol/server-postgres",

"postgresql://postgres:123456@localhost:5432/movie"

],

"transport": "stdio",

},

"mcp-server-chart": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"@antv/mcp-server-chart"

],

"transport": "stdio",

},

"baidu-map": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"@baidumap/mcp-server-baidu-map"

],

"env": {

"BAIDU_MAP_API_KEY": <BAIDU API KEY>

},

"transport": "stdio",

}

}, tool_interceptors=[append_structured_content]

)

tools2 = await client.get_tools()

for tool in tools2:

print(tool.name)

agent = create_agent(

model=llm,

tools=tools2,

system_prompt=system_prefix,

)

return agent

async def use_mcp(query:str):

agent = await mcp_agent()

#response = await agent.ainvoke(messages)

try:

response = await agent.ainvoke(

{"messages": [{"role": "user", "content": str}]}

)

print("============================================================")

print(response['messages'][-1].content)

except Exception as e:

print(str(e))

return None

return response

if __name__ == "__main__":

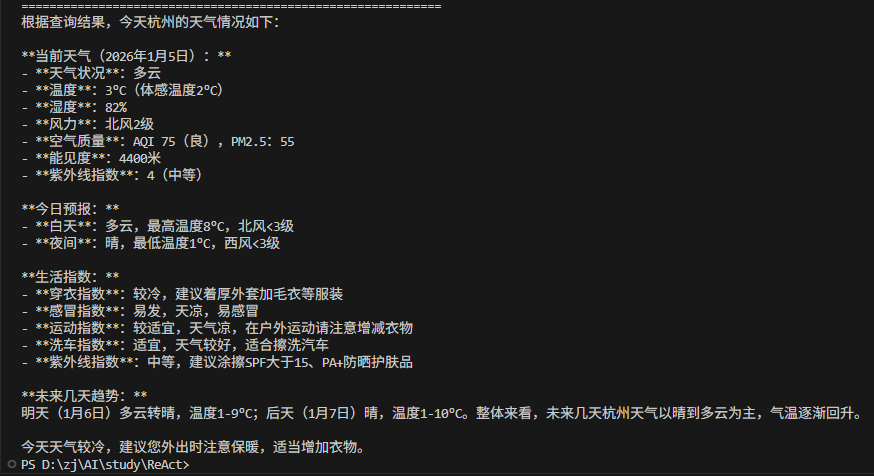

asyncio.run(use_mcp("今天杭州天气怎么样?"))3.4****验证结果

运行优化后代码,成功获取正确天气信息:

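As shown, we now get the correct result. The key piece is the append_structured_content() function: it takes the result of the MCPToolCallRequest, parses it, and wraps it in a ToolMessage. Registering that function in the tool_interceptors argument of MultiServerMCPClient is what makes the call succeed.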
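The interceptor mechanism itself is a plain wrapping pattern: each interceptor receives the request and the next handler, and is free to transform what the handler returns. Below is a minimal sketch of that pattern using only the standard library. `FakeRequest`, `FakeToolMessage`, `fake_handler`, `bind` and `call_tool` are all hypothetical names for illustration, and the chaining logic is an assumption about how such a chain can be built, not the adapter library's actual implementation.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class FakeRequest:
    """Hypothetical stand-in for a tool call request."""
    name: str
    args: dict


@dataclass
class FakeToolMessage:
    """Hypothetical stand-in for a ToolMessage."""
    content: str
    tool_call_id: str


async def fake_handler(request: FakeRequest) -> list:
    """Pretend to run the MCP tool and return raw text blocks."""
    return [{"type": "text", "text": f"{request.args['city']}: sunny"}]


async def wrap_as_tool_message(request: FakeRequest, handler) -> FakeToolMessage:
    """Interceptor: call the next handler, then repackage its raw result."""
    raw_blocks = await handler(request)
    text = "\n".join(block["text"] for block in raw_blocks)
    return FakeToolMessage(content=text, tool_call_id="call-1")


def bind(interceptor, next_handler):
    """Turn (interceptor, next handler) into a single one-argument handler."""
    async def wrapped(request):
        return await interceptor(request, next_handler)
    return wrapped


async def call_tool(request: FakeRequest, interceptors: list):
    """Chain interceptors so that the first one is the outermost wrapper."""
    handler = fake_handler
    for interceptor in reversed(interceptors):
        handler = bind(interceptor, handler)
    return await handler(request)


message = asyncio.run(
    call_tool(FakeRequest("weather", {"city": "Hangzhou"}), [wrap_as_tool_message])
)
print(message)
```

Because the interceptor sits between the agent and the tool, the repackaging happens once, in one place, instead of in every piece of code that consumes tool results.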

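4. Advanced scenario: human-in-the-loop calls to MCP tools

The human-in-the-loop (HITL) example in the official documentation uses synchronous calls, whereas MCP tools are invoked asynchronously, so reusing the example as-is runs into compatibility problems. We need to adapt it to work in an asynchronous setting.

4.1 Official example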

python

from langgraph.types import Command

# Human-in-the-loop leverages LangGraph's persistence layer.

# You must provide a thread ID to associate the execution with a conversation thread,

# so the conversation can be paused and resumed (as is needed for human review).

config = {"configurable": {"thread_id": "some_id"}}

# Run the graph until the interrupt is hit.

result = agent.invoke(

{

"messages": [

{

"role": "user",

"content": "Delete old records from the database",

}

]

},

config=config

)

# The interrupt contains the full HITL request with action_requests and review_configs

print(result['__interrupt__'])

# Resume with approval decision

agent.invoke(

Command(

resume={"decisions": [{"type": "approve"}]} # or "reject"

),

config=config # Same thread ID to resume the paused conversation

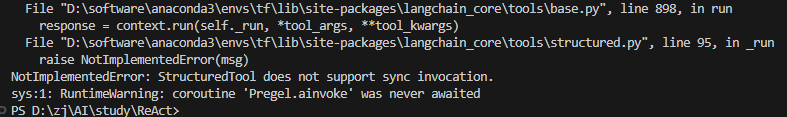

)4.2****异步改造初次尝试与报错

python

async def get_agent():

tools = await client.get_tools()

agent = create_agent(

model=model,

tools=tools,

middleware=[

HumanInTheLoopMiddleware(

interrupt_on={

# 需要审批,允许approve,reject两种审批类型

"generate_bar_chart": {"allowed_decisions": ["approve", "reject"]},

},

description_prefix="Tool execution pending approval",

),

],

checkpointer=InMemorySaver(),

system_prompt='''当用户提及画图时,返回数据按照如下格式输出,输出图片URL后直接结束,不要输出多余的内容:

图表展示:{图片URL}'''

)

for tool in tools:

print(tool.name)

return agent

async def action_tool():

tool_agent = await get_agent()

config = {'configurable': {'thread_id': str(uuid.uuid4())}}

result = tool_agent.ainvoke(

{"messages": [{

"role": "user",

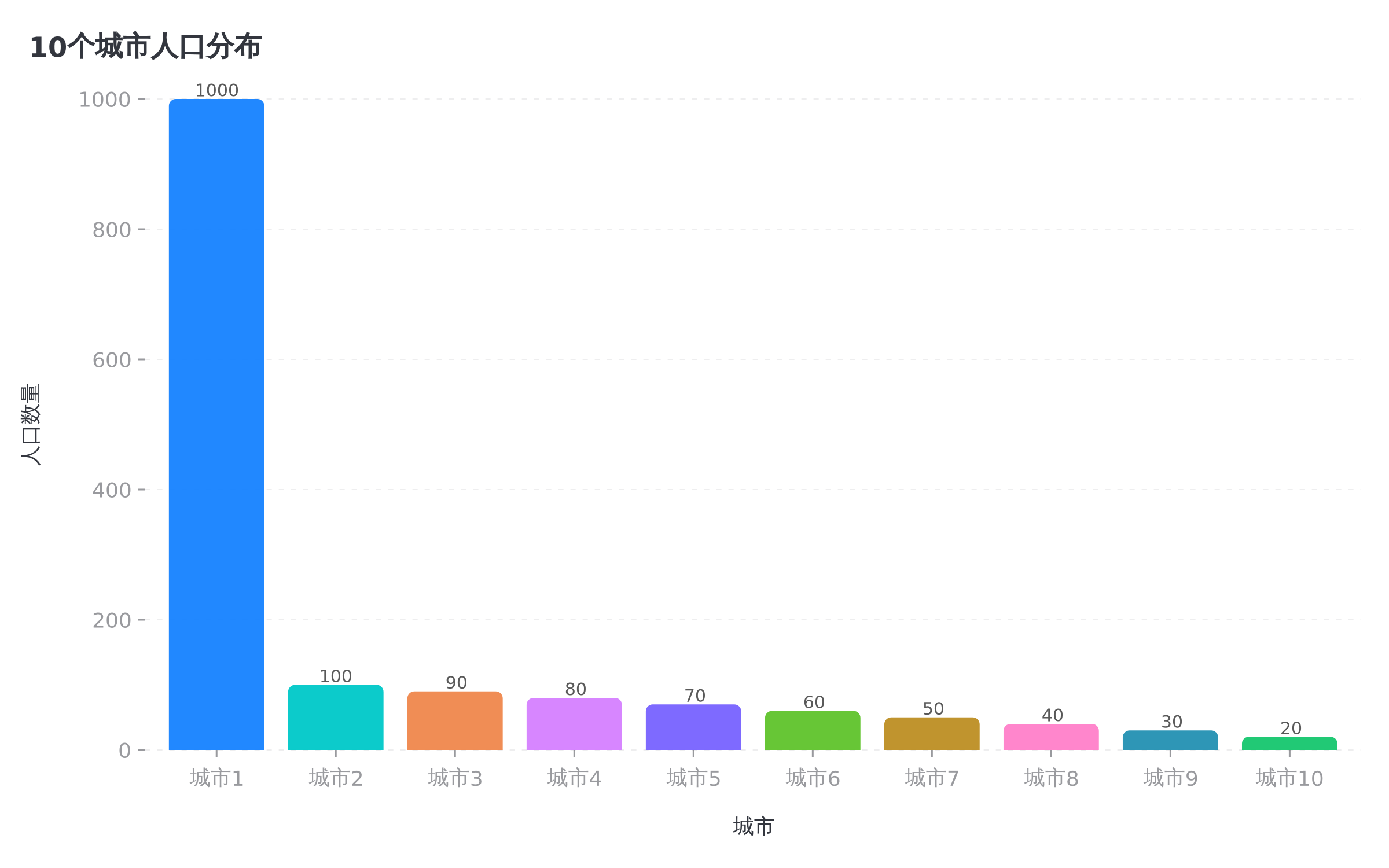

"content": "帮我生成一个柱状图,数据如下:有10个城市,每个城市的人口是1000,100,90,80,70,60,50,40,30,20。"

}]},

config=config,

)

# Resume with approval decision

result = tool_agent.invoke(

Command(

resume={"decisions": [{"type": "approve"}]} # or "edit", "reject"

),

config=config

)

print(result['messages'][-1].content)

if __name__ == '__main__':

asyncio.run(action_tool())按异步思路改造后,出现报错:"StructuredTool does not support sync invocation"(结构化工具不支持同步操作),核心原因是人机交互中间件的同步调用与MCP的异步特性冲突。
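The failure mode can be reproduced in miniature without LangChain. The sketch below uses only the standard library; `AsyncOnlyTool` is a hypothetical stand-in for a tool that implements only an async path, and the error text is illustrative rather than the library's exact message.

```python
import asyncio


class AsyncOnlyTool:
    """Hypothetical stand-in for a tool that only implements an async path."""

    def invoke(self, query: str) -> str:
        # No synchronous implementation exists, so the sync entry point refuses to run
        raise NotImplementedError("this tool does not support sync invocation")

    async def ainvoke(self, query: str) -> str:
        await asyncio.sleep(0)  # placeholder for real asynchronous I/O
        return f"chart for: {query}"


demo_tool = AsyncOnlyTool()

# The asynchronous path works
print(asyncio.run(demo_tool.ainvoke("population by city")))

# The synchronous path fails, mirroring the error described above
try:
    demo_tool.invoke("population by city")
except NotImplementedError as exc:
    print(f"sync call failed: {exc}")
```

Any synchronous caller that ends up executing such a tool hits the same wall, regardless of how the first half of the conversation was run.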

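4.3 Solution: wrapping the async MCP tool as a sync tool

Core idea: wrap the asynchronous MCP tool call inside a synchronous tool, and run the async work inside that tool with asyncio.run(), so that it is compatible with the synchronous human-in-the-loop flow. Full code: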

python

# 通过langchain智能体调用MCP

.... # 导入包和大模型的初始化代码省略了

client = MultiServerMCPClient(

{

"mcp-server-chart": {

"command": "cmd",

"args": [

"/c",

"npx",

"-y",

"@antv/mcp-server-chart"

],

"transport": "stdio",

}

}

)

async def use_mcp(messages):

tools = await client.get_tools()

agent = create_agent(

model=model,

tools=tools,

system_prompt='''当用户提及画图时,返回数据按照如下格式输出,输出图片URL后直接结束,不要输出多余的内容:

图表展示:{图片URL}'''

)

for tool in tools:

print(tool.name)

print(f"messages:{messages}")

response = await agent.ainvoke({

"messages": [{"role": "user", "content": messages}]

})

print(f"response:{response['messages'][-1].content}")

return response

@tool(description="生成图表工具")

def generate_chart(query: str) -> str:

"""generate chart"""

print("==============generate_chart===============")

response = asyncio.run(use_mcp(query))

print(f"response==============:{response}")

return response

# 创建带工具调用的Agent

tool_agent = create_agent(

model=model,

tools=[generate_chart],

middleware=[

HumanInTheLoopMiddleware(

interrupt_on={

# 需要审批,允许approve,reject两种审批类型

"generate_chart": {"allowed_decisions": ["approve", "reject"]},

},

description_prefix="Tool execution pending approval",

),

],

checkpointer=InMemorySaver(),

system_prompt="你是一个友好的助手,请根据用户问题作答,当用户想要画图时,就调用生成图表工具来实现",

)

# 运行Agent

def action_tool():

config = {'configurable': {'thread_id': str(uuid.uuid4())}}

result = tool_agent.invoke(

{"messages": [{

"role": "user",

"content": "帮我生成一个柱状图,数据如下:有10个城市,每个城市的人口是1000,100,90,80,70,60,50,40,30,20。"

}]},

config=config,

)

print(result.get('__interrupt__'))

# Resume with approval decision

result = tool_agent.invoke(

Command(

resume={"decisions": [{"type": "approve"}]} # or "edit", "reject"

),

config=config

)

print(result['messages'][-1].content)

if __name__ == "__main__":

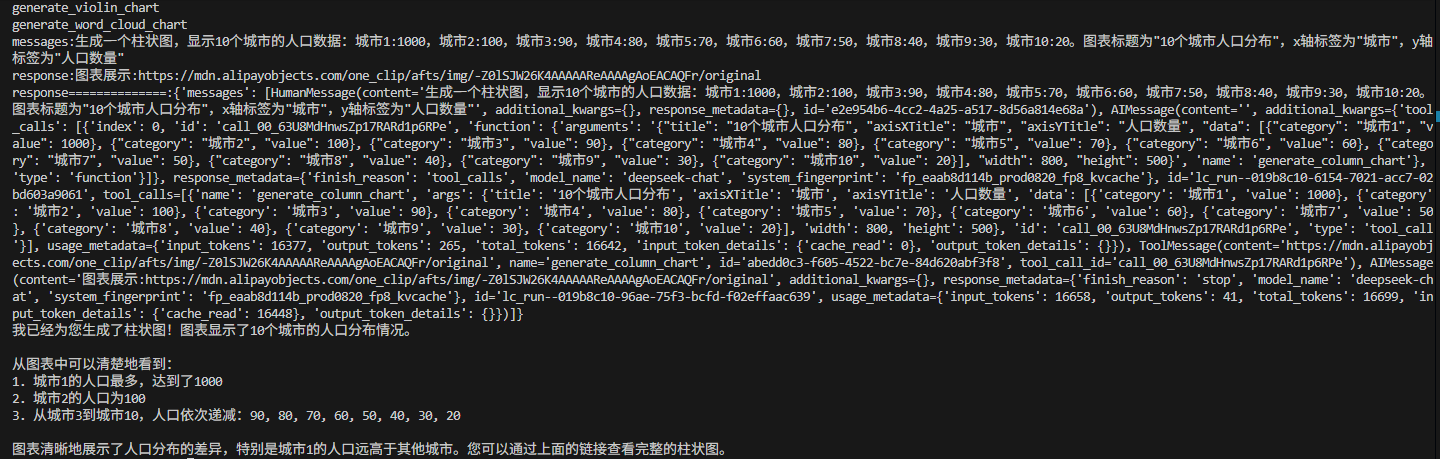

action_tool()4.4****验证结果

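The run returns a chart URL; opening it in a browser shows the bar chart of population across the 10 cities, which satisfies the "async MCP tool + sync human-in-the-loop" requirement.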
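One caveat of the asyncio.run() wrapper: asyncio.run() raises RuntimeError when an event loop is already running in the same thread, so the sync tool only works when it is called from plain synchronous code, as it is here. If the wrapper might also be reached from async code, one option is to fall back to a helper thread. The sketch below uses only the standard library; `run_coroutine_sync` and `fake_mcp_call` are hypothetical names for illustration, not part of LangChain.

```python
import asyncio
import threading


def run_coroutine_sync(coro):
    """Run a coroutine to completion from synchronous code.

    If no event loop is running in this thread, asyncio.run() is enough.
    If one is already running, asyncio.run() would raise RuntimeError,
    so the coroutine is run on a fresh loop in a helper thread instead.
    """
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        return asyncio.run(coro)  # no loop running here: the simple case

    outcome = {}

    def worker():
        try:
            outcome["value"] = asyncio.run(coro)
        except BaseException as exc:  # hand the failure back to the caller
            outcome["error"] = exc

    thread = threading.Thread(target=worker)
    thread.start()
    thread.join()  # blocks the calling thread until the coroutine finishes
    if "error" in outcome:
        raise outcome["error"]
    return outcome["value"]


async def fake_mcp_call(query: str) -> str:
    """Hypothetical stand-in for an awaited MCP tool call."""
    await asyncio.sleep(0)
    return f"chart URL for: {query}"


# Called from plain synchronous code
print(run_coroutine_sync(fake_mcp_call("city population")))


# Called from inside a running event loop, where a bare asyncio.run() would fail
async def caller():
    return run_coroutine_sync(fake_mcp_call("city population"))


print(asyncio.run(caller()))
```

The thread fallback blocks the outer event loop while it waits, so it is a compatibility shim rather than a way to gain concurrency.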

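5. Key takeaways

- Calling MCP from LangChain 1.0 follows the async convention: use ainvoke() together with asyncio.run();

- DeepSeek and the data returned by MCP have a format compatibility problem, which can be resolved with a custom interceptor that wraps the result in a ToolMessage;

- When connecting async MCP tools to the human-in-the-loop middleware, wrap the async call in a sync tool so that it fits the synchronous flow;

- Version differences (Python, langchain-mcp-adapters) can cause hidden problems, so keep environments consistent in practice.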
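Since the failing and working environments differed only in versions, it can help to log versions at startup. This is a minimal sketch using only the standard library; `report_versions` is a hypothetical helper name, and the package list is simply taken from the packages mentioned in this article.

```python
import sys
from importlib import metadata


def report_versions(packages):
    """Return the Python version plus the installed version of each package."""
    versions = {"python": ".".join(str(part) for part in sys.version_info[:3])}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return versions


# Package names taken from this article; extend the list as needed
for name, version in report_versions(["langchain-mcp-adapters", "langchain"]).items():
    print(f"{name}: {version}")
```

Comparing this output between a working and a failing machine is a quick first check before digging into the code.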