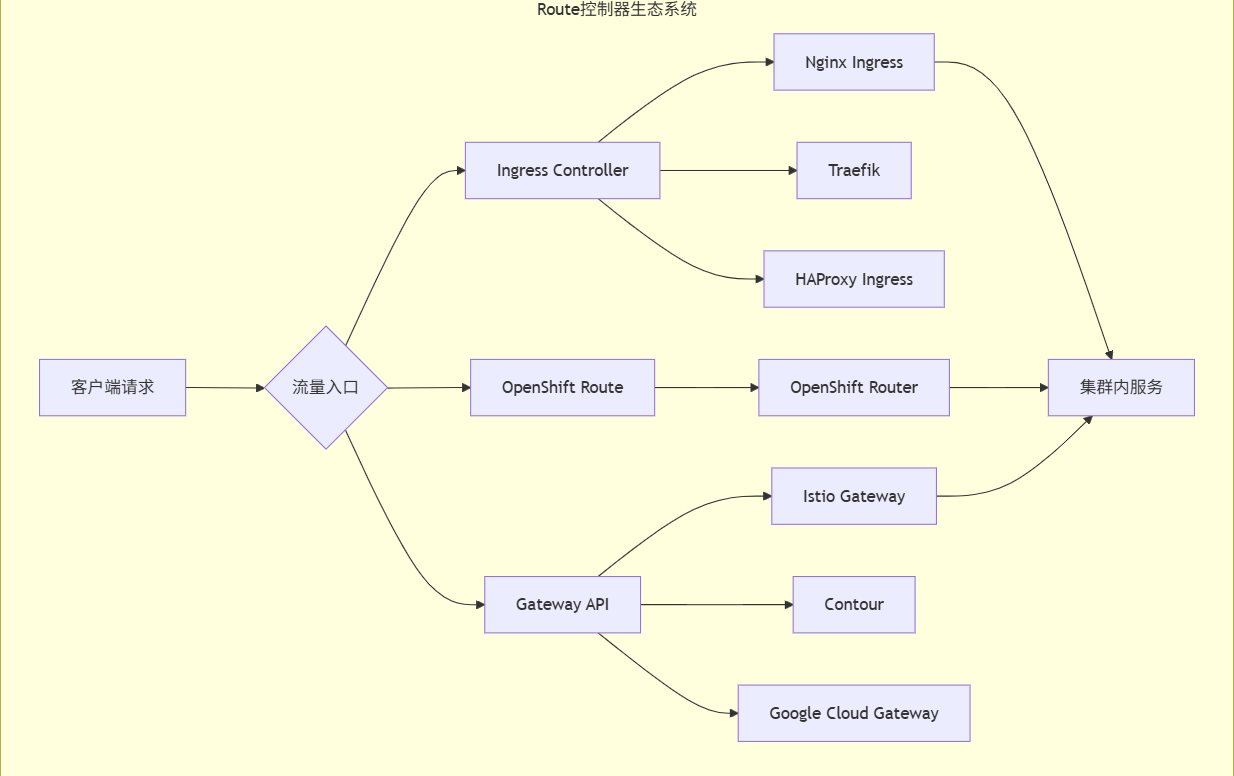

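I. Route Controller Architecture Overview

1. What is a Route controller?

In the Kubernetes ecosystem, "Route controller" usually refers to a component that routes external traffic to Services inside the cluster. There are three main implementations:

- Ingress Controller: the Kubernetes-native solution

- OpenShift Route: the Red Hat OpenShift extension

- Gateway API controllers: the next-generation standard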
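To make "routing external traffic to Services" concrete, here is a minimal sketch of the lookup all three implementations ultimately perform: match the request host, then pick the rule with the longest matching path prefix. The `Rule` type and `Match` function are hypothetical names for illustration, and plain string prefix matching is a simplification of the path-matching rules real controllers apply.

```go
package main

import (
    "fmt"
    "strings"
)

// Rule maps a host and path prefix to a backend Service name.
// The type and its field names are hypothetical.
type Rule struct {
    Host       string
    PathPrefix string
    Backend    string
}

// Match returns the backend of the rule with the longest matching path
// prefix for the given host, or "" when no rule matches.
func Match(rules []Rule, host, path string) string {
    best, bestLen := "", -1
    for _, r := range rules {
        if r.Host != host || !strings.HasPrefix(path, r.PathPrefix) {
            continue
        }
        if len(r.PathPrefix) > bestLen {
            best, bestLen = r.Backend, len(r.PathPrefix)
        }
    }
    return best
}

func main() {
    rules := []Rule{
        {Host: "api.example.com", PathPrefix: "/", Backend: "web"},
        {Host: "api.example.com", PathPrefix: "/v1/users", Backend: "users"},
    }
    fmt.Println(Match(rules, "api.example.com", "/v1/users/42"))
    fmt.Println(Match(rules, "api.example.com", "/index.html"))
}
```

The longest-prefix rule is what lets a catch-all `/` entry coexist with more specific paths on the same host.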

二、Ingress Controller深度解析

1. Ingress Controller架构原理

// Ingress Controller核心架构

type IngressController struct {

// 1. 监听器

kubernetesClient clientset.Interface

informerFactory informers.SharedInformerFactory

ingressInformer cache.SharedIndexInformer

serviceInformer cache.SharedIndexInformer

endpointInformer cache.SharedIndexInformer

// 2. 队列系统

workqueue workqueue.RateLimitingInterface

// 3. 配置生成器

configGenerator ConfigGenerator

// 4. 代理管理器

proxyManager ProxyManager

// 5. 状态同步器

statusSyncer StatusSyncer

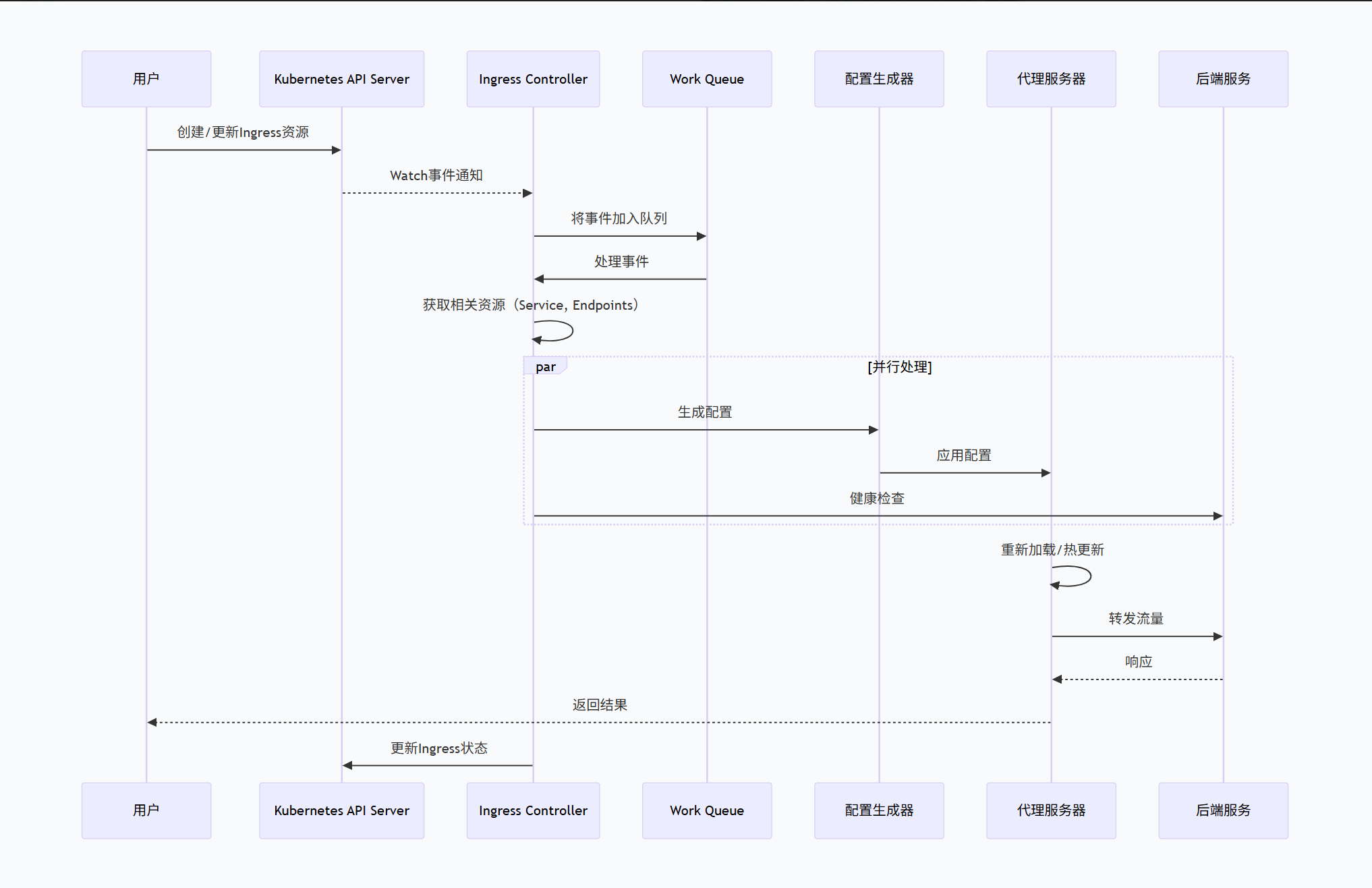

}2. Ingress Controller工作流程
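The workflow is event driven: informers push object keys onto a work queue, and a worker pops keys and runs a sync for each. A minimal sketch of that loop follows, using a hand-written de-duplicating queue as a stand-in for client-go's workqueue. `Queue` and `Drain` are hypothetical names and this is not the real client-go API.

```go
package main

import "fmt"

// Queue is a minimal de-duplicating FIFO of object keys. It only
// illustrates the idea behind a controller work queue.
type Queue struct {
    order   []string
    pending map[string]bool
}

func NewQueue() *Queue { return &Queue{pending: map[string]bool{}} }

// Add enqueues a key unless it is already waiting to be processed.
func (q *Queue) Add(key string) {
    if q.pending[key] {
        return
    }
    q.pending[key] = true
    q.order = append(q.order, key)
}

// Get pops the oldest key; ok is false when the queue is empty.
func (q *Queue) Get() (key string, ok bool) {
    if len(q.order) == 0 {
        return "", false
    }
    key, q.order = q.order[0], q.order[1:]
    delete(q.pending, key)
    return key, true
}

// Drain runs sync for every queued key and returns how many syncs ran.
func Drain(q *Queue, sync func(key string) error) int {
    n := 0
    for {
        key, ok := q.Get()
        if !ok {
            return n
        }
        if err := sync(key); err != nil {
            // A real controller would requeue the key with backoff.
            fmt.Println("sync failed:", key, err)
        }
        n++
    }
}

func main() {
    q := NewQueue()
    // Three events for default/web collapse into a single sync.
    for _, k := range []string{"default/web", "default/web", "shop/api", "default/web"} {
        q.Add(k)
    }
    n := Drain(q, func(key string) error {
        fmt.Println("sync", key)
        return nil
    })
    fmt.Println("syncs:", n)
}
```

Queueing keys rather than full objects is the point of the design: a burst of updates to one Ingress costs one sync against the latest cached state.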

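3. Nginx Ingress Controller implementation details

(1) Core loop

// Main sync loop of the Nginx Ingress Controller (simplified)
func (n *NGINXController) syncIngress(key string) error {
    // 1. Split the key taken from the queue into namespace and name
    namespace, name, err := cache.SplitMetaNamespaceKey(key)
    if err != nil {
        return err
    }

    // 2. Fetch the Ingress object
    ingress, err := n.ingressLister.Ingresses(namespace).Get(name)
    if err != nil {
        return err
    }

    // 3. Validate the Ingress
    if !n.isValidIngress(ingress) {
        return fmt.Errorf("invalid ingress %s", key)
    }

    // 4. Fetch the related resources
    service, err := n.getService(ingress)
    if err != nil {
        return err
    }
    endpoints, err := n.getEndpoints(ingress)
    if err != nil {
        return err
    }
    secret, err := n.getTLSSecret(ingress)
    if err != nil {
        return err
    }

    // 5. Generate the Nginx config
    config := n.generateNginxConfig(ingress, service, endpoints, secret)

    // 6. Update Nginx only when the config actually changed
    if n.configChanged(config) {
        // Write the config file
        n.writeConfig(config)

        // Test the config and roll back if it is invalid
        if err := n.testConfig(); err != nil {
            n.rollbackConfig()
            return err
        }

        // Reload Nginx
        n.reloadNginx()
    }

    // 7. Update the Ingress status
    n.updateIngressStatus(ingress)
    return nil
}

(2) Config generation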

// Nginx config generation logic
func (n *NGINXController) generateNginxConfig(ingress *networkingv1.Ingress, service *v1.Service, endpoints *v1.Endpoints, secret *v1.Secret) NginxConfig {
    config := NginxConfig{}

    // 1. Generate the upstream config
    config.Upstreams = n.generateUpstreams(service, endpoints)

    // 2. Generate one server block per rule
    for _, rule := range ingress.Spec.Rules {
        server := NginxServer{
            Host: rule.Host,
            SSL:  false,
        }

        // TLS config
        for _, tls := range ingress.Spec.TLS {
            if contains(tls.Hosts, rule.Host) {
                server.SSL = true
                server.SSLCertificate = n.getCertificatePath(secret)
                server.SSLCertificateKey = n.getPrivateKeyPath(secret)
                break
            }
        }

        // A rule without an HTTP section has no paths to render
        if rule.HTTP == nil {
            config.Servers = append(config.Servers, server)
            continue
        }

        // Location config
        for _, path := range rule.HTTP.Paths {
            // Skip backends that do not point at a Service
            if path.Backend.Service == nil {
                continue
            }
            // PathType is a pointer, so fall back to a default when it is unset
            pathType := networkingv1.PathTypeImplementationSpecific
            if path.PathType != nil {
                pathType = *path.PathType
            }
            location := NginxLocation{
                Path:     path.Path,
                PathType: pathType,
                Upstream: path.Backend.Service.Name,
            }

            // Apply annotations
            if rewrite, ok := ingress.Annotations["nginx.ingress.kubernetes.io/rewrite-target"]; ok {
                location.Rewrite = rewrite
            }

            // Rate limiting
            if limit, ok := ingress.Annotations["nginx.ingress.kubernetes.io/limit-rps"]; ok {
                location.RateLimit = limit
            }

            server.Locations = append(server.Locations, location)
        }

        config.Servers = append(config.Servers, server)
    }

    // 3. Global config
    config.GlobalConfig = n.getGlobalConfig(ingress)
    return config
}

(3) Nginx config template

# Example of a generated Nginx config
# nginx.conf
events {
    worker_connections 1024;
    use epoll;
}

http {
    # Global config
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    # Shared-memory zone referenced by limit_req below (10 r/s is an example value)
    limit_req_zone $binary_remote_addr zone=mylimit:10m rate=10r/s;

    # Upstream config
    upstream my-service {
        least_conn;
        server 10.244.1.3:8080 max_fails=3 fail_timeout=30s;
        server 10.244.1.4:8080 max_fails=3 fail_timeout=30s;
        server 10.244.1.5:8080 max_fails=3 fail_timeout=30s;

        # Active health check. This directive comes from the third-party
        # nginx_upstream_check_module and is not available in stock Nginx.
        check interval=3000 rise=2 fall=5 timeout=1000;
    }

    # Server config
    server {
        listen 80;
        server_name api.example.com;

        # Redirect to HTTPS
        return 301 https://$server_name$request_uri;
    }

    server {
        listen 443 ssl http2;
        server_name api.example.com;

        # SSL certificate
        ssl_certificate /etc/nginx/ssl/tls.crt;
        ssl_certificate_key /etc/nginx/ssl/tls.key;
        ssl_protocols TLSv1.2 TLSv1.3;

        # Location config
        location /v1/users {
            proxy_pass http://my-service;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Connection timeouts
            proxy_connect_timeout 5s;
            proxy_read_timeout 60s;
            proxy_send_timeout 60s;

            # Buffering
            proxy_buffering on;
            proxy_buffer_size 4k;
            proxy_buffers 8 4k;

            # Rate limiting
            limit_req zone=mylimit burst=10 nodelay;
        }

        location /health {
            access_log off;
            return 200 "healthy\n";
        }
    }
}

4. Traefik Ingress Controller implementation

(1) Traefik architecture

// Traefik dynamic configuration management (simplified)
type TraefikController struct {
    // Provider system
    providers []Provider

    // Configuration aggregation
    configurationChan chan dynamic.Message

    // Routers
    routerManager RouterManager

    // Middleware chain
    middlewareChain MiddlewareChain
}

// Provider interface
type Provider interface {
    Provide(configurationChan chan<- dynamic.Message) error
}

// Kubernetes provider
type KubernetesProvider struct {
    kubernetesClient kubernetes.Interface
    ingressClasses   []string
    watchNamespaces  []string
}

func (k *KubernetesProvider) Provide(configurationChan chan<- dynamic.Message) error {
    // Watch Ingress, Service, Endpoints and related resources.
    // listIngresses is a hypothetical helper standing in for the watch logic.
    ingresses := k.listIngresses()

    // Build the Traefik configuration
    config := &dynamic.Configuration{
        HTTP: &dynamic.HTTPConfiguration{
            Routers:     make(map[string]*dynamic.Router),
            Services:    make(map[string]*dynamic.Service),
            Middlewares: make(map[string]*dynamic.Middleware),
        },
        TCP: &dynamic.TCPConfiguration{},
    }

    // Build the routers
    for _, ingress := range ingresses {
        // Skip Ingresses without a usable first rule, path and Service backend
        rules := ingress.Spec.Rules
        if len(rules) == 0 || rules[0].HTTP == nil || len(rules[0].HTTP.Paths) == 0 ||
            rules[0].HTTP.Paths[0].Backend.Service == nil {
            continue
        }

        routerName := fmt.Sprintf("%s-%s", ingress.Namespace, ingress.Name)
        config.HTTP.Routers[routerName] = &dynamic.Router{
            EntryPoints: []string{"web", "websecure"},
            Rule:        buildRule(ingress),
            Service:     rules[0].HTTP.Paths[0].Backend.Service.Name,
            Middlewares: getMiddlewares(ingress),
            TLS:         buildTLSConfig(ingress),
        }
    }

    // Send the configuration update
    configurationChan <- dynamic.Message{
        ProviderName:  "kubernetes",
        Configuration: config,
    }
    return nil
}

(2) Traefik configuration example

# Traefik dynamic configuration (YAML)
http:
  routers:
    myapp-router:
      rule: "Host(`api.example.com`) && PathPrefix(`/api`)"
      service: myapp-service
      middlewares:
        - rate-limit
        - auth
      tls:
        certResolver: letsencrypt
  services:
    myapp-service:
      loadBalancer:
        servers:
          - url: "http://10.244.1.3:8080"
          - url: "http://10.244.1.4:8080"
        healthCheck:
          path: "/health"
          interval: "30s"
  middlewares:
    rate-limit:
      rateLimit:
        average: 100
        burst: 50
    auth:
      forwardAuth:
        address: "http://auth-service.default.svc.cluster.local:8080"
        trustForwardHeader: true
tcp:
  routers:
    mysql-router:
      rule: "HostSNI(`mysql.example.com`)"
      service: mysql-service
      # tls is an object in the dynamic configuration; an empty one enables TLS termination
      tls: {}
  services:
    mysql-service:
      loadBalancer:
        servers:
          - address: "10.244.2.10:3306"

III. OpenShift Route Controller in Detail

1. OpenShift Route architecture

// OpenShift Router控制器架构

type OpenShiftRouter struct {

// 1. 客户端

openshiftClient openshiftclient.Interface

// 2. 监听器

routeLister routev1listers.RouteLister

routeSynced cache.InformerSynced

// 3. HAProxy管理器

haproxyManager HAProxyManager

// 4. 证书管理器

certManager CertificateManager

// 5. 状态管理器

statusManager RouteStatusManager

}

// HAProxy管理器

type HAProxyManager struct {

configDir string

templateDir string

pidFile string

reloadStrategy ReloadStrategy

}

// 重载策略

type ReloadStrategy string

const (

ReloadStrategyDaemon ReloadStrategy = "daemon" // 使用HAProxy守护进程

ReloadStrategySocket ReloadStrategy = "socket" // 使用Runtime API

ReloadStrategyContainer ReloadStrategy = "container" // 重启容器

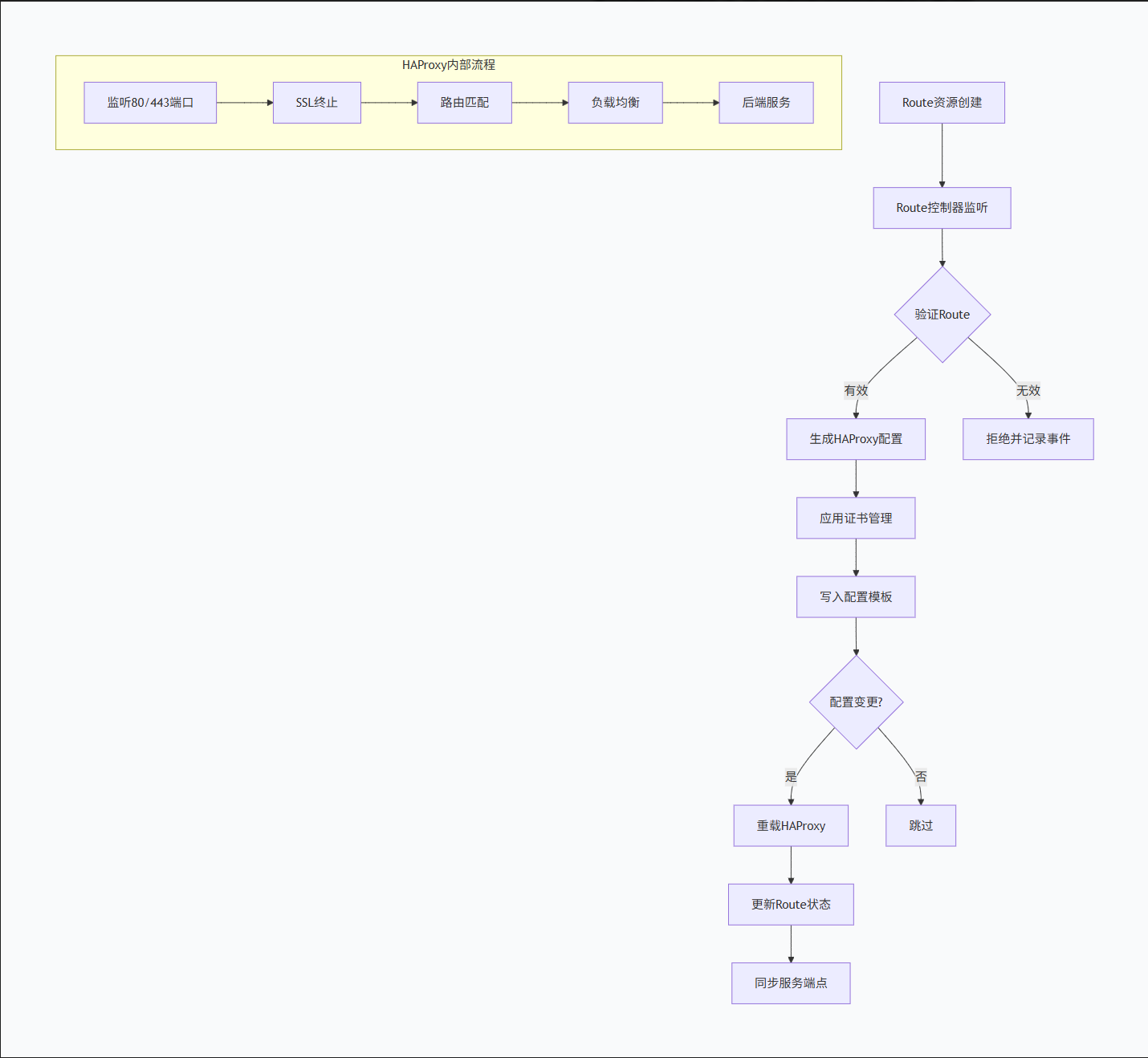

)2. OpenShift Route工作流程

3. Route config generation

// Convert a Route into HAProxy config
func (r *OpenShiftRouter) generateHAProxyConfig(route *routev1.Route, endpoints []string) HAProxyConfig {
    config := HAProxyConfig{}

    // 1. Frontend (plain HTTP by default)
    frontend := HAProxyFrontend{
        Name:        route.Name,
        BindAddress: ":80",
        Mode:        "http",
    }

    // 2. Backend
    backend := HAProxyBackend{
        Name:    route.Name,
        Balance: "roundrobin",
        Mode:    "http",
    }

    // TLS routes
    if route.Spec.TLS != nil {
        frontend.BindAddress = ":443 ssl crt /var/lib/haproxy/certs/"
        switch route.Spec.TLS.Termination {
        case routev1.TLSTerminationEdge:
            // Edge termination: TLS ends at the router
            frontend.SSLOptions = "no-sslv3 no-tlsv10 no-tlsv11"
        case routev1.TLSTerminationPassthrough:
            // Passthrough: TLS is not terminated, so bind without "ssl crt" and
            // forward raw TCP. Host matching would then rely on SNI, not hdr(host).
            frontend.BindAddress = ":443"
            frontend.Mode = "tcp"
            backend.Mode = "tcp"
        case routev1.TLSTerminationReencrypt:
            // Re-encrypt: verify the backend certificate. These are server-line
            // options, so they belong to the backend (ServerOptions is a
            // hypothetical field of this sketch's HAProxyBackend type).
            backend.ServerOptions = "ssl verify required ca-file /etc/ssl/certs/ca-bundle.crt"
        }
    }

    for _, endpoint := range endpoints {
        backend.Servers = append(backend.Servers, HAProxyServer{
            Name:    endpoint,
            Address: endpoint,
            Check:   true,
        })
    }

    // 3. Routing rule
    var conditions []string

    // Host-based routing
    if route.Spec.Host != "" {
        conditions = append(conditions, fmt.Sprintf("hdr(host) -i %s", route.Spec.Host))
    }

    // Path-based routing
    if route.Spec.Path != "" {
        conditions = append(conditions, fmt.Sprintf("path_beg %s", route.Spec.Path))
    }
    rule := strings.Join(conditions, " ")

    config.Frontends = append(config.Frontends, frontend)
    config.Backends = append(config.Backends, backend)
    config.ACLs = append(config.ACLs, HAProxyACL{
        Name: route.Name,
        Rule: rule,
        Use:  "backend " + backend.Name,
    })
    return config
}

4. OpenShift Router example configuration

# OpenShift Route resource
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: myapp-route
  namespace: myapp
  annotations:
    haproxy.router.openshift.io/balance: roundrobin
    haproxy.router.openshift.io/ip_whitelist: 192.168.1.0/24
    haproxy.router.openshift.io/timeout: 2m
spec:
  host: myapp.example.com
  path: /api
  to:
    kind: Service
    name: myapp-service
    weight: 100
  port:
    targetPort: 8080
  tls:
    termination: edge
    certificate: |
      -----BEGIN CERTIFICATE-----
      MIIE...
      -----END CERTIFICATE-----
    key: |
      -----BEGIN PRIVATE KEY-----
      MIIJ...
      -----END PRIVATE KEY-----
    insecureEdgeTerminationPolicy: Redirect
  wildcardPolicy: None

IV. Gateway API Controllers

1. Gateway API architecture

// Gateway API controller architecture (conceptual sketch)
type GatewayAPIController struct {
    // 1. Gateway API client
    gatewayClient gatewayclient.Interface

    // 2. GatewayClass manager
    gatewayClassManager GatewayClassManager

    // 3. Gateway manager
    gatewayManager GatewayManager

    // 4. Route manager
    routeManager RouteManager

    // 5. Status reconciler
    statusReconciler StatusReconciler
}

// Gateway manager
type GatewayManager struct {
    gatewayLister  gatewayv1beta1listers.GatewayLister
    gatewayClasses map[string]*gatewayv1beta1.GatewayClass
    implementation GatewayImplementation
}

// Interface a concrete Gateway implementation must satisfy
type GatewayImplementation interface {
    CreateGateway(gateway *gatewayv1beta1.Gateway) error
    UpdateGateway(old, new *gatewayv1beta1.Gateway) error
    DeleteGateway(gateway *gatewayv1beta1.Gateway) error
    GetGatewayStatus(gateway *gatewayv1beta1.Gateway) (gatewayv1beta1.GatewayStatus, error)
}

2. Gateway API workflow

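// Reconcile loop of the Gateway API controller
func (c *GatewayAPIController) reconcileGateway(key string) error {
    // 1. Parse the key
    namespace, name, err := cache.SplitMetaNamespaceKey(key)
    if err != nil {
        return err
    }

    // 2. Fetch the Gateway (the lister and implementation live on gatewayManager)
    gateway, err := c.gatewayManager.gatewayLister.Gateways(namespace).Get(name)
    if err != nil {
        return err
    }

    // 3. Validate the GatewayClass
    gatewayClass, err := c.validateGatewayClass(gateway.Spec.GatewayClassName)
    if err != nil {
        return err
    }

    // 4. Ignore Gateways whose class is owned by another controller
    if gatewayClass.Spec.ControllerName != c.controllerName {
        return nil
    }

    // 5. Fetch the attached HTTPRoutes
    httpRoutes, err := c.getHTTPRoutesForGateway(gateway)
    if err != nil {
        return err
    }

    // 6. Generate the infrastructure config
    infraConfig := c.generateInfrastructureConfig(gateway, httpRoutes)
    _ = infraConfig // a real implementation would hand this to the data plane

    // 7. Call the implementation layer
    impl := c.gatewayManager.implementation
    if gateway.DeletionTimestamp != nil {
        // Delete the gateway; there is no status left to update afterwards
        return impl.DeleteGateway(gateway)
    }
    // Create or update the gateway
    if c.gatewayExists(gateway) {
        err = impl.UpdateGateway(c.getExistingGateway(gateway), gateway)
    } else {
        err = impl.CreateGateway(gateway)
    }
    if err != nil {
        return err
    }

    // 8. Update the Gateway status
    status, err := impl.GetGatewayStatus(gateway)
    if err != nil {
        return err
    }
    c.updateGatewayStatus(gateway, status)

    // 9. Update the HTTPRoute statuses
    c.updateHTTPRouteStatuses(httpRoutes, gateway)
    return nil
}

3. Gateway API configuration example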

# Gateway API configuration example
apiVersion: gateway.networking.k8s.io/v1beta1
kind: GatewayClass
metadata:
  name: istio
spec:
  controllerName: istio.io/gateway-controller
  description: "Istio GatewayClass"
  parametersRef:
    group: networking.istio.io
    kind: IstioGatewayClassParameters
    name: default-parameters
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: internet-gateway
  namespace: default
spec:
  gatewayClassName: istio
  listeners:
    - name: http
      port: 80
      protocol: HTTP
      allowedRoutes:
        namespaces:
          from: All
    - name: https
      port: 443
      protocol: HTTPS
      tls:
        mode: Terminate
        certificateRefs:
          - name: wildcard-cert
      allowedRoutes:
        namespaces:
          # The HTTPRoute below lives in namespace "app", so this listener must
          # admit routes from other namespaces ("Same" would reject it)
          from: All
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-app-route
  namespace: app
spec:
  parentRefs:
    - name: internet-gateway
      namespace: default
      port: 443
  hostnames:
    - "app.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /api
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            add:
              - name: X-API-Version
                value: "v2"
      backendRefs:
        - name: api-service
          port: 8080
          weight: 90
        - name: api-service-v2
          port: 8080
          weight: 10

V. Core Functions of a Route Controller

1. Load-balancing algorithms
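For comparison with the GCD-based weighted round-robin in this section, here is a minimal sketch of smooth weighted round-robin, the variant associated with Nginx's round-robin upstream balancing. It interleaves picks instead of sending bursts to the heaviest endpoint. The `SmoothWRR` type is a hypothetical name, and the sketch is single-threaded on purpose, so a real balancer would need a mutex.

```go
package main

import "fmt"

// peer holds the static weight and the running "current" weight.
type peer struct {
    name    string
    weight  int
    current int
}

// SmoothWRR is a hypothetical smooth weighted round-robin balancer.
type SmoothWRR struct {
    peers []*peer
}

// Add registers an endpoint with a positive weight.
func (s *SmoothWRR) Add(name string, weight int) {
    s.peers = append(s.peers, &peer{name: name, weight: weight})
}

// Next raises every peer's current weight by its static weight, picks the
// peer with the highest current weight, then lowers the winner by the sum
// of all weights. It returns "" when no peers are registered.
func (s *SmoothWRR) Next() string {
    var best *peer
    total := 0
    for _, p := range s.peers {
        p.current += p.weight
        total += p.weight
        if best == nil || p.current > best.current {
            best = p
        }
    }
    if best == nil {
        return ""
    }
    best.current -= total
    return best.name
}

func main() {
    lb := &SmoothWRR{}
    lb.Add("a", 5)
    lb.Add("b", 1)
    lb.Add("c", 1)
    for i := 0; i < 7; i++ {
        fmt.Print(lb.Next(), " ")
    }
    fmt.Println() // prints "a a b a c a a "
}
```

Over any window of seven picks, each endpoint is still chosen in proportion to its weight, but the light endpoints are spread through the cycle rather than served last.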

// Load balancer interface
type LoadBalancer interface {
    Next(endpoints []Endpoint) Endpoint
    Name() string
}

// Round-robin load balancing
type RoundRobinLoadBalancer struct {
    currentIndex int
    mu           sync.Mutex
}

func (rr *RoundRobinLoadBalancer) Next(endpoints []Endpoint) Endpoint {
    rr.mu.Lock()
    defer rr.mu.Unlock()

    if len(endpoints) == 0 {
        return Endpoint{}
    }

    // Wrap first: the endpoint list may have shrunk since the last call
    rr.currentIndex %= len(endpoints)
    endpoint := endpoints[rr.currentIndex]
    rr.currentIndex = (rr.currentIndex + 1) % len(endpoints)
    return endpoint
}

// Least-connections load balancing
type LeastConnLoadBalancer struct {
    connectionCount map[string]int64
    mu              sync.Mutex
}

func (lc *LeastConnLoadBalancer) Next(endpoints []Endpoint) Endpoint {
    // Select and count under one lock; otherwise concurrent callers could all
    // pick the same endpoint before any of them records its connection
    lc.mu.Lock()
    defer lc.mu.Unlock()

    if len(endpoints) == 0 {
        return Endpoint{}
    }
    if lc.connectionCount == nil {
        lc.connectionCount = make(map[string]int64)
    }

    var minEndpoint Endpoint
    var minCount int64 = math.MaxInt64
    for _, endpoint := range endpoints {
        count := lc.connectionCount[endpoint.Address]
        if count < minCount {
            minCount = count
            minEndpoint = endpoint
        }
    }

    // Record the new connection; the caller must decrement when it closes
    lc.connectionCount[minEndpoint.Address]++
    return minEndpoint
}

// Weighted round-robin load balancing (GCD-based).
// gcdWeight and maxWeight must match the weights of the endpoints passed in.
type WeightedRoundRobinLoadBalancer struct {
    currentWeight int
    gcdWeight     int
    maxWeight     int
    currentIndex  int
    mu            sync.Mutex
}

func (wrr *WeightedRoundRobinLoadBalancer) Next(endpoints []Endpoint) Endpoint {
    wrr.mu.Lock()
    defer wrr.mu.Unlock()

    // Guard against modulo by zero on an empty list
    if len(endpoints) == 0 {
        return Endpoint{}
    }

    for {
        wrr.currentIndex = (wrr.currentIndex + 1) % len(endpoints)
        if wrr.currentIndex == 0 {
            wrr.currentWeight = wrr.currentWeight - wrr.gcdWeight
            if wrr.currentWeight <= 0 {
                wrr.currentWeight = wrr.maxWeight
                if wrr.currentWeight == 0 {
                    return Endpoint{}
                }
            }
        }
        if endpoints[wrr.currentIndex].Weight >= wrr.currentWeight {
            return endpoints[wrr.currentIndex]
        }
    }
}

2. Health check implementation

// Health checker
type HealthChecker struct {
    checkInterval time.Duration
    timeout       time.Duration
    checkers      map[string]Checker
    results       map[string]HealthStatus
    mu            sync.RWMutex
}

// Health check interface
type Checker interface {
    Check(endpoint Endpoint) (bool, error)
    Type() string
}

// HTTP health check
type HTTPHealthChecker struct {
    path   string
    method string
    client *http.Client
}

func (h *HTTPHealthChecker) Check(endpoint Endpoint) (bool, error) {
    url := fmt.Sprintf("http://%s%s", endpoint.Address, h.path)
    req, err := http.NewRequest(h.method, url, nil)
    if err != nil {
        return false, err
    }

    resp, err := h.client.Do(req)
    if err != nil {
        return false, err
    }
    defer resp.Body.Close()

    // Any 2xx status counts as healthy
    return resp.StatusCode >= 200 && resp.StatusCode < 300, nil
}

// TCP health check
type TCPHealthChecker struct {
    timeout time.Duration
}

func (t *TCPHealthChecker) Check(endpoint Endpoint) (bool, error) {
    conn, err := net.DialTimeout("tcp", endpoint.Address, t.timeout)
    if err != nil {
        return false, err
    }
    defer conn.Close()
    return true, nil
}

// Run performs the checks on every tick
func (hc *HealthChecker) Run() {
    ticker := time.NewTicker(hc.checkInterval)
    defer ticker.Stop()

    for range ticker.C {
        hc.performChecks()
    }
}

func (hc *HealthChecker) performChecks() {
    // Snapshot the checkers so the lock is not held during network I/O
    hc.mu.RLock()
    checkers := make(map[string]Checker, len(hc.checkers))
    for id, c := range hc.checkers {
        checkers[id] = c
    }
    hc.mu.RUnlock()

    for endpointID, checker := range checkers {
        go func(id string, c Checker) {
            endpoint := hc.getEndpoint(id)
            healthy, err := c.Check(endpoint)

            status := HealthStatus{
                Healthy:   healthy,
                Timestamp: time.Now(),
            }
            // err can be nil for an unhealthy endpoint (for example a non-2xx
            // HTTP status), so only read it when it is set
            if err != nil {
                status.Error = err.Error()
            }

            hc.mu.Lock()
            hc.results[id] = status
            hc.mu.Unlock()
        }(endpointID, checker)
    }
}

3. Certificate management

// Certificate manager
type CertificateManager struct {
    kubernetesClient kubernetes.Interface
    cache            map[string]*tls.Certificate
    secrets          map[string]*v1.Secret
    watchNames       []string
    mu               sync.RWMutex
}

// Automatic certificate renewal
func (cm *CertificateManager) autoRenew() {
    ticker := time.NewTicker(1 * time.Hour)
    defer ticker.Stop()

    for range ticker.C {
        cm.checkAndRenewCertificates()
    }
}

func (cm *CertificateManager) checkAndRenewCertificates() {
    cm.mu.RLock()
    defer cm.mu.RUnlock()

    for secretName, cert := range cm.cache {
        // Renew certificates that expire within 30 days
        if cm.isCertificateExpiring(cert, 30*24*time.Hour) {
            go cm.renewCertificate(secretName)
        }
    }
}

// Certificate discovery and loading
func (cm *CertificateManager) discoverCertificates() error {
    // Load certificates from Kubernetes TLS Secrets in all namespaces
    secrets, err := cm.kubernetesClient.CoreV1().Secrets("").List(context.TODO(), metav1.ListOptions{
        FieldSelector: "type=" + string(v1.SecretTypeTLS),
    })
    if err != nil {
        return err
    }

    for i := range secrets.Items {
        // Take the address of the slice element, not of a loop variable
        secret := &secrets.Items[i]

        // cert-manager records the Certificate name as an annotation on the
        // Secret rather than a label, so it cannot be used as a label selector
        if _, ok := secret.Annotations["cert-manager.io/certificate-name"]; !ok {
            continue
        }

        cert, err := tls.X509KeyPair(secret.Data["tls.crt"], secret.Data["tls.key"])
        if err != nil {
            log.Printf("Failed to load certificate from secret %s: %v", secret.Name, err)
            continue
        }

        // Note: keys are Secret names only, so same-named Secrets in
        // different namespaces overwrite each other
        cm.mu.Lock()
        cm.cache[secret.Name] = &cert
        cm.secrets[secret.Name] = secret
        cm.mu.Unlock()
    }
    return nil
}

4. Traffic management and policies

// Traffic policy manager
type TrafficPolicyManager struct {
    policies map[string]*TrafficPolicy
}

// Traffic policy
type TrafficPolicy struct {
    // Rate limiting
    RateLimit *RateLimitPolicy

    // Circuit breaking
    CircuitBreaker *CircuitBreakerPolicy

    // Retries
    RetryPolicy *RetryPolicy

    // Timeouts
    TimeoutPolicy *TimeoutPolicy

    // Fault injection
    FaultInjection *FaultInjectionPolicy
}

// Rate limiter (wraps golang.org/x/time/rate)
type RateLimiter struct {
    limiter *rate.Limiter
}

func NewRateLimiter(rps int, burst int) *RateLimiter {
    return &RateLimiter{
        limiter: rate.NewLimiter(rate.Limit(rps), burst),
    }
}

func (rl *RateLimiter) Allow() bool {
    return rl.limiter.Allow()
}

// Circuit breaker
type CircuitBreaker struct {
    failureThreshold int
    successThreshold int
    timeout          time.Duration
    failures         int
    successes        int
    state            CircuitBreakerState
    lastChange       time.Time
    mu               sync.RWMutex
}

type CircuitBreakerState string

const (
    StateClosed   CircuitBreakerState = "closed"
    StateOpen     CircuitBreakerState = "open"
    StateHalfOpen CircuitBreakerState = "half-open"
)

func (cb *CircuitBreaker) Execute(fn func() error) error {
    // Check and update the state under one lock so that state and
    // lastChange are never read while another goroutine changes them
    cb.mu.Lock()
    if cb.state == StateOpen {
        if time.Since(cb.lastChange) <= cb.timeout {
            cb.mu.Unlock()
            return fmt.Errorf("circuit breaker is open")
        }
        // The timeout has elapsed: let trial requests through
        cb.state = StateHalfOpen
        cb.lastChange = time.Now()
    }
    cb.mu.Unlock()

    err := fn()

    cb.mu.Lock()
    defer cb.mu.Unlock()

    if err != nil {
        cb.failures++
        cb.successes = 0
        if cb.failures >= cb.failureThreshold {
            cb.state = StateOpen
            cb.lastChange = time.Now()
        }
        return err
    }

    cb.successes++
    cb.failures = 0
    if cb.state == StateHalfOpen && cb.successes >= cb.successThreshold {
        cb.state = StateClosed
    }
    return nil
}

VI. Advanced Features of Route Controllers

1. Canary release support

// Canary release manager
type CanaryManager struct {
    config        CanaryConfig
    trafficRouter TrafficRouter
    metrics       MetricsCollector
}

// Canary configuration
type CanaryConfig struct {
    Service        string
    PrimaryBackend string // backend receiving stable traffic
    CanaryBackend  string // backend receiving canary traffic
    PrimaryWeight  int
    CanaryWeight   int
    MatchRules     []MatchRule
    Metrics        []MetricCheck
    Steps          []CanaryStep
    StepInterval   time.Duration // wait between two auto-advance steps
}

// Traffic routing
func (cm *CanaryManager) routeTraffic(request Request) (string, error) {
    // Check the match rules
    for _, rule := range cm.config.MatchRules {
        if cm.matchesRule(request, rule) {
            // Pick a backend by weight
            if cm.shouldRouteToCanary() {
                return cm.config.CanaryBackend, nil
            }
            break
        }
    }
    return cm.config.PrimaryBackend, nil
}

// Weight calculation
func (cm *CanaryManager) shouldRouteToCanary() bool {
    total := cm.config.PrimaryWeight + cm.config.CanaryWeight
    if total == 0 {
        return false
    }

    // Weighted random choice
    randValue := rand.Intn(total)
    return randValue < cm.config.CanaryWeight
}

// Auto-advance logic
func (cm *CanaryManager) autoAdvance() {
    for {
        // Collect metrics
        metrics := cm.metrics.Collect()

        // Check whether the metrics meet the targets
        if cm.checkMetrics(metrics) {
            // Increase the canary weight
            cm.increaseCanaryWeight()

            // Check whether the rollout is complete
            if cm.config.CanaryWeight >= 100 {
                cm.promoteCanary()
                return
            }
        } else {
            // Roll back
            cm.rollbackCanary()
            return
        }

        time.Sleep(cm.config.StepInterval)
    }
}

2. A/B testing support

// A/B test manager
type ABTestManager struct {
    experiments map[string]*Experiment
    router      ABTestRouter
}

// Experiment configuration
type Experiment struct {
    Name         string
    Variants     []Variant
    TrafficSplit map[string]int // variant name -> traffic percentage
    Duration     time.Duration
    StartTime    time.Time
    Metrics      []ExperimentMetric
}

// Variant
type Variant struct {
    Name     string
    Backend  string
    Features map[string]interface{}
}

// Routing decision
func (m *ABTestManager) routeToVariant(request Request, experimentName string) (Variant, error) {
    experiment, exists := m.experiments[experimentName]
    if !exists {
        return Variant{}, fmt.Errorf("experiment not found")
    }
    if len(experiment.Variants) == 0 {
        return Variant{}, fmt.Errorf("experiment has no variants")
    }

    // Stable routing based on the user ID
    userID := getUserID(request)
    if userID == "" {
        // Without a user ID, fall back to random routing
        return m.routeRandomly(experiment), nil
    }

    // Hash the user ID into a bucket (0-99) so the same user always
    // lands in the same bucket
    hash := fnv.New32a()
    hash.Write([]byte(userID + experimentName))
    bucket := int(hash.Sum32() % 100)

    // Walk the variants in slice order. Go map iteration order is random,
    // so ranging over TrafficSplit would not give stable assignments.
    cumulative := 0
    for _, variant := range experiment.Variants {
        cumulative += experiment.TrafficSplit[variant.Name]
        if bucket < cumulative {
            return variant, nil
        }
    }

    // Percentages that sum to less than 100 fall through to the first variant
    return experiment.Variants[0], nil
}

3. Service mesh integration

// Service mesh integration controller
type ServiceMeshController struct {
    meshClient  meshclient.Interface
    routeLister routev1listers.RouteLister
    configStore meshconfig.Store
}

// Convert a Route into service mesh configuration
func (c *ServiceMeshController) convertToMeshConfig(route *routev1.Route) (*meshconfig.VirtualService, error) {
    // spec.port is optional on a Route
    if route.Spec.Port == nil {
        return nil, fmt.Errorf("route %s/%s has no target port", route.Namespace, route.Name)
    }

    vs := &meshconfig.VirtualService{
        Hosts: []string{route.Spec.Host},
    }

    // A Route carries a single host/path pair (there is no spec.rules list),
    // so it maps to exactly one HTTP route
    prefix := route.Spec.Path
    if prefix == "" {
        prefix = "/"
    }
    httpRoute := &meshconfig.HTTPRoute{
        Match: []*meshconfig.HTTPMatchRequest{
            {
                Uri: &meshconfig.StringMatch{
                    MatchType: &meshconfig.StringMatch_Prefix{
                        Prefix: prefix,
                    },
                },
            },
        },
        Route: []*meshconfig.HTTPRouteDestination{
            {
                Destination: &meshconfig.Destination{
                    Host: route.Spec.To.Name,
                    Port: &meshconfig.PortSelector{
                        Number: uint32(route.Spec.Port.TargetPort.IntValue()),
                    },
                },
                Weight: 100,
            },
        },
    }

    // Add the timeout; the annotation value is read as milliseconds
    if timeout, ok := route.Annotations["mesh.retry.timeout"]; ok {
        if timeoutMs, err := strconv.ParseInt(timeout, 10, 64); err == nil {
            httpRoute.Timeout = &duration.Duration{
                Seconds: timeoutMs / 1000,
                Nanos:   int32(timeoutMs%1000) * 1000000,
            }
        }
    }

    vs.Http = append(vs.Http, httpRoute)
    return vs, nil
}

VII. Best Practices for Route Controllers

1. Performance tuning

# Nginx Ingress performance tuning (Helm values; keys follow the ingress-nginx ConfigMap)
controller:
  config:
    # Connection tuning
    worker-processes: "auto"
    max-worker-connections: "10240"
    keep-alive-requests: "10000"
    keep-alive: "75"  # seconds

    # Buffer tuning
    proxy-buffer-size: "16k"
    proxy-buffers-number: "8"
    proxy-busy-buffers-size: "32k"

    # Timeout tuning (plain numbers, in seconds)
    proxy-connect-timeout: "5"
    proxy-read-timeout: "60"
    proxy-send-timeout: "60"

    # Compression
    use-gzip: "true"
    gzip-types: "text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript"
    gzip-min-length: "1024"

    # Logging
    log-format-upstream: '$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $request_length $request_time [$proxy_upstream_name] [$proxy_alternative_upstream_name] $upstream_addr $upstream_response_length $upstream_response_time $upstream_status'
    access-log-path: "/dev/stdout"
    error-log-path: "/dev/stderr"

    # Upstream tuning
    upstream-keepalive-connections: "64"
    upstream-keepalive-requests: "100"
    upstream-keepalive-timeout: "60"  # seconds

2. High-availability configuration

# Highly available Ingress Controller deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-ingress
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: nginx-ingress
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      terminationGracePeriodSeconds: 300
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - nginx-ingress
                topologyKey: kubernetes.io/hostname
      containers:
        - name: nginx-ingress-controller
          # registry.k8s.io replaces the frozen k8s.gcr.io registry
          image: registry.k8s.io/ingress-nginx/controller:v1.3.0
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
            limits:
              cpu: 1000m
              memory: 1024Mi

3. Monitoring and observability

# Prometheus monitoring configuration
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: nginx-ingress-controller
  namespace: monitoring
  labels:
    app: nginx-ingress
spec:
  selector:
    matchLabels:
      app: nginx-ingress
  namespaceSelector:
    matchNames:
      - ingress-nginx
  endpoints:
    - port: metrics
      interval: 30s
      scrapeTimeout: 10s
      path: /metrics
      honorLabels: true
      metricRelabelings:
        - sourceLabels: [__name__]
          regex: 'nginx_ingress_controller_.*'
          action: keep
---
# Alerting rules
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: nginx-ingress-alerts
  namespace: monitoring
spec:
  groups:
    - name: nginx-ingress.rules
      rules:
        - alert: NginxIngressDown
          expr: up{job="nginx-ingress"} == 0
          for: 5m
          labels:
            severity: critical
          annotations:
            summary: "Nginx Ingress controller is down"
            description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 5 minutes."
        - alert: NginxIngressHighLatency
          # Aggregate the buckets per instance before taking the quantile
          expr: histogram_quantile(0.99, sum by (le, instance) (rate(nginx_ingress_controller_request_duration_seconds_bucket[5m]))) > 1
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "High request latency on Nginx Ingress"
            description: "99th percentile request latency on {{ $labels.instance }} is above 1 second."
        - alert: NginxIngressHighErrorRate
          # Sum both sides first: dividing the raw series would match each 5xx
          # series only against itself and always yield 1
          expr: sum by (instance) (rate(nginx_ingress_controller_requests{status=~"5.."}[5m])) / sum by (instance) (rate(nginx_ingress_controller_requests[5m])) > 0.05
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "High error rate on Nginx Ingress"
            description: "Error rate on {{ $labels.instance }} is above 5%."

VIII. Summary

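Core mechanisms of a Route controller:

- Watch and sync: observe API object changes through the Kubernetes Informer mechanism

- Config generation: translate declarative configuration into concrete proxy configuration

- Dynamic apply: apply the new configuration by hot-reloading or restarting the proxy

- Status feedback: update resource status so users can see the outcome

- Health checks: continuously monitor the health of backend services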
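The "config generation" and "dynamic apply" steps above can be sketched together: render the desired state into config text, then reload the proxy only when that text actually changed. `Render`, `Proxy` and the `route ... -> ...` line format are hypothetical and stand in for a real proxy's configuration and reload call.

```go
package main

import (
    "crypto/sha256"
    "fmt"
    "sort"
    "strings"
)

// Render turns the desired state (route host -> backend address) into
// config text. Hosts are sorted because Go map iteration order is random
// and the output must be stable to be comparable between runs.
func Render(routes map[string]string) string {
    hosts := make([]string, 0, len(routes))
    for h := range routes {
        hosts = append(hosts, h)
    }
    sort.Strings(hosts)
    var b strings.Builder
    for _, h := range hosts {
        fmt.Fprintf(&b, "route %s -> %s\n", h, routes[h])
    }
    return b.String()
}

// Proxy is a stand-in for the data plane; Reloads counts applied configs.
type Proxy struct {
    lastHash [32]byte
    Reloads  int
}

// Apply reloads only when the config differs from the last applied one
// and reports whether a reload happened.
func (p *Proxy) Apply(config string) bool {
    h := sha256.Sum256([]byte(config))
    if h == p.lastHash {
        return false
    }
    p.lastHash = h
    p.Reloads++
    return true
}

func main() {
    p := &Proxy{}
    routes := map[string]string{"api.example.com": "10.244.1.3:8080"}
    fmt.Println(p.Apply(Render(routes))) // first apply reloads
    fmt.Println(p.Apply(Render(routes))) // unchanged state does not
    routes["api.example.com"] = "10.244.1.4:8080"
    fmt.Println(p.Apply(Render(routes)), p.Reloads)
}
```

Skipping no-op reloads matters because a reload is the expensive part of the loop for proxies that restart worker processes.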

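Key design patterns:

- Controller pattern: a continuous reconcile loop keeps the actual state in line with the desired state

- Observer pattern: resource changes are observed through the Watch mechanism

- Strategy pattern: multiple load-balancing and routing algorithms are pluggable

- Template pattern: configuration templates produce the final configuration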
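As an illustration of the template pattern in the list above, here is a minimal sketch that renders an Nginx-style upstream block with Go's `text/template`. The `Upstream` type, the `RenderUpstream` function and the template text are assumptions made for this example, not the template any particular controller ships.

```go
package main

import (
    "fmt"
    "strings"
    "text/template"
)

// Upstream is the data handed to the template.
type Upstream struct {
    Name    string
    Servers []string
}

// upstreamTmpl renders one upstream block; "{{-" trims the preceding
// newline so each server ends up on its own line without blank lines.
const upstreamTmpl = `upstream {{.Name}} {
{{- range .Servers}}
    server {{.}};
{{- end}}
}
`

// RenderUpstream executes the template for one upstream.
func RenderUpstream(u Upstream) (string, error) {
    t, err := template.New("upstream").Parse(upstreamTmpl)
    if err != nil {
        return "", err
    }
    var b strings.Builder
    if err := t.Execute(&b, u); err != nil {
        return "", err
    }
    return b.String(), nil
}

func main() {
    out, err := RenderUpstream(Upstream{
        Name:    "my-service",
        Servers: []string{"10.244.1.3:8080", "10.244.1.4:8080"},
    })
    if err != nil {
        panic(err)
    }
    fmt.Print(out)
}
```

Keeping the proxy syntax in a template means the controller code only has to build data structures, and the template can change without touching the sync logic.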

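Trends:

- Standardization: Gateway API is becoming the new standard

- Intelligence: AI/ML-based automatic traffic management and optimization

- Serverless: integration with serverless platforms such as Knative

- Edge computing: lightweight routing for edge scenarios

- Zero trust: integration with zero-trust security models

As a key component of the Kubernetes networking stack, the Route controller is the first checkpoint for external traffic entering the cluster. Understanding how it works is essential for designing high-performance, highly available cloud-native application architectures.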