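Welcome to my GitHub

All of Xinchen's original articles (with companion source code) are categorized and collected here: https://github.com/zq2599/blog_demos

Links to the full "LangChain4j in Action" series

- Preparation

- Rapid development experience

- The chat API in detail

- Integrating with spring-boot

- Image models

- Chat memory, low-level API version

- Chat memory, high-level API version

- Response streaming

- Ways to create high-level API (AI Services) instances

- Structured output, part 1: specifying the output format in the prompt

- Structured output, part 2: function call

- Structured output, part 3: JSON mode

- Function calling, low-level API version

- Function calling, high-level API version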

本篇概览

- 本文是关于LangChain4j支持的函数调用的第二篇,《前文》咱们已经知道了什么是函数调用,也参与了完整的低级API版函数调用的开发

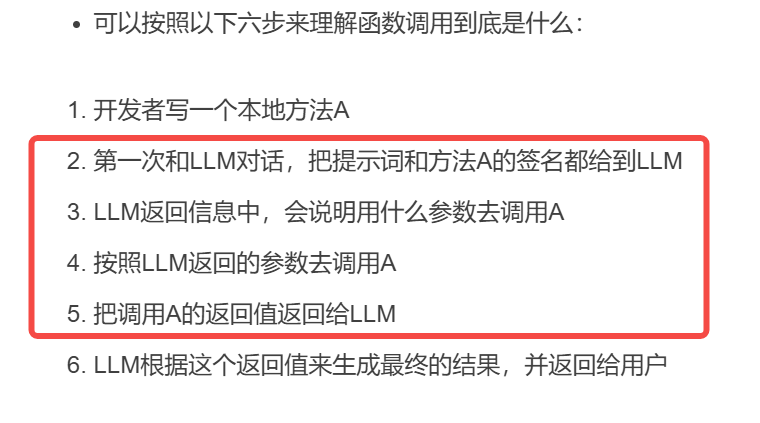

- 亲手完成所有实现的好处就是逻辑全部清楚,也明白其实写了很多与业务无关的代码,必然会思考一个问题:与LLM两次通信的代码有必要自己写吗?LangChain4j能不能帮我们把下图红框中这些事情做了?

- LangChain4j当然考虑到了这些,通过高级API(AiService)把这些都封装进去了,咱们使用者只要定义自己的业务接口,然后调用这些接口即可,业务代码完全不到与LLM交互的细节

- 基于高级API的函数调用编码实战,就是接下来本篇的内容,用于实战的业务功能还是和前文一样:天气预报的函数调用
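To make concrete what the high-level API takes off your hands, here is a minimal, library-free sketch of the two round trips hidden behind a business interface via a JDK dynamic proxy. Everything in it (`ModelReply`, `stubModel`, `fakeWeather`, the canned replies) is hypothetical and only stands in for the real LLM and weather service; it is an illustration of the idea, not LangChain4j's actual implementation.

```java
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

public class ToolLoopSketch {

    /** Business interface, same shape as the one defined later in this article. */
    public interface Assistant {
        String getWeather(String userMessage);
    }

    /** Hypothetical model reply: a tool request when toolArg is set, otherwise final text. */
    public static final class ModelReply {
        final String toolArg;
        final String text;

        public ModelReply(String toolArg, String text) {
            this.toolArg = toolArg;
            this.text = text;
        }

        boolean wantsTool() {
            return toolArg != null;
        }
    }

    /** Hypothetical stand-in for the LLM: asks for the tool first, then summarizes its result. */
    public static ModelReply stubModel(List<String> messages) {
        String last = messages.get(messages.size() - 1);
        if (last.startsWith("TOOL_RESULT:")) {
            return new ModelReply(null, "Answer based on " + last.substring("TOOL_RESULT:".length()));
        }
        return new ModelReply("Shenzhen", null);
    }

    /** Hypothetical local function standing in for the real weather lookup. */
    public static String fakeWeather(String city) {
        return city + "=19.8C";
    }

    /** Builds an Assistant whose method runs the request / tool / request loop. */
    public static Assistant create(Function<List<String>, ModelReply> model,
                                   Function<String, String> tool) {
        return (Assistant) Proxy.newProxyInstance(
                Assistant.class.getClassLoader(),
                new Class<?>[] {Assistant.class},
                (proxy, method, args) -> {
                    List<String> messages = new ArrayList<>();
                    messages.add("USER:" + args[0]);
                    ModelReply reply = model.apply(messages);      // round trip 1
                    while (reply.wantsTool()) {
                        String result = tool.apply(reply.toolArg); // run the local function
                        messages.add("TOOL_RESULT:" + result);
                        reply = model.apply(messages);             // round trip 2
                    }
                    return reply.text;
                });
    }

    public static void main(String[] args) {
        Assistant assistant = create(ToolLoopSketch::stubModel, ToolLoopSketch::fakeWeather);
        System.out.println(assistant.getWeather("Shenzhen temperature, keep it short"));
    }
}
```

The caller only ever sees `Assistant.getWeather`; the message list, the tool execution and the second request all live inside the proxy handler, which is roughly the division of labor the high-level API offers.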

源码下载(觉得作者啰嗦的,直接在这里下载)

- 如果您只想快速浏览完整源码,可以在GitHub下载代码直接运行,地址和链接信息如下表所示(https://github.com/zq2599/blog_demos):

| 名称 | 链接 | 备注 |

|---|---|---|

| 项目主页 | https://github.com/zq2599/blog_demos | 该项目在GitHub上的主页 |

| git仓库地址(https) | https://github.com/zq2599/blog_demos.git | 该项目源码的仓库地址,https协议 |

| git仓库地址(ssh) | git@github.com:zq2599/blog_demos.git | 该项目源码的仓库地址,ssh协议 |

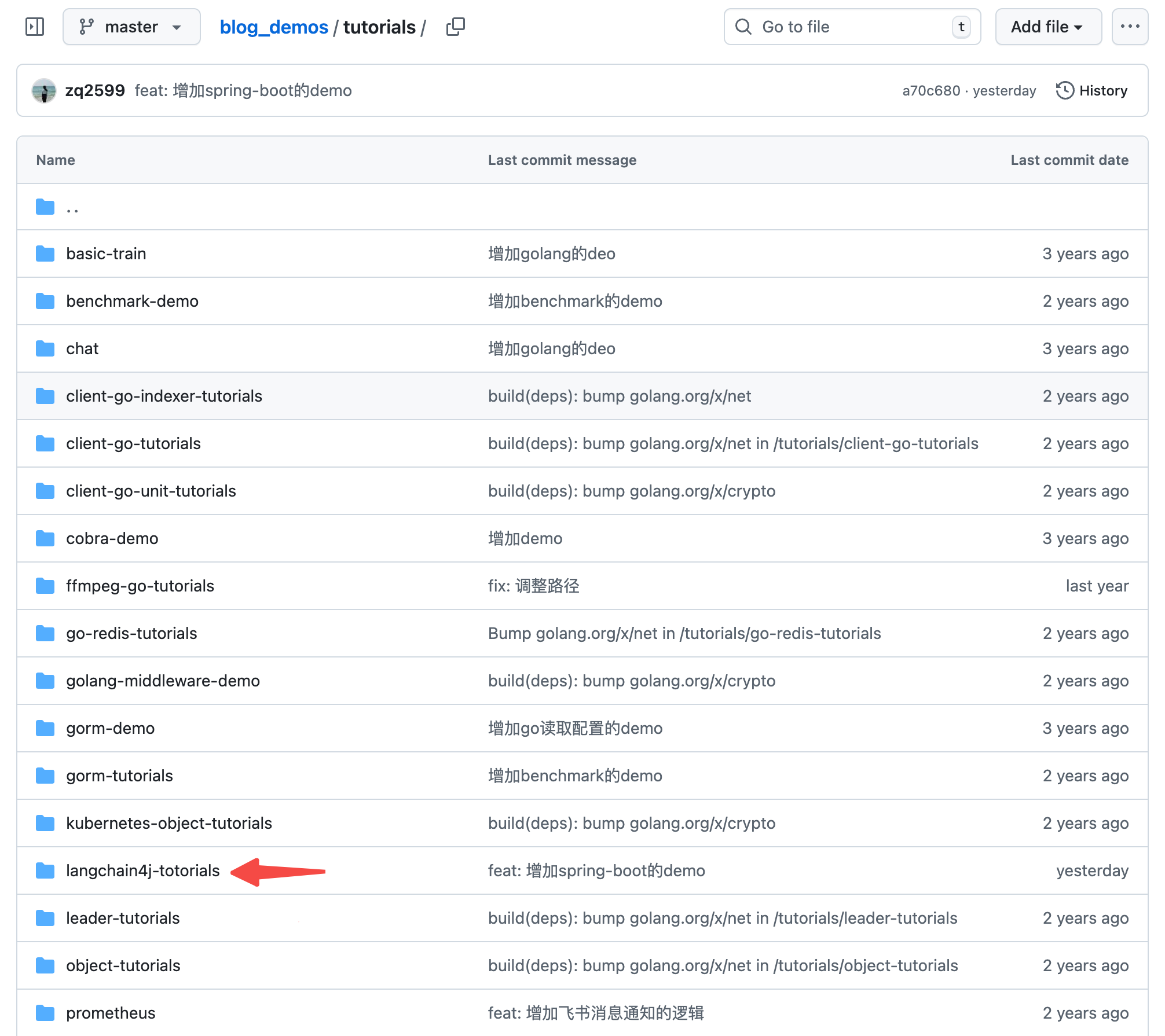

- 这个git项目中有多个文件夹,本篇的源码在langchain4j-tutorials文件夹下,如下图红色箭头所示:

编码:父工程调整

- 《准备工作》中创建了整个《LangChain4j实战》系列代码的父工程,本篇实战会在父工程下新建一个子工程,所以这里要对父工程的pom.xml做少量修改

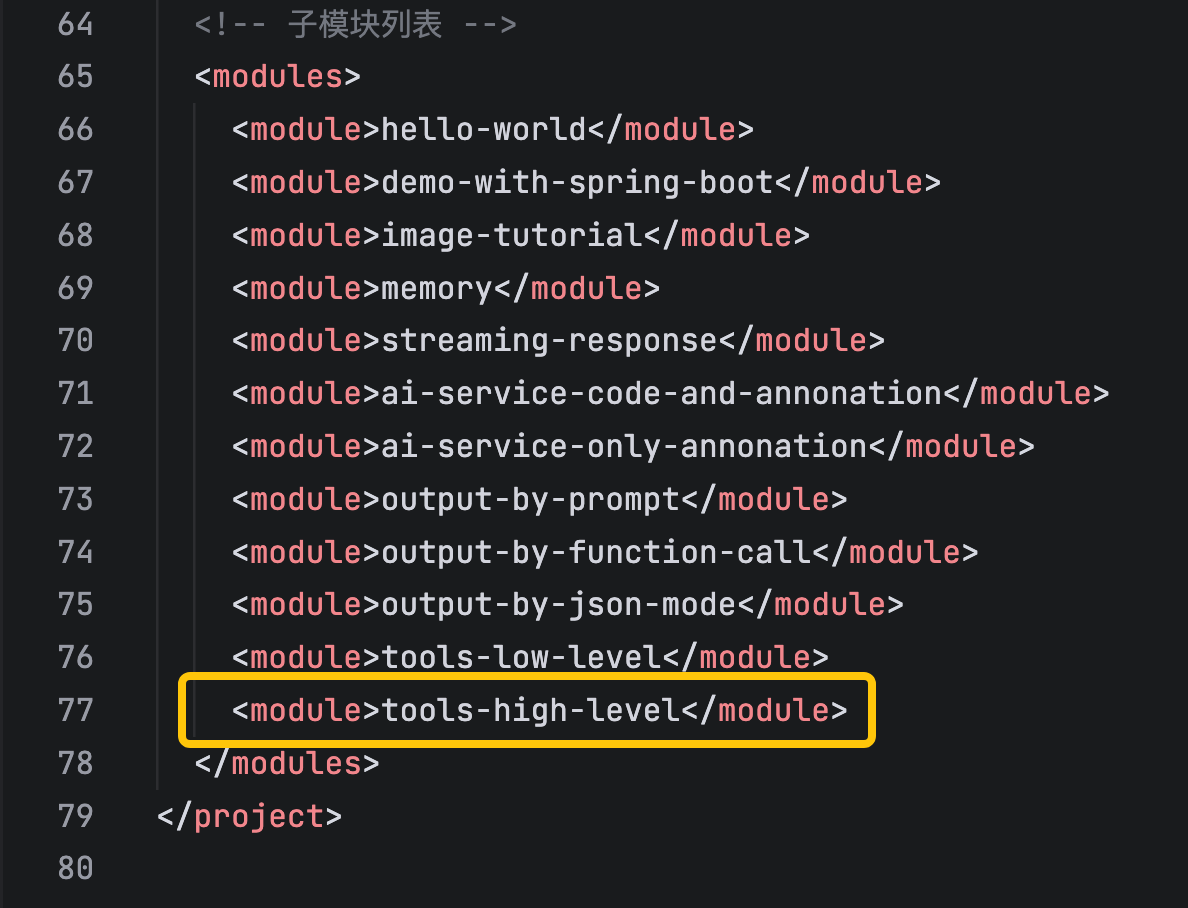

- modules中增加一个子工程,如下图黄框所示

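Coding: adding the submodule

- Add a submodule named tools-high-level

- Under the langchain4j-totorials directory, create a folder named tools-high-level

- Under the tools-high-level folder, add pom.xml with the following content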

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>com.bolingcavalry</groupId>
        <artifactId>langchain4j-totorials</artifactId>
        <version>1.0-SNAPSHOT</version>
    </parent>

    <artifactId>tools-high-level</artifactId>
    <packaging>jar</packaging>

    <dependencies>
        <!-- Lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>

        <!-- Spring Boot Starter -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>

        <!-- Spring Boot Web -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>

        <!-- Spring Boot Test -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>

        <!-- JUnit Jupiter Engine -->
        <dependency>
            <groupId>org.junit.jupiter</groupId>
            <artifactId>junit-jupiter-engine</artifactId>
            <scope>test</scope>
        </dependency>

        <!-- Mockito Core -->
        <dependency>
            <groupId>org.mockito</groupId>
            <artifactId>mockito-core</artifactId>
            <scope>test</scope>
        </dependency>

        <!-- Mockito JUnit Jupiter -->
        <dependency>
            <groupId>org.mockito</groupId>
            <artifactId>mockito-junit-jupiter</artifactId>
            <scope>test</scope>
        </dependency>

        <!-- LangChain4j Core -->
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-core</artifactId>
        </dependency>

        <!-- LangChain4j OpenAI support (used for Tongyi Qianwen's OpenAI-compatible endpoint) -->
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-open-ai</artifactId>
        </dependency>

        <!-- Official langchain4j (contains service classes such as AiServices) -->
        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j</artifactId>
        </dependency>

        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-community-dashscope</artifactId>
        </dependency>

        <dependency>
            <groupId>dev.langchain4j</groupId>
            <artifactId>langchain4j-spring-boot-starter</artifactId>
        </dependency>

        <!-- Logging dependencies are managed by the Spring Boot Starter; no separate declaration needed -->
    </dependencies>

    <build>
        <plugins>
            <!-- Spring Boot Maven Plugin -->
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <version>3.3.5</version>
                <executions>
                    <execution>
                        <goals>
                            <goal>repackage</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

- Under langchain4j-totorials/tools-high-level/src/main/resources, add the configuration file application.properties with the content below. It mainly holds the chat model settings (API key, model name, base URL); remember to replace your-api-key with your own API key. Because the weather lookup uses the 接口盒子 (apihz.cn) service, the URL template, user ID and user key for that service are configured here as well

```properties
# Spring Boot application settings
server.port=8080
server.servlet.context-path=/

# LangChain4j: Tongyi Qianwen model configured through the OpenAI-compatible mode
# Note: replace your-api-key with your actual Tongyi Qianwen API key
langchain4j.open-ai.chat-model.api-key=your-api-key
# Tongyi Qianwen model name
langchain4j.open-ai.chat-model.model-name=qwen3-max
# OpenAI-compatible endpoint of Alibaba Cloud Bailian
langchain4j.open-ai.chat-model.base-url=https://dashscope.aliyuncs.com/compatible-mode/v1

# Logging
logging.level.root=INFO
logging.level.com.bolingcavalry=DEBUG
logging.pattern.console=%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n

# Application name
spring.application.name=tools-high-level

# URL template of the 接口盒子 weather API (placeholders: id, key, province, city)
weather.tools.url=https://cn.apihz.cn/api/tianqi/tqyb.php?id=%s&key=%s&sheng=%s&place=%s
# ID for the 接口盒子 weather API; change it to your own registered ID
weather.tools.id=your-id
# Communication KEY for the 接口盒子 weather API; change it to your own KEY
weather.tools.key=your-key
```

- Add the startup class, which is as unremarkable as ever

```java
package com.bolingcavalry;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

/**
 * Main class of the Spring Boot application
 */
@SpringBootApplication
public class Application {

    public static void main(String[] args) {
        SpringApplication.run(Application.class, args);
    }
}
```

- The JSON returned by 接口盒子 needs data structures to hold it. Because it contains a nested object, two POJOs are required. The first is NowInfo, which stores real-time data such as temperature and humidity (this code is the same as in the previous article)

```java
package com.bolingcavalry.vo;

import lombok.Data;

import java.io.Serializable;

// Real-time readings, mapped from the nested "nowinfo" object of the API response
@Data
public class NowInfo implements Serializable {
    private double precipitation;
    private double temperature;
    private int pressure;
    private int humidity;
    private String windDirection;
    private int windDirectionDegree;
    private int windSpeed;
    private String windScale;
    private double feelst;
    private String uptime;
}
```

- The second POJO is WeatherInfo, which maps the complete JSON returned by the API (this code is also the same as in the previous article)

```java
package com.bolingcavalry.vo;

import lombok.Data;

import java.io.Serializable;

// Field names follow the keys of the JSON returned by the weather API
@Data
public class WeatherInfo implements Serializable {
    private int code;
    private String guo;
    private String sheng;
    private String shi;
    private String name;
    private String weather1;
    private String weather2;
    private int wd1;
    private int wd2;
    private String winddirection1;
    private String winddirection2;
    private String windleve1;
    private String windleve2;
    private String weather1img;
    private String weather2img;
    private double lon;
    private double lat;
    private String uptime;
    private NowInfo nowinfo;
    private Object alarm;
}
```

- Now for the first key point of this article: the custom function. It calls the 接口盒子 HTTP service to fetch the real-time weather. Note that it must be annotated with @Tool (the custom function code is also identical to the previous article)

```java
package com.bolingcavalry.service;

import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.web.client.RestTemplate;

import com.bolingcavalry.vo.WeatherInfo;

import dev.langchain4j.agent.tool.P;
import dev.langchain4j.agent.tool.Tool;
import lombok.Data;

@Data
public class WeatherTools {

    private static final Logger logger = LoggerFactory.getLogger(WeatherTools.class);

    // URL template with four %s placeholders: id, key, province, city
    private String weatherToolsUrl;
    private String weatherToolsId;
    private String weatherToolsKey;

    // @Tool text: "Returns the comprehensive weather forecast for the given province and city"
    // @P texts: "the province to return the forecast for" / "the city to return the forecast for"
    @SuppressWarnings("null")
    @Tool("返回给定省份和城市的天气预报综合信息")
    public WeatherInfo getWeather(@P("应返回天气预报的省份") String province, @P("应返回天气预报的城市") String city) throws IllegalArgumentException {
        // URL-encode the Chinese province and city names before putting them in the query string
        String encodedProvince = URLEncoder.encode(province, StandardCharsets.UTF_8);
        String encodedCity = URLEncoder.encode(city, StandardCharsets.UTF_8);
        String url = String.format(weatherToolsUrl, weatherToolsId, weatherToolsKey, encodedProvince, encodedCity);
        // Log message: "Calling the weather API"
        logger.info("调用天气接口:{}", url);
        return new RestTemplate().getForObject(url, WeatherInfo.class);
    }
}
```

- Because this article uses the high-level API, add a custom interface that declares the method for getting the weather. The implementation of this interface is the two exchanges with the LLM plus the function call, all of which LangChain4j provides behind the scenes

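```java
package com.bolingcavalry.service;

public interface Assistant {

    /**
     * Query the latest weather through a prompt
     *
     * @param userMessage the user's message
     * @return the answer generated by the assistant
     */
    String getWeather(String userMessage);
}
```

- Next is the configuration class, which creates the beans for the weather service class and the LLM model. As in the previous article there is nothing special here, just some parameters being passed in. The OpenAiChatModel instance is given a custom listener that prints everything exchanged with the LLM to the log. One key point comes at the end: when creating the assistant instance, the tools method must be called so that LangChain4j knows which functions are available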

```java
package com.bolingcavalry.config;

import java.util.List;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import com.bolingcavalry.service.Assistant;
import com.bolingcavalry.service.WeatherTools;

import dev.langchain4j.agent.tool.ToolExecutionRequest;
import dev.langchain4j.data.message.AiMessage;
import dev.langchain4j.data.message.ChatMessage;
import dev.langchain4j.model.chat.listener.ChatModelErrorContext;
import dev.langchain4j.model.chat.listener.ChatModelListener;
import dev.langchain4j.model.chat.listener.ChatModelRequestContext;
import dev.langchain4j.model.chat.listener.ChatModelResponseContext;
import dev.langchain4j.model.chat.response.ChatResponse;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;

/**
 * LangChain4j configuration class
 */
@Configuration
public class LangChain4jConfig {

    private static final Logger logger = LoggerFactory.getLogger(LangChain4jConfig.class);

    @Value("${langchain4j.open-ai.chat-model.api-key}")
    private String apiKey;

    @Value("${langchain4j.open-ai.chat-model.model-name:qwen-turbo}")
    private String modelName;

    @Value("${langchain4j.open-ai.chat-model.base-url}")
    private String baseUrl;

    @Value("${weather.tools.url}")
    private String weatherToolsUrl;

    @Value("${weather.tools.id}")
    private String weatherToolsId;

    @Value("${weather.tools.key}")
    private String weatherToolsKey;

    /**
     * Create and configure the OpenAiChatModel instance
     * (using Tongyi Qianwen's OpenAI-compatible endpoint)
     *
     * @return the OpenAiChatModel instance
     */
    @Bean
    public OpenAiChatModel chatModel() {
        ChatModelListener listener = new ChatModelListener() {
            @Override
            public void onRequest(ChatModelRequestContext reqCtx) {
                // 1. Get the List<ChatMessage> being sent ("Request sent to the LLM")
                List<ChatMessage> messages = reqCtx.chatRequest().messages();
                logger.info("发到LLM的请求: {}", messages);
            }

            @Override
            public void onResponse(ChatModelResponseContext respCtx) {
                // 2. Get the ChatResponse first
                ChatResponse response = respCtx.chatResponse();
                // 3. Then get the AiMessage
                AiMessage aiMessage = response.aiMessage();

                // 4. Tool calls requested by the LLM ("execute function [..], arguments ..")
                if (aiMessage.hasToolExecutionRequests()) {
                    for (ToolExecutionRequest t : aiMessage.toolExecutionRequests()) {
                        logger.info("LLM响应, 执行函数[{}], 函数入参 : {}", t.name(), t.arguments());
                    }
                }

                // 5. Plain text ("LLM response, plain text")
                if (aiMessage.text() != null) {
                    logger.info("LLM响应, 纯文本 : {}", aiMessage.text());
                }
            }

            @Override
            public void onError(ChatModelErrorContext errorCtx) {
                // Route the error through the logger instead of printing to stderr
                logger.error("LLM call failed", errorCtx.error());
            }
        };

        return OpenAiChatModel.builder()
                .apiKey(apiKey)
                .modelName(modelName)
                .baseUrl(baseUrl)
                .listeners(List.of(listener))
                .build();
    }

    @Bean
    public WeatherTools weatherTools() {
        WeatherTools tools = new WeatherTools();
        tools.setWeatherToolsUrl(weatherToolsUrl);
        tools.setWeatherToolsId(weatherToolsId);
        tools.setWeatherToolsKey(weatherToolsKey);
        return tools;
    }

    @Bean
    public Assistant assistant(OpenAiChatModel chatModel, WeatherTools weatherTools) {
        return AiServices.builder(Assistant.class)
                .chatModel(chatModel)
                // Register the object whose @Tool methods the LLM may ask to run
                .tools(weatherTools)
                .build();
    }
}
```

- Next is the service class. Remember the service class from the previous article's low-level API version? We wrote quite a lot of code there. With the high-level API things become much simpler: just call the bean's method. Simplicity has a price, though: the flow is fixed and can no longer be adjusted freely (for example, adding business logic around the call to the local function)

```java
package com.bolingcavalry.service;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class QwenService {

    @Autowired
    private Assistant assistant;

    public String getWeather(String prompt) {
        // The suffix marks the response as coming from the high-level API path
        return assistant.getWeather(prompt) + "[from high level getWeather]";
    }
}
```

- Finally the controller class, which exposes an HTTP endpoint that calls the service class above

```java
package com.bolingcavalry.controller;

import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import com.bolingcavalry.service.QwenService;

import lombok.Data;

/**
 * Tongyi Qianwen controller, handles HTTP requests that interact with the LLM
 */
@RestController
@RequestMapping("/api/qwen")
public class QwenController {

    private final QwenService qwenService;

    /**
     * Constructor; the QwenService instance is supplied by dependency injection
     *
     * @param qwenService the QwenService instance
     */
    public QwenController(QwenService qwenService) {
        this.qwenService = qwenService;
    }

    /**
     * Request entity carrying the prompt
     */
    @Data
    static class PromptRequest {
        private String prompt;
        private int userId;
        private String province;
        private String city;
    }

    /**
     * Response entity
     */
    @Data
    static class Response {
        private String result;

        public Response(String result) {
            this.result = result;
        }
    }

    /**
     * Check whether the request body is valid
     *
     * @param request the request body containing the prompt
     * @return null if valid, otherwise a ResponseEntity carrying the error message
     */
    private ResponseEntity<Response> check(PromptRequest request) {
        if (request == null || request.getPrompt() == null || request.getPrompt().trim().isEmpty()) {
            // Message: "The prompt must not be empty"
            return ResponseEntity.badRequest().body(new Response("提示词不能为空"));
        }
        return null;
    }

    @PostMapping("/tool/high/getwether")
    public ResponseEntity<Response> getWeather(@RequestBody PromptRequest request) {
        ResponseEntity<Response> checkRlt = check(request);
        if (checkRlt != null) {
            return checkRlt;
        }

        try {
            String response = qwenService.getWeather(request.getPrompt());
            return ResponseEntity.ok(new Response(response));
        } catch (Exception e) {
            // Catch the exception and return the error message ("Request processing failed")
            return ResponseEntity.status(500).body(new Response("请求处理失败: " + e.getMessage()));
        }
    }
}
```

- That completes the code. Now run the project to try it out: execute the following command in the tools-high-level directory to start the service

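```bash
mvn spring-boot:run
```

- Send an HTTP request with the REST Client plugin for vscode, using the parameters below (the prompt asks for Shenzhen's temperature with a brief answer). Unlike the earlier article that used the prompt to specify JSON, the LLM is not asked to return JSON here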

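```http
### Query the weather through function calling (high-level API)
POST http://localhost:8080/api/qwen/tool/high/getwether
Content-Type: application/json
Accept: application/json

{
  "prompt": "深圳气温,简单回答"
}
```

- The response is shown below (the result reads "The current temperature in Shenzhen is 19.8℃."). The LLM's reply is accurate and concise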

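```http
HTTP/1.1 200 OK
Content-Type: application/json
Transfer-Encoding: chunked
Date: Sat, 10 Jan 2026 09:41:58 GMT
Connection: close

{
  "result": "深圳当前气温为19.8℃。[from high level getWeather]"
}
```

- Now look at the log. The LLM's first response tells the business side which function to execute and with what arguments. The second request then carries the function result, so the second LLM response is content organized from that result according to the prompt. It originates from the function result but has been tidied up by the LLM, and it is what the user finally receives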

```text
09:43:50.182 [http-nio-8080-exec-3] INFO c.b.config.LangChain4jConfig - 发到LLM的请求: [UserMessage { name = null, contents = [TextContent { text = "深圳气温,简单回答" }], attributes = {} }]
09:43:52.259 [http-nio-8080-exec-3] INFO c.b.config.LangChain4jConfig - LLM响应, 执行函数[getWeather], 函数入参 : {"arg0": "广东", "arg1": "深圳"}
09:43:52.260 [http-nio-8080-exec-3] INFO c.bolingcavalry.service.WeatherTools - 调用天气接口:https://cn.apihz.cn/api/tianqi/tqyb.php?id=your-id&key=your-key&sheng=%E5%B9%BF%E4%B8%9C&place=%E6%B7%B1%E5%9C%B3
09:43:52.692 [http-nio-8080-exec-3] INFO c.b.config.LangChain4jConfig - 发到LLM的请求: [UserMessage { name = null, contents = [TextContent { text = "深圳气温,简单回答" }], attributes = {} }, AiMessage { text = null, thinking = null, toolExecutionRequests = [ToolExecutionRequest { id = "call_86d916ea88574992a4d0d533", name = "getWeather", arguments = "{"arg0": "广东", "arg1": "深圳"}" }], attributes = {} }, ToolExecutionResultMessage { id = "call_86d916ea88574992a4d0d533" toolName = "getWeather" text = "{
  "code" : 200,
  "guo" : "中国",
  "sheng" : "广东",
  "shi" : "深圳",
  "name" : "深圳",
  "weather1" : "晴",
  "weather2" : "多云",
  "wd1" : 21,
  "wd2" : 11,
  "winddirection1" : "无持续风向",
  "winddirection2" : "东北风",
  "windleve1" : "微风",
  "windleve2" : "3~4级",
  "weather1img" : "https://rescdn.apihz.cn/resimg/tianqi/qing.png",
  "weather2img" : "https://rescdn.apihz.cn/resimg/tianqi/duoyun.png",
  "lon" : 114.0,
  "lat" : 22.54,
  "uptime" : "2026-01-10 20:00:00",
  "nowinfo" : {
    "precipitation" : 0.0,
    "temperature" : 19.8,
    "pressure" : 1012,
    "humidity" : 27,
    "windDirection" : "东北风",
    "windDirectionDegree" : 6,
    "windSpeed" : 1,
    "windScale" : "微风",
    "feelst" : 18.9,
    "uptime" : "2026/01/10 17:05"
  },
  "alarm" : null
}" }]
09:43:53.994 [http-nio-8080-exec-3] INFO c.b.config.LangChain4jConfig - LLM响应, 纯文本 : 深圳当前气温为19.8℃。
```

- That wraps up everything about function calling. The combination of a real-time API and the LLM's comprehension is clearly very effective. I hope this article gives you a useful reference and helps you put tool capabilities into practice
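One detail in the log is worth a note: the tool arguments arrive as `arg0` and `arg1` rather than `province` and `city`. A likely explanation, assuming the parameter names are obtained through Java reflection, is that the module was compiled without javac's `-parameters` flag, in which case reflection only reports synthesized names. The self-contained sketch below uses the JDK alone (the class `ParamNameCheck` and its methods are hypothetical, no LangChain4j involved) to show how to check what reflection sees.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.ArrayList;
import java.util.List;

public class ParamNameCheck {

    /** Hypothetical stand-in with the same parameter list as WeatherTools.getWeather. */
    public static String getWeather(String province, String city) {
        return province + "/" + city;
    }

    /** Returns the parameter names exactly as reflection reports them. */
    public static List<String> reflectedNames() {
        try {
            Method m = ParamNameCheck.class.getMethod("getWeather", String.class, String.class);
            List<String> names = new ArrayList<>();
            for (Parameter p : m.getParameters()) {
                // isNamePresent() is true only when the class was compiled with -parameters;
                // otherwise getName() yields synthesized names such as arg0, arg1.
                names.add(p.getName() + (p.isNamePresent() ? " (real)" : " (synthesized)"));
            }
            return names;
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(reflectedNames());
    }
}
```

With Maven, setting `<parameters>true</parameters>` in the `maven-compiler-plugin` configuration turns the flag on, after which the check above reports the real names.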