前言

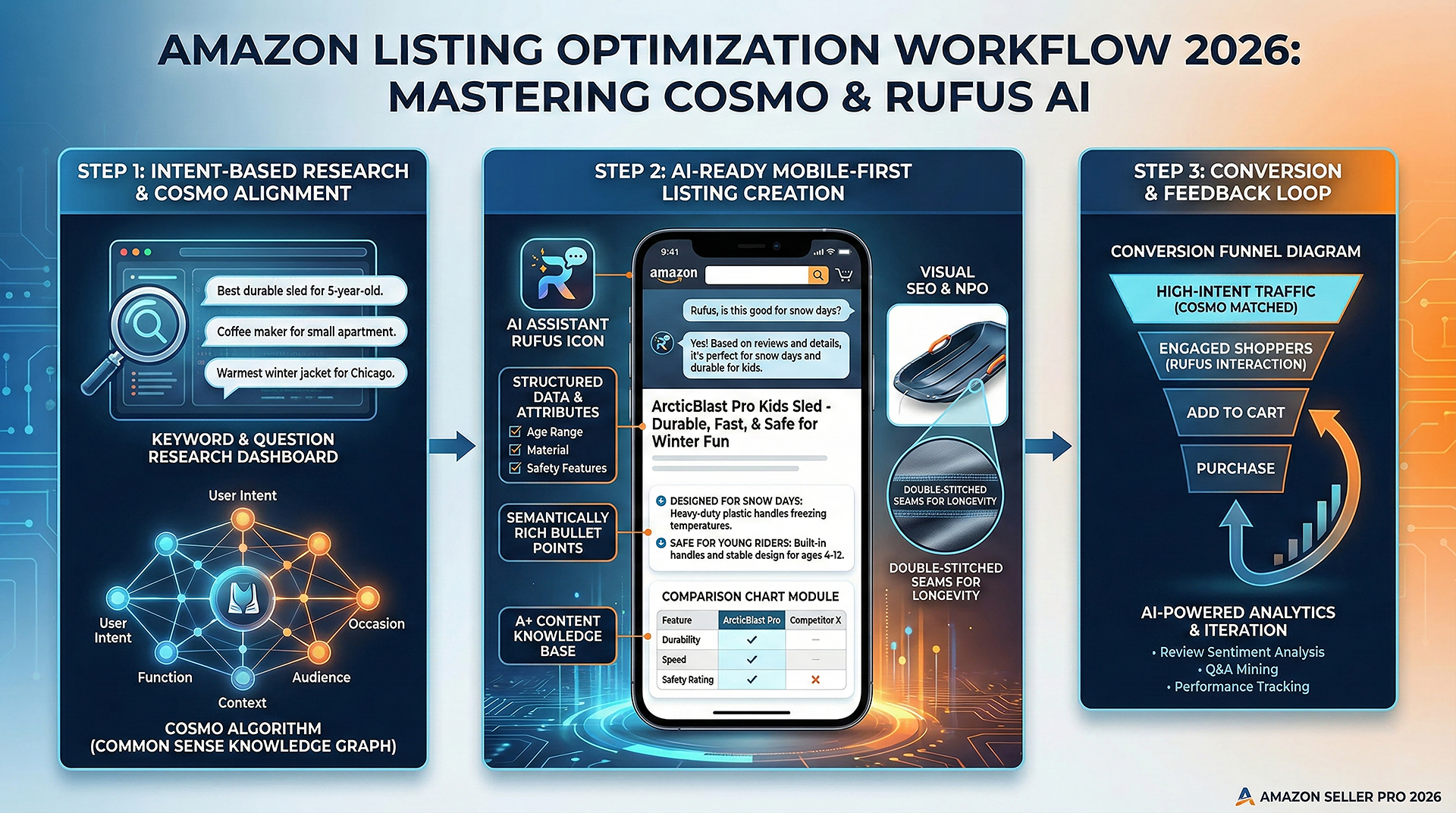

2026年的亚马逊搜索引擎已经完成了从传统信息检索到认知智能的跨越。本文将从技术视角深度解析COSMO(Common Sense Knowledge Generation)算法和Rufus AI助手的工作原理,并提供基于算法逻辑的Amazon Listing精细化优化方案。

作为一名长期从事电商数据采集和分析的技术从业者,我将结合实际案例和代码示例,帮助开发者和技术型卖家理解2026年亚马逊搜索引擎的底层机制,构建数据驱动的Listing优化体系。

技术背景:从词汇匹配到语义理解的范式转移

传统A9算法的局限性

亚马逊早期的A9算法本质上是一个基于TF-IDF和协同过滤的推荐系统:

python

# 简化的A9算法相关性计算逻辑

def calculate_relevance_score(query, listing):

# 1. 词汇匹配得分

keyword_match_score = tfidf_similarity(query, listing['title'] + listing['bullets'])

# 2. 历史CTR权重

ctr_weight = listing['historical_ctr'] * 0.3

# 3. 转化率权重

cvr_weight = listing['conversion_rate'] * 0.4

# 4. 评分和评论数

review_score = (listing['rating'] * listing['review_count']) * 0.3

return keyword_match_score + ctr_weight + cvr_weight + review_score这种方法的问题在于:

- 语义鸿沟:无法理解"Running Shoes"和"Jogging Sneakers"是同义表达

- 意图盲区:搜索"孕妇鞋"时,无法推理出"防滑"、"宽楦"是隐性需求

- 冷启动困境:新品缺乏历史数据,难以获得曝光

COSMO算法:基于知识图谱的常识推理

COSMO的核心突破在于构建了一个包含"实体-属性-意图"的知识图谱(Knowledge Graph):

python

# COSMO知识图谱的简化表示

knowledge_graph = {

"maternity_shoes": {

"implicit_attributes": [

{"name": "non_slip", "weight": 0.9, "reason": "pregnancy_safety"},

{"name": "wide_fit", "weight": 0.85, "reason": "foot_swelling"},

{"name": "slip_on", "weight": 0.8, "reason": "difficulty_bending"}

],

"related_scenarios": ["pregnancy", "postpartum", "foot_edema"],

"pain_points": ["safety_concern", "comfort", "ease_of_use"]

}

}

def cosmo_semantic_match(query, listing):

# 1. 提取查询意图

intent = extract_intent(query) # "maternity_shoes"

# 2. 从知识图谱获取隐性需求

implicit_needs = knowledge_graph[intent]["implicit_attributes"]

# 3. 检查Listing是否满足隐性需求

match_score = 0

for need in implicit_needs:

if check_attribute_in_listing(need["name"], listing):

# 不仅检查关键词,还检查因果关系描述

if check_causal_explanation(need["name"], need["reason"], listing):

match_score += need["weight"] * 1.5 # 有因果解释加权

else:

match_score += need["weight"]

return match_score关键洞察:COSMO不仅匹配关键词,更看重你是否在Listing中解释了"为什么这个属性能解决用户的痛点"。

Rufus AI:基于RAG的检索增强生成

Rufus采用了RAG(Retrieval-Augmented Generation)架构:

python

# Rufus的简化工作流程

class RufusAssistant:

def __init__(self):

self.llm = LargeLanguageModel("amazon-rufus-2026")

self.vector_db = VectorDatabase() # 存储所有Listing的向量表示

def answer_question(self, user_question, context_asins):

# 1. 从相关Listing中检索信息片段

relevant_chunks = []

for asin in context_asins:

listing_data = self.get_listing_data(asin)

# 提取高价值数据片段

chunks = [

listing_data['title'],

listing_data['bullets'], # 五点描述

listing_data['qa_pairs'], # Q&A

listing_data['top_reviews'] # 高赞评论

]

# 向量相似度检索

for chunk in chunks:

similarity = self.vector_db.similarity(user_question, chunk)

if similarity > 0.7:

relevant_chunks.append({

'content': chunk,

'source': asin,

'similarity': similarity

})

# 2. 按相似度排序,取Top 5

top_chunks = sorted(relevant_chunks, key=lambda x: x['similarity'], reverse=True)[:5]

# 3. 构建Prompt并生成答案

prompt = f"""

User Question: {user_question}

Relevant Information:

{self.format_chunks(top_chunks)}

Please provide a concise answer based on the above information.

"""

answer = self.llm.generate(prompt)

return answer

def format_chunks(self, chunks):

# 将检索到的片段格式化为LLM可理解的上下文

formatted = ""

for i, chunk in enumerate(chunks):

formatted += f"[Source {i+1} - ASIN: {chunk['source']}]\n{chunk['content']}\n\n"

return formatted优化启示:

- 事实密度优先:五点描述要包含具体参数,避免空洞形容词

- 结构化表达:用"问题-解决方案"的格式,便于AI提取

- Q&A战略地位:Rufus优先引用Q&A内容,必须提前布局

技术实现:基于数据的Listing优化工作流

第一步:竞品数据采集与分析

使用API批量采集竞品数据:

python

import requests

import json

class AmazonCompetitorAnalyzer:

def __init__(self, api_key):

self.api_key = api_key

self.base_url = "https://api.pangolinfo.com/v1"

def collect_competitor_data(self, keyword, num_pages=3):

"""采集搜索结果页的竞品ASIN"""

asins = []

for page in range(1, num_pages + 1):

response = requests.post(

f"{self.base_url}/amazon/search",

headers={"Authorization": f"Bearer {self.api_key}"},

json={

"keyword": keyword,

"page": page,

"marketplace": "US"

}

)

data = response.json()

asins.extend([item['asin'] for item in data['results']])

return asins

def analyze_titles(self, asins):

"""分析竞品标题的名词短语结构"""

import spacy

nlp = spacy.load("en_core_web_sm")

noun_phrases = []

for asin in asins:

listing = self.get_listing_detail(asin)

doc = nlp(listing['title'])

# 提取名词短语

for chunk in doc.noun_chunks:

noun_phrases.append({

'phrase': chunk.text,

'asin': asin,

'position': chunk.start_char

})

# 统计高频名词短语

from collections import Counter

phrase_freq = Counter([np['phrase'] for np in noun_phrases])

return phrase_freq.most_common(20)

def extract_pain_points(self, asins):

"""从差评中提取痛点"""

pain_points = []

for asin in asins:

# 采集1-3星评论

reviews = self.get_reviews(asin, rating_filter="1-3")

for review in reviews:

# 使用情感分析提取负面短语

negative_phrases = self.sentiment_analysis(review['text'])

pain_points.extend(negative_phrases)

# 统计高频痛点

from collections import Counter

pain_point_freq = Counter(pain_points)

return pain_point_freq.most_common(10)

def get_listing_detail(self, asin):

"""获取Listing详情"""

response = requests.post(

f"{self.base_url}/amazon/product",

headers={"Authorization": f"Bearer {self.api_key}"},

json={"asin": asin, "marketplace": "US"}

)

return response.json()

def get_reviews(self, asin, rating_filter=None):

"""获取评论数据"""

response = requests.post(

f"{self.base_url}/amazon/reviews",

headers={"Authorization": f"Bearer {self.api_key}"},

json={

"asin": asin,

"rating_filter": rating_filter,

"marketplace": "US"

}

)

return response.json()['reviews']

# 使用示例

analyzer = AmazonCompetitorAnalyzer(api_key="your_api_key")

# 采集"Running Shoes"的竞品数据

competitor_asins = analyzer.collect_competitor_data("Running Shoes", num_pages=3)

# 分析标题结构

top_noun_phrases = analyzer.analyze_titles(competitor_asins)

print("高频名词短语:", top_noun_phrases)

# 提取痛点

pain_points = analyzer.extract_pain_points(competitor_asins)

print("高频痛点:", pain_points)第二步:标题优化------名词短语优化(NPO)

python

def optimize_title_npo(product_info, keyword_research):

"""

基于名词短语优化(Noun Phrase Optimization)生成标题

"""

# 1. 核心名词短语(前70字符内)

core_phrase = f"{product_info['brand']} {product_info['product_type']}"

# 2. 添加最强差异化卖点

key_differentiator = product_info['top_selling_point']

# 3. 添加目标人群/场景

target_context = product_info['target_audience']

# 构建标题(确保前70字符包含核心信息)

title = f"{core_phrase}, {key_differentiator} for {target_context}"

# 4. 在剩余空间添加次要卖点

if len(title) < 150:

secondary_features = ", ".join(product_info['secondary_features'][:2])

title += f", {secondary_features}"

return title[:200] # 亚马逊标题限制200字符

# 示例

product = {

'brand': 'YourBrand',

'product_type': 'Running Shoes',

'top_selling_point': 'Breathable Mesh Upper with Arch Support',

'target_audience': 'Marathon Training',

'secondary_features': ['Lightweight Design', 'Shock Absorption']

}

optimized_title = optimize_title_npo(product, None)

print(optimized_title)

# 输出: "YourBrand Running Shoes, Breathable Mesh Upper with Arch Support for Marathon Training, Lightweight Design, Shock Absorption"第三步:五点描述生成------RAG就绪格式

python

def generate_rag_ready_bullets(product_info, competitor_pain_points):

"""

生成符合RAG检索逻辑的五点描述

"""

bullets = []

# 模板:大写标题 + 竞品痛点 + 功能 + 场景 + 利益

template = "{headline}: Unlike {competitor_pain}, our {product} features {feature}. This ensures {benefit} during {scenario}, providing you with {outcome}."

# 示例:针对"出汗打滑"痛点

bullet_1 = template.format(

headline="SWEAT-PROOF GRIP TECHNOLOGY",

competitor_pain="standard PVC mats that become slippery when wet",

product="yoga mat",

feature="dual-layer texture with moisture-wicking channels",

benefit="stable grip",

scenario="hot yoga sessions",

outcome="safety and confidence"

)

bullets.append(bullet_1)

# 可以根据不同痛点生成多条

for pain_point in competitor_pain_points[:5]:

bullet = generate_bullet_for_pain_point(product_info, pain_point)

bullets.append(bullet)

return bullets

def generate_bullet_for_pain_point(product, pain_point):

# 基于痛点生成针对性描述的逻辑

# 这里可以接入GPT等LLM来自动生成

pass第四步:后台数据完整性检查

python

def audit_backend_attributes(listing_data):

"""

审计后台属性完整性

"""

required_attributes = [

'item_type',

'target_audience',

'material_type',

'specific_uses',

'care_instructions',

'color',

'size',

'brand'

]

missing_attributes = []

for attr in required_attributes:

if not listing_data.get(attr) or listing_data[attr] == "":

missing_attributes.append(attr)

if missing_attributes:

print(f"警告:以下属性为空,将导致AI过滤: {missing_attributes}")

return False

# 检查是否使用标准值

non_standard_values = []

for attr, value in listing_data.items():

if not is_taxonomy_value(attr, value):

non_standard_values.append({attr: value})

if non_standard_values:

print(f"警告:以下属性未使用标准值: {non_standard_values}")

return False

print("✓ 后台属性完整性检查通过")

return True

def is_taxonomy_value(attribute, value):

# 检查是否为亚马逊标准分类值

# 实际应用中需要对接亚马逊的分类API

taxonomy_db = load_amazon_taxonomy()

return value in taxonomy_db.get(attribute, [])第五步:SQP数据驱动的持续优化

python

class SQPAnalyzer:

"""搜索查询表现(Search Query Performance)分析器"""

def __init__(self, sqp_data):

self.data = sqp_data

def diagnose_funnel_issues(self):

"""诊断转化漏斗问题"""

issues = []

for keyword_data in self.data:

impressions = keyword_data['impressions']

clicks = keyword_data['clicks']

atc = keyword_data['add_to_cart']

orders = keyword_data['orders']

# 计算各阶段转化率

ctr = clicks / impressions if impressions > 0 else 0

atc_rate = atc / clicks if clicks > 0 else 0

cvr = orders / atc if atc > 0 else 0

# 诊断逻辑

if impressions > 1000 and ctr < 0.005: # CTR < 0.5%

issues.append({

'keyword': keyword_data['keyword'],

'issue': 'low_ctr',

'recommendation': '优化主图或标题前70字符',

'priority': 'high'

})

if ctr > 0.01 and atc_rate < 0.1: # ATR < 10%

issues.append({

'keyword': keyword_data['keyword'],

'issue': 'low_atc',

'recommendation': '优化五点描述,检查差评,增加A+对比图',

'priority': 'high'

})

if atc_rate > 0.15 and cvr < 0.3: # CVR < 30%

issues.append({

'keyword': keyword_data['keyword'],

'issue': 'low_cvr',

'recommendation': '添加Coupon,检查Prime配送',

'priority': 'medium'

})

return issues

def generate_ppc_strategy(self, issues):

"""基于SQP问题生成PPC策略"""

ppc_campaigns = []

for issue in issues:

if issue['issue'] == 'low_impressions':

# 曝光不足,加大PPC投放

ppc_campaigns.append({

'keyword': issue['keyword'],

'match_type': 'exact',

'bid_adjustment': '+50%',

'reason': '提升自然排名信号'

})

return ppc_campaigns

# 使用示例

sqp_data = load_sqp_report() # 从亚马逊后台导出的SQP数据

analyzer = SQPAnalyzer(sqp_data)

issues = analyzer.diagnose_funnel_issues()

for issue in issues:

print(f"关键词: {issue['keyword']}")

print(f"问题: {issue['issue']}")

print(f"建议: {issue['recommendation']}\n")

ppc_strategy = analyzer.generate_ppc_strategy(issues)常见问题与解决方案

Q1: 如何批量采集竞品数据?

A: 我们可以选择自建爬虫或者使用专业的数据采集API。以Pangolinfo Scrape API为例:

python

# 批量采集竞品Listing数据

import requests

api_endpoint = "https://api.pangolinfo.com/v1/amazon/batch"

headers = {"Authorization": "Bearer YOUR_API_KEY"}

asins = ["B08N5WRWNW", "B07XJ8C8F5", "B09B8RJQN3"] # 竞品ASIN列表

response = requests.post(

api_endpoint,

headers=headers,

json={

"asins": asins,

"data_points": ["title", "bullets", "reviews", "qa", "images"],

"marketplace": "US"

}

)

competitor_data = response.json()Q2: 如何监控关键词排名变化?

A: 使用可视化监控工具,如AMZ Data Tracker,可以实时追踪:

- 关键词自然排名

- BSR变化趋势

- 评论增长速度

- 竞品动态

Q3: 后台搜索词(Search Terms)如何优化?

A: 250字节的Search Terms应该:

- 不重复标题和五点中的词

- 包含同义词(如"sneakers"对应"shoes")

- 包含常见拼写错误(如"pilliow")

- 包含西班牙语同义词(美国站)

- 直接空格分隔,不用逗号

python

def optimize_search_terms(title, bullets, max_bytes=250):

"""优化后台搜索词"""

# 1. 提取标题和五点中的词

existing_words = set(extract_words(title + " ".join(bullets)))

# 2. 生成同义词

synonyms = generate_synonyms(existing_words)

# 3. 添加常见拼写错误

misspellings = generate_common_misspellings(existing_words)

# 4. 添加西班牙语同义词(针对美国站)

spanish_synonyms = translate_to_spanish(existing_words)

# 5. 合并并去重

search_terms = list(set(synonyms + misspellings + spanish_synonyms) - existing_words)

# 6. 控制在250字节内

result = ""

for term in search_terms:

if len((result + " " + term).encode('utf-8')) <= max_bytes:

result += " " + term

else:

break

return result.strip()性能优化建议

1. 图片OCR优化

确保卖点图上的文字能被AI识别:

python

from PIL import Image, ImageDraw, ImageFont

def create_ocr_friendly_infographic(product_features):

"""创建OCR友好的信息图"""

img = Image.new('RGB', (1500, 1500), color='white')

draw = ImageDraw.Draw(img)

# 使用大号清晰字体

font = ImageFont.truetype("Arial.ttf", 48)

y_position = 100

for feature in product_features:

# 确保文字清晰、对比度高

draw.text((100, y_position), feature['text'], fill='black', font=font)

y_position += 150

img.save('infographic_ocr_ready.jpg', quality=95)2. A+页面ALT文本优化

python

def generate_alt_text(image_description, keywords):

"""生成SEO友好的ALT文本"""

# 不要堆砌关键词,要写成自然的句子

alt_text = f"{image_description}"

# 自然融入1-2个关键词

if keywords:

alt_text += f" featuring {keywords[0]}"

return alt_text[:125] # ALT文本建议不超过125字符

# 示例

alt = generate_alt_text(

"Close-up view of waterproof zipper on black travel backpack",

["water-resistant design", "durable construction"]

)

print(alt)

# 输出: "Close-up view of waterproof zipper on black travel backpack featuring water-resistant design"总结

2026年的Amazon Listing优化已经从文案技巧进化为算法工程。理解COSMO的知识图谱推理机制和Rufus的RAG检索逻辑,是构建高转化Listing的前提。

核心技术要点:

- 名词短语优化(NPO):用自然语言结构替代关键词堆砌

- 因果链条表达:在五点描述中显性化"功能-痛点-利益"关系

- 结构化数据完整性:后台属性零空值,使用标准分类值

- RAG就绪文案:高事实密度,便于AI提取的结构化表达

- 数据驱动迭代:基于SQP报告持续优化

对于需要大规模数据支撑的技术团队,推荐使用Pangolinfo的API解决方案,实现从数据采集到分析的全链路自动化。

原创技术文章 | 转载请注明出处

#Amazon #Listing优化 #COSMO算法 #RufusAI #电商数据 #API开发