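Resource-aware optimization enables AI agents to dynamically monitor and manage their use of computational, time, and financial resources while they run. This goes beyond traditional action-sequence planning: the agent must make execution-level optimization decisions to reach its goals within given resource constraints.

At its core is intelligent trade-off. Examples include choosing between a more accurate but more expensive model and a faster, cheaper one, or deciding whether to spend extra compute on a more refined answer or to return a faster but less detailed response.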

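Core Strategy: Resource Trade-off Decisions

| Scenario and goal | Possible resource strategy | Expected effect |
|---|---|---|
| Fast preliminary insight (e.g., an instant trend summary) | Call a faster, cheaper lightweight model or algorithm. | Prioritizes speed and cost, trading some accuracy for immediacy. |
| High-accuracy critical decision (e.g., an important investment forecast) | Allocate more compute and time, and call a stronger, more accurate model. | Prioritizes quality and reliability, accepting higher resource use and latency. |
| Service continuity (e.g., the primary model is unavailable) | Activate a fallback mechanism that automatically switches to a default or cheaper backup model. | Degrades gracefully, keeping core functionality available and avoiding a complete outage. |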
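The three rows above reduce to one decision: which model tier to call, and what to do if it is unavailable. The following is a minimal Java sketch of that decision. `TaskGoal`, `TierSelector`, and the model names are hypothetical placeholders, not part of LangChain4j or any provider API.

```java
import java.util.List;
import java.util.Set;

// Hypothetical goal categories matching the table rows.
enum TaskGoal { QUICK_INSIGHT, CRITICAL_DECISION }

final class TierSelector {
    // Placeholder model names; substitute the models your deployment actually offers.
    static final String FAST_MODEL = "fast-small-model";
    static final String STRONG_MODEL = "strong-large-model";
    static final String BACKUP_MODEL = "backup-model";

    /**
     * Picks the preferred tier for the goal, then walks a fallback chain and
     * returns the first model that is currently available.
     */
    static String select(TaskGoal goal, Set<String> availableModels) {
        String preferred = (goal == TaskGoal.CRITICAL_DECISION) ? STRONG_MODEL : FAST_MODEL;
        // Graceful degradation: preferred tier first, then the backup, then the fast model
        for (String candidate : List.of(preferred, BACKUP_MODEL, FAST_MODEL)) {
            if (availableModels.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("No model is currently available");
    }
}
```

A critical request therefore lands on the strong model when it is up, on the backup when it is not, and only as a last resort on the fast model.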

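Practical Applications and Use Cases

Resource-aware optimization lets AI agents make trade-offs across several resource dimensions based on their actual constraints and goals. Here is how it shows up in practice:

| Application scenario | Key resource constraints | Typical optimization strategy | Example decision |
|---|---|---|---|
| Cost-optimized LLM calls | Financial budget, API cost | Call models of different tiers according to task complexity and value. | Use GPT-4 for complex analysis and a cheaper lightweight model for simple Q&A. |
| Latency-sensitive operations | Response time, real-time requirements | Prefer low-latency inference paths or models to ensure timely responses. | In real-time chat, use a fast generation model, trading some creativity for speed. |
| Energy efficiency management | Battery level, device power consumption | Optimize the compute load to reduce energy use and extend battery life. | On mobile devices, prefer local lightweight models and minimize heavy computation. |
| Service reliability | Service availability, fault tolerance | Set up fallback and degradation mechanisms so basic functionality remains available when the primary service is down. | When the GPT-4 service times out, automatically switch to an available backup model (e.g., Claude). |
| Data usage management | Network bandwidth, storage space | Choose the granularity of data transfer and storage according to the precision required. | Retrieve only document summaries or key excerpts instead of downloading entire large files. |
| Adaptive task allocation | Compute load, processing capacity | Distribute tasks dynamically among multiple agents for load balancing and efficiency. | The system monitors each agent's CPU usage and assigns new tasks to the agent that is currently least busy. |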
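The last row, assigning new work to the least-busy agent, is simple enough to show directly. The sketch below assumes that each agent reports a CPU-usage percentage. `AgentLoad` and `LeastLoadedAssigner` are hypothetical names.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical snapshot of one worker agent's current CPU usage (0-100).
record AgentLoad(String agentId, double cpuPercent) {}

final class LeastLoadedAssigner {
    /** Returns the ID of the agent with the lowest reported CPU usage. */
    static String assign(List<AgentLoad> agents) {
        return agents.stream()
                .min(Comparator.comparingDouble(AgentLoad::cpuPercent))
                .map(AgentLoad::agentId)
                .orElseThrow(() -> new IllegalArgumentException("No agents registered"));
    }
}
```

In a real system the CPU snapshot would be refreshed periodically. Otherwise a burst of assignments can all land on the agent that merely *was* idle a moment ago.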

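With these strategies, resource-aware optimization lets AI systems adapt to diverse real-world environments and business goals, continually making the best execution decisions available under combined constraints of cost, speed, energy, and reliability.

Key Value

With resource-aware optimization, an AI system no longer rigidly executes a fixed workflow. Instead, it makes adaptive decisions based on goal priorities and real-time resource conditions. This lets it strike a better balance among efficiency, quality, and cost in complex, changing real-world environments, making it a smarter, more economical, and more reliable task executor.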

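Beyond Dynamic Model Switching: The Scope of Agent Resource Optimization

Resource-aware optimization aims to keep agents running efficiently under real-world resource constraints. Its scope goes beyond dynamic model switching to include a set of systematic optimization strategies:

1. Model and Tool Optimization

| Technique | Core idea | Typical application |
|---|---|---|
| Dynamic model switching | Choose among LLMs of different sizes and costs based on task complexity and available resources. | Use a lightweight model for simple queries and a high-performance model for complex analysis. |
| Adaptive tool selection | Choose the most suitable tool for each subtask, weighing API cost, latency, and other factors. | Prefer local tools or low-cost APIs and avoid unnecessary expensive calls. |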

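2. Context and Prediction Optimization

| Technique | Core idea | Typical application |
|---|---|---|
| Context pruning and summarization | Keep the most important information from the conversation history through summarization and filtering, reducing token usage and inference cost. | Automatically distill the core of long conversations instead of repeatedly passing redundant information. |
| Proactive resource prediction | Forecast upcoming workloads so resources can be allocated and managed in advance, preventing bottlenecks. | Pre-allocate compute ahead of peak periods based on historical traffic patterns. |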
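Context pruning can start as simply as keeping the most recent messages that fit a token budget. The sketch below uses a rough 4-characters-per-token estimate and drops older messages outright. A production system might summarize them instead, or use a real tokenizer; LangChain4j's `TokenWindowChatMemory` applies the same windowing idea with one. `ContextPruner` is a hypothetical helper, not a LangChain4j class.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

final class ContextPruner {
    /** Rough token estimate: about 4 characters per token (a heuristic for English text). */
    static int estimateTokens(String text) {
        return (int) Math.ceil(text.length() / 4.0);
    }

    /**
     * Walks the history from newest to oldest and keeps messages until the
     * estimated token budget would be exceeded. Result is in chronological order.
     */
    static List<String> prune(List<String> history, int tokenBudget) {
        Deque<String> kept = new ArrayDeque<>();
        int used = 0;
        for (int i = history.size() - 1; i >= 0; i--) {
            int cost = estimateTokens(history.get(i));
            if (used + cost > tokenBudget) {
                break; // everything older than this is dropped
            }
            kept.addFirst(history.get(i));
            used += cost;
        }
        return List.copyOf(kept);
    }
}
```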

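3. System Architecture Optimization

| Technique | Core idea | Typical application |
|---|---|---|
| Cost-sensitive exploration | In multi-agent collaboration, account for both computation and communication costs to optimize overall resource spend. | In distributed systems, weigh local computation against cross-node communication overhead. |
| Energy-efficient deployment | Optimize algorithms and strategies to reduce energy consumption in resource-constrained environments. | Use low-power inference modes on mobile or edge devices. |
| Parallelization and distributed computing | Split tasks and distribute them across multiple compute units to increase processing capacity and throughput. | Distribute a large data-analysis job across several servers to run in parallel. |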
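Within a single process, the parallelization row can be illustrated with a bounded thread pool. The pool size is the resource knob: it caps how many compute units a job may occupy at once. The following sketch uses only the JDK. `ParallelFanOut` is a hypothetical name, and distributing work across servers would need a real job framework instead.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;
import java.util.stream.Collectors;

final class ParallelFanOut {
    /**
     * Applies `work` to every chunk on a fixed-size pool and returns the
     * results in input order. At most `workers` chunks run concurrently.
     */
    static <T, R> List<R> mapParallel(List<T> chunks, Function<T, R> work, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<CompletableFuture<R>> futures = chunks.stream()
                    .map(chunk -> CompletableFuture.supplyAsync(() -> work.apply(chunk), pool))
                    .collect(Collectors.toList());
            // join() waits for each result; list order matches the input order
            return futures.stream().map(CompletableFuture::join).collect(Collectors.toList());
        } finally {
            pool.shutdown();
        }
    }
}
```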

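4. Adaptive and Fault-Tolerant Optimization

| Technique | Core idea | Typical application |
|---|---|---|
| Learned resource allocation | Continuously refine the resource allocation policy based on historical feedback and performance metrics. | The system automatically tunes model-call frequency and batch size to balance cost and quality. |
| Graceful degradation and fallback | When resources are severely constrained, automatically switch to a simplified mode or backup plan to keep basic service available. | When the primary model is unavailable, switch automatically to a lightweight model so service is not interrupted. |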
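Batch-size tuning from feedback can start with a simple controller rather than a trained model. The sketch below uses additive-increase/multiplicative-decrease (AIMD), the rule known from TCP congestion control, in place of any learned policy. It grows the batch by one while calls succeed within a latency target and halves it on a miss. `BatchSizeController` and its parameters are hypothetical.

```java
/**
 * Hypothetical AIMD batch-size controller: additive increase on success,
 * multiplicative decrease on failure or a missed latency target.
 */
final class BatchSizeController {
    private final int minBatch;
    private final int maxBatch;
    private final long latencyTargetMs;
    private int batchSize;

    BatchSizeController(int minBatch, int maxBatch, long latencyTargetMs) {
        this.minBatch = minBatch;
        this.maxBatch = maxBatch;
        this.latencyTargetMs = latencyTargetMs;
        this.batchSize = minBatch; // start conservatively
    }

    int batchSize() {
        return batchSize;
    }

    /** Feeds back one observed call and returns the batch size to use next. */
    int onResult(boolean success, long latencyMs) {
        if (success && latencyMs <= latencyTargetMs) {
            batchSize = Math.min(maxBatch, batchSize + 1); // additive increase
        } else {
            batchSize = Math.max(minBatch, batchSize / 2); // multiplicative decrease
        }
        return batchSize;
    }
}
```

Halving on a miss backs off quickly when a provider slows down. Growing by one at a time probes for spare capacity without overshooting.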

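Together, these techniques form a comprehensive toolkit for agent resource optimization. They let agents adjust their behavior dynamically and keep running efficiently and stably under combined constraints of cost, speed, energy, and reliability.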

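Overview

What

Resource-aware optimization addresses the challenge of managing how much computation, time, and money an intelligent system consumes. LLM-based applications can be both expensive and slow, and always using the most powerful model or tool for every task is often inefficient. This creates a fundamental trade-off between the quality of a system's output and the resources needed to produce it. Without a dynamic management strategy, a system cannot adapt to varying task complexity or operate within its budget and performance constraints.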

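Why

The standard solution is to build an agentic system that intelligently monitors and allocates resources. This pattern typically uses a "router agent" to first classify the complexity of an incoming request. The request is then forwarded to the most suitable LLM or tool: a fast, inexpensive model for simple queries and a more powerful model for complex reasoning. A "critic agent" can further improve the process by evaluating response quality and providing feedback that refines the routing logic over time. This dynamic, multi-agent approach keeps the system efficient while balancing response quality against cost.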
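The router/critic loop described above can be sketched in a few lines. In this sketch, a heuristic complexity score decides between a fast and a strong model. Critic verdicts then nudge the routing threshold: a poor answer from the fast model lowers it so similar queries escalate, and an unnecessary escalation raises it to save cost. The keyword list, the score weights, and the 0.05 step are illustrative assumptions, and `FeedbackRouter` is a hypothetical class. In a real system, both the complexity assessment and the critic would typically be LLM calls.

```java
import java.util.List;
import java.util.Locale;

final class FeedbackRouter {
    static final String FAST = "fast-model";
    static final String STRONG = "strong-model";
    private static final List<String> REASONING_CUES =
            List.of("why", "explain", "compare", "analyze", "evaluate");

    // Queries scoring at or above this threshold go to the strong model
    private double threshold = 0.5;

    /** Crude complexity score in [0, 1] from length and reasoning keywords. */
    static double complexity(String query) {
        String q = query.toLowerCase(Locale.ROOT);
        double lengthPart = Math.min(q.length() / 500.0, 1.0);
        double cuePart = REASONING_CUES.stream().anyMatch(q::contains) ? 1.0 : 0.0;
        return 0.4 * lengthPart + 0.6 * cuePart;
    }

    String route(String query) {
        return complexity(query) >= threshold ? STRONG : FAST;
    }

    /** Applies one critic verdict to the routing threshold. */
    void onCriticVerdict(String modelUsed, boolean adequate, boolean couldHaveBeenCheaper) {
        if (FAST.equals(modelUsed) && !adequate) {
            threshold = Math.max(0.05, threshold - 0.05);  // escalate similar queries sooner
        } else if (STRONG.equals(modelUsed) && couldHaveBeenCheaper) {
            threshold = Math.min(0.95, threshold + 0.05);  // save cost on similar queries
        }
    }

    double threshold() {
        return threshold;
    }
}
```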

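Rule of Thumb

Use this pattern when you are operating under strict financial budgets for API calls or compute. It also applies when building latency-sensitive applications where fast response times are critical, and when deploying agents on resource-constrained hardware such as edge devices with limited battery life. Other use cases include programmatically balancing response quality against operating cost, and managing complex, multi-step workflows in which different tasks have different resource requirements.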

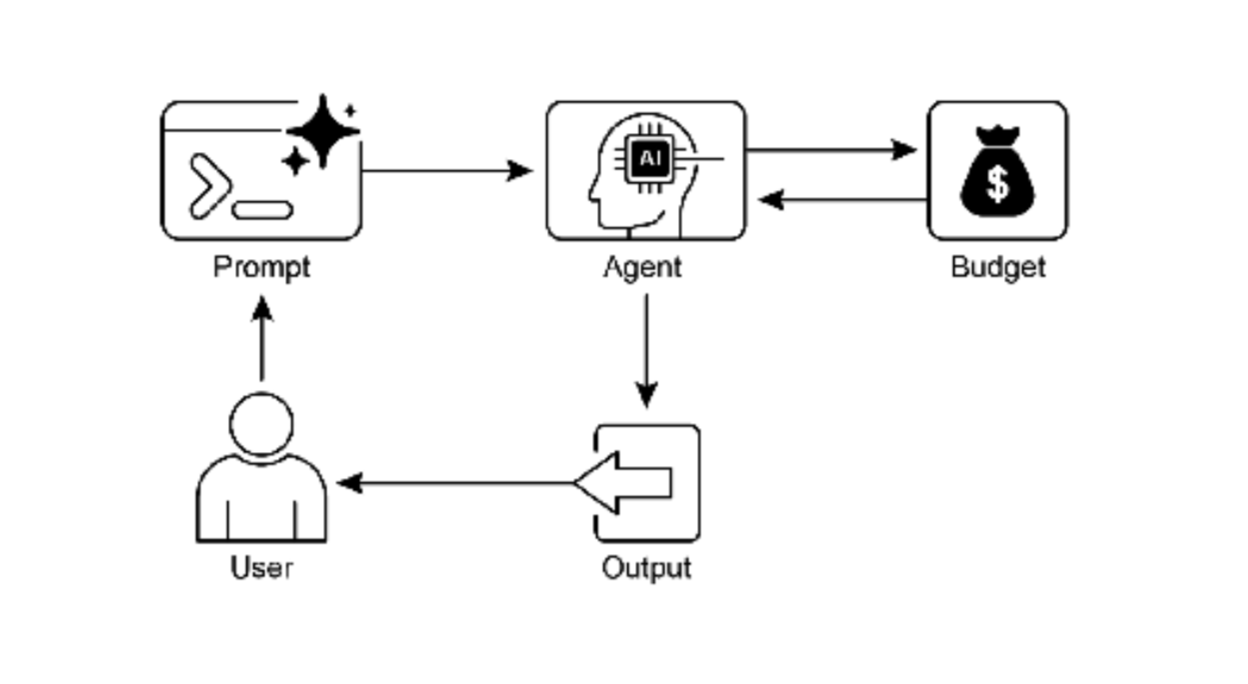

Figure 2: The resource-aware optimization design pattern

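Key Takeaways

- Resource-aware optimization is essential: intelligent agents can dynamically manage computational, time, and financial resources, making decisions about model usage and execution paths based on real-time constraints and goals.
- Multi-agent architecture for scalability: Google's ADK provides a multi-agent framework that enables modular design, with different agents (answering, routing, critique) handling specific tasks.
- Dynamic, LLM-driven routing: a router agent directs queries to language models based on query complexity and budget (Gemini Flash for simple queries, Gemini Pro for complex ones), optimizing both cost and performance.
- Critic agent functionality: a dedicated critic agent provides self-correction, performance monitoring, and feedback that improves the routing logic, making the system more effective.
- Optimization through feedback and flexibility: the critic's evaluation capability and the flexibility of model integration support adaptive, self-improving system behavior.
- Other resource-aware optimization techniques: these include adaptive tool use and selection, context pruning and summarization, proactive resource prediction, cost-sensitive exploration in multi-agent systems, energy-efficient deployment, parallelization and distributed-computing awareness, learned resource-allocation policies, graceful degradation and fallback mechanisms, and prioritization of critical tasks.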

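Resource-Aware Optimization Example with LangChain4j

We will implement a resource-aware optimization example in LangChain4j. The system includes a router agent that assigns tasks to different processing agents based on task complexity and resource constraints, and it also takes cost, latency, and energy efficiency into account.

Because LangChain4j is a Java library, we build the example in Java and model the following scenario:

1. A router agent receives user requests and assesses their complexity.
2. Based on that assessment, the router agent sends each request to one of several processing agents: one backed by a large, expensive LLM (such as GPT-4) and another backed by a small, inexpensive LLM (such as GPT-3.5-turbo).
3. A fallback mechanism switches to a backup model when the primary model is unavailable.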

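Note: to keep the example manageable, some inputs are simulated (for example, the system-load reading). The model calls themselves go through LangChain4j's `OpenAiChatModel`, so running the listings requires an `OPENAI_API_KEY` environment variable.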

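Steps:

a. Define the request and response classes.

b. Create the router agent, which uses a simple rule (for example, request length or keywords) to assess request complexity.

c. Create two processing agents: one for complex tasks (using the "expensive" model) and one for simple tasks (using the "economical" model).

d. Implement a fallback mechanism that uses a backup processing agent when the primary one fails.

e. Show how routing decisions can be adjusted according to resource constraints such as budget and latency.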
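Steps a through e can be condensed into a small, fully simulated program that runs without any API key. The following sketch is standalone and is not part of LangChain4j. `AgentRequest`, `AgentResponse`, `ProcessingAgent`, `SimulatedAgent`, and `SimpleRouterAgent` are hypothetical names, and the per-call prices are made-up flat figures.

```java
import java.util.List;
import java.util.Locale;

// a. Request and response types
record AgentRequest(String text) {}
record AgentResponse(String text, String handledBy, double cost) {}

// A processing agent; the implementation below is simulated and calls no real API.
interface ProcessingAgent {
    String name();
    double costPerRequest(); // hypothetical flat price per call
    String handle(AgentRequest req) throws Exception;
}

final class SimulatedAgent implements ProcessingAgent {
    private final String name;
    private final double cost;
    private final boolean available;

    SimulatedAgent(String name, double cost, boolean available) {
        this.name = name;
        this.cost = cost;
        this.available = available;
    }

    public String name() { return name; }
    public double costPerRequest() { return cost; }

    public String handle(AgentRequest req) throws Exception {
        if (!available) {
            throw new Exception(name + " unavailable"); // simulates an outage
        }
        return "[" + name + "] answer to: " + req.text();
    }
}

final class SimpleRouterAgent {
    private final ProcessingAgent expensive;
    private final ProcessingAgent cheap;
    private double remainingBudget;

    SimpleRouterAgent(ProcessingAgent expensive, ProcessingAgent cheap, double budget) {
        this.expensive = expensive;
        this.cheap = cheap;
        this.remainingBudget = budget;
    }

    // b. Rule-based complexity check: long requests or reasoning keywords count as complex
    static boolean isComplex(AgentRequest req) {
        String t = req.text().toLowerCase(Locale.ROOT);
        return t.length() > 200
                || List.of("analyze", "compare", "explain").stream().anyMatch(t::contains);
    }

    AgentResponse route(AgentRequest req) {
        // c + e. Prefer the expensive agent for complex requests, but only if the budget allows it
        boolean useExpensive = isComplex(req) && remainingBudget >= expensive.costPerRequest();
        List<ProcessingAgent> order = useExpensive ? List.of(expensive, cheap) : List.of(cheap, expensive);

        // d. Fallback: try the agents in order until one succeeds within budget
        for (ProcessingAgent agent : order) {
            if (agent.costPerRequest() > remainingBudget) {
                continue;
            }
            try {
                String answer = agent.handle(req);
                remainingBudget -= agent.costPerRequest();
                return new AgentResponse(answer, agent.name(), agent.costPerRequest());
            } catch (Exception e) {
                // fall through to the next agent in the chain
            }
        }
        return new AgentResponse("All agents are unavailable or over budget.", "none", 0.0);
    }

    double remainingBudget() { return remainingBudget; }
}
```

The budget check happens before the complexity preference is honored. A complex request therefore degrades to the cheap agent once the remaining budget can no longer cover the expensive one.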

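The following code implements the resource-aware optimization pattern with the LangChain4j framework. It shows how computational, time, and financial resources can be managed in different application scenarios:

1. Resource-Aware Router Framework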

```java
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import lombok.Builder;
import lombok.Data;
import lombok.extern.slf4j.Slf4j;

import java.time.Duration;
import java.time.LocalDateTime;
import java.util.*;
import java.util.concurrent.ConcurrentHashMap;

// Written against the LangChain4j 0.x API (ChatLanguageModel#generate(String)); newer releases
// rename these types and methods. The helper types RequestContext, ComplexityScore, ComplexityLevel,
// ResourceConstraints, SelectedModel, ModelCandidate, ExecutionResult, ResourceUsage and CostTracker
// are not shown here; they are assumed to be simple Lombok value/helper classes defined elsewhere.
@Slf4j
public class ResourceAwareRouter {

    // Resource configuration: budget, latency limits, scoring weights
    private final ResourceConfig resourceConfig;

    // Resource profile of each model, keyed by model name
    private final Map<String, ModelConfig> modelConfigs = new HashMap<>();

    // Per-model resource usage statistics
    private final Map<String, ResourceUsage> resourceUsage = new ConcurrentHashMap<>();

    // Tracks spending against the monthly budget
    private final CostTracker costTracker;

    public ResourceAwareRouter(ResourceConfig resourceConfig) {
        this.resourceConfig = resourceConfig;
        this.costTracker = new CostTracker();
        initializeModelConfigs();
    }

    /** Registers the resource characteristics (cost, latency, accuracy) of each model. */
    private void initializeModelConfigs() {
        modelConfigs.put("gpt-4", ModelConfig.builder()
                .modelName("gpt-4")
                .costPerToken(0.00003)      // $0.03 per 1K tokens
                .maxTokens(8192)
                .latencyMs(3000)
                .accuracyScore(0.95)
                .build());

        modelConfigs.put("gpt-3.5-turbo", ModelConfig.builder()
                .modelName("gpt-3.5-turbo")
                .costPerToken(0.0000015)    // $0.0015 per 1K tokens
                .maxTokens(4096)
                .latencyMs(1000)
                .accuracyScore(0.85)
                .build());

        modelConfigs.put("claude-instant", ModelConfig.builder()
                .modelName("claude-instant")
                .costPerToken(0.00000163)   // $0.00163 per 1K tokens
                .maxTokens(100000)
                .latencyMs(2000)
                .accuracyScore(0.88)
                .build());

        modelConfigs.put("local-llama", ModelConfig.builder()
                .modelName("local-llama")
                .costPerToken(0.0)          // local model: no API cost
                .maxTokens(2048)
                .latencyMs(5000)
                .accuracyScore(0.75)
                .build());
    }

    /** Routes a request to the best model for its complexity and the current constraints. */
    public RoutedResponse routeRequest(String request, RequestContext context) {
        log.info("Routing request: {}, context: {}",
                request.substring(0, Math.min(50, request.length())), context);

        // Step 1: assess request complexity
        ComplexityScore complexity = assessComplexity(request, context);

        // Step 2: check resource constraints
        ResourceConstraints constraints = checkResourceConstraints(context);

        // Step 3: select the best model
        SelectedModel selection = selectOptimalModel(complexity, constraints);

        // Step 4: execute the request (falls back to other models on failure)
        ExecutionResult result = executeWithSelectedModel(request, selection, context);

        // Attribute usage to the model that actually answered, which differs after a fallback
        String modelUsed = result.isFallbackUsed() && result.getFallbackModel() != null
                ? result.getFallbackModel()
                : selection.getModelName();

        // Step 5: update resource usage statistics
        updateResourceUsage(modelUsed, result);

        return RoutedResponse.builder()
                .request(request)
                .selectedModel(modelUsed)
                .response(result.getResponse())
                .executionTimeMs(result.getExecutionTimeMs())
                .tokensUsed(result.getTokensUsed())
                .estimatedCost(result.getEstimatedCost())
                .complexityScore(complexity.getScore())
                .fallbackUsed(result.isFallbackUsed())
                .timestamp(LocalDateTime.now())
                .build();
    }

    /** Scores request complexity from length, technical vocabulary, and reasoning cues. */
    private ComplexityScore assessComplexity(String request, RequestContext context) {
        double lengthScore = Math.min(request.length() / 1000.0, 1.0);
        double technicalScore = containsTechnicalTerms(request) ? 0.8 : 0.2;
        double reasoningScore = requiresDeepReasoning(request) ? 0.9 : 0.3;

        // Weighted overall complexity score
        double totalScore = (lengthScore * 0.3) + (technicalScore * 0.4) + (reasoningScore * 0.3);

        return ComplexityScore.builder()
                .score(totalScore)
                .lengthScore(lengthScore)
                .technicalScore(technicalScore)
                .reasoningScore(reasoningScore)
                .complexityLevel(determineComplexityLevel(totalScore))
                .build();
    }

    /** Collects the budget, latency, energy, and load constraints for this request. */
    private ResourceConstraints checkResourceConstraints(RequestContext context) {
        return ResourceConstraints.builder()
                .remainingBudget(resourceConfig.getMonthlyBudget() - costTracker.getCurrentMonthCost())
                .maxLatencyMs(context.getMaxLatencyMs() != null
                        ? context.getMaxLatencyMs() : resourceConfig.getDefaultMaxLatencyMs())
                .energyLimit(resourceConfig.isEnergyConstrained()
                        ? resourceConfig.getEnergyBudget() : Double.MAX_VALUE)
                .currentLoad(getSystemLoad())
                .build();
    }

    /** Picks the highest-scoring model that satisfies the constraints. */
    private SelectedModel selectOptimalModel(ComplexityScore complexity,
                                             ResourceConstraints constraints) {
        List<ModelCandidate> candidates = new ArrayList<>();

        // Score every model that satisfies the constraints
        for (ModelConfig config : modelConfigs.values()) {
            if (isModelSuitable(config, complexity, constraints)) {
                double score = calculateModelScore(config, complexity, constraints);
                candidates.add(new ModelCandidate(config, score));
            }
        }

        // If no model qualifies, fall back to the cheapest one
        if (candidates.isEmpty()) {
            log.warn("No suitable model found; falling back to the cheapest model");
            return findCheapestModel(complexity, constraints);
        }

        // Choose the highest-scoring candidate
        candidates.sort((a, b) -> Double.compare(b.getScore(), a.getScore()));
        ModelCandidate bestCandidate = candidates.get(0);
        log.info("Selected model: {}, score: {}",
                bestCandidate.getConfig().getModelName(), bestCandidate.getScore());

        return SelectedModel.builder()
                .modelName(bestCandidate.getConfig().getModelName())
                .config(bestCandidate.getConfig())
                .selectionScore(bestCandidate.getScore())
                .reasoning(generateSelectionReasoning(bestCandidate, complexity, constraints))
                .build();
    }

    /** Combines cost, latency, accuracy, and complexity fit into a single score. */
    private double calculateModelScore(ModelConfig config,
                                       ComplexityScore complexity,
                                       ResourceConstraints constraints) {
        double score = 0.0;

        // 1. Cost score (cheaper is better), normalized against the most expensive model.
        //    Scaling the raw per-token price by 1,000,000 would saturate at 1.0 for every paid
        //    model configured above, so only the free local model would be distinguished.
        double maxCost = modelConfigs.values().stream()
                .mapToDouble(ModelConfig::getCostPerToken).max().orElse(0.0);
        double costScore = maxCost > 0 ? 1.0 - config.getCostPerToken() / maxCost : 1.0;
        score += costScore * resourceConfig.getCostWeight();

        // 2. Latency score (faster is better)
        double latencyScore = 1.0 - Math.min(config.getLatencyMs() / 10000.0, 1.0);
        score += latencyScore * resourceConfig.getLatencyWeight();

        // 3. Accuracy score, weighted more heavily for complex tasks
        double accuracyWeight = complexity.getScore() > 0.7 ? 0.4 : 0.2;
        score += config.getAccuracyScore() * accuracyWeight;

        // 4. Complexity match: prefer models whose accuracy is close to the task complexity
        double complexityMatch = 1.0 - Math.abs(config.getAccuracyScore() - complexity.getScore());
        score += complexityMatch * 0.2;

        return score;
    }

    /** Runs the request on the selected model; on failure, tries the fallback chain. */
    private ExecutionResult executeWithSelectedModel(String request,
                                                     SelectedModel selection,
                                                     RequestContext context) {
        long startTime = System.currentTimeMillis();
        try {
            // The call timeout is configured on the model instance (see createModelInstance)
            ChatLanguageModel model = createModelInstance(selection.getConfig());
            String response = model.generate(request);

            long executionTime = System.currentTimeMillis() - startTime;
            int estimatedTokens = estimateTokenCount(request + response);
            double estimatedCost = estimatedTokens * selection.getConfig().getCostPerToken();

            return ExecutionResult.builder()
                    .success(true)
                    .response(response)
                    .executionTimeMs(executionTime)
                    .tokensUsed(estimatedTokens)
                    .estimatedCost(estimatedCost)
                    .fallbackUsed(false)
                    .build();
        } catch (Exception e) {
            log.warn("Primary model {} failed: {}", selection.getModelName(), e.getMessage());
            return executeWithFallback(request, context, startTime, selection.getModelName());
        }
    }

    /** Tries cheaper or more available models in order, skipping the model that just failed. */
    private ExecutionResult executeWithFallback(String request,
                                                RequestContext context,
                                                long startTime,
                                                String failedModel) {
        log.info("Running fallback chain");
        List<String> fallbackOrder = List.of("gpt-3.5-turbo", "claude-instant", "local-llama");

        for (String name : fallbackOrder) {
            if (name.equals(failedModel)) {
                continue; // do not immediately retry the model that just failed
            }
            ModelConfig fallback = modelConfigs.get(name);
            try {
                ChatLanguageModel model = createModelInstance(fallback);
                String response = model.generate(request);

                long executionTime = System.currentTimeMillis() - startTime;
                int estimatedTokens = estimateTokenCount(request + response);
                double estimatedCost = estimatedTokens * fallback.getCostPerToken();

                return ExecutionResult.builder()
                        .success(true)
                        .response(response)
                        .executionTimeMs(executionTime)
                        .tokensUsed(estimatedTokens)
                        .estimatedCost(estimatedCost)
                        .fallbackUsed(true)
                        .fallbackModel(fallback.getModelName())
                        .build();
            } catch (Exception e) {
                log.warn("Fallback model {} also failed: {}", name, e.getMessage());
            }
        }

        // Every fallback failed
        return ExecutionResult.builder()
                .success(false)
                .response("Sorry, no processing model is currently available. Please try again later.")
                .executionTimeMs(System.currentTimeMillis() - startTime)
                .tokensUsed(0)
                .estimatedCost(0.0)
                .fallbackUsed(true)
                .build();
    }

    // Helper methods

    /** Crude substring match against a small list of technical terms. */
    private boolean containsTechnicalTerms(String text) {
        String[] technicalTerms = {"algorithm", "api", "database", "server", "protocol",
                "function", "variable", "class", "object", "compile"};
        String lowerText = text.toLowerCase();
        return Arrays.stream(technicalTerms).anyMatch(lowerText::contains);
    }

    /** Crude substring match against words that usually signal multi-step reasoning. */
    private boolean requiresDeepReasoning(String text) {
        String[] reasoningIndicators = {"why", "how", "explain", "compare", "analyze",
                "evaluate", "justify", "predict", "what if"};
        String lowerText = text.toLowerCase();
        return Arrays.stream(reasoningIndicators).anyMatch(lowerText::contains);
    }

    private ComplexityLevel determineComplexityLevel(double score) {
        if (score > 0.8) return ComplexityLevel.HIGH;
        if (score > 0.5) return ComplexityLevel.MEDIUM;
        return ComplexityLevel.LOW;
    }

    private boolean isModelSuitable(ModelConfig config,
                                    ComplexityScore complexity,
                                    ResourceConstraints constraints) {
        // Budget: can we afford a typical ~1,000-token call?
        double estimatedCost = 1000 * config.getCostPerToken();
        if (estimatedCost > constraints.getRemainingBudget()) {
            return false;
        }
        // Latency: the model's typical latency must fit within the limit
        if (config.getLatencyMs() > constraints.getMaxLatencyMs()) {
            return false;
        }
        // Capability: high-complexity tasks need a sufficiently accurate model
        return complexity.getComplexityLevel() != ComplexityLevel.HIGH
                || config.getAccuracyScore() >= 0.8;
    }

    private SelectedModel findCheapestModel(ComplexityScore complexity,
                                            ResourceConstraints constraints) {
        ModelConfig cheapest = null;
        for (ModelConfig config : modelConfigs.values()) {
            if ((cheapest == null || config.getCostPerToken() < cheapest.getCostPerToken())
                    && isModelSuitable(config, complexity, constraints)) {
                cheapest = config;
            }
        }
        if (cheapest == null) {
            // Still nothing suitable: force the cheapest model regardless of constraints
            cheapest = modelConfigs.values().stream()
                    .min(Comparator.comparingDouble(ModelConfig::getCostPerToken))
                    .orElseThrow();
        }
        return SelectedModel.builder()
                .modelName(cheapest.getModelName())
                .config(cheapest)
                .selectionScore(0.0)
                .reasoning("Using the cheapest available model as a fallback")
                .build();
    }

    private ChatLanguageModel createModelInstance(ModelConfig config) {
        // Every model is created through OpenAiChatModel, so only OpenAI model names work as-is.
        // "claude-instant" and "local-llama" would need their own ChatLanguageModel implementations
        // (or an OpenAI-compatible endpoint configured via baseUrl); until then their calls fail
        // and trigger the fallback chain.
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("OPENAI_API_KEY"))
                .modelName(config.getModelName())
                // Twice the expected latency, capped at 30 seconds
                .timeout(Duration.ofMillis(Math.min(config.getLatencyMs() * 2L, 30_000L)))
                .maxRetries(2)
                .build();
    }

    private int estimateTokenCount(String text) {
        // Rough estimate: about 4 characters per token (a heuristic for English text)
        return (int) Math.ceil(text.length() / 4.0);
    }

    private double getSystemLoad() {
        // Simulated system load (0-100); replace with a real metric in production
        return Math.random() * 100;
    }

    private void updateResourceUsage(String modelName, ExecutionResult result) {
        ResourceUsage usage = resourceUsage.computeIfAbsent(modelName, k -> new ResourceUsage());
        usage.incrementRequestCount();
        usage.addTokensUsed(result.getTokensUsed());
        usage.addCost(result.getEstimatedCost());
        usage.addExecutionTime(result.getExecutionTimeMs());

        // Record the spend against the monthly budget
        costTracker.recordCost(result.getEstimatedCost(), modelName);
    }

    private String generateSelectionReasoning(ModelCandidate candidate,
                                              ComplexityScore complexity,
                                              ResourceConstraints constraints) {
        return String.format(
                "Selected model %s: cost $%.5f per 1K tokens, latency %dms, accuracy %.2f. " +
                "Task complexity: %.2f (%s), remaining budget: $%.4f, latency limit: %dms",
                candidate.getConfig().getModelName(),
                candidate.getConfig().getCostPerToken() * 1000,
                candidate.getConfig().getLatencyMs(),
                candidate.getConfig().getAccuracyScore(),
                complexity.getScore(),
                complexity.getComplexityLevel(),
                constraints.getRemainingBudget(),
                constraints.getMaxLatencyMs());
    }

    // Data classes

    @Data
    @Builder
    public static class ResourceConfig {
        private double monthlyBudget;      // USD
        private int defaultMaxLatencyMs;
        private boolean energyConstrained;
        private Double energyBudget;
        private double costWeight;         // scoring weight for cost
        private double latencyWeight;      // scoring weight for latency
    }

    @Data
    @Builder
    public static class ModelConfig {
        private String modelName;
        private double costPerToken;       // USD per token
        private int maxTokens;
        private int latencyMs;             // typical latency
        private double accuracyScore;      // relative quality, 0-1
    }

    @Data
    @Builder
    public static class RoutedResponse {
        private String request;
        private String selectedModel;
        private String response;
        private long executionTimeMs;
        private int tokensUsed;
        private double estimatedCost;
        private double complexityScore;
        private boolean fallbackUsed;
        private LocalDateTime timestamp;
    }
}
```

2. Latency-Sensitive Operation Optimization
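The core of the latency-sensitive pattern is "answer within the deadline or fall back to something quick." The following standalone sketch shows that idea with the JDK's `CompletableFuture.completeOnTimeout` (Java 9+) and a plain `Supplier` standing in for the router. `DeadlineCall` is a hypothetical name.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

final class DeadlineCall {
    /**
     * Runs `slowCall` asynchronously and returns its result if it finishes within
     * `deadlineMs`; otherwise returns `quickFallback` without waiting any longer.
     * Note: the slow call is not cancelled and keeps running in the background.
     */
    static String callWithDeadline(Supplier<String> slowCall, String quickFallback, long deadlineMs) {
        return CompletableFuture.supplyAsync(slowCall)
                .completeOnTimeout(quickFallback, deadlineMs, TimeUnit.MILLISECONDS)
                .join();
    }
}
```

Because the abandoned call keeps running, its result can still be cached for later, which is what `handleTimeout` below does with its background task.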

```java
import lombok.Builder;
import lombok.Data;
import lombok.extern.slf4j.Slf4j;

import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.*;

// RequestContext, RequestPriority and BackgroundResult are assumed to be defined elsewhere.
@Slf4j
public class LatencySensitiveAgent {

    private final ResourceAwareRouter router;
    private final ExecutorService executorService;

    // Latency-sensitivity settings
    private final LatencyConfig latencyConfig;

    // Results of requests that completed in the background after a timeout, keyed by request text
    private final Map<String, BackgroundResult> backgroundResultCache = new ConcurrentHashMap<>();

    public LatencySensitiveAgent(ResourceAwareRouter router, LatencyConfig latencyConfig) {
        this.router = router;
        this.latencyConfig = latencyConfig;
        this.executorService = Executors.newCachedThreadPool();
    }

    /** Processes a request within maxLatencyMs, returning a quick fallback answer on timeout. */
    public TimedResponse processWithLatencyConstraint(String request, int maxLatencyMs) {
        log.info("Processing latency-sensitive request, max latency: {}ms", maxLatencyMs);

        RequestContext context = RequestContext.builder()
                .maxLatencyMs(maxLatencyMs)
                .priority(RequestPriority.HIGH)
                .build();

        CompletableFuture<ResourceAwareRouter.RoutedResponse> future = CompletableFuture.supplyAsync(
                () -> router.routeRequest(request, context), executorService);

        try {
            // get(timeout, unit) is the overload that throws TimeoutException when the budget runs out
            ResourceAwareRouter.RoutedResponse routedResponse =
                    future.get(maxLatencyMs, TimeUnit.MILLISECONDS);

            return TimedResponse.builder()
                    .success(true)
                    .response(routedResponse.getResponse())
                    .actualLatencyMs(routedResponse.getExecutionTimeMs())
                    .withinBudget(routedResponse.getExecutionTimeMs() <= maxLatencyMs)
                    .modelUsed(routedResponse.getSelectedModel())
                    .estimatedCost(routedResponse.getEstimatedCost())
                    .build();
        } catch (TimeoutException e) {
            log.warn("Request timed out after {}ms", maxLatencyMs);
            return handleTimeout(request, maxLatencyMs);
        } catch (InterruptedException | ExecutionException e) {
            if (e instanceof InterruptedException) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
            }
            log.error("Request processing failed", e);
            return TimedResponse.builder()
                    .success(false)
                    .response("Processing failed: " + e.getMessage())
                    .actualLatencyMs(maxLatencyMs)
                    .withinBudget(false)
                    .error(e.getMessage())
                    .build();
        }
    }

    /** Returns a quick answer immediately and optionally finishes the full request in the background. */
    private TimedResponse handleTimeout(String request, int maxLatencyMs) {
        String quickResponse = generateQuickResponse(request);

        // Continue the full request asynchronously without blocking this response
        if (latencyConfig.isBackgroundProcessingEnabled()) {
            executorService.submit(() -> {
                try {
                    ResourceAwareRouter.RoutedResponse fullResponse = router.routeRequest(request,
                            RequestContext.builder().maxLatencyMs(30000).build());
                    String text = fullResponse.getResponse();
                    log.info("Background processing finished, response: {}",
                            text.substring(0, Math.min(50, text.length())));
                    // Keep the result for later retrieval
                    storeBackgroundResult(request, fullResponse);
                } catch (Exception e) {
                    log.error("Background processing failed", e);
                }
            });
        }

        String note = latencyConfig.isBackgroundProcessingEnabled()
                ? "\n\n[Note: this is a quick response; the full analysis continues in the background]"
                : "\n\n[Note: this is a quick response]";

        return TimedResponse.builder()
                .success(true)
                .response(quickResponse + note)
                .actualLatencyMs(maxLatencyMs)
                .withinBudget(false)
                .modelUsed("quick-fallback")
                .isQuickResponse(true)
                .build();
    }

    /** Builds an instant rule-based reply without calling a model. */
    private String generateQuickResponse(String request) {
        String lower = request.toLowerCase();
        // The Chinese keywords (你好 "hello", 时间 "time") let the rules match Chinese input too
        if (lower.contains("hello") || request.contains("你好")) {
            return "Hello! I'm the AI assistant.";
        } else if (lower.contains("time") || request.contains("时间")) {
            return "The current time is: " + java.time.LocalTime.now();
        } else if (request.length() < 50) {
            return "This is a short question; I need more context to give a detailed answer.";
        } else {
            return "I've received your question and am working on it. "
                    + "The initial analysis may take longer; please wait for the full response.";
        }
    }

    /** Processes a batch of requests against one shared deadline. */
    public BatchTimedResponse processBatchWithDeadlines(List<String> requests, int deadlineMs) {
        log.info("Batch-processing {} requests, deadline: {}ms", requests.size(), deadlineMs);

        List<CompletableFuture<TimedResponse>> futures = new ArrayList<>();
        long startTime = System.currentTimeMillis();

        for (String request : requests) {
            CompletableFuture<TimedResponse> future = CompletableFuture.supplyAsync(() -> {
                // Give each request whatever time remains before the deadline (at least 100 ms)
                long elapsed = System.currentTimeMillis() - startTime;
                int remainingTime = Math.max(100, (int) (deadlineMs - elapsed));
                return processWithLatencyConstraint(request, remainingTime);
            }, executorService);
            futures.add(future);
        }

        // Wait until all requests finish or the deadline passes
        try {
            CompletableFuture.allOf(futures.toArray(new CompletableFuture[0]))
                    .get(deadlineMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            log.warn("Some batch requests missed the deadline");
        } catch (Exception e) {
            log.error("Batch processing failed", e);
        }

        // Collect results; unfinished requests are reported as timeouts
        List<TimedResponse> responses = new ArrayList<>();
        int completed = 0;
        int failed = 0;
        int quickResponses = 0;

        for (CompletableFuture<TimedResponse> future : futures) {
            try {
                if (future.isDone()) {
                    TimedResponse response = future.get();
                    responses.add(response);
                    completed++;
                    if (response.isQuickResponse()) {
                        quickResponses++;
                    }
                } else {
                    responses.add(TimedResponse.timeoutResponse());
                    failed++;
                }
            } catch (Exception e) {
                responses.add(TimedResponse.errorResponse(e.getMessage()));
                failed++;
            }
        }

        long totalTime = System.currentTimeMillis() - startTime;
        return BatchTimedResponse.builder()
                .responses(responses)
                .totalRequests(requests.size())
                .completedRequests(completed)
                .failedRequests(failed)
                .quickResponses(quickResponses)
                .totalTimeMs(totalTime)
                .withinDeadline(totalTime <= deadlineMs)
                .build();
    }

    private void storeBackgroundResult(String request, ResourceAwareRouter.RoutedResponse response) {
        // Store in a cache (a database would also work)
        BackgroundResult result = BackgroundResult.builder()
                .request(request)
                .response(response.getResponse())
                .modelUsed(response.getSelectedModel())
                .processingTimeMs(response.getExecutionTimeMs())
                .timestamp(java.time.LocalDateTime.now())
                .build();
        // Keyed by the request text itself, which avoids hashCode collisions
        backgroundResultCache.put(request, result);
    }

    // Data classes

    @Data
    @Builder
    public static class TimedResponse {
        private boolean success;
        private String response;
        private long actualLatencyMs;
        private boolean withinBudget;
        private String modelUsed;
        private double estimatedCost;
        private boolean isQuickResponse;
        private String error;

        public static TimedResponse timeoutResponse() {
            return TimedResponse.builder()
                    .success(false)
                    .response("Request timed out")
                    .withinBudget(false)
                    .error("timeout")
                    .build();
        }

        public static TimedResponse errorResponse(String error) {
            return TimedResponse.builder()
                    .success(false)
                    .response("Processing error: " + error)
                    .withinBudget(false)
                    .error(error)
                    .build();
        }
    }

    @Data
    @Builder
    public static class BatchTimedResponse {
        private List<TimedResponse> responses;
        private int totalRequests;
        private int completedRequests;
        private int failedRequests;
        private int quickResponses;
        private long totalTimeMs;
        private boolean withinDeadline;
    }

    @Data
    @Builder
    public static class LatencyConfig {
        private boolean backgroundProcessingEnabled;
        private int defaultTimeoutMs;
        private int quickResponseThresholdMs;
        private double maxTimeoutRatio;
    }
}
```

3. Energy Efficiency Optimization (Edge Device Scenario)
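The energy accounting below rests on E = P × t (joules = watts × seconds). That formula alone can decide whether offloading to the cloud is worth it: local inference draws processor power for the whole run, while cloud inference draws radio and idle power while waiting. The following sketch compares two paths. `EnergyPath`, `EnergyPathChooser`, and the wattages are hypothetical figures, not measurements.

```java
// Hypothetical energy estimate for one inference path: E (J) = P (W) x t (s).
record EnergyPath(String name, double powerWatts, double expectedSeconds) {
    double joules() {
        return powerWatts * expectedSeconds;
    }
}

final class EnergyPathChooser {
    /** Picks whichever path is expected to draw less energy from the device battery. */
    static EnergyPath cheaper(EnergyPath local, EnergyPath cloud) {
        return local.joules() <= cloud.joules() ? local : cloud;
    }
}
```

For example, a local run at 5 W for 2 s costs 10 J, while a cloud call drawing 1.5 W (network plus idle) for 3 s costs 4.5 J. Here the cloud path is cheaper for the battery despite taking longer.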

java

import lombok.extern.slf4j.Slf4j;

import java.util.*;

import java.util.concurrent.atomic.AtomicInteger;

@Slf4j

public class EnergyEfficientAgent {

private final EnergyMonitor energyMonitor;

private final DeviceCapability deviceCapability;

private final ResourceAwareRouter router;

// 能源优化策略

private final EnergyOptimizationStrategy strategy;

// 能源使用状态

private final AtomicInteger currentPowerMode = new AtomicInteger(0); // 0:正常, 1:节能, 2:极限节能

public EnergyEfficientAgent(DeviceCapability deviceCapability,

ResourceAwareRouter router,

EnergyOptimizationStrategy strategy) {

this.deviceCapability = deviceCapability;

this.router = router;

this.strategy = strategy;

this.energyMonitor = new EnergyMonitor();

// 启动能源监控

startEnergyMonitoring();

}

/**

* 能源感知处理

*/

public EnergyAwareResponse processWithEnergyConstraint(String request) {

EnergyState energyState = energyMonitor.getCurrentEnergyState();

PowerMode powerMode = determinePowerMode(energyState);

log.info("能源状态: {}%, 电源模式: {}",

energyState.getBatteryPercentage(), powerMode);

// 根据能源状态调整处理策略

ProcessingStrategy processingStrategy =

selectProcessingStrategy(powerMode, request);

// 执行处理

ProcessingResult result = executeWithEnergyOptimization(

request, processingStrategy, energyState);

// 更新能源使用

energyMonitor.recordEnergyUsage(result.getEstimatedEnergyConsumption());

return EnergyAwareResponse.builder()

.response(result.getResponse())

.powerMode(powerMode)

.energyUsedJoules(result.getEstimatedEnergyConsumption())

.batteryRemaining(energyState.getBatteryPercentage())

.processingStrategy(processingStrategy.getStrategyName())

.executionTimeMs(result.getExecutionTimeMs())

.build();

}

/**

* 根据能源状态确定电源模式

*/

private PowerMode determinePowerMode(EnergyState energyState) {

if (energyState.getBatteryPercentage() < 20) {

return PowerMode.CRITICAL_SAVING;

} else if (energyState.getBatteryPercentage() < 50) {

return PowerMode.POWER_SAVING;

} else if (energyState.isCharging()) {

return PowerMode.PERFORMANCE;

} else {

return PowerMode.BALANCED;

}

}

/**

* 选择处理策略

*/

private ProcessingStrategy selectProcessingStrategy(PowerMode powerMode, String request) {

switch (powerMode) {

case CRITICAL_SAVING:

return ProcessingStrategy.builder()

.strategyName("energy-critical")

.useLocalProcessing(true)

.maxModelSize("tiny")

.enableCaching(true)

.disableUnnecessaryFeatures(true)

.maxResponseLength(100)

.build();

case POWER_SAVING:

return ProcessingStrategy.builder()

.strategyName("energy-saving")

.useLocalProcessing(true)

.maxModelSize("small")

.enableCaching(true)

.batchProcessing(true)

.maxResponseLength(500)

.build();

case PERFORMANCE:

return ProcessingStrategy.builder()

.strategyName("performance")

.useLocalProcessing(false) // 可以使用云端

.maxModelSize("large")

.enableCaching(false)

.maxResponseLength(2000)

.build();

default: // BALANCED

return ProcessingStrategy.builder()

.strategyName("balanced")

.useLocalProcessing(deviceCapability.hasSufficientResources())

.maxModelSize("medium")

.enableCaching(true)

.maxResponseLength(1000)

.build();

}

}

/**

* 执行能源优化处理

*/

private ProcessingResult executeWithEnergyOptimization(String request,

ProcessingStrategy strategy,

EnergyState energyState) {

long startTime = System.currentTimeMillis();

try {

// 检查是否可以使用缓存

if (strategy.isEnableCaching()) {

CachedResponse cached = responseCache.get(request.hashCode());

if (cached != null && !cached.isExpired()) {

log.info("使用缓存响应,节省能源");

return ProcessingResult.builder()

.response(cached.getResponse())

.executionTimeMs(1) // 缓存访问时间

.estimatedEnergyConsumption(0.001) // 极少能耗

.fromCache(true)

.build();

}

}

// 选择处理引擎

ProcessingEngine engine = selectProcessingEngine(strategy);

// 执行处理

String response = engine.process(request, strategy);

long executionTime = System.currentTimeMillis() - startTime;

// 估算能源消耗

double energyConsumption = estimateEnergyConsumption(

engine, executionTime, strategy);

// 缓存结果(如果启用)

if (strategy.isEnableCaching()) {

responseCache.put(request.hashCode(),

CachedResponse.builder()

.response(response)

.timestamp(System.currentTimeMillis())

.ttlSeconds(300)

.build());

}

return ProcessingResult.builder()

.response(response)

.executionTimeMs(executionTime)

.estimatedEnergyConsumption(energyConsumption)

.fromCache(false)

.processingEngine(engine.getEngineName())

.build();

} catch (Exception e) {

log.error("能源优化处理失败", e);

// 尝试简化处理

return fallbackToSimplifiedProcessing(request, strategy);

}

}

/**

* 选择处理引擎

*/

private ProcessingEngine selectProcessingEngine(ProcessingStrategy strategy) {

if (strategy.isUseLocalProcessing()) {

// 使用本地轻量模型

return new LocalProcessingEngine(deviceCapability, strategy);

} else {

// 使用云端处理(可能更节能,取决于网络能耗)

RequestContext context = RequestContext.builder()

.maxLatencyMs(10000)

.energyAware(true)

.build();

return new CloudProcessingEngine(router, context, strategy);

}

}

/**

* 估算能源消耗

*/

private double estimateEnergyConsumption(ProcessingEngine engine,

long executionTimeMs,

ProcessingStrategy strategy) {

// 基础能源模型

double basePowerWatts = 0.0;

if (engine instanceof LocalProcessingEngine) {

// 本地处理:CPU/GPU能耗

basePowerWatts = deviceCapability.getProcessingPowerWatts();

} else {

// 云端处理:网络传输能耗 + 设备待机能耗

basePowerWatts = deviceCapability.getNetworkPowerWatts() + 0.5;

}

// 根据策略调整

double powerMultiplier = 1.0;

if (strategy.getStrategyName().contains("critical")) {

powerMultiplier = 0.3;

} else if (strategy.getStrategyName().contains("saving")) {

powerMultiplier = 0.6;

}

// 计算能源消耗(焦耳 = 瓦特 × 时间)

double powerWatts = basePowerWatts * powerMultiplier;

double timeSeconds = executionTimeMs / 1000.0;

return powerWatts * timeSeconds;

}

/**

* 简化处理回退

*/

private ProcessingResult fallbackToSimplifiedProcessing(String request,

ProcessingStrategy strategy) {

long startTime = System.currentTimeMillis();

// 生成极简响应

String simplifiedResponse = "当前设备能源受限,提供简化响应:\n" +

"问题: " + request.substring(0, Math.min(50, request.length())) + "...\n" +

"建议在设备充电后获取完整答案。";

long executionTime = System.currentTimeMillis() - startTime;

return ProcessingResult.builder()

.response(simplifiedResponse)

.executionTimeMs(executionTime)

.estimatedEnergyConsumption(0.01) // 极低能耗

.fromCache(false)

.processingEngine("simplified-fallback")

.isFallback(true)

.build();

}

/**

* 启动能源监控

*/

private void startEnergyMonitoring() {

Timer monitorTimer = new Timer(true);

monitorTimer.scheduleAtFixedRate(new TimerTask() {

@Override

public void run() {

try {

EnergyState state = energyMonitor.getCurrentEnergyState();

// 根据能源状态动态调整策略

if (state.getBatteryPercentage() < 10) {

strategy.setAggressiveSaving(true);

log.warn("电池电量低于10%,启用激进节能模式");

}

// 记录能源使用趋势

energyMonitor.recordEnergyTrend();

} catch (Exception e) {

log.error("能源监控失败", e);

}

}

}, 0, 60000); // 每分钟检查一次

}

/**

* 批量能源优化处理

*/

public BatchEnergyResponse processBatchWithEnergyOptimization(List<String> requests) {

log.info("批量处理 {} 个请求,使用能源优化", requests.size());

EnergyState initialEnergy = energyMonitor.getCurrentEnergyState();

List<EnergyAwareResponse> responses = new ArrayList<>();

double totalEnergyUsed = 0.0;

// 按优先级排序请求

List<PrioritizedRequest> prioritizedRequests = prioritizeRequests(requests);

for (PrioritizedRequest pr : prioritizedRequests) {

// 检查剩余电量是否足够

if (!hasSufficientEnergyForNextRequest(totalEnergyUsed, initialEnergy)) {

log.warn("电量不足,跳过剩余请求");

responses.add(EnergyAwareResponse.energyExhaustedResponse());

break;

}

EnergyAwareResponse response = processWithEnergyConstraint(pr.getRequest());

responses.add(response);

totalEnergyUsed += response.getEnergyUsedJoules();

}

EnergyState finalEnergy = energyMonitor.getCurrentEnergyState();

return BatchEnergyResponse.builder()

.responses(responses)

.totalRequests(requests.size())

.processedRequests(responses.size())

.totalEnergyUsedJoules(totalEnergyUsed)

.initialBatteryPercentage(initialEnergy.getBatteryPercentage())

.finalBatteryPercentage(finalEnergy.getBatteryPercentage())

.batteryDrained(initialEnergy.getBatteryPercentage() -

finalEnergy.getBatteryPercentage())

.build();

}

private boolean hasSufficientEnergyForNextRequest(double energyUsedSoFar,

EnergyState energyState) {

// 估算剩余可用能源

double estimatedRemainingEnergy = energyState.getBatteryCapacityJoules() *

(energyState.getBatteryPercentage() / 100.0);

// 保留至少10%的电量用于系统运行

double minimumRequired = energyState.getBatteryCapacityJoules() * 0.1;

return (estimatedRemainingEnergy - energyUsedSoFar) > minimumRequired;

}

    private List<PrioritizedRequest> prioritizeRequests(List<String> requests) {
        List<PrioritizedRequest> prioritized = new ArrayList<>();
        for (String request : requests) {
            int priority = calculateRequestPriority(request);
            prioritized.add(new PrioritizedRequest(request, priority));
        }
        // Highest priority first
        prioritized.sort((a, b) -> Integer.compare(b.getPriority(), a.getPriority()));
        return prioritized;
    }

    private int calculateRequestPriority(String request) {
        // Simple keyword-based priority ("紧急" is Chinese for "urgent")
        if (request.toLowerCase().contains("urgent") ||
                request.contains("紧急")) {
            return 10;
        } else if ((request.contains("?") || request.contains("？")) && request.length() < 100) {
            return 5; // short questions (ASCII or full-width question mark) rank higher
        } else {
            return 1;
        }
    }

    // Data classes

    @Data
    @Builder
    public static class EnergyAwareResponse {
        private String response;
        private PowerMode powerMode;
        private double energyUsedJoules;
        private double batteryRemaining;
        private String processingStrategy;
        private long executionTimeMs;

        public static EnergyAwareResponse energyExhaustedResponse() {
            return EnergyAwareResponse.builder()
                    .response("Battery too low to complete processing")
                    .powerMode(PowerMode.CRITICAL_SAVING)
                    .energyUsedJoules(0)
                    .batteryRemaining(0)
                    .processingStrategy("energy-exhausted")
                    .executionTimeMs(0)
                    .build();
        }
    }

    @Data
    @Builder
    public static class ProcessingStrategy {
        private String strategyName;
        private boolean useLocalProcessing;
        private String maxModelSize;
        private boolean enableCaching;
        private boolean batchProcessing;
        private boolean disableUnnecessaryFeatures;
        private int maxResponseLength;
    }

    enum PowerMode {
        PERFORMANCE, BALANCED, POWER_SAVING, CRITICAL_SAVING
    }
}

4. Adaptive Task Allocation (Multi-Agent Systems)
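The allocator below ranks candidate workers by a weighted sum of normalized scores for load, capability match, latency, cost, and reliability, and then applies the configured selection strategy. The scoring step can be tried on its own. What follows is a minimal sketch that mirrors `calculateAgentScore`. It uses hypothetical `Worker` and `Weights` types in place of the allocator's classes and keeps the same normalization caps: 100% load, 10,000 ms latency, and $10 per task each score zero.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Sketch of weighted worker scoring; Worker and Weights are hypothetical, simplified types.
final class WorkerScoringSketch {

    static final class Worker {
        final String id;
        final double loadPercent;      // current load, 0..100
        final double capabilityMatch;  // share of required capabilities covered, 0..1
        final double avgLatencyMs;     // average latency in milliseconds
        final double costPerTask;      // USD per task
        final double reliability;      // 0..1

        Worker(String id, double loadPercent, double capabilityMatch,
               double avgLatencyMs, double costPerTask, double reliability) {
            this.id = id;
            this.loadPercent = loadPercent;
            this.capabilityMatch = capabilityMatch;
            this.avgLatencyMs = avgLatencyMs;
            this.costPerTask = costPerTask;
            this.reliability = reliability;
        }
    }

    static final class Weights {
        final double load, capability, latency, cost, reliability;

        Weights(double load, double capability, double latency, double cost, double reliability) {
            this.load = load;
            this.capability = capability;
            this.latency = latency;
            this.cost = cost;
            this.reliability = reliability;
        }
    }

    // Each criterion is normalized to 0..1 (higher is better), then combined linearly.
    static double score(Worker w, Weights weights) {
        double load = 1.0 - Math.min(w.loadPercent / 100.0, 1.0);
        double latency = 1.0 - Math.min(w.avgLatencyMs / 10_000.0, 1.0);
        double cost = 1.0 - Math.min(w.costPerTask / 10.0, 1.0);
        return load * weights.load
                + w.capabilityMatch * weights.capability
                + latency * weights.latency
                + cost * weights.cost
                + w.reliability * weights.reliability;
    }

    // Highest-scoring worker, or empty if the list is empty.
    static Optional<Worker> best(List<Worker> workers, Weights weights) {
        return workers.stream()
                .max(Comparator.comparingDouble((Worker w) -> score(w, weights)));
    }
}
```

The criteria are combined linearly, so the weights need not sum to 1. Only their ratios affect which worker ranks first.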

```java
import lombok.Builder;
import lombok.Data;
import lombok.extern.slf4j.Slf4j;

import java.util.*;
import java.util.concurrent.*;

@Slf4j
public class AdaptiveTaskAllocator {

    // CopyOnWriteArrayList: the health monitor removes and re-adds agents while
    // dispatcher threads iterate over the list
    private final CopyOnWriteArrayList<WorkerAgent> workerAgents;
    private final LoadBalancingStrategy loadBalancingStrategy;
    private final ExecutorService taskExecutor;

    // System-state monitoring (SystemMonitor, SystemStatus, AgentHealth and
    // LoadBalancingStrategy are not shown in this example)
    private final SystemMonitor systemMonitor;

    // Tasks waiting to be allocated
    private final BlockingQueue<Task> taskQueue;

    public AdaptiveTaskAllocator(List<WorkerAgent> workerAgents,
                                 LoadBalancingStrategy strategy) {
        // Copying also avoids UnsupportedOperationException when the caller
        // passes a fixed-size list such as Arrays.asList(...)
        this.workerAgents = new CopyOnWriteArrayList<>(workerAgents);
        this.loadBalancingStrategy = strategy;
        this.taskExecutor = Executors.newFixedThreadPool(Math.max(2, workerAgents.size() * 2));
        this.systemMonitor = new SystemMonitor();
        this.taskQueue = new LinkedBlockingQueue<>();

        // Start the dispatcher threads and periodic monitoring
        startWorkerThreads();
        startSystemMonitoring();
    }

    /**
     * Submit a task for adaptive allocation.
     */
    public CompletableFuture<TaskResult> submitTask(Task task) {
        log.info("Submitting task: {}", task.getId());
        CompletableFuture<TaskResult> future = new CompletableFuture<>();
        task.setResultFuture(future);
        try {
            taskQueue.put(task);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            future.completeExceptionally(e);
        }
        return future;
    }

    /**
     * Submit a batch of tasks.
     */
    public List<CompletableFuture<TaskResult>> submitTasks(List<Task> tasks) {
        List<CompletableFuture<TaskResult>> futures = new ArrayList<>();
        for (Task task : tasks) {
            futures.add(submitTask(task));
        }
        return futures;
    }

    /**
     * Allocate a task dynamically based on current system load.
     */
    private void allocateTask(Task task) {
        // Snapshot of the current system state
        SystemStatus systemStatus = systemMonitor.getCurrentStatus();

        // Pick the best-suited worker agent
        WorkerAgent selectedAgent = selectBestWorker(task, systemStatus);
        if (selectedAgent == null) {
            log.error("No worker agent available for task: {}", task.getId());
            task.getResultFuture().complete(TaskResult.failed("No worker node available"));
            return;
        }

        // Execute the task asynchronously
        taskExecutor.submit(() -> {
            try {
                TaskResult result = selectedAgent.executeTask(task);
                task.getResultFuture().complete(result);
                // Feed the outcome back into the system statistics
                systemMonitor.recordTaskCompletion(selectedAgent.getId(), result);
            } catch (Exception e) {
                log.error("Task execution failed: {}", task.getId(), e);
                // Reallocate, or fall back once retries are exhausted
                handleTaskFailure(task, selectedAgent, e);
            }
        });
    }

    /**
     * Select the best worker agent for a task.
     */
    private WorkerAgent selectBestWorker(Task task, SystemStatus systemStatus) {
        List<WorkerCandidate> candidates = new ArrayList<>();
        for (WorkerAgent agent : workerAgents) {
            if (isAgentSuitable(agent, task, systemStatus)) {
                double score = calculateAgentScore(agent, task, systemStatus);
                candidates.add(new WorkerCandidate(agent, score));
            }
        }
        if (candidates.isEmpty()) {
            return null;
        }

        // Highest score first, then apply the configured load-balancing strategy
        candidates.sort((a, b) -> Double.compare(b.getScore(), a.getScore()));
        switch (loadBalancingStrategy.getStrategy()) {
            case "weighted-round-robin":
                return selectWeightedRoundRobin(candidates);
            case "least-loaded":
                return selectLeastLoaded(candidates, systemStatus);
            case "latency-optimized":
                return selectLatencyOptimized(candidates, task, systemStatus);
            default:
                return candidates.get(0).getAgent();
        }
    }

    /**
     * Compute an agent's score as a weighted sum of normalized criteria.
     */
    private double calculateAgentScore(WorkerAgent agent, Task task, SystemStatus status) {
        double score = 0.0;

        // 1. Load score (lower load scores higher)
        double loadScore = 1.0 - Math.min(agent.getCurrentLoad() / 100.0, 1.0);
        score += loadScore * loadBalancingStrategy.getLoadWeight();

        // 2. Capability-match score
        double capabilityScore = calculateCapabilityMatch(agent, task);
        score += capabilityScore * loadBalancingStrategy.getCapabilityWeight();

        // 3. Latency score (lower latency scores higher; 10,000 ms or more scores 0)
        double latencyScore = 1.0 - Math.min(agent.getAverageLatency() / 10000.0, 1.0);
        score += latencyScore * loadBalancingStrategy.getLatencyWeight();

        // 4. Cost score (lower cost scores higher; $10 per task or more scores 0)
        double costScore = 1.0 - Math.min(agent.getCostPerTask() / 10.0, 1.0);
        score += costScore * loadBalancingStrategy.getCostWeight();

        // 5. Reliability score
        double reliabilityScore = agent.getReliability();
        score += reliabilityScore * loadBalancingStrategy.getReliabilityWeight();

        return score;
    }

    /**
     * Handle a failed task: retry on another agent, or fall back when retries are exhausted.
     */
    private void handleTaskFailure(Task task, WorkerAgent failedAgent, Exception e) {
        log.info("Task {} failed on agent {}, attempting reallocation",
                task.getId(), failedAgent.getId());

        // Mark the agent as temporarily unavailable
        failedAgent.markTemporarilyUnavailable();

        // Check the retry budget
        if (task.getRetryCount() < task.getMaxRetries()) {
            task.incrementRetryCount();
            // Resubmit the task after a short back-off
            taskExecutor.submit(() -> {
                try {
                    Thread.sleep(1000); // wait 1 second before retrying
                    allocateTask(task);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    task.getResultFuture().completeExceptionally(ie);
                }
            });
        } else {
            // Retries exhausted: use fallback processing
            TaskResult fallbackResult = executeFallbackProcessing(task);
            task.getResultFuture().complete(fallbackResult);
        }
    }

    /**
     * Fallback processing.
     */
    private TaskResult executeFallbackProcessing(Task task) {
        log.info("Running fallback processing for task {}", task.getId());
        try {
            // Minimal processing: return a truncated copy of the task description
            String description = task.getDescription();
            String simplifiedResult = "Simplified result after task failure. Original task: " +
                    description.substring(0, Math.min(50, description.length()));
            return TaskResult.builder()
                    .taskId(task.getId())
                    .success(true)
                    .result(simplifiedResult)
                    .processingAgent("fallback-processor")
                    .executionTimeMs(100)
                    .isFallbackResult(true)
                    .build();
        } catch (Exception e) {
            return TaskResult.failed("Fallback processing also failed: " + e.getMessage());
        }
    }

    /**
     * Start the dispatcher threads that pull tasks from the queue.
     */
    private void startWorkerThreads() {
        for (int i = 0; i < workerAgents.size(); i++) {
            Thread workerThread = new Thread(() -> {
                while (!Thread.currentThread().isInterrupted()) {
                    try {
                        Task task = taskQueue.poll(1, TimeUnit.SECONDS);
                        if (task != null) {
                            allocateTask(task);
                        }
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        break;
                    }
                }
            });
            workerThread.setName("TaskAllocator-Worker-" + i);
            workerThread.setDaemon(true);
            workerThread.start();
        }
        log.info("Started {} worker threads", workerAgents.size());
    }

    /**
     * Start periodic system monitoring.
     */
    private void startSystemMonitoring() {
        Timer monitoringTimer = new Timer(true);
        monitoringTimer.scheduleAtFixedRate(new TimerTask() {
            @Override
            public void run() {
                try {
                    // Collect system metrics
                    SystemStatus status = systemMonitor.collectSystemMetrics();

                    // Adjust the load-balancing strategy under high load
                    if (status.getAverageLoad() > 80) {
                        log.warn("System load too high ({}%), adjusting load-balancing strategy",
                                status.getAverageLoad());
                        loadBalancingStrategy.adjustWeightsForHighLoad();
                    }

                    // Detect and handle faulty nodes
                    detectAndHandleFaultyAgents(status);
                } catch (Exception e) {
                    log.error("System monitoring failed", e);
                }
            }
        }, 0, 5000); // monitor every 5 seconds
    }

    /**
     * Detect and handle faulty agents.
     */
    private void detectAndHandleFaultyAgents(SystemStatus status) {
        // Iterating a CopyOnWriteArrayList walks a snapshot, so removing here is safe
        for (WorkerAgent agent : workerAgents) {
            AgentHealth health = agent.checkHealth();
            if (!health.isHealthy()) {
                log.warn("Unhealthy agent detected: {}, status: {}",
                        agent.getId(), health.getStatus());
                if (health.getFailureCount() > 3) {
                    // Remove from the list of available agents
                    workerAgents.remove(agent);
                    log.error("Removed faulty agent from the available list: {}", agent.getId());
                    // Try to restart or recover it
                    attemptAgentRecovery(agent);
                }
            }
        }
    }

    /**
     * Try to recover a faulty agent.
     */
    private void attemptAgentRecovery(WorkerAgent agent) {
        taskExecutor.submit(() -> {
            try {
                log.info("Attempting to recover agent: {}", agent.getId());
                // Simulated recovery delay
                Thread.sleep(5000);
                boolean recovered = agent.recover();
                if (recovered) {
                    workerAgents.addIfAbsent(agent);
                    log.info("Agent {} recovered and rejoined the available list", agent.getId());
                }
            } catch (Exception e) {
                log.error("Agent recovery failed: {}", agent.getId(), e);
            }
        });
    }

    // Helper methods

    private boolean isAgentSuitable(WorkerAgent agent, Task task, SystemStatus status) {
        // Is the agent available?
        if (!agent.isAvailable()) {
            return false;
        }
        // Does it have the capabilities the task requires?
        if (!agent.hasCapability(task.getRequiredCapabilities())) {
            return false;
        }
        // Is its load below its threshold?
        if (agent.getCurrentLoad() > agent.getMaxLoadThreshold()) {
            return false;
        }
        return true;
    }

    private double calculateCapabilityMatch(WorkerAgent agent, Task task) {
        Set<String> agentCapabilities = agent.getCapabilities();
        Set<String> requiredCapabilities = task.getRequiredCapabilities();
        // No requirements: any agent is a full match (also avoids 0/0 = NaN)
        if (requiredCapabilities == null || requiredCapabilities.isEmpty()) {
            return 1.0;
        }
        long matched = requiredCapabilities.stream()
                .filter(agentCapabilities::contains)
                .count();
        return (double) matched / requiredCapabilities.size();
    }

    // Score-weighted random selection: a probabilistic approximation of weighted
    // round robin in which higher-scoring agents are picked more often
    private WorkerAgent selectWeightedRoundRobin(List<WorkerCandidate> candidates) {
        double totalWeight = candidates.stream()
                .mapToDouble(WorkerCandidate::getScore)
                .sum();
        if (totalWeight <= 0) {
            return candidates.get(0).getAgent();
        }
        double random = Math.random() * totalWeight;
        double current = 0;
        for (WorkerCandidate candidate : candidates) {
            current += candidate.getScore();
            if (random <= current) {
                return candidate.getAgent();
            }
        }
        return candidates.get(0).getAgent();
    }

    private WorkerAgent selectLeastLoaded(List<WorkerCandidate> candidates,
                                          SystemStatus status) {
        return candidates.stream()
                .min(Comparator.comparingDouble(c -> c.getAgent().getCurrentLoad()))
                .map(WorkerCandidate::getAgent)
                .orElse(null);
    }

    private WorkerAgent selectLatencyOptimized(List<WorkerCandidate> candidates,
                                               Task task,
                                               SystemStatus status) {
        return candidates.stream()
                .min(Comparator.comparingDouble(c -> estimateTaskLatency(c.getAgent(), task)))
                .map(WorkerCandidate::getAgent)
                .orElse(null);
    }

    private double estimateTaskLatency(WorkerAgent agent, Task task) {
        return agent.getAverageLatency() * (task.getEstimatedComplexity() / 10.0);
    }

    // Data classes and supporting types

    /**
     * Worker contract, inferred from the calls the allocator makes (not shown in the original).
     */
    public interface WorkerAgent {
        String getId();
        Set<String> getCapabilities();
        boolean hasCapability(Set<String> requiredCapabilities);
        boolean isAvailable();
        double getCurrentLoad();       // percent, 0..100
        double getMaxLoadThreshold();  // percent
        double getAverageLatency();    // ms
        double getCostPerTask();       // USD
        double getReliability();       // 0..1
        TaskResult executeTask(Task task) throws Exception;
        void markTemporarilyUnavailable();
        AgentHealth checkHealth();
        boolean recover();
    }

    @Data
    public static class WorkerCandidate {
        private final WorkerAgent agent;
        private final double score;
    }

    @Data
    @Builder
    public static class Task {
        private String id;
        private String description;
        private Set<String> requiredCapabilities;
        private int priority;
        private long timeoutMs;
        private int maxRetries;
        private int retryCount;
        private CompletableFuture<TaskResult> resultFuture;
        private double estimatedComplexity;

        public void incrementRetryCount() {
            this.retryCount++;
        }
    }

    @Data
    @Builder
    public static class TaskResult {
        private String taskId;
        private boolean success;
        private String result;
        private String processingAgent;
        private long executionTimeMs;
        private boolean isFallbackResult;
        private String error;

        public static TaskResult failed(String error) {
            return TaskResult.builder()
                    .success(false)
                    .error(error)
                    .build();
        }
    }
}
```

5. Usage Examples and Configuration
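The demo below expects simple queries to go to a cheaper model and complex ones to a stronger model. One way such a rule could work is sketched here, using the per-1K-token prices from the configuration in section 6. The capability tiers, the typical latencies, and the length/keyword heuristic are assumptions for illustration. They are not the logic inside `ResourceAwareRouter`.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Locale;
import java.util.Optional;

// Hypothetical complexity-based router; tiers, latencies and the heuristic are illustrative.
final class ComplexityRouterSketch {

    static final class ModelOption {
        final String name;
        final int tier;                // 1 = light, 2 = standard, 3 = strongest (assumed scale)
        final double costPer1kTokens;  // USD
        final long typicalLatencyMs;

        ModelOption(String name, int tier, double costPer1kTokens, long typicalLatencyMs) {
            this.name = name;
            this.tier = tier;
            this.costPer1kTokens = costPer1kTokens;
            this.typicalLatencyMs = typicalLatencyMs;
        }
    }

    // Crude heuristic: long prompts and analysis-style wording raise the required tier (1..3).
    static int requiredTier(String prompt) {
        int tier = 1;
        if (prompt.length() > 80) {
            tier++;
        }
        String lower = prompt.toLowerCase(Locale.ROOT);
        if (lower.contains("analy") || lower.contains("forecast") || lower.contains("compare")) {
            tier++;
        }
        return tier;
    }

    // Cheapest model that is strong enough and fits the latency budget.
    static Optional<ModelOption> route(List<ModelOption> models, int requiredTier, long maxLatencyMs) {
        return models.stream()
                .filter(m -> m.tier >= requiredTier)
                .filter(m -> m.typicalLatencyMs <= maxLatencyMs)
                .min(Comparator.comparingDouble((ModelOption m) -> m.costPer1kTokens));
    }
}
```

Choosing the cheapest model that clears both constraints keeps each routing decision easy to explain. In practice the latency figures would come from measurements, and complexity might be estimated by a classifier rather than by keywords.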

```java
import lombok.extern.slf4j.Slf4j;

import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// ResourceAwareRouter and LatencySensitiveAgent are the classes from the earlier sections;
// WorkerAgentImpl is an implementation of AdaptiveTaskAllocator.WorkerAgent that is not shown here.
@Slf4j
public class ResourceAwareOptimizationDemo {

    public static void main(String[] args) {
        log.info("=== Resource-aware optimization demo: start ===");

        // 1. Configure the resource-aware router
        ResourceAwareRouter.ResourceConfig resourceConfig =
                ResourceAwareRouter.ResourceConfig.builder()
                        .monthlyBudget(100.0) // $100 monthly budget
                        .defaultMaxLatencyMs(5000)
                        .energyConstrained(false)
                        .costWeight(0.4)
                        .latencyWeight(0.3)
                        .build();
        ResourceAwareRouter router = new ResourceAwareRouter(resourceConfig);

        // 2. Cost-optimized LLM usage
        System.out.println("=== Testing cost-optimized LLM usage ===");
        testCostOptimizedLLMUsage(router);

        // 3. Latency-sensitive operations
        System.out.println("\n=== Testing latency-sensitive operations ===");
        testLatencySensitiveOperations(router);

        // 4. Service-reliability fallback
        System.out.println("\n=== Testing service-reliability fallback ===");
        testServiceReliabilityFallback(router);

        // 5. Adaptive task allocation
        System.out.println("\n=== Testing adaptive task allocation ===");
        testAdaptiveTaskAllocation();

        log.info("=== Resource-aware optimization demo: end ===");
    }

    private static void testCostOptimizedLLMUsage(ResourceAwareRouter router) {
        // Simple query: should be routed to a cheaper model
        String simpleQuery = "What's the weather like today?";
        ResourceAwareRouter.RequestContext simpleContext =
                ResourceAwareRouter.RequestContext.builder()
                        .maxLatencyMs(2000)
                        .budgetCategory("low-cost")
                        .build();
        ResourceAwareRouter.RoutedResponse simpleResponse =
                router.routeRequest(simpleQuery, simpleContext);
        System.out.println("Simple query:");
        System.out.println("  Model used: " + simpleResponse.getSelectedModel());
        System.out.println("  Estimated cost: $" + simpleResponse.getEstimatedCost());
        System.out.println("  Response time: " + simpleResponse.getExecutionTimeMs() + "ms");

        // Complex query: should be routed to a more capable model
        String complexQuery = "Analyze global AI development trends in 2024, including technical " +
                "breakthroughs, market changes, policy impacts, and future forecasts, with detailed supporting data.";
        ResourceAwareRouter.RequestContext complexContext =
                ResourceAwareRouter.RequestContext.builder()
                        .maxLatencyMs(10000)
                        .budgetCategory("high-quality")
                        .build();
        ResourceAwareRouter.RoutedResponse complexResponse =
                router.routeRequest(complexQuery, complexContext);
        System.out.println("\nComplex query:");
        System.out.println("  Model used: " + complexResponse.getSelectedModel());
        System.out.println("  Estimated cost: $" + complexResponse.getEstimatedCost());
        System.out.println("  Response time: " + complexResponse.getExecutionTimeMs() + "ms");
        System.out.println("  Complexity score: " + complexResponse.getComplexityScore());
    }

    private static void testLatencySensitiveOperations(ResourceAwareRouter router) {
        LatencySensitiveAgent.LatencyConfig latencyConfig =
                LatencySensitiveAgent.LatencyConfig.builder()
                        .backgroundProcessingEnabled(true)
                        .defaultTimeoutMs(2000)
                        .quickResponseThresholdMs(500)
                        .build();
        LatencySensitiveAgent latencyAgent =
                new LatencySensitiveAgent(router, latencyConfig);

        // Tight latency requirement
        String timeSensitiveQuery = "What is the current stock price? I need it right now!";
        LatencySensitiveAgent.TimedResponse timedResponse =
                latencyAgent.processWithLatencyConstraint(timeSensitiveQuery, 1000);
        System.out.println("Latency-sensitive query (1000ms limit):");
        System.out.println("  Actual latency: " + timedResponse.getActualLatencyMs() + "ms");
        System.out.println("  Within budget: " + timedResponse.isWithinBudget());
        System.out.println("  Quick response: " + timedResponse.isQuickResponse());
        System.out.println("  Response: " +
                timedResponse.getResponse().substring(0, Math.min(100, timedResponse.getResponse().length())));

        // Batch processing with a shared deadline
        List<String> batchRequests = Arrays.asList(
                "Look up the AAPL stock price",
                "MSFT's latest earnings report",
                "GOOGL's market share",
                "AMZN's earnings forecast"
        );
        LatencySensitiveAgent.BatchTimedResponse batchResponse =
                latencyAgent.processBatchWithDeadlines(batchRequests, 3000);
        System.out.println("\nBatch results:");
        System.out.println("  Total requests: " + batchResponse.getTotalRequests());
        System.out.println("  Completed: " + batchResponse.getCompletedRequests());
        System.out.println("  Quick responses: " + batchResponse.getQuickResponses());
        System.out.println("  Total time: " + batchResponse.getTotalTimeMs() + "ms");
        System.out.println("  Within deadline: " + batchResponse.isWithinDeadline());
    }

    private static void testServiceReliabilityFallback(ResourceAwareRouter router) {
        // Simulate the primary model being unavailable
        System.out.println("Testing service-reliability fallback...");

        // Send several requests to trigger a possible fallback
        for (int i = 1; i <= 5; i++) {
            String query = "Test request " + i + ": explain the basic concepts of machine learning";
            ResourceAwareRouter.RequestContext context =
                    ResourceAwareRouter.RequestContext.builder()
                            .maxLatencyMs(3000)
                            .requireHighAvailability(true)
                            .build();
            ResourceAwareRouter.RoutedResponse response =
                    router.routeRequest(query, context);
            System.out.println("Request " + i + ":");
            System.out.println("  Model used: " + response.getSelectedModel());
            System.out.println("  Fallback used: " + response.isFallbackUsed());
            System.out.println("  Response length: " + response.getResponse().length());
        }
    }

    private static void testAdaptiveTaskAllocation() {
        // Create worker agents
        List<AdaptiveTaskAllocator.WorkerAgent> workers = Arrays.asList(
                new WorkerAgentImpl("agent-1", 4, 0.1),   // 4 cores, $0.10 per task
                new WorkerAgentImpl("agent-2", 2, 0.05),  // 2 cores, $0.05 per task
                new WorkerAgentImpl("agent-3", 8, 0.15)   // 8 cores, $0.15 per task
        );

        // Configure the load-balancing strategy
        AdaptiveTaskAllocator.LoadBalancingStrategy strategy =
                AdaptiveTaskAllocator.LoadBalancingStrategy.builder()
                        .strategy("least-loaded")
                        .loadWeight(0.4)
                        .capabilityWeight(0.3)
                        .latencyWeight(0.2)
                        .costWeight(0.1)
                        .reliabilityWeight(0.2)
                        .build();

        // Create the task allocator. Its executor uses non-daemon threads, so the
        // JVM keeps running after main() returns unless that executor is shut down.
        AdaptiveTaskAllocator allocator =
                new AdaptiveTaskAllocator(workers, strategy);

        // Create test tasks
        List<AdaptiveTaskAllocator.Task> tasks = new ArrayList<>();
        for (int i = 1; i <= 10; i++) {
            AdaptiveTaskAllocator.Task task = AdaptiveTaskAllocator.Task.builder()
                    .id("task-" + i)
                    .description("Data analysis task " + i)
                    .requiredCapabilities(new HashSet<>(Arrays.asList("data-processing", "analysis")))
                    .priority(i % 3) // priorities 0, 1, 2
                    .timeoutMs(10000)
                    .maxRetries(2)
                    .estimatedComplexity(Math.random() * 10)
                    .build();
            tasks.add(task);
        }

        // Submit the tasks
        List<CompletableFuture<AdaptiveTaskAllocator.TaskResult>> futures =
                allocator.submitTasks(tasks);

        // Wait for all results
        CompletableFuture.allOf(futures.toArray(new CompletableFuture[0]))
                .thenRun(() -> {
                    System.out.println("All tasks finished:");
                    int successCount = 0;
                    int fallbackCount = 0;
                    for (CompletableFuture<AdaptiveTaskAllocator.TaskResult> future : futures) {
                        try {
                            AdaptiveTaskAllocator.TaskResult result = future.get();
                            if (result.isSuccess()) {
                                successCount++;
                            }
                            if (result.isFallbackResult()) {
                                fallbackCount++;
                            }
                        } catch (Exception e) {
                            System.out.println("  Failed to get task result: " + e.getMessage());
                        }
                    }
                    System.out.println("  Successful tasks: " + successCount + "/" + tasks.size());
                    System.out.println("  Fallback results: " + fallbackCount + "/" + tasks.size());
                })
                .join();
    }
}
```

6. Monitoring and Configuration
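In the configuration below, the `fallback-to` keys under `models` form a chain: gpt-4 → gpt-3.5-turbo → claude-instant → local-llama → simple-rule. Here is a minimal sketch of walking such a chain. It assumes each model is wrapped in a hypothetical `Function<String, String>` client that throws on failure. Models with no registered client are skipped, and a repeated name stops the walk so that a misconfigured cycle cannot loop forever.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Hypothetical fallback-chain walker: models are plain functions that throw on failure.
final class FallbackChainSketch {

    static final class Result {
        final String model;   // model that produced the answer
        final String output;
        final int attempts;   // number of models actually called

        Result(String model, String output, int attempts) {
            this.model = model;
            this.output = output;
            this.attempts = attempts;
        }
    }

    private final Map<String, String> fallbackTo;                // model -> next model in the chain
    private final Map<String, Function<String, String>> models;  // model -> client

    FallbackChainSketch(Map<String, String> fallbackTo, Map<String, Function<String, String>> models) {
        this.fallbackTo = fallbackTo;
        this.models = models;
    }

    Result call(String startModel, String prompt) {
        Set<String> visited = new HashSet<>();
        RuntimeException lastError = null;
        int attempts = 0;
        String current = startModel;
        // visited.add(...) returns false for a repeated model, which ends a cyclic chain
        while (current != null && visited.add(current)) {
            Function<String, String> model = models.get(current);
            if (model != null) { // no client registered (e.g. disabled): skip to the next link
                attempts++;
                try {
                    return new Result(current, model.apply(prompt), attempts);
                } catch (RuntimeException e) {
                    lastError = e;
                }
            }
            current = fallbackTo.get(current);
        }
        throw new IllegalStateException("Every model in the fallback chain failed", lastError);
    }
}
```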

```yaml
# application-resource-aware.yml
resource-optimization:
  # Cost management
  cost-management:
    monthly-budget: 100.0          # USD
    alert-threshold: 0.8           # alert when 80% of the budget is used
    cost-tracking-enabled: true
    cost-breakdown-by-model: true

  # Latency
  latency:
    default-timeout-ms: 5000
    quick-response-threshold-ms: 1000
    timeout-handling: "fallback"   # fallback, queue, reject

  # Energy efficiency
  energy:
    enabled: false                 # enable only on edge devices
    battery-thresholds:
      warning: 30                  # warn at 30% battery
      critical: 15                 # critical at 15% battery
      shutdown: 5                  # shut down at 5% battery
    power-modes:
      performance:
        cpu-usage: 100
        network-usage: high
      balanced:
        cpu-usage: 70
        network-usage: medium
      power-saving:
        cpu-usage: 40
        network-usage: low
      critical-saving:
        cpu-usage: 20
        network-usage: minimal

  # Models
  models:
    gpt-4:
      enabled: true
      max-concurrent-requests: 5
      cost-per-1k-tokens: 0.03
      fallback-to: "gpt-3.5-turbo"
    gpt-3.5-turbo:
      enabled: true
      max-concurrent-requests: 20
      cost-per-1k-tokens: 0.0015
      fallback-to: "claude-instant"
    claude-instant:
      enabled: true
      max-concurrent-requests: 15
      cost-per-1k-tokens: 0.00163
      fallback-to: "local-llama"
    local-llama:
      enabled: true
      max-concurrent-requests: 3
      cost-per-1k-tokens: 0.0
      fallback-to: "simple-rule"

  # Load balancing
  load-balancing:
    strategy: "weighted-round-robin"
    weights:
      load: 0.4
      latency: 0.3
      cost: 0.2
      reliability: 0.1
    health-check-interval: 10000   # ms (10 seconds)
    failure-threshold: 3

  # Caching
  caching:
    enabled: true
    ttl-seconds: 300
    max-size: 1000
    eviction-policy: "LRU"

  # Monitoring
  monitoring:
    enabled: true
    metrics:
      - "cost-per-request"
      - "latency-percentile"
      - "model-usage"
      - "fallback-rate"
      - "energy-consumption"
    alerting:
      enabled: true
      channels:
        - "email"
        - "slack"
```

Summary of Key Implementation Features

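1. Intelligent model routing:
   - Automatically selects the best model based on task complexity
   - Weighs multiple dimensions such as cost, latency, and accuracy
   - Supports dynamic weight adjustment

2. Latency-sensitive processing:
   - Quick-response fallback mechanism
   - Asynchronous background processing
   - Batch-processing optimization

3. Energy-efficiency optimization:
   - Multi-level power modes
   - Choice between local and cloud processing
   - Caching and simplified processing

4. Adaptive task allocation:
   - Real-time load monitoring
   - Failure detection and recovery
   - Dynamic load balancing

5. Fallback and reliability:
   - Multi-level fallback mechanism
   - Graceful degradation
   - Service-continuity guarantees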
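The quick-response fallback in item 2 can be sketched with the JDK's `CompletableFuture.completeOnTimeout` (Java 9+). The full model call starts asynchronously. If it has not finished within the latency budget, a cheap placeholder answer is returned instead. `slowCall` and `quickAnswer` are hypothetical stand-ins for a real model call and a canned or cached reply. The slow call keeps running in the background, because `completeOnTimeout` only stops waiting for it.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical quick-response wrapper around a slow model call.
final class QuickResponseSketch {

    static final class Answer {
        final String text;
        final boolean quickFallback; // true if the latency budget expired first

        Answer(String text, boolean quickFallback) {
            this.text = text;
            this.quickFallback = quickFallback;
        }
    }

    // Returns the full answer if it arrives within budgetMs, otherwise the quick answer.
    // If slowCall throws before the deadline, join() rethrows it as a CompletionException.
    static Answer respondWithin(Supplier<String> slowCall, String quickAnswer, long budgetMs) {
        return CompletableFuture.supplyAsync(() -> new Answer(slowCall.get(), false))
                .completeOnTimeout(new Answer(quickAnswer, true), budgetMs, TimeUnit.MILLISECONDS)
                .join();
    }
}
```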

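This implementation shows how to build a resource-aware AI system with the LangChain4j framework that intelligently optimizes performance, cost, and reliability under different resource constraints.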
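For item 3, the `battery-thresholds` and `power-modes` in the `energy` configuration above can drive mode selection. The configuration does not say which threshold maps to which mode, so the mapping below is an assumption:

- Above the warning level, run BALANCED.
- At or below warning, run POWER_SAVING.
- At or below critical, run CRITICAL_SAVING.
- At or below shutdown, stop accepting work.
- On external power, run PERFORMANCE regardless of battery level.

The class name and the `SHUTDOWN` state are hypothetical.

```java
// Hypothetical battery-level-to-power-mode mapping; thresholds follow energy.battery-thresholds,
// but the threshold-to-mode assignment is an assumption.
final class PowerModeSelector {

    enum Mode { PERFORMANCE, BALANCED, POWER_SAVING, CRITICAL_SAVING, SHUTDOWN }

    private final double warning;
    private final double critical;
    private final double shutdown;

    PowerModeSelector(double warning, double critical, double shutdown) {
        if (!(warning > critical && critical > shutdown)) {
            throw new IllegalArgumentException("Expected warning > critical > shutdown");
        }
        this.warning = warning;
        this.critical = critical;
        this.shutdown = shutdown;
    }

    Mode select(double batteryPercent, boolean onExternalPower) {
        if (onExternalPower) {
            return Mode.PERFORMANCE; // assumption: battery level does not constrain a plugged-in device
        }
        if (batteryPercent <= shutdown) {
            return Mode.SHUTDOWN;
        }
        if (batteryPercent <= critical) {
            return Mode.CRITICAL_SAVING;
        }
        if (batteryPercent <= warning) {
            return Mode.POWER_SAVING;
        }
        return Mode.BALANCED;
    }

    // CPU caps taken from the power-modes section; 0 for SHUTDOWN is an assumption.
    static int cpuCapPercent(Mode mode) {
        switch (mode) {
            case PERFORMANCE:
                return 100;
            case BALANCED:
                return 70;
            case POWER_SAVING:
                return 40;
            case CRITICAL_SAVING:
                return 20;
            default:
                return 0;
        }
    }
}
```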

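Conclusion

Resource-aware optimization is essential for developing intelligent agents, because it lets them operate efficiently within real-world constraints. By managing computational, time, and financial resources, an agent can achieve the best balance of performance and cost-effectiveness. Techniques such as dynamic model switching, adaptive tool use, and context pruning are central to achieving these efficiencies. Advanced strategies, including learned resource-allocation policies and graceful degradation, make agents more adaptable and resilient across varying conditions. Building these optimization principles into agent design is key to creating scalable, robust, and sustainable AI systems.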
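Of the techniques named above, context pruning is the easiest to show in isolation. A minimal sketch keeps the system prompt plus the most recent messages that fit a token budget. It only drops older messages and does not summarize them. The token count is a crude whitespace-based estimate, which is an assumption; a real system would use the model's own tokenizer. The `ContextPruner` class is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical context pruner: keeps the system prompt and the newest messages within a budget.
final class ContextPruner {

    // Crude token estimate: whitespace-separated words.
    static int estimateTokens(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }

    /**
     * Keeps history.get(0) (the system prompt) plus the longest run of most recent
     * messages whose estimated tokens fit within maxTokens. The system prompt is
     * always kept, even if it alone exceeds the budget.
     */
    static List<String> prune(List<String> history, int maxTokens) {
        List<String> result = new ArrayList<>();
        if (history.isEmpty()) {
            return result;
        }
        String systemPrompt = history.get(0);
        int used = estimateTokens(systemPrompt);
        Deque<String> recent = new ArrayDeque<>();
        // Walk backwards from the newest message; stop at the first one that does not fit
        for (int i = history.size() - 1; i >= 1; i--) {
            int cost = estimateTokens(history.get(i));
            if (used + cost > maxTokens) {
                break;
            }
            used += cost;
            recent.addFirst(history.get(i));
        }
        result.add(systemPrompt);
        result.addAll(recent);
        return result;
    }
}
```

Stopping at the first message that does not fit keeps the retained history contiguous. Skipping over a long message to fit older, shorter ones would leave gaps in the conversation.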

References

- Google's Agent Development Kit (ADK): https://google.github.io/adk-docs/
- Gemini Flash 2.5 & Gemini 2.5 Pro: https://aistudio.google.com/
- OpenRouter: https://openrouter.ai/docs/quickstart