Step 1: Prepare the data

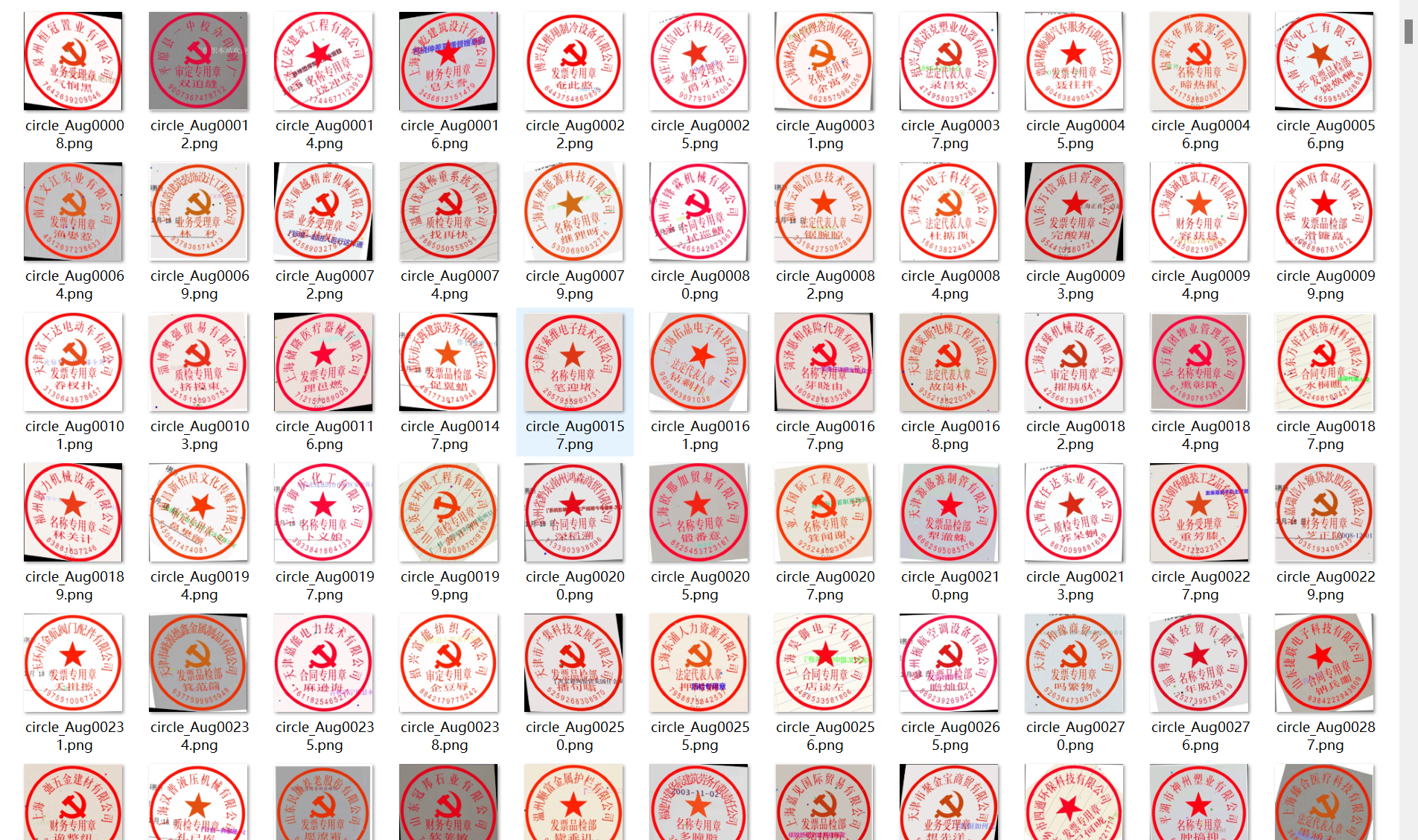

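Seal segmentation: a deep learning image segmentation dataset

The seal segmentation data can be used directly with common deep learning segmentation models such as FCN, U-Net, SegNet, DeepLabV1, DeepLabV2, DeepLabV3, DeepLabV3+, PSPNet, RefineNet, HRNet, Mask R-CNN, SegFormer, and DUCK-Net.

The dataset contains 2,000 image pairs in total (a seal image and its segmentation mask). The data quality is very high, good enough to be used even in production industrial projects.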
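Before training, the image pairs have to be matched up and split into training and validation sets. The sketch below shows one way to do that with the standard library only. The `images/` and `masks/` folders, the `seal_0000` file names, and the 10% validation fraction are all assumptions made for illustration; the article does not describe the dataset layout.

```python
import random
from pathlib import Path


def pair_by_stem(image_paths, mask_paths):
    """Match each image to the mask sharing its file stem; skip unmatched images."""
    masks = {Path(p).stem: p for p in mask_paths}
    return [
        (p, masks[Path(p).stem])
        for p in sorted(image_paths)
        if Path(p).stem in masks
    ]


def split_pairs(pairs, val_fraction=0.1, seed=0):
    """Shuffle deterministically, then split into (train, val) lists."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical file names standing in for the 2,000 image pairs.
images = [f"images/seal_{i:04d}.jpg" for i in range(2000)]
masks = [f"masks/seal_{i:04d}.png" for i in range(2000)]

pairs = pair_by_stem(images, masks)
train_pairs, val_pairs = split_pairs(pairs)
print(len(pairs), len(train_pairs), len(val_pairs))
```

Fixing the seed keeps the split reproducible between runs, so metrics from different experiments stay comparable.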

Step 2: Build the model

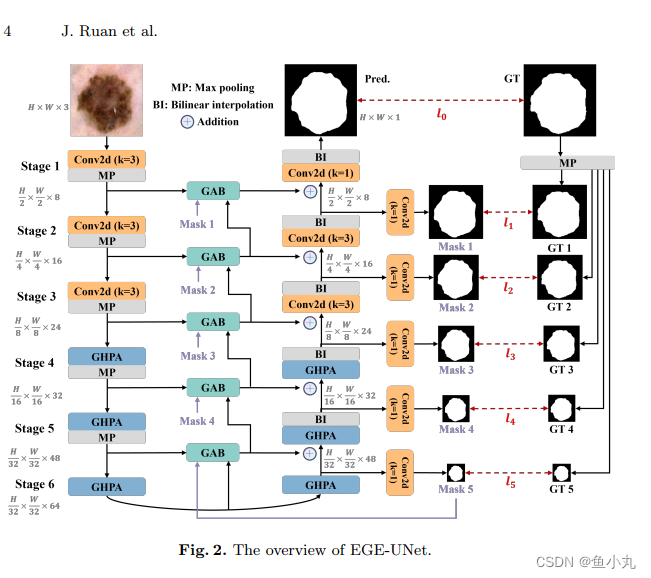

This article uses EGEUNet (EGE-UNet); its network structure is shown in the accompanying figure.
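As a rough aid to reading the network code in Step 3, the sketch below traces the feature-map shape after each of the six encoder stages, following the shape comments in that code (stages 1 to 5 each end with a 2x2 max pool, stage 6 keeps the resolution). The 256x256 input size is an assumption chosen for illustration. The divisibility check reflects that five halvings are later undone by five 2x upsampling steps, so sides that are not multiples of 32 can produce mismatched skip-connection shapes.

```python
C_LIST = [8, 16, 24, 32, 48, 64]  # default c_list of EGEUNet


def encoder_shapes(height, width, c_list=C_LIST):
    """Return (channels, H, W) after each of the six encoder stages."""
    if height % 32 or width % 32:
        raise ValueError("height and width should be multiples of 32")
    shapes = []
    h, w = height, width
    for stage, channels in enumerate(c_list):
        if stage < 5:  # stages 1-5 end with a 2x2 max pool; stage 6 does not
            h, w = h // 2, w // 2
        shapes.append((channels, h, w))
    return shapes


# Assumed 256x256 input; the article does not state the training resolution.
for stage, shape in enumerate(encoder_shapes(256, 256), start=1):
    print(f"encoder{stage}: {shape}")
```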

Step 3: Training code

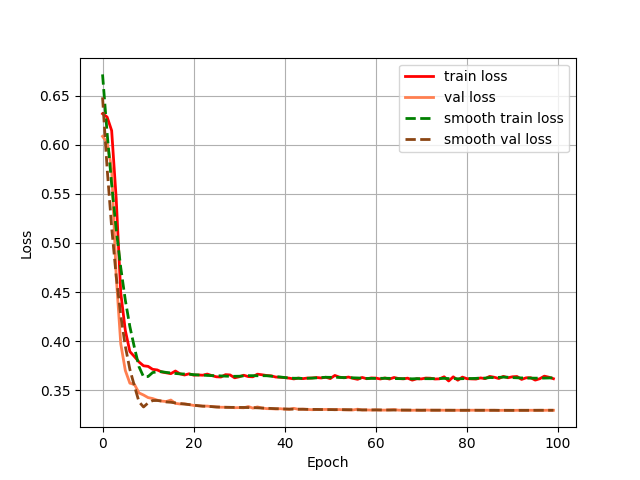

1) Loss function: dice_loss + focal_loss
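The article names the loss but does not show its code, so the following is only a minimal sketch of what dice_loss + focal_loss could look like for a single-channel mask. It assumes the inputs are probabilities, since the network returns `torch.sigmoid` outputs. The function names, `gamma=2.0`, `alpha=0.25`, the smoothing term `eps`, and the equal weighting of the two terms are assumptions, not the author's settings.

```python
import torch
import torch.nn.functional as F


def dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss on probabilities in [0, 1], averaged over the batch."""
    probs = probs.flatten(1)
    target = target.flatten(1)
    intersection = (probs * target).sum(dim=1)
    total = probs.sum(dim=1) + target.sum(dim=1)
    return (1 - (2 * intersection + eps) / (total + eps)).mean()


def focal_loss(probs, target, gamma=2.0, alpha=0.25):
    """Binary focal loss on probabilities; down-weights easy pixels."""
    bce = F.binary_cross_entropy(probs, target, reduction="none")
    p_t = probs * target + (1 - probs) * (1 - target)  # prob. of the true class
    alpha_t = alpha * target + (1 - alpha) * (1 - target)
    return (alpha_t * (1 - p_t) ** gamma * bce).mean()


def seg_loss(probs, target):
    """Sum of the two terms, equally weighted (an assumption)."""
    return dice_loss(probs, target) + focal_loss(probs, target)


# Toy batch: a 4x4 foreground square predicted with fairly high confidence.
target = torch.zeros(2, 1, 8, 8)
target[:, :, 2:6, 2:6] = 1.0
probs = torch.full_like(target, 0.1)
probs[:, :, 2:6, 2:6] = 0.9
print(seg_loss(probs, target).item())
```

The Dice term measures overlap over the whole mask, which helps when the seal covers only a small part of the image, while the focal term concentrates on pixels that are still misclassified.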

2) Network code:

python

class EGEUNet(nn.Module):

def __init__(self, num_classes=1, input_channels=3, c_list=[8, 16, 24, 32, 48, 64], bridge=True, gt_ds=False):

super().__init__()

self.bridge = bridge

self.gt_ds = gt_ds

self.encoder1 = nn.Sequential(

nn.Conv2d(input_channels, c_list[0], 3, stride=1, padding=1),

)

self.encoder2 = nn.Sequential(

nn.Conv2d(c_list[0], c_list[1], 3, stride=1, padding=1),

)

self.encoder3 = nn.Sequential(

nn.Conv2d(c_list[1], c_list[2], 3, stride=1, padding=1),

)

self.encoder4 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[2], c_list[3]),

)

self.encoder5 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[3], c_list[4]),

)

self.encoder6 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[4], c_list[5]),

)

if bridge:

self.GAB1 = group_aggregation_bridge(c_list[1], c_list[0])

self.GAB2 = group_aggregation_bridge(c_list[2], c_list[1])

self.GAB3 = group_aggregation_bridge(c_list[3], c_list[2])

self.GAB4 = group_aggregation_bridge(c_list[4], c_list[3])

self.GAB5 = group_aggregation_bridge(c_list[5], c_list[4])

print('group_aggregation_bridge was used')

if gt_ds:

self.gt_conv1 = nn.Sequential(nn.Conv2d(c_list[4], 1, 1))

self.gt_conv2 = nn.Sequential(nn.Conv2d(c_list[3], 1, 1))

self.gt_conv3 = nn.Sequential(nn.Conv2d(c_list[2], 1, 1))

self.gt_conv4 = nn.Sequential(nn.Conv2d(c_list[1], 1, 1))

self.gt_conv5 = nn.Sequential(nn.Conv2d(c_list[0], 1, 1))

print('gt deep supervision was used')

self.decoder1 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[5], c_list[4]),

)

self.decoder2 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[4], c_list[3]),

)

self.decoder3 = nn.Sequential(

Grouped_multi_axis_Hadamard_Product_Attention(c_list[3], c_list[2]),

)

self.decoder4 = nn.Sequential(

nn.Conv2d(c_list[2], c_list[1], 3, stride=1, padding=1),

)

self.decoder5 = nn.Sequential(

nn.Conv2d(c_list[1], c_list[0], 3, stride=1, padding=1),

)

self.ebn1 = nn.GroupNorm(4, c_list[0])

self.ebn2 = nn.GroupNorm(4, c_list[1])

self.ebn3 = nn.GroupNorm(4, c_list[2])

self.ebn4 = nn.GroupNorm(4, c_list[3])

self.ebn5 = nn.GroupNorm(4, c_list[4])

self.dbn1 = nn.GroupNorm(4, c_list[4])

self.dbn2 = nn.GroupNorm(4, c_list[3])

self.dbn3 = nn.GroupNorm(4, c_list[2])

self.dbn4 = nn.GroupNorm(4, c_list[1])

self.dbn5 = nn.GroupNorm(4, c_list[0])

self.final = nn.Conv2d(c_list[0], num_classes, kernel_size=1)

self.apply(self._init_weights)

def _init_weights(self, m):

if isinstance(m, nn.Linear):

trunc_normal_(m.weight, std=.02)

if isinstance(m, nn.Linear) and m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Conv1d):

n = m.kernel_size[0] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

elif isinstance(m, nn.Conv2d):

fan_out = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

fan_out //= m.groups

m.weight.data.normal_(0, math.sqrt(2.0 / fan_out))

if m.bias is not None:

m.bias.data.zero_()

def forward(self, x):

out = F.gelu(F.max_pool2d(self.ebn1(self.encoder1(x)), 2, 2))

t1 = out # b, c0, H/2, W/2

out = F.gelu(F.max_pool2d(self.ebn2(self.encoder2(out)), 2, 2))

t2 = out # b, c1, H/4, W/4

out = F.gelu(F.max_pool2d(self.ebn3(self.encoder3(out)), 2, 2))

t3 = out # b, c2, H/8, W/8

out = F.gelu(F.max_pool2d(self.ebn4(self.encoder4(out)), 2, 2))

t4 = out # b, c3, H/16, W/16

out = F.gelu(F.max_pool2d(self.ebn5(self.encoder5(out)), 2, 2))

t5 = out # b, c4, H/32, W/32

out = F.gelu(self.encoder6(out)) # b, c5, H/32, W/32

t6 = out

out5 = F.gelu(self.dbn1(self.decoder1(out))) # b, c4, H/32, W/32

if self.gt_ds:

gt_pre5 = self.gt_conv1(out5)

t5 = self.GAB5(t6, t5, gt_pre5)

gt_pre5 = F.interpolate(gt_pre5, scale_factor=32, mode='bilinear', align_corners=True)

else:

t5 = self.GAB5(t6, t5)

out5 = torch.add(out5, t5) # b, c4, H/32, W/32

out4 = F.gelu(F.interpolate(self.dbn2(self.decoder2(out5)), scale_factor=(2, 2), mode='bilinear',

align_corners=True)) # b, c3, H/16, W/16

if self.gt_ds:

gt_pre4 = self.gt_conv2(out4)

t4 = self.GAB4(t5, t4, gt_pre4)

gt_pre4 = F.interpolate(gt_pre4, scale_factor=16, mode='bilinear', align_corners=True)

else:

t4 = self.GAB4(t5, t4)

out4 = torch.add(out4, t4) # b, c3, H/16, W/16

out3 = F.gelu(F.interpolate(self.dbn3(self.decoder3(out4)), scale_factor=(2, 2), mode='bilinear',

align_corners=True)) # b, c2, H/8, W/8

if self.gt_ds:

gt_pre3 = self.gt_conv3(out3)

t3 = self.GAB3(t4, t3, gt_pre3)

gt_pre3 = F.interpolate(gt_pre3, scale_factor=8, mode='bilinear', align_corners=True)

else:

t3 = self.GAB3(t4, t3)

out3 = torch.add(out3, t3) # b, c2, H/8, W/8

out2 = F.gelu(F.interpolate(self.dbn4(self.decoder4(out3)), scale_factor=(2, 2), mode='bilinear',

align_corners=True)) # b, c1, H/4, W/4

if self.gt_ds:

gt_pre2 = self.gt_conv4(out2)

t2 = self.GAB2(t3, t2, gt_pre2)

gt_pre2 = F.interpolate(gt_pre2, scale_factor=4, mode='bilinear', align_corners=True)

else:

t2 = self.GAB2(t3, t2)

out2 = torch.add(out2, t2) # b, c1, H/4, W/4

out1 = F.gelu(F.interpolate(self.dbn5(self.decoder5(out2)), scale_factor=(2, 2), mode='bilinear',

align_corners=True)) # b, c0, H/2, W/2

if self.gt_ds:

gt_pre1 = self.gt_conv5(out1)

t1 = self.GAB1(t2, t1, gt_pre1)

gt_pre1 = F.interpolate(gt_pre1, scale_factor=2, mode='bilinear', align_corners=True)

else:

t1 = self.GAB1(t2, t1)

out1 = torch.add(out1, t1) # b, c0, H/2, W/2

out0 = F.interpolate(self.final(out1), scale_factor=(2, 2), mode='bilinear',

align_corners=True) # b, num_class, H, W

if self.gt_ds:

return (torch.sigmoid(gt_pre5), torch.sigmoid(gt_pre4), torch.sigmoid(gt_pre3), torch.sigmoid(gt_pre2),

torch.sigmoid(gt_pre1)), torch.sigmoid(out0)

else:

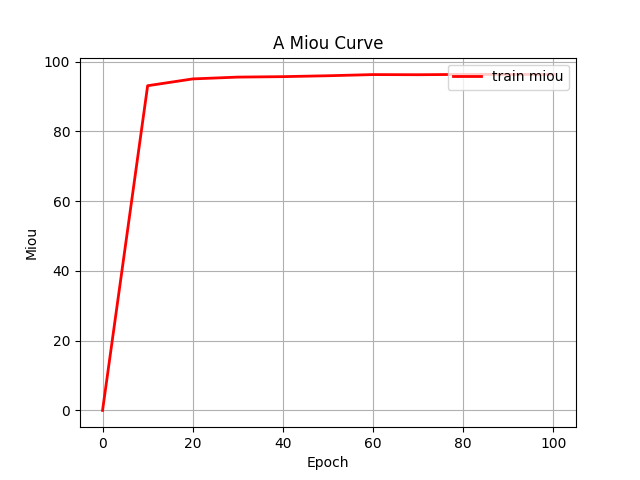

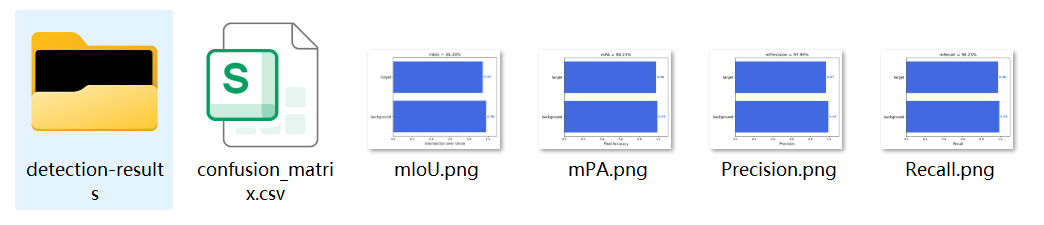

return torch.sigmoid(out0)第四步:统计一些指标(训练过程中的loss和miou)

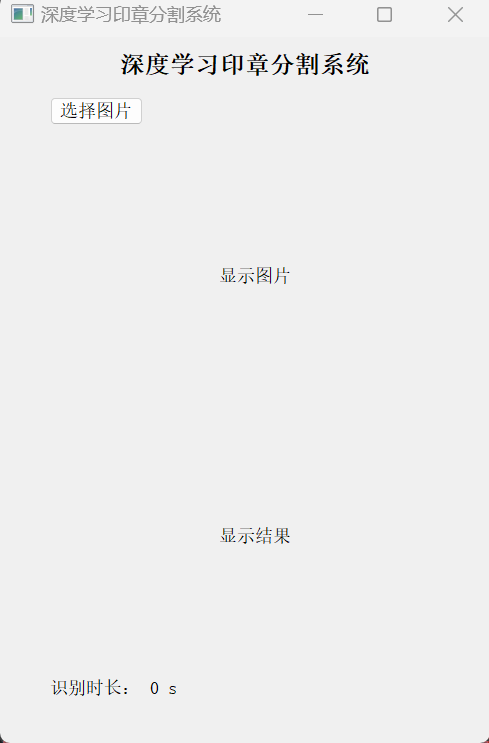

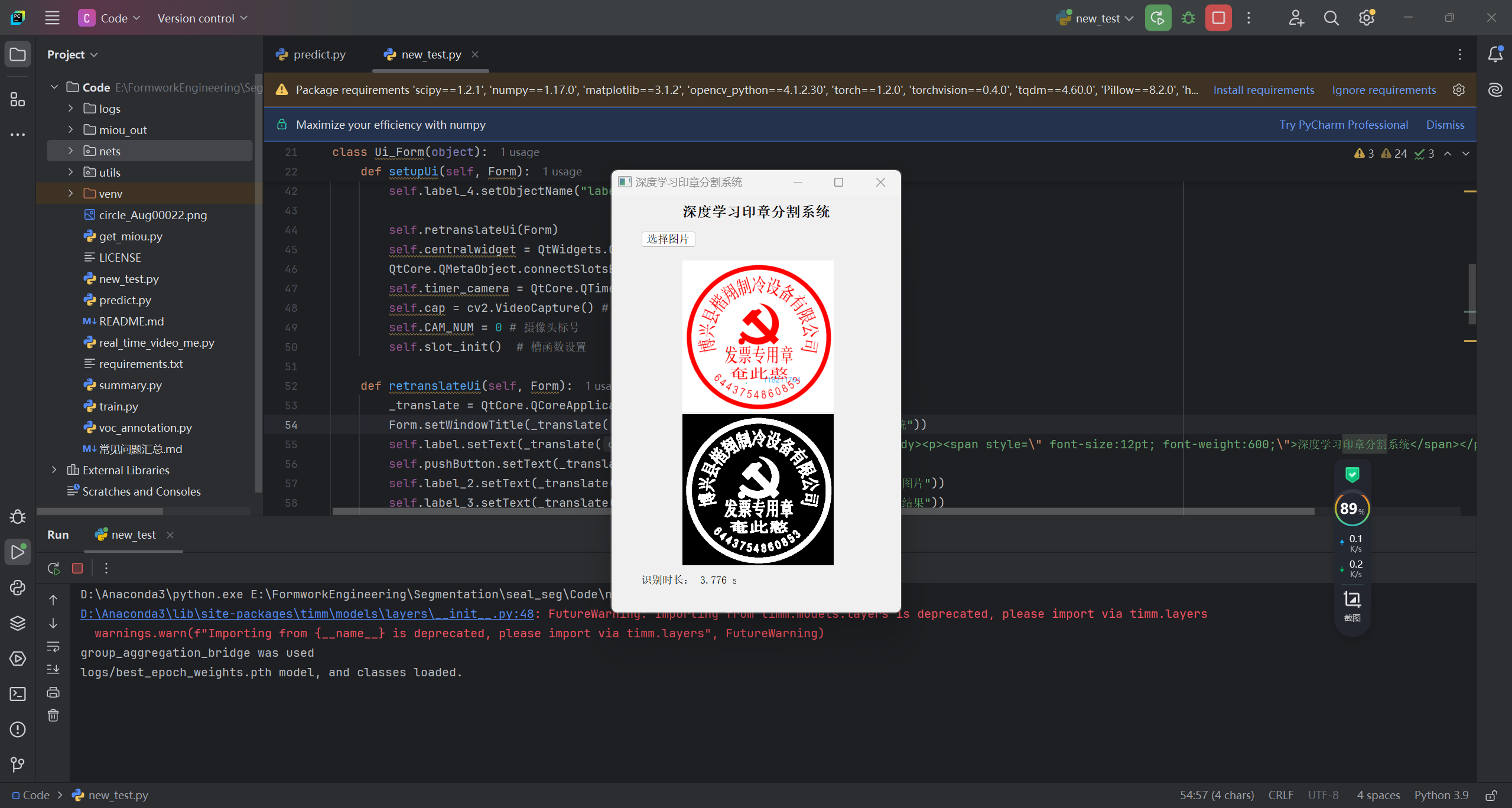
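The metric code itself is not shown in this article. As a minimal sketch, one common way to compute mIoU for binary masks is to average the IoU of the foreground and background classes after thresholding the predicted probabilities. The function name `mean_iou`, the 0.5 threshold, and the `history` dictionary are assumptions for illustration; the loss value appended below is a placeholder, not a measured result.

```python
import torch


def mean_iou(probs, target, threshold=0.5):
    """Average of foreground and background IoU over a batch of binary masks."""
    pred = probs >= threshold
    truth = target >= 0.5
    ious = []
    for p, t in ((pred, truth), (~pred, ~truth)):  # foreground, then background
        union = (p | t).sum().item()
        if union > 0:  # skip a class that is absent from both masks
            ious.append((p & t).sum().item() / union)
    return sum(ious) / len(ious)


# Toy example: the true seal is a 2x2 corner, the prediction covers two rows.
demo_target = torch.zeros(1, 1, 4, 4)
demo_target[..., :2, :2] = 1.0
demo_probs = torch.zeros(1, 1, 4, 4)
demo_probs[..., :2, :] = 0.9

# Values collected once per epoch can later be plotted as curves.
history = {"loss": [], "miou": []}
history["loss"].append(0.0)  # placeholder; use the real epoch loss in training
history["miou"].append(mean_iou(demo_probs, demo_target))
print(history["miou"][-1])
```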

Step 5: Build the GUI

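Step 6: Contents of the full project

The download link for the complete project files is given in the description of the demo and introduction video: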

https://www.bilibili.com/video/BV1Qpz4BfECo/