Preparation

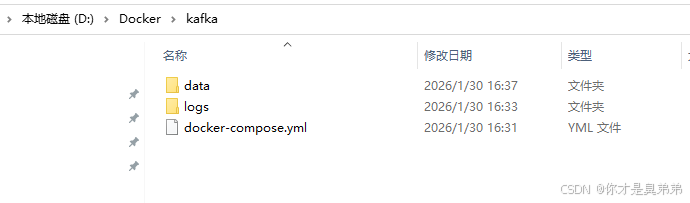

Create locally: under D:\Docker\kafka, create the `data` and `logs` directories and a `docker-compose.yml` file.
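The layout described above can be created from a shell in one step. This is a minimal sketch, assuming a Bash-compatible shell (for example Git Bash or WSL on Windows); `KAFKA_HOME` is a hypothetical variable used only here, and in PowerShell the equivalent would use `New-Item`.

```bash
# Base directory for the Kafka setup. KAFKA_HOME is a hypothetical variable
# for this sketch; in Git Bash, D:\Docker\kafka is written /d/Docker/kafka.
KAFKA_HOME="${KAFKA_HOME:-./kafka}"

# Create the data and log directories that docker-compose.yml bind-mounts.
mkdir -p "$KAFKA_HOME/data" "$KAFKA_HOME/logs"

# Create an empty docker-compose.yml if it does not exist yet.
touch "$KAFKA_HOME/docker-compose.yml"
```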

Pull the image

```bash
docker pull apache/kafka:4.1.1
```

Configure docker-compose.yml

```yaml
services:
  kafka:
    image: apache/kafka:4.1.1
    container_name: kafka-broker
    hostname: kafka-broker  # explicit hostname, used for network identification
    ports:
      - "9092:9092"
      - "9093:9093"
      - "9999:9999"  # JMX monitoring port
    volumes:
      - ./data:/opt/kafka/data
      - ./logs:/opt/kafka/logs
    environment:
      # ============ Basic KRaft configuration ============
      - KAFKA_NODE_ID=1
      - KAFKA_PROCESS_ROLES=broker,controller
      - KAFKA_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://${HOST_IP:-localhost}:9092
      - KAFKA_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CONTROLLER_QUORUM_BOOTSTRAP_SERVERS=localhost:9093
      - KAFKA_CONTROLLER_QUORUM_VOTERS=1@localhost:9093
      - KAFKA_LOG_DIRS=/opt/kafka/data
      - CLUSTER_ID=4P7L3w-8Sq1Q2vXcZ5dYyA
      # ============ Data safety and persistence (core) ============
      # Must be 1 on a single node
      - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1
      - KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR=1
      - KAFKA_MIN_INSYNC_REPLICAS=1
      # Durability settings (to avoid data loss)
      - KAFKA_TRANSACTION_STATE_LOG_MIN_ISR=1
      - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false  # never elect an out-of-sync replica as leader
      - KAFKA_AUTO_LEADER_REBALANCE_ENABLE=true
      # ============ Production-grade log retention ============
      # Combined policy: cleanup triggers when either limit is reached (time or size)
      - KAFKA_LOG_RETENTION_HOURS=168  # keep 7 days (adjust to the workload)
      - KAFKA_LOG_RETENTION_BYTES=53687091200  # keep 50 GB per partition (limits disk growth)
      - KAFKA_LOG_CLEANUP_POLICY=delete  # production setups usually use delete
      # Log segment tuning (affects performance and recovery time)
      - KAFKA_LOG_SEGMENT_BYTES=1073741824  # 1 GB segments (balances I/O and recovery)
      - KAFKA_LOG_ROLL_HOURS=168  # roll a new segment every 7 days
      - KAFKA_LOG_SEGMENT_MS=604800000  # 7 days (milliseconds)
      - KAFKA_LOG_RETENTION_CHECK_INTERVAL_MS=300000  # check every 5 minutes
      # Flush policy (balances performance and durability)
      - KAFKA_LOG_FLUSH_INTERVAL_MESSAGES=10000
      - KAFKA_LOG_FLUSH_INTERVAL_MS=1000
      - KAFKA_LOG_FLUSH_OFFSET_CHECKPOINT_INTERVAL_MS=60000
      # ============ Network and throughput tuning ============
      # Message size (adjust to the workload)
      - KAFKA_MESSAGE_MAX_BYTES=5242880  # 5 MB (the default of about 1 MB is often too small)
      - KAFKA_MAX_REQUEST_SIZE=5242880  # matches message.max.bytes (max.request.size itself is a producer setting)
      - KAFKA_REPLICA_FETCH_MAX_BYTES=5242880
      - KAFKA_FETCH_MAX_BYTES=5242880
      # Socket and network buffers
      - KAFKA_SOCKET_REQUEST_MAX_BYTES=5242880
      - KAFKA_SOCKET_RECEIVE_BUFFER_BYTES=102400
      - KAFKA_SOCKET_SEND_BUFFER_BYTES=102400
      # Connection and thread settings
      - KAFKA_NUM_NETWORK_THREADS=3  # network threads (default 3; can be raised toward the CPU core count)
      - KAFKA_NUM_IO_THREADS=8  # I/O threads (default 8)
      - KAFKA_NUM_REPLICA_FETCHERS=1  # 1 is enough on a single node
      - KAFKA_QUEUED_MAX_REQUESTS=500  # request queue size
      # ============ Topic and partition policy ============
      - KAFKA_NUM_PARTITIONS=3  # default partition count (3 or more recommended in production)
      - KAFKA_DEFAULT_REPLICATION_FACTOR=1  # must be 1 on a single node
      # Replica and ISR settings
      - KAFKA_REPLICA_LAG_TIME_MAX_MS=30000  # replica lag timeout
      - KAFKA_REPLICA_FETCH_WAIT_MAX_MS=500  # maximum wait for replica fetches
      - KAFKA_REPLICA_SOCKET_TIMEOUT_MS=30000
      # ============ Controller and KRaft tuning ============
      - KAFKA_CONTROLLER_SOCKET_TIMEOUT_MS=30000
      - KAFKA_CONTROLLER_QUORUM_REQUEST_TIMEOUT_MS=30000
      - KAFKA_CONTROLLER_QUORUM_RETRY_BACKOFF_MS=20
      - KAFKA_CONTROLLER_QUORUM_ELECTION_TIMEOUT_MS=1000
      - KAFKA_CONTROLLER_QUORUM_FETCH_TIMEOUT_MS=2000
      # ============ Production safety policy ============
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=false  # no automatic topic creation in production
      - KAFKA_DELETE_TOPIC_ENABLE=true  # allow deletion (with access control in place)
      - KAFKA_CONTROLLED_SHUTDOWN_ENABLE=true  # enable controlled shutdown
      - KAFKA_CONTROLLED_SHUTDOWN_MAX_RETRIES=3
      - KAFKA_CONTROLLED_SHUTDOWN_RETRY_BACKOFF_MS=5000
      # Connection limits
      - KAFKA_MAX_CONNECTIONS_PER_IP=2147483647
      - KAFKA_MAX_CONNECTIONS=2147483647
      - KAFKA_MAX_CONNECTION_CREATION_RATE=2147483647
      # ============ Memory and JVM tuning ============
      # Heap size (adjust to physical memory; 4-8 GB suggested)
      - KAFKA_HEAP_OPTS=-Xmx4G -Xms4G
      # Garbage collector settings (G1)
      - KAFKA_JVM_PERFORMANCE_OPTS=-XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -Djava.awt.headless=true
      # GC logging
      - KAFKA_GC_LOG_OPTS=-Xlog:gc*:file=/opt/kafka/logs/gc.log:time,level,tags:filecount=10,filesize=100M
      # ============ Monitoring and JMX ============
      - JMX_PORT=9999
      - KAFKA_JMX_OPTS=-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.port=9999 -Dcom.sun.management.jmxremote.rmi.port=9999 -Djava.rmi.server.hostname=0.0.0.0
      # Metrics collection
      - KAFKA_METRICS_ENABLED=true
      - KAFKA_METRICS_NUM_SAMPLES=2
      - KAFKA_METRICS_SAMPLE_WINDOW_MS=30000
      - KAFKA_METRICS_REPORTERS=org.apache.kafka.common.metrics.JmxReporter
      # ============ Startup and health check ============
      - KAFKA_STARTUP_MODE=KRaft  # states the startup mode explicitly
    restart: unless-stopped  # automatic restart policy
    healthcheck:
      test: ["CMD", "bash", "-c", "echo -e '\\n' | telnet localhost 9092 2>&1 | grep Connected || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 120s  # Kafka starts slowly; allow enough time
```
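The `apache/kafka` image derives broker properties from these environment variables: the `KAFKA_` prefix is dropped, the rest is lowercased, and single underscores become dots, so `KAFKA_LOG_RETENTION_HOURS` stands for `log.retention.hours`. The sketch below reproduces only that simple case (it ignores the image's escapes for literal underscores and dashes) and may help when checking each variable against the broker configuration reference. `env_to_property` is a hypothetical helper name.

```bash
# Convert an environment variable name such as KAFKA_LOG_RETENTION_HOURS
# into the broker property name it stands for (log.retention.hours).
# Only the simple case is handled: single underscores become dots.
env_to_property() {
  local name="${1#KAFKA_}"                    # drop the KAFKA_ prefix
  printf '%s\n' "$name" | tr 'A-Z_' 'a-z.'    # lowercase, '_' -> '.'
}

env_to_property KAFKA_LOG_RETENTION_HOURS   # prints log.retention.hours
env_to_property KAFKA_NUM_PARTITIONS        # prints num.partitions
```

A name that does not map to a property listed in the broker reference is most likely a client-side setting or has no effect on the broker.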

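```bash
# Stop and remove the old container. -v also removes named and anonymous volumes;
# the bind-mounted D:/Docker/kafka/data directory is not deleted, so clear it
# manually if a clean state is needed (confirm before deleting test data).
docker-compose down -v

# Start again with the modified configuration
docker-compose up -d
```

Scenario-based configuration comparison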

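| Dimension | Current general-purpose config | High throughput | Low latency | Data archiving |
|---|---|---|---|---|
| Primary goal | Balance | Maximize throughput | Minimize latency | Long-term, low-cost storage |
| Typical use | Regular business workloads | Log collection, big-data pipelines | Trading systems, real-time monitoring | Compliance storage, historical data |
| Message size | 5 MB | 10 MB+ | 1 MB | As needed |
| Retention | 7 days or 50 GB | Short (1-3 days) | Short (1-7 days) | Long (90+ days) or compaction |
| Flush policy | Balanced (1 s) | Batched (infrequent, 5 s) | Near real-time (frequent, 100 ms) | Batched (cost first) |
| Memory focus | Balanced (4 GB) | Large buffers | Low GC pauses | Standard |
| Monitoring focus | Standard metrics | Throughput, queues | Latency percentiles | Disk usage, compression ratio |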
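The byte and millisecond values used in the configurations on this page are plain unit conversions. The following Bash sketch derives them and also checks a value against the 32-bit limit that applies to `int`-typed broker properties such as `log.segment.bytes`. The helper names `gib_to_bytes`, `days_to_ms` and `fits_int32` are hypothetical.

```bash
# Convert GiB to bytes and days to milliseconds using shell arithmetic.
gib_to_bytes() { echo $(( $1 * 1024 * 1024 * 1024 )); }
days_to_ms()   { echo $(( $1 * 24 * 60 * 60 * 1000 )); }

# Succeed only if the value fits in a signed 32-bit integer.
fits_int32() { [ "$1" -le 2147483647 ]; }

gib_to_bytes 50   # prints 53687091200 (the 50 GB retention limit)
days_to_ms 7      # prints 604800000 (7 days)

# A full 2 GiB does not fit into an int-typed property.
fits_int32 "$(gib_to_bytes 2)" || echo "2 GiB exceeds the int range"
```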

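1. High-throughput optimization (for log collection and data pipelines)

```yaml
environment:
  # Keep the current base configuration...
  # ============ Core high-throughput settings ============
  # Larger message sizes and buffers
  - KAFKA_MESSAGE_MAX_BYTES=10485760  # 10 MB (large messages)
  - KAFKA_MAX_REQUEST_SIZE=10485760  # producer setting (max.request.size); set it on the client
  - KAFKA_REPLICA_FETCH_MAX_BYTES=10485760
  - KAFKA_FETCH_MAX_BYTES=10485760
  # Batching: linger.ms, batch.size and buffer.memory are producer client settings
  # and belong in the producer configuration; the broker does not apply them
  - KAFKA_LINGER_MS=5  # producer wait time (more batching)
  - KAFKA_BATCH_SIZE=1048576  # 1 MB batch size
  - KAFKA_BUFFER_MEMORY=536870912  # 512 MB producer buffer
  - KAFKA_COMPRESSION_TYPE=snappy  # compression improves throughput
  # Network and thread tuning
  - KAFKA_NUM_NETWORK_THREADS=8  # network threads (based on CPU core count)
  - KAFKA_NUM_IO_THREADS=16  # more I/O threads
  - KAFKA_QUEUED_MAX_REQUESTS=1000  # larger request queue
  - KAFKA_QUEUED_MAX_BYTES=1073741824  # 1 GB queue byte limit
  # Much larger socket buffers
  - KAFKA_SOCKET_RECEIVE_BUFFER_BYTES=1048576  # 1 MB receive buffer
  - KAFKA_SOCKET_SEND_BUFFER_BYTES=1048576  # 1 MB send buffer
  - KAFKA_SOCKET_REQUEST_MAX_BYTES=10485760  # 10 MB maximum request
  # Flush policy tilted toward throughput
  - KAFKA_LOG_FLUSH_INTERVAL_MESSAGES=100000  # flush every 100,000 messages
  - KAFKA_LOG_FLUSH_INTERVAL_MS=5000  # flush every 5 seconds (trades away some durability)
  - KAFKA_LOG_FLUSH_SCHEDULER_INTERVAL_MS=3000
  # Log segment tuning
  - KAFKA_LOG_SEGMENT_BYTES=2147483647  # just under 2 GB (fewer segments); the property is an int, so this is its maximum
  - KAFKA_LOG_ROLL_HOURS=24  # roll a new segment daily
  - KAFKA_LOG_INDEX_SIZE_MAX_BYTES=10485760  # 10 MB index files
  - KAFKA_LOG_INDEX_INTERVAL_BYTES=4096  # one index entry per 4 KB
  # Short retention (high-throughput data is usually kept briefly)
  - KAFKA_LOG_RETENTION_HOURS=72  # 3 days
  - KAFKA_LOG_RETENTION_BYTES=107374182400  # 100 GB per partition
  # JVM tuning (large heap, parallel GC)
  - KAFKA_HEAP_OPTS=-Xmx8G -Xms8G
  - KAFKA_JVM_PERFORMANCE_OPTS=-XX:+UseParallelGC -XX:+ParallelRefProcEnabled -XX:+UseStringDeduplication -XX:MaxGCPauseMillis=100 -Djava.awt.headless=true
  # Metrics (focus on throughput)
  - KAFKA_METRICS_NUM_SAMPLES=3
  - KAFKA_METRICS_SAMPLE_WINDOW_MS=10000  # 10-second sample window
```

2. Low-latency optimization (for trading systems and real-time monitoring)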

```yaml
environment:
  # Keep the current base configuration...
  # ============ Core low-latency settings ============
  # Less batching and waiting (linger.ms, batch.size and max.request.size are
  # producer client settings; set them in the producer configuration)
  - KAFKA_LINGER_MS=0  # no waiting (send immediately)
  - KAFKA_BATCH_SIZE=16384  # small 16 KB batches
  - KAFKA_MAX_REQUEST_SIZE=1048576  # 1 MB (less serialization time)
  - KAFKA_MESSAGE_MAX_BYTES=1048576  # 1 MB
  # Fast response settings
  - KAFKA_REPLICA_FETCH_WAIT_MAX_MS=100  # replicas wait 100 ms (default 500 ms)
  - KAFKA_REPLICA_FETCH_MIN_BYTES=1  # fetch as soon as data is available
  - KAFKA_REPLICA_FETCH_MAX_BYTES=1048576  # 1 MB
  - KAFKA_FETCH_MIN_BYTES=1  # consumers fetch immediately (consumer client setting)
  - KAFKA_FETCH_MAX_WAIT_MS=100  # consumers wait at most 100 ms (consumer client setting)
  # Controller tuning (important in KRaft mode)
  - KAFKA_CONTROLLER_QUORUM_ELECTION_TIMEOUT_MS=500  # 500 ms election timeout
  - KAFKA_CONTROLLER_QUORUM_FETCH_TIMEOUT_MS=1000  # 1 s fetch timeout
  - KAFKA_CONTROLLER_QUORUM_REQUEST_TIMEOUT_MS=2000  # 2 s request timeout
  - KAFKA_CONTROLLER_SOCKET_TIMEOUT_MS=5000
  # Frequent flushing (bounds data loss after a crash)
  - KAFKA_LOG_FLUSH_INTERVAL_MESSAGES=1000  # flush every 1,000 messages
  - KAFKA_LOG_FLUSH_INTERVAL_MS=100  # flush every 100 ms
  - KAFKA_LOG_FLUSH_OFFSET_CHECKPOINT_INTERVAL_MS=30000
  # Segment file tuning
  - KAFKA_LOG_SEGMENT_BYTES=268435456  # smaller 256 MB segments (faster recovery)
  - KAFKA_LOG_ROLL_HOURS=24
  - KAFKA_LOG_INDEX_INTERVAL_BYTES=2048  # one index entry per 2 KB (faster lookups)
  # Network thread tuning
  - KAFKA_NUM_NETWORK_THREADS=4
  - KAFKA_NUM_IO_THREADS=8
  - KAFKA_QUEUED_MAX_REQUESTS=100
  # Moderate socket buffers
  - KAFKA_SOCKET_RECEIVE_BUFFER_BYTES=65536  # 64 KB
  - KAFKA_SOCKET_SEND_BUFFER_BYTES=65536
  # Fewer GC pauses (key for low latency)
  - KAFKA_HEAP_OPTS=-Xmx4G -Xms4G
  - KAFKA_JVM_PERFORMANCE_OPTS=-XX:+UseZGC -XX:MaxGCPauseMillis=10 -XX:+UseNUMA -XX:+PerfDisableSharedMem -Djava.awt.headless=true
  - KAFKA_GC_LOG_OPTS=-Xlog:gc*:file=/opt/kafka/logs/gc.log:time,level,tags:filecount=5,filesize=50M
  # Metrics (focus on latency percentiles)
  - KAFKA_METRICS_NUM_SAMPLES=5
  - KAFKA_METRICS_SAMPLE_WINDOW_MS=5000  # 5-second sample window
```

3. Data archiving optimization (for compliance storage and historical data)

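```yaml
environment:
  # Keep the current base configuration...
  # ============ Core archiving settings ============
  # Log compaction (keeps the latest value per key, saves space)
  - KAFKA_LOG_CLEANUP_POLICY=compact,delete  # compaction plus deletion
  - KAFKA_LOG_RETENTION_HOURS=2160  # 90 days of long-term retention
  - KAFKA_LOG_RETENTION_BYTES=-1  # no size limit (or use a very large value)
  # Compression: the broker-wide codec is compression.type; LZ4 is fast,
  # while zstd or gzip usually produce smaller files
  - KAFKA_COMPRESSION_TYPE=lz4
  # Log cleaner tuning
  - KAFKA_LOG_CLEANER_THREADS=2  # cleaner threads
  - KAFKA_LOG_CLEANER_DEDUPE_BUFFER_SIZE=134217728  # 128 MB dedupe buffer
  - KAFKA_LOG_CLEANER_IO_BUFFER_SIZE=524288  # 512 KB I/O buffer
  - KAFKA_LOG_CLEANER_DELETE_RETENTION_MS=86400000  # keep delete markers (tombstones) for 1 day
  # Large segment files (fewer files, easier to archive)
  - KAFKA_LOG_SEGMENT_BYTES=1073741824  # 1 GB
  - KAFKA_LOG_ROLL_HOURS=168  # roll weekly
  - KAFKA_LOG_SEGMENT_MS=604800000  # 7 days
  - KAFKA_LOG_INDEX_INTERVAL_BYTES=16384  # one index entry per 16 KB
  # Flush policy tilted toward cost (less I/O)
  - KAFKA_LOG_FLUSH_INTERVAL_MESSAGES=1000000  # flush every 1,000,000 messages
  - KAFKA_LOG_FLUSH_INTERVAL_MS=10000  # flush every 10 seconds
  - KAFKA_LOG_FLUSH_SCHEDULER_INTERVAL_MS=60000
  # Memory-saving settings
  - KAFKA_HEAP_OPTS=-Xmx2G -Xms2G  # archiving does not need a large heap
  - KAFKA_NUM_NETWORK_THREADS=2
  - KAFKA_NUM_IO_THREADS=4
  # Larger messages allowed (archived records can be large)
  - KAFKA_MESSAGE_MAX_BYTES=10485760  # 10 MB
  - KAFKA_MAX_REQUEST_SIZE=10485760  # producer setting (max.request.size); set it on the client
  # Disable topic deletion (manual control)
  - KAFKA_DELETE_TOPIC_ENABLE=false
  # Disk usage monitoring (this name is not a documented broker property;
  # disk-space alerts normally come from external monitoring)
  - KAFKA_LOG_DIRS_FREE_SPACE_THRESHOLD=0.1  # alert when free disk space drops below 10%
```

Recommendations for combining scenarios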

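Scenario 1: high throughput + short-term archiving

```yaml
# On top of the high-throughput configuration, adjust retention:
- KAFKA_LOG_RETENTION_HOURS=168  # 7 days
- KAFKA_LOG_CLEANUP_POLICY=delete  # plain deletion
- KAFKA_COMPRESSION_TYPE=lz4  # compression saves space
```

Scenario 2: low latency + archiving of key data

```yaml
# On top of the low-latency configuration, adjust:
- KAFKA_LOG_CLEANUP_POLICY=compact  # compaction keeps the latest value per key
- KAFKA_LOG_RETENTION_HOURS=720  # 30 days
- KAFKA_LOG_FLUSH_INTERVAL_MS=500  # flush every 500 ms (a compromise)
```

Scenario 3: mixed workloads (the most common case)

```yaml
# Set different policies per topic, with these broker defaults:
- KAFKA_NUM_PARTITIONS=6  # more partitions for parallel processing
- KAFKA_MESSAGE_MAX_BYTES=5242880  # a moderate 5 MB
- KAFKA_LOG_RETENTION_HOURS=168  # 7 days
- KAFKA_LOG_SEGMENT_BYTES=1073741824  # 1 GB
- KAFKA_LOG_FLUSH_INTERVAL_MS=1000  # flush every second (the balance point)
```

Create a new topic in the Kafka container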
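Because the configuration on this page sets `KAFKA_AUTO_CREATE_TOPICS_ENABLE=false`, a topic has to be created explicitly before clients can use it. The following is a minimal sketch with `kafka-topics.sh`, wrapped in a hypothetical helper named `create_topic`. The two `-e` flags are an assumption about how the Kafka start scripts read the JMX variables: they blank those variables for this one command so the tool does not try to open the broker's JMX port.

```bash
# Create a topic explicitly inside the broker container.
# Arguments: topic name, partition count (default 1).
create_topic() {
  # Blank the JMX variables for this command only (assumption: the start
  # scripts skip the JMX port when JMX_PORT and KAFKA_JMX_OPTS are empty).
  docker exec -e JMX_PORT= -e KAFKA_JMX_OPTS= kafka-broker \
    /opt/kafka/bin/kafka-topics.sh --create --if-not-exists \
    --topic "$1" --partitions "${2:-1}" --replication-factor 1 \
    --bootstrap-server localhost:9092
}
```

For example, `create_topic quick-test 3` would create the `quick-test` topic with three partitions if it does not exist yet.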

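Start a console producer for the `quick-test` topic:

```bash
docker exec -it kafka-broker /opt/kafka/bin/kafka-console-producer.sh --topic quick-test --bootstrap-server localhost:9092
```

If the command fails with the error below, the cause is the JMX monitoring configuration in docker-compose.yml (the `"9999:9999"` JMX port): the command-line tool inherits the container's JMX settings and tries to bind port 9999, which the broker already holds. Either change the port or remove the JMX monitoring settings from the configuration.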

```text
PS D:\Docker\kafka> docker exec -it kafka-broker /opt/kafka/bin/kafka-console-producer.sh --topic quick-test --bootstrap-server localhost:9092
Error: JMX connector server communication error: service:jmx:rmi://kafka-broker:9999
jdk.internal.agent.AgentConfigurationError: java.rmi.server.ExportException: Port already in use: 9999; nested exception is:
        java.net.BindException: Address in use
        at jdk.management.agent/sun.management.jmxremote.ConnectorBootstrap.exportMBeanServer(Unknown Source)
        at jdk.management.agent/sun.management.jmxremote.ConnectorBootstrap.startRemoteConnectorServer(Unknown Source)
        at jdk.management.agent/jdk.internal.agent.Agent.startAgent(Unknown Source)
        at java.management.rmi/javax.management.remote.rmi.RMIConnectorServer.start(Unknown Source)
        ... 4 more
Caused by: java.net.BindException: Address in use
        at java.base/sun.nio.ch.Net.bind0(Native Method)
        at java.base/sun.nio.ch.Net.bind(Unknown Source)
        at java.base/sun.nio.ch.Net.bind(Unknown Source)
        at java.base/sun.nio.ch.NioSocketImpl.bind(Unknown Source)
        at java.base/java.net.ServerSocket.bind(Unknown Source)
        at java.base/java.net.ServerSocket.<init>(Unknown Source)
        at java.base/java.net.ServerSocket.<init>(Unknown Source)
        at java.rmi/sun.rmi.transport.tcp.TCPDirectSocketFactory.createServerSocket(Unknown Source)
        at java.rmi/sun.rmi.transport.tcp.TCPEndpoint.newServerSocket(Unknown Source)
        ... 13 more
PS D:\Docker\kafka>
```

Remove JMX monitoring:

```yaml
services:
  kafka:
    image: apache/kafka:4.1.1
    container_name: kafka-broker
    hostname: kafka-broker  # explicit hostname, used for network identification
    ports:
      - "9092:9092"
      - "9093:9093"
      # JMX port mapping removed: - "9999:9999"
    volumes:
      - ./data:/opt/kafka/data
      - ./logs:/opt/kafka/logs
    environment:
      # ============ Basic KRaft configuration ============
      - KAFKA_NODE_ID=1
      - KAFKA_PROCESS_ROLES=broker,controller
      - KAFKA_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://${HOST_IP:-localhost}:9092
      - KAFKA_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CONTROLLER_QUORUM_BOOTSTRAP_SERVERS=localhost:9093
      - KAFKA_CONTROLLER_QUORUM_VOTERS=1@localhost:9093
      - KAFKA_LOG_DIRS=/opt/kafka/data
      - CLUSTER_ID=4P7L3w-8Sq1Q2vXcZ5dYyA
      # ============ Data safety and persistence (core) ============
      # Must be 1 on a single node
      - KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1
      - KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR=1
      - KAFKA_MIN_INSYNC_REPLICAS=1
      # Durability settings (to avoid data loss)
      - KAFKA_TRANSACTION_STATE_LOG_MIN_ISR=1
      - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false  # never elect an out-of-sync replica as leader
      - KAFKA_AUTO_LEADER_REBALANCE_ENABLE=true
      # ============ Production-grade log retention ============
      # Combined policy: cleanup triggers when either limit is reached (time or size)
      - KAFKA_LOG_RETENTION_HOURS=168  # keep 7 days (adjust to the workload)
      - KAFKA_LOG_RETENTION_BYTES=53687091200  # keep 50 GB per partition (limits disk growth)
      - KAFKA_LOG_CLEANUP_POLICY=delete  # production setups usually use delete
      # Log segment tuning (affects performance and recovery time)
      - KAFKA_LOG_SEGMENT_BYTES=1073741824  # 1 GB segments (balances I/O and recovery)
      - KAFKA_LOG_ROLL_HOURS=168  # roll a new segment every 7 days
      - KAFKA_LOG_SEGMENT_MS=604800000  # 7 days (milliseconds)
      - KAFKA_LOG_RETENTION_CHECK_INTERVAL_MS=300000  # check every 5 minutes
      # Flush policy (balances performance and durability)
      - KAFKA_LOG_FLUSH_INTERVAL_MESSAGES=10000
      - KAFKA_LOG_FLUSH_INTERVAL_MS=1000
      - KAFKA_LOG_FLUSH_OFFSET_CHECKPOINT_INTERVAL_MS=60000
      # ============ Network and throughput tuning ============
      # Message size (adjust to the workload)
      - KAFKA_MESSAGE_MAX_BYTES=5242880  # 5 MB (the default of about 1 MB is often too small)
      - KAFKA_MAX_REQUEST_SIZE=5242880  # matches message.max.bytes (max.request.size itself is a producer setting)
      - KAFKA_REPLICA_FETCH_MAX_BYTES=5242880
      - KAFKA_FETCH_MAX_BYTES=5242880
      # Socket and network buffers
      - KAFKA_SOCKET_REQUEST_MAX_BYTES=5242880
      - KAFKA_SOCKET_RECEIVE_BUFFER_BYTES=102400
      - KAFKA_SOCKET_SEND_BUFFER_BYTES=102400
      # Connection and thread settings
      - KAFKA_NUM_NETWORK_THREADS=3  # network threads (default 3; can be raised toward the CPU core count)
      - KAFKA_NUM_IO_THREADS=8  # I/O threads (default 8)
      - KAFKA_NUM_REPLICA_FETCHERS=1  # 1 is enough on a single node
      - KAFKA_QUEUED_MAX_REQUESTS=500  # request queue size
      # ============ Topic and partition policy ============
      - KAFKA_NUM_PARTITIONS=3  # default partition count (3 or more recommended in production)
      - KAFKA_DEFAULT_REPLICATION_FACTOR=1  # must be 1 on a single node
      # Replica and ISR settings
      - KAFKA_REPLICA_LAG_TIME_MAX_MS=30000  # replica lag timeout
      - KAFKA_REPLICA_FETCH_WAIT_MAX_MS=500  # maximum wait for replica fetches
      - KAFKA_REPLICA_SOCKET_TIMEOUT_MS=30000
      # ============ Controller and KRaft tuning ============
      - KAFKA_CONTROLLER_SOCKET_TIMEOUT_MS=30000
      - KAFKA_CONTROLLER_QUORUM_REQUEST_TIMEOUT_MS=30000
      - KAFKA_CONTROLLER_QUORUM_RETRY_BACKOFF_MS=20
      - KAFKA_CONTROLLER_QUORUM_ELECTION_TIMEOUT_MS=1000
      - KAFKA_CONTROLLER_QUORUM_FETCH_TIMEOUT_MS=2000
      # ============ Production safety policy ============
      - KAFKA_AUTO_CREATE_TOPICS_ENABLE=false  # no automatic topic creation in production
      - KAFKA_DELETE_TOPIC_ENABLE=true  # allow deletion (with access control in place)
      - KAFKA_CONTROLLED_SHUTDOWN_ENABLE=true  # enable controlled shutdown
      - KAFKA_CONTROLLED_SHUTDOWN_MAX_RETRIES=3
      - KAFKA_CONTROLLED_SHUTDOWN_RETRY_BACKOFF_MS=5000
      # Connection limits
      - KAFKA_MAX_CONNECTIONS_PER_IP=2147483647
      - KAFKA_MAX_CONNECTIONS=2147483647
      - KAFKA_MAX_CONNECTION_CREATION_RATE=2147483647
      # ============ Memory and JVM tuning ============
      # Heap size (adjust to physical memory; 4-8 GB suggested)
      - KAFKA_HEAP_OPTS=-Xmx4G -Xms4G
      # Garbage collector settings (G1)
      - KAFKA_JVM_PERFORMANCE_OPTS=-XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -Djava.awt.headless=true
      # GC logging
      - KAFKA_GC_LOG_OPTS=-Xlog:gc*:file=/opt/kafka/logs/gc.log:time,level,tags:filecount=10,filesize=100M
      # ============ Startup and health check ============
      - KAFKA_STARTUP_MODE=KRaft  # states the startup mode explicitly
    restart: unless-stopped  # automatic restart policy
    healthcheck:
      test: ["CMD", "bash", "-c", "echo -e '\\n' | telnet localhost 9092 2>&1 | grep Connected || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 120s  # Kafka starts slowly; allow enough time
```

Run the commands:

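```powershell
# 1. Stop and remove the existing container
docker-compose down -v

# 2. Clean up leftovers (removes stopped containers, unused networks,
#    dangling images and build cache across the whole Docker host)
docker system prune -f

# 3. Start again
docker-compose up -d

# 4. Wait for startup (about 40 seconds)
Start-Sleep -Seconds 40

# 5. Test
docker exec kafka-broker /opt/kafka/bin/kafka-topics.sh --create --topic test-no-jmx --partitions 1 --replication-factor 1 --bootstrap-server localhost:9092
```

Verify that it succeeded: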

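```text
PS D:\Docker\kafka> docker exec kafka-broker /opt/kafka/bin/kafka-topics.sh --create --topic test-no-jmx --partitions 1 --replication-factor 1 --bootstrap-server localhost:9092
Created topic test-no-jmx.
PS D:\Docker\kafka>
```

| Check | Status | Notes |
|---|---|---|
| Command execution | ✅ OK | The command was received and processed by Kafka |
| Topic creation | ✅ OK | The new topic test-no-jmx was created |
| Cluster connection | ✅ OK | localhost:9092 is reachable |
| Authorization | ✅ OK | The client is allowed to create topics |
| Configuration | ✅ OK | The broker started with this configuration and accepts requests |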
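Topic creation only exercises the admin path. A round trip through the console producer and consumer gives a slightly stronger check. The sketch below assumes the container name and paths used above; `smoke_test` is a hypothetical helper, and the topic passed to it must already exist because automatic topic creation is disabled.

```bash
# Round-trip check: write one message to a topic and read it back.
# Arguments: topic name (must already exist), message text (default "hello").
smoke_test() {
  local topic="$1" message="${2:-hello}"
  local bin=/opt/kafka/bin

  # -i keeps stdin open so the piped message reaches the console producer.
  printf '%s\n' "$message" | docker exec -i kafka-broker \
    "$bin/kafka-console-producer.sh" --topic "$topic" \
    --bootstrap-server localhost:9092

  # Read one message from the start of the topic; give up after 10 seconds.
  docker exec kafka-broker "$bin/kafka-console-consumer.sh" --topic "$topic" \
    --from-beginning --max-messages 1 --timeout-ms 10000 \
    --bootstrap-server localhost:9092
}
```

Running `smoke_test test-no-jmx "hello kafka"` should echo the message back when the broker accepts both writes and reads.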