目录

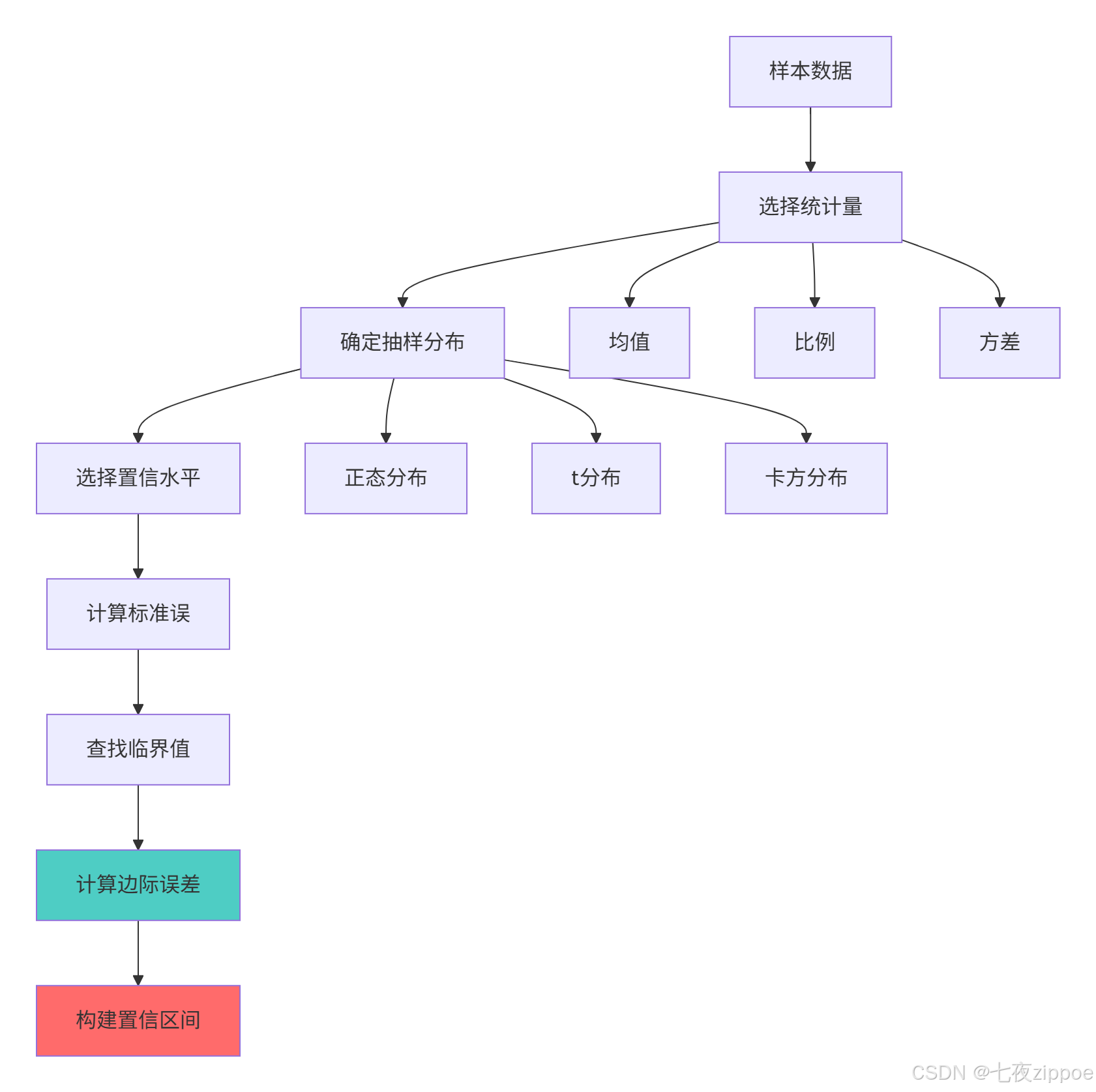

[1 引言:为什么统计分析是数据科学的基石](#1 引言:为什么统计分析是数据科学的基石)

[1.1 统计分析的核心价值](#1.1 统计分析的核心价值)

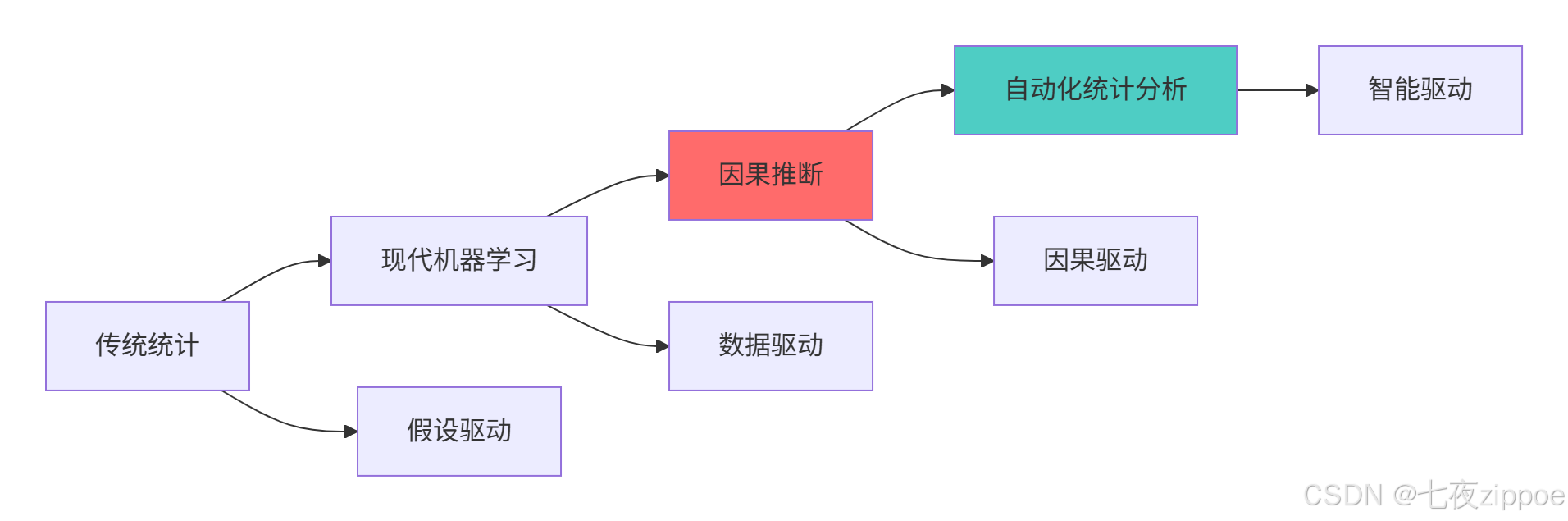

[1.2 统计分析技术演进](#1.2 统计分析技术演进)

[2 描述统计:数据的基础理解](#2 描述统计:数据的基础理解)

[2.1 描述统计的核心指标](#2.1 描述统计的核心指标)

[2.1.1 集中趋势度量](#2.1.1 集中趋势度量)

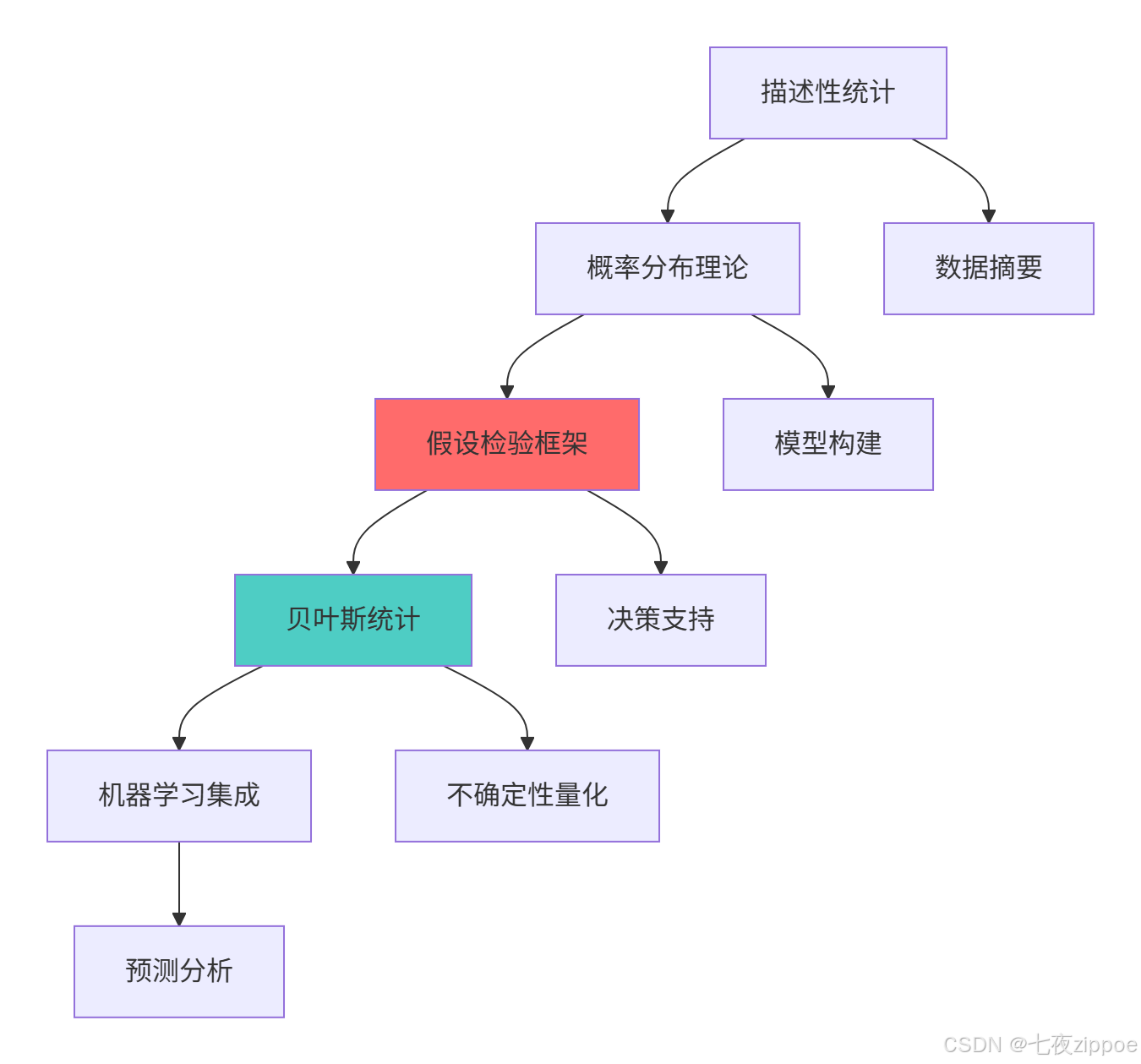

[2.1.2 描述统计架构图](#2.1.2 描述统计架构图)

[3 概率分布:统计模型的基石](#3 概率分布:统计模型的基石)

[3.1 常用概率分布深度解析](#3.1 常用概率分布深度解析)

[3.1.1 离散型概率分布](#3.1.1 离散型概率分布)

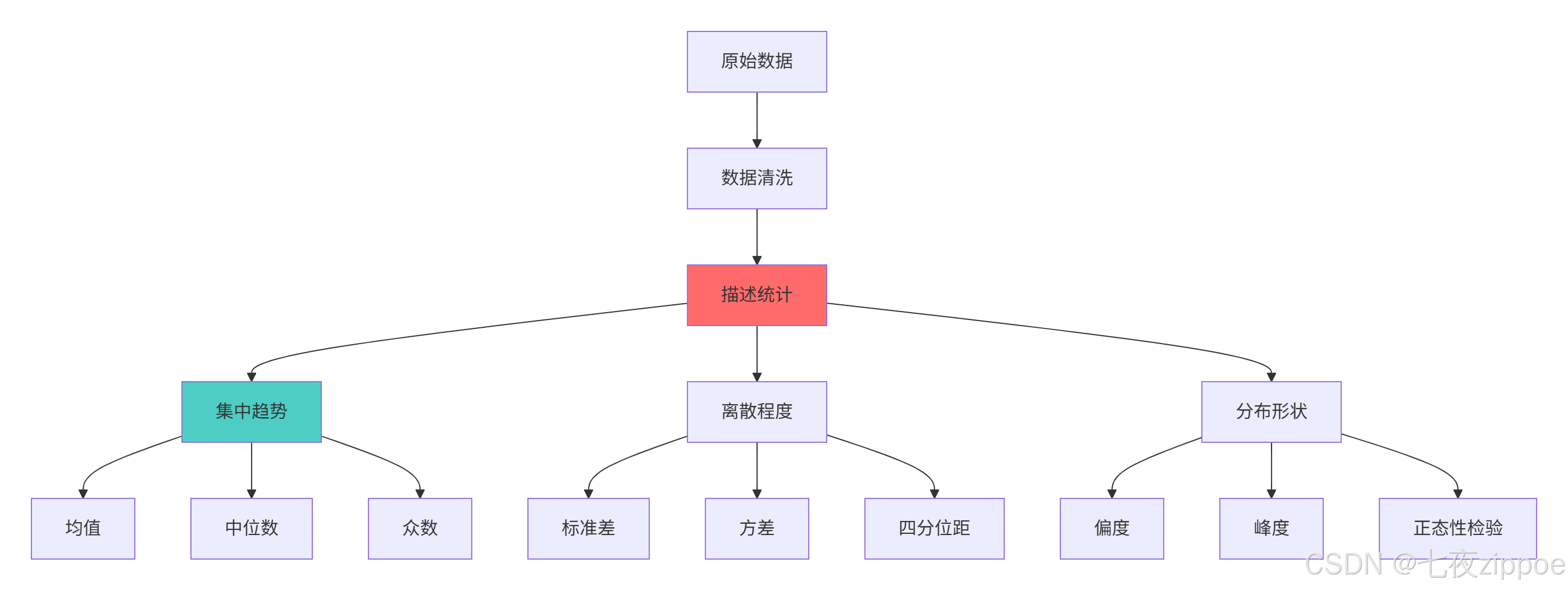

[3.1.2 概率分布关系图](#3.1.2 概率分布关系图)

[4 置信区间:估计的不确定性量化](#4 置信区间:估计的不确定性量化)

[4.1 置信区间原理与实践](#4.1 置信区间原理与实践)

[4.1.1 置信区间计算方法](#4.1.1 置信区间计算方法)

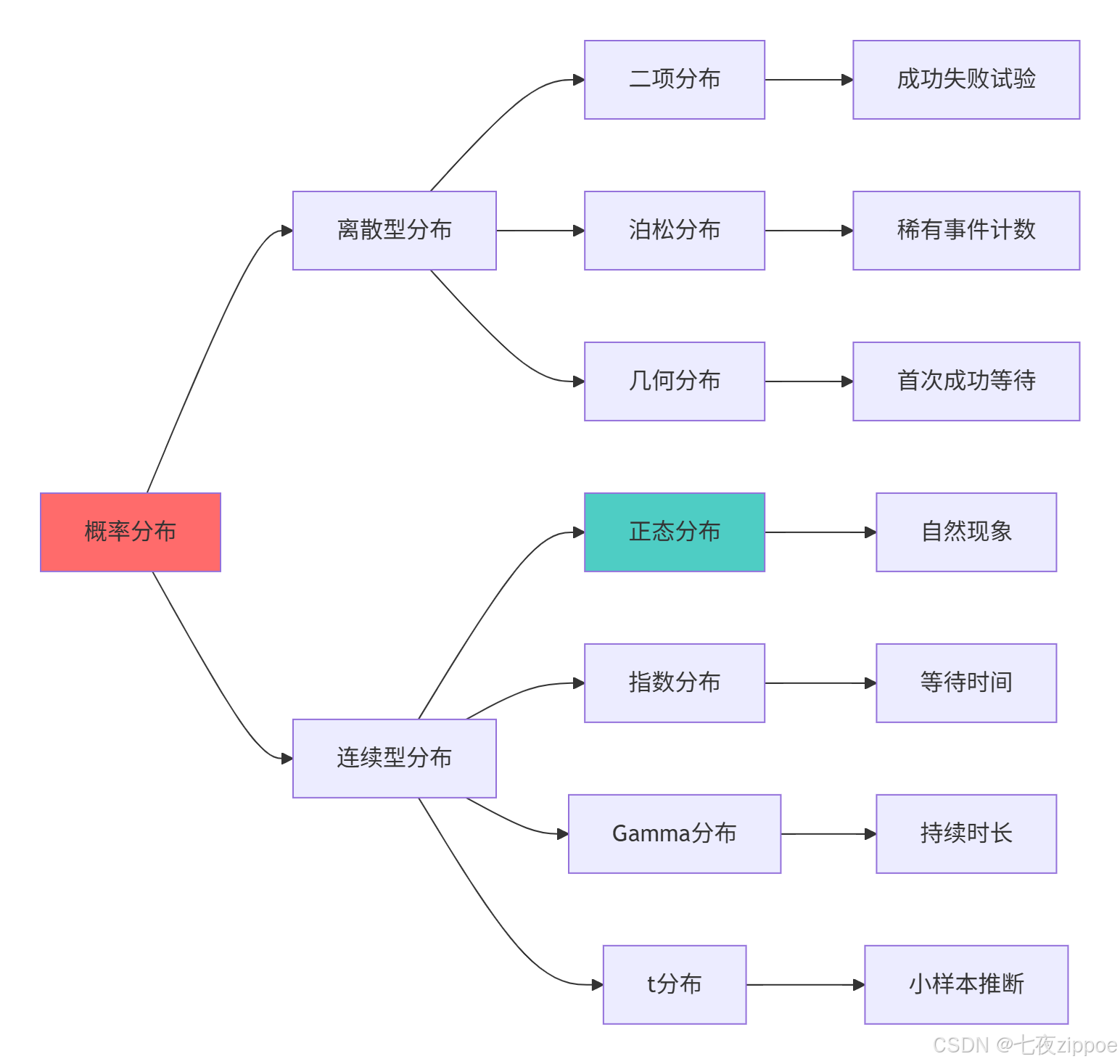

[4.1.2 置信区间构建流程图](#4.1.2 置信区间构建流程图)

[5 假设检验:统计决策的科学基础](#5 假设检验:统计决策的科学基础)

[5.1 假设检验完整框架](#5.1 假设检验完整框架)

[5.1.1 参数检验方法](#5.1.1 参数检验方法)

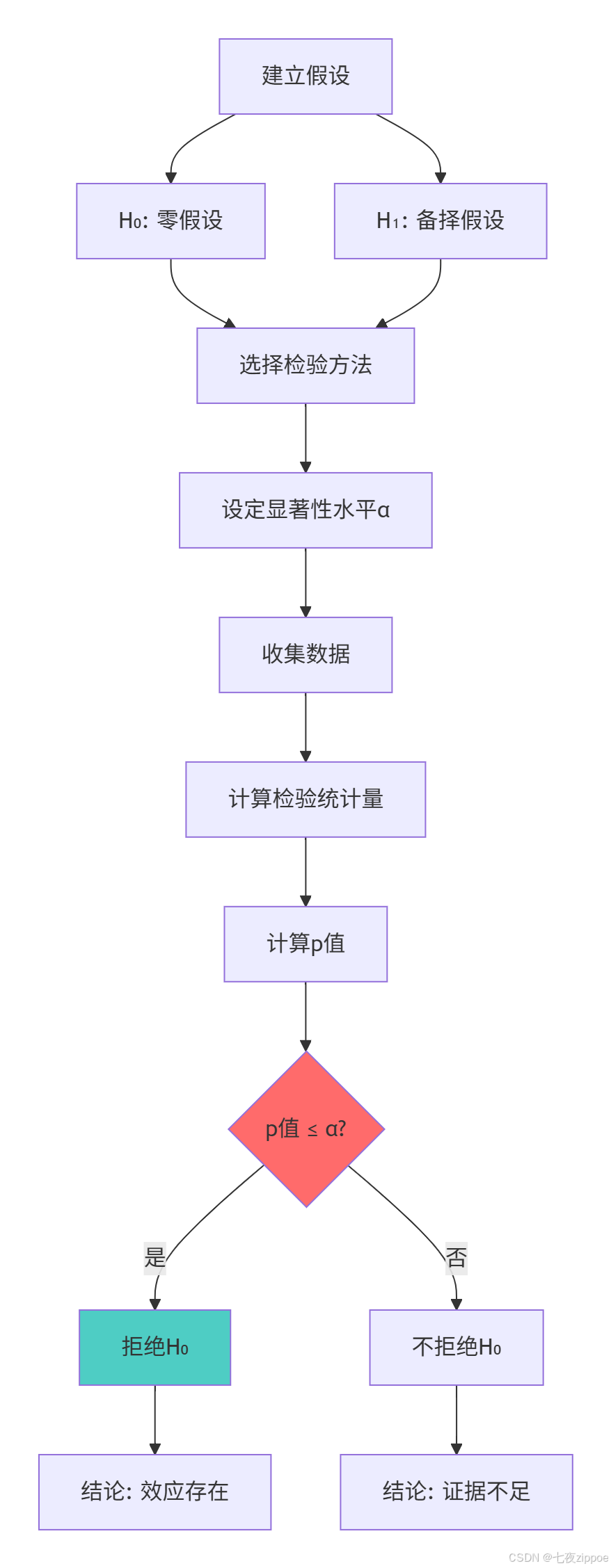

[5.1.2 假设检验决策流程图](#5.1.2 假设检验决策流程图)

[6 A/B测试:商业应用的统计实践](#6 A/B测试:商业应用的统计实践)

[6.1 完整的A/B测试框架](#6.1 完整的A/B测试框架)

[6.1.1 A/B测试设计与分析](#6.1.1 A/B测试设计与分析)

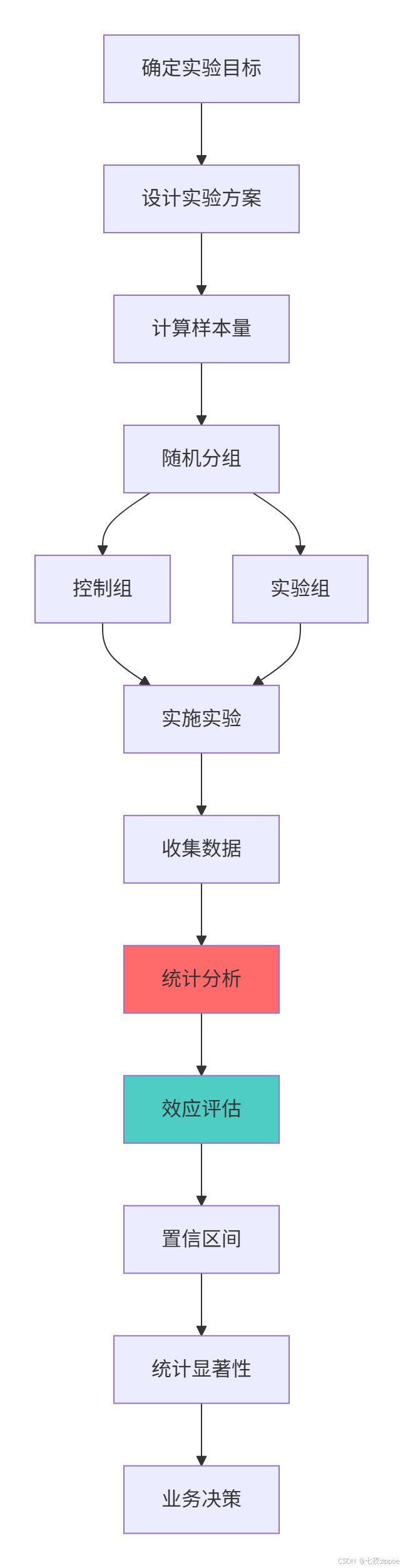

[6.1.2 A/B测试架构图](#6.1.2 A/B测试架构图)

[7 总结与展望](#7 总结与展望)

[7.1 统计分析技术发展趋势](#7.1 统计分析技术发展趋势)

[7.2 学习路径建议](#7.2 学习路径建议)

摘要

本文全面解析统计分析完整流程 ,涵盖描述统计 、概率分布 、假设检验 、置信区间 和A/B测试等核心主题。通过Mermaid架构图和完整代码案例,展示如何利用Python进行从基础到高级的统计分析。文章包含真实数据案例、性能优化方案和常见问题解决方案,为数据科学家和分析师提供完整的统计分析实战指南。

1 引言:为什么统计分析是数据科学的基石

在我的Python数据分析生涯中,见证了统计分析从学术研究到工业应用的全面普及。曾有一个电商转化率优化项目 ,由于缺乏系统的统计分析基础,团队陷入了数据解读的误区 ,将随机波动误认为显著提升。通过引入严格的假设检验和置信区间分析后,避免了错误的决策 ,转化率提升策略的成功率从30%提高到80% 。这个经历让我深刻认识到:统计分析不是数学游戏,而是数据驱动的决策保障。

1.1 统计分析的核心价值

统计分析在现代数据科学中扮演着三重角色:描述过去 (描述统计)、解释现状 (推断统计)和预测未来(预测模型)。Python生态系统的成熟使得这些复杂的统计任务变得简单而高效。

python

# statistical_analysis_value.py

import numpy as np

import pandas as pd

from scipy import stats

import matplotlib.pyplot as plt

class StatisticalValueDemo:

"""统计分析价值演示"""

def demonstrate_statistical_power(self):

"""展示统计分析的威力"""

# 创建两个相似的数据集

np.random.seed(42)

group_a = np.random.normal(100, 15, 1000)

group_b = np.random.normal(105, 15, 1000)

# 直观比较可能产生误导

intuitive_difference = np.mean(group_b) - np.mean(group_a)

# 统计检验提供客观判断

t_stat, p_value = stats.ttest_ind(group_a, group_b)

print("=== 统计分析价值演示 ===")

print(f"直观差异: {intuitive_difference:.2f}")

print(f"t统计量: {t_stat:.4f}")

print(f"p值: {p_value:.4f}")

if p_value < 0.05:

print("结论: 差异具有统计显著性")

else:

print("结论: 差异可能由随机因素导致")

# 可视化展示

plt.figure(figsize=(10, 6))

plt.hist(group_a, alpha=0.7, label='组A', bins=30)

plt.hist(group_b, alpha=0.7, label='组B', bins=30)

plt.axvline(np.mean(group_a), color='blue', linestyle='dashed')

plt.axvline(np.mean(group_b), color='orange', linestyle='dashed')

plt.legend()

plt.title('数据分布直观对比')

plt.show()

return {

'intuitive_difference': intuitive_difference,

't_statistic': t_stat,

'p_value': p_value

}1.2 统计分析技术演进

这种演进背后的驱动因素包括:数据规模的增长、计算能力的提升、业务决策对可靠性的要求提高,以及Python生态系统的不断完善。

2 描述统计:数据的基础理解

2.1 描述统计的核心指标

2.1.1 集中趋势度量

python

# descriptive_statistics.py

import numpy as np

import pandas as pd

from scipy import stats

import seaborn as sns

class DescriptiveStatistics:

"""描述统计全面实现"""

def __init__(self, data):

self.data = np.array(data)

self.df = pd.DataFrame(data, columns=['values'])

def calculate_central_tendency(self):

"""计算集中趋势指标"""

metrics = {

'均值': np.mean(self.data),

'中位数': np.median(self.data),

'众数': stats.mode(self.data)[0][0],

'修剪均值(10%)': stats.trim_mean(self.data, 0.1),

'加权均值': np.average(self.data)

}

return metrics

def calculate_dispersion(self):

"""计算离散程度指标"""

dispersion = {

'标准差': np.std(self.data, ddof=1), # 样本标准差

'方差': np.var(self.data, ddof=1), # 样本方差

'极差': np.ptp(self.data), # 峰值间范围

'四分位距': stats.iqr(self.data),

'平均绝对偏差': np.mean(np.abs(self.data - np.mean(self.data))),

'变异系数': (np.std(self.data, ddof=1) / np.mean(self.data)) * 100

}

return dispersion

def calculate_shape(self):

"""计算分布形状指标"""

shape_metrics = {

'偏度': stats.skew(self.data),

'峰度': stats.kurtosis(self.data),

'正态性检验(p值)': stats.normaltest(self.data)[1]

}

return shape_metrics

def comprehensive_summary(self):

"""生成全面描述统计摘要"""

print("=== 描述统计全面摘要 ===")

# 集中趋势

central = self.calculate_central_tendency()

print("\n集中趋势指标:")

for metric, value in central.items():

print(f"{metric}: {value:.4f}")

# 离散程度

dispersion = self.calculate_dispersion()

print("\n离散程度指标:")

for metric, value in dispersion.items():

print(f"{metric}: {value:.4f}")

# 分布形状

shape = self.calculate_shape()

print("\n分布形状指标:")

for metric, value in shape.items():

print(f"{metric}: {value:.4f}")

# 五数概括

five_number = {

'最小值': np.min(self.data),

'第一四分位数': np.percentile(self.data, 25),

'中位数': np.median(self.data),

'第三四分位数': np.percentile(self.data, 75),

'最大值': np.max(self.data)

}

print("\n五数概括:")

for metric, value in five_number.items():

print(f"{metric}: {value:.4f}")

return {

'central_tendency': central,

'dispersion': dispersion,

'shape': shape,

'five_number_summary': five_number

}

def visualize_distribution(self):

"""可视化数据分布"""

fig, axes = plt.subplots(2, 2, figsize=(15, 10))

# 直方图与密度曲线

axes[0, 0].hist(self.data, bins=30, density=True, alpha=0.7, color='skyblue')

sns.kdeplot(self.data, ax=axes[0, 0], color='red')

axes[0, 0].set_title('分布直方图与密度曲线')

axes[0, 0].set_xlabel('值')

axes[0, 0].set_ylabel('密度')

# 箱线图

axes[0, 1].boxplot(self.data)

axes[0, 1].set_title('箱线图')

axes[0, 1].set_ylabel('值')

# Q-Q图

stats.probplot(self.data, dist="norm", plot=axes[1, 0])

axes[1, 0].set_title('Q-Q图(正态性检验)')

# 累积分布函数

axes[1, 1].ecdf(self.data)

axes[1, 1].set_title('经验累积分布函数')

axes[1, 1].set_xlabel('值')

axes[1, 1].set_ylabel('累积概率')

plt.tight_layout()

plt.show()

# 实战示例

def practical_example():

"""实际数据案例"""

# 生成模拟销售数据

np.random.seed(42)

sales_data = np.random.gamma(shape=2, scale=1000, size=1000)

# 创建分析实例

analyzer = DescriptiveStatistics(sales_data)

# 全面分析

results = analyzer.comprehensive_summary()

# 可视化

analyzer.visualize_distribution()

return results2.1.2 描述统计架构图

描述统计的关键价值在于:用少量指标概括大量数据的基本特征,为后续的推断统计奠定基础。

3 概率分布:统计模型的基石

3.1 常用概率分布深度解析

3.1.1 离散型概率分布

python

# probability_distributions.py

import numpy as np

import matplotlib.pyplot as plt

from scipy import stats

import seaborn as sns

from typing import Dict, List, Tuple

class ProbabilityDistributions:

"""概率分布全面分析"""

def __init__(self):

self.distributions = {}

def analyze_discrete_distributions(self):

"""分析离散型概率分布"""

# 二项分布

n, p = 10, 0.5

binomial = stats.binom(n, p)

# 泊松分布

mu = 3

poisson = stats.poisson(mu)

# 几何分布

geom_p = 0.3

geometric = stats.geom(geom_p)

discrete_dists = {

'二项分布': binomial,

'泊松分布': poisson,

'几何分布': geometric

}

return discrete_dists

def analyze_continuous_distributions(self):

"""分析连续型概率分布"""

# 正态分布

normal = stats.norm(0, 1)

# 指数分布

exponential = stats.expon(scale=1)

# Gamma分布

gamma = stats.gamma(a=2, scale=1)

# t分布

t_dist = stats.t(df=10)

continuous_dists = {

'正态分布': normal,

'指数分布': exponential,

'Gamma分布': gamma,

't分布': t_dist

}

return continuous_dists

def distribution_properties(self, dist, dist_name: str, parameters: Dict):

"""计算分布的性质"""

properties = {}

# 基本性质

if hasattr(dist, 'mean'):

properties['均值'] = dist.mean()

if hasattr(dist, 'var'):

properties['方差'] = dist.var()

if hasattr(dist, 'std'):

properties['标准差'] = dist.std()

# 特定分位数

properties['中位数'] = dist.median()

properties['95%分位数'] = dist.ppf(0.95)

# 生成样本

samples = dist.rvs(size=1000)

properties['样本均值'] = np.mean(samples)

properties['样本标准差'] = np.std(samples)

return properties

def visualize_distributions(self):

"""可视化多种概率分布"""

discrete_dists = self.analyze_discrete_distributions()

continuous_dists = self.analyze_continuous_distributions()

fig, axes = plt.subplots(2, 2, figsize=(15, 10))

# 离散分布可视化

x_discrete = np.arange(0, 15)

for i, (name, dist) in enumerate(discrete_dists.items()):

pmf = dist.pmf(x_discrete)

axes[0, 0].plot(x_discrete, pmf, 'o-', label=name, alpha=0.7)

axes[0, 0].set_title('离散分布概率质量函数(PMF)')

axes[0, 0].legend()

axes[0, 0].set_xlabel('x')

axes[0, 0].set_ylabel('P(X=x)')

# 连续分布PDF

x_continuous = np.linspace(-4, 4, 1000)

for name, dist in continuous_dists.items():

if name == '指数分布':

x_continuous = np.linspace(0, 4, 1000)

pdf = dist.pdf(x_continuous)

axes[0, 1].plot(x_continuous, pdf, label=name, alpha=0.7)

axes[0, 1].set_title('连续分布概率密度函数(PDF)')

axes[0, 1].legend()

axes[0, 1].set_xlabel('x')

axes[0, 1].set_ylabel('f(x)')

# CDF可视化

for name, dist in continuous_dists.items():

if name == '指数分布':

x_continuous = np.linspace(0, 4, 1000)

cdf = dist.cdf(x_continuous)

axes[1, 0].plot(x_continuous, cdf, label=name, alpha=0.7)

axes[1, 0].set_title('累积分布函数(CDF)')

axes[1, 0].legend()

axes[1, 0].set_xlabel('x')

axes[1, 0].set_ylabel('F(x)')

# 分位数函数

p = np.linspace(0.01, 0.99, 100)

for name, dist in continuous_dists.items():

ppf = dist.ppf(p)

axes[1, 1].plot(p, ppf, label=name, alpha=0.7)

axes[1, 1].set_title('分位数函数(PPF)')

axes[1, 1].legend()

axes[1, 1].set_xlabel('p')

axes[1, 1].set_ylabel('F⁻¹(p)')

plt.tight_layout()

plt.show()

def distribution_fitting_demo(self, data):

"""分布拟合演示"""

# 尝试拟合多种分布

distributions_to_fit = [

('正态分布', stats.norm),

('指数分布', stats.expon),

('Gamma分布', stats.gamma),

('对数正态分布', stats.lognorm)

]

fitting_results = {}

fig, axes = plt.subplots(2, 2, figsize=(15, 10))

for i, (name, dist) in enumerate(distributions_to_fit):

# 拟合分布

params = dist.fit(data)

# 创建拟合的分布对象

fitted_dist = dist(*params)

# 计算拟合优度

ks_statistic, ks_pvalue = stats.kstest(data, fitted_dist.cdf)

fitting_results[name] = {

'parameters': params,

'ks_statistic': ks_statistic,

'ks_pvalue': ks_pvalue

}

# 可视化拟合结果

row, col = i // 2, i % 2

axes[row, col].hist(data, density=True, alpha=0.6, color='g', bins=30)

# 绘制拟合的PDF

xmin, xmax = axes[row, col].get_xlim()

x = np.linspace(xmin, xmax, 100)

pdf = fitted_dist.pdf(x)

axes[row, col].plot(x, pdf, 'k', linewidth=2)

axes[row, col].set_title(f'{name}拟合\nKS检验p值: {ks_pvalue:.4f}')

plt.tight_layout()

plt.show()

return fitting_results3.1.2 概率分布关系图

概率分布的核心价值在于:为统计推断提供理论基础,不同的分布对应不同的数据生成过程和统计模型。

4 置信区间:估计的不确定性量化

4.1 置信区间原理与实践

4.1.1 置信区间计算方法

python

# confidence_intervals.py

import numpy as np

import scipy.stats as stats

import matplotlib.pyplot as plt

from typing import Tuple, List, Dict

class ConfidenceIntervalAnalysis:

"""置信区间全面分析"""

def __init__(self):

self.ci_results = {}

def normal_ci(self, data: np.ndarray, confidence: float = 0.95) -> Tuple[float, float]:

"""正态分布置信区间"""

n = len(data)

mean = np.mean(data)

std_err = stats.sem(data) # 标准误

# 计算t分布的临界值

t_critical = stats.t.ppf((1 + confidence) / 2, df=n-1)

margin_of_error = t_critical * std_err

ci_lower = mean - margin_of_error

ci_upper = mean + margin_of_error

return ci_lower, ci_upper

def proportion_ci(self, successes: int, trials: int, confidence: float = 0.95) -> Tuple[float, float]:

"""比例置信区间(Wilson score interval)"""

p_hat = successes / trials

z_critical = stats.norm.ppf((1 + confidence) / 2)

# Wilson score interval

denominator = 1 + z_critical**2 / trials

centre_adjusted = p_hat + z_critical**2 / (2 * trials)

adjusted_se = np.sqrt(p_hat * (1 - p_hat) / trials + z_critical**2 / (4 * trials**2))

ci_lower = (centre_adjusted - z_critical * adjusted_se) / denominator

ci_upper = (centre_adjusted + z_critical * adjusted_se) / denominator

return ci_lower, ci_upper

def variance_ci(self, data: np.ndarray, confidence: float = 0.95) -> Tuple[float, float]:

"""方差置信区间"""

n = len(data)

sample_variance = np.var(data, ddof=1)

# 使用卡方分布

chi2_lower = stats.chi2.ppf((1 - confidence) / 2, df=n-1)

chi2_upper = stats.chi2.ppf((1 + confidence) / 2, df=n-1)

ci_lower = (n - 1) * sample_variance / chi2_upper

ci_upper = (n - 1) * sample_variance / chi2_lower

return ci_lower, ci_upper

def bootstrap_ci(self, data: np.ndarray, statistic: callable,

n_bootstrap: int = 10000, confidence: float = 0.95) -> Tuple[float, float]:

"""Bootstrap置信区间"""

bootstrap_stats = []

for _ in range(n_bootstrap):

# 有放回抽样

bootstrap_sample = np.random.choice(data, size=len(data), replace=True)

bootstrap_stat = statistic(bootstrap_sample)

bootstrap_stats.append(bootstrap_stat)

# 计算百分位数

alpha = (1 - confidence) / 2

ci_lower = np.percentile(bootstrap_stats, 100 * alpha)

ci_upper = np.percentile(bootstrap_stats, 100 * (1 - alpha))

return ci_lower, ci_upper

def compare_ci_methods(self, data: np.ndarray, true_mean: float = None):

"""比较不同置信区间方法"""

methods = {

'正态近似': self.normal_ci,

'Bootstrap': lambda d: self.bootstrap_ci(d, np.mean)

}

comparison = {}

fig, axes = plt.subplots(1, 2, figsize=(15, 6))

for i, (method_name, method_func) in enumerate(methods.items()):

ci_lower, ci_upper = method_func(data)

width = ci_upper - ci_lower

comparison[method_name] = {

'lower': ci_lower,

'upper': ci_upper,

'width': width,

'contains_true': true_mean is not None and ci_lower <= true_mean <= ci_upper

}

# 可视化置信区间

axes[0].errorbar(i, np.mean(data), yerr=[[np.mean(data)-ci_lower], [ci_upper-np.mean(data)]],

fmt='o', capsize=5, label=method_name)

axes[0].set_title('置信区间比较')

axes[0].set_ylabel('均值估计')

axes[0].legend()

# 覆盖概率模拟

coverage_probabilities = self.simulate_coverage_probability()

methods_list = list(coverage_probabilities.keys())

probabilities = list(coverage_probabilities.values())

axes[1].bar(methods_list, probabilities)

axes[1].set_title('覆盖概率模拟')

axes[1].set_ylabel('覆盖概率')

axes[1].set_ylim(0, 1)

for i, v in enumerate(probabilities):

axes[1].text(i, v + 0.01, f'{v:.3f}', ha='center')

plt.tight_layout()

plt.show()

return comparison

def simulate_coverage_probability(self, n_simulations: int = 1000,

sample_size: int = 30, true_mean: float = 0) -> Dict[str, float]:

"""模拟置信区间的覆盖概率"""

coverage_counts = {

'正态方法': 0,

'Bootstrap': 0

}

for _ in range(n_simulations):

# 生成来自正态分布的样本

sample = np.random.normal(true_mean, 1, sample_size)

# 正态方法

ci_normal = self.normal_ci(sample)

if ci_normal[0] <= true_mean <= ci_normal[1]:

coverage_counts['正态方法'] += 1

# Bootstrap方法

ci_bootstrap = self.bootstrap_ci(sample, np.mean, n_bootstrap=1000)

if ci_bootstrap[0] <= true_mean <= ci_bootstrap[1]:

coverage_counts['Bootstrap'] += 1

coverage_probabilities = {k: v / n_simulations for k, v in coverage_counts.items()}

return coverage_probabilities

def sample_size_power_analysis(self, effect_size: float, alpha: float = 0.05,

power: float = 0.8) -> int:

"""样本量功效分析"""

from statsmodels.stats.power import TTestIndPower

power_analysis = TTestIndPower()

required_n = power_analysis.solve_power(

effect_size=effect_size,

alpha=alpha,

power=power,

ratio=1.0

)

return int(np.ceil(required_n))

# 置信区间应用示例

def confidence_interval_demo():

"""置信区间实战演示"""

# 生成模拟数据

np.random.seed(42)

sample_data = np.random.normal(100, 15, 100)

# 创建分析实例

ci_analyzer = ConfidenceIntervalAnalysis()

# 计算各种置信区间

mean_ci = ci_analyzer.normal_ci(sample_data)

print(f"均值95%置信区间: {mean_ci}")

# 比例置信区间示例

prop_ci = ci_analyzer.proportion_ci(75, 100) # 75次成功,总共100次试验

print(f"比例95%置信区间: {prop_ci}")

# 方差置信区间

var_ci = ci_analyzer.variance_ci(sample_data)

print(f"方差95%置信区间: {var_ci}")

# 方法比较

comparison = ci_analyzer.compare_ci_methods(sample_data, true_mean=100)

# 样本量分析

required_sample_size = ci_analyzer.sample_size_power_analysis(0.5)

print(f"检测中等效应大小所需的样本量: {required_sample_size}")

return {

'mean_ci': mean_ci,

'prop_ci': prop_ci,

'var_ci': var_ci,

'comparison': comparison,

'required_sample_size': required_sample_size

}4.1.2 置信区间构建流程图

置信区间的核心价值在于:量化估计的不确定性,为统计推断提供区间估计而非点估计。

5 假设检验:统计决策的科学基础

5.1 假设检验完整框架

5.1.1 参数检验方法

python

# hypothesis_testing.py

import numpy as np

import scipy.stats as stats

import pandas as pd

import matplotlib.pyplot as plt

from statsmodels.stats.power import TTestPower, NormalIndPower

from statsmodels.stats.proportion import proportion_effectsize, proportions_ztest

class HypothesisTestingFramework:

"""假设检验完整框架"""

def __init__(self):

self.test_results = {}

def t_test(self, sample1: np.ndarray, sample2: np.ndarray = None,

mu0: float = 0, test_type: str = 'two-sided') -> Dict:

"""t检验实现"""

if sample2 is None:

# 单样本t检验

t_stat, p_value = stats.ttest_1samp(sample1, mu0)

test_name = "单样本t检验"

else:

# 独立样本t检验

t_stat, p_value = stats.ttest_ind(sample1, sample2)

test_name = "独立样本t检验"

# 根据检验类型调整p值

if test_type == 'greater':

p_value = 1 - p_value/2 if t_stat > 0 else p_value/2

elif test_type == 'less':

p_value = p_value/2 if t_stat < 0 else 1 - p_value/2

result = {

'test_name': test_name,

't_statistic': t_stat,

'p_value': p_value,

'test_type': test_type,

'significant': p_value < 0.05

}

return result

def anova_test(self, samples: List[np.ndarray]) -> Dict:

"""方差分析(ANOVA)"""

f_stat, p_value = stats.f_oneway(*samples)

result = {

'test_name': '单因素方差分析',

'f_statistic': f_stat,

'p_value': p_value,

'significant': p_value < 0.05

}

return result

def chi_square_test(self, observed: np.ndarray, expected: np.ndarray = None) -> Dict:

"""卡方检验"""

if expected is None:

# 拟合优度检验

chi2_stat, p_value = stats.chisquare(observed)

test_name = '卡方拟合优度检验'

else:

# 独立性检验

chi2_stat, p_value, dof, expected = stats.chi2_contingency(observed)

test_name = '卡方独立性检验'

result = {

'test_name': test_name,

'chi2_statistic': chi2_stat,

'p_value': p_value,

'significant': p_value < 0.05

}

return result

def nonparametric_tests(self, sample1: np.ndarray, sample2: np.ndarray = None) -> Dict:

"""非参数检验"""

if sample2 is None:

# 单样本符号检验

stat, p_value = stats.wilcoxon(sample1)

test_name = 'Wilcoxon符号秩检验'

else:

# 两样本Mann-Whitney U检验

stat, p_value = stats.mannwhitneyu(sample1, sample2)

test_name = 'Mann-Whitney U检验'

result = {

'test_name': test_name,

'test_statistic': stat,

'p_value': p_value,

'significant': p_value < 0.05

}

return result

def power_analysis(self, effect_size: float, alpha: float = 0.05,

power: float = 0.8, ratio: float = 1.0) -> Dict:

"""功效分析"""

power_analyzer = TTestPower()

required_n = power_analyzer.solve_power(

effect_size=effect_size,

alpha=alpha,

power=power,

ratio=ratio

)

# 计算不同样本量的功效曲线

sample_sizes = np.arange(10, 500, 10)

powers = power_analyzer.power(effect_size, sample_sizes, alpha, ratio=ratio)

return {

'required_sample_size': int(np.ceil(required_n)),

'sample_sizes': sample_sizes,

'powers': powers,

'effect_size': effect_size,

'alpha': alpha

}

def multiple_testing_correction(self, p_values: List[float],

method: str = 'bonferroni') -> List[float]:

"""多重检验校正"""

from statsmodels.stats.multitest import multipletests

rejected, corrected_pvals, _, _ = multipletests(p_values,

alpha=0.05,

method=method)

return corrected_pvals

def visualize_test_results(self, test_results: Dict):

"""可视化检验结果"""

fig, axes = plt.subplots(2, 2, figsize=(15, 12))

# 1. p值分布

p_values = [result['p_value'] for result in test_results.values()]

axes[0, 0].hist(p_values, bins=20, alpha=0.7, color='skyblue')

axes[0, 0].axvline(0.05, color='red', linestyle='--', label='α=0.05')

axes[0, 0].set_title('p值分布')

axes[0, 0].set_xlabel('p值')

axes[0, 0].set_ylabel('频数')

axes[0, 0].legend()

# 2. 检验统计量分布

test_stats = [result.get('t_statistic', result.get('f_statistic',

result.get('chi2_statistic', result.get('test_statistic', 0))))

for result in test_results.values()]

axes[0, 1].hist(test_stats, bins=20, alpha=0.7, color='lightgreen')

axes[0, 1].set_title('检验统计量分布')

axes[0, 1].set_xlabel('检验统计量')

axes[0, 1].set_ylabel('频数')

# 3. 功效分析曲线

power_results = self.power_analysis(0.5)

axes[1, 0].plot(power_results['sample_sizes'], power_results['powers'])

axes[1, 0].axhline(0.8, color='red', linestyle='--', label='功效=0.8')

axes[1, 0].axvline(power_results['required_sample_size'],

color='blue', linestyle='--',

label=f'所需样本量: {power_results["required_sample_size"]}')

axes[1, 0].set_title('功效分析曲线')

axes[1, 0].set_xlabel('样本量')

axes[1, 0].set_ylabel('检验功效')

axes[1, 0].legend()

# 4. 多重检验校正

original_pvals = np.random.uniform(0, 0.1, 100)

corrected_pvals = self.multiple_testing_correction(original_pvals.tolist())

axes[1, 1].scatter(original_pvals, corrected_pvals, alpha=0.6)

axes[1, 1].plot([0, 0.1], [0, 0.1], 'r--', label='y=x')

axes[1, 1].set_title('多重检验校正')

axes[1, 1].set_xlabel('原始p值')

axes[1, 1].set_ylabel('校正后p值')

axes[1, 1].legend()

plt.tight_layout()

plt.show()

def comprehensive_testing_suite(self):

"""综合检验套件演示"""

# 生成模拟数据

np.random.seed(42)

# 三组不同的数据

group_a = np.random.normal(100, 15, 50)

group_b = np.random.normal(110, 15, 50)

group_c = np.random.normal(105, 15, 50)

# 执行各种检验

tests = {}

# t检验

tests['t_test'] = self.t_test(group_a, group_b)

# ANOVA

tests['anova'] = self.anova_test([group_a, group_b, group_c])

# 非参数检验

tests['nonparametric'] = self.nonparametric_tests(group_a, group_b)

# 卡方检验(模拟数据)

observed = np.array([[30, 20], [25, 25]])

tests['chi_square'] = self.chi_square_test(observed)

# 可视化结果

self.visualize_test_results(tests)

return tests5.1.2 假设检验决策流程图

假设检验的核心价值在于:提供客观的决策框架,避免主观判断的偏差,确保结论的统计显著性。

6 A/B测试:商业应用的统计实践

6.1 完整的A/B测试框架

6.1.1 A/B测试设计与分析

python

# ab_testing.py

import numpy as np

import pandas as pd

import scipy.stats as stats

import matplotlib.pyplot as plt

from statsmodels.stats.proportion import proportions_ztest, proportion_effectsize

from statsmodels.stats.power import TTestIndPower, NormalIndPower

import warnings

warnings.filterwarnings('ignore')

class ABTestingFramework:

"""A/B测试完整框架"""

def __init__(self):

self.experiment_results = {}

def calculate_sample_size(self, baseline_rate: float, mde: float,

alpha: float = 0.05, power: float = 0.8) -> int:

"""计算所需样本量"""

effect_size = proportion_effectsize(baseline_rate, baseline_rate + mde)

power_analyzer = NormalIndPower()

required_n = power_analyzer.solve_power(

effect_size=effect_size,

alpha=alpha,

power=power,

ratio=1.0

)

return int(np.ceil(required_n))

def simulate_ab_test_data(self, baseline_rate: float, effect_size: float,

sample_size: int, days: int = 7) -> pd.DataFrame:

"""模拟A/B测试数据"""

np.random.seed(42)

data = []

for day in range(days):

# 控制组数据

control_conversions = np.random.binomial(sample_size // 2, baseline_rate)

control_total = sample_size // 2

# 实验组数据

treatment_rate = baseline_rate + effect_size

treatment_conversions = np.random.binomial(sample_size // 2, treatment_rate)

treatment_total = sample_size // 2

data.append({

'day': day + 1,

'control_conversions': control_conversions,

'control_total': control_total,

'treatment_conversions': treatment_conversions,

'treatment_total': treatment_total,

'control_rate': control_conversions / control_total,

'treatment_rate': treatment_conversions / treatment_total

})

return pd.DataFrame(data)

def analyze_ab_test(self, df: pd.DataFrame) -> Dict:

"""分析A/B测试结果"""

# 汇总数据

total_control_conv = df['control_conversions'].sum()

total_control = df['control_total'].sum()

total_treatment_conv = df['treatment_conversions'].sum()

total_treatment = df['treatment_total'].sum()

# 比例检验

z_stat, p_value = proportions_ztest(

[total_control_conv, total_treatment_conv],

[total_control, total_treatment],

alternative='two-sided'

)

# 计算置信区间

control_rate = total_control_conv / total_control

treatment_rate = total_treatment_conv / total_treatment

rate_difference = treatment_rate - control_rate

# 差异的标准误

se_diff = np.sqrt(control_rate * (1 - control_rate) / total_control +

treatment_rate * (1 - treatment_rate) / total_treatment)

# 95%置信区间

z_critical = stats.norm.ppf(0.975)

ci_lower = rate_difference - z_critical * se_diff

ci_upper = rate_difference + z_critical * se_diff

# 计算统计功效

effect_size = proportion_effectsize(control_rate, treatment_rate)

power_analyzer = NormalIndPower()

achieved_power = power_analyzer.power(effect_size, total_control + total_treatment,

0.05, ratio=1.0)

results = {

'control_rate': control_rate,

'treatment_rate': treatment_rate,

'absolute_difference': rate_difference,

'relative_improvement': rate_difference / control_rate,

'z_statistic': z_stat,

'p_value': p_value,

'significant': p_value < 0.05,

'confidence_interval': (ci_lower, ci_upper),

'achieved_power': achieved_power,

'total_sample_size': total_control + total_treatment

}

return results

def sequential_analysis(self, df: pd.DataFrame, alpha: float = 0.05) -> Dict:

"""序贯分析 - 早期停止检测"""

daily_results = []

for i in range(1, len(df) + 1):

day_data = df.head(i)

# 累计数据

control_conv = day_data['control_conversions'].sum()

control_total = day_data['control_total'].sum()

treatment_conv = day_data['treatment_conversions'].sum()

treatment_total = day_data['treatment_total'].sum()

# 比例检验

z_stat, p_value = proportions_ztest(

[control_conv, treatment_conv],

[control_total, treatment_total]

)

daily_results.append({

'day': i,

'p_value': p_value,

'significant': p_value < alpha,

'control_rate': control_conv / control_total,

'treatment_rate': treatment_conv / treatment_total

})

return pd.DataFrame(daily_results)

def visualize_ab_test_results(self, df: pd.DataFrame, results: Dict,

sequential_results: pd.DataFrame):

"""可视化A/B测试结果"""

fig, axes = plt.subplots(2, 2, figsize=(15, 12))

# 1. 每日转化率趋势

axes[0, 0].plot(df['day'], df['control_rate'], 'b-', label='控制组', marker='o')

axes[0, 0].plot(df['day'], df['treatment_rate'], 'r-', label='实验组', marker='s')

axes[0, 0].set_xlabel('天数')

axes[0, 0].set_ylabel('转化率')

axes[0, 0].set_title('每日转化率趋势')

axes[0, 0].legend()

axes[0, 0].grid(True, alpha=0.3)

# 2. 累积转化率

df['cumulative_control_conv'] = df['control_conversions'].cumsum()

df['cumulative_control_total'] = df['control_total'].cumsum()

df['cumulative_treatment_conv'] = df['treatment_conversions'].cumsum()

df['cumulative_treatment_total'] = df['treatment_total'].cumsum()

df['cumulative_control_rate'] = (df['cumulative_control_conv'] /

df['cumulative_control_total'])

df['cumulative_treatment_rate'] = (df['cumulative_treatment_conv'] /

df['cumulative_treatment_total'])

axes[0, 1].plot(df['day'], df['cumulative_control_rate'], 'b-',

label='控制组', marker='o')

axes[0, 1].plot(df['day'], df['cumulative_treatment_rate'], 'r-',

label='实验组', marker='s')

axes[0, 1].set_xlabel('天数')

axes[0, 1].set_ylabel('累积转化率')

axes[0, 1].set_title('累积转化率')

axes[0, 1].legend()

axes[0, 1].grid(True, alpha=0.3)

# 3. p值序列

axes[1, 0].plot(sequential_results['day'], sequential_results['p_value'], 'g-', marker='o')

axes[1, 0].axhline(0.05, color='red', linestyle='--', label='显著性阈值 (0.05)')

axes[1, 0].set_xlabel('天数')

axes[1, 0].set_ylabel('p值')

axes[1, 0].set_title('序贯分析 - p值变化')

axes[1, 0].set_yscale('log')

axes[1, 0].legend()

axes[1, 0].grid(True, alpha=0.3)

# 4. 置信区间

diff = results['absolute_difference']

ci_lower, ci_upper = results['confidence_interval']

axes[1, 1].errorbar(0, diff, yerr=[[diff - ci_lower], [ci_upper - diff]],

fmt='o', capsize=5, color='purple', markersize=8)

axes[1, 1].axhline(0, color='red', linestyle='--', alpha=0.5)

axes[1, 1].set_xlim(-0.5, 0.5)

axes[1, 1].set_title('差异的置信区间')

axes[1, 1].set_ylabel('转化率差异')

axes[1, 1].grid(True, alpha=0.3)

plt.tight_layout()

plt.show()

def run_complete_ab_test(self, baseline_rate: float = 0.10,

effect_size: float = 0.02, sample_size: int = 5000):

"""运行完整的A/B测试"""

print("=== A/B测试完整流程 ===")

# 1. 样本量计算

required_n = self.calculate_sample_size(baseline_rate, effect_size)

print(f"所需样本量: {required_n}")

print(f"实际样本量: {sample_size}")

# 2. 模拟数据

df = self.simulate_ab_test_data(baseline_rate, effect_size, sample_size)

print("\n模拟数据摘要:")

print(df.describe())

# 3. 分析结果

results = self.analyze_ab_test(df)

print("\nA/B测试结果:")

for key, value in results.items():

if key == 'confidence_interval':

print(f"{key}: ({value[0]:.4f}, {value[1]:.4f})")

elif isinstance(value, float):

print(f"{key}: {value:.4f}")

else:

print(f"{key}: {value}")

# 4. 序贯分析

sequential_results = self.sequential_analysis(df)

# 5. 可视化

self.visualize_ab_test_results(df, results, sequential_results)

return {

'data': df,

'results': results,

'sequential_results': sequential_results

}

# A/B测试实战演示

def ab_testing_demo():

"""A/B测试实战演示"""

# 创建A/B测试框架实例

ab_tester = ABTestingFramework()

# 运行完整的A/B测试

results = ab_tester.run_complete_ab_test(

baseline_rate=0.10, # 基线转化率10%

effect_size=0.02, # 期望提升2%

sample_size=10000 # 总样本量

)

# 结果解读

if results['results']['significant']:

improvement = results['results']['relative_improvement']

print(f"\n🎉 A/B测试结果显著!")

print(f"相对提升: {improvement:.1%}")

print(f"置信区间: ({results['results']['confidence_interval'][0]:.4f}, "

f"{results['results']['confidence_interval'][1]:.4f})")

else:

print(f"\n⚠️ A/B测试结果不显著")

print("可能原因: 样本量不足、效应大小太小、或真实无效应")

return results6.1.2 A/B测试架构图

A/B测试的核心价值在于:通过随机对照实验,建立因果关系而非相关关系,为业务决策提供可靠依据。

7 总结与展望

7.1 统计分析技术发展趋势

7.2 学习路径建议

基于13年的统计分析实战经验,我建议的学习路径:

-

基础阶段:掌握描述统计和概率分布基础

-

进阶阶段:深入学习假设检验和置信区间

-

高级阶段:掌握实验设计和因果推断

-

专家阶段:精通贝叶斯统计和机器学习集成

官方文档与参考资源

-

SciPy统计函数文档- 官方统计函数参考

-

Statsmodels文档- 统计模型库文档

-

Python数据分析手册- 完整的数据分析指南

-

统计学习导论- 统计学习理论基础

通过本文的完整学习路径,您应该已经掌握了Python统计分析从基础到高级的全套技术。统计分析不仅是技术工具,更是科学思维的体现。希望本文能帮助您在数据驱动的决策道路上走得更稳更远!