Contents

- TextRNN-Based Chinese Sentiment Classification: A Project Walkthrough

- I. Overall Approach

- II. Project Architecture and Code Analysis

- 1. Vocabulary Construction Module (vocab_create.py)

- 2. Dataset Loading and Processing Module (load_dataset.py)

- 3. TextRNN Model Definition (TextRNN.py)

- 4. Training and Evaluation Module (train_eval_test.py)

- 5. Main Program Module (main.py)

- III. Data Processing Pipeline Summary

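TextRNN-Based Chinese Sentiment Classification: A Project Walkthrough

I. Overall Approach

This project classifies Chinese Weibo posts into four sentiment categories: joy (喜悦), anger (愤怒), disgust (厌恶), and low mood (低落). The core idea is to convert text into a numerical representation and then let a neural network learn the mapping between text features and sentiment labels.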

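Key Considerations in Data Preprocessing

- Embedding lookup: each word/character is mapped to a vector of fixed dimension (200).

- Fixed sequence length: every text is normalized to exactly 70 tokens, much as images are resized to a common shape before being fed to a network.

- Truncation: texts longer than 70 words/characters are cut off, keeping only the first 70.

- Padding: texts shorter than 70 words/characters are filled up with the special token <PAD>.

- Vocabulary compression: only the 4760 most frequent words/characters are kept; everything rarer is represented by <UNK>.

- Vocabulary optimization: low-frequency tokens appear too rarely to learn useful features, so training is restricted to high-frequency ones.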
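The padding, truncation, and <UNK> rules above can be illustrated with a minimal sketch. The toy vocabulary and the `encode` helper are hypothetical and exist only for this illustration; the project's real vocabulary is built from the dataset, and the fixed length here is 6 rather than 70 to keep the output readable.

```python
# Hypothetical toy vocabulary; the real one is built from the Weibo dataset.
UNK, PAD = '<UNK>', '<PAD>'
toy_vocab = {'今': 0, '天': 1, '好': 2, UNK: 3, PAD: 4}


def encode(text, vocab, pad_size):
    """Turn a string into a fixed-length list of vocabulary indices."""
    tokens = list(text)[:pad_size]               # character-level split, then truncate
    tokens += [PAD] * (pad_size - len(tokens))   # pad short inputs up to pad_size
    return [vocab.get(tok, vocab[UNK]) for tok in tokens]  # unknown chars -> <UNK>


short = encode('今天很好', toy_vocab, pad_size=6)
long_ = encode('今天今天今天今天', toy_vocab, pad_size=6)
print(short)  # [0, 1, 3, 2, 4, 4]
print(long_)  # [0, 1, 0, 1, 0, 1]
```

The character 很 is not in the toy vocabulary, so it maps to the <UNK> index, while the two trailing positions of the short input carry the <PAD> index.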

II. Project Architecture and Code Analysis

1. Vocabulary Construction Module (vocab_create.py)

```python
from tqdm import tqdm
import pickle as pkl

MAX_VOCAB_SIZE = 4760
UNK, PAD = '<UNK>', '<PAD>'


def build_vocab(file_path, max_size, min_freq):
    # Character-level tokenization: every character is one token
    tokenizer = lambda x: [y for y in x]
    vocab_dict = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        i = 0
        for line in tqdm(f):
            if i == 0:  # skip the header row
                i += 1
                continue
            lin = line[2:].strip()  # drop the leading "label," prefix
            if not lin:
                continue
            # Count how often each character occurs
            for word in tokenizer(lin):
                vocab_dict[word] = vocab_dict.get(word, 0) + 1
    # Keep characters occurring more than min_freq times, sort them by
    # frequency in descending order, and take the top max_size
    vocab_list = sorted([_ for _ in vocab_dict.items() if _[1] > min_freq],
                        key=lambda x: x[1], reverse=True)[:max_size]
    # Build the vocabulary dictionary: character -> index
    vocab_dict = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    # Append the special tokens; <PAD> receives the last index
    vocab_dict.update({UNK: len(vocab_dict), PAD: len(vocab_dict) + 1})
    # Save the vocabulary to disk
    with open('simplifyweibo_4_moods.pkl', 'wb') as f:
        pkl.dump(vocab_dict, f)
    print(f"Vocab size: {len(vocab_dict)}")
    return vocab_dict


if __name__ == "__main__":
    # Build the vocabulary: at most 4760 characters, each occurring more than 3 times
    vocab = build_vocab('simplifyweibo_4_moods.csv', MAX_VOCAB_SIZE, 3)
    print('vocab')
```

Method notes:

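build_vocab(): builds the vocabulary from the raw data

- file_path: path to the raw data file

- max_size: maximum vocabulary size (4760)

- min_freq: frequency threshold; characters that occur min_freq times or fewer are filtered out

- Returns: the vocabulary dictionary, mapping each character to its index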
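To make the frequency filtering concrete, here is a small sketch that applies the same rules to an in-memory list of strings instead of a CSV file. The function name `build_vocab_from_texts` and the sample strings are illustrative assumptions, not part of the project.

```python
from collections import Counter

UNK, PAD = '<UNK>', '<PAD>'


def build_vocab_from_texts(texts, max_size, min_freq):
    """Hypothetical in-memory variant of build_vocab, for illustration only."""
    counts = Counter(ch for text in texts for ch in text)
    # Keep characters seen more than min_freq times, most frequent first
    kept = sorted((item for item in counts.items() if item[1] > min_freq),
                  key=lambda item: item[1], reverse=True)[:max_size]
    vocab = {ch: idx for idx, (ch, _) in enumerate(kept)}
    # Special tokens go last, so <PAD> always has the highest index
    vocab[UNK] = len(vocab)
    vocab[PAD] = len(vocab)
    return vocab


toy = build_vocab_from_texts(['aaab', 'aabbc', 'ab'], max_size=2, min_freq=1)
print(toy)  # {'a': 0, 'b': 1, '<UNK>': 2, '<PAD>': 3}
```

Here 'c' occurs only once, so it fails the `> min_freq` test and would later be encoded as <UNK>. Because <PAD> is added last, its index equals the vocabulary size minus one, which is what the model relies on when it sets padding_idx.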

2. Dataset Loading and Processing Module (load_dataset.py)

```python
from tqdm import tqdm
import pickle as pkl
import random
import torch

UNK, PAD = '<UNK>', '<PAD>'


def load_dataset(path, pad_size=70):
    contents = []
    # Load the prebuilt vocabulary
    with open('simplifyweibo_4_moods.pkl', 'rb') as f:
        vocab = pkl.load(f)
    tokenizer = lambda x: [y for y in x]  # character-level tokenization
    with open(path, 'r', encoding='UTF-8') as f:
        i = 0
        for line in tqdm(f):
            if i == 0:  # skip the header row
                i += 1
                continue
            if not line.strip():  # skip blank lines
                continue
            # Parse the label and the text
            label = int(line[0])
            content = line[2:].strip('\n')
            words_line = []
            # Tokenize
            token = tokenizer(content)
            seq_len = len(token)
            # Pad or truncate to the fixed length
            if pad_size:
                if len(token) < pad_size:
                    token.extend([PAD] * (pad_size - len(token)))
                else:
                    token = token[:pad_size]
                    seq_len = pad_size
            # Map characters to indices; unknown characters map to <UNK>
            for word in token:
                words_line.append(vocab.get(word, vocab.get(UNK)))
            # Store (index sequence, label, actual length)
            contents.append((words_line, int(label), seq_len))
    # Shuffle, then split into train/dev/test
    random.shuffle(contents)
    train_data = contents[:int(len(contents) * 0.8)]  # 80% training set
    dev_data = contents[int(len(contents) * 0.8):int(len(contents) * 0.9)]  # 10% validation set
    test_data = contents[int(len(contents) * 0.9):]  # 10% test set
    return vocab, train_data, dev_data, test_data


class DatasetIterator(object):
    def __init__(self, batches, batch_size, device):
        self.batch_size = batch_size
        self.batches = batches
        self.n_batches = len(batches) // batch_size
        self.residue = False  # whether a final, smaller batch is left over
        if len(batches) % batch_size != 0:
            self.residue = True
        self.index = 0
        self.device = device  # device: CPU or GPU

    def _to_tensor(self, datas):
        # Convert a list of samples into PyTorch tensors
        x = torch.LongTensor([_[0] for _ in datas]).to(self.device)  # token indices
        y = torch.LongTensor([_[1] for _ in datas]).to(self.device)  # labels
        seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)  # actual lengths
        return (x, seq_len), y

    def __next__(self):
        # Fetch the next batch
        if self.residue and self.index == self.n_batches:
            # Final, incomplete batch
            batches = self.batches[self.index * self.batch_size:len(self.batches)]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches
        elif self.index >= self.n_batches:
            # Iteration finished; reset so the iterator can be reused
            self.index = 0
            raise StopIteration
        else:
            # Regular full batch
            batches = self.batches[self.index * self.batch_size:(self.index + 1) * self.batch_size]
            self.index += 1
            batches = self._to_tensor(batches)
            return batches

    def __iter__(self):
        return self

    def __len__(self):
        # Total number of batches
        if self.residue:
            return self.n_batches + 1
        else:
            return self.n_batches


if __name__ == '__main__':
    vocab, train_data, dev_data, test_data = load_dataset('simplifyweibo_4_moods.csv')
    print(len(train_data), len(dev_data), len(test_data))
    print('Done!')
```

Key method notes:

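load_dataset(): loads and preprocesses the dataset

- path: path to the data file

- pad_size: fixed sequence length (default 70)

DatasetIterator: the batch iterator class

- __init__(): initializes the batch bookkeeping

- _to_tensor(): converts a list of samples into PyTorch tensors

- __next__(): returns the next batch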
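The batching logic can also be written as a generator, which makes the handling of the final, smaller batch easy to see. This is a simplified sketch that yields plain Python lists rather than tensors; `iter_batches` and `count_batches` are hypothetical helpers, not part of load_dataset.py.

```python
def iter_batches(samples, batch_size):
    """Yield consecutive slices of samples; the last one may be smaller."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


def count_batches(n_samples, batch_size):
    """Number of batches, counting a trailing partial batch (ceiling division)."""
    return (n_samples + batch_size - 1) // batch_size


data = list(range(10))
batches = list(iter_batches(data, batch_size=4))
print(batches)               # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(count_batches(10, 4))  # 3
print(count_batches(8, 4))   # 2
```

A leftover batch exists exactly when the sample count is not a multiple of the batch size, which is the condition the residue flag is meant to capture.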

3. TextRNN Model Definition (TextRNN.py)

```python
import torch.nn as nn


class Model(nn.Module):
    def __init__(self, embedding_pretrained, n_vocab, embed, num_classes):
        super(Model, self).__init__()
        # Embedding layer: maps token indices to vectors.
        # <PAD> is the last vocabulary entry, hence padding_idx = n_vocab - 1.
        if embedding_pretrained is not None:
            self.embedding = nn.Embedding.from_pretrained(embedding_pretrained,
                                                          padding_idx=n_vocab - 1,
                                                          freeze=False)
        else:
            self.embedding = nn.Embedding(n_vocab, embed, padding_idx=n_vocab - 1)
        # LSTM layers: extract sequence features.
        # Input size embed (200), hidden size 128, 3 layers, bidirectional,
        # batch-first, dropout 0.3 between layers
        self.lstm = nn.LSTM(embed, 128, 3, bidirectional=True,
                            batch_first=True, dropout=0.3)
        # Fully connected layer: maps the LSTM output to the classes
        self.fc = nn.Linear(128 * 2, num_classes)  # *2 because the LSTM is bidirectional

    def forward(self, x):
        x, _ = x  # unpack the input, ignoring the length information
        out = self.embedding(x)  # (batch_size, seq_len, embed_dim)
        out, _ = self.lstm(out)  # (batch_size, seq_len, 128 * 2)
        out = self.fc(out[:, -1, :])  # use the output at the last time step
        return out
```

Model structure:

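- Embedding layer: maps token indices to 200-dimensional vectors

- LSTM layers:

  - 3 stacked bidirectional LSTM layers

  - hidden size 128 per direction (256 for both directions combined)

  - dropout to reduce overfitting

- Fully connected layer: maps the LSTM output to the 4 sentiment classes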
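As a sanity check on the layer sizes listed above, the number of trainable parameters can be worked out by hand. The sketch below assumes the PyTorch nn.LSTM parameter layout (per direction, each layer holds input weights, recurrent weights, and two bias vectors, each covering four gates) and a vocabulary of 4762 entries (4760 characters plus <UNK> and <PAD>). The real vocabulary may be smaller if fewer characters pass the frequency filter, so treat the embedding figure as an upper bound.

```python
def lstm_param_count(input_size, hidden, num_layers, bidirectional=True):
    """Parameter count for a stacked LSTM using the PyTorch nn.LSTM layout."""
    directions = 2 if bidirectional else 1
    total = 0
    for layer in range(num_layers):
        # Layers after the first consume the concatenated outputs of both directions
        in_size = input_size if layer == 0 else hidden * directions
        # 4 gates, each with input weights, recurrent weights, and two bias vectors
        per_direction = 4 * hidden * (in_size + hidden + 2)
        total += per_direction * directions
    return total


n_vocab, embed, hidden, num_classes = 4762, 200, 128, 4  # n_vocab is an assumption
embedding_params = n_vocab * embed
lstm_params = lstm_param_count(embed, hidden, num_layers=3)
fc_params = (hidden * 2) * num_classes + num_classes  # weights + bias

print(embedding_params)  # 952400
print(lstm_params)       # 1128448
print(fc_params)         # 1028
```

Under these assumptions the embedding table and the LSTM stack are of comparable size, while the classifier head is negligible.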

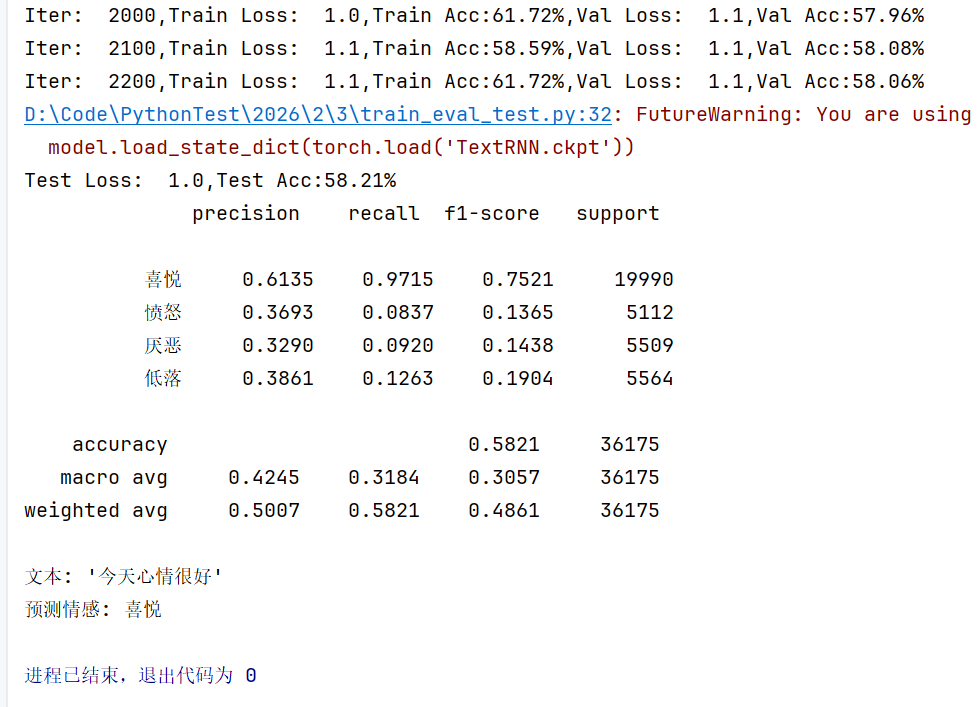

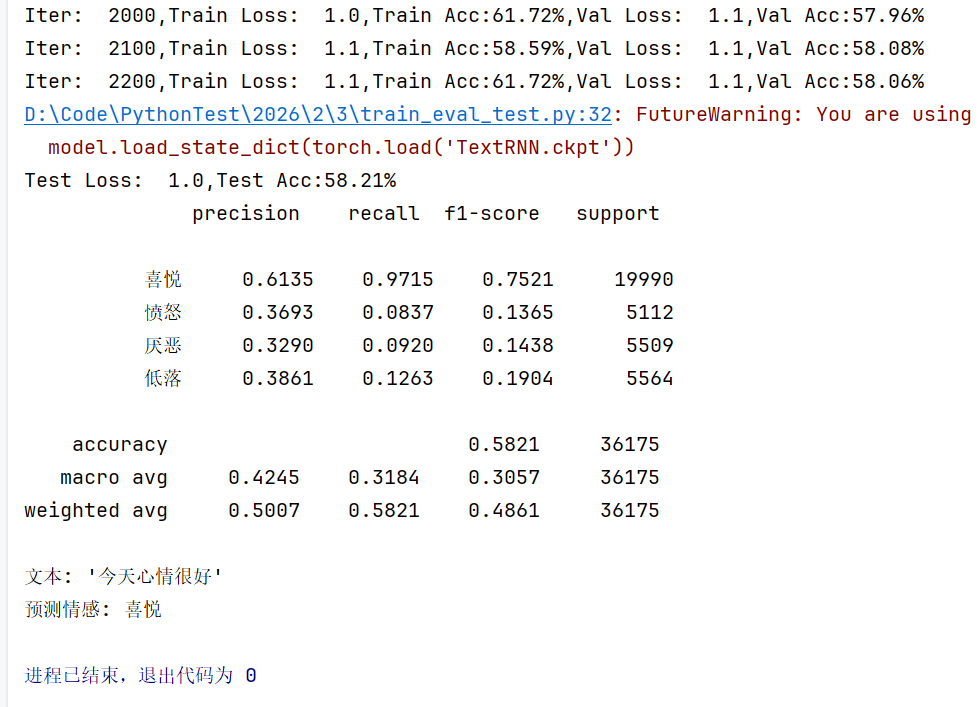

4. Training and Evaluation Module (train_eval_test.py)

```python
import torch
import torch.nn.functional as F
import numpy as np
from sklearn import metrics


def evaluate(class_list, model, data_iter, test=False):
    """Evaluate the model on data_iter."""
    model.eval()  # switch to evaluation mode
    loss_total = 0
    predict_all = np.array([], dtype=int)
    labels_all = np.array([], dtype=int)
    with torch.no_grad():  # no gradient tracking
        for texts, labels in data_iter:
            outputs = model(texts)
            loss = F.cross_entropy(outputs, labels)
            loss_total += loss.item()  # accumulate a Python float, not a tensor
            labels = labels.data.cpu().numpy()
            predic = torch.max(outputs.data, 1)[1].cpu().numpy()
            labels_all = np.append(labels_all, labels)
            predict_all = np.append(predict_all, predic)
    acc = metrics.accuracy_score(labels_all, predict_all)
    if test:
        # Produce a detailed per-class report when testing
        report = metrics.classification_report(labels_all, predict_all,
                                               target_names=class_list, digits=4)
        return acc, loss_total / len(data_iter), report
    return acc, loss_total / len(data_iter)


def test(model, test_iter, class_list):
    """Test the model using the best saved checkpoint."""
    model.load_state_dict(torch.load('TextRNN.ckpt'))  # load the best model
    model.eval()
    test_acc, test_loss, test_report = evaluate(class_list, model, test_iter, test=True)
    msg = "Test Loss:{0:>5.2},Test Acc:{1:6.2%}"
    print(msg.format(test_loss, test_acc))
    print(test_report)


def train(model, train_iter, dev_iter, test_iter, class_list):
    """Train the model."""
    model.train()
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # Adam optimizer
    total_batch = 0
    dev_best_loss = float('inf')  # best validation loss so far
    last_improve = 0  # batch index of the last improvement
    flag = False  # early-stopping flag
    # epochs = 20
    epochs = 1  # shortened for demonstration; use more epochs in practice
    for epoch in range(epochs):
        print('Epoch [{}/{}]'.format(epoch + 1, epochs))
        for i, (trains, labels) in enumerate(train_iter):
            outputs = model(trains)
            loss = F.cross_entropy(outputs, labels)  # cross-entropy loss
            # Backpropagation
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            # Evaluate on the validation set every 100 batches
            if total_batch % 100 == 0:
                predic = torch.max(outputs.data, 1)[1].cpu()
                train_acc = metrics.accuracy_score(labels.data.cpu(), predic)
                dev_acc, dev_loss = evaluate(class_list, model, dev_iter)
                # Save the best model
                if dev_loss < dev_best_loss:
                    dev_best_loss = dev_loss
                    torch.save(model.state_dict(), 'TextRNN.ckpt')
                    last_improve = total_batch
                msg = 'Iter:{0:>6},Train Loss:{1:>5.2},Train Acc:{2:>6.2%},Val Loss:{3:>5.2},Val Acc:{4:>6.2%}'
                print(msg.format(total_batch, loss.item(), train_acc, dev_loss, dev_acc))
                model.train()  # back to training mode
            total_batch += 1
            # Early stopping: stop after 10000 batches without improvement
            if total_batch - last_improve > 10000:
                print("No optimization for a long time,auto-stopping...")
                flag = True
                break
        if flag:
            break
    # Test once training has finished
    test(model, test_iter, class_list)
```

Training strategy:

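- Optimizer: Adam with a learning rate of 0.001

- Loss function: cross-entropy loss

- Evaluation frequency: the validation set is evaluated every 100 batches

- Checkpointing: the model with the lowest validation loss is saved

- Early stopping: training stops after 10000 batches without improvement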
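The checkpointing and early-stopping rules can be isolated from PyTorch in a few lines. The sketch below replays a made-up sequence of validation losses; `run_early_stopping`, the loss values, and the patience of 3 evaluations are all illustrative assumptions (the project counts batches and uses a threshold of 10000).

```python
def run_early_stopping(val_losses, patience):
    """Return (best_loss, best_step, stopped_at) for a sequence of validation losses."""
    best_loss = float('inf')
    best_step = 0
    for step, loss in enumerate(val_losses):
        if loss < best_loss:
            # A real training loop would save the model checkpoint here
            best_loss, best_step = loss, step
        elif step - best_step > patience:
            return best_loss, best_step, step  # no improvement for too long
    return best_loss, best_step, None  # ran to completion


losses = [1.20, 0.95, 0.90, 0.93, 0.97, 0.92, 0.99, 1.05]
print(run_early_stopping(losses, patience=3))  # (0.9, 2, 6)
```

The best loss is reached at step 2; four evaluations later the gap exceeds the patience, so the loop stops at step 6 and the checkpoint from step 2 is the one that would be used for testing.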

5. Main Program Module (main.py)

```python
import random

import torch
import numpy as np
import load_dataset, TextRNN
from train_eval_test import train

# Device selection: prefer CUDA, then MPS (Apple silicon), then CPU
device = "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"

# Seed the random number generators for reproducibility
random.seed(1)  # load_dataset shuffles with the random module
np.random.seed(1)
torch.manual_seed(1)
torch.cuda.manual_seed(1)
torch.backends.cudnn.deterministic = True

# Load the dataset
vocab, train_data, dev_data, test_data = load_dataset.load_dataset('simplifyweibo_4_moods.csv')

# Create the batch iterators
train_iter = load_dataset.DatasetIterator(train_data, 128, device)
dev_iter = load_dataset.DatasetIterator(dev_data, 128, device)
test_iter = load_dataset.DatasetIterator(test_data, 128, device)

# Load the pretrained embeddings
embedding_pretrained = torch.tensor(np.load('embedding_Tencent.npz')["embeddings"].astype('float32'))

# Model hyperparameters
embed = embedding_pretrained.size(1) if embedding_pretrained is not None else 200
class_list = ["喜悦", "愤怒", "厌恶", "低落"]  # joy, anger, disgust, low mood
num_classes = len(class_list)

# Create the model and move it to the device
model = TextRNN.Model(embedding_pretrained, len(vocab), embed, num_classes).to(device)

# Start training
train(model, train_iter, dev_iter, test_iter, class_list)
# print(train_data, dev_data, test_data)


def predict_single(model, text, vocab, device):
    """Predict the sentiment of a single text."""
    # Text preprocessing
    tokenizer = lambda x: [y for y in x]
    tokens = tokenizer(text)
    pad_size = 70
    seq_len = min(len(tokens), pad_size)  # actual length before padding
    if len(tokens) < pad_size:
        tokens.extend([load_dataset.PAD] * (pad_size - len(tokens)))
    else:
        tokens = tokens[:pad_size]
    # Convert to indices
    indices = [vocab.get(token, vocab.get(load_dataset.UNK)) for token in tokens]
    # Convert to tensors
    x = torch.LongTensor([indices]).to(device)
    seq_len = torch.LongTensor([seq_len]).to(device)
    # Predict
    model.eval()
    with torch.no_grad():
        outputs = model((x, seq_len))
        predicted = torch.argmax(outputs, dim=1).item()
    return class_list[predicted]


# Example usage
if __name__ == "__main__":
    # ... the original training code ...
    # Try a prediction
    test_text = "今天心情很好"  # "I'm in a great mood today"
    emotion = predict_single(model, test_text, vocab, device)
    print(f"Text: '{test_text}'")
    print(f"Predicted sentiment: {emotion}")
```

Main program flow:

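- Environment setup: select the compute device and set the random seeds

- Data preparation: load and preprocess the dataset

- Model initialization: create the TextRNN model

- Training: call the training function

- Prediction: provide single-text prediction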

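III. Data Processing Pipeline Summary

- Raw text → character-level tokenization

- Character sequence → fixed-length processing (padding/truncation)

- Characters → vocabulary indices

- Index sequence → embedding matrix (via the embedding layer)

- Embeddings → LSTM feature extraction

- LSTM output → sentiment classification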