I: Kafka Overview

1: Introduction

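Kafka is written in Scala and Java.

It is a distributed, highly reliable, high-throughput, highly available, and scalable message middleware, most commonly used for exchanging large volumes of data between distributed systems.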

2: The JMS Model

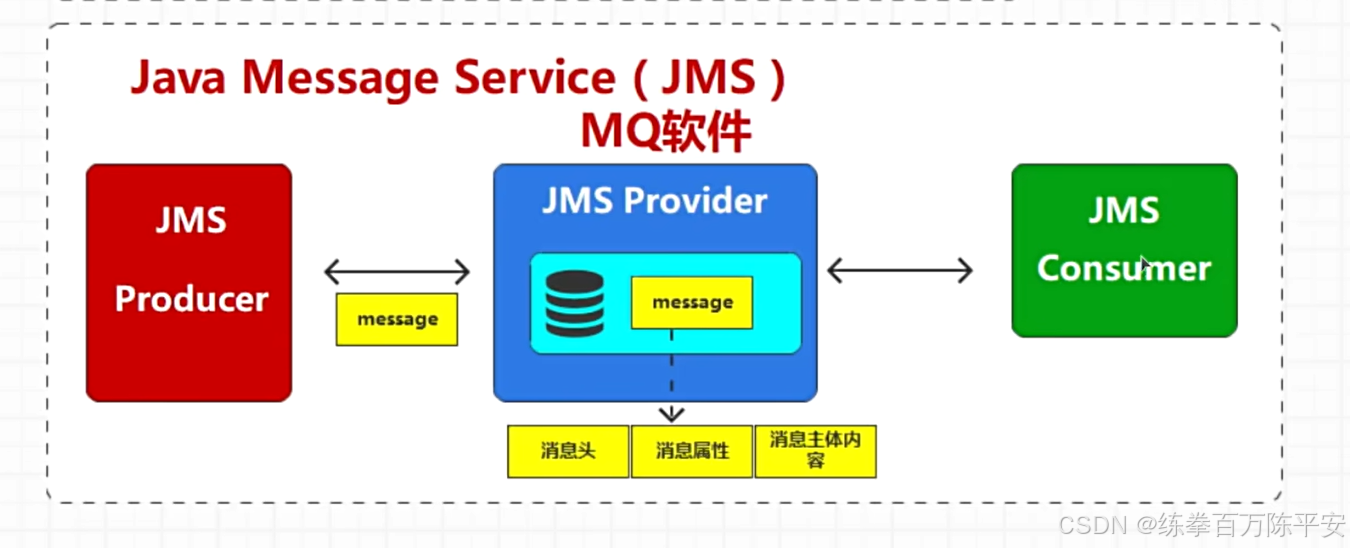

1: JMS Basics

**What JMS is:** JMS (Java Message Service) is a messaging API defined by Java; the concrete implementations are provided by vendors.

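**Where the name MQ comes from:** When Java sends data to a message broker through the JMS model, the data is held temporarily in a Queue object on the Java side, and the message middleware also stores it in a queue data structure. Hence the name MQ (Message Queue).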

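**The two ends of the JMS model:** the side that produces messages is called the producer, and the side that consumes them is called the consumer.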

**Message format:** In Java, a message is encapsulated as header + properties + body.
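To make the three-part layout concrete, here is a minimal Java sketch. `SimpleMessage` is a hypothetical class written only for illustration; it is not the `javax.jms.Message` interface, whose real API exposes headers through dedicated getters such as `getJMSMessageID()` and properties through typed methods such as `getStringProperty(String)`.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a JMS-style message: header + properties + body.
// Illustration only; this is NOT the javax.jms.Message API.
final class SimpleMessage {
    // Header: metadata the messaging system uses to identify and route the message
    private final Map<String, String> headers;
    // Properties: optional application-defined key/value pairs (e.g. for filtering)
    private final Map<String, String> properties;
    // Body: the actual payload
    private final String body;

    SimpleMessage(Map<String, String> headers, Map<String, String> properties, String body) {
        // Defensive copies so later changes to the caller's maps do not leak in
        this.headers = Collections.unmodifiableMap(new HashMap<>(headers));
        this.properties = Collections.unmodifiableMap(new HashMap<>(properties));
        this.body = body;
    }

    String header(String name) { return headers.get(name); }
    String property(String name) { return properties.get(name); }
    String body() { return body; }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        headers.put("JMSMessageID", "ID:order-1001"); // example header value
        Map<String, String> props = new HashMap<>();
        props.put("region", "east");                  // example application property
        SimpleMessage msg = new SimpleMessage(headers, props, "{\"orderId\":1001}");
        System.out.println(msg.header("JMSMessageID") + " " + msg.property("region") + " " + msg.body());
    }
}
```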

2: The Two JMS Messaging Models

The JMS specification defines two main messaging models, each suited to different business scenarios:

Point-to-point model

- Core component: Queue

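- How it works: The producer sends messages to a specific queue. Each message is consumed by exactly one consumer and is removed from the queue once consumed. When several consumers listen on the same queue, messages are distributed among them in turn (round-robin), which balances the load.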

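- Typical scenarios: order processing, task dispatching, one-to-one command delivery, and other cases where each message must be processed exactly once.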

Publish/subscribe model

- Core component: Topic

- How it works: The producer publishes a message to a topic, and every consumer subscribed to that topic receives the same message (broadcast).

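- Typical scenarios: configuration push, log broadcasting, data synchronization across multiple systems, and other one-to-many notification scenarios.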
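The difference between the two models comes down to how many consumers receive each message. The in-memory Java sketch below models consumers as simple inboxes; the class and method names are made up for illustration, and no broker or JMS provider is involved. Point-to-point hands each message to one consumer in round-robin order, while publish/subscribe copies it to every subscriber.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// In-memory sketch of the two JMS delivery models; consumers are plain lists ("inboxes").
final class DeliveryModels {

    // Point-to-point (Queue): each message goes to exactly one consumer, chosen round-robin.
    static List<List<String>> pointToPoint(List<String> messages, int consumerCount) {
        List<List<String>> inboxes = newInboxes(consumerCount);
        int next = 0;
        for (String msg : messages) {
            inboxes.get(next).add(msg);        // only this consumer receives the message
            next = (next + 1) % consumerCount; // rotate to the next consumer
        }
        return inboxes;
    }

    // Publish/subscribe (Topic): every subscriber receives every message.
    static List<List<String>> publishSubscribe(List<String> messages, int subscriberCount) {
        List<List<String>> inboxes = newInboxes(subscriberCount);
        for (String msg : messages) {
            for (List<String> inbox : inboxes) {
                inbox.add(msg);                // broadcast: one copy per subscriber
            }
        }
        return inboxes;
    }

    private static List<List<String>> newInboxes(int n) {
        List<List<String>> inboxes = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            inboxes.add(new ArrayList<>());
        }
        return inboxes;
    }

    public static void main(String[] args) {
        List<String> msgs = Arrays.asList("m1", "m2", "m3");
        System.out.println(pointToPoint(msgs, 2));     // [[m1, m3], [m2]]
        System.out.println(publishSubscribe(msgs, 2)); // [[m1, m2, m3], [m1, m2, m3]]
    }
}
```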

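RabbitMQ, ActiveMQ, and RocketMQ all follow the JMS specification directly or indirectly, whereas Kafka does not follow it at all; it only borrows the underlying ideas. Kafka's feature set is relatively simple, but its throughput is seriously impressive.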

3: Kafka Components

Server-side components

Role: the core runtime nodes of the cluster, responsible for storing and forwarding messages and for managing the cluster.

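- Broker: a Kafka server instance and the basic unit of a cluster. It receives messages, persists them, and responds to requests. Multiple brokers form a distributed cluster, which provides load balancing and horizontal scaling.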

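- Topic: a logical category of messages that keeps messages from different business scenarios apart. It is the entry point for both producing and consuming. A topic stores nothing by itself; physical storage is provided by its partitions.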

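- Partition: a physical shard of a topic and the basis of Kafka's distributed storage and parallel processing. Messages are appended to a partition, order is strictly preserved within a single partition, and partitions are spread across brokers to distribute the load.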

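- Replica: a copy of a partition, either the leader or a follower. By default the leader handles all read and write requests, followers replicate the leader's data, and if the leader fails a new leader is elected automatically, keeping data highly available and the service uninterrupted.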

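- Controller: the cluster's manager. In ZooKeeper mode one of the brokers takes on this role; it detects brokers going online and offline, assigns partition replicas, runs leader elections, and maintains cluster metadata. In newer versions (KRaft mode), a controller quorum built into Kafka takes over the cluster-management duties that ZooKeeper used to handle.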
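A rough Java model of how partitions and replicas fit together, under heavy simplifying assumptions: replication is synchronous, the replica at index 0 is always the leader, and failover simply promotes the next replica. Real Kafka tracks an in-sync replica set (ISR) and a high watermark, which this sketch leaves out entirely; `PartitionSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of one partition and its replicas (not Kafka's implementation).
// Each replica log is an append-only list; a record's list index is its offset.
// The replica at index 0 is the leader; the rest are followers.
final class PartitionSketch {
    private final List<Integer> brokerIds = new ArrayList<>();        // broker hosting each replica
    private final List<List<String>> replicaLogs = new ArrayList<>(); // one log per replica

    PartitionSketch(int... brokers) {
        for (int b : brokers) {
            brokerIds.add(b);
            replicaLogs.add(new ArrayList<>());
        }
    }

    // Producer write: append to the leader (index 0) first, then copy to each follower.
    // Returns the offset assigned to the record.
    long append(String record) {
        for (List<String> log : replicaLogs) {
            log.add(record);
        }
        return replicaLogs.get(0).size() - 1;
    }

    // Reads are served by the leader, in the order the records were appended.
    String read(long offset) {
        return replicaLogs.get(0).get((int) offset);
    }

    int leaderBroker() {
        return brokerIds.get(0);
    }

    // Simulate the leader's broker going down: drop it and promote the next replica.
    void failLeader() {
        if (brokerIds.size() < 2) {
            throw new IllegalStateException("no follower left to promote");
        }
        brokerIds.remove(0);   // remove(int index), not remove(Object)
        replicaLogs.remove(0);
    }

    public static void main(String[] args) {
        PartitionSketch p = new PartitionSketch(1, 2, 3); // replicas on brokers 1, 2, 3
        p.append("a");
        p.append("b");
        p.failLeader();                                    // broker 1 goes down
        System.out.println(p.leaderBroker() + " " + p.read(1)); // 2 b
    }
}
```

Because followers already hold every record here, the promoted leader can keep serving reads at the same offsets; in real Kafka that guarantee only holds for replicas that are in the ISR.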

Client-side components

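Role: the entry points on the application side, responsible for producing and consuming messages and interacting with the cluster.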

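- Producer: sends business messages to the Kafka cluster. It supports configurable partition routing (round-robin, key hash, or a custom partitioner), and its acknowledgment level (acks) and idempotence can be configured to make message delivery reliable.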

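- Consumer: pulls messages from a given topic/partition and processes them. It can consume on its own or as part of a group; through a consumer group, partitions are balanced across consumers, and within one group each partition is owned by exactly one consumer.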

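- Consumer Group: the unit that manages a set of consumers. Several consumers form a group and consume a topic together, spreading the work and increasing processing capacity; different groups consume the same topic independently, so the same data can serve multiple downstream systems.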
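The two client-side ideas above, keyed partition routing on the producer and exclusive partition ownership inside a consumer group, can be sketched in plain Java. Assumptions: Kafka's default partitioner hashes the serialized key with murmur2, while this sketch swaps in `String.hashCode()` for brevity, so its partition numbers will not match a real cluster's; `assignRange` is a simplified take on range-style assignment (loosely modeled on Kafka's `RangeAssignor`). `ClientSketch` and both method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of producer-side routing and consumer-group assignment.
final class ClientSketch {

    // Keyed routing: the same key always lands on the same partition, which keeps
    // per-key ordering. Kafka's default partitioner uses murmur2 on the serialized
    // key; String.hashCode() stands in here, so numbers differ from a real cluster.
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Range-style assignment inside ONE group: every partition gets exactly one owner,
    // and the first (numPartitions % consumers) consumers take one extra partition.
    static Map<String, List<Integer>> assignRange(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        int perConsumer = numPartitions / consumers.size();
        int withExtra = numPartitions % consumers.size();
        int nextPartition = 0;
        for (int i = 0; i < consumers.size(); i++) {
            int count = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                owned.add(nextPartition++);
            }
            assignment.put(consumers.get(i), owned);
        }
        return assignment;
    }

    public static void main(String[] args) {
        System.out.println(partitionFor("order-42", 3) == partitionFor("order-42", 3)); // true
        System.out.println(assignRange(Arrays.asList("c1", "c2"), 3));             // {c1=[0, 1], c2=[2]}
        System.out.println(assignRange(Arrays.asList("c1", "c2", "c3", "c4"), 3)); // {c1=[0], c2=[1], c3=[2], c4=[]}
    }
}
```

With more consumers than partitions, the extra consumers sit idle (`c4` above), so a group's useful parallelism is capped by the partition count. Each consumer group runs its own assignment, which is why two different groups each receive every partition.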

Auxiliary components

Role: supporting components that keep the cluster stable, simplify operations, and extend its capabilities.

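- ZooKeeper: the coordination service early Kafka clusters depended on. It stores cluster metadata, handles broker registration and discovery, and is used for controller election. Newer versions replace it with the built-in KRaft controller: ZooKeeper mode was deprecated in the 3.x series and removed in Kafka 4.0. The 3.7.0 setup in this article still runs in ZooKeeper mode.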

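- Kafka Connect: a data integration framework for moving data in both directions between Kafka and external systems (databases, file systems, Elasticsearch, etc.). It provides standardized connectors, so data can be synchronized without writing custom code.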

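- Kafka Streams: a lightweight stream-processing library for building real-time applications on top of Kafka. It supports filtering, aggregation, transformation, and similar operations, needs no separate processing cluster, and runs embedded in your application.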

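- Schema Registry: a registry for the structured schemas of messages (such as Avro or Protobuf), provided by Confluent rather than Apache Kafka itself. It keeps producers and consumers agreed on the message format and handles schema versioning and compatibility checks.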

II: Installing and Starting Kafka

1: Installation

Download (in `kafka_2.12-3.7.0`, 2.12 is the Scala version and 3.7.0 the Kafka version): https://archive.apache.org/dist/kafka/3.7.0/kafka_2.12-3.7.0.tgz

Extract the archive:

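tar -zxvf kafka_2.12-3.7.0.tgz

Start ZooKeeper. The log below comes from node 1 of a three-node ZooKeeper 3.4.14 ensemble, which was elected leader: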

2026-02-06 15:46:56,181 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:ZooKeeperServer@174] - Created server with tickTime 2000 minSessionTimeout 4000 maxSessionTimeout 40000 datadir /usr/local/src/zookeeper-3.4.14/log/version-2 snapdir /usr/local/src/zookeeper-3.4.14/data/version-2

2026-02-06 15:46:56,186 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:Leader@380] - LEADING - LEADER ELECTION TOOK - 231

2026-02-06 15:46:56,195 [myid:1] - INFO [LearnerHandler-/192.168.67.138:59826:LearnerHandler@346] - Follower sid: 2 : info : org.apache.zookeeper.server.quorum.QuorumPeer$QuorumServer@7d1ce49c

2026-02-06 15:46:56,201 [myid:1] - INFO [LearnerHandler-/192.168.67.138:59826:LearnerHandler@401] - Synchronizing with Follower sid: 2 maxCommittedLog=0x0 minCommittedLog=0x0 peerLastZxid=0x100000000

2026-02-06 15:46:56,201 [myid:1] - INFO [LearnerHandler-/192.168.67.138:59826:LearnerHandler@475] - Sending SNAP

2026-02-06 15:46:56,203 [myid:1] - INFO [LearnerHandler-/192.168.67.138:59826:LearnerHandler@499] - Sending snapshot last zxid of peer is 0x100000000 zxid of leader is 0x300000000sent zxid of db as 0x200000000

2026-02-06 15:46:56,206 [myid:1] - INFO [LearnerHandler-/192.168.67.138:59826:LearnerHandler@535] - Received NEWLEADER-ACK message from 2

2026-02-06 15:46:56,207 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:Leader@964] - Have quorum of supporters, sids: [ 1,2 ]; starting up and setting last processed zxid: 0x300000000

2026-02-06 15:47:23,765 [myid:1] - INFO [/192.168.67.137:3881:QuorumCnxManager$Listener@743] - Received connection request /192.168.67.139:41870

2026-02-06 15:47:23,767 [myid:1] - INFO [WorkerReceiver[myid=1]:FastLeaderElection@595] - Notification: 1 (message format version), 3 (n.leader), 0x100000000 (n.zxid), 0x1 (n.round), LOOKING (n.state), 3 (n.sid), 0x2 (n.peerEpoch) LEADING (my state)

2026-02-06 15:47:23,771 [myid:1] - INFO [WorkerReceiver[myid=1]:FastLeaderElection@595] - Notification: 1 (message format version), 1 (n.leader), 0x200000000 (n.zxid), 0x1 (n.round), LOOKING (n.state), 3 (n.sid), 0x2 (n.peerEpoch) LEADING (my state)

2026-02-06 15:47:23,791 [myid:1] - INFO [LearnerHandler-/192.168.67.139:48922:LearnerHandler@346] - Follower sid: 3 : info : org.apache.zookeeper.server.quorum.QuorumPeer$QuorumServer@26b3955d

2026-02-06 15:47:23,796 [myid:1] - INFO [LearnerHandler-/192.168.67.139:48922:LearnerHandler@401] - Synchronizing with Follower sid: 3 maxCommittedLog=0x0 minCommittedLog=0x0 peerLastZxid=0x100000000

2026-02-06 15:47:23,796 [myid:1] - INFO [LearnerHandler-/192.168.67.139:48922:LearnerHandler@475] - Sending SNAP

2026-02-06 15:47:23,796 [myid:1] - INFO [LearnerHandler-/192.168.67.139:48922:LearnerHandler@499] - Sending snapshot last zxid of peer is 0x100000000 zxid of leader is 0x300000000sent zxid of db as 0x300000000

2026-02-06 15:47:23,802 [myid:1] - INFO [LearnerHandler-/192.168.67.139:48922:LearnerHandler@535] - Received NEWLEADER-ACK message from 3

Edit Kafka's zookeeper.properties:

dataDir=/usr/local/src/kafka_2.12-3.7.0/data

clientPort=2181

maxClientCnxns=0

admin.enableServer=false

Edit Kafka's server.properties:

broker.id=1

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/usr/local/src/kafka_2.12-3.7.0/data/log

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.retention.check.interval.ms=300000

zookeeper.connect=192.168.67.137:2181,192.168.67.138:2181,192.168.67.139:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0

Start Kafka:

[root@localhost bin]# ./kafka-server-start.sh -daemon ../config/server.properties
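If the broker does not come up, a typo in server.properties is a common culprit. The hypothetical `ServerConfigCheck` below loads the file with `java.util.Properties` and applies a few basic checks chosen purely for illustration (an integer `broker.id` if one is set, a non-empty `log.dirs`, `host:port` entries in `zookeeper.connect`). It is not Kafka's own validation, which happens in `KafkaConfig` at startup.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical pre-flight check for a ZooKeeper-mode server.properties.
// The rules are illustrative sanity checks, not Kafka's real validation logic.
final class ServerConfigCheck {

    static List<String> check(Reader source) {
        Properties props = new Properties();
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> problems = new ArrayList<>();

        // broker.id: if present it must be an integer (and unique per broker);
        // if absent, ZooKeeper-mode Kafka can auto-generate one.
        String brokerId = props.getProperty("broker.id");
        if (brokerId != null && !brokerId.trim().matches("-?\\d+")) {
            problems.add("broker.id is not an integer: '" + brokerId + "'");
        }

        // log.dirs: where partition data is stored
        if (props.getProperty("log.dirs", "").trim().isEmpty()) {
            problems.add("log.dirs is not set (Kafka would fall back to log.dir, /tmp/kafka-logs by default)");
        }

        // zookeeper.connect: comma-separated host:port list, optional /chroot suffix
        String zk = props.getProperty("zookeeper.connect", "").trim();
        if (zk.isEmpty()) {
            problems.add("zookeeper.connect is not set");
        } else {
            for (String entry : zk.split(",")) {
                if (!entry.trim().matches("[^:/\\s]+:\\d+(/\\S*)?")) {
                    problems.add("bad zookeeper.connect entry: '" + entry + "'");
                }
            }
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java ServerConfigCheck ../config/server.properties
        try (Reader reader = Files.newBufferedReader(Paths.get(args[0]))) {
            List<String> problems = check(reader);
            System.out.println(problems.isEmpty() ? "no problems found" : String.join("\n", problems));
        }
    }
}
```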

2: Checking That Kafka Started

[root@localhost logs]# ps -ef | grep kafka

root 4385 1 2 16:10 pts/0 00:00:05 java -Xmx1G -Xms1G -server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -XX:MaxInlineLevel=15 -Djava.awt.headless=true -Xloggc:/usr/local/src/kafka_2.12-3.7.0/bin/../logs/kafkaServer-gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dkafka.logs.dir=/usr/local/src/kafka_2.12-3.7.0/bin/../logs -Dlog4j.configuration=file:./../config/log4j.properties -cp /usr/local/src/kafka_2.12-3.7.0/bin/../libs/activation-1.1.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/aopalliance-repackaged-2.6.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/argparse4j-0.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/audience-annotations-0.12.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/caffeine-2.9.3.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/checker-qual-3.19.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-beanutils-1.9.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-cli-1.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-collections-3.2.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-digester-2.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-io-2.11.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-lang3-3.8.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-logging-1.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/commons-validator-1.7.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-api-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-basic-auth-extension-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-json-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-mirror-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-mirror-client-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-runtime-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/connect-transforms-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/error_prone_annotations-2.10.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/hk2-api-2.6.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/hk2-locator-2.6.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/hk2-utils-2.6.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-annotations-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-core-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-databind-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-dataformat-csv-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-datatype-jdk8-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-jaxrs-base-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-jaxrs-json-provider-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-module-jaxb-annotations-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jackson-module-scala_2.12-2.16.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.activation-api-1.2.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.annotation-api-1.3.5.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.inject-2.6.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.validation-api-2.0.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.ws.rs-api-2.1.6.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jakarta.xml.bind-api-2.3.3.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/javassist-3.29.2-GA.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/javax.activation-api-1.2.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/javax.annotation-api-1.3.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/javax.servlet-api-3.1.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/javax.ws.rs-api-2.1.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jaxb-api-2.3.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-client-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-common-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-container-servlet-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-container-servlet-core-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-hk2-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jersey-server-2.39.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-client-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-continuation-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-http-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-io-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-security-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-server-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-servlet-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-servlets-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-util-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jetty-util-ajax-9.4.53.v20231009.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jline-3.22.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jopt-simple-5.0.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jose4j-0.9.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/jsr305-3.0.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka_2.12-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-clients-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-group-coordinator-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-log4j-appender-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-metadata-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-raft-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-server-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-server-common-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-shell-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-storage-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-storage-api-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-streams-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-streams-examples-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-streams-scala_2.12-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-streams-test-utils-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-tools-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/kafka-tools-api-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/lz4-java-1.8.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/maven-artifact-3.8.8.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/metrics-core-2.2.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/metrics-core-4.1.12.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-buffer-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-codec-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-common-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-handler-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-resolver-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-transport-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-transport-classes-epoll-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-transport-native-epoll-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/netty-transport-native-unix-common-4.1.100.Final.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/opentelemetry-proto-1.0.0-alpha.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/osgi-resource-locator-1.0.3.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/paranamer-2.8.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/pcollections-4.0.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/plexus-utils-3.3.1.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/protobuf-java-3.23.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/reflections-0.10.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/reload4j-1.2.25.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/rocksdbjni-7.9.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/scala-collection-compat_2.12-2.10.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/scala-java8-compat_2.12-1.0.2.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/scala-library-2.12.18.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/scala-logging_2.12-3.9.4.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/scala-reflect-2.12.18.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/slf4j-api-1.7.36.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/slf4j-reload4j-1.7.36.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/snappy-java-1.1.10.5.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/swagger-annotations-2.2.8.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/trogdor-3.7.0.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/zookeeper-3.8.3.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/zookeeper-jute-3.8.3.jar:/usr/local/src/kafka_2.12-3.7.0/bin/../libs/zstd-jni-1.5.5-6.jar kafka.Kafka ../config/server.properties

root 4502 2972 0 16:13 pts/0 00:00:00 grep --color=auto kafka
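`ps -ef | grep kafka` also matches the grep process itself (the second line above). A Java 9+ alternative, sketched below on the assumption that the broker's command line contains the main class token `kafka.Kafka` as shown in the ps output, uses `ProcessHandle` to list matching processes. `BrokerProcessFinder` is a hypothetical name, and `ProcessHandle.Info.commandLine()` can be empty for processes the current user is not allowed to inspect.

```java
import java.util.List;
import java.util.stream.Collectors;

// Java 9+ counterpart of `ps -ef | grep kafka`, matching the broker main class.
final class BrokerProcessFinder {

    // Match the exact token "kafka.Kafka" instead of the bare word "kafka", so that
    // lines such as `grep --color=auto kafka` are not reported as brokers.
    static boolean isKafkaBroker(String commandLine) {
        for (String token : commandLine.split("\\s+")) {
            if (token.equals("kafka.Kafka")) {
                return true;
            }
        }
        return false;
    }

    // PIDs of visible processes whose command line runs kafka.Kafka.
    static List<Long> findBrokerPids() {
        return ProcessHandle.allProcesses()
                .filter(p -> p.info().commandLine().map(BrokerProcessFinder::isKafkaBroker).orElse(false))
                .map(ProcessHandle::pid)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println("kafka.Kafka PIDs: " + findBrokerPids());
    }
}
```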

[root@localhost logs]# tail -f -n 100 server.log

ssl.keymanager.algorithm = SunX509

ssl.keystore.certificate.chain = null

ssl.keystore.key = null

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.principal.mapping.rules = DEFAULT

ssl.protocol = TLSv1.2

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.certificates = null

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

telemetry.max.bytes = 1048576

transaction.abort.timed.out.transaction.cleanup.interval.ms = 10000

transaction.max.timeout.ms = 900000

transaction.partition.verification.enable = true

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 1

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

unstable.api.versions.enable = false

unstable.metadata.versions.enable = false

zookeeper.clientCnxnSocket = null

zookeeper.connect = 192.168.67.137:2181,192.168.67.138:2181,192.168.67.139:2181

zookeeper.connection.timeout.ms = 18000

zookeeper.max.in.flight.requests = 10

zookeeper.metadata.migration.enable = false

zookeeper.metadata.migration.min.batch.size = 200

zookeeper.session.timeout.ms = 18000

zookeeper.set.acl = false

zookeeper.ssl.cipher.suites = null

zookeeper.ssl.client.enable = false

zookeeper.ssl.crl.enable = false

zookeeper.ssl.enabled.protocols = null

zookeeper.ssl.endpoint.identification.algorithm = HTTPS

zookeeper.ssl.keystore.location = null

zookeeper.ssl.keystore.password = null

zookeeper.ssl.keystore.type = null

zookeeper.ssl.ocsp.enable = false

zookeeper.ssl.protocol = TLSv1.2

zookeeper.ssl.truststore.location = null

zookeeper.ssl.truststore.password = null

zookeeper.ssl.truststore.type = null

(kafka.server.KafkaConfig)

[2026-02-06 16:10:04,621] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2026-02-06 16:10:04,621] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2026-02-06 16:10:04,622] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2026-02-06 16:10:04,625] INFO [ThrottledChannelReaper-ControllerMutation]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2026-02-06 16:10:04,630] INFO [KafkaServer id=0] Rewriting /usr/local/src/kafka_2.12-3.7.0/data/log/meta.properties (kafka.server.KafkaServer)

[2026-02-06 16:10:04,661] INFO Loading logs from log dirs ArrayBuffer(/usr/local/src/kafka_2.12-3.7.0/data/log) (kafka.log.LogManager)

[2026-02-06 16:10:04,664] INFO No logs found to be loaded in /usr/local/src/kafka_2.12-3.7.0/data/log (kafka.log.LogManager)

[2026-02-06 16:10:04,674] INFO Loaded 0 logs in 13ms (kafka.log.LogManager)

[2026-02-06 16:10:04,677] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2026-02-06 16:10:04,677] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2026-02-06 16:10:04,988] INFO [kafka-log-cleaner-thread-0]: Starting (kafka.log.LogCleaner$CleanerThread)

[2026-02-06 16:10:04,999] INFO [feature-zk-node-event-process-thread]: Starting (kafka.server.FinalizedFeatureChangeListener$ChangeNotificationProcessorThread)

[2026-02-06 16:10:05,005] INFO Feature ZK node at path: /feature does not exist (kafka.server.FinalizedFeatureChangeListener)

[2026-02-06 16:10:05,021] INFO [zk-broker-0-to-controller-forwarding-channel-manager]: Starting (kafka.server.NodeToControllerRequestThread)

[2026-02-06 16:10:05,313] INFO Updated connection-accept-rate max connection creation rate to 2147483647 (kafka.network.ConnectionQuotas)

[2026-02-06 16:10:05,328] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Created data-plane acceptor and processors for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)

[2026-02-06 16:10:05,332] INFO [zk-broker-0-to-controller-alter-partition-channel-manager]: Starting (kafka.server.NodeToControllerRequestThread)

[2026-02-06 16:10:05,377] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,380] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,380] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,381] INFO [ExpirationReaper-0-ElectLeader]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,381] INFO [ExpirationReaper-0-RemoteFetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,393] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2026-02-06 16:10:05,395] INFO [AddPartitionsToTxnSenderThread-0]: Starting (kafka.server.AddPartitionsToTxnManager)

[2026-02-06 16:10:05,414] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2026-02-06 16:10:05,433] INFO Stat of the created znode at /brokers/ids/0 is: 12884901913,12884901913,1770365405424,1770365405424,1,0,0,216172816148791296,202,0,12884901913 (kafka.zk.KafkaZkClient)

[2026-02-06 16:10:05,434] INFO Registered broker 0 at path /brokers/ids/0 with addresses: PLAINTEXT://localhost:9092, czxid (broker epoch): 12884901913 (kafka.zk.KafkaZkClient)

[2026-02-06 16:10:05,477] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,478] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,480] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,491] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2026-02-06 16:10:05,496] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2026-02-06 16:10:05,509] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2026-02-06 16:10:05,513] INFO Successfully created /controller_epoch with initial epoch 0 (kafka.zk.KafkaZkClient)

[2026-02-06 16:10:05,515] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2026-02-06 16:10:05,523] INFO [TxnMarkerSenderThread-0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2026-02-06 16:10:05,543] INFO Feature ZK node created at path: /feature (kafka.server.FinalizedFeatureChangeListener)

[2026-02-06 16:10:05,549] INFO [ExpirationReaper-0-AlterAcls]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2026-02-06 16:10:05,565] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2026-02-06 16:10:05,567] INFO [MetadataCache brokerId=0] Updated cache from existing None to latest Features(version=3.7-IV4, finalizedFeatures={}, finalizedFeaturesEpoch=0). (kafka.server.metadata.ZkMetadataCache)

[2026-02-06 16:10:05,580] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Enabling request processing. (kafka.network.SocketServer)

[2026-02-06 16:10:05,583] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.DataPlaneAcceptor)

[2026-02-06 16:10:05,610] INFO Kafka version: 3.7.0 (org.apache.kafka.common.utils.AppInfoParser)

[2026-02-06 16:10:05,610] INFO Kafka commitId: 2ae524ed625438c5 (org.apache.kafka.common.utils.AppInfoParser)

[2026-02-06 16:10:05,610] INFO Kafka startTimeMs: 1770365405606 (org.apache.kafka.common.utils.AppInfoParser)

[2026-02-06 16:10:05,614] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

[2026-02-06 16:10:05,742] INFO [zk-broker-0-to-controller-alter-partition-channel-manager]: Recorded new controller, from now on will use node localhost:9092 (id: 0 rack: null) (kafka.server.NodeToControllerRequestThread)

[2026-02-06 16:10:05,742] INFO [zk-broker-0-to-controller-forwarding-channel-manager]: Recorded new controller, from now on will use node localhost:9092 (id: 0 rack: null) (kafka.server.NodeToControllerRequestThread)
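The two server.log lines that best confirm a healthy start are `[KafkaServer id=N] started` and `Awaiting socket connections on 0.0.0.0:9092.`. The hypothetical `StartupCheck` below scans a log file for both patterns (the regexes are written against the lines shown above and may need adjusting for other versions) and then tries a plain TCP connection to the reported port. A successful connect only proves something is listening; it does not speak the Kafka protocol.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;
import java.util.OptionalInt;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical startup check based on the server.log lines shown above.
final class StartupCheck {
    // e.g. "[KafkaServer id=0] started (kafka.server.KafkaServer)"
    private static final Pattern STARTED = Pattern.compile("\\[KafkaServer id=(\\d+)\\] started");
    // e.g. "Awaiting socket connections on 0.0.0.0:9092."
    private static final Pattern LISTENING = Pattern.compile("Awaiting socket connections on [^:\\s]+:(\\d+)");

    // First number captured by the pattern across the log lines, if any.
    private static OptionalInt firstMatch(Pattern pattern, List<String> lines) {
        for (String line : lines) {
            Matcher m = pattern.matcher(line);
            if (m.find()) {
                return OptionalInt.of(Integer.parseInt(m.group(1)));
            }
        }
        return OptionalInt.empty();
    }

    static OptionalInt startedBrokerId(List<String> lines) { return firstMatch(STARTED, lines); }

    static OptionalInt listeningPort(List<String> lines) { return firstMatch(LISTENING, lines); }

    // Plain TCP connect with a timeout; true if the connection is accepted.
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java StartupCheck /usr/local/src/kafka_2.12-3.7.0/logs/server.log
        List<String> lines = Files.readAllLines(Paths.get(args[0]));
        OptionalInt id = startedBrokerId(lines);
        OptionalInt port = listeningPort(lines);
        System.out.println("started: " + (id.isPresent() ? "broker " + id.getAsInt() : "not yet"));
        if (port.isPresent()) {
            System.out.println("port " + port.getAsInt() + " reachable: "
                    + isListening("localhost", port.getAsInt(), 2000));
        }
    }
}
```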