业务痛点:某区域电网公司(覆盖10个地市,负荷峰值500万千瓦)存在三大问题:

- 预测精度低:传统ARIMA模型预测误差大(MAPE=18%),导致备用容量冗余(年浪费电费2000万元)或供电不足(限电损失年超1500万元)

- 特征利用不足:未充分融合气象(温度/湿度)、节假日、新能源(风电/光伏)出力等动态特征,极端天气下预测偏差超30%

- 动态响应差:负荷模式随季节/产业调整变化快(如夏季空调负荷突增),模型迭代周期长(季度级),无法实时适应

算法团队:时序数据清洗(Spark)、特征工程(滑动窗口/多源特征融合)、LSTM模型构建(PyTorch)、模型训练与评估(MAE/RMSE/MAPE)、模型存储(MinIO)

交付清单:

- 模型文件:model/lstm_best.pth(PyTorch模型)、model/scaler.pkl(特征缩放器)

- 特征数据:feature_path(滑动窗口特征矩阵.npy)、label_path(标签序列.npy)

- 工具:数据清洗脚本(load_cleaning.py)、特征生成脚本(feature_generator.py)

- 文档:《LSTM参数调优报告》《特征工程手册》《评估指标说明》(MAE/RMSE/MAPE定义)

算法性能:

- 测试集MAPE≤8%,RMSE≤5万千瓦,预测响应时间≤5分钟(单次预测)

业务团队:API网关、负荷预测服务(Python/FastAPI)、调度系统集成(Java/Spring Boot)、监控告警;

交付清单:

- 系统:负荷预测API(Python/FastAPI)、调度系统(Java/Spring Boot)、调度终端(Vue前端)

- API文档:《负荷预测API调用手册》(含请求示例:最近72小时288个时间点×8特征)

- 运维文档:《K8s部署手册》《模型热更新流程》

业务价值:备用容量优化年降本2000万元,限电损失减少年1500万元,新能源消纳率提升10%

电网运营团队:通过调度终端查看预测曲线,应急指挥系统基于预测结果制定切负荷策略

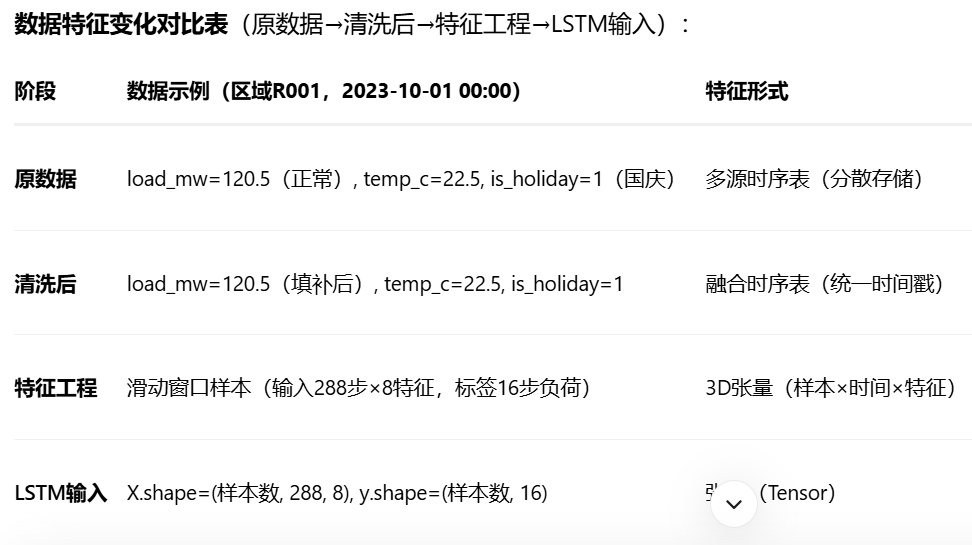

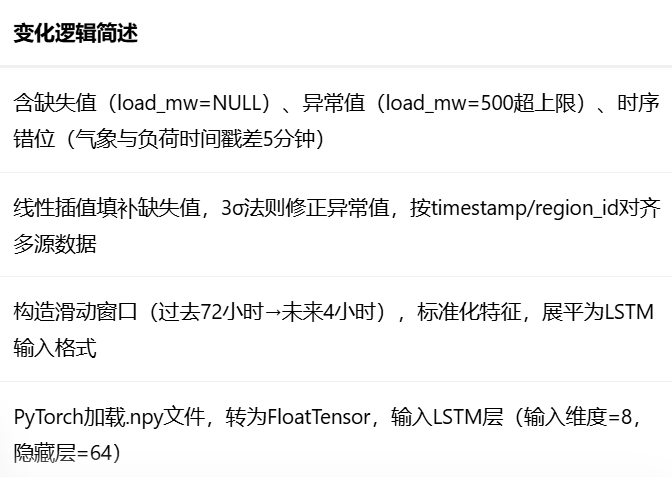

数据准备与特征变化

(1)原数据结构(多源时序数据,来自电网系统)

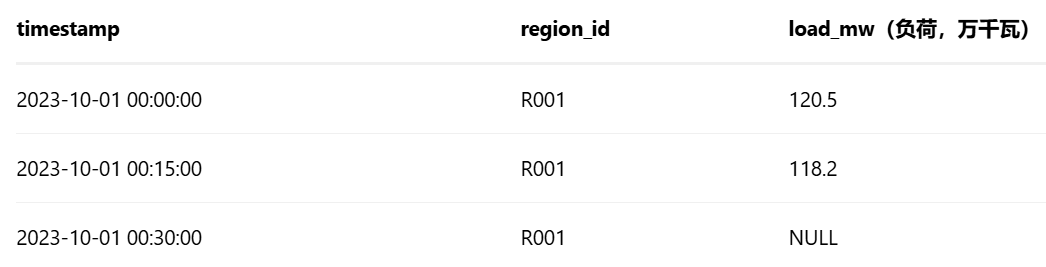

原始数据包括历史负荷、气象、日期、新能源出力,存储于数据湖(MinIO),含缺失值(传感器故障)、异常值(仪表误差)、时序错位等问题

① 历史负荷数据(historical_load,Parquet格式)

② 气象数据(weather_data,CSV格式)

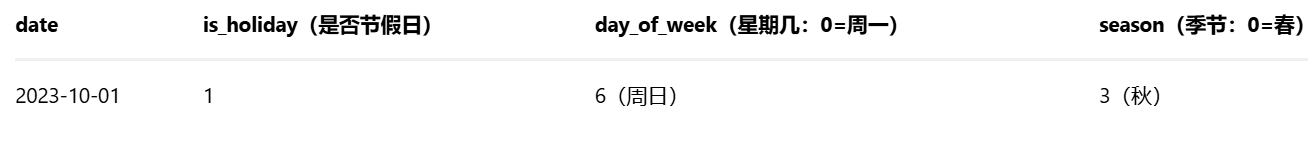

③ 日期特征(date_features,SQL生成)

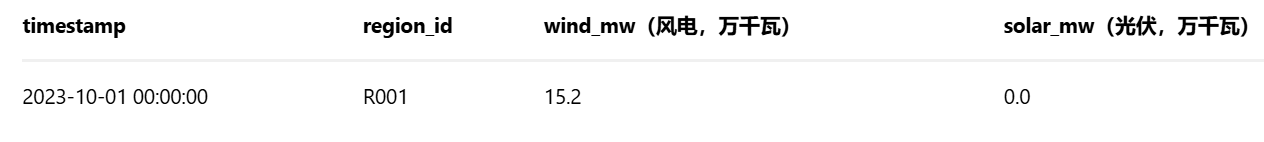

④ 新能源出力(renewable_output,Parquet格式)

(2)数据清洗(详细代码,算法团队负责)

目标:处理缺失值(线性插值)、异常值(3σ法则)、时序对齐(统一时间戳粒度)

代码文件:data_processing/load_cleaning.py(Python)

python

import pandas as pd

import numpy as np

from scipy.interpolate import interpld

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

def clean_load_data(raw_load_path:str,output_path:str)->pd.DataFrame:

"""清洗历史负荷数据:缺失值插值、异常值修正、时序对齐"""

# 1.读取原始数据

df = pd.read_parquet(raw_load_path)

df["timestamp"] = pd.to_datetime(df["timestamp"])

df.set_index("timestamp",inplace=True)

logger.info(f"原始负荷数据量:{len(df)}条,缺失值占比:{df['load_mw'].isnull().mean():.2%}")

# 2.缺失值处理(线性插值,优先用同期历史数据填补)

# 按region_id分组处理

cleaned_dfs = []

for region_id in df["region_id"].unique():

region_df = df[df["region_id"]==region_id].copy()

# 线性插值填补短期缺失(≤4个时间点)

region_df["load_mw"] = region_df["load_mw"].interpolate(method="",limit=4)

# 长期缺失(>4个时间点):用同期上周数据填补

mask = region_df["load_mw"].isnull()

if mask.any():

region_df.loc[mask, "load_mw"] = region_df.shift(672)[mask] # 672=7天×96个15分钟点

logger.warning(f"区域{region_id}仍有{mask.sum()}条缺失值,用上周同期填补")

cleaned_dfs.append(region_df)

cleaned_df = pd.concat(cleaned_dfs).reset_index()

# 3.异常值处理(3σ法则:偏离均值3倍标准差视为异常)

mean_load = cleaned_df.groupby("region_id")["load_mw"].mean()

std_load = cleaned_df.groupby("region_id")["load_mw"].std()

cleaned_Df["is_outlier"] = False

for region_id in cleaned_df["region_id"].unique():

region_mask = cleaned_df["region_id"] == region_id

upper_bound = mean_load[region_id] + 3 * std_load[region_id]

lower_bound = mean_load[region_id] - 3 * std_load[region_id]

outlier_mask = region_mask & ((cleaned_df["load_mw"] > upper_bound) | (cleaned_df["load_mw"] < lower_bound))

cleaned_df.loc[outlier_mask,"load_mw"] = np.nan # 先标记为缺失

cleaned_df.loc[outlier_mask,"is_outlier"] = True

# 异常值用插值填补

cleaned_df.loc[region_mask,"load_mw"] = cleaned_df.loc[region_mask, "load_mw"].interpolate()

# 4.保存清洗后数据

cleaned_df.to_parquet(output_path,index=False)

logger.info(f"清洗后负荷数据量:{len(cleaned_df)}条,保存至{output_path}")

return cleaned_df

if __name__ == "__main__":

raw_load_path = "s3://grid-data-lake/raw/historical_load.parquet"

output_path = "s3://grid-data-lake/cleaned/load_cleaned.parquet"

clean_load_data(raw_load_path, output_path)

(3)特征工程与特征数据生成(明确feature_path/label_path)

将清洗后数据转换为LSTM可输入的时序特征矩阵(feature_path)和标签序列(label_path),核心是构造滑动窗口样本(输入历史特征→预测未来负荷)

- 时序特征:历史负荷(过去24小时96个点,即t-96到t-1)、负荷变化率(差分)

- 外部特征:气象(当前时刻温度/湿度)、日期(是否节假日、星期几、季节)、新能源出力(风电/光伏当前出力)

- 滑动窗口构造:用过去72小时(288个点)的特征预测未来4小时(16个点),窗口大小=288(输入步长),预测步长=16

- 特征缩放:对所有数值特征(负荷、温度、出力)做标准化

- 特征数据存储:feature_path指向滑动窗口特征矩阵(Parquet格式,含输入特征和标签),label_path指向标签序列(未来4小时负荷,用于评估)

代码文件:feature_engineering/feature_generator.py(特征生成)、feature_engineering/generate_feature_data.py(明确feature_path/label_path)

① 滑动窗口特征构造(feature_generator.py)

python

import pandas as pd

import numpy as np

from sklearn.preprocessing import StandardScaler

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

def create_sliding_window(df: pd.DataFrame, input_steps: int = 288, output_steps: int = 16, target_col: str = "load_mw")-> tuple:

"""构造滑动窗口样本:输入(input_steps个特征)→标签(output_steps个目标值)"""

"""

:param df: 清洗后数据(含timestamp, region_id, load_mw, temp_c, is_holiday等)

:param input_steps: 输入步长(过去72小时=288个15分钟点)

:param output_steps: 预测步长(未来4小时=16个15分钟点)

:param target_col: 目标列(负荷load_mw)

:return: X(特征矩阵,shape=(样本数, input_steps, 特征数)), y(标签矩阵,shape=(样本数, output_steps))

"""

features = []

labels = []

regions = df["region_id"].unique()

for region_id in regions:

region_df = df[df["region_id"] == region_id].sort_values("timestamp").reset_index(drop=True)

values = region_df.drop(columns=["timestamp", "region_id"]).values # 特征矩阵(行:时间点,列:特征)

timestamps = region_df["timestamp"].values

# 遍历所有可能窗口(从第input_steps个点开始,到最后output_steps个点结束)

for i in range(input_steps, len(values) - output_steps):

# 输入特征:过去input_steps个时间点的所有特征(负荷、温度、节假日等)

X_window = values[i-input_steps:i, :] # shape=(input_steps, 特征数)

# 标签:未来output_steps个时间点的目标负荷

y_window = values[i:i+output_steps, region_df.columns.get_loc(target_col)] # shape=(output_steps,)

features.append(X_window)

labels.append(y_window)

# 转换为numpy数组

X = np.array(features, dtype=np.float32) # shape=(样本数, input_steps, 特征数)

y = np.array(labels, dtype=np.float32) # shape=(样本数, output_steps)

logger.info(f"滑动窗口构造完成:样本数={len(X)},输入形状={X.shape},标签形状={y.shape}")

return X, y

def scale_features(X_train: np.ndarray, X_val: np.ndarray, X_test: np.ndarray) -> tuple:

"""特征标准化(按训练集均值/标准差缩放)"""

scaler = StandardScaler()

# 合并训练集所有特征(展平为2D:(样本数×input_steps, 特征数))

train_features_flat = X_train.reshape(-1, X_train.shape[-1])

scaler.fit(train_features_flat)

# 分别缩放训练/验证/测试集

X_train_scaled = scaler.transform(train_features_flat).reshape(X_train.shape)

X_val_scaled = scaler.transform(X_val.reshape(-1, X_val.shape[-1])).reshape(X_val.shape)

X_test_scaled = scaler.transform(X_test.reshape(-1, X_test.shape[-1])).reshape(X_test.shape)

logger.info("特征标准化完成(基于训练集均值/标准差)")

return X_train_scaled, X_val_scaled, X_test_scaled, scaler

② 特征数据生成(generate_feature_data.py,明确feature_path/label_path)

python

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from feature_generator import create_sliding_window, scale_features

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

def generate_feature_data(cleaned_load_path: str, weather_path: str, date_path: str, renewable_path: str) -> tuple:

"""生成LSTM特征数据(feature_path)和标签(label_path)"""

# 1. 加载多源数据并融合

load_df = pd.read_parquet(cleaned_load_path)

weather_df = pd.read_csv(weather_path).merge(load_df[["timestamp", "region_id"]], on=["timestamp", "region_id"])

date_df = pd.read_csv(date_path).merge(load_df[["timestamp", "region_id"]], left_on="date", right_on="timestamp")

renewable_df = pd.read_parquet(renewable_path).merge(load_df[["timestamp", "region_id"]], on=["timestamp", "region_id"])

# 融合所有特征(按timestamp和region_id对齐)

merged_df = load_df.merge(weather_df, on=["timestamp", "region_id"], how="left") \

.merge(date_df, on=["timestamp", "region_id"], how="left") \

.merge(renewable_df, on=["timestamp", "region_id"], how="left")

# 2. 构造滑动窗口样本(输入288步→预测16步)

X, y = create_sliding_window(merged_df, input_steps=288, output_steps=16, target_col="load_mw")

# 3. 划分数据集(train:val:test=7:2:1,按时间顺序划分,避免未来数据泄露)

split_idx1 = int(len(X) * 0.7)

split_idx2 = int(len(X) * 0.9)

X_train, X_val, X_test = X[:split_idx1], X[split_idx1:split_idx2], X[split_idx2:]

y_train, y_val, y_test = y[:split_idx1], y[split_idx1:split_idx2], y[split_idx2:]

# 4. 特征标准化

X_train_scaled, X_val_scaled, X_test_scaled, scaler = scale_features(X_train, X_val, X_test)

# 5. 定义存储路径(算法团队数据湖位置)

feature_path = {

"train": "s3://grid-data-lake/processed/lstm_features_train.npy",

"val": "s3://grid-data-lake/processed/lstm_features_val.npy",

"test": "s3://grid-data-lake/processed/lstm_features_test.npy"

}

label_path = {

"train": "s3://grid-data-lake/processed/lstm_labels_train.npy",

"val": "s3://grid-data-lake/processed/lstm_labels_val.npy",

"test": "s3://grid-data-lake/processed/lstm_labels_test.npy"

}

# 6. 保存数据

np.save(feature_path["train"], X_train_scaled)

np.save(feature_path["val"], X_val_scaled)

np.save(feature_path["test"], X_test_scaled)

np.save(label_path["train"], y_train)

np.save(label_path["val"], y_val)

np.save(label_path["test"], y_test)

logger.info(f"""

【算法团队特征数据存储说明】

- feature_path: {feature_path}

存储内容:滑动窗口特征矩阵(.npy格式),shape=(样本数, input_steps=288, 特征数=8),特征包括:

load_mw(历史负荷)、temp_c(温度)、humidity(湿度)、wind_speed(风速)、is_holiday(节假日)、day_of_week(星期几)、season(季节)、wind_mw(风电)、solar_mw(光伏)

示例(train特征矩阵前1个样本):\n{X_train_scaled[0, :5, :3]}(前5个时间点,前3个特征)

- label_path: {label_path}

存储内容:标签序列(未来16步负荷,.npy格式),shape=(样本数, output_steps=16)

示例(train标签前1个样本):\n{y_train[0]}(未来4小时16个15分钟点负荷)

""")

return feature_path, label_path, X_train_scaled, y_train, X_val_scaled, y_val, X_test_scaled, y_test

代码结构

算法团队仓库(algorithm-load-forecasting,Python)

text

algorithm-load-forecasting/

├── data_processing/ # 数据清洗(缺失值/异常值处理)

│ ├── load_cleaning.py # 负荷数据清洗(含详细注释)

│ ├── weather_cleaning.py # 气象数据清洗

│ └── requirements.txt # 依赖:pandas, numpy, scipy

├── feature_engineering/ # 特征工程(滑动窗口/标准化)

│ ├── feature_generator.py # 滑动窗口构造+特征缩放

│ ├── generate_feature_data.py # 特征数据生成(含feature_path/label_path说明)

│ └── requirements.txt # 依赖:scikit-learn

├── model_training/ # LSTM模型训练(核心)

│ ├── lstm_model.py # LSTM模型定义(PyTorch,含门控机制注释)

│ ├── train_lstm.py # 训练入口(损失函数/优化器/早停)

│ ├── evaluate_model.py # 评估(MAE/RMSE/MAPE计算)

│ └── lstm_params.yaml # 调参记录(hidden_size=64, lr=0.001)

├── model_storage/ # 模型存储(MinIO)

│ ├── save_model.py # 保存模型(.h5/.pt)

│ └── load_model.py # 加载模型(推理用)

└── mlflow_tracking/ # MLflow实验跟踪

└── run_lstm_experiment.py # 记录超参数/指标/模型(1)算法团队:LSTM模型定义(model_training/lstm_model.py,含原理注释)

LSTM层结构(输入门/遗忘门/输出门)、多特征输入处理、预测步长扩展(Seq2Seq思想)

python

import torch

import torch.nn as nn

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

class LSTMForecaster(nn.Module):

"""LSTM电力负荷预测模型(Seq2Seq结构:编码器-解码器)"""

def __init__(self, input_size: int, hidden_size: int, output_steps: int, num_layers: int = 2):

"""

:param input_size: 输入特征数(8:负荷、温度、湿度等)

:param hidden_size: LSTM隐藏层神经元数(64)

:param output_steps: 预测步长(16:未来4小时16个点)

:param num_layers: LSTM层数(2层堆叠)

"""

super(LSTMForecaster, self).__init__()

self.hidden_size = hidden_size

self.num_layers = num_layers

self.output_steps = output_steps

# LSTM编码器(输入历史时序特征)

self.lstm_encoder = nn.LSTM(

input_size=input_size,

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True, # 输入形状(batch_size, seq_len, input_size)

dropout=0.2 # 层间 dropout 防止过拟合

)

# LSTM解码器(输出未来负荷序列)

self.lstm_decoder = nn.LSTM(

input_size=hidden_size, # 解码器输入为编码器输出的隐藏状态

hidden_size=hidden_size,

num_layers=num_layers,

batch_first=True,

dropout=0.2

)

# 全连接层(将解码器输出映射为预测负荷)

self.fc = nn.Linear(hidden_size, 1) # 输出1个值(负荷)

def forward(self, x: torch.Tensor) -> torch.Tensor:

"""前向传播:输入历史特征→输出未来16步负荷"""

# x.shape=(batch_size, input_steps=288, input_size=8)

# 1. 编码器:处理输入序列,输出最后一个时间步的隐藏状态和细胞状态

encoder_out, (hidden, cell) = self.lstm_encoder(x) # encoder_out.shape=(batch_size, 288, hidden_size)

# 2. 解码器:用编码器输出的隐藏状态初始化,逐步预测未来负荷

# 解码器输入:初始用编码器最后一个时间步的输出(重复output_steps次)

decoder_input = encoder_out[:, -1:, :].repeat(1, self.output_steps, 1) # shape=(batch_size, 16, hidden_size)

decoder_out, _ = self.lstm_decoder(decoder_input, (hidden, cell)) # decoder_out.shape=(batch_size, 16, hidden_size)

# 3. 全连接层:输出预测负荷(16步)

output = self.fc(decoder_out) # shape=(batch_size, 16, 1)

return output.squeeze(-1) # 移除最后一维,shape=(batch_size, 16)

# 示例:模型初始化

def init_lstm_model(input_size=8, hidden_size=64, output_steps=16, num_layers=2):

model = LSTMForecaster(input_size, hidden_size, output_steps, num_layers)

logger.info(f"LSTM模型初始化完成:input_size={input_size}, hidden_size={hidden_size}, output_steps={output_steps}")

return model(2)算法团队:模型训练与评估(model_training/train_lstm.py+evaluate_model.py)

- Epoch:整个训练集遍历1次(如100个epoch表示模型看过100遍所有样本)

- Batch Size:每次训练输入的样本数(如32表示每次用32个样本计算梯度更新参数)

- Loss Function:损失函数(MSE均方误差,衡量预测值与真实值的平方差,回归任务常用)

- Optimizer:优化器(Adam,自适应学习率,加速收敛)

- Early Stopping:早停(验证集误差不再下降时停止训练,防止过拟合)

python

# model_training/train_lstm.py(训练循环)

import torch

import torch.nn as nn

from torch.utils.data import DataLoader, TensorDataset

import numpy as np

from lstm_model import init_lstm_model

import mlflow

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

def train_lstm(

X_train: np.ndarray, y_train: np.ndarray,

X_val: np.ndarray, y_val: np.ndarray,

hidden_size: int = 64, lr: float = 0.001,

batch_size: int = 32, epochs: int = 100, patience: int = 10

) -> nn.Module:

"""训练LSTM模型"""

# 1. 设备配置(GPU优先)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

logger.info(f"使用设备:{device}")

# 2. 转换为PyTorch张量

X_train_tensor = torch.tensor(X_train, dtype=torch.float32).to(device) # shape=(样本数, 288, 8)

y_train_tensor = torch.tensor(y_train, dtype=torch.float32).to(device) # shape=(样本数, 16)

X_val_tensor = torch.tensor(X_val, dtype=torch.float32).to(device)

y_val_tensor = torch.tensor(y_val, dtype=torch.float32).to(device)

# 3. 创建数据集和数据加载器

train_dataset = TensorDataset(X_train_tensor, y_train_tensor)

val_dataset = TensorDataset(X_val_tensor, y_val_tensor)

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False)

# 4. 初始化模型、损失函数、优化器

model = init_lstm_model(input_size=X_train.shape[-1], hidden_size=hidden_size, output_steps=y_train.shape[-1])

model.to(device)

criterion = nn.MSELoss() # 均方误差损失(回归任务)

optimizer = torch.optim.Adam(model.parameters(), lr=lr) # Adam优化器

scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.5, patience=5) # 学习率衰减

# 5. 训练循环(含早停)

best_val_loss = float("inf")

early_stop_counter = 0

for epoch in range(epochs):

# 训练模式

model.train()

train_loss = 0.0

for batch_X, batch_y in train_loader:

optimizer.zero_grad() # 清零梯度

outputs = model(batch_X) # 前向传播

loss = criterion(outputs, batch_y) # 计算损失

loss.backward() # 反向传播

optimizer.step() # 更新参数

train_loss += loss.item() * batch_X.size(0)

train_loss /= len(train_dataset)

# 验证模式

model.eval()

val_loss = 0.0

with torch.no_grad():

for batch_X, batch_y in val_loader:

outputs = model(batch_X)

loss = criterion(outputs, batch_y)

val_loss += loss.item() * batch_X.size(0)

val_loss /= len(val_dataset)

# 记录MLflow实验

with mlflow.start_run(run_name=f"lstm_epoch_{epoch}"):

mlflow.log_metric("train_loss", train_loss, step=epoch)

mlflow.log_metric("val_loss", val_loss, step=epoch)

mlflow.log_param("hidden_size", hidden_size)

mlflow.log_param("lr", lr)

# 早停判断

if val_loss < best_val_loss:

best_val_loss = val_loss

torch.save(model.state_dict(), "model/lstm_best.pth") # 保存最佳模型

early_stop_counter = 0

logger.info(f"Epoch {epoch+1}: 验证损失下降至{best_val_loss:.4f},保存模型")

else:

early_stop_counter += 1

if early_stop_counter >= patience:

logger.info(f"早停触发({patience}轮验证损失未下降),停止训练")

break

logger.info(f"Epoch {epoch+1}/{epochs}, Train Loss: {train_loss:.4f}, Val Loss: {val_loss:.4f}")

scheduler.step(val_loss) # 更新学习率

logger.info(f"训练完成,最佳验证损失:{best_val_loss:.4f}")

model.load_state_dict(torch.load("model/lstm_best.pth")) # 加载最佳模型

return model评估代码(model_training/evaluate_model.py,含MAE/RMSE/MAPE计算):

python

import numpy as np

import torch

from sklearn.metrics import mean_absolute_error, mean_squared_error

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

def evaluate_model(model: torch.nn.Module, X_test: np.ndarray, y_test: np.ndarray, device: torch.device) -> dict:

"""评估模型性能(MAE/RMSE/MAPE)"""

model.eval()

X_test_tensor = torch.tensor(X_test, dtype=torch.float32).to(device)

y_test_tensor = torch.tensor(y_test, dtype=torch.float32).to(device)

with torch.no_grad():

y_pred = model(X_test_tensor).cpu().numpy() # 预测值(shape=(样本数, 16))

y_true = y_test_tensor.cpu().numpy() # 真实值

# 计算指标(对所有样本、所有预测步长的平均值)

mae = mean_absolute_error(y_true.flatten(), y_pred.flatten())

rmse = np.sqrt(mean_squared_error(y_true.flatten(), y_pred.flatten()))

mape = np.mean(np.abs((y_true - y_pred) / y_true)) * 100 # 百分比

metrics = {"MAE": mae, "RMSE": rmse, "MAPE": mape}

logger.info(f"模型评估结果:MAE={mae:.2f}万千瓦,RMSE={rmse:.2f}万千瓦,MAPE={mape:.2f}%")

return metrics业务团队仓库(business-load-forecasting,Java+Python)

text

business-load-forecasting/

├── api_gateway/ # API网关(Kong配置)

├── load_forecasting_service/ # 负荷预测服务(Python/FastAPI)

│ ├── main.py # FastAPI服务(调用LSTM模型)

│ ├── model_loader.py # 加载MinIO模型(.pt→PyTorch模型)

│ └── Dockerfile # 容器化配置

├── scheduling_system/ # 调度系统(Java/Spring Boot)

│ ├── backend/ # 发电计划/备用容量计算

│ ├── frontend/ # Vue前端(调度终端可视化)

│ └── sql/ # PostgreSQL表结构(预测结果/历史负荷)

├── monitoring/ # 监控告警(Prometheus+Grafana)

└── deployment/ # K8s部署配置(服务/Ingress)load_forecasting_service/main.py(Python API服务)

python

from fastapi import FastAPI, HTTPException

import numpy as np

import torch

from lstm_model import init_lstm_model

from model_loader import load_model_from_minio

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

app = FastAPI(title="电网负荷预测API")

# 全局变量:加载模型和scaler

model = None

scaler = None

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

@app.on_event("startup")

def load_resources():

"""启动时加载模型和特征缩放器"""

global model, scaler

model = load_model_from_minio("s3://grid-models/lstm_best.pth") # 从MinIO加载模型

model.to(device)

model.eval()

# 加载训练时的特征缩放器(实际用joblib加载保存的scaler.pkl)

logger.info("模型和缩放器加载完成")

@app.post("/predict")

def predict_load(request: dict):

"""预测接口:输入历史特征→输出未来4小时负荷"""

try:

# 1. 解析请求(包含region_id、最近72小时特征)

region_id = request["region_id"]

recent_features = np.array(request["recent_features"], dtype=np.float32) # shape=(288, 8)

# 2. 特征标准化(用训练时的scaler)

scaled_features = scaler.transform(recent_features.reshape(1, -1)).reshape(1, 288, 8)

# 3. 模型推理

with torch.no_grad():

input_tensor = torch.tensor(scaled_features, dtype=torch.float32).to(device)

prediction = model(input_tensor).cpu().numpy()[0] # shape=(16,)

# 4. 返回预测结果(未来16个15分钟点负荷)

return {

"region_id": region_id,

"prediction": prediction.tolist(),

"timestamp": request["timestamp"] # 预测起始时间

}

except Exception as e:

logger.error(f"预测失败:{str(e)}")

raise HTTPException(status_code=500, detail=str(e))

if __name__ == "__main__":

import uvicorn

uvicorn.run(app, host="0.0.0.0", port=8080)部署后应用流程

Step 1:实时数据采集与特征构造

- SCADA系统每15分钟采集一次负荷数据,气象/新能源系统实时同步特征,通过Kafka传输至数据湖,构造最近72小时288个时间点的特征矩阵(8特征/点)

Step 2:调用预测API生成未来负荷

- 调度系统每15分钟调用预测API(POST /predict),传入区域ID和最近72小时特征,API返回未来4小时16个点的负荷预测值

Step 3:调度决策与备用容量优化

- 调度终端可视化预测曲线(ECharts),对比实际负荷;调度系统根据预测值调整发电计划(如预测负荷突增时提前启动备用机组),优化备用容量(减少冗余)

Step 4:监控与迭代

- 监控系统跟踪MAPE,若连续3天MAPE>8%,触发Airflow调度算法团队重训模型(用最新7天数据),周级更新模型