I: Environment Planning

1: Official site

2: Environment plan

Pod CIDR: 10.244.0.0/16

Service CIDR: 10.10.0.0/16
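Before initializing a cluster it can help to confirm that the planned ranges do not collide with each other or with the node network. The following is a minimal sketch in POSIX shell, not part of the original setup: the helper names `ip_to_int` and `cidr_overlap` are hypothetical, and the node network 192.168.67.0/24 is an assumption inferred from the node IPs in the table below.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The function body is a subshell, so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Exit status 0 when two CIDR blocks share at least one address.
# Two blocks overlap exactly when their network bits agree under the
# shorter of the two prefix lengths.
cidr_overlap() (
  a_len=${1#*/}
  b_len=${2#*/}
  if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
  shift_bits=$(( 32 - len ))
  a_net=$(( $(ip_to_int "${1%/*}") >> shift_bits ))
  b_net=$(( $(ip_to_int "${2%/*}") >> shift_bits ))
  [ "$a_net" -eq "$b_net" ]
)

# Planned ranges from this section; the /24 node network is an assumption.
pod_cidr=10.244.0.0/16
svc_cidr=10.10.0.0/16
node_cidr=192.168.67.0/24

for pair in "$pod_cidr $svc_cidr" "$pod_cidr $node_cidr" "$svc_cidr $node_cidr"; do
  set -- $pair
  if cidr_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2"
  fi
done
```

The Service listing later in this document shows ClusterIPs such as 10.96.0.1, which fall outside 10.10.0.0/16, so it may be worth confirming the range actually in use from the `--service-cluster-ip-range` flag of kube-apiserver.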

|-------|----------------|-----------|----------------------------------------------|--------------------|

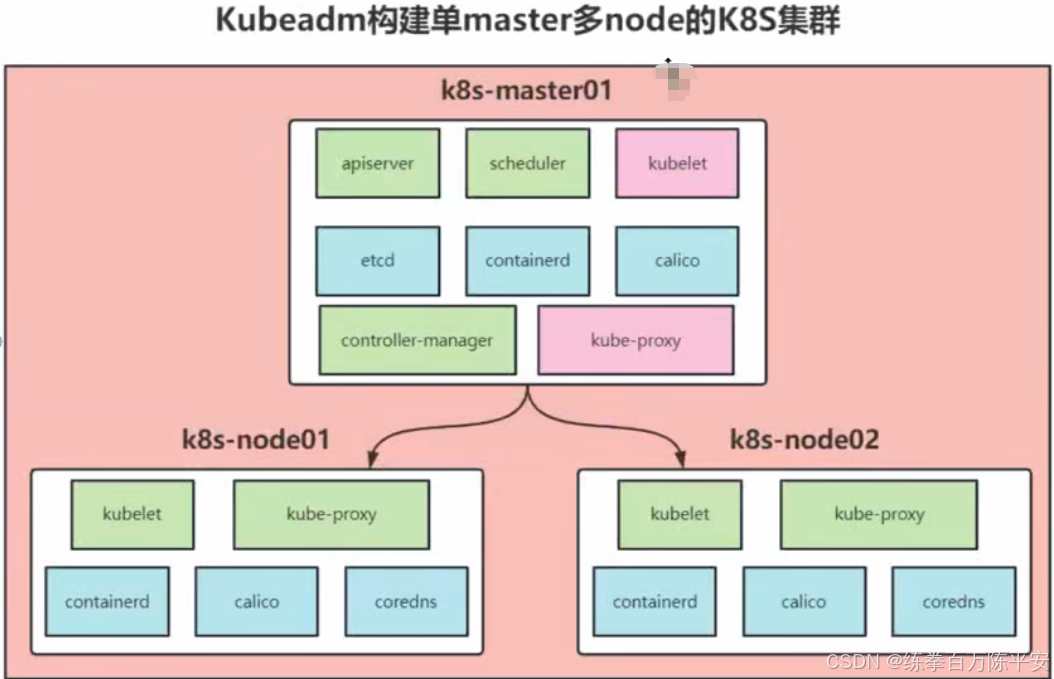

| 角色 | IP地址 | 主机名 | 组件 | 硬件 |

| 控制 节点 | 192.168.67.128 | k8s-node1 | apiserver controller-manager etcd containerd | cpu:4 内存4G 硬盘:20GB |

| 工作 节点 | 192.168.67.129 | k8s-node2 | kubelet kube-proxy containerd calico coredns | cpu:4 内存4G 硬盘:20GB |

| 工作 节点 | 192.168.67.130 | k8s-node3 | kubelet kube-proxy containerd calico网络插件 | cpu:4 内存4G 硬盘:20GB |

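The container runtime in this setup is containerd, so containerd is installed on every node.

apiserver, controller-manager, and etcd also run as containers, or more precisely as static Pods. Because they run as containers, the node must have a container runtime such as containerd or Docker.

CoreDNS is deployed as part of cluster initialization, so it does not need to be installed separately.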

3: Checking the current Kubernetes version

[root@k8s-node1 ~]# kubectl version

WARNING: This version information is deprecated and will be replaced with the output from kubectl version --short. Use --output=yaml|json to get the full version.

Client Version: version.Info{Major:"1", Minor:"26", GitVersion:"v1.26.0", GitCommit:"b46a3f887ca979b1a5d14fd39cb1af43e7e5d12d", GitTreeState:"clean", BuildDate:"2022-12-08T19:58:30Z", GoVersion:"go1.19.4", Compiler:"gc", Platform:"linux/amd64"}

Kustomize Version: v4.5.7

Server Version: version.Info{Major:"1", Minor:"26", GitVersion:"v1.26.15", GitCommit:"1649f592f1909b97aa3c2a0a8f968a3fd05a7b8b", GitTreeState:"clean", BuildDate:"2024-03-14T00:54:27Z", GoVersion:"go1.21.8", Compiler:"gc", Platform:"linux/amd64"}

4: Listing the images required to initialize the cluster

[root@k8s-node1 ~]# kubeadm config images list

I0217 08:56:57.034180 10672 version.go:256] remote version is much newer: v1.35.1; falling back to: stable-1.26

registry.k8s.io/kube-apiserver:v1.26.15

registry.k8s.io/kube-controller-manager:v1.26.15

registry.k8s.io/kube-scheduler:v1.26.15

registry.k8s.io/kube-proxy:v1.26.15

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

registry.k8s.io/coredns/coredns:v1.9.3

5: Listing the cluster nodes

[root@k8s-node1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready control-plane 153d v1.26.0

k8s-node2 Ready <none> 153d v1.26.0

k8s-node3 Ready <none> 153d v1.26.0

k8s-node4 Ready <none> 22d v1.26.0

[root@k8s-node1 ~]#

II: Installing the Calico Network Component

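Calico acts as both the "network manager" and the "firewall" of a Kubernetes cluster.

What does Calico actually do? (plain-language version)

Think of a Kubernetes cluster as a residential complex: each Node is a building, each Pod is a household, and the network is the roads between them.

Calico does three core things:

1: Pod networking (the basics)

It assigns an IP address to every Pod so that Pods can reach one another, reach external networks, and be reached from outside. This is the job of a CNI network plugin, the same role that Flannel fills.

2: Routing for high-traffic services

Calico can forward Pod traffic with native Linux routing instead of an overlay tunnel, which lowers overhead and makes it a good fit for production workloads where many microservices talk to each other. Roughly speaking, Flannel is "simple and works" while Calico is "production-grade and high-performance".

3: Network policy

This is Calico's main advantage and something Flannel does not provide. Think of it as a fine-grained firewall inside Kubernetes.

Typical rules: allow only service A to access service B; block external access to database Pods; isolate namespaces from one another; permit traffic only on specific ports. Production environments generally rely on such policies for security.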
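The "only service A may access service B" rule can be sketched as a standard `networking.k8s.io/v1` NetworkPolicy. The shell function below only prints the manifest; the function name `render_allow_policy`, the `app` label key, and the namespace, service names, and port in the usage line are all hypothetical and would need to match the real workloads.

```shell
# Print a NetworkPolicy that allows ingress to Pods labelled app=<server>
# only from Pods labelled app=<client>, on a single TCP port.
# Arguments: namespace, client label value, server label value, port.
render_allow_policy() (
  ns=$1
  client=$2
  server=$3
  port=$4
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-${client}-to-${server}
  namespace: ${ns}
spec:
  podSelector:
    matchLabels:
      app: ${server}
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: ${client}
      ports:
        - protocol: TCP
          port: ${port}
EOF
)

# Usage (hypothetical names), piping the manifest straight into kubectl:
#   render_allow_policy demo service-a service-b 8080 | kubectl apply -f -
```

Because the policy selects the server Pods and lists `Ingress` in `policyTypes`, any ingress traffic to them that is not matched by the `from` clause is dropped once the policy is applied.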

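1: Install Helm

2: Install Calico

Download: https://github.com/projectcalico/calico/releases

Next, create the release:

## Deploy with Helm: install a release named calico from the downloaded chart package (.tgz)

## Install it into the kube-system namespace

## Create the namespace if it does not exist.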

[root@k8s-node1 src]# helm install calico tigera-operator-v3.25.1.tgz -n kube-system --create-namespace

NAME: calico

LAST DEPLOYED: Tue Feb 17 09:16:48 2026

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

DESCRIPTION: Install complete

TEST SUITE: None

[root@k8s-node1 src]#

Next, check whether the installation succeeded. Calico also runs as Pods, so list the Pods:

[root@k8s-node1 src]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-system calico-kube-controllers-6bb86c78b4-kkx6k 1/1 Running 0 2m49s

calico-system calico-node-hzwwz 0/1 Init:1/2 0 2m49s

calico-system calico-node-k99h5 0/1 Running 0 2m49s

calico-system calico-node-p5t4m 0/1 Running 0 2m49s

calico-system calico-node-rdcv9 1/1 Running 0 2m49s

calico-system calico-typha-6878d54cb-b4hf8 1/1 Running 0 2m40s

calico-system calico-typha-6878d54cb-szrnd 1/1 Running 0 2m49s

calico-system csi-node-driver-6x4dh 0/2 ContainerCreating 0 2m49s

calico-system csi-node-driver-fvsr5 2/2 Running 0 2m49s

calico-system csi-node-driver-lzx47 2/2 Running 0 2m49s

calico-system csi-node-driver-rhb85 2/2 Running 0 2m49s

ems nginx-59dd548446-2mzl8 1/1 Running 3 (46m ago) 20d

ingress-nginx ingress-nginx-admission-create-lpm7z 0/1 Completed 0 20d

ingress-nginx ingress-nginx-admission-patch-49mfg 0/1 Completed 0 20d

ingress-nginx ingress-nginx-controller-c954cf69-spssp 1/1 Running 3 (46m ago) 20d

kube-system coredns-5bbd96d687-5q7vt 1/1 Running 21 (46m ago) 153d

kube-system coredns-5bbd96d687-fj78w 1/1 Running 21 (46m ago) 153d

kube-system etcd-k8s-node1 1/1 Running 21 (47m ago) 153d

kube-system kube-apiserver-k8s-node1 1/1 Running 21 (47m ago) 153d

kube-system kube-controller-manager-k8s-node1 1/1 Running 22 (47m ago) 153d

kube-system kube-proxy-cgn9t 1/1 Running 20 (46m ago) 153d

kube-system kube-proxy-jxkfh 1/1 Running 21 (47m ago) 153d

kube-system kube-proxy-qdp9p 1/1 Running 21 (46m ago) 153d

kube-system kube-proxy-vhhhs 1/1 Running 8 (46m ago) 22d

kube-system kube-scheduler-k8s-node1 1/1 Running 22 (47m ago) 153d

kube-system metrics-server-65cf7984d5-cz7hr 1/1 Running 6 (45m ago) 20d

kube-system tigera-operator-5d6845b496-rdbg2 1/1 Running 0 13m

springboot springboot-b4f4d649d-hqjf8 1/1 Running 3 (46m ago) 20d

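[root@k8s-node1 src]#

| Pod name | Status | Meaning |
|---|---|---|
| calico-kube-controllers | 1/1 Running | Calico control-plane component (core); started normally ✅ |
| calico-typha | 1/1 Running | Calico's lightweight datastore aggregation component; started normally ✅ |
| tigera-operator | 1/1 Running | Calico operator (drives the deployment); started normally ✅ |
| calico-node (some) | 0/1 Init:1/2 or Running | Calico node agent (one per node), still initializing network rules and routes (normal) ⏳ |
| csi-node-driver (some) | 0/2 ContainerCreating | Calico CSI driver (storage-related, not core); does not affect networking ⏳ |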
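Rather than reading the listing by eye, the Pods that are still starting can be filtered out of the `kubectl get pods` output. This is a small sketch; the function name `not_ready_pods` is hypothetical, and it assumes the default column layout (NAMESPACE, NAME, READY, STATUS, ...) produced with `-A --no-headers`.

```shell
# Read `kubectl get pods -A --no-headers` output on stdin and print
# "namespace/name" for every Pod whose READY count is incomplete,
# ignoring Pods of finished Jobs (STATUS "Completed").
not_ready_pods() {
  awk '{
    split($3, ready, "/")
    if ($4 != "Completed" && ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Usage on the cluster:
#   kubectl get pods -A --no-headers | not_ready_pods
#
# kubectl can also block until the Calico Pods are ready:
#   kubectl wait --for=condition=Ready pod --all -n calico-system --timeout=300s
```

An empty result means every Pod is either fully ready or a completed Job Pod.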

[root@k8s-node1 src]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-apiserver calico-apiserver-86dd9bc6ff-bjn6s 1/1 Running 0 106s

calico-apiserver calico-apiserver-86dd9bc6ff-wpp2k 1/1 Running 0 106s

calico-system calico-kube-controllers-6bb86c78b4-kkx6k 1/1 Running 0 12m

calico-system calico-node-hzwwz 1/1 Running 0 12m

calico-system calico-node-k99h5 1/1 Running 0 12m

calico-system calico-node-p5t4m 1/1 Running 0 12m

calico-system calico-node-rdcv9 1/1 Running 0 12m

calico-system calico-typha-6878d54cb-b4hf8 1/1 Running 0 12m

calico-system calico-typha-6878d54cb-szrnd 1/1 Running 0 12m

calico-system csi-node-driver-6x4dh 2/2 Running 0 12m

calico-system csi-node-driver-fvsr5 2/2 Running 0 12m

calico-system csi-node-driver-lzx47 2/2 Running 0 12m

calico-system csi-node-driver-rhb85 2/2 Running 0 12m

ems nginx-59dd548446-2mzl8 1/1 Running 3 (55m ago) 20d

ingress-nginx ingress-nginx-admission-create-lpm7z 0/1 Completed 0 20d

ingress-nginx ingress-nginx-admission-patch-49mfg 0/1 Completed 0 20d

ingress-nginx ingress-nginx-controller-c954cf69-spssp 1/1 Running 3 (55m ago) 20d

kube-system coredns-5bbd96d687-5q7vt 1/1 Running 21 (55m ago) 153d

kube-system coredns-5bbd96d687-fj78w 1/1 Running 21 (55m ago) 153d

kube-system etcd-k8s-node1 1/1 Running 21 (56m ago) 153d

kube-system kube-apiserver-k8s-node1 1/1 Running 21 (56m ago) 153d

kube-system kube-controller-manager-k8s-node1 1/1 Running 22 (56m ago) 153d

kube-system kube-proxy-cgn9t 1/1 Running 20 (55m ago) 153d

kube-system kube-proxy-jxkfh 1/1 Running 21 (56m ago) 153d

kube-system kube-proxy-qdp9p 1/1 Running 21 (55m ago) 153d

kube-system kube-proxy-vhhhs 1/1 Running 8 (55m ago) 22d

kube-system kube-scheduler-k8s-node1 1/1 Running 22 (56m ago) 153d

kube-system metrics-server-65cf7984d5-cz7hr 1/1 Running 6 (55m ago) 20d

kube-system tigera-operator-5d6845b496-rdbg2 1/1 Running 0 22m

springboot springboot-b4f4d649d-hqjf8 1/1 Running 3 (55m ago) 20d

[root@k8s-node1 src]# ^C

[root@k8s-node1 src]# kubectl get service -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-apiserver calico-api ClusterIP 10.97.17.252 <none> 443/TCP 2m1s

calico-system calico-kube-controllers-metrics ClusterIP None <none> 9094/TCP 10m

calico-system calico-typha ClusterIP 10.103.233.88 <none> 5473/TCP 12m

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 153d

ems springboot ClusterIP 10.107.255.163 <none> 8080/TCP 20d

ingress-nginx ingress-nginx-controller NodePort 10.99.221.247 <none> 80:31452/TCP,443:32503/TCP 20d

ingress-nginx ingress-nginx-controller-admission ClusterIP 10.107.148.119 <none> 443/TCP 20d

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 153d

kube-system metrics-server ClusterIP 10.105.9.69 <none> 443/TCP 23d

springboot springboot ClusterIP 10.100.96.205 <none> 8080/TCP 20d

[root@k8s-node1 src]#

3: Testing whether Pods in the cluster can reach the internet

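The test Pod is started directly from the control-plane node with:

kubectl run busybox --image busybox:latest --restart=Never --rm -it -- sh

Before running it, first adjust the CoreDNS configuration so that it forwards to public resolvers: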

[root@k8s-node1 src]# kubectl edit configmap coredns -n kube-system

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf 223.5.5.5 114.114.114.114 {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2025-09-17T12:30:05Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "531125"
  uid: 0270a814-035c-4387-a337-b6c829414c0c

Then restart CoreDNS so that it picks up the new configuration.

[root@k8s-node1 src]# # Restart the CoreDNS Deployment (rolling restart, does not disrupt the cluster)

[root@k8s-node1 src]# kubectl rollout restart deployment coredns -n kube-system

deployment.apps/coredns restarted

Check the result of the rolling update:

[root@k8s-node1 src]# kubectl get pods -n kube-system | grep coredns

coredns-5bbd96d687-5q7vt 0/1 Terminating 21 (68m ago) 153d

coredns-5bbd96d687-fj78w 0/1 Terminating 21 (68m ago) 153d

coredns-75944bc9b-ngbjq 1/1 Running 0 2m22s

coredns-75944bc9b-zxrsd 1/1 Running 0 2m22s
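Once the new CoreDNS Pods are Running, the upstream resolvers that are actually configured can be read back from the ConfigMap. A minimal sketch follows; the function name `corefile_upstreams` is hypothetical, and it assumes the `forward` directive sits on a single line, as it does in the Corefile above.

```shell
# Read a Corefile on stdin and print each upstream listed on the
# `forward` line (everything after the zone, excluding the opening brace).
corefile_upstreams() {
  awk '$1 == "forward" {
    for (i = 3; i <= NF; i++) if ($i != "{") print $i
  }'
}

# Usage on the cluster:
#   kubectl get configmap coredns -n kube-system \
#     -o jsonpath='{.data.Corefile}' | corefile_upstreams
```

For the configuration in this section the expected entries are /etc/resolv.conf, 223.5.5.5, and 114.114.114.114.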

[root@k8s-node1 src]# kubectl run busybox --image busybox:latest --restart=Never --rm -it -- sh

If you don't see a command prompt, try pressing enter.

/ # ping www.baidu.com

PING www.baidu.com (39.156.70.46): 56 data bytes

64 bytes from 39.156.70.46: seq=0 ttl=127 time=97.762 ms

64 bytes from 39.156.70.46: seq=1 ttl=127 time=96.999 ms

64 bytes from 39.156.70.46: seq=2 ttl=127 time=16.253 ms

64 bytes from 39.156.70.46: seq=3 ttl=127 time=16.218 ms

64 bytes from 39.156.70.46: seq=4 ttl=127 time=16.429 ms

64 bytes from 39.156.70.46: seq=5 ttl=127 time=20.864 ms

64 bytes from 39.156.70.46: seq=6 ttl=127 time=16.557 ms

64 bytes from 39.156.70.46: seq=7 ttl=127 time=16.955 ms

64 bytes from 39.156.70.46: seq=8 ttl=127 time=20.499 ms

64 bytes from 39.156.70.46: seq=9 ttl=127 time=16.888 ms

64 bytes from 39.156.70.46: seq=10 ttl=127 time=17.619 ms

64 bytes from 39.156.70.46: seq=11 ttl=127 time=17.656 ms

^C

--- www.baidu.com ping statistics ---

12 packets transmitted, 12 packets received, 0% packet loss

round-trip min/avg/max = 16.218/30.891/97.762 ms

/ #

4: Deploying a Tomcat cluster

List the existing namespaces:

[root@k8s-node1 src]# kubectl get namespace

NAME STATUS AGE

calico-apiserver Active 54m

calico-system Active 64m

default Active 153d

ems Active 22d

ingress-nginx Active 20d

kube-node-lease Active 153d

kube-public Active 153d

kube-system Active 153d

springboot Active 20d

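[root@k8s-node1 src]#

Inspecting the containers

If running nerdctl -n k8s.io ps -a fails with "nerdctl: command not found", the nerdctl tool is simply not installed on the node. nerdctl is the command-line client of the containerd project; it works almost the same way as the docker command and is used to manage the containers and images held by containerd.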

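# Step 1: install the tools needed for downloading and unpacking

yum install -y wget tar

# Step 2: download the nerdctl binary package (this link is for x86_64; use a different link for other architectures)

wget https://github.com/containerd/nerdctl/releases/download/v1.7.6/nerdctl-1.7.6-linux-amd64.tar.gz -O /tmp/nerdctl.tar.gz

# Step 3: extract the nerdctl binary into /usr/local/bin (on the system PATH, so it is available globally)

tar -zxvf /tmp/nerdctl.tar.gz -C /usr/local/bin nerdctl

# Step 4: verify the installation

nerdctl version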
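The download step above hard-codes the amd64 link. As a sketch of how the link could be chosen per architecture, the helpers below map the output of `uname -m` to the suffix used in nerdctl release file names. The function names `nerdctl_arch` and `nerdctl_url` are hypothetical, and only x86_64 and 64-bit ARM are handled here.

```shell
# Map a `uname -m` value to the architecture suffix used in
# nerdctl release file names.
nerdctl_arch() {
  case "$1" in
    x86_64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Build the release download URL for a nerdctl version and machine type.
# The function body is a subshell, so its variables stay local.
nerdctl_url() (
  version=$1
  arch=$(nerdctl_arch "$2") || exit 1
  echo "https://github.com/containerd/nerdctl/releases/download/v${version}/nerdctl-${version}-linux-${arch}.tar.gz"
)

# Usage on a node:
#   wget "$(nerdctl_url 1.7.6 "$(uname -m)")" -O /tmp/nerdctl.tar.gz
```

Keeping the version as a parameter makes it straightforward to move to a newer release without editing the URL by hand.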

[root@k8s-node1 src]# nerdctl -n k8s.io ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05b6f0ef589c registry.aliyuncs.com/google_containers/kube-proxy:v1.26.15 "/usr/local/bin/kube..." 2 hours ago Up k8s://kube-system/kube-proxy-jxkfh/kube-proxy

07e496d3852f registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 hours ago Up k8s://kube-system/kube-controller-manager-k8s-node1

0dfddc01a3f4 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 hours ago Up k8s://kube-system/kube-apiserver-k8s-node1

47a0b4a0b113 docker.io/calico/pod2daemon-flexvol:v3.25.1 "/usr/local/bin/flex..." About an hour ago Created k8s://calico-system/calico-node-rdcv9/flexvol-driver

4d14dc938718 registry.aliyuncs.com/google_containers/etcd:3.5.6-0 "etcd --advertise-cl..." 3 days ago Created k8s://kube-system/etcd-k8s-node1/etcd

51d3797f4059 registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.15 "kube-controller-man..." 3 days ago Created k8s://kube-system/kube-controller-manager-k8s-node1/kube-controller-manager

5fc61b563c16 docker.io/calico/node-driver-registrar:v3.25.1 "/usr/local/bin/node..." About an hour ago Up k8s://calico-system/csi-node-driver-rhb85/csi-node-driver-registrar

63da77bd58c0 registry.aliyuncs.com/google_containers/kube-proxy:v1.26.15 "/usr/local/bin/kube..." 3 days ago Created k8s://kube-system/kube-proxy-jxkfh/kube-proxy

655b7cc0781d registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.15 "kube-apiserver --ad..." 3 days ago Created k8s://kube-system/kube-apiserver-k8s-node1/kube-apiserver

785ebd399790 registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.15 "kube-scheduler --au..." 2 hours ago Up k8s://kube-system/kube-scheduler-k8s-node1/kube-scheduler

81f5442a64e9 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Created k8s://kube-system/kube-proxy-jxkfh

83492b46e7c0 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Created k8s://kube-system/kube-apiserver-k8s-node1

8e84882bc9bf registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 hours ago Up k8s://kube-system/etcd-k8s-node1

94de64f1ec0e registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 hours ago Up k8s://kube-system/kube-scheduler-k8s-node1

9b732da44df4 registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.15 "kube-apiserver --ad..." 2 hours ago Up k8s://kube-system/kube-apiserver-k8s-node1/kube-apiserver

9bc9b3a27683 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Created k8s://kube-system/kube-controller-manager-k8s-node1

a3f5d96abd41 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Created k8s://kube-system/etcd-k8s-node1

a69da6b7f63c registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.15 "kube-scheduler --au..." 3 days ago Created k8s://kube-system/kube-scheduler-k8s-node1/kube-scheduler

a7e5a323cbae registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Created k8s://kube-system/kube-scheduler-k8s-node1

a82fb6a9d6c3 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 hours ago Up k8s://kube-system/kube-proxy-jxkfh

ac446d7d5edf registry.aliyuncs.com/google_containers/etcd:3.5.6-0 "etcd --advertise-cl..." 2 hours ago Up k8s://kube-system/etcd-k8s-node1/etcd

b0459176ca63 registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.15 "kube-controller-man..." 2 hours ago Up k8s://kube-system/kube-controller-manager-k8s-node1/kube-controller-manager

ced2cb4bb4ea registry.aliyuncs.com/google_containers/pause:3.2 "/pause" About an hour ago Up k8s://calico-system/csi-node-driver-rhb85

eb825d153e8a docker.io/calico/node:v3.25.1 "start_runit" About an hour ago Up k8s://calico-system/calico-node-rdcv9/calico-node

f11a821cf277 docker.io/calico/csi:v3.25.1 "/usr/local/bin/csi-..." About an hour ago Up k8s://calico-system/csi-node-driver-rhb85/calico-csi

f998a08f1c95 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" About an hour ago Up k8s://calico-system/calico-node-rdcv9

f9e5c04329ca docker.io/calico/cni:v3.25.1 "/opt/cni/bin/install" About an hour ago Created k8s://calico-system/calico-node-rdcv9/install-cni

[root@k8s-node1 src]#