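Convolutional Neural Networks

- I. Convolutional Neural Networks

- 1. Import packages

- 2. Load the data and inspect it

- 3. Define u, s, and p

- 4. Convolutional layer

- 5. Print the tensor shape after convolution

- 6. Print the tensor shape after max pooling

- 7. Print the tensor shape after average pooling

- 8. Print the tensor shape after global average pooling

- 9. Load the training and test datasets

- 10. Preprocess the data

- 11. Print the shape of each array

- 12. Add a new axis so that each dataset goes from a 3-D array to a 4-D array

- 13. Extract features with convolutional and pooling layers, then classify with fully connected layers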

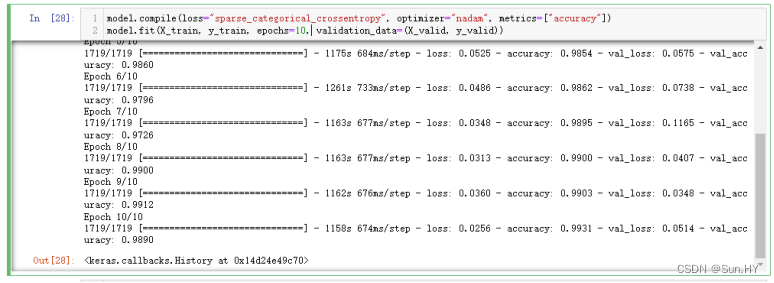

- 14. Compile the model → choose the loss function, optimizer, and metric → train the model → evaluate its performance after training

- 15. Check the accuracy

- 16. Call model_cnn_mnist.summary() to get a detailed overview of the model

- II. Building complex neural networks with the functional API and the subclassing API

I. Convolutional Neural Networks

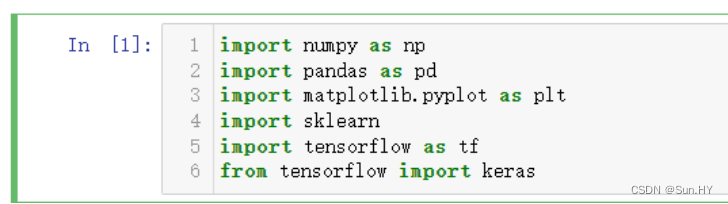

1. Import packages:

- Import all the libraries used in the rest of the walkthrough.

python

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import sklearn
import tensorflow as tf
from tensorflow import keras

Output:

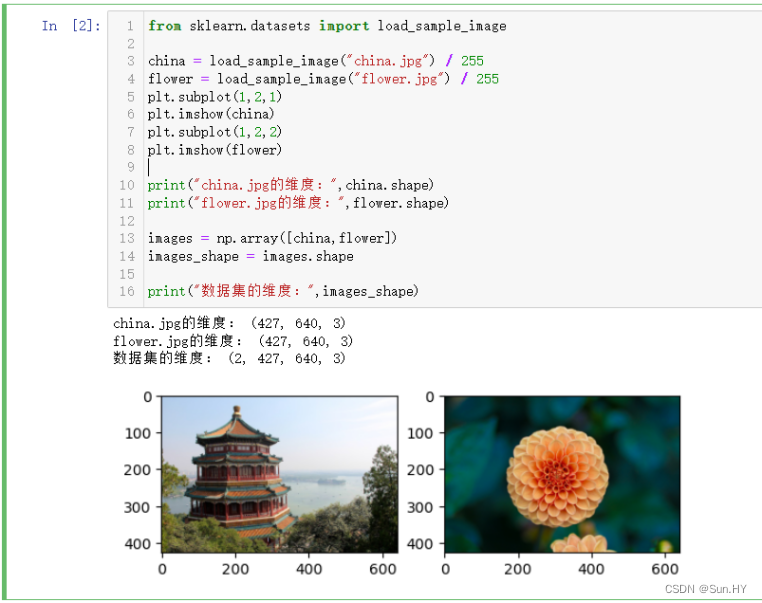

2. Load the data and inspect it:

python

from sklearn.datasets import load_sample_image
china = load_sample_image("china.jpg") / 255  # scale pixel values from [0, 255] to [0, 1]
flower = load_sample_image("flower.jpg") / 255
plt.subplot(1, 2, 1)
plt.imshow(china)
plt.subplot(1, 2, 2)
plt.imshow(flower)
print("Shape of china.jpg:", china.shape)
print("Shape of flower.jpg:", flower.shape)
images = np.array([china, flower])  # stack both images into one batch: (batch, height, width, channels)
images_shape = images.shape
print("Shape of the dataset:", images_shape)

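3. Define u, s, and p:

- u: side length of the convolution kernel

- s: stride

- p: number of output feature maps

python

u = 7  # kernel side length
s = 1  # stride
p = 5  # number of output feature maps

4. Convolutional layer: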

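- The convolutional layer takes image data whose per-image shape is images_shape[1:] (height, width, channels), applies p kernels of size u × u with stride s and a ReLU activation, and uses "SAME" padding so that, with s = 1, the output feature maps keep the spatial dimensions of the input.

python

conv = keras.layers.Conv2D(filters=p, kernel_size=u, strides=s,
                           padding="SAME", activation="relu",
                           input_shape=images_shape[1:])  # input_shape must not include the batch dimension

5. Print the tensor shape after convolution: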

python

image_after_conv = conv(images)
print("Tensor shape after convolution:", image_after_conv.shape)

Output:

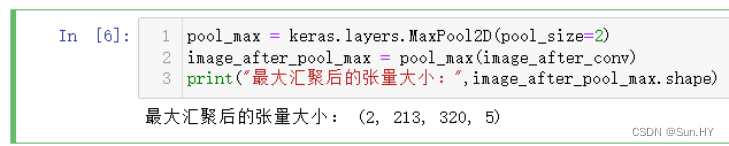
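The printed shape can be checked by hand. The following is a minimal sketch, assuming the usual TensorFlow padding rules (output size ceil(n / s) for "SAME" and floor((n - u) / s) + 1 for "VALID") and assuming a 427 × 640 input image. The helper name `conv_output_size` is hypothetical and is not part of Keras.

```python
import math


def conv_output_size(n, u, s, padding):
    """Output size along one spatial axis (hypothetical helper, not a Keras API)."""
    if padding == "SAME":
        return math.ceil(n / s)
    if padding == "VALID":
        return (n - u) // s + 1
    raise ValueError(f"unknown padding: {padding!r}")


u, s, p = 7, 1, 5          # kernel side length, stride, number of feature maps
height, width = 427, 640   # assumed input size

same_shape = (conv_output_size(height, u, s, "SAME"),
              conv_output_size(width, u, s, "SAME"), p)
valid_shape = (conv_output_size(height, u, s, "VALID"),
               conv_output_size(width, u, s, "VALID"), p)

print("SAME :", same_shape)
print("VALID:", valid_shape)
```

With s = 1, "SAME" padding preserves height and width, while "VALID" padding shrinks each axis by u - 1 pixels. In both cases the last dimension equals p, the number of filters.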

6. Print the tensor shape after max pooling:

python

pool_max = keras.layers.MaxPool2D(pool_size=2)
image_after_pool_max = pool_max(image_after_conv)
print("Tensor shape after max pooling:", image_after_pool_max.shape)

7. Print the tensor shape after average pooling:

python

pool_avg = keras.layers.AvgPool2D(pool_size=2)
image_after_pool_avg = pool_avg(image_after_conv)
print("Tensor shape after average pooling:", image_after_pool_avg.shape)

Output:

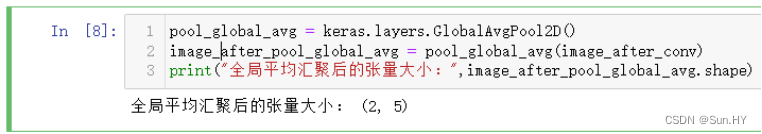

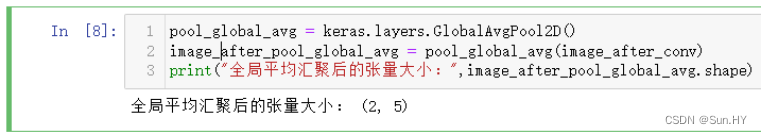
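To make the difference between the two pooling layers concrete, here is a small pure-Python sketch of non-overlapping 2 × 2 pooling on a single 4 × 4 feature map. It assumes the window and the stride are both 2, which is the Keras default when only `pool_size=2` is given. The function `pool2d` and the sample values are made up for illustration.

```python
def pool2d(feature_map, size, reduce_fn):
    """Non-overlapping pooling over a 2-D list (window = stride = size)."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    pooled = []
    for i in range(rows):
        row = []
        for j in range(cols):
            # Collect the size x size window whose top-left corner is (i*size, j*size)
            window = [feature_map[i * size + di][j * size + dj]
                      for di in range(size) for dj in range(size)]
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 0],
    [5, 7, 4, 6],
    [9, 8, 1, 1],
    [2, 4, 3, 3],
]

max_pooled = pool2d(feature_map, 2, max)
avg_pooled = pool2d(feature_map, 2, mean)
print(max_pooled)
print(avg_pooled)
```

Both variants halve the height and width. Max pooling keeps the strongest response in each window, whereas average pooling smooths over it.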

8. Print the tensor shape after global average pooling:

python

pool_global_avg = keras.layers.GlobalAvgPool2D()
image_after_pool_global_avg = pool_global_avg(image_after_conv)
print("Tensor shape after global average pooling:", image_after_pool_global_avg.shape)

Output:

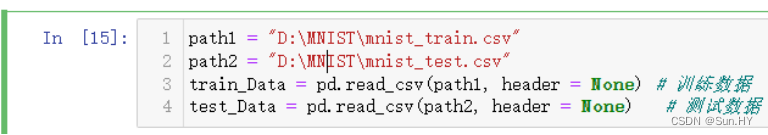
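Global average pooling collapses each feature map to a single number, so an input of shape (height, width, channels) becomes a vector of length channels. A minimal sketch for one image, using nested lists indexed as [row][col][channel]; the function name and the sample values are made up for illustration:

```python
def global_avg_pool(feature_maps):
    """Average every channel over all spatial positions of one image."""
    height = len(feature_maps)
    width = len(feature_maps[0])
    channels = len(feature_maps[0][0])
    totals = [0.0] * channels
    for row in feature_maps:
        for pixel in row:
            for c, value in enumerate(pixel):
                totals[c] += value
    return [total / (height * width) for total in totals]


# A 2 x 2 image with 2 channels
feature_maps = [
    [[1, 10], [2, 20]],
    [[3, 30], [4, 40]],
]

pooled = global_avg_pool(feature_maps)
print(pooled)  # one value per channel
```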

9. Load the training and test datasets:

python

path1 = r"D:\MNIST\mnist_train.csv"  # raw strings keep the backslashes literal
path2 = r"D:\MNIST\mnist_test.csv"
train_Data = pd.read_csv(path1, header=None)  # training data
test_Data = pd.read_csv(path2, header=None)  # test data

Output:

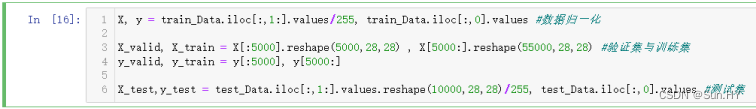

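10. Preprocess the data:

- Normalize the data (column 0 holds the label; the remaining 784 columns hold the pixel values, which are divided by 255):

python

X, y = train_Data.iloc[:, 1:].values / 255, train_Data.iloc[:, 0].values

- Split the data into a validation set and a training set:

python

X_valid, X_train = X[:5000].reshape(5000, 28, 28), X[5000:].reshape(55000, 28, 28)
y_valid, y_train = y[:5000], y[5000:]

- Process the test set:

python

X_test, y_test = test_Data.iloc[:, 1:].values.reshape(10000, 28, 28) / 255, test_Data.iloc[:, 0].values  # test set

Output: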

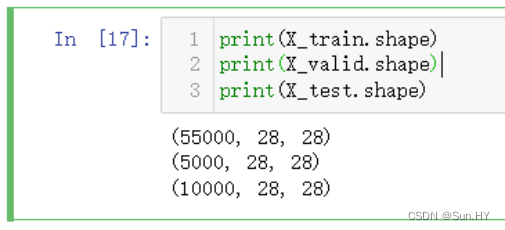
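The same preprocessing idea can be traced on a tiny example without pandas. The sketch below assumes the CSV layout used here (label first, then the pixel values), but the data is made up: two rows with four pixels each, treated as 2 × 2 images instead of 28 × 28.

```python
import csv
import io

# Made-up data: each row is a label followed by 4 pixel values in [0, 255]
raw = "7,0,255,51,102\n3,204,0,153,255\n"
side = 2  # the real data uses side = 28

labels, images = [], []
for row in csv.reader(io.StringIO(raw)):
    values = [int(v) for v in row]
    labels.append(values[0])                 # column 0 is the label
    pixels = [v / 255 for v in values[1:]]   # scale pixels to [0, 1]
    # Cut the flat pixel list into `side` rows of `side` values each
    images.append([pixels[r * side:(r + 1) * side] for r in range(side)])

print(labels)
print(images[0])
```

Dividing by 255 maps every pixel into [0, 1], and cutting the flat row into equal-length slices plays the role of `reshape`.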

11. Print the shape of each array:

python

print(X_train.shape)
print(X_valid.shape)
print(X_test.shape)

Output:

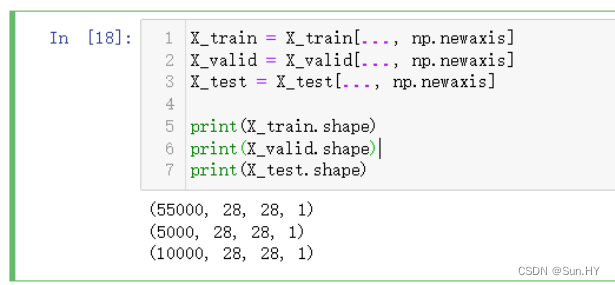

12. Add a new axis (a trailing channel axis, as Conv2D expects) so that each dataset goes from a 3-D array to a 4-D array:

python

X_train = X_train[..., np.newaxis]
X_valid = X_valid[..., np.newaxis]
X_test = X_test[..., np.newaxis]
print(X_train.shape)
print(X_valid.shape)
print(X_test.shape)

Output:

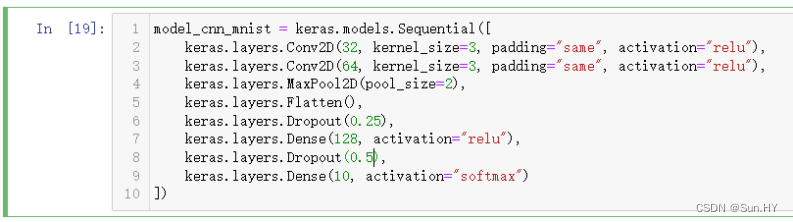

13. Extract features with convolutional and pooling layers, then classify with fully connected layers:

python

model_cnn_mnist = keras.models.Sequential([
    keras.layers.Conv2D(32, kernel_size=3, padding="same", activation="relu"),
    keras.layers.Conv2D(64, kernel_size=3, padding="same", activation="relu"),
    keras.layers.MaxPool2D(pool_size=2),
    keras.layers.Flatten(),
    keras.layers.Dropout(0.25),
    keras.layers.Dense(128, activation="relu"),
    keras.layers.Dropout(0.5),
    keras.layers.Dense(10, activation="softmax")
])

14. Compile the model → choose the loss function, optimizer, and metric → train the model → evaluate its performance after training:

python

model_cnn_mnist.compile(loss="sparse_categorical_crossentropy", optimizer="nadam", metrics=["accuracy"])
model_cnn_mnist.fit(X_train, y_train, epochs=10, validation_data=(X_valid, y_valid))

Output:

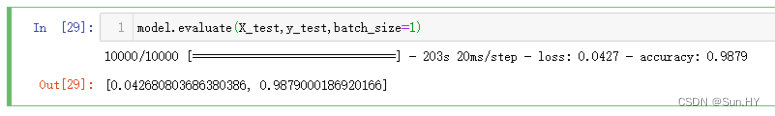
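The loss and metric chosen at compile time can be reproduced by hand on a toy batch. This is a simplified sketch of sparse categorical cross-entropy and accuracy, not the Keras implementation (which, for example, also clips probabilities). The labels and probabilities are made up.

```python
import math


def sparse_categorical_crossentropy(y_true, y_prob):
    """Mean of -log(probability the model assigns to the true class)."""
    losses = [-math.log(probs[label]) for label, probs in zip(y_true, y_prob)]
    return sum(losses) / len(losses)


def accuracy(y_true, y_prob):
    """Fraction of samples whose highest-probability class equals the label."""
    hits = sum(1 for label, probs in zip(y_true, y_prob)
               if probs.index(max(probs)) == label)
    return hits / len(y_true)


y_true = [0, 2]                      # integer class labels, as in y_train
y_prob = [[0.5, 0.25, 0.25],         # softmax outputs for sample 0
          [0.5, 0.25, 0.25]]         # softmax outputs for sample 1

loss = sparse_categorical_crossentropy(y_true, y_prob)
acc = accuracy(y_true, y_prob)
print(loss, acc)
```

The "sparse" variant takes integer labels directly, which is why `y_train` does not need to be one-hot encoded.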

15. Check the accuracy:

python

model_cnn_mnist.evaluate(X_test, y_test, batch_size=1)

Output:

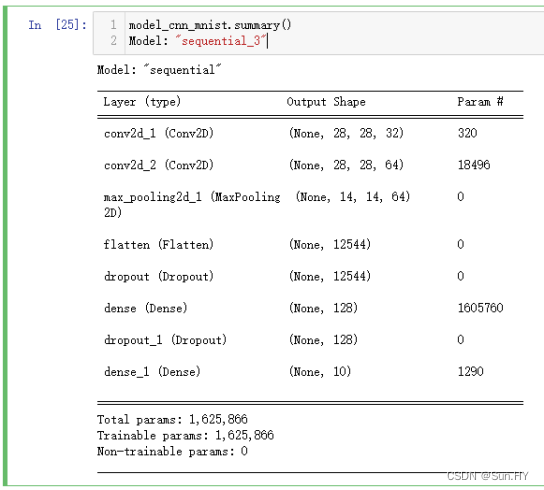

16. Call model_cnn_mnist.summary() to get a detailed overview of the model:

python

model_cnn_mnist.summary()

Output:

Model: "sequential_3"
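The summary lists the number of trainable parameters per layer. As a cross-check, the counts can be recomputed by hand. The sketch below assumes the 28 × 28 × 1 input from step 12 and the layer sizes from step 13. It is a calculation, not the output printed by the original run, and the helper names are hypothetical.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel x kernel x in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return inputs * units + units


conv1 = conv2d_params(3, 1, 32)    # Conv2D(32, kernel_size=3) on 1 input channel
conv2 = conv2d_params(3, 32, 64)   # Conv2D(64, kernel_size=3) on 32 channels
flat = 14 * 14 * 64                # 28 x 28 halved by MaxPool2D(pool_size=2), 64 channels
dense1 = dense_params(flat, 128)   # Dense(128)
dense2 = dense_params(128, 10)     # Dense(10)
total = conv1 + conv2 + dense1 + dense2

for name, count in [("conv1", conv1), ("conv2", conv2),
                    ("dense1", dense1), ("dense2", dense2), ("total", total)]:
    print(f"{name:>6}: {count:,}")
```

Pooling, flatten, and dropout layers add no parameters. Nearly all of the weights sit in the first dense layer, because it is connected to every value of the flattened feature maps.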

II. Building complex neural networks with the functional API and the subclassing API:

1. Define the residual unit:

- Initialization method:

python

class ResidualUnit(keras.layers.Layer):
    def __init__(self, filters, strides=1, activation="relu"):
        super().__init__()
        self.activation = keras.activations.get(activation)
        self.main_layers = [
            keras.layers.Conv2D(filters, 3, strides=strides, padding="SAME", use_bias=False),
            keras.layers.BatchNormalization(),
            self.activation,
            keras.layers.Conv2D(filters, 3, strides=1, padding="SAME", use_bias=False),
            keras.layers.BatchNormalization()]
        # With stride s = 1 the input can be added directly to the convolution output,
        # so skip_layers stays empty (the solid-line connection)
        self.skip_layers = []

- Residual connection:

python

        # With stride s = 2 the input cannot be added directly to the convolution output,
        # so the input is first passed through a convolution (the dashed-line connection)
        if strides > 1:
            self.skip_layers = [
                keras.layers.Conv2D(filters, 1, strides=strides, padding="SAME", use_bias=False),
                keras.layers.BatchNormalization()]

- Forward pass:

python

    def call(self, inputs):
        Z = inputs
        for layer in self.main_layers:
            Z = layer(Z)
        skip_Z = inputs
        for layer in self.skip_layers:
            skip_Z = layer(skip_Z)
        return self.activation(Z + skip_Z)
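The reason the skip path needs its own convolution when the stride is 2 comes down to shapes: `Z + skip_Z` only works if both tensors have the same shape. The sketch below tracks shapes only, assuming "SAME" padding (output size ceil(n / s)). The helper names are hypothetical and no Keras code is run.

```python
import math


def same_conv_shape(shape, filters, strides):
    """Output shape of a "SAME"-padded convolution on a (height, width, channels) input."""
    height, width, _ = shape
    return (math.ceil(height / strides), math.ceil(width / strides), filters)


def residual_unit_shapes(input_shape, filters, strides):
    """Return the shapes produced by the main path and by the skip path."""
    main = same_conv_shape(input_shape, filters, strides)  # first 3x3 convolution
    main = same_conv_shape(main, filters, 1)               # second 3x3 convolution
    if strides > 1:
        skip = same_conv_shape(input_shape, filters, strides)  # 1x1 convolution on the skip path
    else:
        skip = input_shape                                     # identity skip
    return main, skip


main1, skip1 = residual_unit_shapes((7, 7, 64), 64, 1)
main2, skip2 = residual_unit_shapes((7, 7, 64), 128, 2)
print("stride 1:", main1, skip1)
print("stride 2:", main2, skip2)
```

With stride 2 the main path turns a (7, 7, 64) input into (4, 4, 128), which no longer matches the raw input. The 1 × 1 convolution with the same stride and filter count brings the skip path to the same shape so the addition is valid.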

2. Build a convolutional neural network from residual units:

- Initialize the model:

python

model = keras.models.Sequential()

- First layers:

python

model.add(keras.layers.Conv2D(64, 7, strides=2, padding="SAME", use_bias=False))
model.add(keras.layers.BatchNormalization())
model.add(keras.layers.Activation("relu"))
model.add(keras.layers.MaxPool2D(pool_size=3, strides=2, padding="SAME"))

- Residual units:

python

prev_filters = 64
for filters in [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3:
    strides = 1 if filters == prev_filters else 2  # use stride 2 whenever the filter count changes
    model.add(ResidualUnit(filters, strides=strides))
    prev_filters = filters

- Global average pooling layer:

python

model.add(keras.layers.GlobalAvgPool2D())

- Flatten layer (the global average pooling output is already 2-D, so this leaves the shape unchanged):

python

model.add(keras.layers.Flatten())

- Fully connected output layer:

python

model.add(keras.layers.Dense(10, activation="softmax"))
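It can help to trace which residual units downsample and how small the feature maps get. The sketch below replays the loop on shapes only, assuming a 28 × 28 input (the MNIST images from Part I) and "SAME" padding throughout. The variable names `filter_plan` and `plan` are made up for illustration.

```python
import math

filter_plan = [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3

size = 28                    # assumed input height and width
size = math.ceil(size / 2)   # Conv2D(64, 7, strides=2, padding="SAME")
size = math.ceil(size / 2)   # MaxPool2D(pool_size=3, strides=2, padding="SAME")

prev_filters = 64
plan = []                    # one (filters, strides, output size) entry per residual unit
for filters in filter_plan:
    strides = 1 if filters == prev_filters else 2
    size = math.ceil(size / strides)
    plan.append((filters, strides, size))
    prev_filters = filters

for index, (filters, strides, out_size) in enumerate(plan):
    print(f"unit {index:2d}: filters={filters:3d} strides={strides} output={out_size}x{out_size}")
```

Under these assumptions the network has 16 residual units, and only the first unit of each new filter group uses stride 2. For an input as small as 28 × 28 the feature maps have already shrunk to 1 × 1 by the last group, so the global average pooling layer has very little left to average.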

3. Train the model:

python

model.compile(loss="sparse_categorical_crossentropy", optimizer="nadam", metrics=["accuracy"])
model.fit(X_train, y_train, epochs=10, validation_data=(X_valid, y_valid))

4. Check the accuracy:

python

model.evaluate(X_test, y_test, batch_size=1)