References:

https://github.com/naklecha/llama3-from-scratch (a must-read)

https://github.com/karpathy/build-nanogpt/blob/master/play.ipynb

Video:

https://www.youtube.com/watch?v=l8pRSuU81PU

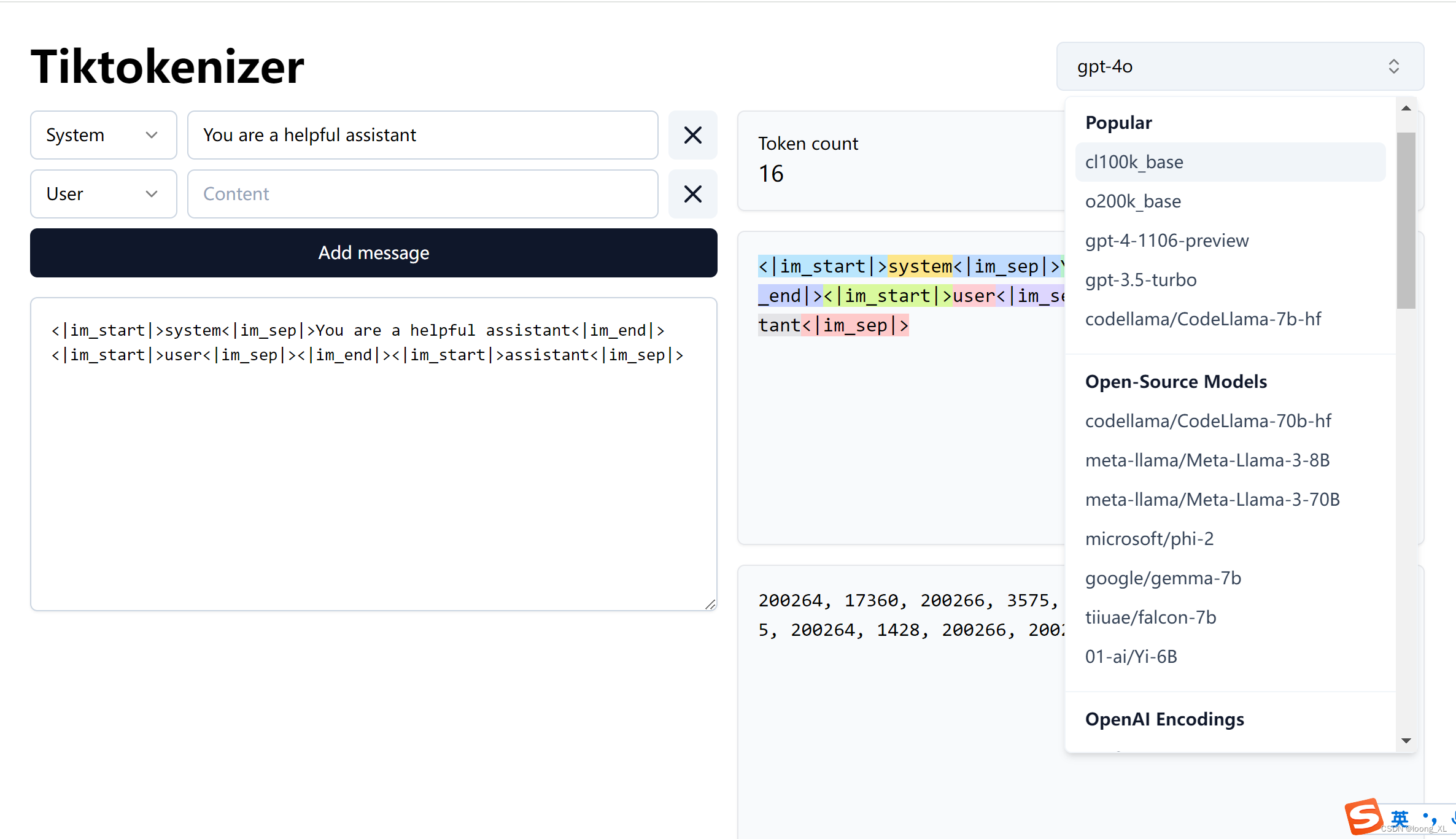

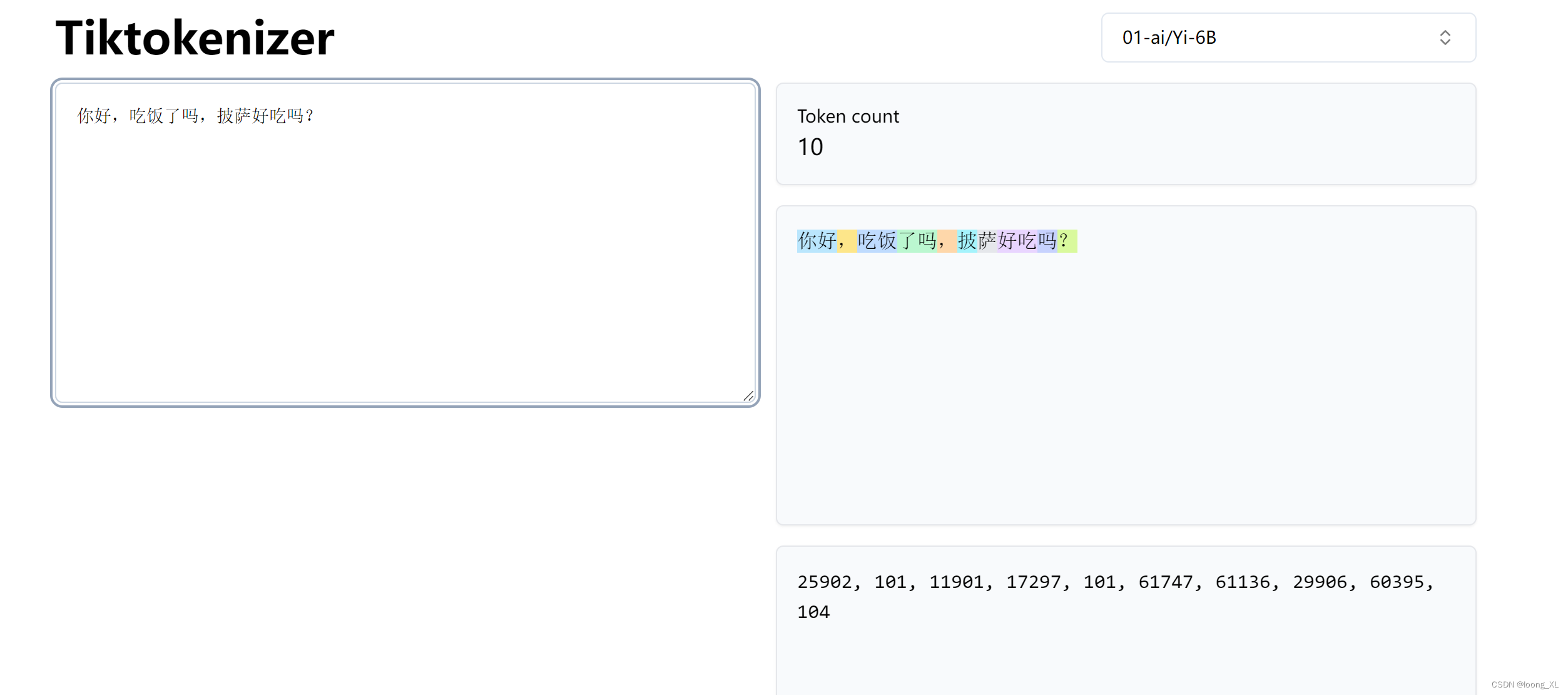

https://tiktokenizer.vercel.app/ (shows how the tokenizers of common large models encode text into token IDs and decode them back)

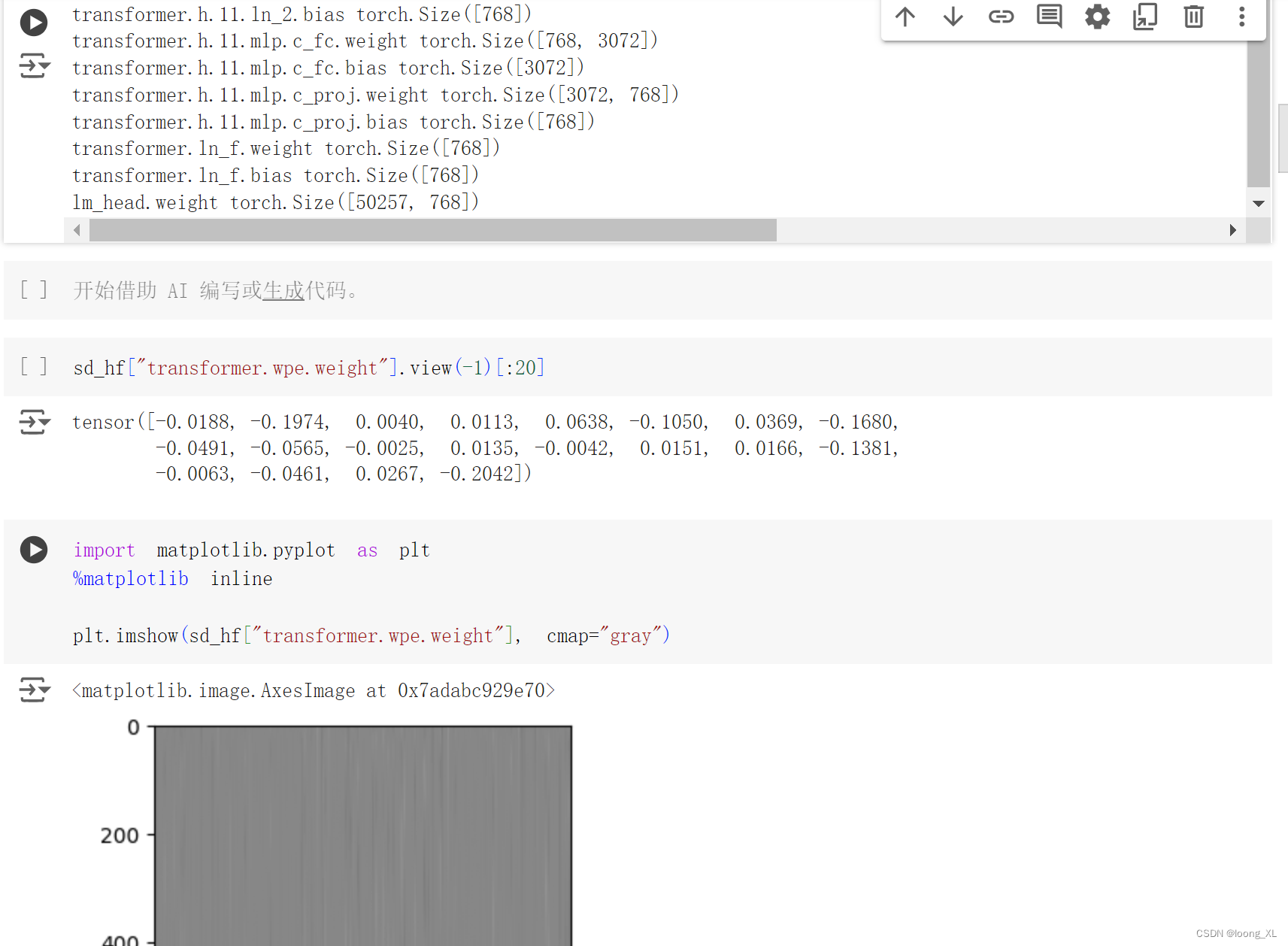

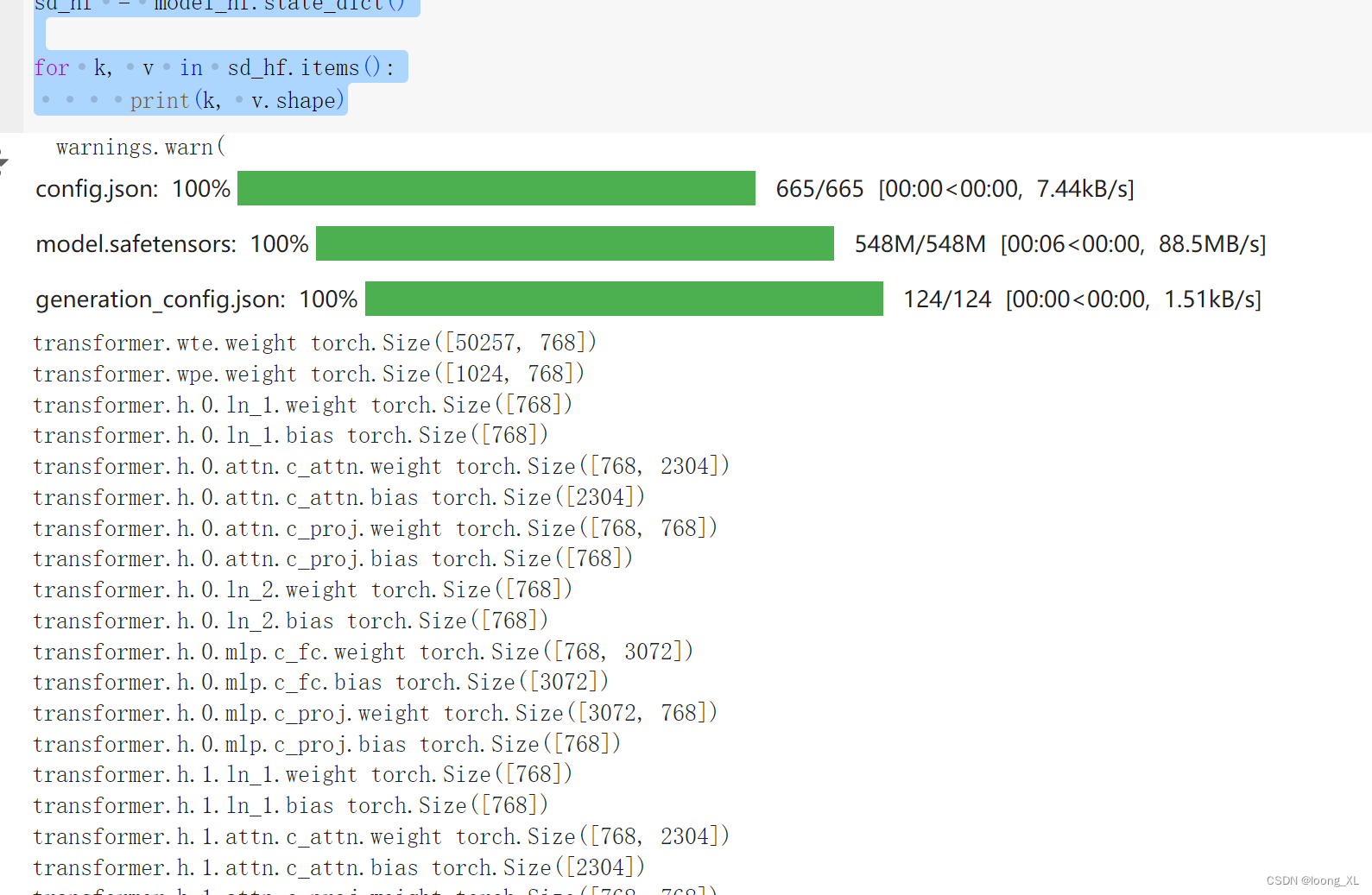

You can load the model with `transformers` to inspect its structure and weights:

```python
from transformers import GPT2LMHeadModel

model_hf = GPT2LMHeadModel.from_pretrained("gpt2")  # 124M
sd_hf = model_hf.state_dict()  # tensors keyed by parameter name

# Print every entry's name and shape
for k, v in sd_hf.items():
    print(k, v.shape)
```
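As a sanity check on the printed shapes, the "124M" figure can be reproduced by hand. The sketch below is plain Python and does not load the model; it assumes the standard GPT-2 small configuration (vocabulary 50257, context length 1024, embedding width 768, 12 layers) and assumes that `lm_head.weight` shares its tensor with `transformer.wte.weight`, so it is counted once. The `linear` helper is a hypothetical name introduced only for this illustration.

```python
# Hand count of GPT-2 (124M) parameters, assuming the standard small config.
n_vocab, n_ctx, n_embd, n_layer = 50257, 1024, 768, 12


def linear(n_in, n_out):
    """Parameters in one projection: weight matrix plus bias vector."""
    return n_in * n_out + n_out


per_block = (
    2 * n_embd                      # ln_1 (weight + bias)
    + linear(n_embd, 3 * n_embd)    # attn.c_attn (fused q, k, v)
    + linear(n_embd, n_embd)        # attn.c_proj
    + 2 * n_embd                    # ln_2 (weight + bias)
    + linear(n_embd, 4 * n_embd)    # mlp.c_fc
    + linear(4 * n_embd, n_embd)    # mlp.c_proj
)

total = (
    n_vocab * n_embd                # transformer.wte (token embeddings)
    + n_ctx * n_embd                # transformer.wpe (position embeddings)
    + n_layer * per_block           # 12 transformer blocks
    + 2 * n_embd                    # ln_f (final layer norm)
)

print(f"{per_block:,} parameters per block")
print(f"{total:,} parameters in total")  # 124,439,808
```

Depending on the `transformers` version, the state dict may also list entries such as `lm_head.weight` or the attention mask buffers; under the assumptions above these are not additional trainable parameters, so they are left out of the count.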

You can print or plot the weights of an individual layer:

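```python
# First 20 values of the flattened position-embedding matrix
sd_hf["transformer.wpe.weight"].view(-1)[:20]

import matplotlib.pyplot as plt
%matplotlib inline

# Show the whole position-embedding matrix as a grayscale image
plt.imshow(sd_hf["transformer.wpe.weight"], cmap="gray")
```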