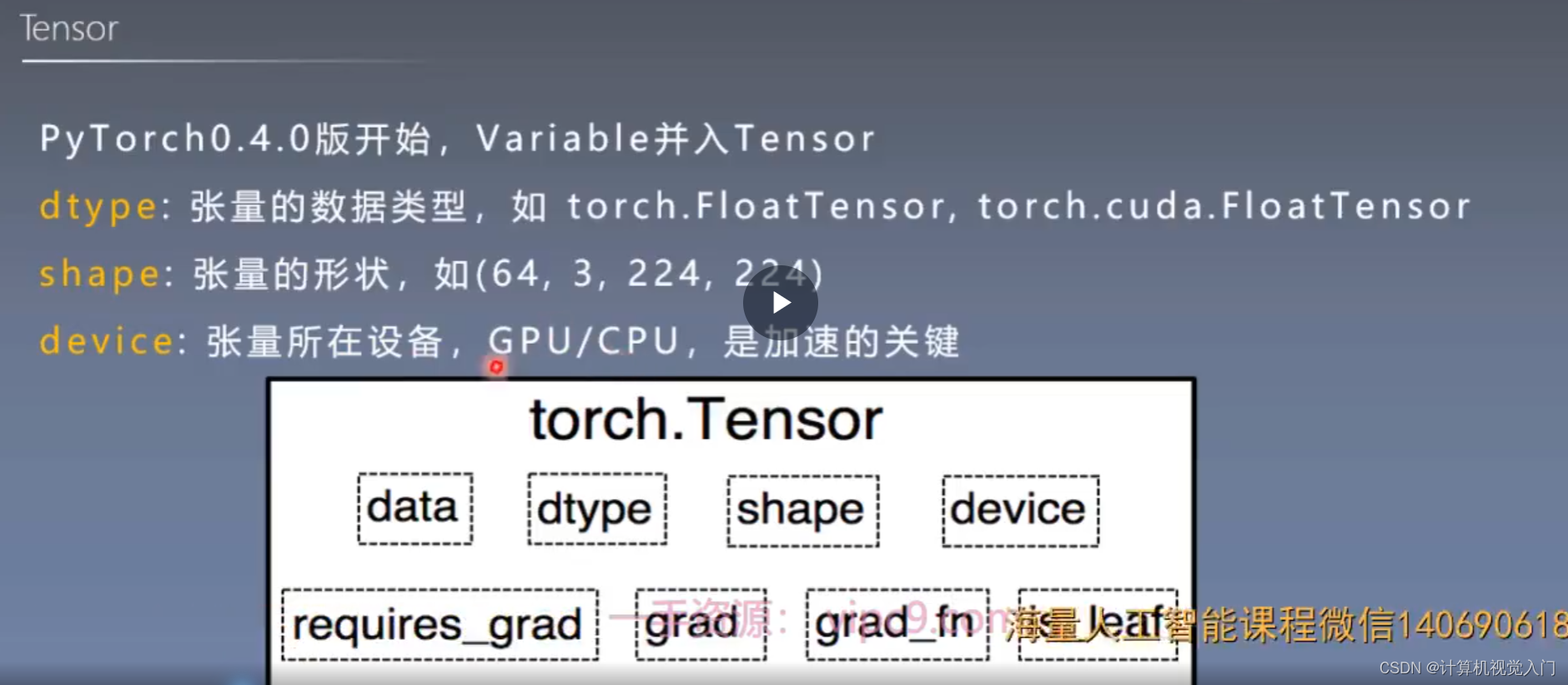

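1: The old-style Variable

2: The new Tensor

Before: autograd-related attributes

Now: data-related attributes as well

-- dtype: the tensor's data type; three broad categories, nine types in total, e.g. torch.FloatTensor, torch.cuda.FloatTensor

-- shape: the tensor's shape, e.g. (64, 3, 224, 224)

-- device: the device the tensor lives on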
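To make the attribute list above concrete, here is a minimal sketch that inspects both groups on a single tensor. The particular tensor and the tiny computation are assumptions chosen only for illustration; the autograd attribute names (`requires_grad`, `is_leaf`, `grad`, `grad_fn`) are standard tensor attributes and are not spelled out in the notes themselves.

```python
import torch

# Illustrative tensor; the shape and dtype are arbitrary choices.
t = torch.ones((2, 3), dtype=torch.float32, requires_grad=True)

# Data-related attributes
print(t.dtype)    # torch.float32
print(t.shape)    # torch.Size([2, 3])
print(t.device)   # the device the tensor lives on

# Autograd-related attributes
print(t.requires_grad)  # True
print(t.is_leaf)        # True: created directly by the user
print(t.grad_fn)        # None: a leaf has no creating function

# After a backward pass the gradient is stored in t.grad.
y = (t * 2).sum()
y.backward()
print(t.grad)     # every entry is 2, since d(sum(2t))/dt = 2
```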

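3: Tensor creation methods

Tensor creation 1: direct creation (shared memory: out)

1) torch.tensor(data, dtype=None, device=None, requires_grad=False, pin_memory=False)

2) Create a tensor from NumPy: torch.from_numpy(ndarray)

Note: the resulting tensor shares memory with the ndarray, so modifying one also changes the other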
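As a small sketch of the difference between the two constructors above: `torch.tensor` copies its input, while `torch.from_numpy` shares the underlying buffer. The variable names `t_copy` and `t_shared` are made up for this illustration.

```python
import numpy as np
import torch

arr = np.array([[1, 2, 3], [4, 5, 6]])

t_copy = torch.tensor(arr)        # copies the data
t_shared = torch.from_numpy(arr)  # shares memory with arr

arr[0, 0] = -1                    # modify the ndarray in place

print(t_copy[0, 0].item())    # 1  (the copy is unaffected)
print(t_shared[0, 0].item())  # -1 (the shared tensor sees the change)
```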

python

import torch

import numpy as np

arr = np.array([[1, 2, 3], [4, 5, 6]])

t = torch.from_numpy(arr)

t[0, 0] = -1

print(arr)

print(t)

Tensor creation 2: creation from values (arithmetic sequences, evenly spaced sequences, identity matrices)

4.2 Creation from values

1) torch.zeros(): creates an all-zero tensor of the given size

torch.zeros(*size, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False)

2) torch.zeros_like(): creates an all-zero tensor with the same shape as input

torch.zeros_like(input, dtype=None, layout=None, device=None, requires_grad=False)
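The following sketch exercises the two signatures above. It also shows one reading of the "shared memory: out" remark: when `out` is given, the result is written into that existing tensor. The names `ref` and `buf` are hypothetical.

```python
import torch

# All-zero tensor of a given size (default dtype is float32).
t = torch.zeros((2, 3))
print(t.shape)  # torch.Size([2, 3])

# zeros_like takes its shape and dtype from the input tensor.
ref = torch.ones((4, 5), dtype=torch.int64)
z = torch.zeros_like(ref)
print(z.shape, z.dtype)  # torch.Size([4, 5]) torch.int64

# With out=, the zeros are written into an existing buffer,
# so the returned tensor and the buffer share the same memory.
buf = torch.empty((2, 3))
res = torch.zeros((2, 3), out=buf)
print(res.data_ptr() == buf.data_ptr())  # True
```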

-

torch.ones()

-

torch.ones_like()

-

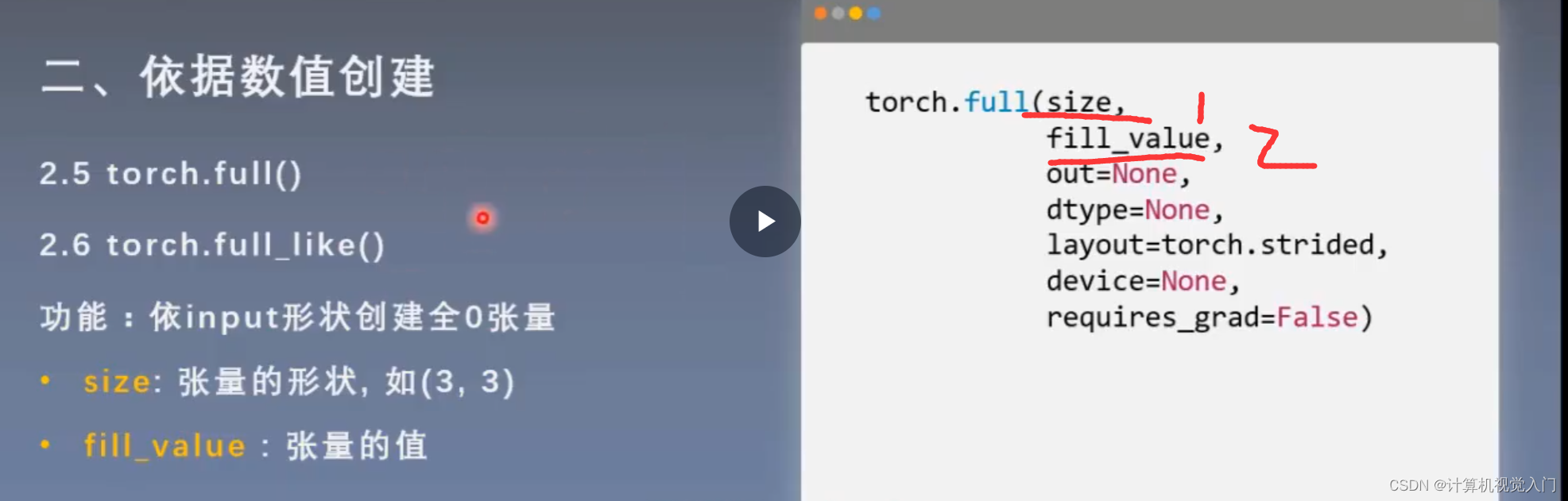

torch.full()

-

torch.full_like()

-

torch.arange(),创建等差数列,区间:[start, end,数值) 等差创建
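The fill-style constructors listed above follow the same pattern as `torch.zeros` and `torch.zeros_like`. A minimal sketch, with arbitrary shapes and fill values chosen only for illustration:

```python
import torch

a = torch.ones((2, 2))        # all ones, shape given explicitly
b = torch.ones_like(a)        # all ones, shape taken from a

c = torch.full((2, 2), 7.0)   # every entry is the fill value 7.0
d = torch.full_like(a, 3.0)   # shape taken from a, filled with 3.0

print(c)
print(d)
```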

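6. torch.linspace(): creates an evenly spaced sequence over the closed interval [start, end]

Note: step (in torch.arange) is the spacing between values; steps (in torch.linspace) is the number of values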

t = torch.linspace(start=0, end=100, steps=5, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False)

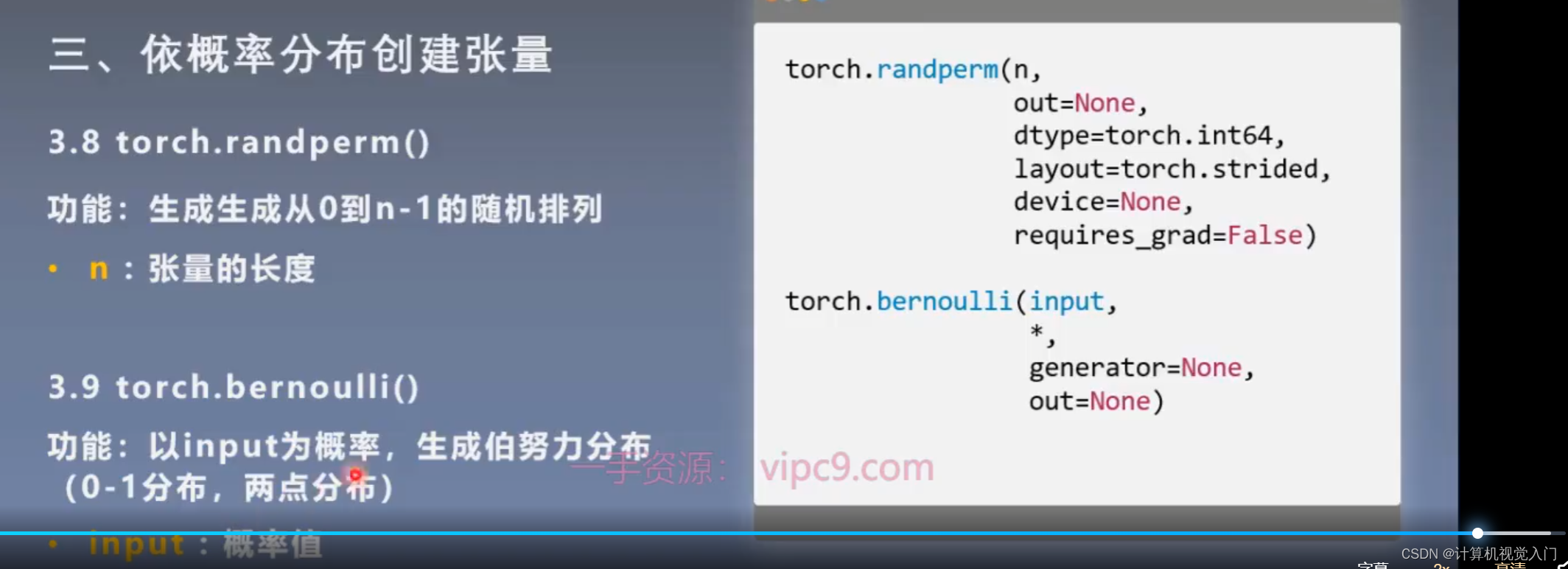
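To contrast `step` with `steps`, the sketch below builds similar sequences both ways. The endpoints are arbitrary illustration values.

```python
import torch

# arange: half-open interval [2, 10), spacing given by step=2
a = torch.arange(2, 10, 2)
print(a)  # tensor([2, 4, 6, 8])

# linspace: closed interval [2, 10], number of points given by steps=5
l = torch.linspace(2, 10, steps=5)
print(l)  # tensor([ 2.,  4.,  6.,  8., 10.])
```

Note that `end` is excluded by `torch.arange` but included by `torch.linspace`.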

Tensor creation 3: creation from probability distributions (normal, standard normal, Bernoulli)
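The notes do not list the specific functions here, so the following is only a sketch of one common way to sample from the three distributions named above. The seed, shapes, and parameters are assumptions for illustration.

```python
import torch

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Normal distribution with scalar mean and std, explicit output size
n = torch.normal(0.0, 1.0, size=(4,))

# Standard normal distribution (mean 0, std 1)
s = torch.randn(2, 3)

# Bernoulli: each entry of the input is the probability of drawing 1
p = torch.full((3, 3), 0.5)
b = torch.bernoulli(p)

print(n.shape, s.shape)
print(b)  # entries are 0. or 1.
```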

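Chapter 2: Tensor operations and linear regression

2.1: Tensor operations: concatenation, splitting, indexing, and transformation

2.1.1 Concatenation:

- torch.cat(): concatenates tensors along the existing dimension dim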

python

t = torch.ones((2, 3))

t_0 = torch.cat([t, t], dim=0)

t_1 = torch.cat([t, t], dim=1)

print(t_0.shape)  # torch.Size([4, 3])

print(t_1.shape)  # torch.Size([2, 6])

- torch.stack(): concatenates tensors along a newly created dimension dim

python

t = torch.ones((2, 3))

t_0 = torch.cat([t, t], dim=0)

t_1 = torch.stack([t, t], dim=0)

print(t_0)

print(t_0.shape)

print(t_1)

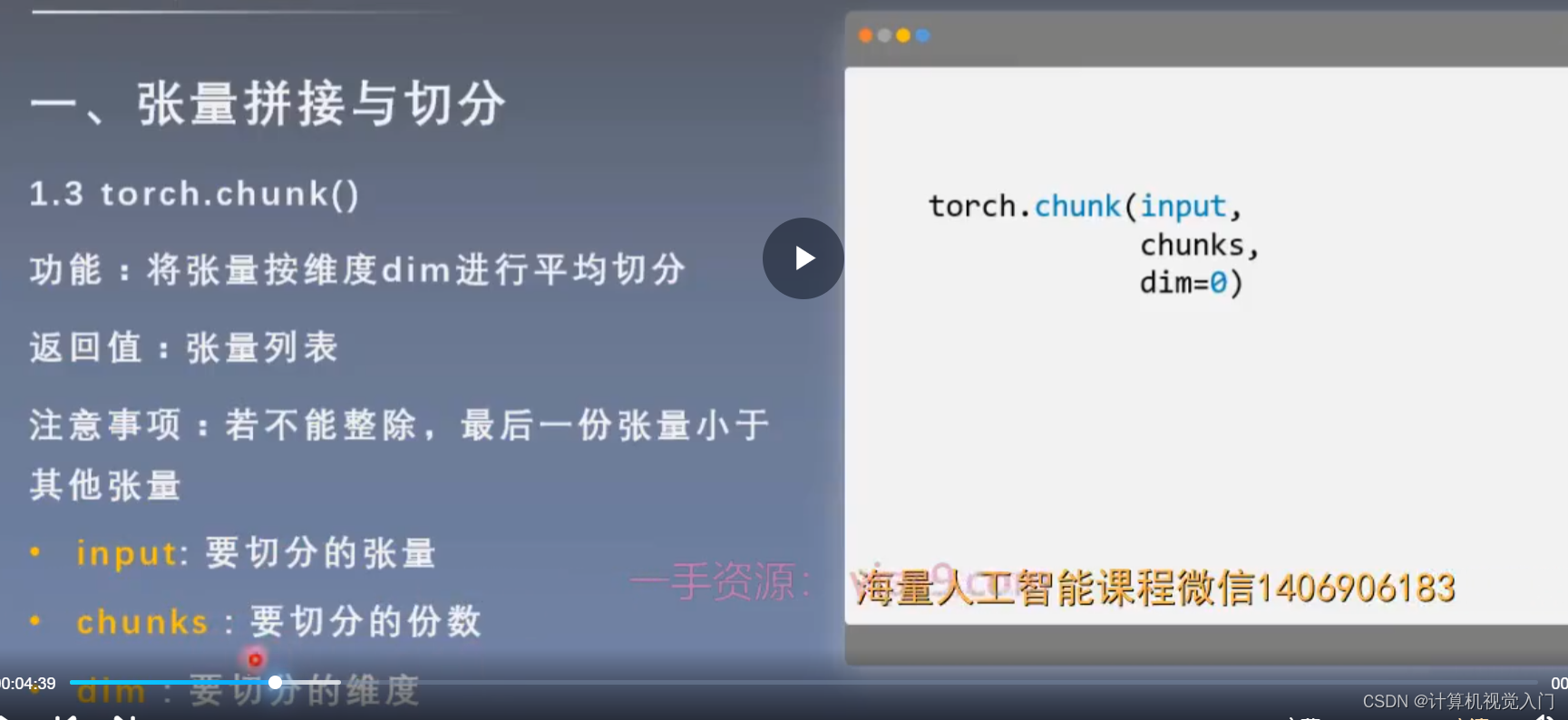

print(t_1.shape)2.1.2 切分

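Split evenly along dimension dim: torch.chunk(input, chunks, dim). If the size along dim is not divisible by chunks, the last chunk is smaller than the others.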

python

t = torch.ones((2, 7))

print(t)

list_of_tensor = torch.chunk(t, dim=1, chunks=3)

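print(list_of_tensor)

Split by specified sizes: torch.split(). An integer gives the length of each piece along dim; a list such as [2, 2, 3] yields three pieces, each with its own specified length.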

python

t = torch.ones((2, 7))

print(t)

list_of_tensor_2 = torch.split(t, 3, dim=1)

print(list_of_tensor_2)

list_of_tensor_3 = torch.split(t, [2, 2, 3], dim=1)

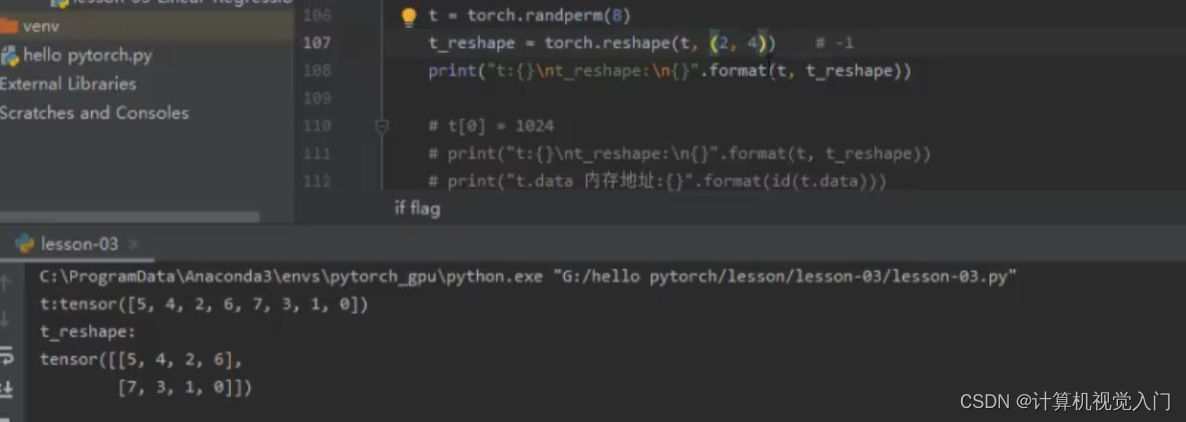

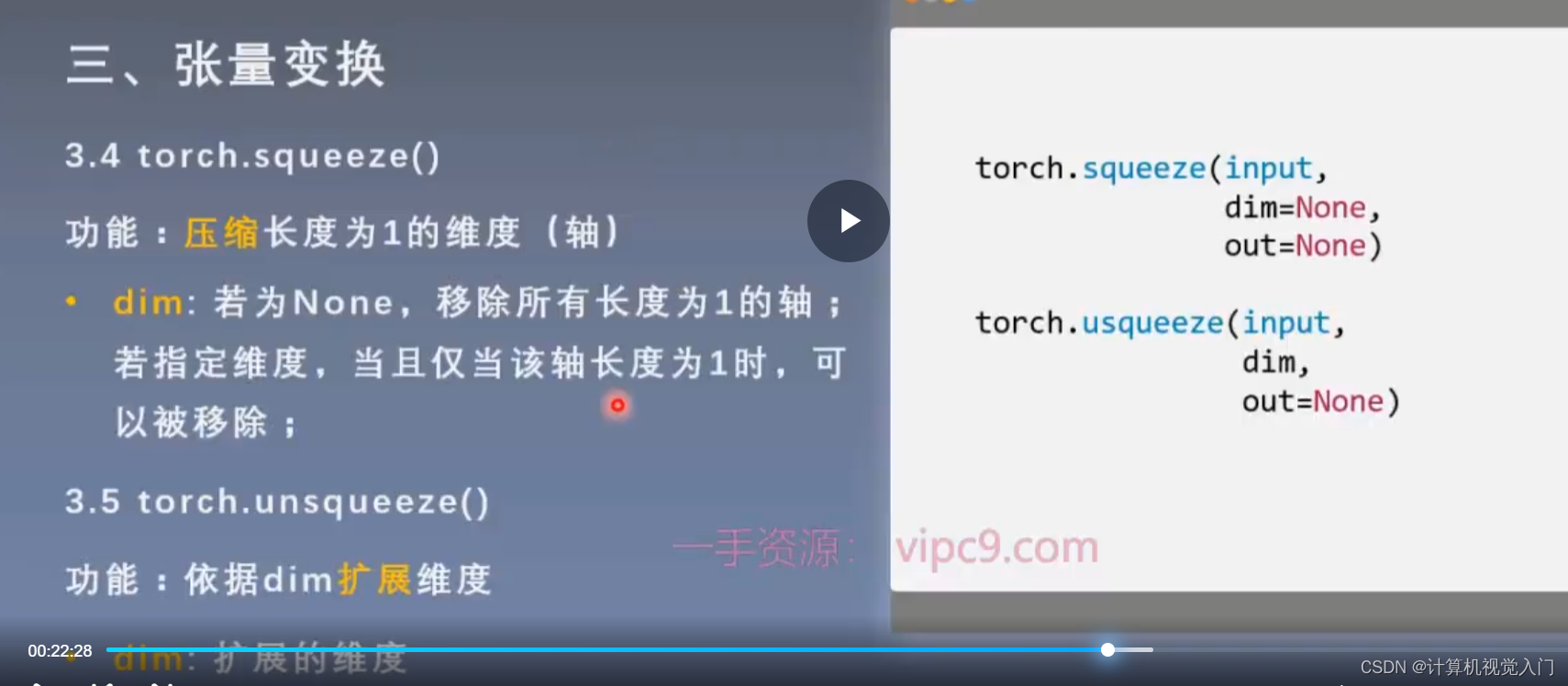

print(list_of_tensor_3)2.1.3 reshape

torch.reshape: changes the shape of a tensor

Note: when the tensor is contiguous in memory, the new tensor shares its data memory with input

python

# torch.reshape

t = torch.randperm(8)

print(t)

t_reshape = torch.reshape(t, (2, 4))  # a dimension may be given as -1 to have it inferred

print(t_reshape)
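The memory-sharing note and the -1 shorthand can both be checked with a short sketch. `torch.arange(8)` is used here instead of `torch.randperm(8)` only so that the values are predictable.

```python
import torch

t = torch.arange(8)              # contiguous 1-D tensor: 0..7

# -1 lets PyTorch infer that dimension: 8 elements / 4 columns = 2 rows
r = torch.reshape(t, (-1, 4))
print(r.shape)                   # torch.Size([2, 4])

# Because t is contiguous, r shares its memory:
t[0] = 100
print(r[0, 0].item())            # 100
print(t.data_ptr() == r.data_ptr())  # True
```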

2.2 Mathematical operations on tensors

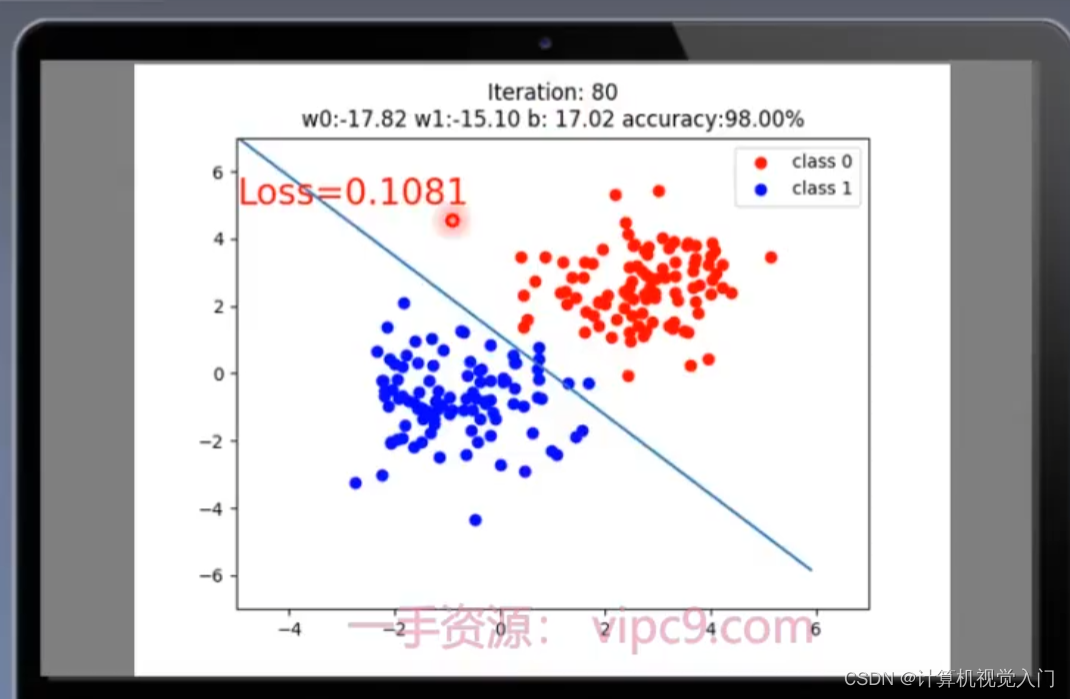
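The figure is not reproduced in text, so which operations it lists is not known here. As an assumption-labelled illustration of elementwise arithmetic only, the sketch below uses `torch.add` with its `alpha` scaling factor and `torch.mul`.

```python
import torch

a = torch.ones((2, 2))
b = torch.full((2, 2), 3.0)

# torch.add(input, other, alpha=k) computes input + k * other elementwise
s = torch.add(a, b, alpha=10)
print(s)  # every entry is 1 + 10 * 3 = 31

# Elementwise product
p = torch.mul(a, b)
print(p)  # every entry is 3
```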

2.3 Linear regression

What is wx + b being fitted to?

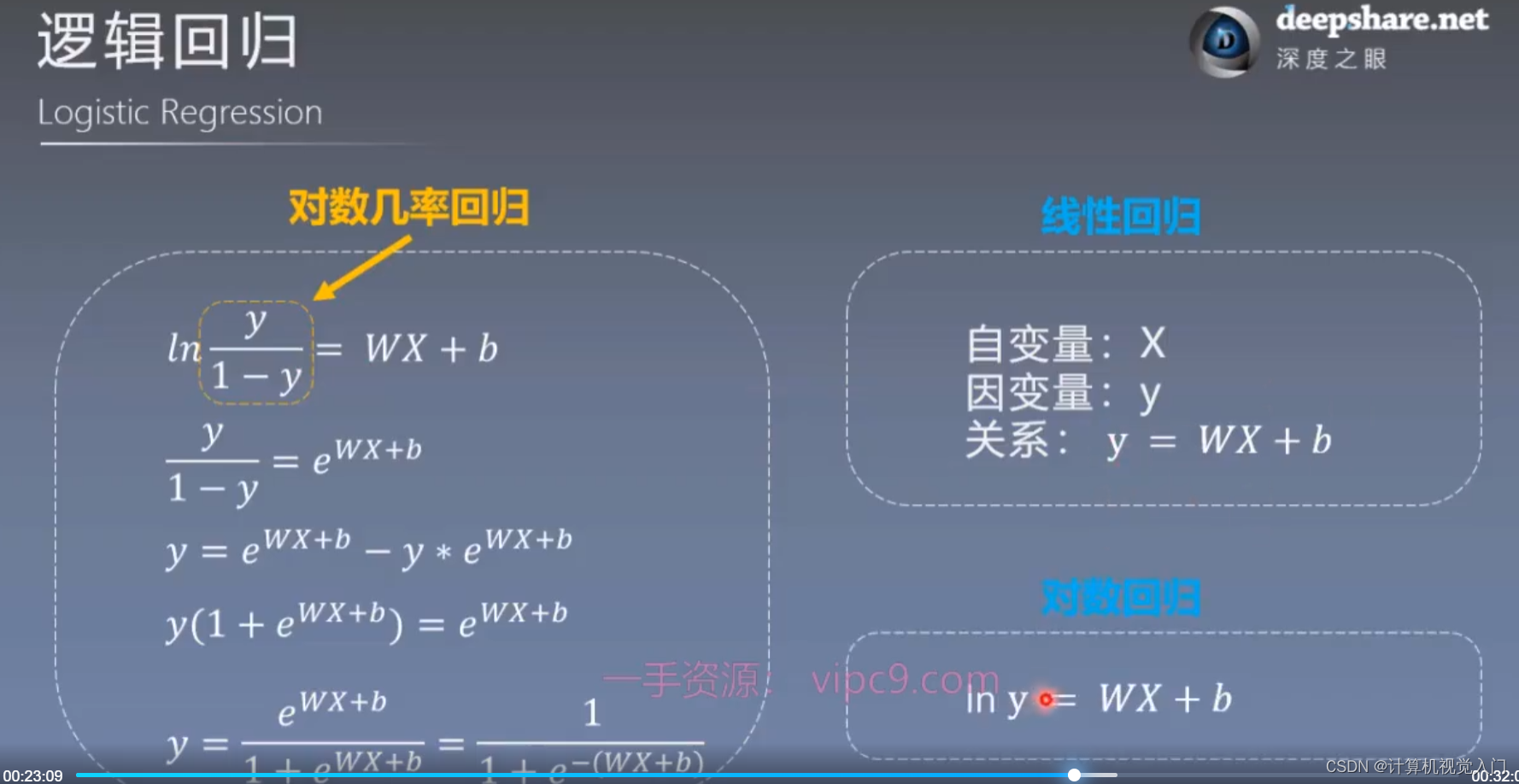
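One way to read the question above: in linear regression, wx + b is fitted directly to the target y. The sketch below does this with plain gradient descent and autograd. The synthetic data (y = 2x + 5 plus small noise), the learning rate, and the iteration count are all assumptions made for illustration, not values from the notes.

```python
import torch

torch.manual_seed(0)

# Synthetic data, for illustration only: y = 2x + 5 plus small noise
x = torch.rand(20, 1) * 10
y = 2 * x + 5 + 0.1 * torch.randn(20, 1)

# Parameters to learn
w = torch.randn(1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

lr = 0.01  # hypothetical learning rate

for _ in range(3000):
    y_pred = w * x + b                       # forward pass: wx + b
    loss = (0.5 * (y - y_pred) ** 2).mean()  # halved mean squared error
    loss.backward()                          # gradients for w and b

    with torch.no_grad():                    # update without tracking
        w -= lr * w.grad
        b -= lr * b.grad

    w.grad.zero_()                           # reset accumulated gradients
    b.grad.zero_()

print(w.item(), b.item())  # should end up near 2 and 5
```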

Logistic regression:

Deepshare-Pytorch/作业/第二周作业1/第二周作业1.md at master · 799609164/Deepshare-Pytorch · GitHub