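An earlier article covered the technical principles of QLoRA. Its core idea is to fine-tune a model that has been quantized to 4 bits without degrading performance. Talk is cheap, so below we put it into practice; the related code is on GitHub: [llm-action].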

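Environment setup

The base environment is configured as follows:

- Operating system: CentOS 7

- CPUs: Intel CPUs on a single node with 1 TB of memory; 64 physical CPUs with 16 cores each

- GPUs: 8 x A800 80GB GPUs

- Python: 3.10 (OpenSSL must first be upgraded to 1.1.1t ([download OpenSSL]) before building and installing Python), [download Python]

- NVIDIA driver version: 515.65.01; choose the driver that matches your GPU model, [download].

- CUDA toolkit: 11.7, [download]

- NCCL: nccl_2.14.3-1+cuda11.7, [download]

- cuDNN: 8.8.1.3_cuda11, [download]

Installing the NVIDIA driver, CUDA, Python, and the other tools listed above is not covered in detail here.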
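Before continuing, it can help to confirm that the toolchain listed above is actually visible from the shell. The following is a minimal sketch and not part of the original setup: the helper name `check_environment` is hypothetical, and it only checks the Python version and whether `nvidia-smi` and `nvcc` are on `PATH`. It does not verify driver, CUDA, NCCL, or cuDNN versions.

```python
import shutil
import sys


def check_environment(min_python=(3, 10), tools=("nvidia-smi", "nvcc")):
    """Return a dict describing whether basic prerequisites are visible.

    Hypothetical helper for illustration only.
    """
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        report[tool] = shutil.which(tool) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

Running it inside the virtual environment created in the next step also confirms that the interpreter being used is the intended Python 3.10.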

Create and activate a virtual environment (qlora-venv-py310-cu117):

cd /home/guodong.li/virtual-venv

virtualenv -p /usr/bin/python3.10 qlora-venv-py310-cu117

source /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/bin/activate

Install the transformers, accelerate, and peft libraries.

git clone https://github.com/huggingface/transformers.git

cd transformers

git checkout 8f093fb

pip install .

git clone https://github.com/huggingface/accelerate.git

cd accelerate/

git checkout 665d518

pip install .

git clone https://github.com/huggingface/peft.git

cd peft/

git checkout 189a6b8

pip install .

Install the other dependencies:

pip install -r requirements.txt

The contents of requirements.txt are as follows:

bitsandbytes==0.39.0

einops==0.6.1

evaluate==0.4.0

scikit-learn==1.2.2

sentencepiece==0.1.99

tensorboardX

Dataset preparation

For the dataset, simply use alpaca_data.json, alpaca_data_cleaned_archive.json, or alpaca_data_gpt4.json from the [alpaca-lora] project.
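Alpaca-style files are JSON arrays whose records carry `instruction`, `input`, and `output` fields. As a quick sanity check before training, a sketch like the following can confirm that a downloaded file has that shape. The function names are hypothetical and the check is deliberately minimal.

```python
import json

REQUIRED_KEYS = ("instruction", "input", "output")


def validate_alpaca_records(records):
    """Raise ValueError if any record lacks a required key; return the record count."""
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of records")
    for i, rec in enumerate(records):
        missing = [k for k in REQUIRED_KEYS if k not in rec]
        if missing:
            raise ValueError(f"record {i} is missing keys: {missing}")
    return len(records)


def load_alpaca_records(path):
    """Load an Alpaca-style JSON file and validate its records."""
    with open(path, encoding="utf-8") as f:
        records = json.load(f)
    validate_alpaca_records(records)
    return records


def count_without_input(records):
    """Count records whose optional `input` field is empty."""
    return sum(1 for rec in records if not rec["input"].strip())
```

For example, `load_alpaca_records("alpaca_data.json")` would return the parsed list, and `count_without_input` shows how many instructions come without extra context.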

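Model weight format conversion

First, convert the original LLaMA 30B/65B weights to the Hugging Face Transformers format. For the detailed conversion steps, see the earlier article: Reproducing Stanford Alpaca 7B from Scratch.

This article uses the LLaMA 7B and 65B models, which need to be converted in advance.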
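A converted checkpoint directory can be checked roughly before it is passed to `--model_name_or_path`. The sketch below is a heuristic under stated assumptions, not an official validation: it assumes a usable LLaMA directory contains `config.json`, `tokenizer.model`, and at least one `*.bin` or `*.safetensors` weight shard. Both function names are hypothetical.

```python
import os


def missing_checkpoint_files(filenames):
    """Return a list of expected items that are absent from `filenames`."""
    names = set(filenames)
    missing = [n for n in ("config.json", "tokenizer.model") if n not in names]
    # Weight shards may be saved as .bin or .safetensors files.
    if not any(n.endswith((".bin", ".safetensors")) for n in names):
        missing.append("weight shards (*.bin or *.safetensors)")
    return missing


def check_checkpoint_dir(path):
    """Apply the heuristic to a directory on disk."""
    return missing_checkpoint_files(os.listdir(path))
```

An empty list from `check_checkpoint_dir(...)` only means the expected files exist; it does not prove the weights were converted correctly.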

Model fine-tuning

git clone https://github.com/artidoro/qlora.git

cd qlora

git checkout cc48811

python qlora.py \

--dataset "/data/nfs/guodong.li/data/alpaca_data_cleaned.json" \

--model_name_or_path "/data/nfs/guodong.li/pretrain/hf-llama-model/llama-7b" \

--output_dir "/home/guodong.li/output/llama-7b-qlora" \

--per_device_train_batch_size 1 \

--max_steps 1000 \

--save_total_limit 2

By default, the script places different layers of the model on different GPUs to achieve model parallelism.
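To make the idea of layer placement concrete, here is a small illustration of what a layer-to-GPU mapping looks like. It is not the placement algorithm the libraries use (the `infer_auto_device` warning in the log below suggests the real placement is computed automatically, and that logic takes available memory into account). The function name is hypothetical, the module names follow the Hugging Face LLaMA implementation, and the 32 decoder layers correspond to LLaMA-7B.

```python
def even_layer_device_map(num_layers, num_gpus):
    """Spread decoder layers over GPUs in contiguous, equally sized blocks.

    Illustrative only: real automatic placement is memory-aware.
    """
    if num_layers <= 0 or num_gpus <= 0:
        raise ValueError("num_layers and num_gpus must be positive")
    device_map = {"model.embed_tokens": 0}
    for layer in range(num_layers):
        # Integer arithmetic assigns consecutive layers to the same GPU.
        device_map[f"model.layers.{layer}"] = layer * num_gpus // num_layers
    # Keep the final norm and output head with the last decoder layer.
    last_device = device_map[f"model.layers.{num_layers - 1}"]
    device_map["model.norm"] = last_device
    device_map["lm_head"] = last_device
    return device_map


if __name__ == "__main__":
    for name, gpu in even_layer_device_map(32, 8).items():
        print(f"{name} -> cuda:{gpu}")
```

With 32 layers and 8 GPUs, each device receives four consecutive decoder layers, which is in line with the fairly even per-GPU memory usage reported further down.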

Training run:

python qlora.py \

> --dataset "/data/nfs/guodong.li/data/alpaca_data_cleaned.json" \

> --model_name_or_path "/data/nfs/guodong.li/pretrain/hf-llama-model/llama-7b" \

> --output_dir "/home/guodong.li/output/llama-7b-qlora" \

> --per_device_train_batch_size 1 \

> --max_steps 1000 \

> --save_total_limit 2

===================================BUG REPORT===================================

Welcome to bitsandbytes. For bug reports, please run

python -m bitsandbytes

and submit this information together with your error trace to: https://github.com/TimDettmers/bitsandbytes/issues

================================================================================

bin /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so

/home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/cuda_setup/main.py:149: UserWarning: WARNING: The following directories listed in your path were found to be non-existent: {PosixPath('/opt/rh/devtoolset-9/root/usr/lib/dyninst'), PosixPath('/opt/rh/devtoolset-7/root/usr/lib/dyninst')}

warn(msg)

CUDA SETUP: CUDA runtime path found: /usr/local/cuda-11.7/lib64/libcudart.so.11.0

CUDA SETUP: Highest compute capability among GPUs detected: 8.0

CUDA SETUP: Detected CUDA version 117

CUDA SETUP: Loading binary /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so...

Found a previous checkpoint at: /home/guodong.li/output/llama-7b-qlora/checkpoint-250

loading base model /data/nfs/guodong.li/pretrain/hf-llama-model/llama-7b...

The model weights are not tied. Please use the `tie_weights` method before using the `infer_auto_device` function.

Loading checkpoint shards: 100%|███████████████████████████████████████████████████████████████████| 33/33 [00:17<00:00, 1.93it/s]

Loading adapters from checkpoint.

trainable params: 79953920.0 || all params: 3660320768 || trainable: 2.184341894267557

loaded model

Adding special tokens.

Found cached dataset json (/home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0)

Loading cached split indices for dataset at /home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0/cache-d071c407d9bc0de0.arrow and /home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0/cache-e736a74b2c29e789.arrow

Loading cached processed dataset at /home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0/cache-01d50099f3f094d7.arrow

torch.float32 422326272 0.11537932153507864

torch.uint8 3238002688 0.8846206784649213

{'loss': 1.4282, 'learning_rate': 0.0002, 'epoch': 0.0}

{'loss': 1.469, 'learning_rate': 0.0002, 'epoch': 0.01}

...

{'loss': 1.4002, 'learning_rate': 0.0002, 'epoch': 0.08}

{'loss': 1.4261, 'learning_rate': 0.0002, 'epoch': 0.08}

{'loss': 2.4323, 'learning_rate': 0.0002, 'epoch': 0.09}

25%|██████████████████████▎ | 250/1000 [25:34<1:10:31, 5.64s/it]Saving PEFT checkpoint...

{'loss': 1.6007, 'learning_rate': 0.0002, 'epoch': 0.09}

{'loss': 1.6187, 'learning_rate': 0.0002, 'epoch': 0.09}

...

{'loss': 1.6242, 'learning_rate': 0.0002, 'epoch': 0.16}

{'loss': 1.6073, 'learning_rate': 0.0002, 'epoch': 0.16}

{'loss': 1.6825, 'learning_rate': 0.0002, 'epoch': 0.17}

{'loss': 2.6283, 'learning_rate': 0.0002, 'epoch': 0.17}

50%|█████████████████████████████████████████████▌ | 500/1000 [50:44<49:21, 5.92s/it]Saving PEFT checkpoint...

{'loss': 1.619, 'learning_rate': 0.0002, 'epoch': 0.17}

{'loss': 1.5394, 'learning_rate': 0.0002, 'epoch': 0.18}

...

{'loss': 1.5247, 'learning_rate': 0.0002, 'epoch': 0.25}

{'loss': 1.6054, 'learning_rate': 0.0002, 'epoch': 0.25}

{'loss': 2.3289, 'learning_rate': 0.0002, 'epoch': 0.26}

75%|██████████████████████████████████████████████████████████████████▊ | 750/1000 [1:15:27<23:37, 5.67s/it]Saving PEFT checkpoint...

{'loss': 1.6001, 'learning_rate': 0.0002, 'epoch': 0.26}

...

{'loss': 1.6287, 'learning_rate': 0.0002, 'epoch': 0.34}

{'loss': 2.3511, 'learning_rate': 0.0002, 'epoch': 0.34}

100%|████████████████████████████████████████████████████████████████████████████████████████| 1000/1000 [1:42:08<00:00, 7.34s/it]Saving PEFT checkpoint...

{'train_runtime': 6132.3668, 'train_samples_per_second': 2.609, 'train_steps_per_second': 0.163, 'train_loss': 1.7447978076934814, 'epoch': 0.34}

100%|████████████████████████████████████████████████████████████████████████████████████████| 1000/1000 [1:42:12<00:00, 6.13s/it]

Saving PEFT checkpoint...

***** train metrics *****

epoch = 0.34

train_loss = 1.7448

train_runtime = 1:42:12.36

train_samples_per_second = 2.609

train_steps_per_second = 0.163

Model output weight files:

tree -h llama-7b-qlora

llama-7b-qlora

├── [ 167] all_results.json

├── [ 316] checkpoint-1000

│ ├── [ 528] adapter_config.json

│ ├── [ 75] adapter_model

│ │ ├── [ 528] adapter_config.json

│ │ ├── [610M] adapter_model.bin

│ │ └── [ 27] README.md

│ ├── [610M] adapter_model.bin

│ ├── [ 21] added_tokens.json

│ ├── [3.1G] optimizer.pt

│ ├── [ 27] README.md

│ ├── [ 14K] rng_state.pth

│ ├── [ 627] scheduler.pt

│ ├── [ 96] special_tokens_map.json

│ ├── [ 742] tokenizer_config.json

│ ├── [488K] tokenizer.model

│ ├── [ 11K] trainer_state.json

│ └── [5.6K] training_args.bin

├── [ 316] checkpoint-750

│ ├── [ 528] adapter_config.json

│ ├── [ 75] adapter_model

│ │ ├── [ 528] adapter_config.json

│ │ ├── [610M] adapter_model.bin

│ │ └── [ 27] README.md

│ ├── [610M] adapter_model.bin

│ ├── [ 21] added_tokens.json

│ ├── [3.1G] optimizer.pt

│ ├── [ 27] README.md

│ ├── [ 14K] rng_state.pth

│ ├── [ 627] scheduler.pt

│ ├── [ 96] special_tokens_map.json

│ ├── [ 742] tokenizer_config.json

│ ├── [488K] tokenizer.model

│ ├── [8.0K] trainer_state.json

│ └── [5.6K] training_args.bin

├── [ 0] completed

├── [ 199] metrics.json

├── [ 11K] trainer_state.json

└── [ 167] train_results.json

4 directories, 35 files

GPU memory usage:

> nvidia-smi

Sun Jun 11 19:32:39 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 515.105.01 Driver Version: 515.105.01 CUDA Version: 11.7 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA A800 80G... Off | 00000000:34:00.0 Off | 0 |

| N/A 40C P0 66W / 300W | 3539MiB / 81920MiB | 0% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 1 NVIDIA A800 80G... Off | 00000000:35:00.0 Off | 0 |

| N/A 54C P0 77W / 300W | 3077MiB / 81920MiB | 24% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 2 NVIDIA A800 80G... Off | 00000000:36:00.0 Off | 0 |

| N/A 55C P0 75W / 300W | 3077MiB / 81920MiB | 8% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 3 NVIDIA A800 80G... Off | 00000000:37:00.0 Off | 0 |

| N/A 57C P0 81W / 300W | 3077MiB / 81920MiB | 14% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 4 NVIDIA A800 80G... Off | 00000000:9B:00.0 Off | 0 |

| N/A 60C P0 83W / 300W | 3077MiB / 81920MiB | 8% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 5 NVIDIA A800 80G... Off | 00000000:9C:00.0 Off | 0 |

| N/A 61C P0 228W / 300W | 3077MiB / 81920MiB | 25% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 6 NVIDIA A800 80G... Off | 00000000:9D:00.0 Off | 0 |

| N/A 53C P0 265W / 300W | 3077MiB / 81920MiB | 6% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

| 7 NVIDIA A800 80G... Off | 00000000:9E:00.0 Off | 0 |

| N/A 46C P0 78W / 300W | 6891MiB / 81920MiB | 12% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 37939 C python 2513MiB |

| 1 N/A N/A 37939 C python 2819MiB |

| 2 N/A N/A 37939 C python 2819MiB |

| 3 N/A N/A 37939 C python 2819MiB |

| 4 N/A N/A 37939 C python 2819MiB |

| 5 N/A N/A 37939 C python 2819MiB |

| 6 N/A N/A 37939 C python 2819MiB |

| 7 N/A N/A 37939 C python 3561MiB |

+-----------------------------------------------------------------------------+

Merging the model weights

Add a weight-merging script (export_hf_checkpoint.py) that merges the LoRA weights back into the original weights.

import os

import torch

import transformers

from peft import PeftModel

from transformers import LlamaForCausalLM, LlamaTokenizer # noqa: F402

BASE_MODEL = os.environ.get("BASE_MODEL", None)

LORA_MODEL = os.environ.get("LORA_MODEL", "tloen/alpaca-lora-7b")

HF_CHECKPOINT = os.environ.get("HF_CHECKPOINT", "./hf_ckpt")

assert (

    BASE_MODEL

), "Please specify a value for BASE_MODEL environment variable, e.g. `export BASE_MODEL=decapoda-research/llama-7b-hf`" # noqa: E501

tokenizer = LlamaTokenizer.from_pretrained(BASE_MODEL)

base_model = LlamaForCausalLM.from_pretrained(

    BASE_MODEL,

    #load_in_8bit=False,

    torch_dtype=torch.bfloat16,

    device_map={"": "cpu"},

)

first_weight = base_model.model.layers[0].self_attn.q_proj.weight

first_weight_old = first_weight.clone()

lora_model = PeftModel.from_pretrained(

    base_model,

    # TODO

    # "tloen/alpaca-lora-7b",

    LORA_MODEL,

    #device_map={"": "cpu"},

    #torch_dtype=torch.float16,

)

lora_weight = lora_model.base_model.model.model.layers[

    0

].self_attn.q_proj.weight

assert torch.allclose(first_weight_old, first_weight)

# merge weights

for layer in lora_model.base_model.model.model.layers:

    layer.self_attn.q_proj.merge_weights = True

    layer.self_attn.v_proj.merge_weights = True

lora_model.train(False)

# did we do anything?

#assert not torch.allclose(first_weight_old, first_weight)

lora_model_sd = lora_model.state_dict()

deloreanized_sd = {

    k.replace("base_model.model.", ""): v

    for k, v in lora_model_sd.items()

    if "lora" not in k

}

LlamaForCausalLM.save_pretrained(

    base_model, HF_CHECKPOINT, state_dict=deloreanized_sd, max_shard_size="400MB"

)

Next, the merged weight files can be used for model inference.
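Conceptually, merging folds the low-rank update into the base weight so that inference no longer needs the adapter. In the standard LoRA formulation the merged weight is W' = W + (lora_alpha / r) * B A. The sketch below checks this identity on toy matrices in pure Python. It is an illustration of the arithmetic, not the peft implementation, and all function names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def linear(weight, x):
    """Compute W x for a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def merge_lora(weight, lora_a, lora_b, lora_alpha, r):
    """Return W + (lora_alpha / r) * (B @ A)."""
    scaling = lora_alpha / r
    delta = matmul(lora_b, lora_a)  # shape: out_features x in_features
    return [
        [w + scaling * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


def lora_forward(weight, lora_a, lora_b, lora_alpha, r, x):
    """Unmerged forward pass: W x + scaling * B (A x)."""
    scaling = lora_alpha / r
    base = linear(weight, x)
    low_rank = linear(lora_b, linear(lora_a, x))
    return [b + scaling * l for b, l in zip(base, low_rank)]


if __name__ == "__main__":
    W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]  # out=2, in=3
    A = [[1.0, 2.0, 3.0]]                   # r=1, in=3
    B = [[0.5], [-1.0]]                     # out=2, r=1
    x = [1.0, 1.0, 1.0]
    merged = merge_lora(W, A, B, lora_alpha=2, r=1)
    print(linear(merged, x), lora_forward(W, A, B, 2, 1, x))
```

Both paths produce the same output vector, which is why a merged checkpoint can be served without the adapter files.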

Model inference

Add the inference code (inference.py):

from transformers import AutoModelForCausalLM, LlamaTokenizer

import torch

model_id = "/data/nfs/guodong.li/pretrain/hf-llama-model/llama-7b"

merge_model_id = "/home/guodong.li/output/llama-7b-merge"

#model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True)

model = AutoModelForCausalLM.from_pretrained(merge_model_id, load_in_4bit=True, device_map="auto")

tokenizer = LlamaTokenizer.from_pretrained(model_id)

#print(model)

device = torch.device("cuda:0")

#model = model.to(device)

text = "Hello, my name is "

inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20, do_sample=True, top_k=30, top_p=0.85)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

print("\n------------------------------------------------\nInput: ")

line = input()

while line:

    inputs = tokenizer(line, return_tensors="pt").to(device)

    outputs = model.generate(**inputs, max_new_tokens=20, do_sample=True, top_k=30, top_p=0.85)

    print("Output: ",tokenizer.decode(outputs[0], skip_special_tokens=True))

    print("\n------------------------------------------------\nInput: ")

    line = input()

Run log:

> CUDA_VISIBLE_DEVICES=1 python inference.py

===================================BUG REPORT===================================

Welcome to bitsandbytes. For bug reports, please run

python -m bitsandbytes

and submit this information together with your error trace to: https://github.com/TimDettmers/bitsandbytes/issues

================================================================================

bin /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so

/home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/cuda_setup/main.py:149: UserWarning: WARNING: The following directories listed in your path were found to be non-existent: {PosixPath('/opt/rh/devtoolset-9/root/usr/lib/dyninst'), PosixPath('/opt/rh/devtoolset-7/root/usr/lib/dyninst')}

warn(msg)

CUDA SETUP: CUDA runtime path found: /usr/local/cuda-11.7/lib64/libcudart.so

CUDA SETUP: Highest compute capability among GPUs detected: 8.0

CUDA SETUP: Detected CUDA version 117

CUDA SETUP: Loading binary /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so...

The model weights are not tied. Please use the `tie_weights` method before using the `infer_auto_device` function.

Loading checkpoint shards: 100%|███████████████████████████████████████████████████████████████████| 39/39 [00:07<00:00, 5.02it/s]

Hello, my name is 23 and i have been doing this for the last 6 months. I have been a great

------------------------------------------------

Input:

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nGive three tips for staying healthy.\n\n### Input:\n\n\n### Response:

Output: Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nGive three tips for staying healthy.\n\n### Input:\n\n\n### Response: 1. Eat healthy food.\n2. Stay active.\n3. Eat

------------------------------------------------

Input:

GPU memory usage:

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 1 N/A N/A 21373 C python 5899MiB |

+-----------------------------------------------------------------------------+

Alternatively, inference can be run directly without merging the weights, as shown below.

Add the inference code (inference_qlora.py):

from transformers import AutoModelForCausalLM, LlamaTokenizer

import torch

from peft import PeftModel

model_id = "/data/nfs/guodong.li/pretrain/hf-llama-model/llama-7b"

lora_weights = "/home/guodong.li/output/llama-7b-qlora/checkpoint-1000/adapter_model"

#model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True)

model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True, device_map="auto")

model = PeftModel.from_pretrained(

    model,

    lora_weights,

)

tokenizer = LlamaTokenizer.from_pretrained(model_id)

#print(model)

device = torch.device("cuda:0")

#model = model.to(device)

text = "Hello, my name is "

inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20, do_sample=True, top_k=30, top_p=0.85)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

print("\n------------------------------------------------\nInput: ")

line = input()

while line:

    inputs = tokenizer(line, return_tensors="pt").to(device)

    outputs = model.generate(**inputs, max_new_tokens=20, do_sample=True, top_k=30, top_p=0.85)

    print("Output: ",tokenizer.decode(outputs[0], skip_special_tokens=True))

    print("\n------------------------------------------------\nInput: ")

    line = input()

GPU memory usage:

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 1 N/A N/A 10500 C python 7073MiB |

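+-----------------------------------------------------------------------------+

As you can see, inference with the unmerged adapter uses more GPU memory (7073 MiB) than inference with the merged weights (5899 MiB).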
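Comparisons like this are easier to repeat when the numbers are read out of the `nvidia-smi` process table programmatically. The sketch below is a minimal parser for rows in the format shown above. The function name and regular expression are hypothetical, and other `nvidia-smi` versions may lay out the table differently.

```python
import re

# Matches process rows such as: | 1 N/A N/A 21373 C python 5899MiB |
_PROCESS_ROW = re.compile(
    r"^\|\s*(\d+)\s+\S+\s+\S+\s+(\d+)\s+(\S+)\s+(.+?)\s+(\d+)MiB\s*\|$"
)


def parse_process_rows(text):
    """Extract GPU index, PID, process name and memory (MiB) from process rows."""
    rows = []
    for line in text.splitlines():
        match = _PROCESS_ROW.match(line.strip())
        if match:
            rows.append(
                {
                    "gpu": int(match.group(1)),
                    "pid": int(match.group(2)),
                    "name": match.group(4),
                    "mib": int(match.group(5)),
                }
            )
    return rows


if __name__ == "__main__":
    merged = "| 1 N/A N/A 21373 C python 5899MiB |"
    adapter = "| 1 N/A N/A 10500 C python 7073MiB |"
    usage = [row["mib"] for row in parse_process_rows(merged + "\n" + adapter)]
    print("extra MiB with unmerged adapter:", usage[1] - usage[0])
```

For the two runs above the difference is 1174 MiB. Applied to the eight-GPU training table earlier, summing the same field gives 22988 MiB across all devices.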

Of course, the LoRA weights can also be merged back into the base model weights with the merge_and_unload() method, as shown below:

model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16, device_map="auto")

model = PeftModel.from_pretrained(

    model,

    lora_weights,

)

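model = model.merge_and_unload()

So far we have only tried the 7B model. How does LLaMA-65B fare in terms of GPU memory, and is 48 GB really enough, as the authors claim? With that question in mind, we next fine-tune LLaMA-65B with QLoRA.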
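A back-of-the-envelope estimate gives a first answer. The sketch below computes only the storage for the quantized weights, assuming a nominal 65 billion parameters at 4 bits each. It deliberately ignores quantization constants, the LoRA parameters, optimizer state, and activations, so it is a lower bound rather than a prediction. The function names are hypothetical.

```python
def quantized_weight_bytes(num_params, bits=4):
    """Bytes needed to store `num_params` weights at `bits` bits each (weights only)."""
    return num_params * bits / 8


def to_gib(num_bytes):
    """Convert bytes to GiB."""
    return num_bytes / 2**30


if __name__ == "__main__":
    nominal_params = 65_000_000_000  # assumption: nominal size of LLaMA-65B
    for bits in (16, 4):
        size = quantized_weight_bytes(nominal_params, bits)
        print(f"{bits}-bit weights: {to_gib(size):.2f} GiB")
```

At 4 bits the weights alone come to roughly 30 GiB, which leaves headroom within 48 GB for the adapter, optimizer state, and activations, whereas 16-bit weights would already exceed a single 80 GB card.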

Fine-tuning the LLaMA-65B model

Single-GPU run:

CUDA_VISIBLE_DEVICES=0 python qlora.py \

> --model_name_or_path /data/nfs/guodong.li/pretrain/hf-llama-model/llama-65b \

> --dataset /data/nfs/guodong.li/data/alpaca_data_cleaned.json \

> --output_dir /home/guodong.li/output/llama-65b-qlora \

> --logging_steps 10 \

> --save_strategy steps \

> --data_seed 42 \

> --save_steps 100 \

> --save_total_limit 2 \

> --evaluation_strategy steps \

> --eval_dataset_size 128 \

> --max_eval_samples 200 \

> --per_device_eval_batch_size 1 \

> --max_new_tokens 32 \

> --dataloader_num_workers 3 \

> --group_by_length \

> --logging_strategy steps \

> --remove_unused_columns False \

> --do_train \

> --do_eval \

> --do_mmlu_eval \

> --lora_r 64 \

> --lora_alpha 16 \

> --lora_modules all \

> --double_quant \

> --quant_type nf4 \

> --bf16 \

> --bits 4 \

> --warmup_ratio 0.03 \

> --lr_scheduler_type constant \

> --gradient_checkpointing \

> --source_max_len 16 \

> --target_max_len 512 \

> --per_device_train_batch_size 1 \

> --gradient_accumulation_steps 16 \

> --max_steps 200 \

> --eval_steps 50 \

> --learning_rate 0.0001 \

> --adam_beta2 0.999 \

> --max_grad_norm 0.3 \

> --lora_dropout 0.05 \

> --weight_decay 0.0 \

> --seed 0 \

> --report_to tensorboard

===================================BUG REPORT===================================

Welcome to bitsandbytes. For bug reports, please run

python -m bitsandbytes

and submit this information together with your error trace to: https://github.com/TimDettmers/bitsandbytes/issues

================================================================================

bin /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so

/home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/cuda_setup/main.py:149: UserWarning: WARNING: The following directories listed in your path were found to be non-existent: {PosixPath('/opt/rh/devtoolset-7/root/usr/lib/dyninst'), PosixPath('/opt/rh/devtoolset-9/root/usr/lib/dyninst')}

warn(msg)

CUDA SETUP: CUDA runtime path found: /usr/local/cuda-11.7/lib64/libcudart.so

CUDA SETUP: Highest compute capability among GPUs detected: 8.0

CUDA SETUP: Detected CUDA version 117

CUDA SETUP: Loading binary /home/guodong.li/virtual-venv/qlora-venv-py310-cu117/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so...

loading base model /data/nfs/guodong.li/pretrain/hf-llama-model/llama-65b...

The model weights are not tied. Please use the `tie_weights` method before using the `infer_auto_device` function.

Loading checkpoint shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 81/81 [01:33<00:00, 1.16s/it]

adding LoRA modules...

trainable params: 399769600.0 || all params: 33705172992 || trainable: 1.1860778762206212

loaded model

Adding special tokens.

Found cached dataset json (/home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0)

Loading cached split indices for dataset at /home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0/cache-298a54784863252c.arrow and /home/guodong.li/.cache/huggingface/datasets/json/default-3c2be6958ca766f9/0.0.0/cache-e827ad98bd5ab470.arrow

Splitting train dataset in train and validation according to `eval_dataset_size`

Found cached dataset json (/home/guodong.li/.cache/huggingface/datasets/json/default-a08e5825b0ce557e/0.0.0/e347ab1c932092252e717ff3f949105a4dd28b27e842dd53157d2f72e276c2e4)

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 704.21it/s]

torch.bfloat16 1323843584 0.039277144217520744

torch.uint8 32380026880 0.9606837249535352

torch.float32 1318912 3.913082894407767e-05

{'loss': 1.5995, 'learning_rate': 0.0001, 'epoch': 0.0}

{'loss': 1.6043, 'learning_rate': 0.0001, 'epoch': 0.01}

{'loss': 1.7943, 'learning_rate': 0.0001, 'epoch': 0.01}

{'loss': 1.9854, 'learning_rate': 0.0001, 'epoch': 0.01}

{'loss': 2.5809, 'learning_rate': 0.0001, 'epoch': 0.02}

{'eval_loss': 2.077033519744873, 'eval_runtime': 101.312, 'eval_samples_per_second': 1.263, 'eval_steps_per_second': 1.263, 'epoch': 0.02}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1531/1531 [38:19<00:00, 1.50s/it]

{'mmlu_loss': 0.5965077246348893, 'mmlu_eval_accuracy_professional_accounting': 0.5483870967741935, 'mmlu_eval_accuracy_business_ethics': 0.7272727272727273, 'mmlu_eval_accuracy_international_law': 0.8461538461538461, 'mmlu_eval_accuracy_high_school_world_history': 0.6538461538461539, 'mmlu_eval_accuracy_college_physics': 0.45454545454545453, 'mmlu_eval_accuracy_public_relations': 0.6666666666666666, 'mmlu_eval_accuracy_management': 0.7272727272727273, 'mmlu_eval_accuracy_marketing': 0.88, 'mmlu_eval_accuracy_high_school_microeconomics': 0.5, 'mmlu_eval_accuracy_anatomy': 0.5714285714285714, 'mmlu_eval_accuracy_high_school_european_history': 0.7777777777777778, 'mmlu_eval_accuracy_high_school_government_and_politics': 0.7619047619047619, 'mmlu_eval_accuracy_college_mathematics': 0.2727272727272727, 'mmlu_eval_accuracy_logical_fallacies': 0.7222222222222222, 'mmlu_eval_accuracy_high_school_computer_science': 0.5555555555555556, 'mmlu_eval_accuracy_high_school_us_history': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_biology': 0.625, 'mmlu_eval_accuracy_formal_logic': 0.2857142857142857, 'mmlu_eval_accuracy_computer_security': 0.5454545454545454, 'mmlu_eval_accuracy_security_studies': 0.5185185185185185, 'mmlu_eval_accuracy_human_sexuality': 0.5833333333333334, 'mmlu_eval_accuracy_astronomy': 0.5625, 'mmlu_eval_accuracy_elementary_mathematics': 0.34146341463414637, 'mmlu_eval_accuracy_machine_learning': 0.45454545454545453, 'mmlu_eval_accuracy_moral_scenarios': 0.49, 'mmlu_eval_accuracy_college_chemistry': 0.125, 'mmlu_eval_accuracy_sociology': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_statistics': 0.2608695652173913, 'mmlu_eval_accuracy_high_school_chemistry': 0.3181818181818182, 'mmlu_eval_accuracy_philosophy': 0.7647058823529411, 'mmlu_eval_accuracy_virology': 0.5555555555555556, 'mmlu_eval_accuracy_electrical_engineering': 0.3125, 'mmlu_eval_accuracy_prehistory': 0.6, 'mmlu_eval_accuracy_high_school_mathematics': 0.20689655172413793, 
'mmlu_eval_accuracy_professional_law': 0.4176470588235294, 'mmlu_eval_accuracy_high_school_macroeconomics': 0.6046511627906976, 'mmlu_eval_accuracy_world_religions': 0.8421052631578947, 'mmlu_eval_accuracy_college_biology': 0.625, 'mmlu_eval_accuracy_college_computer_science': 0.36363636363636365, 'mmlu_eval_accuracy_college_medicine': 0.36363636363636365, 'mmlu_eval_accuracy_miscellaneous': 0.7093023255813954, 'mmlu_eval_accuracy_professional_medicine': 0.5483870967741935, 'mmlu_eval_accuracy_nutrition': 0.5757575757575758, 'mmlu_eval_accuracy_jurisprudence': 0.5454545454545454, 'mmlu_eval_accuracy_us_foreign_policy': 0.9090909090909091, 'mmlu_eval_accuracy_global_facts': 0.4, 'mmlu_eval_accuracy_medical_genetics': 0.9090909090909091, 'mmlu_eval_accuracy_moral_disputes': 0.5526315789473685, 'mmlu_eval_accuracy_abstract_algebra': 0.18181818181818182, 'mmlu_eval_accuracy_conceptual_physics': 0.38461538461538464, 'mmlu_eval_accuracy_econometrics': 0.5, 'mmlu_eval_accuracy_human_aging': 0.7391304347826086, 'mmlu_eval_accuracy_professional_psychology': 0.5217391304347826, 'mmlu_eval_accuracy_high_school_physics': 0.23529411764705882, 'mmlu_eval_accuracy_clinical_knowledge': 0.4482758620689655, 'mmlu_eval_accuracy_high_school_geography': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_psychology': 0.85, 'mmlu_eval_accuracy': 0.5572183480994843, 'epoch': 0.02}

{'loss': 1.6049, 'learning_rate': 0.0001, 'epoch': 0.02}

{'loss': 1.5043, 'learning_rate': 0.0001, 'epoch': 0.02}

{'loss': 1.5604, 'learning_rate': 0.0001, 'epoch': 0.03}

{'loss': 1.6828, 'learning_rate': 0.0001, 'epoch': 0.03}

{'loss': 2.3214, 'learning_rate': 0.0001, 'epoch': 0.03}

{'eval_loss': 1.8286590576171875, 'eval_runtime': 157.8957, 'eval_samples_per_second': 0.811, 'eval_steps_per_second': 0.811, 'epoch': 0.03}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1531/1531 [39:05<00:00, 1.53s/it]

{'mmlu_loss': 0.6160509743618856, 'mmlu_eval_accuracy_professional_accounting': 0.4838709677419355, 'mmlu_eval_accuracy_business_ethics': 0.7272727272727273, 'mmlu_eval_accuracy_international_law': 0.8461538461538461, 'mmlu_eval_accuracy_high_school_world_history': 0.7307692307692307, 'mmlu_eval_accuracy_college_physics': 0.45454545454545453, 'mmlu_eval_accuracy_public_relations': 0.5833333333333334, 'mmlu_eval_accuracy_management': 0.7272727272727273, 'mmlu_eval_accuracy_marketing': 0.84, 'mmlu_eval_accuracy_high_school_microeconomics': 0.5384615384615384, 'mmlu_eval_accuracy_anatomy': 0.5714285714285714, 'mmlu_eval_accuracy_high_school_european_history': 0.8333333333333334, 'mmlu_eval_accuracy_high_school_government_and_politics': 0.8095238095238095, 'mmlu_eval_accuracy_college_mathematics': 0.36363636363636365, 'mmlu_eval_accuracy_logical_fallacies': 0.7222222222222222, 'mmlu_eval_accuracy_high_school_computer_science': 0.5555555555555556, 'mmlu_eval_accuracy_high_school_us_history': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_biology': 0.46875, 'mmlu_eval_accuracy_formal_logic': 0.2857142857142857, 'mmlu_eval_accuracy_computer_security': 0.45454545454545453, 'mmlu_eval_accuracy_security_studies': 0.5555555555555556, 'mmlu_eval_accuracy_human_sexuality': 0.5, 'mmlu_eval_accuracy_astronomy': 0.6875, 'mmlu_eval_accuracy_elementary_mathematics': 0.43902439024390244, 'mmlu_eval_accuracy_machine_learning': 0.5454545454545454, 'mmlu_eval_accuracy_moral_scenarios': 0.4, 'mmlu_eval_accuracy_college_chemistry': 0.0, 'mmlu_eval_accuracy_sociology': 0.8181818181818182, 'mmlu_eval_accuracy_high_school_statistics': 0.2608695652173913, 'mmlu_eval_accuracy_high_school_chemistry': 0.2727272727272727, 'mmlu_eval_accuracy_philosophy': 0.8235294117647058, 'mmlu_eval_accuracy_virology': 0.5555555555555556, 'mmlu_eval_accuracy_electrical_engineering': 0.3125, 'mmlu_eval_accuracy_prehistory': 0.6, 'mmlu_eval_accuracy_high_school_mathematics': 0.20689655172413793, 
'mmlu_eval_accuracy_professional_law': 0.38235294117647056, 'mmlu_eval_accuracy_high_school_macroeconomics': 0.5348837209302325, 'mmlu_eval_accuracy_world_religions': 0.7894736842105263, 'mmlu_eval_accuracy_college_biology': 0.75, 'mmlu_eval_accuracy_college_computer_science': 0.18181818181818182, 'mmlu_eval_accuracy_college_medicine': 0.45454545454545453, 'mmlu_eval_accuracy_miscellaneous': 0.6976744186046512, 'mmlu_eval_accuracy_professional_medicine': 0.5806451612903226, 'mmlu_eval_accuracy_nutrition': 0.6060606060606061, 'mmlu_eval_accuracy_jurisprudence': 0.5454545454545454, 'mmlu_eval_accuracy_us_foreign_policy': 0.9090909090909091, 'mmlu_eval_accuracy_global_facts': 0.3, 'mmlu_eval_accuracy_medical_genetics': 1.0, 'mmlu_eval_accuracy_moral_disputes': 0.5526315789473685, 'mmlu_eval_accuracy_abstract_algebra': 0.36363636363636365, 'mmlu_eval_accuracy_conceptual_physics': 0.34615384615384615, 'mmlu_eval_accuracy_econometrics': 0.5, 'mmlu_eval_accuracy_human_aging': 0.8260869565217391, 'mmlu_eval_accuracy_professional_psychology': 0.5507246376811594, 'mmlu_eval_accuracy_high_school_physics': 0.058823529411764705, 'mmlu_eval_accuracy_clinical_knowledge': 0.41379310344827586, 'mmlu_eval_accuracy_high_school_geography': 0.8636363636363636, 'mmlu_eval_accuracy_high_school_psychology': 0.8666666666666667, 'mmlu_eval_accuracy': 0.5582642812271578, 'epoch': 0.03}

50%|███████████████████████████████████████████████████████████████████████████████ | 100/200 [2:29:20<1:21:41, 49.01s/it]Saving PEFT checkpoint...

{'loss': 1.5671, 'learning_rate': 0.0001, 'epoch': 0.04}

{'loss': 1.468, 'learning_rate': 0.0001, 'epoch': 0.04}

{'loss': 1.6495, 'learning_rate': 0.0001, 'epoch': 0.04}

{'loss': 1.6844, 'learning_rate': 0.0001, 'epoch': 0.05}

{'loss': 2.384, 'learning_rate': 0.0001, 'epoch': 0.05}

{'eval_loss': 1.7745016813278198, 'eval_runtime': 105.4656, 'eval_samples_per_second': 1.214, 'eval_steps_per_second': 1.214, 'epoch': 0.05}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1531/1531 [38:49<00:00, 1.52s/it]

{'mmlu_loss': 0.6844602518810469, 'mmlu_eval_accuracy_professional_accounting': 0.4838709677419355, 'mmlu_eval_accuracy_business_ethics': 0.7272727272727273, 'mmlu_eval_accuracy_international_law': 0.8461538461538461, 'mmlu_eval_accuracy_high_school_world_history': 0.6923076923076923, 'mmlu_eval_accuracy_college_physics': 0.45454545454545453, 'mmlu_eval_accuracy_public_relations': 0.5833333333333334, 'mmlu_eval_accuracy_management': 0.7272727272727273, 'mmlu_eval_accuracy_marketing': 0.84, 'mmlu_eval_accuracy_high_school_microeconomics': 0.46153846153846156, 'mmlu_eval_accuracy_anatomy': 0.5, 'mmlu_eval_accuracy_high_school_european_history': 0.8333333333333334, 'mmlu_eval_accuracy_high_school_government_and_politics': 0.8095238095238095, 'mmlu_eval_accuracy_college_mathematics': 0.2727272727272727, 'mmlu_eval_accuracy_logical_fallacies': 0.7222222222222222, 'mmlu_eval_accuracy_high_school_computer_science': 0.5555555555555556, 'mmlu_eval_accuracy_high_school_us_history': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_biology': 0.46875, 'mmlu_eval_accuracy_formal_logic': 0.2857142857142857, 'mmlu_eval_accuracy_computer_security': 0.45454545454545453, 'mmlu_eval_accuracy_security_studies': 0.5925925925925926, 'mmlu_eval_accuracy_human_sexuality': 0.5833333333333334, 'mmlu_eval_accuracy_astronomy': 0.6875, 'mmlu_eval_accuracy_elementary_mathematics': 0.4878048780487805, 'mmlu_eval_accuracy_machine_learning': 0.6363636363636364, 'mmlu_eval_accuracy_moral_scenarios': 0.4, 'mmlu_eval_accuracy_college_chemistry': 0.125, 'mmlu_eval_accuracy_sociology': 0.8181818181818182, 'mmlu_eval_accuracy_high_school_statistics': 0.2608695652173913, 'mmlu_eval_accuracy_high_school_chemistry': 0.2727272727272727, 'mmlu_eval_accuracy_philosophy': 0.7941176470588235, 'mmlu_eval_accuracy_virology': 0.5555555555555556, 'mmlu_eval_accuracy_electrical_engineering': 0.375, 'mmlu_eval_accuracy_prehistory': 0.6, 'mmlu_eval_accuracy_high_school_mathematics': 0.20689655172413793, 
'mmlu_eval_accuracy_professional_law': 0.38235294117647056, 'mmlu_eval_accuracy_high_school_macroeconomics': 0.5116279069767442, 'mmlu_eval_accuracy_world_religions': 0.8421052631578947, 'mmlu_eval_accuracy_college_biology': 0.625, 'mmlu_eval_accuracy_college_computer_science': 0.2727272727272727, 'mmlu_eval_accuracy_college_medicine': 0.4090909090909091, 'mmlu_eval_accuracy_miscellaneous': 0.6976744186046512, 'mmlu_eval_accuracy_professional_medicine': 0.5806451612903226, 'mmlu_eval_accuracy_nutrition': 0.6060606060606061, 'mmlu_eval_accuracy_jurisprudence': 0.5454545454545454, 'mmlu_eval_accuracy_us_foreign_policy': 0.9090909090909091, 'mmlu_eval_accuracy_global_facts': 0.3, 'mmlu_eval_accuracy_medical_genetics': 1.0, 'mmlu_eval_accuracy_moral_disputes': 0.5263157894736842, 'mmlu_eval_accuracy_abstract_algebra': 0.36363636363636365, 'mmlu_eval_accuracy_conceptual_physics': 0.38461538461538464, 'mmlu_eval_accuracy_econometrics': 0.3333333333333333, 'mmlu_eval_accuracy_human_aging': 0.8260869565217391, 'mmlu_eval_accuracy_professional_psychology': 0.5507246376811594, 'mmlu_eval_accuracy_high_school_physics': 0.11764705882352941, 'mmlu_eval_accuracy_clinical_knowledge': 0.41379310344827586, 'mmlu_eval_accuracy_high_school_geography': 0.8181818181818182, 'mmlu_eval_accuracy_high_school_psychology': 0.8166666666666667, 'mmlu_eval_accuracy': 0.5564941809356316, 'epoch': 0.05}

{'loss': 1.4593, 'learning_rate': 0.0001, 'epoch': 0.05}

{'loss': 1.4768, 'learning_rate': 0.0001, 'epoch': 0.06}

{'loss': 1.4924, 'learning_rate': 0.0001, 'epoch': 0.06}

{'loss': 1.6138, 'learning_rate': 0.0001, 'epoch': 0.07}

{'loss': 2.2459, 'learning_rate': 0.0001, 'epoch': 0.07}

{'eval_loss': 1.798527479171753, 'eval_runtime': 101.7857, 'eval_samples_per_second': 1.258, 'eval_steps_per_second': 1.258, 'epoch': 0.07}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1531/1531 [38:25<00:00, 1.51s/it]

{'mmlu_loss': 0.6745707825225292, 'mmlu_eval_accuracy_professional_accounting': 0.4838709677419355, 'mmlu_eval_accuracy_business_ethics': 0.6363636363636364, 'mmlu_eval_accuracy_international_law': 0.8461538461538461, 'mmlu_eval_accuracy_high_school_world_history': 0.6923076923076923, 'mmlu_eval_accuracy_college_physics': 0.36363636363636365, 'mmlu_eval_accuracy_public_relations': 0.6666666666666666, 'mmlu_eval_accuracy_management': 0.8181818181818182, 'mmlu_eval_accuracy_marketing': 0.8, 'mmlu_eval_accuracy_high_school_microeconomics': 0.6153846153846154, 'mmlu_eval_accuracy_anatomy': 0.5714285714285714, 'mmlu_eval_accuracy_high_school_european_history': 0.7777777777777778, 'mmlu_eval_accuracy_high_school_government_and_politics': 0.8095238095238095, 'mmlu_eval_accuracy_college_mathematics': 0.36363636363636365, 'mmlu_eval_accuracy_logical_fallacies': 0.7222222222222222, 'mmlu_eval_accuracy_high_school_computer_science': 0.5555555555555556, 'mmlu_eval_accuracy_high_school_us_history': 0.8181818181818182, 'mmlu_eval_accuracy_high_school_biology': 0.53125, 'mmlu_eval_accuracy_formal_logic': 0.21428571428571427, 'mmlu_eval_accuracy_computer_security': 0.6363636363636364, 'mmlu_eval_accuracy_security_studies': 0.6296296296296297, 'mmlu_eval_accuracy_human_sexuality': 0.5833333333333334, 'mmlu_eval_accuracy_astronomy': 0.75, 'mmlu_eval_accuracy_elementary_mathematics': 0.3902439024390244, 'mmlu_eval_accuracy_machine_learning': 0.5454545454545454, 'mmlu_eval_accuracy_moral_scenarios': 0.41, 'mmlu_eval_accuracy_college_chemistry': 0.125, 'mmlu_eval_accuracy_sociology': 0.7727272727272727, 'mmlu_eval_accuracy_high_school_statistics': 0.34782608695652173, 'mmlu_eval_accuracy_high_school_chemistry': 0.3181818181818182, 'mmlu_eval_accuracy_philosophy': 0.7941176470588235, 'mmlu_eval_accuracy_virology': 0.5555555555555556, 'mmlu_eval_accuracy_electrical_engineering': 0.25, 'mmlu_eval_accuracy_prehistory': 0.6285714285714286, 'mmlu_eval_accuracy_high_school_mathematics': 
0.2413793103448276, 'mmlu_eval_accuracy_professional_law': 0.4294117647058823, 'mmlu_eval_accuracy_high_school_macroeconomics': 0.6046511627906976, 'mmlu_eval_accuracy_world_religions': 0.8421052631578947, 'mmlu_eval_accuracy_college_biology': 0.625, 'mmlu_eval_accuracy_college_computer_science': 0.18181818181818182, 'mmlu_eval_accuracy_college_medicine': 0.45454545454545453, 'mmlu_eval_accuracy_miscellaneous': 0.7209302325581395, 'mmlu_eval_accuracy_professional_medicine': 0.5161290322580645, 'mmlu_eval_accuracy_nutrition': 0.6060606060606061, 'mmlu_eval_accuracy_jurisprudence': 0.45454545454545453, 'mmlu_eval_accuracy_us_foreign_policy': 0.8181818181818182, 'mmlu_eval_accuracy_global_facts': 0.5, 'mmlu_eval_accuracy_medical_genetics': 0.9090909090909091, 'mmlu_eval_accuracy_moral_disputes': 0.6052631578947368, 'mmlu_eval_accuracy_abstract_algebra': 0.2727272727272727, 'mmlu_eval_accuracy_conceptual_physics': 0.34615384615384615, 'mmlu_eval_accuracy_econometrics': 0.5, 'mmlu_eval_accuracy_human_aging': 0.8260869565217391, 'mmlu_eval_accuracy_professional_psychology': 0.5652173913043478, 'mmlu_eval_accuracy_high_school_physics': 0.35294117647058826, 'mmlu_eval_accuracy_clinical_knowledge': 0.5172413793103449, 'mmlu_eval_accuracy_high_school_geography': 0.8181818181818182, 'mmlu_eval_accuracy_high_school_psychology': 0.85, 'mmlu_eval_accuracy': 0.5715981488410985, 'epoch': 0.07}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 200/200 [4:57:24<00:00, 38.35s/it]Saving PEFT checkpoint...

{'train_runtime': 17857.1127, 'train_samples_per_second': 0.179, 'train_steps_per_second': 0.011, 'train_loss': 1.7639566564559936, 'epoch': 0.07}

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 200/200 [4:57:37<00:00, 89.29s/it]

Saving PEFT checkpoint...

***** train metrics *****

epoch = 0.07

train_loss = 1.764

train_runtime = 4:57:37.11

train_samples_per_second = 0.179

train_steps_per_second = 0.011

100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 128/128 [01:40<00:00, 1.27it/s]

100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1531/1531 [38:35<00:00, 1.51s/it]

***** eval metrics *****

epoch = 0.07

eval_loss = 1.7985

eval_runtime = 0:01:42.39

eval_samples_per_second = 1.25

eval_steps_per_second = 1.25

GPU memory usage:

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 16138 C python 44515MiB |

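+-----------------------------------------------------------------------------+

As the output shows, GPU memory usage stays under 48 GB (44,515 MiB, about 43.5 GiB). The trade-off is that fine-tuning with QLoRA is slower than fine-tuning with LoRA.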
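The numbers reported in the training log and the nvidia-smi output above can be cross-checked with a little arithmetic. The sketch below only uses values copied from that output; the interpretation of the samples-per-step figure (a per-device batch size of 1 combined with 16 gradient-accumulation steps) is an assumption, since the accumulation setting is not shown in this section.

```python
# Values copied from the training log and the single-GPU nvidia-smi output above.
train_runtime_s = 17857.1127       # 'train_runtime'
train_samples_per_second = 0.179   # 'train_samples_per_second'
max_steps = 200                    # final progress bar shows 200/200
single_gpu_mem_mib = 44515         # GPU memory used by the python process

# Average wall-clock time per optimizer step (includes evaluation pauses).
seconds_per_step = train_runtime_s / max_steps

# Samples consumed per optimizer step, i.e. the effective batch size.
samples_per_step = train_samples_per_second * train_runtime_s / max_steps

# nvidia-smi reports MiB; convert to GiB for comparison with card capacity.
mem_gib = single_gpu_mem_mib / 1024

print(f"{seconds_per_step:.2f} s/step")
print(f"{samples_per_step:.1f} samples/step")
print(f"{mem_gib:.2f} GiB")
```

The per-step time works out to about 89.29 s, matching the 89.29s/it in the final progress bar, and the effective batch size comes out at roughly 16 samples per step.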

Multi-GPU memory usage is shown below:

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 3810 C python 8359MiB |

| 1 N/A N/A 3810 C python 7531MiB |

| 2 N/A N/A 3810 C python 7531MiB |

| 3 N/A N/A 3810 C python 7531MiB |

| 4 N/A N/A 3810 C python 7531MiB |

| 5 N/A N/A 3810 C python 7531MiB |

| 6 N/A N/A 3810 C python 7531MiB |

| 7 N/A N/A 3810 C python 6607MiB |

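+-----------------------------------------------------------------------------+

As the output shows, when fine-tuning across 8 GPUs, no single GPU uses more than 10 GB of memory, which puts fine-tuning of models with tens of billions of parameters within reach of many consumer-grade GPUs.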
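To summarize a process table like the one above without reading it row by row, the per-GPU figures can be parsed and aggregated. This is a minimal sketch: the helper name `per_gpu_memory_mib` and the regular expression are illustrative choices, and the rows are copied verbatim from the multi-GPU output above.

```python
import re

# Rows copied from the multi-GPU nvidia-smi process table above.
NVIDIA_SMI_ROWS = """
| 0 N/A N/A 3810 C python 8359MiB |
| 1 N/A N/A 3810 C python 7531MiB |
| 2 N/A N/A 3810 C python 7531MiB |
| 3 N/A N/A 3810 C python 7531MiB |
| 4 N/A N/A 3810 C python 7531MiB |
| 5 N/A N/A 3810 C python 7531MiB |
| 6 N/A N/A 3810 C python 7531MiB |
| 7 N/A N/A 3810 C python 6607MiB |
"""

# Columns: GPU id, GI id, CI id, PID, type, process name, memory in MiB.
ROW = re.compile(r"^\|\s*(\d+)\s+\S+\s+\S+\s+\d+\s+\S+\s+.+?\s+(\d+)MiB\s*\|$")


def per_gpu_memory_mib(table_text):
    """Return {gpu_id: total MiB used by listed processes} for a process table."""
    usage = {}
    for line in table_text.splitlines():
        match = ROW.match(line.strip())
        if match:  # header and border lines do not match and are skipped
            gpu_id, mem_mib = int(match.group(1)), int(match.group(2))
            usage[gpu_id] = usage.get(gpu_id, 0) + mem_mib
    return usage


usage = per_gpu_memory_mib(NVIDIA_SMI_ROWS)
peak_mib = max(usage.values())
total_mib = sum(usage.values())
print(f"GPUs: {len(usage)}, peak: {peak_mib} MiB, total: {total_mib} MiB")
```

For this run the peak is 8359 MiB (about 8.2 GiB) on GPU 0 and the total across all 8 GPUs is 60152 MiB (about 58.7 GiB), so the model's layers are spread fairly evenly across the cards.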

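Conclusion

This article walked through fine-tuning LLaMA models with QLoRA, a parameter-efficient fine-tuning technique, and showed how to run inference with the result.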

如何学习AI大模型?

我在一线互联网企业工作十余年里,指导过不少同行后辈。帮助很多人得到了学习和成长。

我意识到有很多经验和知识值得分享给大家,也可以通过我们的能力和经验解答大家在人工智能学习中的很多困惑,所以在工作繁忙的情况下还是坚持各种整理和分享。但苦于知识传播途径有限,很多互联网行业朋友无法获得正确的资料得到学习提升,故此将并将重要的AI大模型资料包括AI大模型入门学习思维导图、精品AI大模型学习书籍手册、视频教程、实战学习等录播视频免费分享出来。

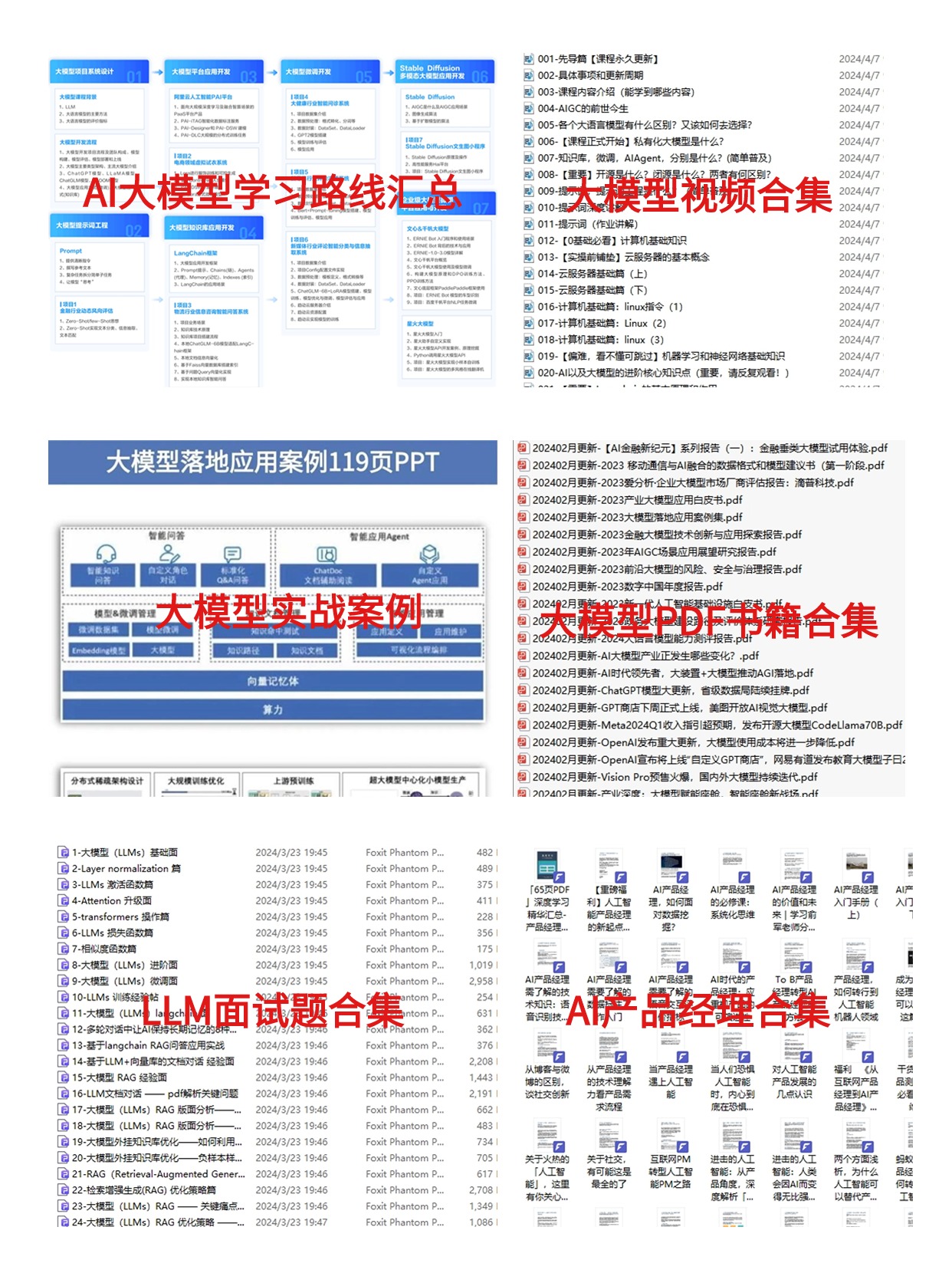

第一阶段: 从大模型系统设计入手,讲解大模型的主要方法;

第二阶段: 在通过大模型提示词工程从Prompts角度入手更好发挥模型的作用;

第三阶段: 大模型平台应用开发借助阿里云PAI平台构建电商领域虚拟试衣系统;

第四阶段: 大模型知识库应用开发以LangChain框架为例,构建物流行业咨询智能问答系统;

第五阶段: 大模型微调开发借助以大健康、新零售、新媒体领域构建适合当前领域大模型;

第六阶段: 以SD多模态大模型为主,搭建了文生图小程序案例;

第七阶段: 以大模型平台应用与开发为主,通过星火大模型,文心大模型等成熟大模型构建大模型行业应用。

👉学会后的收获:👈

• 基于大模型全栈工程实现(前端、后端、产品经理、设计、数据分析等),通过这门课可获得不同能力;

• 能够利用大模型解决相关实际项目需求: 大数据时代,越来越多的企业和机构需要处理海量数据,利用大模型技术可以更好地处理这些数据,提高数据分析和决策的准确性。因此,掌握大模型应用开发技能,可以让程序员更好地应对实际项目需求;

• 基于大模型和企业数据AI应用开发,实现大模型理论、掌握GPU算力、硬件、LangChain开发框架和项目实战技能, 学会Fine-tuning垂直训练大模型(数据准备、数据蒸馏、大模型部署)一站式掌握;

• 能够完成时下热门大模型垂直领域模型训练能力,提高程序员的编码能力: 大模型应用开发需要掌握机器学习算法、深度学习框架等技术,这些技术的掌握可以提高程序员的编码能力和分析能力,让程序员更加熟练地编写高质量的代码。

1.AI大模型学习路线图

2.100套AI大模型商业化落地方案

3.100集大模型视频教程

4.200本大模型PDF书籍

5.LLM面试题合集

6.AI产品经理资源合集

👉获取方式:

😝有需要的小伙伴,可以保存图片到wx扫描二v码免费领取【保证100%免费】🆓