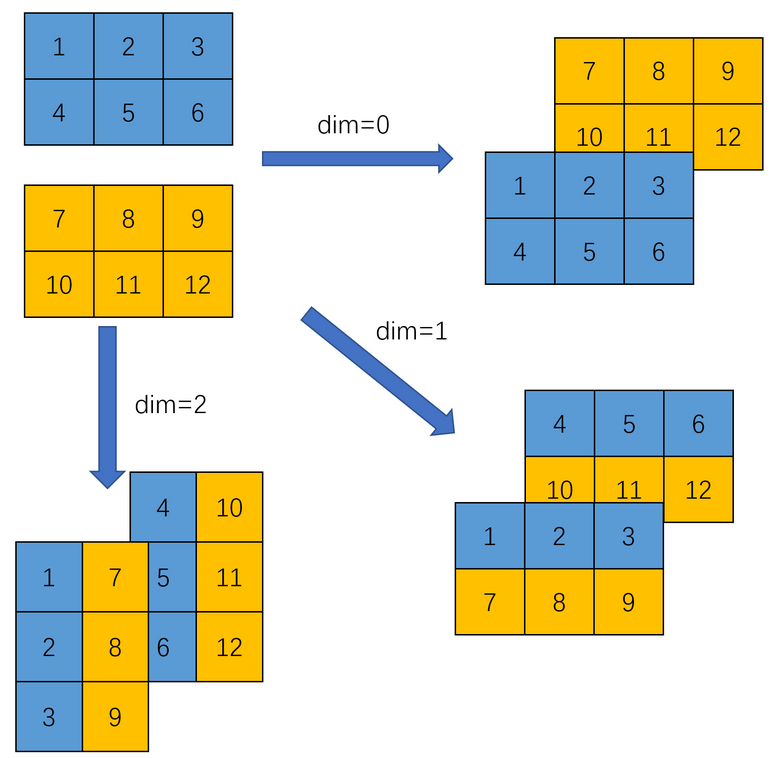

Start with an intuitive reading of "stack": as a verb, it simply means to put things into a pile, or to arrange things one on top of another.

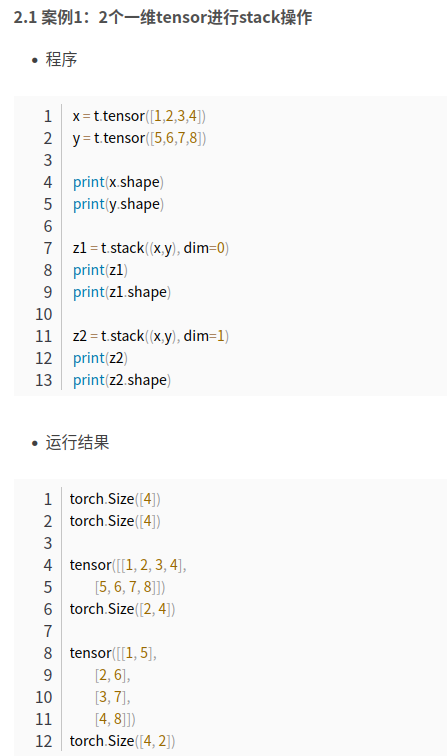

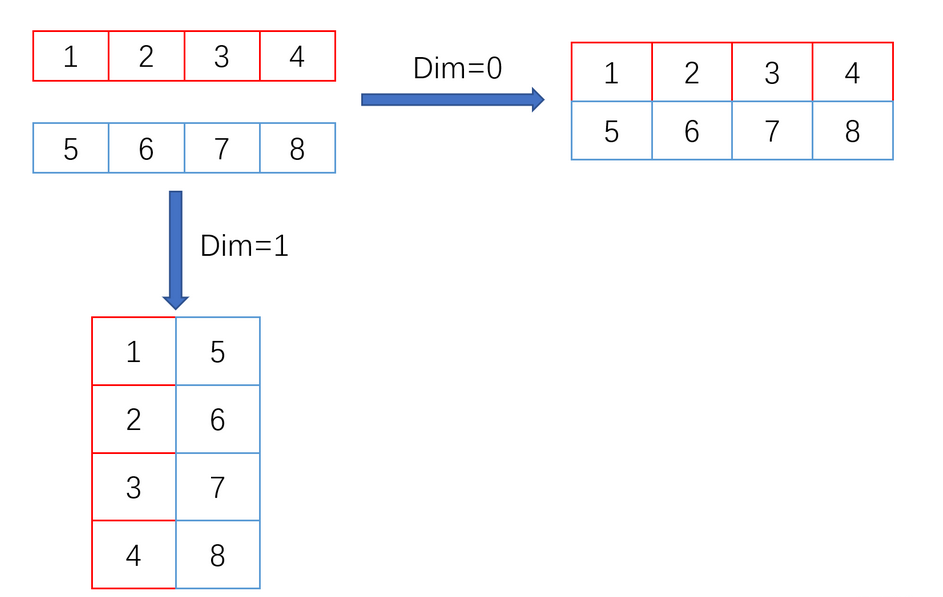

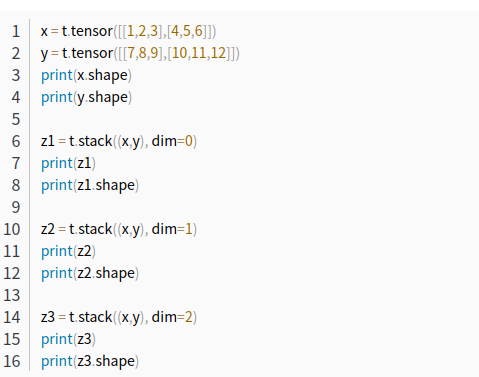

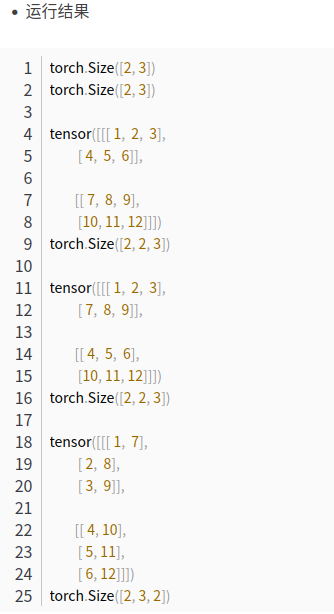

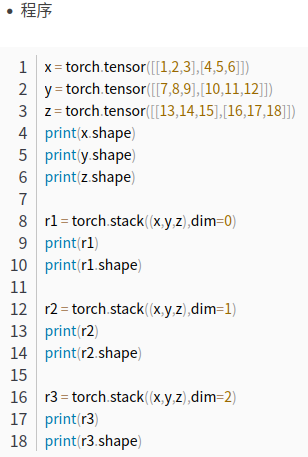

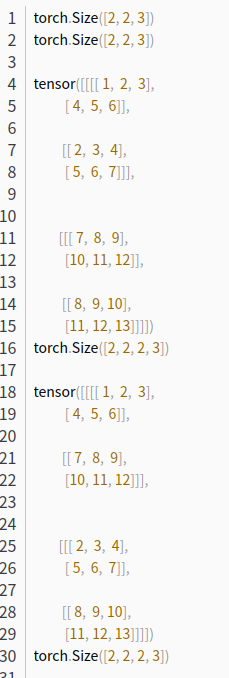

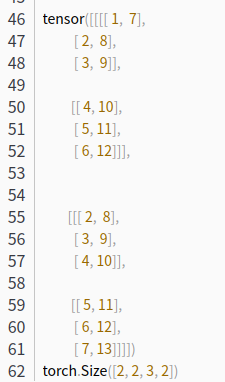

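torch.stack joins a sequence of tensors (two or more) along a new dimension: it inserts a new dimension and concatenates the tensors along it. This differs from torch.cat, which joins tensors along a dimension that already exists. Note that every tensor in the sequence must have the same shape, and therefore the same number of dimensions.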
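Before the larger example, here is a minimal sketch of the difference between stacking and concatenating. The tensors `a` and `b` and the variable names are made up for illustration; only `torch.stack`, `torch.cat` and `unsqueeze` are real PyTorch calls.

```python
import torch

a = torch.tensor([[1, 2, 3], [4, 5, 6]])       # shape (2, 3)
b = torch.tensor([[7, 8, 9], [10, 11, 12]])    # shape (2, 3)

stacked_first = torch.stack((a, b), dim=0)     # new leading dim  -> (2, 2, 3)
stacked_last = torch.stack((a, b), dim=-1)     # new trailing dim -> (2, 3, 2)
concatenated = torch.cat((a, b), dim=0)        # existing dim grows -> (4, 3)

print(stacked_first.shape)
print(stacked_last.shape)
print(concatenated.shape)

# Stacking behaves like unsqueezing every input at `dim`, then concatenating.
same = torch.equal(
    stacked_last,
    torch.cat((a.unsqueeze(-1), b.unsqueeze(-1)), dim=-1),
)
print(same)

# Inputs with different shapes are rejected.
try:
    torch.stack((a, torch.zeros(3, 2, dtype=a.dtype)), dim=0)
    mismatch_rejected = False
except RuntimeError:
    mismatch_rejected = True
print(mismatch_rejected)
```

With `dim=-1`, as used in the examples that follow, the new dimension is appended at the end, so each position of the original tensors ends up holding one value from every input.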

import torch

ogfW = 50

fW = ogfW // 10  # 5

ogfH = 40

fH = ogfH // 10  # 4

print("====>>xs"*8)

xs = torch.linspace(0, ogfW - 1, fW, dtype=torch.float).view(1, fW).expand(fH, fW)

print(torch.linspace(0, ogfW - 1, fW, dtype=torch.float))

print(torch.linspace(0, ogfW - 1, fW, dtype=torch.float).view(1, fW))

print(xs)

print("====>>ys"*8)

ys = torch.linspace(0, ogfH - 1, fH, dtype=torch.float).view(fH, 1).expand(fH, fW)

print(torch.linspace(0, ogfH - 1, fH, dtype=torch.float))

print(torch.linspace(0, ogfH - 1, fH, dtype=torch.float).view(fH, 1))

print(ys)

print("====>>frustum"*8)

print("===>>>shape xs=", xs.shape)

print("===>>>shape ys=", ys.shape)

frustum = torch.stack((xs, ys), -1)

print("===>>>shape frustum=", frustum.shape)

print(frustum)

====>>xs====>>xs====>>xs====>>xs====>>xs====>>xs====>>xs====>>xs

tensor([ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000])

tensor([[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]])

tensor([[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]])

====>>ys====>>ys====>>ys====>>ys====>>ys====>>ys====>>ys====>>ys

tensor([ 0., 13., 26., 39.])

tensor([[ 0.],

[13.],

[26.],

[39.]])

tensor([[ 0., 0., 0., 0., 0.],

[13., 13., 13., 13., 13.],

[26., 26., 26., 26., 26.],

[39., 39., 39., 39., 39.]])

====>>frustum====>>frustum====>>frustum====>>frustum====>>frustum====>>frustum====>>frustum====>>frustum

===>>>shape xs= torch.Size([4, 5])

===>>>shape ys= torch.Size([4, 5])

===>>>shape frustum= torch.Size([4, 5, 2])

tensor([[[ 0.0000, 0.0000],

[12.2500, 0.0000],

[24.5000, 0.0000],

[36.7500, 0.0000],

[49.0000, 0.0000]],

[[ 0.0000, 13.0000],

[12.2500, 13.0000],

[24.5000, 13.0000],

[36.7500, 13.0000],

[49.0000, 13.0000]],

[[ 0.0000, 26.0000],

[12.2500, 26.0000],

[24.5000, 26.0000],

[36.7500, 26.0000],

[49.0000, 26.0000]],

[[ 0.0000, 39.0000],

[12.2500, 39.0000],

[24.5000, 39.0000],

[36.7500, 39.0000],

[49.0000, 39.0000]]])
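One way to read the result above: after stacking on the last dimension, every grid cell holds its own (x, y) pair. The sketch below rebuilds the same 2D grid with the literal values 49 and 39 (that is, `ogfW - 1` and `ogfH - 1`) and checks this. The names `point`, `x_back` and `y_back` are illustrative only.

```python
import torch

fH, fW = 4, 5
xs = torch.linspace(0, 49, fW).view(1, fW).expand(fH, fW)   # x varies along columns
ys = torch.linspace(0, 39, fH).view(fH, 1).expand(fH, fW)   # y varies along rows
frustum = torch.stack((xs, ys), -1)                         # shape (fH, fW, 2)

# Row 2, column 3 holds the pair (xs[2, 3], ys[2, 3]): x = 36.75, y = 26.0
point = frustum[2, 3]
print(point)

# Splitting along the last dimension recovers the two inputs.
x_back, y_back = frustum.unbind(-1)
print(torch.equal(x_back, xs), torch.equal(y_back, ys))
```

Indexing `frustum[..., 0]` and `frustum[..., 1]` gives the same two tensors as `unbind(-1)`.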

3D case

import torch

D = 3

ogfW = 50

fW = ogfW // 10  # 5

ogfH = 40

fH = ogfH // 10  # 4

ds = torch.arange(*[-6,-3,1], dtype=torch.float).view(-1, 1, 1).expand(-1, fH, fW)

print("===>>>ds" * 5)

print(torch.arange(*[-6,-3,1], dtype=torch.float))

print(torch.arange(*[-6,-3,1], dtype=torch.float).view(-1, 1, 1))

print(ds)

print("===>>>xs" * 5)

xs = torch.linspace(0, ogfW - 1, fW, dtype=torch.float).view(1, 1, fW).expand(D, fH, fW)

print(torch.linspace(0, ogfW - 1, fW, dtype=torch.float))

print(torch.linspace(0, ogfW - 1, fW, dtype=torch.float).view(1, 1, fW))

print(xs)

ys = torch.linspace(0, ogfH - 1, fH, dtype=torch.float).view(1, fH, 1).expand(D, fH, fW)

print("===>>>ys" * 5)

print(torch.linspace(0, ogfH - 1, fH, dtype=torch.float))

print(torch.linspace(0, ogfH - 1, fH, dtype=torch.float).view(1, fH, 1))

print(ys)

print("==>> "*20)

print("===>>>shape ds=", ds.shape)

print("===>>>shape xs=", xs.shape)

print("===>>>shape ys=", ys.shape)

frustum = torch.stack((xs, ys, ds), -1)

print("===>>>shape frustum=", frustum.shape)

print(frustum)

===>>>ds===>>>ds===>>>ds===>>>ds===>>>ds

tensor([-6., -5., -4.])

tensor([[[-6.]],

[[-5.]],

[[-4.]]])

tensor([[[-6., -6., -6., -6., -6.],

[-6., -6., -6., -6., -6.],

[-6., -6., -6., -6., -6.],

[-6., -6., -6., -6., -6.]],

[[-5., -5., -5., -5., -5.],

[-5., -5., -5., -5., -5.],

[-5., -5., -5., -5., -5.],

[-5., -5., -5., -5., -5.]],

[[-4., -4., -4., -4., -4.],

[-4., -4., -4., -4., -4.],

[-4., -4., -4., -4., -4.],

[-4., -4., -4., -4., -4.]]])

===>>>xs===>>>xs===>>>xs===>>>xs===>>>xs

tensor([ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000])

tensor([[[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]]])

tensor([[[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]],

[[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]],

[[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000],

[ 0.0000, 12.2500, 24.5000, 36.7500, 49.0000]]])

===>>>ys===>>>ys===>>>ys===>>>ys===>>>ys

tensor([ 0., 13., 26., 39.])

tensor([[[ 0.],

[13.],

[26.],

[39.]]])

tensor([[[ 0., 0., 0., 0., 0.],

[13., 13., 13., 13., 13.],

[26., 26., 26., 26., 26.],

[39., 39., 39., 39., 39.]],

[[ 0., 0., 0., 0., 0.],

[13., 13., 13., 13., 13.],

[26., 26., 26., 26., 26.],

[39., 39., 39., 39., 39.]],

[[ 0., 0., 0., 0., 0.],

[13., 13., 13., 13., 13.],

[26., 26., 26., 26., 26.],

[39., 39., 39., 39., 39.]]])

==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>> ==>>

===>>>shape ds= torch.Size([3, 4, 5])

===>>>shape xs= torch.Size([3, 4, 5])

===>>>shape ys= torch.Size([3, 4, 5])

===>>>shape frustum= torch.Size([3, 4, 5, 3])

tensor([[[[ 0.0000, 0.0000, -6.0000],

[12.2500, 0.0000, -6.0000],

[24.5000, 0.0000, -6.0000],

[36.7500, 0.0000, -6.0000],

[49.0000, 0.0000, -6.0000]],

[[ 0.0000, 13.0000, -6.0000],

[12.2500, 13.0000, -6.0000],

[24.5000, 13.0000, -6.0000],

[36.7500, 13.0000, -6.0000],

[49.0000, 13.0000, -6.0000]],

[[ 0.0000, 26.0000, -6.0000],

[12.2500, 26.0000, -6.0000],

[24.5000, 26.0000, -6.0000],

[36.7500, 26.0000, -6.0000],

[49.0000, 26.0000, -6.0000]],

[[ 0.0000, 39.0000, -6.0000],

[12.2500, 39.0000, -6.0000],

[24.5000, 39.0000, -6.0000],

[36.7500, 39.0000, -6.0000],

[49.0000, 39.0000, -6.0000]]],

[[[ 0.0000, 0.0000, -5.0000],

[12.2500, 0.0000, -5.0000],

[24.5000, 0.0000, -5.0000],

[36.7500, 0.0000, -5.0000],

[49.0000, 0.0000, -5.0000]],

[[ 0.0000, 13.0000, -5.0000],

[12.2500, 13.0000, -5.0000],

[24.5000, 13.0000, -5.0000],

[36.7500, 13.0000, -5.0000],

[49.0000, 13.0000, -5.0000]],

[[ 0.0000, 26.0000, -5.0000],

[12.2500, 26.0000, -5.0000],

[24.5000, 26.0000, -5.0000],

[36.7500, 26.0000, -5.0000],

[49.0000, 26.0000, -5.0000]],

[[ 0.0000, 39.0000, -5.0000],

[12.2500, 39.0000, -5.0000],

[24.5000, 39.0000, -5.0000],

[36.7500, 39.0000, -5.0000],

[49.0000, 39.0000, -5.0000]]],

[[[ 0.0000, 0.0000, -4.0000],

[12.2500, 0.0000, -4.0000],

[24.5000, 0.0000, -4.0000],

[36.7500, 0.0000, -4.0000],

[49.0000, 0.0000, -4.0000]],

[[ 0.0000, 13.0000, -4.0000],

[12.2500, 13.0000, -4.0000],

[24.5000, 13.0000, -4.0000],

[36.7500, 13.0000, -4.0000],

[49.0000, 13.0000, -4.0000]],

[[ 0.0000, 26.0000, -4.0000],

[12.2500, 26.0000, -4.0000],

[24.5000, 26.0000, -4.0000],

[36.7500, 26.0000, -4.0000],

[49.0000, 26.0000, -4.0000]],

[[ 0.0000, 39.0000, -4.0000],

[12.2500, 39.0000, -4.0000],

[24.5000, 39.0000, -4.0000],

[36.7500, 39.0000, -4.0000],

[49.0000, 39.0000, -4.0000]]]])
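The 3D result can be read the same way: each cell of the (D, fH, fW) grid stores one (x, y, depth) triple. The sketch below rebuilds the tensor with the same values and, as an assumed follow-up step that is not in the original post, flattens it into a plain list of points. The name `points` and the index arithmetic are illustrative.

```python
import torch

D, fH, fW = 3, 4, 5
ds = torch.arange(-6, -3, 1, dtype=torch.float).view(-1, 1, 1).expand(-1, fH, fW)
xs = torch.linspace(0, 49, fW).view(1, 1, fW).expand(D, fH, fW)
ys = torch.linspace(0, 39, fH).view(1, fH, 1).expand(D, fH, fW)
frustum = torch.stack((xs, ys, ds), -1)        # shape (D, fH, fW, 3)

# Depth index 1, row 2, column 3: x = 36.75, y = 26.0, depth = -5.0
triple = frustum[1, 2, 3]
print(triple)

# Flatten the grid into D * fH * fW points with 3 coordinates each.
points = frustum.reshape(-1, 3)
print(points.shape)

# The flat index follows row-major order: d * (fH * fW) + h * fW + w
flat_index = 1 * (fH * fW) + 2 * fW + 3
print(torch.equal(points[flat_index], triple))
```

Note that `expand` only creates broadcast views of the one-dimensional inputs, while `torch.stack` returns a newly allocated tensor that holds every coordinate explicitly.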


Partially reposted from: https://blog.csdn.net/dongjinkun/article/details/132590205