Introduction

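Elasticsearch is an open-source, distributed search and analytics engine built on Apache Lucene. It is designed to handle very large volumes of data and to provide near-real-time search and analytics.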

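| Category | Key point | Description and value |
|---|---|---|
| Core architecture | Distributed and highly available | Data is automatically split into shards and stored across multiple nodes in the cluster, which makes horizontal scaling easy and lets the cluster tolerate node failures. |
| | Near-real-time (NRT) search | Data is typically searchable within about 1 second of being written, which suits monitoring and log analysis. |
| | RESTful API | All operations go through simple HTTP requests with JSON bodies, which greatly lowers the barrier to entry. |
| Core technology | Inverted index | A "term -> documents" mapping makes full-text queries fast. |
| | Powerful query DSL | Supports full-text search, fuzzy queries, range filters, geo queries, and other complex query needs. |
| | Aggregations | Provides grouping, statistics, and calculations for in-depth data analysis. |
| Main use cases | Application and website search | Provides efficient, accurate search for e-commerce platforms, content sites, and similar applications. |
| | Log and metrics analysis (ELK Stack) | Together with Logstash and Kibana it forms the ELK stack, the classic solution for centralized log management and analysis. |
| | Business intelligence and security analytics | Aggregating business data or security event data reveals trends and anomalies. |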
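
To make the "term -> documents" mapping from the table concrete, here is a minimal sketch of an inverted index in Python. It is an illustration only: the sample documents, the function names, and the whitespace tokenizer are assumptions, and Lucene's real data structures are far more elaborate.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each term to the sorted list of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        # Whitespace tokenization stands in for a real analyzer.
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search(index, query):
    """Return the IDs of documents that contain every term of the query."""
    postings = [set(index.get(term, ())) for term in query.lower().split()]
    return sorted(set.intersection(*postings)) if postings else []


# Hypothetical sample documents.
docs = {
    1: "cold relief granules",
    2: "cough relief syrup",
    3: "cold and flu tablets",
}
index = build_inverted_index(docs)
print(search(index, "cold relief"))  # [1]
```

A lookup only touches the posting lists of the query terms instead of scanning every document, which is why this structure keeps full-text queries fast.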

Core concepts at a glance

Understanding the following key terms will help you get a better grasp of Elasticsearch:

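- Index: a collection of related documents. Older material compares it to a "database" in a relational system; now that mapping types are gone, a "table" is the closer analogy.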

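- Document: the basic unit of information, represented as JSON, similar to a row in a database table.

- Node and cluster: a node is a single Elasticsearch instance; multiple nodes form a cluster and serve requests together.

- Shard and replica: an index can be split into multiple shards that are distributed across nodes. Each shard can have replicas, which provide data redundancy and high availability.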
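
The shard and replica ideas above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: Elasticsearch hashes the routing value with Murmur3 and has its own allocation logic, while the sketch uses CRC32 and a round-robin placement purely for illustration. The function and node names are hypothetical.

```python
import zlib


def route_to_shard(doc_id: str, number_of_primary_shards: int) -> int:
    """Pick the primary shard for a document from a hash of its ID."""
    # CRC32 is only a deterministic stand-in for the real hash function.
    return zlib.crc32(doc_id.encode("utf-8")) % number_of_primary_shards


def assign_shards(num_shards, num_replicas, nodes):
    """Place each primary and its replicas on distinct nodes, round-robin."""
    if num_replicas + 1 > len(nodes):
        # A real cluster would leave the extra replicas unassigned instead.
        raise ValueError("need at least num_replicas + 1 nodes")
    layout = {}
    for shard in range(num_shards):
        copies = [nodes[(shard + i) % len(nodes)] for i in range(num_replicas + 1)]
        layout[shard] = {"primary": copies[0], "replicas": copies[1:]}
    return layout


layout = assign_shards(num_shards=3, num_replicas=1, nodes=["node-a", "node-b", "node-c"])
for shard, placement in layout.items():
    print(shard, placement)
print(route_to_shard("drug-1001", 3))
```

Because the shard is derived from the hash modulo the number of primary shards, that number is fixed when the index is created, while the replica count can be changed later.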

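Strengths and challenges

- Main strengths: high performance (especially for full-text search and complex analytics), high scalability (it can handle petabyte-scale data), and flexibility (it supports a wide range of data types).

- Things to watch: resource consumption is relatively high (memory and disk I/O). The learning curve is fairly steep, especially for distributed cluster management and performance tuning. It does not provide full transaction support (ACID), so it is a poor fit for scenarios that require strong consistency, such as financial transactions.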

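Ecosystem and evolution

Elasticsearch is usually combined with Logstash (data collection and processing), Kibana (data visualization), and other components to form the Elastic Stack (formerly the ELK Stack), a complete data processing solution. Note also that mapping types inside an index were deprecated in 7.x and removed in 8.0; the recommended approach now is to use separate indices for data with different structures.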

Quick start

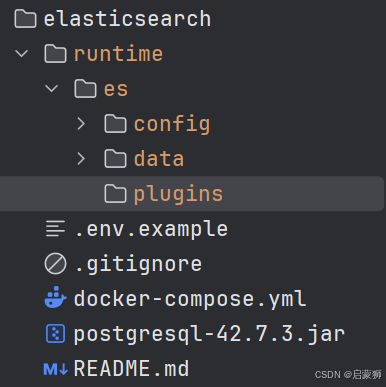

The directory structure is as follows; for details, see

```bash
# .env.example
ELASTIC_PASSWORD=dAUl36wBVN
KIBANA_PASSWORD=CfHhxf8fzv
```

```yml
# docker-compose.yml
services:
  elasticsearch:
    container_name: elasticsearch
    image: elasticsearch:8.12.2
    #image: docker.cnb.cool/jinriyaojia_huigu/yaocai/devops/elasticsearch:8.12.2
    restart: unless-stopped
    user: "1000:1000"
    environment:
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g -XX:+UseG1GC"
      - "TZ=Asia/Shanghai"
      # Disable HTTPS and serve plain HTTP
      - "xpack.security.http.ssl.enabled=false"
      - "xpack.security.enabled=true" # Keep security enabled, but over HTTP
      - "discovery.type=single-node"
      - "ELASTIC_PASSWORD=${ELASTIC_PASSWORD}"
    ports:
      - "19200:9200" # Default Elasticsearch HTTP port, used for REST API requests such as searching and indexing documents
      # - "19300:9300" # Default Elasticsearch transport port, used for node-to-node communication and transport-layer connections from Java clients
    volumes:
      - ./runtime/es/config:/usr/share/elasticsearch/config
      - ./runtime/es/data:/usr/share/elasticsearch/data
      - ./runtime/es/plugins:/usr/share/elasticsearch/plugins
      - ./runtime/es/logs:/usr/share/elasticsearch/logs # Mount the log directory
    networks:
      - elastic_net
    ulimits:
      nofile:
        soft: 65535
        hard: 65535
      nproc:
        soft: 4096
        hard: 4096
      memlock:
        soft: -1
        hard: -1
    deploy:
      resources:
        limits:
          memory: 2G
          cpus: '2.0'
        reservations:
          memory: 1G
          cpus: '1.0'
    logging:
      driver: json-file
      options:
        max-size: "30m"
        max-file: "10"
  kibana:
    container_name: kibana
    image: kibana:8.12.2
    #image: docker.cnb.cool/jinriyaojia_huigu/yaocai/devops/kibana:8.12.2
    restart: unless-stopped
    environment:
      - "TZ=Asia/Shanghai"
      - "I18N_LOCALE=zh-CN"
      - "ELASTICSEARCH_SSL_VERIFICATIONMODE=none" # Disable certificate verification in development
      # Disable features that are not needed
      - "XPACK_SECURITY_LOGIN_HELP_ENABLED=false"
      - "XPACK_SECURITY_PASSWORD_RESET_ENABLED=false"
      - "XPACK_SECURITY_SESSION_IDLE_TIMEOUT=1h"
      - "SERVER_HOST=0.0.0.0"
      - "SERVER_NAME=kibana"
      - "NODE_OPTIONS=--max-old-space-size=800" # Cap the Node.js heap at 800 MB
      - "ELASTICSEARCH_HOSTS=http://elasticsearch:9200"
      # 1.1 Username/password authentication
      - "ELASTICSEARCH_USERNAME=kibana_system"
      - "ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}"
      # 1.2 Service account token
      # - "ELASTICSEARCH_SERVICEACCOUNTTOKEN=AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpWckFhYWl4U1EyNmNRMGR4OGtXYmVR"
    ports:
      - "5601:5601"
    deploy:
      resources:
        limits:
          memory: 1G
          cpus: '0.5'
        reservations:
          memory: 512M
          cpus: '0.25'
    logging:
      driver: json-file
      options:
        max-size: "30m"
        max-file: "10"
    networks:
      - elastic_net
    depends_on:
      - elasticsearch

# Network configuration
networks:
  elastic_net:
    driver: bridge
```

Prepare the base configuration files

```bash
# The logs directory is mounted in docker-compose.yml, so create it together with the others
mkdir -p runtime/es/plugins runtime/es/data runtime/es/config runtime/es/logs
# Fix ownership so the config files can be copied and the IK Chinese analysis plugin can be installed
chown -R 1000:1000 runtime
docker run --rm -v ./runtime/es/config:/temp-config elasticsearch:8.12.2 cp -r /usr/share/elasticsearch/config/. /temp-config/
# Create the synonym file
mkdir -p runtime/es/config/certs && touch runtime/es/config/certs/synonym.txt
```

Start

```bash
# Set the environment variables: copy the example file, then edit the values in .env
cp .env.example .env
cat .env
docker compose up -d
```

First-start initialization

Install plugins

Install the IK Chinese analysis plugin and the pinyin analysis plugin.

```bash
[root@yaocai-local-dev elasticsearch]# docker compose exec elasticsearch bash
elasticsearch@33d5877b32ba:~$ ./bin/elasticsearch-plugin install https://get.infini.cloud/elasticsearch/analysis-ik/8.12.2
-> Installing https://get.infini.cloud/elasticsearch/analysis-ik/8.12.2
-> Downloading https://get.infini.cloud/elasticsearch/analysis-ik/8.12.2
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@ WARNING: plugin requires additional permissions @
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
* java.net.SocketPermission * connect,resolve
See https://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html
for descriptions of what these permissions allow and the associated risks.
Continue with installation? [y/N]y
-> Installed analysis-ik
-> Please restart Elasticsearch to activate any plugins installed
elasticsearch@33d5877b32ba:~$
elasticsearch@33d5877b32ba:~$
elasticsearch@33d5877b32ba:~$ ./bin/elasticsearch-plugin install https://get.infini.cloud/elasticsearch/analysis-pinyin/8.12.2
-> Installing https://get.infini.cloud/elasticsearch/analysis-pinyin/8.12.2
-> Downloading https://get.infini.cloud/elasticsearch/analysis-pinyin/8.12.2
-> Installed analysis-pinyin
-> Please restart Elasticsearch to activate any plugins installed
elasticsearch@33d5877b32ba:~$ exit
exit
[root@yaocai-local-dev elasticsearch]#
```

Configure authentication for Kibana's connection to Elasticsearch

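Either a username and password or a service account token works.

1.1 Create the Elasticsearch account that Kibana needs

Set the password of the built-in Elasticsearch account (kibana_system) referenced in docker-compose.yml; Kibana uses this account to access Elasticsearch.

Password: CfHhxf8fzv. If you change it, also update the Kibana account password that docker-compose.yml uses (KIBANA_PASSWORD in .env).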

```bash
[root@VM-4-10-centos elasticsearch]# docker compose exec elasticsearch bash
elasticsearch@0a5d8d64466e:~$ elasticsearch-reset-password -i -u kibana_system
This tool will reset the password of the [kibana_system] user.
You will be prompted to enter the password.
Please confirm that you would like to continue [y/N]y
Enter password for [kibana_system]:
Re-enter password for [kibana_system]:
Password for the [kibana_system] user successfully reset.
elasticsearch@0a5d8d64466e:~$
```

Account creation example

```bash
[root@yaocai-local-dev elasticsearch]# docker compose exec elasticsearch bash
elasticsearch@33d5877b32ba:~$
elasticsearch@33d5877b32ba:~$ elasticsearch-users useradd elastic-test -p 123456 -r kibana_system
WARNING: Group of file [/usr/share/elasticsearch/config/users] used to be [root], but now is [elasticsearch]
WARNING: Group of file [/usr/share/elasticsearch/config/users_roles] used to be [root], but now is [elasticsearch]
elasticsearch@33d5877b32ba:~$ exit
exit
[root@yaocai-local-dev elasticsearch]#
```

1.2 Create a Kibana service account token

Create the service account token that docker-compose.yml specifies for Kibana.

```bash
PS D:\workspace\devops\elasticsearch> docker compose exec -it elasticsearch elasticsearch-service-tokens create elastic/kibana kibana-token
SERVICE_TOKEN elastic/kibana/kibana-token = AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpWckFhYWl4U1EyNmNRMGR4OGtXYmVR
PS D:\workspace\devops\elasticsearch>
```

Restart Elasticsearch after the configuration is done

bash

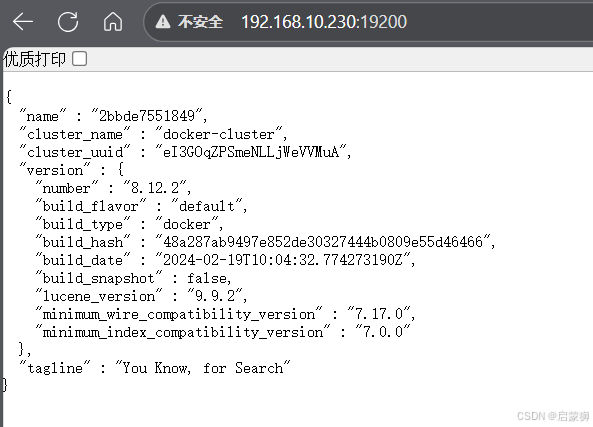

docker compost researt elasticsearch访问服务

Elasticsearch

```bash
http://127.0.0.1:19200
```

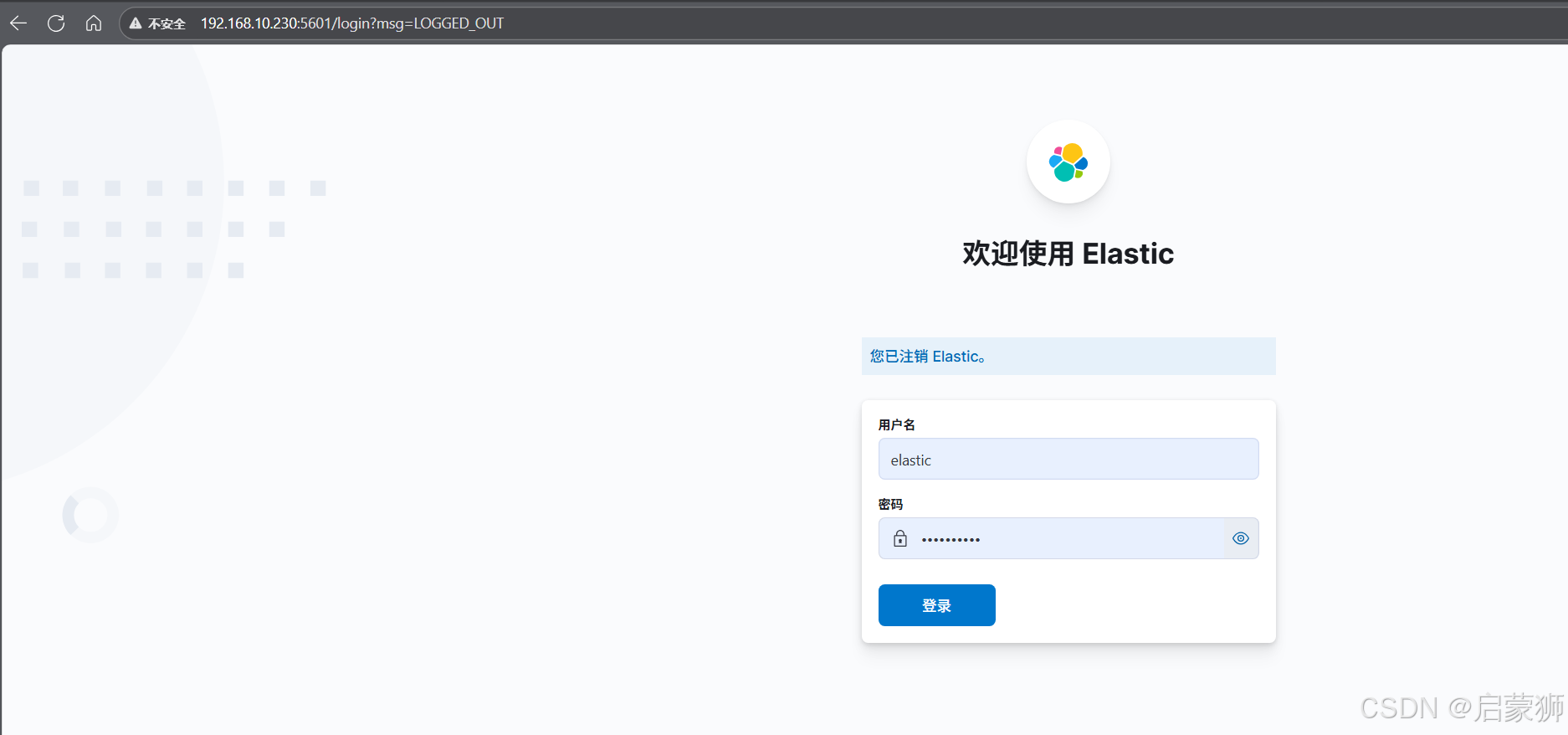

Kibana

```bash
http://127.0.0.1:5601
```

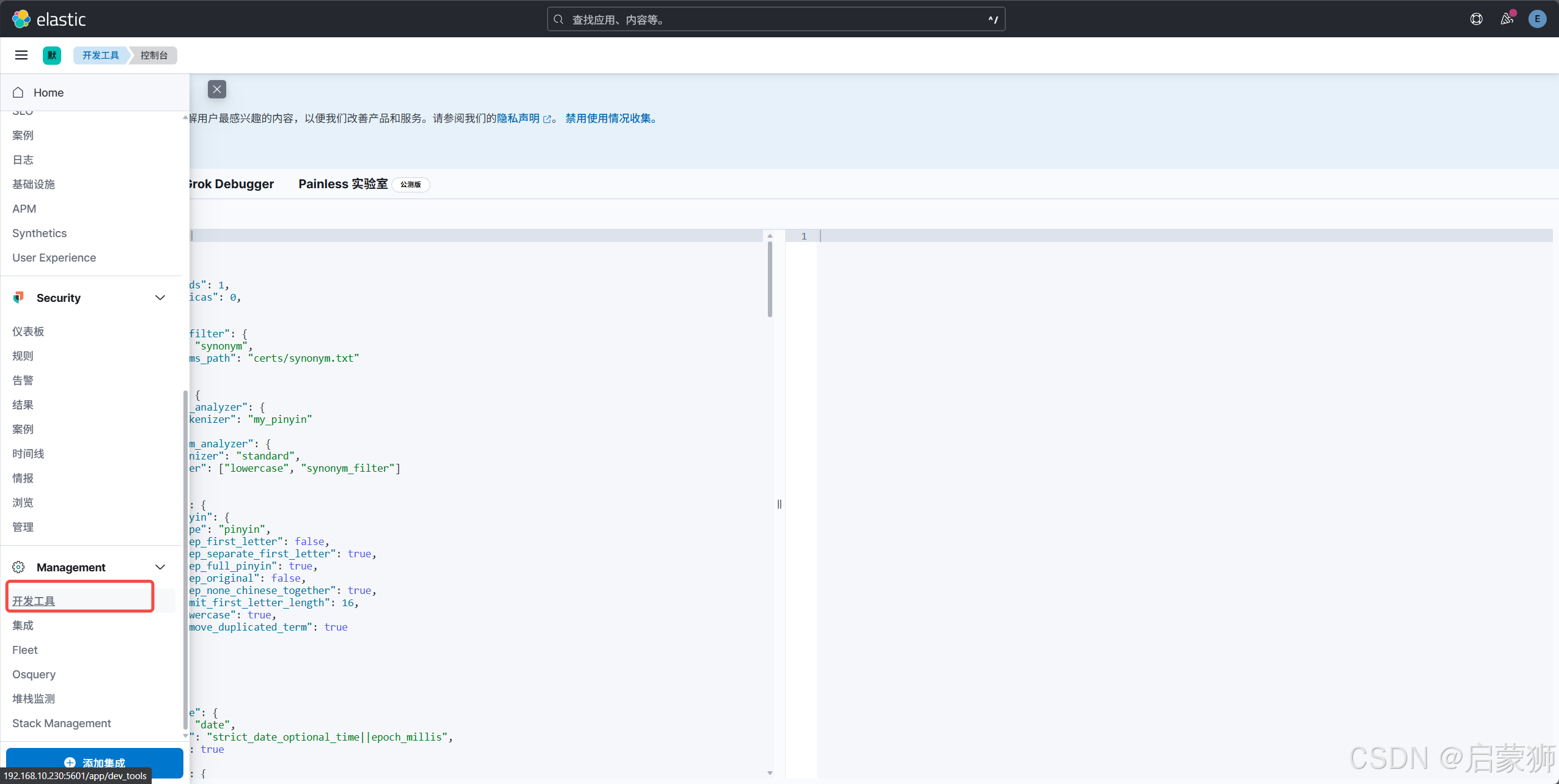

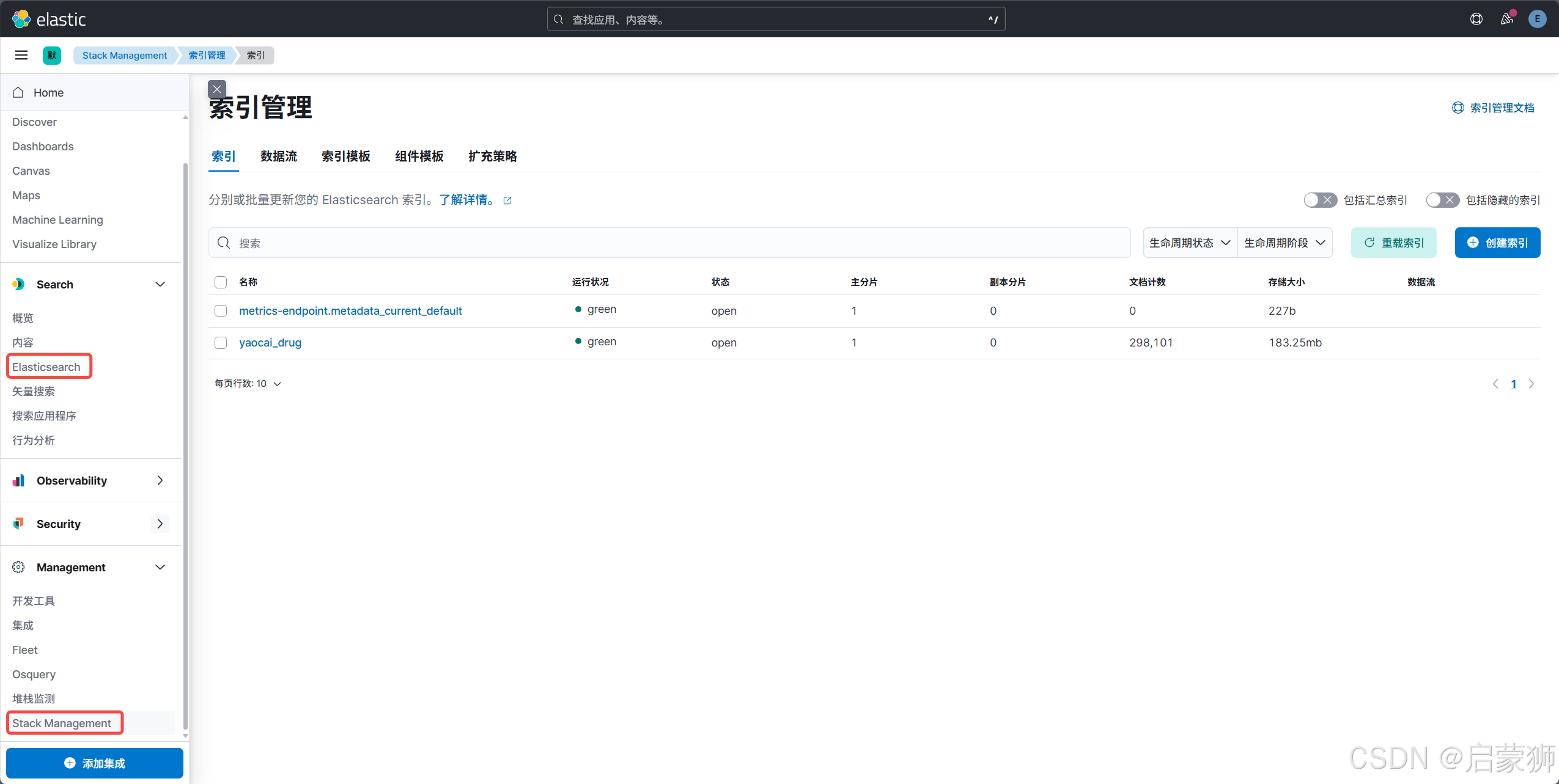

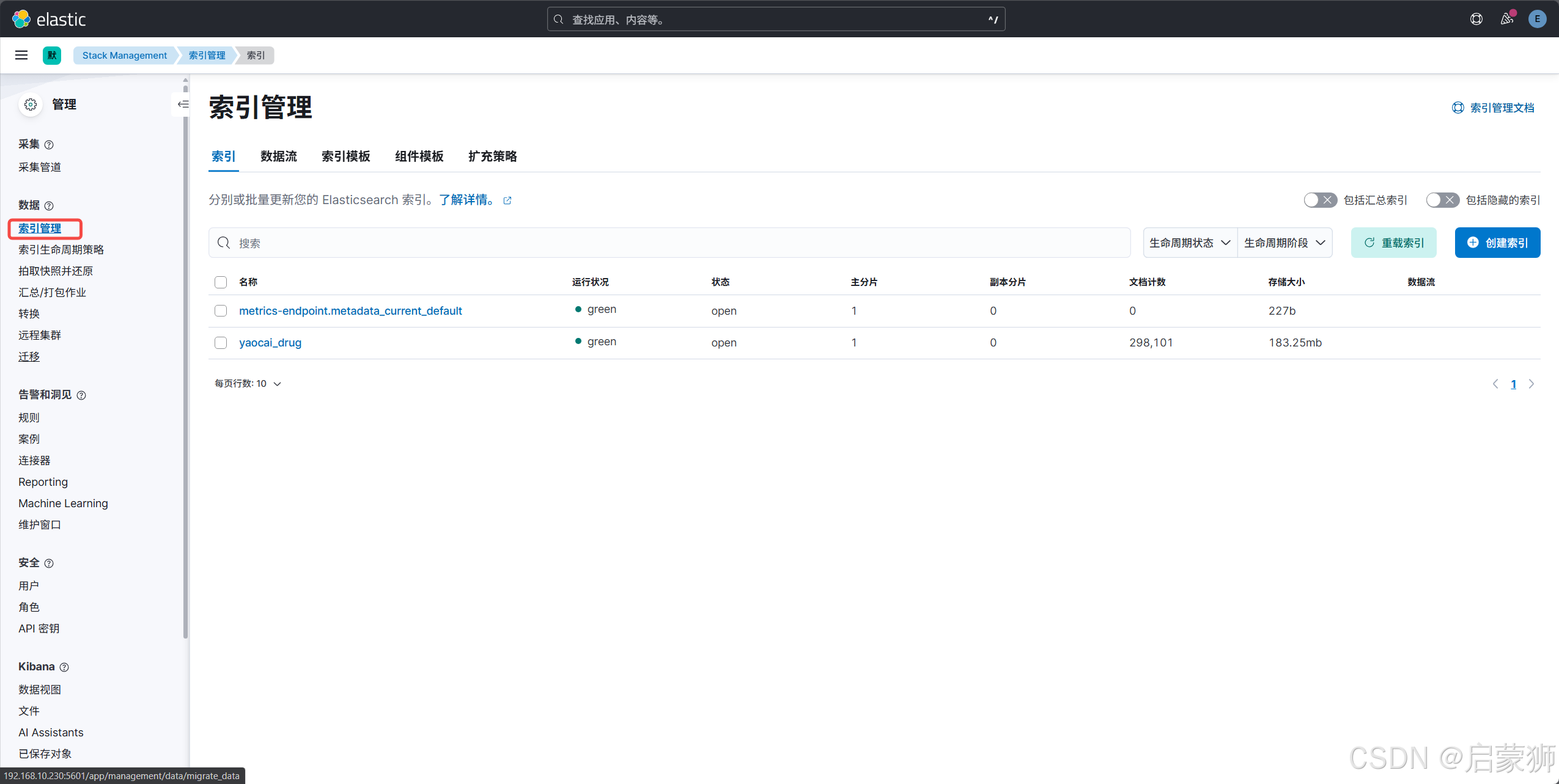

- Dev Tools console

- Index Management
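
Besides opening the URLs in a browser, the Elasticsearch endpoint can be checked from a script. The sketch below assumes the port mapping and the `elastic` user from this setup and uses only the Python standard library; the helper names are hypothetical, and `/_cluster/health` is the standard cluster health endpoint.

```python
import base64
import json
import urllib.request


def basic_auth_header(user: str, password: str) -> dict:
    """Build the HTTP Basic Authorization header for the given credentials."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def cluster_health(base_url: str, user: str, password: str, timeout: float = 5.0) -> dict:
    """Fetch and decode the cluster health document."""
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/_cluster/health",
        headers=basic_auth_header(user, password),
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

With the stack running, `cluster_health("http://127.0.0.1:19200", "elastic", password)["status"]` returns the health color reported by the cluster, where `password` is the value of ELASTIC_PASSWORD from .env.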

Usage examples

Create an index in Kibana

Run the following in the Dev Tools console:

```bash
# Apply one configuration to all .kibana* indices (.kibana, .kibana_1, and so on). This addresses disk space problems: using 1 primary shard and 0 replicas significantly reduces storage overhead.
PUT _index_template/.kibana
{
  "index_patterns": [".kibana*"],
  "template": {
    "settings": {
      "number_of_shards": 1,
      "number_of_replicas": 0,
      "auto_expand_replicas": false
    }
  },
  "priority": 1
}
```

```bash
# Create the index
PUT /yaocai_drug
{
  "settings": {
    "number_of_shards": 1,
    "number_of_replicas": 0,
    "analysis": {
      "filter": {
        "synonym_filter": {
          "type": "synonym",
          "synonyms_path": "certs/synonym.txt"
        }
      },
      "analyzer": {
        "pinyin_analyzer": {
          "tokenizer": "my_pinyin"
        },
        "synonym_analyzer": {
          "tokenizer": "standard",
          "filter": ["lowercase", "synonym_filter"]
        }
      },
      "tokenizer": {
        "my_pinyin": {
          "type": "pinyin",
          "keep_first_letter": false,
          "keep_separate_first_letter": true,
          "keep_full_pinyin": true,
          "keep_original": false,
          "keep_none_chinese_together": true,
          "limit_first_letter_length": 16,
          "lowercase": true,
          "remove_duplicated_term": true
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "update_time": {
        "type": "date",
        "format": "strict_date_optional_time||epoch_millis",
        "store": true
      },
      "is_delete": {
        "type": "boolean",
        "store": true
      },
      "name": {
        "type": "text",
        "fields": {
          "keyword": {
            "type": "keyword",
            "ignore_above": 256
          },
          "pinyin": {
            "type": "text",
            "store": false,
            "term_vector": "with_offsets",
            "analyzer": "pinyin_analyzer"
          }
        }
      },
      "trade_name": {
        "type": "text",
        "fields": {
          "keyword": {
            "type": "keyword",
            "ignore_above": 256
          },
          "pinyin": {
            "type": "text",
            "store": false,
            "term_vector": "with_offsets",
            "analyzer": "pinyin_analyzer"
          }
        }
      },
      "alias_name": {
        "type": "text",
        "fields": {
          "keyword": {
            "type": "keyword",
            "ignore_above": 256
          },
          "pinyin": {
            "type": "text",
            "store": false,
            "term_vector": "with_offsets",
            "analyzer": "pinyin_analyzer"
          }
        }
      },
      "specification": {
        "type": "text",
        "fields": {
          "keyword": {
            "type": "keyword",
            "ignore_above": 256
          },
          "pinyin": {
            "type": "text",
            "store": false,
            "term_vector": "with_offsets",
            "analyzer": "pinyin_analyzer"
          }
        }
      },
      "price": {
        "type": "float",
        "store": true
      },
      "factory": {
        "type": "text",
        "fields": {
          "keyword": {
            "type": "keyword",
            "ignore_above": 256
          },
          "pinyin": {
            "type": "text",
            "store": false,
            "term_vector": "with_offsets",
            "analyzer": "pinyin_analyzer"
          }
        }
      },
      "barcode": {
        "type": "keyword",
        "store": true
      },
      "classify_id": {
        "type": "keyword",
        "store": true
      },
      "status": {
        "type": "integer",
        "store": true
      }
    }
  }
}
```
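
The five text fields in the mapping above (name, trade_name, alias_name, specification, factory) share the same definition. As a sketch, assuming you prefer to generate the request body from a script rather than maintain it by hand, the Python below rebuilds the same `properties` object. The helper names `text_with_pinyin` and `build_properties` are hypothetical.

```python
import json

# Fields that share the text + keyword + pinyin definition in the mapping.
TEXT_FIELDS = ["name", "trade_name", "alias_name", "specification", "factory"]


def text_with_pinyin() -> dict:
    """Return a fresh copy of the mapping shared by the searchable text fields."""
    return {
        "type": "text",
        "fields": {
            "keyword": {"type": "keyword", "ignore_above": 256},
            "pinyin": {
                "type": "text",
                "store": False,
                "term_vector": "with_offsets",
                "analyzer": "pinyin_analyzer",
            },
        },
    }


def build_properties() -> dict:
    """Assemble the properties section of the yaocai_drug mapping."""
    properties = {
        "update_time": {
            "type": "date",
            "format": "strict_date_optional_time||epoch_millis",
            "store": True,
        },
        "is_delete": {"type": "boolean", "store": True},
        "price": {"type": "float", "store": True},
        "barcode": {"type": "keyword", "store": True},
        "classify_id": {"type": "keyword", "store": True},
        "status": {"type": "integer", "store": True},
    }
    for field in TEXT_FIELDS:
        properties[field] = text_with_pinyin()
    return properties


print(json.dumps({"mappings": {"properties": build_properties()}}, indent=2))
```

The printed JSON can be merged with the `settings` section and sent as the body of `PUT /yaocai_drug`.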

```bash
# Delete the index
DELETE /yaocai_drug

# Count the documents
GET /yaocai_drug/_count

# Search example
GET /yaocai_drug/_search
{
  "query": {
    "bool": {
      "should": [
        {
          "match_phrase": {
            "name": {
              "query": "感冒颗粒",
              "boost": 10,
              "slop": 15
            }
          }
        },
        {
          "match_phrase": {
            "factory": {
              "query": "感冒颗粒",
              "boost": 1,
              "slop": 15
            }
          }
        },
        {
          "match_phrase": {
            "factory.pinyin": {
              "query": "感冒颗粒",
              "boost": 1,
              "slop": 15
            }
          }
        },
        {
          "match_phrase": {
            "name.pinyin": {
              "query": "感冒颗粒",
              "boost": 1,
              "slop": 15
            }
          }
        },
        {
          "match_phrase": {
            "brand": {
              "query": "感冒颗粒",
              "boost": 1,
              "slop": 15
            }
          }
        }
      ],
      "minimum_should_match": 1
    }
  },
  "aggs": {
    "group_by_name_keyword": {
      "terms": {
        "field": "name.keyword",
        "size": 100
      }
    },
    "group_by_factory_keyword": {
      "terms": {
        "field": "factory.keyword",
        "size": 100
      }
    },
    "group_by_specification_keyword": {
      "terms": {
        "field": "specification.keyword",
        "size": 100
      }
    }
  }
}
```

Data synchronization (Logstash)

Reference

Full sync

```bash
/usr/share/logstash/bin/logstash -f all_yaocai_drug.conf
```

```config
# all_yaocai_drug.conf
# pipeline.ecs_compatibility: disabled
input {
  # Full sync
  jdbc {
    jdbc_connection_string => "jdbc:postgresql://192.168.10.230:5432/yaocai_dev"
    jdbc_user => "yaocai_dev"
    jdbc_password => "hEFWbfsEkzEEMcj6"
    jdbc_driver_library => "/root/logstash/postgresql-42.7.3.jar"
    jdbc_driver_class => "org.postgresql.Driver"
    jdbc_page_size => 1000
    jdbc_fetch_size => 1000
    statement => "SELECT id,update_time,is_delete,name,specification,price,factory,classify_id,barcode,status,is_otc,in_medical_insurance,medical_insurance_code,brand,unit,approval,business_type_text,images,upc,trade_name,alias_name FROM yaocai_drug where is_delete = false order by id asc;"
    # Run schedule; this cron expression means once per minute
    #schedule => "* * * * *"
    # Whether to lowercase column names
    #lowercase_column_names => false
    # Incremental sync parameters
    #use_column_value => true
    #tracking_column => "id"
    #record_last_run => true
    #last_run_metadata_path => "/root/logstash/your_last_run_metadata_file"
    # Connection validation and paging (optional)
    #jdbc_validate_connection => true
    #jdbc_paging_enabled => false
  }
}

# Add a second form of the specification with numbers and letters separated by a space
filter {
  if [specification] {
    ruby {
      code => "
        spec = event.get('specification')
        # Use a regular expression to insert a space between each number and the letters that follow it
        spec_modified = spec.gsub(/(\d+(\.\d+)?)([a-zA-Z*]+)/, '\\1 \\3')
        event.set('specification2', spec_modified)
      "
    }
  }
}

output {
  elasticsearch {
    hosts => ["http://127.0.0.1:19200"]
    index => "yaocai_drug"
    document_id => "%{id}"
    manage_template => false
    user => "elastic"
    password => "dAUl36wBVN"
  }
  # A stdout output can be added for debugging
  #stdout { codec => rubydebug }
}
```

Incremental sync

```config
# pipeline.ecs_compatibility: disabled
input {
  # Incremental sync
  jdbc {
    jdbc_connection_string => "jdbc:postgresql://192.168.10.230:5432/yaocai_dev2"
    jdbc_user => "yaocai_dev"
    jdbc_password => "hEFWbfsEkzEEMcj6"
    jdbc_driver_library => "/root/logstash/postgresql-42.7.3.jar"
    jdbc_driver_class => "org.postgresql.Driver"
    jdbc_page_size => 1000
    jdbc_fetch_size => 1000
    statement => "SELECT id,update_time,is_delete,name,specification,price,factory,classify_id,barcode,status,is_otc,in_medical_insurance,medical_insurance_code,brand,unit,approval,business_type_text,images,upc,trade_name,alias_name FROM yaocai_drug where update_time > :sql_last_value order by update_time asc;"
    # Run schedule; this cron expression means once every 3 minutes
    schedule => "*/3 * * * *"
    # Whether to lowercase column names
    #lowercase_column_names => false
    # Incremental sync parameters
    use_column_value => true
    tracking_column => "update_time"
    tracking_column_type => "timestamp"
    record_last_run => true
    last_run_metadata_path => "/root/logstash/your_last_run_metadata_file"
    # Connection validation and paging (optional)
    #jdbc_validate_connection => true
    #jdbc_paging_enabled => false
  }
}

# Add a second form of the specification with numbers and letters separated by a space
filter {
  if [specification] {
    ruby {
      code => "
        spec = event.get('specification')
        # Use a regular expression to insert a space between each number and the letters that follow it
        spec_modified = spec.gsub(/(\d+(\.\d+)?)([a-zA-Z*]+)/, '\\1 \\3')
        event.set('specification2', spec_modified)
      "
    }
  }
}

output {
  elasticsearch {
    hosts => ["http://127.0.0.1:19200"]
    index => "yaocai_drug"
    document_id => "%{id}"
    manage_template => false
    user => "elastic"
    password => "dAUl36wBVN"
  }
  # A stdout output can be added for debugging
  #stdout { codec => rubydebug }
}
```
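
To show what one scheduled run of the incremental pipeline does, here is a minimal Python simulation. It is a sketch under stated assumptions: the sample rows and function names are hypothetical, an in-memory list stands in for the yaocai_drug table, and the returned value plays the role of `:sql_last_value` as persisted through `last_run_metadata_path`. The regular expression is the same one the ruby filter uses.

```python
import re
from datetime import datetime

# Same pattern as the ruby filter: a number followed by letters or "*".
SPEC_PATTERN = re.compile(r"(\d+(\.\d+)?)([a-zA-Z*]+)")


def normalize_specification(spec: str) -> str:
    """Insert a space between a number and the unit that follows it."""
    return SPEC_PATTERN.sub(r"\1 \3", spec)


def run_once(rows, last_value):
    """Select rows newer than last_value, oldest first, and build events."""
    changed = sorted(
        (row for row in rows if row["update_time"] > last_value),
        key=lambda row: row["update_time"],
    )
    events = []
    for row in changed:
        event = dict(row)
        if event.get("specification"):
            event["specification2"] = normalize_specification(event["specification"])
        events.append(event)
    # The tracking value advances to the newest update_time that was seen.
    new_last_value = changed[-1]["update_time"] if changed else last_value
    return events, new_last_value


# Hypothetical rows standing in for the database table.
rows = [
    {"id": 1, "specification": "0.25g*12", "update_time": datetime(2024, 1, 1, 8, 0)},
    {"id": 2, "specification": "10ml", "update_time": datetime(2024, 1, 1, 9, 0)},
]
events, last_value = run_once(rows, datetime(2024, 1, 1, 8, 30))
print([event["id"] for event in events], last_value)  # [2] 2024-01-01 09:00:00
```

Because each event is written with `document_id => "%{id}"`, a row that is picked up again simply overwrites the existing document instead of creating a duplicate.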