
Contents

- Offline architecture of the Lightspeed command-line assistant

- Installing the Lightspeed command-line assistant

- Using the Lightspeed command-line assistant

- References

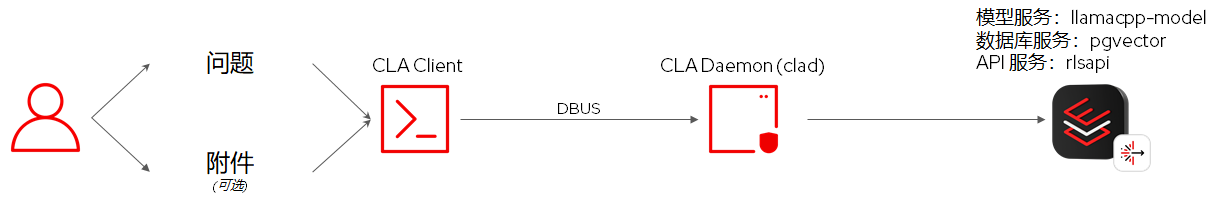

Lightspeed 命令行助手离线运行架构

Installing the Lightspeed command-line assistant

The steps below assume RHEL 10 or RHEL 9.6, and commands are run as root by default.

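- Create the required directories.

```bash
$ mkdir -p ~/.config
$ mkdir -p ~/.local/bin
```

- Install the offline environment for the Lightspeed command-line assistant from a container image. Note: registry.redhat.io can be replaced with the address of an offline mirror registry.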
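For the mirror-registry case mentioned in the note, only the registry host changes; the repository path and tag stay the same. A minimal sketch of that rewrite, assuming a hypothetical mirror at mirror.example.com:5000 that preserves the upstream repository paths (both the host name and the helper `mirror_ref` are made up for illustration):

```shell
#!/bin/sh
# Rewrite an image reference so that it points at an offline mirror registry.
# mirror.example.com:5000 is a hypothetical host, not taken from the article.
MIRROR_REGISTRY="${MIRROR_REGISTRY:-mirror.example.com:5000}"

mirror_ref() {
    # Strip everything up to the first "/" (the registry host) and
    # prepend the mirror host; repository path and tag are unchanged.
    printf '%s/%s\n' "$MIRROR_REGISTRY" "${1#*/}"
}

mirror_ref registry.redhat.io/rhel-cla/installer-rhel10:latest
```

The printed reference is what would take the place of the registry.redhat.io one in the podman commands for this step.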

```bash
$ podman login registry.redhat.io
$ podman run -u : --rm -v $HOME/.config:/config:Z -v $HOME/.local/bin:/config/.local/bin:Z registry.redhat.io/rhel-cla/installer-rhel10:latest install-systemd
⚠️ Red Hat Enterprise Linux (RHEL) command line assistant is a tool that uses AI technology. Do not include any personal information or other sensitive information in your input. Results should not be relied on without human review. More information available: https://red.ht/3JqbWuu.
ℹ️ 🚀 Installing rhel-cla command...
✅ 🎉 RHEL CLA installed!
ℹ️ 📁 Files installed:
• Command: ~/.local/bin/rhel-cla
• Config: ~/.config/rhel-cla/.env
• Runner: ~/.config/rhel-cla/rhel-cla-runner.sh
⚠️ ⚠️ NVIDIA GPU users (Linux): Edit ~/.config/rhel-cla/.env and uncomment:
LLAMACPP_IMAGE="quay.io/ramalama/cuda:latest"
⚠️ ⚠️ If running MacOS or Windows, make the command executable:
chmod +x ~/.local/bin/rhel-cla
⚠️ ⚠️ Make sure ~/.local/bin is in your PATH
# For bash:
echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.bashrc && source ~/.bashrc
# For zsh:
echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.zshrc && source ~/.zshrc
# For fish:
fish_add_path ~/.local/bin
ℹ️ 🚀 Quick start:
rhel-cla start
rhel-cla status
rhel-cla stop
ℹ️ 🗑️ Uninstall RHEL CLA:
rhel-cla uninstall
```

- Edit the configuration file. The resources referenced by LLM, RLSAPI_IMAGE, PGV_DB_IMAGE and LLAMACPP_IMAGE can be changed to offline locations. On a host with an NVIDIA GPU, you can also enable the LLAMACPP_IMAGE="quay.io/ramalama/cuda:latest" line and set HOST_DEVICE="/dev/nvidia0".
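As a rough illustration of the settings just described, an edited file might contain lines like the following. This is a sketch under assumptions: mirror.example.com:5000 is a hypothetical offline registry, the repository paths are assumed to match the upstream images that `rhel-cla start` pulls, and the mapping of each key to an image is inferred from the key names. LLM is left out because the article does not show its value format.

```shell
# ~/.config/rhel-cla/.env (fragment)
# mirror.example.com:5000 is a hypothetical offline registry; the repository
# paths are assumed to be the same as the upstream ones.
RLSAPI_IMAGE="mirror.example.com:5000/rhel-cla/rlsapi-rhel10:latest"
PGV_DB_IMAGE="mirror.example.com:5000/rhel-cla/rag-database-rhel10:latest"
LLAMACPP_IMAGE="mirror.example.com:5000/ramalama/ramalama:latest"

# NVIDIA GPU hosts only: use the CUDA image and pass the GPU device instead.
# LLAMACPP_IMAGE="quay.io/ramalama/cuda:latest"
# HOST_DEVICE="/dev/nvidia0"
```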

```bash
$ vi /root/.config/rhel-cla/.env
```

- Start the offline environment for the RHEL command-line assistant.

```bash
$ echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.bashrc && source ~/.bashrc
$ rhel-cla start
ℹ️ Starting RHEL CLA...
Loading configuration from: /root/.config/rhel-cla/.env
Using configured device: /dev/dri
Cleaning up any existing rhel-cla pod...
157ed9ec610c06ad2dc1e92c62244466c108c0011d6182d4a5df0b55fe182408
Creating model volume...
Checking for model from registry.redhat.io/rhel-cla/installer-rhel10:latest: Phi-4-mini-instruct-Q4_K_M.gguf
✅ Model Phi-4-mini-instruct-Q4_K_M.gguf already present in volume
Creating rhel-cla pod...
564ba035768f6d9ab0030d54f97ac195b152fdaeeeb4acadedeb23d35055d36b
Starting pgvector database...
Trying to pull registry.redhat.io/rhel-cla/rag-database-rhel10:latest...
Getting image source signatures
Checking if image destination supports signatures
Copying blob e08da0a07a49 done |
Copying blob a9031b52db2b done |
Copying config bfc8ae290c done |
Writing manifest to image destination
Storing signatures
ae823ab0e3e2c2376be37392111efe43a22e68748077f1ab494964511faf10ce
Starting model server (this may take time to download the model)...
Using extracted model: file:///models/Phi-4-mini-instruct-Q4_K_M.gguf
Trying to pull quay.io/ramalama/ramalama:latest...
Getting image source signatures
Copying blob 6b0210dfe625 done |
Copying blob bacb9627c0f0 done |
Copying config 845fddd033 done |
Writing manifest to image destination
e9c3f8472260d86a1a875b7a7372e4f8bf34a9724a75a3b7a713d156a1112d3c
Waiting for model server to be ready...
Attempt 1/60 - Waiting for model server...
Attempt 2/60 - Waiting for model server...
Attempt 3/60 - Waiting for model server...
Attempt 4/60 - Waiting for model server...
Attempt 5/60 - Waiting for model server...
Attempt 6/60 - Waiting for model server...
Attempt 7/60 - Waiting for model server...
Attempt 8/60 - Waiting for model server...
Attempt 9/60 - Waiting for model server...
Attempt 10/60 - Waiting for model server...
Attempt 11/60 - Waiting for model server...
Attempt 12/60 - Waiting for model server...
Attempt 13/60 - Waiting for model server...
Attempt 14/60 - Waiting for model server...
Attempt 15/60 - Waiting for model server...
Attempt 16/60 - Waiting for model server...
Attempt 17/60 - Waiting for model server...
Attempt 18/60 - Waiting for model server...
Attempt 19/60 - Waiting for model server...
Attempt 20/60 - Waiting for model server...
Model server is ready!
Detected model name: /models/Phi-4-mini-instruct-Q4_K_M.gguf
Starting API server...
Trying to pull registry.redhat.io/rhel-cla/rlsapi-rhel10:latest...
Getting image source signatures
Checking if image destination supports signatures
Copying blob c34187e1de1c done |
Copying blob 3a82aec6fcfc done |
Copying config d3d0d1c0a1 done |
Writing manifest to image destination
Storing signatures
994fcf0454d5cced426c6914db3c651c89afef3a6668ceca44fa609cfaed1d74
✅ RHEL CLA pod is running!
Services available at:
• API: http://localhost:8000
• Model Server: http://localhost:8888
• Database: http://localhost:5432
Useful commands:
podman logs rhel-cla-llamacpp-model # View model server logs
podman logs rhel-cla-rlsapi # View API server logs
podman logs rhel-cla-pgvector # View pgvector database logs
podman pod stop rhel-cla # Stop all containers
podman pod rm rhel-cla # Remove pod and containers
ℹ️ Install the RHEL command-line-assistant client package on your RHEL system and configure the endpoint as described here: https://red.ht/3JqbWuu
```

- List the running containers. They include the model server rhel-cla-llamacpp-model, the database rhel-cla-pgvector and the API service rhel-cla-rlsapi.
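The same check can be scripted. The sketch below takes a list of running container names, one per line, and prints whichever of the three expected containers is missing. The helper `missing_containers` is hypothetical and not part of rhel-cla.

```shell
#!/bin/sh
# Print the expected rhel-cla containers that are absent from a list of
# running container names (one name per line). Prints nothing if all are up.
missing_containers() {
    running="$1"
    for name in rhel-cla-llamacpp-model rhel-cla-pgvector rhel-cla-rlsapi; do
        # -x matches the whole line, -F treats the name as a fixed string.
        printf '%s\n' "$running" | grep -qxF "$name" || printf '%s\n' "$name"
    done
}

# Usage on the host (requires podman):
#   missing_containers "$(podman ps --format '{{.Names}}')"
```

An empty result means all three containers are listed as running. Any name that is printed can be inspected with the matching `podman logs` command.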

```bash
$ podman ps
CONTAINER ID  IMAGE                                                   COMMAND               CREATED         STATUS         PORTS                                           NAMES
6d12206bc5c2  localhost/podman-pause:5.4.0-1744156800                                       11 minutes ago  Up 10 minutes  0.0.0.0:8000->8000/tcp, 0.0.0.0:8888->8888/tcp  564ba035768f-infra
ae823ab0e3e2  registry.redhat.io/rhel-cla/rag-database-rhel10:latest                        10 minutes ago  Up 10 minutes  0.0.0.0:8000->8000/tcp, 0.0.0.0:8888->8888/tcp  rhel-cla-pgvector
e9c3f8472260  quay.io/ramalama/ramalama:latest                        ramalama --store ...  9 minutes ago   Up 9 minutes   0.0.0.0:8000->8000/tcp, 0.0.0.0:8888->8888/tcp  rhel-cla-llamacpp-model
994fcf0454d5  registry.redhat.io/rhel-cla/rlsapi-rhel10:latest        ./start_uvicorn.s...  7 minutes ago   Up 7 minutes   0.0.0.0:8000->8000/tcp, 0.0.0.0:8888->8888/tcp  rhel-cla-rlsapi
```

- Install the command-line assistant client package.

```bash
$ dnf install command-line-assistant
```

- Edit the /etc/xdg/command-line-assistant/config.toml file.

```bash
$ vi /etc/xdg/command-line-assistant/config.toml
```

- Point endpoint at the local API service.
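For unattended setups the same change can be made without an editor. This is a minimal sketch, assuming the file already contains a line that starts with `endpoint =` and that the URL contains neither `|` nor `&`. The helper name `set_endpoint` is made up for illustration.

```shell
#!/bin/sh
# Replace the value of the existing "endpoint = ..." line in a config file.
set_endpoint() {
    file="$1"
    url="$2"
    tmp="$(mktemp)" || return 1
    # "|" is the sed delimiter, so the slashes in the URL need no escaping.
    sed "s|^\([[:space:]]*endpoint[[:space:]]*=\).*|\1 \"$url\"|" "$file" > "$tmp" &&
        cat "$tmp" > "$file"   # overwrite in place, keeping owner and mode
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Usage (as root):
#   set_endpoint /etc/xdg/command-line-assistant/config.toml "http://127.0.0.1:8000/"
```

Writing through a temporary file avoids relying on `sed -i`, whose behaviour differs between sed implementations.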

```toml
endpoint = "http://127.0.0.1:8000/"
```

- Restart the clad service.

```bash
$ systemctl restart clad
```

Using the Lightspeed command-line assistant
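Before sending the first question it can help to confirm that the local services answer. `rhel-cla start` itself polls the model server for up to 60 attempts before continuing. The sketch below imitates that retry loop with a hypothetical helper, `wait_until`, where the caller supplies the probe command.

```shell
#!/bin/sh
# Run a probe command until it succeeds or the attempt budget is used up.
# usage: wait_until <max_attempts> <delay_seconds> <command> [args...]
wait_until() {
    max="$1"
    delay="$2"
    shift 2
    attempt=1
    while [ "$attempt" -le "$max" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        echo "Attempt $attempt/$max - still waiting..." >&2
        attempt=$((attempt + 1))
        # Sleep only if another attempt follows.
        [ "$attempt" -le "$max" ] && sleep "$delay"
    done
    return 1
}

# Usage (assumption: any HTTP response from the API port counts as "ready"):
#   wait_until 12 5 curl -s -o /dev/null http://localhost:8000 && echo "API is up"
```

The probe is deliberately generic, so the same loop can wrap a check against the model server port 8888 or a `systemctl is-active clad` call.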

- Ask a question in English.

```bash
$ c "How do I list all active systemd services?"
⁺₊+ Asking RHEL Lightspeed
────────────────────────────────────────────────────────────────────────
You can list all active systemd services by using the following command in your terminal:
Snippet ────────────────
systemctl list-units --type=service --state=active
────────────────────────
This command will display all services that are currently active and running on your system.
────────────────────────────────────────────────────────────────────────
Always review AI-generated content prior to use.
```

- Ask a question in Chinese (the example asks how to set the hostname).

```bash
$ c "如何设置主机名?"
⁺₊+ Asking RHEL Lightspeed
This feature uses AI technology. Do not include any personal information or other sensitive information in your input. Interactions may be used to improve Red Hat's products or services.
────────────────────────────────────────────────────────────────────────
Hello! I'd be happy to help you with setting up your hostname on Red Hat Enterprise Linux. Please follow the instructions below:
1. Open your terminal.
2. Type the following command to edit the hostname file:
Snippet ────────────────
sudo vi /etc/hostname
────────────────────────
3. In the file, replace the existing hostname with your desired hostname. Save and exit the file.
4. Next, type the following command to edit the hosts file:
Snippet ────────────────
sudo vi /etc/hosts
────────────────────────
5. In the file, add a new line with your IP address followed by your desired hostname. For example:
Snippet ────────────────
192.168.1.10 myhostname
────────────────────────
6. Save and exit the file.
7. Finally, reboot your system for the changes to take effect.
I hope this helps! Let me know if you have any further questions.
────────────────────────────────────────────────────────────────────────
Always review AI-generated content prior to use.
```

References

https://www.redhat.com/en/blog/use-rhel-command-line-assistant-offline-new-developer-preview