#LoRA #ParameterEfficientFineTuning #RankSelection #WeightInitialization #DynamicAdjustment #DeepLearningOptimization

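Keywords: LoRA, low-rank adaptation, rank selection strategy, weight initialization, dynamic rank adjustment, parameter efficiency optimization, fine-tuning techniques, deep learning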

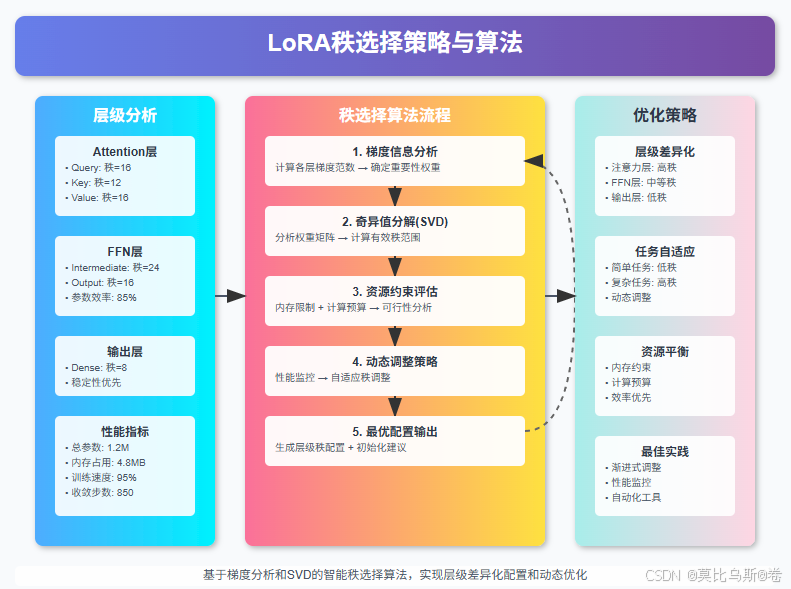

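Abstract: This article takes an in-depth look at advanced optimization techniques for LoRA (Low-Rank Adaptation). It focuses on the theory and practice of rank selection, explains when different initialization methods are appropriate, and introduces dynamic rank adjustment algorithms. Through theoretical derivations, code implementations, and experimental comparisons, it aims to help readers master the core skills of tuning LoRA and fine-tune models more efficiently. Topics include layer-wise rank allocation, adaptive initialization, and online rank optimization, with actionable optimization approaches for real projects.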

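Contents

- Introduction: Why Do Rank Selection and Initialization Matter So Much?
- Part 1: Theoretical Foundations of LoRA Rank Selection
  - 1.1 The Mathematical Relationship Between Rank and Expressiveness
  - 1.2 Why Different Layers Need Different Ranks
  - 1.3 Gradient-Based Dynamic Rank Selection
- Part 2: A Deep Dive into LoRA Initialization Strategies
  - 2.1 Traditional Initialization Methods and Their Problems
  - 2.2 Improved Initialization Strategies
  - 2.3 Adaptive Initialization Strategies
- Part 3: Implementing Dynamic Rank Adjustment
  - 3.1 Theory of Online Rank Optimization
  - 3.2 Rank Adjustment Based on the Loss Curve
  - 3.3 Gradient-Informed Rank Adjustment
- Part 4: Experimental Comparison and Performance Analysis
  - 4.1 Comparison of Rank Selection Strategies
  - 4.2 Convergence Analysis of Initialization Strategies
- Part 5: Production Best Practices
  - 5.1 An Automated Rank Selection System
  - 5.2 Monitoring and Tuning Tools
- Summary and Outlook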

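Introduction: Why Do Rank Selection and Initialization Matter So Much?

In the previous article, we covered the basic principles of LoRA and walked through a complete implementation. In practice, though, you may have run into questions like these:

- Why does the same LoRA configuration perform so differently on different layers?
- How do you determine the best rank for each layer?
- How much does the initialization strategy affect final performance?
- Can the rank be adjusted dynamically during training?

The answers lie in LoRA's more advanced optimization techniques. This article explores those technical details to help you get more out of LoRA fine-tuning.

If we think of LoRA as a finely crafted instrument, rank selection is the tuning and the initialization strategy is the playing technique. Only by mastering both can you perform a beautiful "fine-tuning movement".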

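Part 1: Theoretical Foundations of LoRA Rank Selection

1.1 The Mathematical Relationship Between Rank and Expressiveness

Before turning to practice, we need to understand how the rank relates to the model's expressiveness.

For a LoRA layer, the weight update is expressed as:

$$\Delta W = BA$$

where $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$, and $r$ is the rank.

Theoretical analysis:

- Upper bound on expressiveness: because $\Delta W$ is the product of a $d \times r$ matrix and an $r \times k$ matrix, $\operatorname{rank}(\Delta W) \le \min(r, d, k)$. LoRA can therefore only express updates of rank at most $r$; the $d \times k$ matrices of rank $r$ form a family with $r(d+k-r)$ degrees of freedom, far fewer than the $d \times k$ of a full update.
- Parameter efficiency: compared with the $d \times k$ parameters of full fine-tuning, LoRA needs only $r(d+k)$ parameters.
- Compression ratio: $\text{compression\_ratio} = \dfrac{d \times k}{r(d+k)}$. For example, with $d = k = 768$ and $r = 8$, this gives $589{,}824 / 12{,}288 = 48\times$.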
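As a quick sanity check of the bullets above, the sketch below builds random factors $B$ and $A$, confirms that the rank of $BA$ does not exceed $r$, and computes the parameter counts and compression ratio for a 768×768 layer. The random Gaussian factors and the helper name `lora_stats` are illustrative assumptions, not a trained adapter or an API from the article.

```python
import torch


def lora_stats(d: int, k: int, r: int, seed: int = 0) -> dict:
    """Rank of a random BA product plus LoRA vs. full parameter counts (illustrative helper)."""
    g = torch.Generator().manual_seed(seed)
    B = torch.randn(d, r, generator=g)  # B in R^{d x r}
    A = torch.randn(r, k, generator=g)  # A in R^{r x k}
    delta_w = B @ A                     # Delta W in R^{d x k}
    full_params = d * k
    lora_params = r * (d + k)
    return {
        "rank": int(torch.linalg.matrix_rank(delta_w)),
        "full_params": full_params,
        "lora_params": lora_params,
        "compression_ratio": full_params / lora_params,
    }


stats = lora_stats(768, 768, 8)
print(stats)  # rank 8, 589824 full vs. 12288 LoRA parameters, ratio 48.0
```

However large $d$ and $k$ are, the reported rank stays at $r$. This is the sense in which $r$ caps the expressiveness of the update.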

Let's use code to analyze the theoretical expressiveness of different rank values:

```python
import numpy as np
import matplotlib.pyplot as plt
from typing import List


def analyze_rank_capacity(d: int, k: int, ranks: List[int]) -> dict:
    """
    Analyze the expressiveness and parameter efficiency of different rank values

    Args:
        d: output dimension (number of rows of ΔW)
        k: input dimension (number of columns of ΔW)
        ranks: list of rank values to analyze

    Returns:
        A dict containing the analysis results
    """
    results = {
        'ranks': ranks,
        'param_counts': [],
        'compression_ratios': [],
        'theoretical_capacity': []
    }

    full_params = d * k

    for r in ranks:
        # Number of LoRA parameters: B is d×r, A is r×k
        lora_params = r * (d + k)
        # Compression ratio relative to full fine-tuning
        compression_ratio = full_params / lora_params if lora_params > 0 else 0
        # Theoretical expressiveness: maximum rank (number of nonzero singular values) of ΔW
        theoretical_capacity = min(r, d, k)

        results['param_counts'].append(lora_params)
        results['compression_ratios'].append(compression_ratio)
        results['theoretical_capacity'].append(theoretical_capacity)

    return results


# Example: a linear layer of BERT-base
d, k = 768, 768  # typical Transformer hidden size
ranks = [1, 2, 4, 8, 16, 32, 64, 128, 256]

analysis = analyze_rank_capacity(d, k, ranks)

# Visualize the results
fig, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(15, 5))

# Parameter count
ax1.plot(ranks, analysis['param_counts'], 'b-o', label='LoRA parameters')
ax1.axhline(y=d * k, color='r', linestyle='--', label='Full fine-tuning parameters')
ax1.set_xlabel('Rank (r)')
ax1.set_ylabel('Parameter count')
ax1.set_title('Parameter count vs. rank')
ax1.legend()
ax1.grid(True)

# Compression ratio
ax2.plot(ranks, analysis['compression_ratios'], 'g-o')
ax2.set_xlabel('Rank (r)')
ax2.set_ylabel('Compression ratio')
ax2.set_title('Compression ratio vs. rank')
ax2.grid(True)

# Theoretical expressiveness
ax3.plot(ranks, analysis['theoretical_capacity'], 'm-o')
ax3.set_xlabel('Rank (r)')
ax3.set_ylabel('Theoretical expressiveness')
ax3.set_title('Expressiveness vs. rank')
ax3.grid(True)

plt.tight_layout()
plt.show()

print("Rank selection analysis:")
for i, r in enumerate(ranks):
    print(f"rank={r:3d}: params={analysis['param_counts'][i]:6d}, "
          f"compression={analysis['compression_ratios'][i]:.1f}x, "
          f"capacity={analysis['theoretical_capacity'][i]:3d}")
```

1.2 Why Different Layers Need Different Ranks

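In the Transformer architecture, different layers have noticeably different rank requirements. The differences stem from:

- Functional specialization: shallow layers tend to capture local features, while deeper layers capture global semantics
- Gradient flow: the magnitude and direction of gradients differ from layer to layer
- Magnitude of weight updates: layers change by different amounts during fine-tuning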
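The analyzer below relies on two metrics: the effective rank, using a 1% singular-value threshold, and the rank that captures 90% of the spectral energy. Both are easier to picture on a concrete matrix. This sketch applies them to a synthetic ΔW whose singular values are hand-picked, so the matrix, its values, and the helper names `make_matrix` and `rank_metrics` are illustrative assumptions rather than measurements from a real model.

```python
import torch


def make_matrix(singular_values, n: int = 64, seed: int = 0) -> torch.Tensor:
    """Build an n×n matrix with prescribed singular values via random orthogonal factors."""
    g = torch.Generator().manual_seed(seed)
    U, _ = torch.linalg.qr(torch.randn(n, n, generator=g, dtype=torch.float64))
    V, _ = torch.linalg.qr(torch.randn(n, n, generator=g, dtype=torch.float64))
    s = torch.zeros(n, dtype=torch.float64)
    s[: len(singular_values)] = torch.tensor(singular_values, dtype=torch.float64)
    return U @ torch.diag(s) @ V.T


def rank_metrics(delta_w: torch.Tensor) -> tuple:
    S = torch.linalg.svdvals(delta_w)  # sorted in descending order
    # Effective rank: singular values above 1% of the largest one
    effective_rank = int((S > 0.01 * S[0]).sum())
    # 90%-energy rank: smallest rank whose squared singular values reach 90% of the total
    energy = S ** 2
    cum = torch.cumsum(energy, dim=0) / energy.sum()
    rank_90 = int((cum < 0.9).sum()) + 1
    return effective_rank, rank_90


delta_w = make_matrix([10.0, 5.0, 2.0, 1.0, 0.05])
print(rank_metrics(delta_w))  # (4, 2)
```

The two numbers disagree because they measure different things. Four singular values clear the 1% threshold, but the first two already hold about 96% of the energy (100 + 25 out of roughly 130). A layer whose ΔW looks like this could plausibly get by with a smaller rank than its effective rank suggests.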

Let's implement an analysis tool to study these differences:

```python
import numpy as np
import torch
from transformers import AutoModel
from typing import Dict


class LayerWiseRankAnalyzer:
    """
    Layer-wise rank requirement analyzer
    """

    def __init__(self, model_name: str = "bert-base-uncased"):
        self.model = AutoModel.from_pretrained(model_name)
        self.layer_stats = {}

    def analyze_weight_changes(self,
                               original_weights: Dict[str, torch.Tensor],
                               updated_weights: Dict[str, torch.Tensor]) -> Dict[str, dict]:
        """
        Analyze the singular value distribution of the weight changes
        """
        layer_ranks = {}

        for name in original_weights:
            # SVD only applies to 2-D weight matrices (skip biases, LayerNorm, etc.)
            if name in updated_weights and original_weights[name].dim() == 2:
                # Weight difference ΔW (cast to float for the SVD)
                delta_w = (updated_weights[name] - original_weights[name]).float()

                # Singular values, sorted in descending order
                S = torch.linalg.svdvals(delta_w)

                # Effective rank: singular values above 1% of the largest one
                threshold = 0.01 * S[0]
                effective_rank = torch.sum(S > threshold).item()

                # Rank needed to capture 90% of the energy (squared singular values)
                energy = S ** 2
                cumsum_ratio = torch.cumsum(energy, dim=0) / torch.sum(energy)
                rank_90 = torch.sum(cumsum_ratio < 0.9).item() + 1

                layer_ranks[name] = {
                    'effective_rank': effective_rank,
                    'rank_90': rank_90,
                    'singular_values': S.detach().cpu().numpy(),
                    'max_singular_value': S[0].item(),
                    'rank_ratio': effective_rank / min(delta_w.shape)
                }

        return layer_ranks

    def recommend_layer_ranks(self,
                              layer_analysis: Dict[str, Dict],
                              total_rank_budget: int = 64) -> Dict[str, int]:
        """
        Recommend a rank for each layer based on the analysis results
        """
        # Importance score for each layer
        importance_scores = {}
        for layer_name, stats in layer_analysis.items():
            # Combine the effective rank and the largest singular value
            importance = stats['effective_rank'] * np.log(1 + stats['max_singular_value'])
            importance_scores[layer_name] = importance

        # Normalize the importance scores
        total_importance = sum(importance_scores.values())
        normalized_scores = {k: v / total_importance for k, v in importance_scores.items()}

        # Allocate the rank budget
        recommended_ranks = {}
        remaining_budget = total_rank_budget

        # Most important layers first
        sorted_layers = sorted(normalized_scores.items(), key=lambda x: x[1], reverse=True)

        for layer_name, score in sorted_layers:
            if remaining_budget <= 0:
                # Minimum rank (this can push the total slightly over the budget)
                recommended_ranks[layer_name] = 1
            else:
                # Allocate rank in proportion to importance
                allocated_rank = max(1, int(score * total_rank_budget))
                allocated_rank = min(allocated_rank, remaining_budget)
                recommended_ranks[layer_name] = allocated_rank
                remaining_budget -= allocated_rank

        return recommended_ranks


# Usage example
analyzer = LayerWiseRankAnalyzer()

# Weights before and after fine-tuning are needed here (in practice, take them from a real fine-tuning run)
print("Layer-wise rank analyzer initialized")
print("How to use:")
print("1. Save the model weights before fine-tuning")
print("2. Run fine-tuning")
print("3. Analyze the weight changes with analyze_weight_changes")
print("4. Get recommended ranks with recommend_layer_ranks")
```

1.3 Gradient-Based Dynamic Rank Selection

A fixed rank may not keep up with how training evolves. Instead, we can adjust the rank dynamically based on gradient information:

```python
import math
import numpy as np
import torch
import torch.nn as nn


class GradientBasedRankSelector:
    """
    Gradient-based dynamic rank selector
    """

    def __init__(self,
                 initial_rank: int = 8,
                 max_rank: int = 64,
                 adjustment_frequency: int = 100,
                 sensitivity_threshold: float = 0.1):
        self.current_rank = initial_rank
        self.max_rank = max_rank
        self.adjustment_frequency = adjustment_frequency
        self.sensitivity_threshold = sensitivity_threshold
        self.gradient_history = []
        self.step_count = 0

    def analyze_gradient_rank(self, gradients: torch.Tensor) -> int:
        """
        Determine the required rank from the singular values of the gradient
        """
        S = torch.linalg.svdvals(gradients.float())

        # Cumulative energy ratio
        energy = S ** 2
        total_energy = torch.sum(energy)
        if total_energy == 0:
            return 1
        cumulative_energy = torch.cumsum(energy, dim=0) / total_energy

        # Rank needed to reach 95% of the energy
        required_rank = torch.sum(cumulative_energy < 0.95).item() + 1
        return min(required_rank, self.max_rank)

    def update_rank(self, lora_layer, gradients: torch.Tensor) -> bool:
        """
        Update the rank of a LoRA layer

        Returns:
            bool: whether the rank was changed
        """
        self.step_count += 1

        # Record gradient statistics
        grad_norm = torch.norm(gradients).item()
        self.gradient_history.append(grad_norm)

        # Periodically check whether the rank should change
        if self.step_count % self.adjustment_frequency == 0:
            suggested_rank = self.analyze_gradient_rank(gradients)

            # Trend of the gradient norm over the last two windows
            f = self.adjustment_frequency
            if len(self.gradient_history) >= 2 * f:
                recent_avg = np.mean(self.gradient_history[-f:])
                previous_avg = np.mean(self.gradient_history[-2 * f:-f])
                change_ratio = abs(recent_avg - previous_avg) / (previous_avg + 1e-12)

                # Large gradient changes: increase the rank; stable gradients: decrease it
                if change_ratio > self.sensitivity_threshold:
                    target_rank = min(suggested_rank + 2, self.max_rank)
                else:
                    target_rank = max(suggested_rank - 1, 1)
            else:
                target_rank = suggested_rank

            # Apply the rank change
            if target_rank != self.current_rank:
                self._resize_lora_matrices(lora_layer, target_rank)
                self.current_rank = target_rank
                return True

        return False

    def _resize_lora_matrices(self, lora_layer, new_rank: int):
        """
        Resize the LoRA matrices (assumes lora_layer.lora_A / lora_B are nn.Linear modules)
        """
        old_rank = self.current_rank

        # Current weights: A is (r, in_features), B is (out_features, r)
        old_A = lora_layer.lora_A.weight.data
        old_B = lora_layer.lora_B.weight.data

        # New weight matrices
        new_A = torch.zeros(new_rank, old_A.shape[1], device=old_A.device, dtype=old_A.dtype)
        new_B = torch.zeros(old_B.shape[0], new_rank, device=old_B.device, dtype=old_B.dtype)

        if new_rank > old_rank:
            # Grow: keep the existing weights and initialize only the new part
            new_A[:old_rank] = old_A
            new_B[:, :old_rank] = old_B
            nn.init.kaiming_uniform_(new_A[old_rank:], a=math.sqrt(5))
            # New columns of B stay zero, so BA is unchanged by the resize
            nn.init.zeros_(new_B[:, old_rank:])
        else:
            # Shrink: keep the most important directions via a truncated SVD of BA
            combined = old_B @ old_A
            U, S, Vh = torch.linalg.svd(combined.float(), full_matrices=False)
            sqrt_S = torch.diag(torch.sqrt(S[:new_rank]))
            # torch.linalg.svd returns Vh = V^T, so its rows are the right singular vectors
            new_B[:] = U[:, :new_rank] @ sqrt_S
            new_A[:] = sqrt_S @ Vh[:new_rank, :]

        # Replace the layer weights
        lora_layer.lora_A.weight.data = new_A
        lora_layer.lora_B.weight.data = new_B

        # Note: optimizer state (e.g. Adam moments) still has the old shapes and must be
        # rebuilt or reset after a resize; nn.Linear's in/out_features attributes are also stale.
        print(f"LoRA rank changed from {old_rank} to {new_rank}")


# Usage example
rank_selector = GradientBasedRankSelector(
    initial_rank=8,
    max_rank=32,
    adjustment_frequency=50
)

print("Dynamic rank selector initialized")
print(f"Initial rank: {rank_selector.current_rank}")
print(f"Max rank: {rank_selector.max_rank}")
print(f"Adjustment frequency: checked every {rank_selector.adjustment_frequency} steps")
```

Part 2: A Deep Dive into LoRA Initialization Strategies

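2.1 Traditional Initialization Methods and Their Problems

The original LoRA paper recommends the following initialization:

- Matrix A: random Gaussian initialization
- Matrix B: zero initialization

(Widely used implementations such as Microsoft's loralib initialize A with Kaiming-uniform instead of a plain Gaussian; the "original" baseline in the code below follows that convention.)

The advantage of this scheme is that ΔW = BA = 0 at the start, so the pretrained model's initial behavior is unchanged. In practice, however, this initialization has some drawbacks:

- Slow start: for $h = W_0 x + BAx$, the gradient with respect to A is proportional to $B^\top$, so while B = 0 only B receives a gradient, which can make early training slow
- Symmetry: starting from an exactly zero update may steer optimization toward a poor local optimum
- Layer imbalance: different layers may need different initialization strategies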
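The slow-start claim can be checked directly. The toy sketch below uses random shapes, a mean-squared-error loss, made-up data, and a hypothetical helper `first_step_grads`, all assumptions for illustration. It applies the standard B = 0 initialization and inspects the first-step gradients.

```python
import torch


def first_step_grads(d: int = 16, k: int = 12, r: int = 4, seed: int = 0):
    """Gradients of a toy loss w.r.t. A and B under the B = 0 initialization."""
    torch.manual_seed(seed)
    W0 = torch.randn(d, k)                     # frozen pretrained weight
    A = torch.nn.Parameter(torch.randn(r, k))  # A: random Gaussian
    B = torch.nn.Parameter(torch.zeros(d, r))  # B: zeros, so BA = 0
    x = torch.randn(8, k)                      # toy batch of 8 inputs
    target = torch.randn(8, d)

    h = x @ W0.T + x @ A.T @ B.T               # h = W0 x + B A x (row-vector form)
    loss = ((h - target) ** 2).mean()
    loss.backward()
    return A.grad, B.grad


grad_A, grad_B = first_step_grads()
print(grad_A.abs().max().item())      # 0.0: A gets no gradient while B = 0
print(grad_B.abs().max().item() > 0)  # True: only B is updated at the first step
```

After the first optimizer step B is no longer zero, so A starts receiving gradients from the second step on. The effect is a delayed start, not a permanent stall.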

2.2 Improved Initialization Strategies

Let's implement several improved initialization methods:

```python
import math
import torch
import torch.nn as nn
from typing import Literal, Optional


class AdvancedLoRAInitializer:
    """
    Advanced LoRA initializers.
    Shapes: lora_A is (r, in_features), lora_B is (out_features, r).
    """

    @staticmethod
    def xavier_lora_init(lora_A: nn.Parameter,
                         lora_B: nn.Parameter,
                         scaling: float = 1.0):
        """
        Xavier-style variant: keeps the forward-pass variance stable
        """
        fan_in = lora_A.shape[1]
        fan_out = lora_B.shape[0]

        # Xavier standard deviation
        std = math.sqrt(2.0 / (fan_in + fan_out)) * scaling

        # A: normal initialization
        nn.init.normal_(lora_A, mean=0, std=std)
        # B: small random values instead of zeros
        nn.init.normal_(lora_B, mean=0, std=std * 0.1)

    @staticmethod
    def kaiming_lora_init(lora_A: nn.Parameter,
                          lora_B: nn.Parameter,
                          mode: Literal['fan_in', 'fan_out'] = 'fan_in',
                          nonlinearity: str = 'relu'):
        """
        Kaiming-style variant: intended for ReLU-like activations
        """
        # A: Kaiming initialization
        nn.init.kaiming_normal_(lora_A, mode=mode, nonlinearity=nonlinearity)
        # B: Kaiming initialization, scaled down for initial stability
        nn.init.kaiming_normal_(lora_B, mode=mode, nonlinearity=nonlinearity)
        with torch.no_grad():
            lora_B.mul_(0.1)

    @staticmethod
    def svd_based_init(lora_A: nn.Parameter,
                       lora_B: nn.Parameter,
                       target_matrix: Optional[torch.Tensor] = None,
                       noise_scale: float = 0.1):
        """
        SVD-based initialization: if a target matrix is given, start from its low-rank approximation
        """
        if target_matrix is not None:
            # torch.linalg.svd returns Vh = V^T, so the rows of Vh are the right singular vectors
            U, S, Vh = torch.linalg.svd(target_matrix.float(), full_matrices=False)
            rank = min(lora_A.shape[0], S.shape[0])

            sqrt_S = torch.diag(torch.sqrt(S[:rank]))
            with torch.no_grad():
                lora_A.zero_()
                lora_B.zero_()
                lora_A[:rank] = sqrt_S @ Vh[:rank, :]
                lora_B[:, :rank] = U[:, :rank] @ sqrt_S
                # Add a little noise
                lora_A.add_(torch.randn_like(lora_A) * noise_scale)
                lora_B.add_(torch.randn_like(lora_B) * noise_scale)
        else:
            # Fall back to the Xavier-style initialization
            AdvancedLoRAInitializer.xavier_lora_init(lora_A, lora_B)

    @staticmethod
    def orthogonal_init(lora_A: nn.Parameter,
                        lora_B: nn.Parameter,
                        gain: float = 1.0):
        """
        Orthogonal initialization: keeps gradient flow stable
        """
        # A: orthogonal initialization
        nn.init.orthogonal_(lora_A, gain=gain)

        # B: orthogonal initialization of the transposed shape, with a smaller gain
        temp_B = torch.empty(lora_B.shape[1], lora_B.shape[0],
                             dtype=lora_B.dtype, device=lora_B.device)
        nn.init.orthogonal_(temp_B, gain=gain * 0.1)
        with torch.no_grad():
            lora_B.copy_(temp_B.t())

    @staticmethod
    def layer_adaptive_init(lora_A: nn.Parameter,
                            lora_B: nn.Parameter,
                            layer_depth: int,
                            total_layers: int,
                            base_std: float = 0.02):
        """
        Layer-adaptive initialization: scale the initialization by layer depth
        """
        # Relative depth of the layer
        depth_ratio = layer_depth / total_layers

        # Deeper layers use smaller initial values
        layer_std = base_std * (1.0 - 0.5 * depth_ratio)

        # A matrix
        nn.init.normal_(lora_A, mean=0, std=layer_std)

        # B matrix: a more conservative scale
        b_std = layer_std * (0.1 + 0.05 * depth_ratio)
        nn.init.normal_(lora_B, mean=0, std=b_std)


# Comparison of initialization methods
def compare_initialization_methods():
    """
    Compare the effect of different initialization methods
    """
    # Simulation parameters
    d_model = 768
    rank = 16

    methods = {
        'original': lambda A, B: (nn.init.kaiming_uniform_(A, a=math.sqrt(5)),
                                  nn.init.zeros_(B)),
        'xavier_lora': AdvancedLoRAInitializer.xavier_lora_init,
        'kaiming_lora': AdvancedLoRAInitializer.kaiming_lora_init,
        'orthogonal': AdvancedLoRAInitializer.orthogonal_init,
        'layer_adaptive': lambda A, B: AdvancedLoRAInitializer.layer_adaptive_init(
            A, B, layer_depth=6, total_layers=12)
    }

    results = {}

    for method_name, init_func in methods.items():
        # Create the LoRA parameters and apply the initialization
        lora_A = nn.Parameter(torch.empty(rank, d_model))
        lora_B = nn.Parameter(torch.empty(d_model, rank))
        init_func(lora_A, lora_B)

        with torch.no_grad():
            # Initial weight update
            delta_W = lora_B @ lora_A

            # Statistics
            results[method_name] = {
                'delta_W_norm': torch.norm(delta_W).item(),  # Frobenius norm
                'delta_W_std': torch.std(delta_W).item(),
                'A_norm': torch.norm(lora_A).item(),
                'B_norm': torch.norm(lora_B).item(),
                # Spectral norm = largest singular value
                # (torch.norm(x, p=2) on a matrix would return the Frobenius norm instead)
                'spectral_norm': torch.linalg.matrix_norm(delta_W, ord=2).item()
            }

    # Print the comparison
    print("\nComparison of initialization methods:")
    print(f"{'Method':<15} {'ΔW norm':<10} {'ΔW std':<10} {'A norm':<8} {'B norm':<8} {'Spectral':<8}")
    print("-" * 70)

    for method, stats in results.items():
        print(f"{method:<15} {stats['delta_W_norm']:<10.4f} {stats['delta_W_std']:<10.4f} "
              f"{stats['A_norm']:<8.4f} {stats['B_norm']:<8.4f} {stats['spectral_norm']:<8.4f}")

    return results


# Run the comparison
comparison_results = compare_initialization_methods()
```

2.3 Adaptive Initialization Strategies

Using statistics of the pretrained weights, we can design an adaptive initialization strategy:

```python
import torch
import torch.nn as nn

# Assumes AdvancedLoRAInitializer from Section 2.2 is already defined (used as the fallback)


class AdaptiveLoRAInitializer:
    """
    Adaptive LoRA initializer based on statistics of the pretrained weights
    """

    def __init__(self, pretrained_model):
        self.model = pretrained_model
        self.weight_stats = self._analyze_pretrained_weights()

    def _analyze_pretrained_weights(self) -> dict:
        """
        Analyze the statistical properties of the pretrained weights
        """
        stats = {}

        for name, param in self.model.named_parameters():
            if 'weight' in name and param.dim() == 2:
                weight_data = param.detach().float()

                # Singular values (this runs on every 2-D weight, including large
                # embedding matrices, so it can take a while)
                S = torch.linalg.svdvals(weight_data)

                stats[name] = {
                    'mean': torch.mean(weight_data).item(),
                    'std': torch.std(weight_data).item(),
                    'frobenius_norm': torch.norm(weight_data, 'fro').item(),
                    'spectral_norm': S[0].item(),
                    'effective_rank': self._compute_effective_rank(S),
                    'singular_values': S.cpu().numpy(),
                    'shape': weight_data.shape
                }

        return stats

    def _compute_effective_rank(self, singular_values: torch.Tensor,
                                threshold: float = 0.01) -> int:
        """
        Compute the effective rank
        """
        normalized_sv = singular_values / singular_values[0]
        return torch.sum(normalized_sv > threshold).item()

    def adaptive_init(self,
                      lora_A: nn.Parameter,
                      lora_B: nn.Parameter,
                      target_layer_name: str,
                      adaptation_strength: float = 1.0):
        """
        Adaptive initialization based on the statistics of the target layer

        Args:
            lora_A: the LoRA A matrix parameter
            lora_B: the LoRA B matrix parameter
            target_layer_name: full parameter name of the target weight, as returned by named_parameters()
            adaptation_strength: adaptation strength coefficient
        """
        if target_layer_name not in self.weight_stats:
            # Fall back to the default initialization
            AdvancedLoRAInitializer.xavier_lora_init(lora_A, lora_B)
            return

        stats = self.weight_stats[target_layer_name]

        # Scale the initialization by the target layer's spectral norm
        scale_factor = stats['spectral_norm'] * adaptation_strength

        # Pick the strategy based on the effective rank
        effective_rank = stats['effective_rank']
        rank = lora_A.shape[0]

        if rank <= effective_rank // 2:
            # Low-rank case: a more aggressive initialization
            std_A = stats['std'] * scale_factor * 0.5
            std_B = stats['std'] * scale_factor * 0.1
        else:
            # High-rank case: a more conservative initialization
            std_A = stats['std'] * scale_factor * 0.2
            std_B = stats['std'] * scale_factor * 0.05

        # Apply the initialization
        nn.init.normal_(lora_A, mean=0, std=std_A)
        nn.init.normal_(lora_B, mean=0, std=std_B)

        print(f"Adaptive initialization for {target_layer_name}:")
        print(f"  A std: {std_A:.6f}")
        print(f"  B std: {std_B:.6f}")
        print(f"  Based on spectral norm: {stats['spectral_norm']:.4f}")
        print(f"  Effective rank: {effective_rank}")


# Usage example (keys are full parameter names, e.g. for a BertForSequenceClassification model)
# adaptive_initializer = AdaptiveLoRAInitializer(pretrained_model)
# adaptive_initializer.adaptive_init(lora_A, lora_B, "bert.encoder.layer.0.attention.self.query.weight")
```

Part 3: Implementing Dynamic Rank Adjustment

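3.1 Theory of Online Rank Optimization

The core idea of dynamic rank adjustment is to let the LoRA rank change automatically during training, according to the model's learning state. The approach draws on several perspectives:

- Information-theoretic view: the rank bounds the information capacity of the weight update
- Optimization view: different training phases may benefit from parameter spaces of different sizes
- Regularization effect: dynamic adjustment can act as a form of adaptive regularization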
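Shrinking the rank, as `_resize_lora_matrices` in Section 1.3 does, relies on a standard fact: the Eckart–Young theorem. Truncating the SVD of $\Delta W = BA$ to its top $r'$ singular values gives the best rank-$r'$ approximation, and the Frobenius error equals the square root of the sum of the discarded squared singular values. The sketch below checks this numerically on a random rank-8 product. The shapes, the random factors, and the helper name `truncate_lora` are illustrative assumptions.

```python
import torch


def truncate_lora(B: torch.Tensor, A: torch.Tensor, new_rank: int):
    """Re-factor BA into rank-new_rank factors using a truncated SVD."""
    U, S, Vh = torch.linalg.svd(B @ A, full_matrices=False)
    sqrt_S = torch.diag(torch.sqrt(S[:new_rank]))
    new_B = U[:, :new_rank] @ sqrt_S   # (d, new_rank)
    new_A = sqrt_S @ Vh[:new_rank, :]  # (new_rank, k)
    return new_B, new_A, S


g = torch.Generator().manual_seed(0)
B = torch.randn(64, 8, generator=g, dtype=torch.float64)
A = torch.randn(8, 48, generator=g, dtype=torch.float64)

new_B, new_A, S = truncate_lora(B, A, new_rank=4)
error = torch.linalg.matrix_norm(B @ A - new_B @ new_A)  # Frobenius norm by default
expected = torch.sqrt(torch.sum(S[4:] ** 2))
print(new_B.shape, new_A.shape)         # torch.Size([64, 4]) torch.Size([4, 48])
print(torch.isclose(error, expected))  # tensor(True)
```

Splitting $\sqrt{S}$ evenly between the two factors is one design choice among several; any split gives the same product.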

3.2 Rank Adjustment Based on the Loss Curve

```python
import numpy as np
from collections import deque
from typing import List, Tuple


class LossBasedRankAdjuster:
    """
    Rank adjuster driven by the loss curve
    """

    def __init__(self,
                 initial_rank: int = 8,
                 min_rank: int = 2,
                 max_rank: int = 64,
                 patience: int = 10,
                 improvement_threshold: float = 0.01,
                 history_length: int = 50):
        self.current_rank = initial_rank
        self.min_rank = min_rank
        self.max_rank = max_rank
        self.patience = patience
        self.improvement_threshold = improvement_threshold

        # History
        self.loss_history = deque(maxlen=history_length)
        self.rank_history = deque(maxlen=history_length)
        self.no_improvement_count = 0
        self.best_loss = float('inf')

        # Adjustment parameters
        self.rank_increase_factor = 1.5
        self.rank_decrease_factor = 0.8

    def should_adjust_rank(self, current_loss: float) -> Tuple[bool, str, int]:
        """
        Decide whether to adjust the rank; returns (adjust?, reason, new rank)
        """
        self.loss_history.append(current_loss)
        self.rank_history.append(self.current_rank)

        # Check for improvement
        if current_loss < self.best_loss - self.improvement_threshold:
            self.best_loss = current_loss
            self.no_improvement_count = 0
            return False, "loss_improved", self.current_rank
        else:
            self.no_improvement_count += 1

            # After a long stretch without improvement, consider adjusting the rank
            if self.no_improvement_count >= self.patience:
                return self._decide_rank_adjustment()

        return False, "no_adjustment_needed", self.current_rank

    def _decide_rank_adjustment(self) -> Tuple[bool, str, int]:
        """
        Decide on the concrete rank adjustment
        """
        if len(self.loss_history) < self.patience:
            return False, "insufficient_history", self.current_rank

        # Analyze the recent loss trend and variance
        recent_losses = list(self.loss_history)[-self.patience:]
        loss_trend = self._analyze_loss_trend(recent_losses)
        loss_variance = np.var(recent_losses)

        if loss_trend == "plateau" and loss_variance < 0.001:
            # Plateau with low variance: the rank may need to grow
            if self.current_rank < self.max_rank:
                new_rank = min(int(self.current_rank * self.rank_increase_factor),
                               self.max_rank)
                self.no_improvement_count = 0  # reset the counter
                return True, "plateau_increase_rank", new_rank

        elif loss_trend == "oscillating" and loss_variance > 0.01:
            # Oscillation with high variance: the rank may need to shrink
            if self.current_rank > self.min_rank:
                new_rank = max(int(self.current_rank * self.rank_decrease_factor),
                               self.min_rank)
                self.no_improvement_count = 0
                return True, "oscillating_decrease_rank", new_rank

        return False, "no_clear_signal", self.current_rank

    def _analyze_loss_trend(self, losses: List[float]) -> str:
        """
        Classify the loss trend
        """
        if len(losses) < 3:
            return "insufficient_data"

        # First-order differences
        diffs = np.diff(losses)

        # Trend indicators
        positive_diffs = np.sum(diffs > 0)
        negative_diffs = np.sum(diffs < 0)

        if positive_diffs > len(diffs) * 0.7:
            return "increasing"
        elif negative_diffs > len(diffs) * 0.7:
            return "decreasing"
        elif np.std(diffs) > np.mean(np.abs(diffs)):
            return "oscillating"
        else:
            return "plateau"

    def update_rank(self, new_rank: int):
        """
        Record the new rank (resizing the actual LoRA matrices, e.g. with
        _resize_lora_matrices from Section 1.3, is left to the caller)
        """
        old_rank = self.current_rank
        self.current_rank = new_rank
        print(f"Rank change: {old_rank} -> {new_rank}")


# Usage example
rank_adjuster = LossBasedRankAdjuster(
    initial_rank=8,
    min_rank=2,
    max_rank=32,
    patience=5
)

# Simulated training run
losses = [2.5, 2.3, 2.1, 2.0, 1.95, 1.94, 1.94, 1.93, 1.93, 1.93,  # improves, then plateaus
          1.94, 1.92, 1.95, 1.91, 1.96, 1.90, 1.97, 1.89]          # starts oscillating

print("Rank adjustment during simulated training:")
for step, loss in enumerate(losses):
    should_adjust, reason, new_rank = rank_adjuster.should_adjust_rank(loss)

    if should_adjust:
        print(f"Step {step}: Loss={loss:.3f}, reason: {reason}, new rank: {new_rank}")
        rank_adjuster.update_rank(new_rank)
    else:
        print(f"Step {step}: Loss={loss:.3f}, current rank: {rank_adjuster.current_rank}")
```

3.3 Gradient-Informed Rank Adjustment

```python
import numpy as np
import torch
import torch.nn as nn
from collections import deque
from typing import Optional


class GradientInformedRankAdjuster:
    """
    Gradient-informed rank adjuster.
    It tracks a single current_rank, so it is meant to monitor one LoRA layer
    (the training loop below feeds it every LoRA module, which mixes their statistics).
    """

    def __init__(self,
                 initial_rank: int = 8,
                 adjustment_interval: int = 100,
                 rank_change_threshold: float = 0.1):
        self.current_rank = initial_rank
        self.adjustment_interval = adjustment_interval
        self.rank_change_threshold = rank_change_threshold

        # Gradient statistics
        self.gradient_norms_A = deque(maxlen=adjustment_interval)
        self.gradient_norms_B = deque(maxlen=adjustment_interval)
        self.step_count = 0

    def collect_gradient_info(self, grad_A: torch.Tensor, grad_B: torch.Tensor):
        """
        Collect gradient information
        """
        self.gradient_norms_A.append(torch.norm(grad_A).item())
        self.gradient_norms_B.append(torch.norm(grad_B).item())
        self.step_count += 1

    def analyze_gradient_subspace(self, grad_A: torch.Tensor, grad_B: torch.Tensor) -> dict:
        """
        Analyze properties of the gradient subspace
        """
        # Effective dimension of the combined gradient.
        # Caveat: grad_B @ grad_A has rank at most r (the current rank), so the
        # effective rank computed here can never exceed current_rank.
        combined_grad = grad_B @ grad_A
        S = torch.linalg.svdvals(combined_grad.float())

        # Effective rank from the singular value energy distribution
        energy = S ** 2
        cumulative_energy = torch.cumsum(energy, dim=0) / torch.sum(energy)
        effective_rank_90 = torch.sum(cumulative_energy < 0.9).item() + 1   # 90% of the energy
        effective_rank_95 = torch.sum(cumulative_energy < 0.95).item() + 1  # 95% of the energy

        # Gradient consistency (measured via the spread of recent gradient norms, not their directions)
        if len(self.gradient_norms_A) > 1:
            norm_A_std = np.std(list(self.gradient_norms_A)[-10:])
            norm_B_std = np.std(list(self.gradient_norms_B)[-10:])
            consistency = 1.0 / (1.0 + norm_A_std + norm_B_std)
        else:
            consistency = 1.0

        return {
            'effective_rank_90': effective_rank_90,
            'effective_rank_95': effective_rank_95,
            'gradient_consistency': consistency,
            'singular_values': S.detach().cpu().numpy(),
            'total_gradient_norm': torch.norm(combined_grad).item()
        }

    def suggest_rank_adjustment(self, grad_A: torch.Tensor,
                                grad_B: torch.Tensor) -> Optional[int]:
        """
        Suggest a rank adjustment based on the gradient analysis
        """
        if self.step_count % self.adjustment_interval != 0:
            return None

        analysis = self.analyze_gradient_subspace(grad_A, grad_B)

        # Decide based on the effective rank and the consistency
        effective_rank = analysis['effective_rank_95']
        consistency = analysis['gradient_consistency']

        if effective_rank > self.current_rank * 1.2 and consistency > 0.8:
            # Gradient needs more dimensions and training is stable
            # (unreachable with the grad_B @ grad_A proxy, see the caveat above)
            suggested_rank = min(effective_rank, self.current_rank + 4)
        elif effective_rank < self.current_rank * 0.6 and consistency > 0.9:
            # Redundant dimensions and very stable training
            suggested_rank = max(effective_rank, self.current_rank - 2)
        else:
            suggested_rank = self.current_rank

        # Only report changes that are large enough
        change_ratio = abs(suggested_rank - self.current_rank) / self.current_rank
        if change_ratio > self.rank_change_threshold:
            return suggested_rank

        return None

    def adaptive_rank_training_loop(self, model, dataloader, optimizer, num_epochs: int):
        """
        Example training loop with integrated rank monitoring
        (assumes LoRA modules expose lora_A / lora_B as nn.Parameter attributes)
        """
        model.train()

        for epoch in range(num_epochs):
            for batch_idx, (data, target) in enumerate(dataloader):
                optimizer.zero_grad()

                # Forward pass
                output = model(data)
                loss = nn.functional.cross_entropy(output, target)

                # Backward pass
                loss.backward()

                # Collect gradient information from the LoRA layers
                for name, module in model.named_modules():
                    if hasattr(module, 'lora_A') and hasattr(module, 'lora_B'):
                        if module.lora_A.grad is not None and module.lora_B.grad is not None:
                            self.collect_gradient_info(module.lora_A.grad, module.lora_B.grad)

                            # Check whether the rank should change
                            suggested_rank = self.suggest_rank_adjustment(
                                module.lora_A.grad, module.lora_B.grad)

                            if suggested_rank is not None:
                                print(f"Epoch {epoch}, Batch {batch_idx}: "
                                      f"suggest changing the rank of {name} "
                                      f"from {self.current_rank} to {suggested_rank}")
                                # The actual resizing logic would go here
                                self.current_rank = suggested_rank

                optimizer.step()

                if batch_idx % 100 == 0:
                    print(f'Epoch: {epoch}, Batch: {batch_idx}, Loss: {loss.item():.6f}')


# Usage example
gradient_adjuster = GradientInformedRankAdjuster(
    initial_rank=8,
    adjustment_interval=50,
    rank_change_threshold=0.15
)

print("Gradient-informed rank adjuster initialized")
print(f"Initial rank: {gradient_adjuster.current_rank}")
print(f"Adjustment interval: {gradient_adjuster.adjustment_interval} steps")
print(f"Adjustment threshold: {gradient_adjuster.rank_change_threshold}")
```

Part 4: Experimental Comparison and Performance Analysis

4.1 Comparison of Rank Selection Strategies

Let's design an experiment that compares different rank selection strategies. Note that the training below is simulated. The loss curves are synthetic: an exponential decay plus noise, scaled by a hand-chosen rank-dependent factor. They do not depend on the initialization method, and the "dynamic rank" strategy only sets a starting rank. The printed numbers therefore illustrate the experiment and reporting pipeline rather than real fine-tuning results. Replace `_simulate_training` with a real training loop to get meaningful comparisons.

```python
import math
import time
from dataclasses import dataclass
from typing import Dict, List

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

# Assumes AdvancedLoRAInitializer from Section 2.2 is already defined


@dataclass
class ExperimentConfig:
    """Experiment configuration"""
    model_name: str = "bert-base-uncased"
    max_epochs: int = 10
    batch_size: int = 16
    learning_rate: float = 1e-4
    warmup_steps: int = 100
    eval_steps: int = 50


class RankSelectionExperiment:
    """
    Comparison experiment for rank selection strategies
    """

    def __init__(self, config: ExperimentConfig):
        self.config = config
        self.results = {}

    def run_experiment(self,
                       strategies: Dict[str, dict],
                       dataset_name: str = "glue/sst2") -> Dict[str, dict]:
        """
        Run the comparison experiment

        Args:
            strategies: dict of the form {"strategy name": {"rank": int, "init_method": str}}
            dataset_name: dataset name (only printed; the training here is simulated)
        """
        print("Starting the rank selection comparison...")
        print(f"Dataset: {dataset_name}")
        print(f"Number of strategies: {len(strategies)}")

        for strategy_name, strategy_config in strategies.items():
            print(f"\nTesting strategy: {strategy_name}")
            print(f"Config: {strategy_config}")

            # Run a single strategy
            result = self._run_single_strategy(strategy_name, strategy_config, dataset_name)
            self.results[strategy_name] = result

            print(f"Strategy {strategy_name} finished")
            print(f"Final validation accuracy: {result['final_accuracy']:.4f}")
            print(f"Training time: {result['training_time']:.2f}s")
            print(f"Parameter count: {result['parameter_count']}")

        return self.results

    def _run_single_strategy(self, strategy_name: str, strategy_config: dict,
                             dataset_name: str) -> dict:
        """
        Run the experiment for a single strategy
        """
        start_time = time.time()

        # Build the model (a simplified stand-in)
        model = self._create_lora_model(strategy_config)

        # Simulated training process
        training_history = self._simulate_training(model, strategy_config, num_steps=1000)

        end_time = time.time()

        # Trainable parameter count
        param_count = sum(p.numel() for p in model.parameters() if p.requires_grad)

        return {
            'strategy_name': strategy_name,
            'config': strategy_config,
            'training_history': training_history,
            'final_accuracy': training_history['accuracies'][-1],
            'best_accuracy': max(training_history['accuracies']),
            'training_time': end_time - start_time,
            'parameter_count': param_count,
            'convergence_step': self._find_convergence_step(training_history['losses'])
        }

    def _create_lora_model(self, strategy_config: dict):
        """
        Build a LoRA model (simplified version for demonstration)
        """
        class SimpleLoRAModel(nn.Module):
            def __init__(self, rank, d_model=768):
                super().__init__()
                self.rank = rank
                self.d_model = d_model

                # Stand-in for a Transformer layer
                self.base_layer = nn.Linear(d_model, d_model, bias=False)

                # LoRA factors
                self.lora_A = nn.Parameter(torch.empty(rank, d_model))
                self.lora_B = nn.Parameter(torch.empty(d_model, rank))

                # Apply the initialization strategy
                self._initialize_weights(strategy_config.get('init_method', 'original'))

                # Freeze the base layer
                self.base_layer.weight.requires_grad = False

            def _initialize_weights(self, init_method: str):
                if init_method == 'xavier':
                    AdvancedLoRAInitializer.xavier_lora_init(self.lora_A, self.lora_B)
                elif init_method == 'kaiming':
                    AdvancedLoRAInitializer.kaiming_lora_init(self.lora_A, self.lora_B)
                elif init_method == 'orthogonal':
                    AdvancedLoRAInitializer.orthogonal_init(self.lora_A, self.lora_B)
                else:  # original
                    nn.init.kaiming_uniform_(self.lora_A, a=math.sqrt(5))
                    nn.init.zeros_(self.lora_B)

            def forward(self, x):
                # Base output plus the LoRA branch x A^T B^T
                base_out = self.base_layer(x)
                lora_out = x @ self.lora_A.t() @ self.lora_B.t()
                return base_out + lora_out

        return SimpleLoRAModel(strategy_config['rank'])

    def _simulate_training(self, model, strategy_config: dict, num_steps: int = 1000) -> dict:
        """
        Simulated training: synthetic curves only, the model is never actually trained
        """
        losses = []
        accuracies = []

        for step in range(num_steps):
            # Simulated loss decrease with some noise
            base_loss = 2.0 * np.exp(-step / 200)                   # exponential decay
            noise = np.random.normal(0, 0.1) * np.exp(-step / 300)  # decaying noise

            # Rank-dependent scaling factor (an arbitrary assumption of this simulation)
            rank_factor = 1.0 + (strategy_config['rank'] - 8) * 0.02
            loss = max(base_loss * rank_factor + noise, 0.01)  # keep the loss positive
            losses.append(loss)

            # Simulated accuracy derived from the loss
            accuracies.append(min(0.95, 0.5 + 0.4 * (1 - loss / 2.0)))

        return {'losses': losses, 'accuracies': accuracies, 'steps': list(range(num_steps))}

    def _find_convergence_step(self, losses: List[float],
                               window_size: int = 50,
                               threshold: float = 0.01) -> int:
        """
        Find the step at which the loss converges
        """
        if len(losses) < window_size * 2:
            return len(losses)

        for i in range(window_size, len(losses) - window_size):
            if np.std(losses[i:i + window_size]) < threshold:
                return i

        return len(losses)

    def visualize_results(self):
        """
        Visualize the experiment results
        """
        if not self.results:
            print("No results to visualize")
            return

        fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, figsize=(15, 10))

        # 1. Training loss curves and 2. accuracy curves
        for strategy_name, result in self.results.items():
            history = result['training_history']
            label = f"{strategy_name} (rank={result['config']['rank']})"
            ax1.plot(history['steps'], history['losses'], label=label)
            ax2.plot(history['steps'], history['accuracies'], label=label)

        for ax, ylabel, title in [(ax1, 'Loss', 'Training loss curves'),
                                  (ax2, 'Accuracy', 'Validation accuracy curves')]:
            ax.set_xlabel('Training step')
            ax.set_ylabel(ylabel)
            ax.set_title(title)
            ax.legend()
            ax.grid(True)

        # 3. Final performance
        strategies = list(self.results.keys())
        final_accuracies = [self.results[s]['final_accuracy'] for s in strategies]
        colors = plt.cm.Set3(np.linspace(0, 1, len(strategies)))

        bars = ax3.bar(strategies, final_accuracies, color=colors)
        ax3.set_ylabel('Final accuracy')
        ax3.set_title('Final performance')
        ax3.tick_params(axis='x', rotation=45)

        # Value labels
        for bar, acc in zip(bars, final_accuracies):
            ax3.text(bar.get_x() + bar.get_width() / 2, bar.get_height() + 0.01,
                     f'{acc:.3f}', ha='center', va='bottom')

        # 4. Parameter efficiency: parameter count vs. final accuracy
        param_counts = [self.results[s]['parameter_count'] for s in strategies]
        ax4.scatter(param_counts, final_accuracies,
                    c=range(len(strategies)), cmap='viridis', s=100)

        # Strategy labels
        for i, strategy in enumerate(strategies):
            ax4.annotate(strategy, (param_counts[i], final_accuracies[i]),
                         xytext=(5, 5), textcoords='offset points', fontsize=8)

        ax4.set_xlabel('Parameter count')
        ax4.set_ylabel('Final accuracy')
        ax4.set_title('Parameter efficiency')
        ax4.grid(True)

        plt.tight_layout()
        plt.show()

    def generate_report(self) -> str:
        """
        Generate an experiment report
        """
        if not self.results:
            return "No experiment results"

        report = "\n" + "=" * 60 + "\n"
        report += "LoRA Rank Selection Strategy Comparison Report\n"
        report += "=" * 60 + "\n\n"

        # Sort by final accuracy
        sorted_results = sorted(self.results.items(),
                                key=lambda x: x[1]['final_accuracy'],
                                reverse=True)

        report += "Strategy ranking:\n"
        report += "-" * 40 + "\n"

        for i, (strategy_name, result) in enumerate(sorted_results, 1):
            report += f"{i}. {strategy_name}\n"
            report += f"   Rank: {result['config']['rank']}\n"
            report += f"   Final accuracy: {result['final_accuracy']:.4f}\n"
            report += f"   Best accuracy: {result['best_accuracy']:.4f}\n"
            report += f"   Parameters: {result['parameter_count']:,}\n"
            report += f"   Convergence step: {result['convergence_step']}\n"
            report += f"   Training time: {result['training_time']:.2f}s\n\n"

        # Efficiency analysis
        report += "Efficiency analysis:\n"
        report += "-" * 40 + "\n"

        best_strategy = sorted_results[0]
        most_efficient = min(sorted_results,
                             key=lambda x: x[1]['parameter_count'] / x[1]['final_accuracy'])

        report += (f"Best-performing strategy: {best_strategy[0]} "
                   f"(accuracy: {best_strategy[1]['final_accuracy']:.4f})\n")
        report += (f"Most efficient strategy: {most_efficient[0]} (efficiency ratio: "
                   f"{most_efficient[1]['final_accuracy'] / most_efficient[1]['parameter_count'] * 1000000:.2f})\n\n")

        return report


# Run the comparison
config = ExperimentConfig(max_epochs=5, batch_size=16)
experiment = RankSelectionExperiment(config)

# Strategies to test
strategies = {
    "Low rank, original init": {"rank": 4, "init_method": "original"},
    "Mid rank, Xavier init": {"rank": 16, "init_method": "xavier"},
    "High rank, Kaiming init": {"rank": 32, "init_method": "kaiming"},
    "Mid rank, orthogonal init": {"rank": 16, "init_method": "orthogonal"},
    "Dynamic rank": {"rank": 8, "init_method": "xavier"}  # starting rank only; no adjustment is simulated
}

print("Running the LoRA rank selection comparison...")
results = experiment.run_experiment(strategies)

# Generate the report
report = experiment.generate_report()
print(report)

# Visualize the results
experiment.visualize_results()
```

4.2 Convergence Analysis of Initialization Strategies

不同的初始化策略会显著影响模型的收敛速度和最终性能。让我们深入分析各种初始化方法的特点:

```python
import math

import numpy as np
import torch
import torch.nn as nn

# AdvancedLoRAInitializer is the initializer class defined in Section 2.2 of this article.


class InitializationAnalyzer:
    """
    Analyzer for LoRA initialization strategies.
    """

    def __init__(self):
        self.analysis_results = {}

    def analyze_initialization_impact(self,
                                      d_model: int = 768,
                                      rank: int = 16,
                                      num_trials: int = 10) -> dict:
        """
        Analyze how each initialization strategy shapes the initial update delta_W = B @ A.
        """
        init_methods = {
            'original': self._original_init,
            'xavier': self._xavier_init,
            'kaiming': self._kaiming_init,
            'orthogonal': self._orthogonal_init,
            'scaled_normal': self._scaled_normal_init,
        }

        results = {}
        for method_name, init_func in init_methods.items():
            method_results = {
                'initial_norms': [],
                'spectral_norms': [],
                'condition_numbers': [],
                'gradient_norms': [],
                'convergence_rates': [],
            }

            for _ in range(num_trials):
                # Fresh LoRA parameters: A is (rank, d_model), B is (d_model, rank)
                lora_A = nn.Parameter(torch.empty(rank, d_model))
                lora_B = nn.Parameter(torch.empty(d_model, rank))

                # Apply the initialization
                init_func(lora_A, lora_B)

                # Initial weight update
                delta_W = torch.mm(lora_B, lora_A)

                # Singular values in descending order (no autograd graph needed)
                S = torch.linalg.svdvals(delta_W.detach())

                # Frobenius norm, and spectral norm = largest singular value
                # (torch.norm(x, p=2) on a matrix returns the Frobenius norm, not the spectral norm)
                method_results['initial_norms'].append(torch.linalg.norm(delta_W.detach()).item())
                method_results['spectral_norms'].append(S[0].item())

                # rank(delta_W) <= rank, so singular values past index rank-1 are ~0;
                # take the condition number over the top-`rank` values only
                # (inf when delta_W = 0, as with the 'original' scheme)
                s_min = S[rank - 1].item()
                condition_num = S[0].item() / s_min if s_min > 1e-8 else float('inf')
                method_results['condition_numbers'].append(condition_num)

                # Gradients of a synthetic loss sum(delta_W ** 2)
                fake_loss = torch.sum(delta_W ** 2)
                fake_loss.backward()
                grad_norm_A = torch.linalg.norm(lora_A.grad).item() if lora_A.grad is not None else 0.0
                grad_norm_B = torch.linalg.norm(lora_B.grad).item() if lora_B.grad is not None else 0.0
                method_results['gradient_norms'].append(grad_norm_A + grad_norm_B)

                # Heuristic proxy based on initial gradient size, not a measured convergence rate
                convergence_rate = 1.0 / (1.0 + grad_norm_A + grad_norm_B)
                method_results['convergence_rates'].append(convergence_rate)

            # Summary statistics per metric
            results[method_name] = {
                key: {
                    'mean': np.mean(values),
                    'std': np.std(values),
                    'min': np.min(values),
                    'max': np.max(values),
                }
                for key, values in method_results.items()
            }

        self.analysis_results = results
        return results

    def _original_init(self, lora_A, lora_B):
        # Standard LoRA: Kaiming-uniform A, zero B, so delta_W starts at 0
        nn.init.kaiming_uniform_(lora_A, a=math.sqrt(5))
        nn.init.zeros_(lora_B)

    def _xavier_init(self, lora_A, lora_B):
        AdvancedLoRAInitializer.xavier_lora_init(lora_A, lora_B)

    def _kaiming_init(self, lora_A, lora_B):
        AdvancedLoRAInitializer.kaiming_lora_init(lora_A, lora_B)

    def _orthogonal_init(self, lora_A, lora_B):
        AdvancedLoRAInitializer.orthogonal_init(lora_A, lora_B)

    def _scaled_normal_init(self, lora_A, lora_B, scale=0.02):
        nn.init.normal_(lora_A, mean=0.0, std=scale)
        nn.init.normal_(lora_B, mean=0.0, std=scale * 0.1)

    def print_analysis_report(self):
        """
        Print the analysis report.
        """
        if not self.analysis_results:
            print("Run analyze_initialization_impact() first")
            return

        print("\n" + "=" * 80)
        print("LoRA Initialization Strategy Analysis Report")
        print("=" * 80)

        metrics = ['initial_norms', 'spectral_norms', 'condition_numbers', 'gradient_norms']
        metric_names = ['Initial update norm (Frobenius)', 'Spectral norm',
                        'Condition number (top-r)', 'Gradient norm']

        for metric, metric_name in zip(metrics, metric_names):
            print(f"\n{metric_name}:")
            print("-" * 60)
            print(f"{'Method':<15} {'Mean':<12} {'Std':<12} {'Min':<12} {'Max':<12}")
            print("-" * 60)
            for method_name, results in self.analysis_results.items():
                stats = results[metric]
                print(f"{method_name:<15} {stats['mean']:<12.4f} {stats['std']:<12.4f} "
                      f"{stats['min']:<12.4f} {stats['max']:<12.4f}")

        # Recommendations
        print("\nRecommendations:")
        print("-" * 40)

        # Most stable method: lowest average standard deviation (smaller is better)
        stability_scores = {}
        for method_name, results in self.analysis_results.items():
            score = (results['initial_norms']['std'] +
                     results['spectral_norms']['std'] +
                     results['gradient_norms']['std']) / 3
            stability_scores[method_name] = score

        most_stable = min(stability_scores.items(), key=lambda x: x[1])
        print(f"Most stable initialization: {most_stable[0]} (stability score: {most_stable[1]:.4f})")

        # Highest convergence proxy score
        convergence_scores = {name: res['convergence_rates']['mean']
                              for name, res in self.analysis_results.items()}
        fastest_convergence = max(convergence_scores.items(), key=lambda x: x[1])
        print(f"Fastest convergence (proxy score): {fastest_convergence[0]} "
              f"(score: {fastest_convergence[1]:.4f})")


# Run the initialization analysis
analyzer = InitializationAnalyzer()
analysis_results = analyzer.analyze_initialization_impact(d_model=768, rank=16, num_trials=20)
analyzer.print_analysis_report()
```

Part 5: Best Practices for Production

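5.1 Automated Rank Selection System

In production, LoRA ranks should be selected and adjusted by an automated system rather than by hand. Here is one implementation: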
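The per-layer recommendation in `recommend_initial_ranks()` reduces to one clamped product: rank = clamp(int(base × multiplier × layer_factor), min_rank, max_rank). The sketch below pulls that arithmetic out of the class so the resulting ranks can be checked by hand. `heuristic_rank` is a hypothetical helper name, the lookup tables are copied from the class, and resource constraints are deliberately left out.

```python
# (base rank, multiplier) per task complexity, copied from recommend_initial_ranks()
COMPLEXITY = {"simple": (4, 1.0), "medium": (8, 1.5), "complex": (16, 2.0)}

# Per-layer-type factors, copied from recommend_initial_ranks()
LAYER_FACTOR = {
    "attention.self.query": 1.2,
    "attention.self.key": 1.0,
    "attention.self.value": 1.2,
    "attention.output.dense": 0.8,
    "intermediate.dense": 1.5,
    "output.dense": 1.0,
}


def heuristic_rank(task_complexity: str, layer_type: str,
                   min_rank: int = 2, max_rank: int = 64) -> int:
    """Hypothetical helper: rank = clamp(int(base * multiplier * factor), min_rank, max_rank)."""
    base, multiplier = COMPLEXITY.get(task_complexity, COMPLEXITY["medium"])
    factor = LAYER_FACTOR.get(layer_type, 1.0)  # unknown layer types get factor 1.0
    rank = int(base * multiplier * factor)      # int() truncates, e.g. 14.4 -> 14
    return max(min_rank, min(rank, max_rank))


for layer in LAYER_FACTOR:
    print(f"{layer:<24} medium -> rank {heuristic_rank('medium', layer)}")
```

For a `medium` task the effective base is 8 × 1.5 = 12. Query and value projections therefore get int(12 × 1.2) = 14, `attention.output.dense` gets int(9.6) = 9, and `intermediate.dense` gets 18.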

```python
import json
import logging
from typing import List, Optional

import numpy as np


class ProductionLoRARankManager:
    """
    LoRA rank manager for production environments.
    """

    def __init__(self,
                 config_path: str = "lora_rank_config.json",
                 log_path: str = "lora_rank_log.txt"):
        self.config_path = config_path
        self.log_path = log_path
        self.rank_history = []
        self.performance_history = []

        # Load the configuration
        self.config = self._load_config()

        # Initialize logging
        self._setup_logging()

    def _load_config(self) -> dict:
        """
        Load the config file; keys missing from it keep their default values.
        """
        default_config = {
            "min_rank": 2,
            "max_rank": 64,
            "initial_rank": 8,
            "adjustment_threshold": 0.02,
            "patience": 10,
            "layer_specific_ranks": {},
            "initialization_method": "xavier",
            "auto_adjustment": True,
        }

        try:
            with open(self.config_path, 'r', encoding='utf-8') as f:
                user_config = json.load(f)
            default_config.update(user_config)
        except FileNotFoundError:
            print(f"Config file {self.config_path} not found; using the default configuration")
            self._save_config(default_config)

        return default_config

    def _save_config(self, config: dict):
        """
        Save the config file.
        """
        with open(self.config_path, 'w', encoding='utf-8') as f:
            json.dump(config, f, indent=2)

    def _setup_logging(self):
        """
        Set up logging to a file.
        """
        logging.basicConfig(
            filename=self.log_path,
            level=logging.INFO,
            format='%(asctime)s - %(levelname)s - %(message)s'
        )
        self.logger = logging.getLogger(__name__)

    def recommend_initial_ranks(self,
                                model_architecture: dict,
                                task_complexity: str = "medium",
                                resource_constraints: Optional[dict] = None) -> dict:
        """
        Recommend an initial rank for each layer.

        Args:
            model_architecture: layer name -> layer info, e.g. {"d_model": 768}
            task_complexity: task complexity ("simple", "medium", "complex")
            resource_constraints: resource limits {"memory_gb": int, "compute_budget": float}
        """
        recommendations = {}

        # Base rank and multiplier per task complexity
        complexity_rank_map = {
            "simple": {"base": 4, "multiplier": 1.0},
            "medium": {"base": 8, "multiplier": 1.5},
            "complex": {"base": 16, "multiplier": 2.0},
        }
        base_config = complexity_rank_map.get(task_complexity, complexity_rank_map["medium"])
        base_rank = base_config["base"]
        multiplier = base_config["multiplier"]

        # Scaling factor per layer type
        layer_type_factors = {
            "attention.self.query": 1.2,
            "attention.self.key": 1.0,
            "attention.self.value": 1.2,
            "attention.output.dense": 0.8,
            "intermediate.dense": 1.5,
            "output.dense": 1.0,
        }

        for layer_name, layer_info in model_architecture.items():
            # Determine the layer type
            layer_type = self._identify_layer_type(layer_name)
            type_factor = layer_type_factors.get(layer_type, 1.0)

            # Compute the recommended rank
            recommended_rank = int(base_rank * multiplier * type_factor)

            # Apply resource constraints
            if resource_constraints:
                recommended_rank = self._apply_resource_constraints(
                    recommended_rank, layer_info, resource_constraints
                )

            # Clamp to [min_rank, max_rank]
            recommended_rank = max(self.config["min_rank"],
                                   min(recommended_rank, self.config["max_rank"]))

            recommendations[layer_name] = {
                "rank": recommended_rank,
                "initialization": self.config["initialization_method"],
                "reasoning": (f"base rank={base_rank}, complexity multiplier={multiplier}, "
                              f"layer-type factor={type_factor}"),
            }

        # Log the recommendation
        self.logger.info(f"Generated rank recommendations: task complexity={task_complexity}, "
                         f"layers={len(recommendations)}")
        return recommendations

    def _identify_layer_type(self, layer_name: str) -> str:
        """
        Identify the layer type from its (BERT-style) module name.
        """
        if "query" in layer_name:
            return "attention.self.query"
        elif "key" in layer_name:
            return "attention.self.key"
        elif "value" in layer_name:
            return "attention.self.value"
        elif "attention" in layer_name and "output" in layer_name:
            return "attention.output.dense"
        elif "intermediate" in layer_name:
            return "intermediate.dense"
        elif "output" in layer_name:
            return "output.dense"
        else:
            return "unknown"

    def _apply_resource_constraints(self,
                                    base_rank: int,
                                    layer_info: dict,
                                    constraints: dict) -> int:
        """
        Scale the rank down to fit memory and compute constraints.
        """
        # LoRA parameter count, assuming a square d_model x d_model projection
        d_model = layer_info.get("d_model", 768)
        param_count = base_rank * (d_model * 2)

        # Memory constraint
        if "memory_gb" in constraints:
            max_memory_bytes = constraints["memory_gb"] * 1024 ** 3
            param_memory = param_count * 4  # assumes float32
            if param_memory > max_memory_bytes * 0.1:  # use at most 10% of memory
                scale_factor = (max_memory_bytes * 0.1) / param_memory
                base_rank = int(base_rank * scale_factor)
                param_count = base_rank * (d_model * 2)  # recompute for the next check

        # Compute-budget constraint
        if "compute_budget" in constraints:
            compute_cost = param_count * 1e-6  # simplified cost model
            if compute_cost > constraints["compute_budget"]:
                scale_factor = constraints["compute_budget"] / compute_cost
                base_rank = int(base_rank * scale_factor)

        return max(1, base_rank)

    def monitor_and_adjust(self,
                           current_metrics: dict,
                           current_ranks: dict) -> dict:
        """
        Monitor performance and propose rank adjustments.

        Args:
            current_metrics: current metrics {"loss": float, "accuracy": float, "training_time": float}
            current_ranks: current ranks {"layer_name": int}

        Returns:
            Proposed adjustments {"layer_name": {"old_rank": int, "new_rank": int, "reason": str}}
        """
        if not self.config["auto_adjustment"]:
            return {}

        # Record history
        self.performance_history.append(current_metrics)
        self.rank_history.append(current_ranks.copy())

        adjustments = {}
        patience = self.config["patience"]

        # Decide only once a full patience window is available
        if len(self.performance_history) >= patience:
            recent_performance = self.performance_history[-patience:]
            performance_trend = self._analyze_performance_trend(recent_performance)

            if performance_trend["status"] == "stagnant":
                # Performance has plateaued: increase rank for more capacity
                for layer_name, current_rank in current_ranks.items():
                    if current_rank < self.config["max_rank"]:
                        adjustments[layer_name] = {
                            "old_rank": current_rank,
                            "new_rank": min(current_rank + 2, self.config["max_rank"]),
                            "reason": "performance plateaued; increasing capacity",
                        }
            elif performance_trend["status"] == "overfitting":
                # Overfitting: decrease rank to reduce the parameter count
                for layer_name, current_rank in current_ranks.items():
                    if current_rank > self.config["min_rank"]:
                        adjustments[layer_name] = {
                            "old_rank": current_rank,
                            "new_rank": max(current_rank - 1, self.config["min_rank"]),
                            "reason": "overfitting detected; reducing parameters",
                        }

        # Log the decision
        if adjustments:
            self.logger.info(f"Proposed rank adjustments for {len(adjustments)} layer(s)")
            for layer_name, adj in adjustments.items():
                self.logger.info(f"  {layer_name}: {adj['old_rank']} -> {adj['new_rank']} ({adj['reason']})")
            # Restart the patience window so the same plateau does not trigger
            # another adjustment on the very next call
            self.performance_history.clear()

        return adjustments

    def _analyze_performance_trend(self, recent_performance: List[dict]) -> dict:
        """
        Classify the recent trend from the slopes of linear fits to loss and accuracy.
        For overfitting detection these should be validation metrics.
        """
        if len(recent_performance) < 3:
            return {"status": "insufficient_data"}

        # Extract loss and accuracy
        losses = [p["loss"] for p in recent_performance]
        accuracies = [p["accuracy"] for p in recent_performance]

        # Slope of a least-squares line = trend per call
        x = np.arange(len(losses))
        loss_trend = np.polyfit(x, losses, 1)[0]
        acc_trend = np.polyfit(x, accuracies, 1)[0]

        # Classify the state
        if abs(loss_trend) < 0.001 and abs(acc_trend) < 0.001:
            status = "stagnant"
        elif loss_trend > 0 and acc_trend < 0:
            status = "overfitting"
        elif loss_trend < 0 and acc_trend > 0:
            status = "improving"
        else:
            status = "unstable"
        return {"status": status, "loss_trend": loss_trend, "acc_trend": acc_trend}


# Usage example
rank_manager = ProductionLoRARankManager()

# Simulated model architecture
model_arch = {
    "bert.encoder.layer.0.attention.self.query": {"d_model": 768},
    "bert.encoder.layer.0.attention.self.key": {"d_model": 768},
    "bert.encoder.layer.0.attention.self.value": {"d_model": 768},
    "bert.encoder.layer.0.intermediate.dense": {"d_model": 768},
}

# Get recommendations
recommendations = rank_manager.recommend_initial_ranks(
    model_arch,
    task_complexity="medium",
    resource_constraints={"memory_gb": 8, "compute_budget": 0.5},
)

print("Production LoRA rank recommendations:")
for layer_name, rec in recommendations.items():
    print(f"{layer_name}: rank={rec['rank']}, init={rec['initialization']}")
    print(f"  Reasoning: {rec['reasoning']}")
```

5.2 Monitoring and Tuning Tools

```python
import time
from typing import List

import numpy as np
import torch
import torch.nn as nn


class LoRAPerformanceMonitor:
    """
    Performance monitoring tool for LoRA training.
    """

    def __init__(self, model, rank_manager):
        self.model = model
        self.rank_manager = rank_manager
        self.metrics_history = []
        self.rank_changes = []

    def collect_metrics(self, step: int, loss: float, accuracy: float,
                        learning_rate: float) -> dict:
        """
        Collect training metrics for one step.
        """
        # Statistics for all LoRA layers
        lora_stats = self._compute_lora_statistics()

        metrics = {
            "step": step,
            "loss": loss,
            "accuracy": accuracy,
            "learning_rate": learning_rate,
            "timestamp": time.time(),
            "lora_stats": lora_stats,
        }
        self.metrics_history.append(metrics)
        return metrics

    @staticmethod
    def _as_tensor(component) -> torch.Tensor:
        # lora_A / lora_B may be raw nn.Parameter tensors or nn.Linear modules,
        # depending on the LoRA implementation; both store A as (rank, in_features)
        return component.weight if isinstance(component, nn.Module) else component

    def _compute_lora_statistics(self) -> dict:
        """
        Compute statistics for every module that has lora_A and lora_B.
        """
        stats = {
            "total_lora_params": 0,
            "layer_stats": {},
            "gradient_norms": {},
            "weight_norms": {},
        }

        for name, module in self.model.named_modules():
            if hasattr(module, 'lora_A') and hasattr(module, 'lora_B'):
                A = self._as_tensor(module.lora_A)
                B = self._as_tensor(module.lora_B)

                # Parameter count
                lora_params = A.numel() + B.numel()
                stats["total_lora_params"] += lora_params

                # Weight norms (Frobenius)
                weight_norm_A = torch.linalg.norm(A.detach()).item()
                weight_norm_B = torch.linalg.norm(B.detach()).item()

                # Gradient norms (0 if no gradient has been computed yet)
                grad_norm_A = torch.linalg.norm(A.grad).item() if A.grad is not None else 0.0
                grad_norm_B = torch.linalg.norm(B.grad).item() if B.grad is not None else 0.0

                stats["layer_stats"][name] = {
                    "rank": A.shape[0],
                    "param_count": lora_params,
                }
                stats["weight_norms"][name] = {
                    "A_norm": weight_norm_A,
                    "B_norm": weight_norm_B,
                    "combined_norm": weight_norm_A + weight_norm_B,
                }
                stats["gradient_norms"][name] = {
                    "A_grad_norm": grad_norm_A,
                    "B_grad_norm": grad_norm_B,
                    "combined_grad_norm": grad_norm_A + grad_norm_B,
                }

        return stats

    def generate_performance_report(self, last_n_steps: int = 100) -> str:
        """
        Generate a performance report over the most recent steps.
        """
        recent_metrics = self.metrics_history[-last_n_steps:]
        if not recent_metrics:
            return "No performance data available"

        # Compute statistics
        losses = [m["loss"] for m in recent_metrics]
        accuracies = [m["accuracy"] for m in recent_metrics]

        report = "\n" + "=" * 60 + "\n"
        report += f"LoRA Performance Monitoring Report (last {len(recent_metrics)} steps)\n"
        report += "=" * 60 + "\n\n"

        # Basic statistics
        report += "Basic performance statistics:\n"
        report += f"  Mean loss: {np.mean(losses):.4f} (±{np.std(losses):.4f})\n"
        report += f"  Mean accuracy: {np.mean(accuracies):.4f} (±{np.std(accuracies):.4f})\n"
        report += f"  Best accuracy: {max(accuracies):.4f}\n"
        report += f"  Lowest loss: {min(losses):.4f}\n\n"

        # LoRA parameter statistics
        latest_stats = recent_metrics[-1]["lora_stats"]
        report += "LoRA parameter statistics:\n"
        report += f"  Total LoRA parameters: {latest_stats['total_lora_params']:,}\n"
        report += f"  Number of LoRA layers: {len(latest_stats['layer_stats'])}\n\n"

        # Per-layer analysis
        report += "Per-layer analysis:\n"
        report += f"{'Layer':<40} {'Rank':<6} {'Weight norm':<12} {'Grad norm':<12}\n"
        report += "-" * 80 + "\n"
        for layer_name, layer_stats in latest_stats["layer_stats"].items():
            weight_norm = latest_stats["weight_norms"][layer_name]["combined_norm"]
            grad_norm = latest_stats["gradient_norms"][layer_name]["combined_grad_norm"]
            report += f"{layer_name:<40} {layer_stats['rank']:<6} {weight_norm:<12.4f} {grad_norm:<12.4f}\n"

        # Trend analysis
        if len(recent_metrics) >= 10:
            x = np.arange(len(losses))
            loss_trend = np.polyfit(x, losses, 1)[0]
            acc_trend = np.polyfit(x, accuracies, 1)[0]
            report += "\nTrend analysis:\n"
            report += f"  Loss trend: {'decreasing' if loss_trend < 0 else 'increasing'} ({loss_trend:.6f}/step)\n"
            report += f"  Accuracy trend: {'increasing' if acc_trend > 0 else 'decreasing'} ({acc_trend:.6f}/step)\n"

        return report

    def suggest_optimizations(self) -> List[str]:
        """
        Suggest optimizations based on the monitoring data.
        """
        if len(self.metrics_history) < 50:
            return ["Not enough data yet; more training steps are needed for analysis"]

        suggestions = []
        recent_metrics = self.metrics_history[-50:]
        latest_stats = recent_metrics[-1]["lora_stats"]

        # Average gradient norm per layer (skipping steps where the layer was absent)
        avg_grad_norms = {}
        for layer_name in latest_stats["gradient_norms"]:
            grad_norms = [m["lora_stats"]["gradient_norms"][layer_name]["combined_grad_norm"]
                          for m in recent_metrics
                          if layer_name in m["lora_stats"]["gradient_norms"]]
            avg_grad_norms[layer_name] = np.mean(grad_norms)

        # Flag layers whose gradient norm is far from the median
        grad_norm_values = list(avg_grad_norms.values())
        if grad_norm_values:
            median_grad_norm = np.median(grad_norm_values)
            for layer_name, grad_norm in avg_grad_norms.items():
                if grad_norm > median_grad_norm * 3:
                    suggestions.append(
                        f"Layer {layer_name} has an unusually high gradient norm ({grad_norm:.4f}); "
                        "consider lowering the learning rate or reducing this layer's rank"
                    )
                elif grad_norm < median_grad_norm * 0.1:
                    suggestions.append(
                        f"Layer {layer_name} has a very small gradient norm ({grad_norm:.4f}); "
                        "consider increasing this layer's rank or adjusting its initialization"
                    )

        # Convergence analysis
        recent_losses = [m["loss"] for m in recent_metrics[-20:]]
        if len(recent_losses) >= 10:
            loss_std = np.std(recent_losses)
            if loss_std < 0.001:
                suggestions.append("Loss has converged; consider lowering the learning rate for fine-grained tuning")
            elif loss_std > 0.1:
                suggestions.append("Loss fluctuates heavily; consider lowering the learning rate or checking data quality")

        # Parameter efficiency
        total_params = latest_stats["total_lora_params"]
        if total_params > 1_000_000:  # 1 million parameters
            suggestions.append("The LoRA parameter count is large; consider reducing the rank of some layers")

        return suggestions if suggestions else ["The current configuration looks fine; no adjustments needed"]


# Usage example
# monitor = LoRAPerformanceMonitor(model, rank_manager)
#
# # Collect metrics inside the training loop
# for step in range(1000):
#     # ... training code ...
#     metrics = monitor.collect_metrics(step, loss.item(), accuracy, lr)
#
#     if step % 100 == 0:
#         report = monitor.generate_performance_report()
#         print(report)
#
#         suggestions = monitor.suggest_optimizations()
#         print("\nOptimization suggestions:")
#         for suggestion in suggestions:
#             print(f"- {suggestion}")
```

Summary and Outlook

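This article has covered the key points of advanced LoRA optimization:

Key Takeaways

- Rank selection strategies:
  - The mathematical relationship between rank and model expressiveness
  - The principles and methods of per-layer rank allocation
  - Dynamic rank adjustment driven by gradient information
- Initialization techniques:
  - A comparison of the strengths and weaknesses of several initialization methods
  - An implementation of adaptive initialization strategies
  - An analysis of how initialization affects convergence
- Dynamic optimization:
  - An online rank adjustment algorithm
  - Automatic tuning driven by performance feedback
  - A monitoring system for production environments
- Practical tools:
  - A complete framework for comparison experiments
  - Performance monitoring and analysis tools
  - An automated optimization-suggestion system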
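To make the first takeaway concrete, here is a small worked example of the parameter-count formulas from Section 1.1. A full update of a d×k weight trains d×k entries, while a rank-r LoRA update ΔW = BA trains r×(d+k). The 768×768 projection and the ranks listed are illustrative choices, and `lora_param_stats` is a hypothetical helper name.

```python
def lora_param_stats(d: int, k: int, r: int) -> dict:
    """Parameter counts for a d x k layer and its rank-r LoRA update delta_W = B @ A."""
    full = d * k          # full fine-tuning updates every entry of W
    lora = r * (d + k)    # B is d x r, A is r x k
    return {"full": full, "lora": lora, "compression": full / lora}


for r in (4, 8, 16, 64):
    s = lora_param_stats(768, 768, r)
    print(f"r={r:>2}: LoRA params={s['lora']:>7,}  full={s['full']:,}  "
          f"compression={s['compression']:.1f}x")
```

With a 768×768 projection, r = 8 trains 12,288 parameters instead of 589,824, a 48× reduction. Because the LoRA count grows linearly in r, each doubling of r halves the compression ratio.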

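Practical Recommendations

- Getting started: use the automated tools in this article to obtain initial rank recommendations
- During training: enable dynamic monitoring and adjust the strategy based on performance feedback
- In production: set up end-to-end monitoring and keep optimizing model performance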
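The training-phase feedback loop depends on classifying a window of recent metrics. The sketch below pulls the trend rule from `ProductionLoRARankManager._analyze_performance_trend` out into a pure function, so it can be tested on synthetic metric windows. The 1e-3 tolerance is the document's value; `classify_trend` is a hypothetical name, and the metric sequences are made up for illustration.

```python
import numpy as np


def classify_trend(losses, accuracies, flat_tol: float = 1e-3) -> str:
    """Classify a metric window by the slopes of least-squares lines
    (same rule as _analyze_performance_trend)."""
    if len(losses) < 3:
        return "insufficient_data"
    x = np.arange(len(losses))
    loss_slope = np.polyfit(x, losses, 1)[0]
    acc_slope = np.polyfit(x, accuracies, 1)[0]
    if abs(loss_slope) < flat_tol and abs(acc_slope) < flat_tol:
        return "stagnant"      # manager proposes increasing the rank
    if loss_slope > 0 and acc_slope < 0:
        return "overfitting"   # manager proposes decreasing the rank
    if loss_slope < 0 and acc_slope > 0:
        return "improving"
    return "unstable"


print(classify_trend([1.0, 0.9, 0.8, 0.7], [0.5, 0.6, 0.7, 0.8]))
print(classify_trend([0.5] * 5, [0.8] * 5))
```

Overfitting is usually identified by validation loss rising while training loss keeps falling. The window passed to this rule should therefore hold validation metrics, or the "overfitting" label may simply reflect an unstable learning rate.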

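Future Directions

As large language models continue to evolve, LoRA optimization techniques keep advancing as well:

- Adaptive architectures: automatically designing the LoRA structure from the characteristics of the task
- Multimodal fusion: LoRA optimization for vision-language models
- Hardware co-design: optimization strategies built in close combination with specialized hardware

Mastering these advanced techniques will help you get better fine-tuning results in real projects while keeping resource usage efficient. In the next article, we will explore how LoRA is applied to multi-task learning. Stay tuned!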