Deploying Kubernetes StorageClass storage backed by nfs-provisioner

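- [1. Deploy the NFS server](#1-deploy-the-nfs-server)
- [2. Install the NFS client tools on all Kubernetes nodes](#2-install-the-nfs-client-tools-on-all-kubernetes-nodes)
- [3. Deploy the NFS StorageClass](#3-deploy-the-nfs-storageclass)
  - [3.1. Write the NFS provisioner manifests](#31-write-the-nfs-provisioner-manifests)
  - [3.2. Write the NFS StorageClass manifest](#32-write-the-nfs-storageclass-manifest)
  - [3.3. Create all resources](#33-create-all-resources)
- [4. Create a PVC that uses the nfs-provisioner StorageClass to provision a PV automatically](#4-create-a-pvc-that-uses-the-nfs-provisioner-storageclass-to-provision-a-pv-automatically)
- [5. Create a pod that mounts the PVC](#5-create-a-pod-that-mounts-the-pvc)
- [6. Delete the PVC](#6-delete-the-pvc)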

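1. Deploy the NFS server

Install the NFS service:

    yum -y install nfs-utils

Create the shared directory:

    mkdir /data

Configure the export:

    echo "/data 192.168.25.*(rw,sync,no_root_squash)" > /etc/exports

- 192.168.25.* : the client addresses that are allowed to access the export

Start the NFS services:

    systemctl restart rpcbind
    systemctl restart nfs

Check the export list with showmount:

    showmount -e

    Export list for 192.168.25.247:
    /data 192.168.25.*

My NFS server address is 192.168.25.247.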
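The export entry above follows the `/etc/exports` layout `<directory> <clients>(<options>)`. As a minimal sketch, the hypothetical helper `exports_line` below (my own name, not part of nfs-utils) only assembles such an entry so the three parts are easy to tell apart. One caveat, as far as I know from the exports(5) manual: wildcards are not reliable for matching IP addresses, and a CIDR network is the documented form. The second call assumes your nodes sit in `192.168.25.0/24`.

```shell
#!/bin/sh
# exports_line DIR CLIENTS OPTIONS
# Hypothetical helper: prints one /etc/exports entry in the form
#   DIR CLIENTS(OPTIONS)
exports_line() {
  printf '%s %s(%s)\n' "$1" "$2" "$3"
}

# The entry used in this article:
exports_line /data '192.168.25.*' rw,sync,no_root_squash

# The same export written with a CIDR network (an assumption about your subnet):
exports_line /data 192.168.25.0/24 rw,sync,no_root_squash
```

After editing `/etc/exports` on a running server, `exportfs -ra` re-exports everything without restarting the service.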

2. Install the NFS client tools on all Kubernetes nodes

    yum -y install nfs-utils
    showmount -e 192.168.25.247

Make sure every node can reach the NFS server.
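Checking the `showmount` output by eye on every node gets tedious, so here is a small sketch that turns it into a pass/fail check. `has_export` is a hypothetical helper name; it only parses text in the format shown in section 1, so the demo runs without an NFS server.

```shell
#!/bin/sh
# has_export PATH
# Reads `showmount -e` output on stdin and succeeds if PATH is in the export list.
# The first line ("Export list for ...") is a header and is skipped.
has_export() {
  awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Demo on the output shown in section 1 (no NFS server needed):
sample='Export list for 192.168.25.247:
/data 192.168.25.*'

if printf '%s\n' "$sample" | has_export /data; then
  echo "/data is exported"
fi

# On a real node this would be:
#   showmount -e 192.168.25.247 | has_export /data
```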

3. Deploy the NFS StorageClass

3.1. Write the NFS provisioner manifests

nfs-provisioner-namespace.yaml:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: nfs-provisioner

nfs-provisioner-rbac.yaml:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      namespace: nfs-provisioner
    ---
    # ClusterRole definition
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    # ClusterRoleBinding
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: nfs-provisioner
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    # Role definition
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: nfs-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    # RoleBinding
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: nfs-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: nfs-provisioner
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

nfs-provisioner-deploy.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      namespace: nfs-provisioner
    spec:
      replicas: 2
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: k8s-sigs.io/nfs-subdir-external-provisioner # provisioner name; the StorageClass must reference this value
                - name: NFS_SERVER
                  value: 192.168.25.247 # NFS server address
                - name: NFS_PATH
                  value: /data # shared path on the NFS server
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.25.247
                path: /data # must match NFS_PATH above

The registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 image cannot be pulled from within mainland China, so you can pull the image from Docker Hub instead and rename it with docker tag.

    $ docker pull eipwork/nfs-subdir-external-provisioner:v4.0.2
    $ docker tag eipwork/nfs-subdir-external-provisioner:v4.0.2 registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

3.2. Write the NFS StorageClass manifest

nfs-provisioner-storageclasses.yaml:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-provisioner
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # must match PROVISIONER_NAME in the Deployment
    parameters:
      server: 192.168.25.247 # NFS server address
      path: /data # shared directory
      readOnly: "false"
    reclaimPolicy: Delete # reclaim policy
    allowVolumeExpansion: true # allow PVC expansion

3.3. Create all resources
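Before applying the manifests, it may be worth confirming that the StorageClass `provisioner` field and the Deployment's `PROVISIONER_NAME` are identical, since a mismatch typically leaves PVCs stuck in Pending. The sketch below uses hypothetical helper names and plain `awk` text matching rather than a YAML parser. It assumes the `value:` line directly follows `- name: PROVISIONER_NAME`, as it does in this article's manifest.

```shell
#!/bin/sh
# deploy_provisioner FILE
# Prints the value of the PROVISIONER_NAME env var in a Deployment manifest.
# Assumes "value:" is on the line right after "- name: PROVISIONER_NAME".
deploy_provisioner() {
  awk '$2 == "name:" && $3 == "PROVISIONER_NAME" { getline; print $2; exit }' "$1"
}

# sc_provisioner FILE
# Prints the value of the "provisioner:" field in a StorageClass manifest.
sc_provisioner() {
  awk '$1 == "provisioner:" { print $2; exit }' "$1"
}

# check_provisioner DEPLOY_FILE SC_FILE
# Succeeds only if both names are non-empty and equal.
check_provisioner() {
  d=$(deploy_provisioner "$1")
  s=$(sc_provisioner "$2")
  [ -n "$d" ] && [ "$d" = "$s" ]
}

# Usage, with the file names from this article:
#   check_provisioner nfs-provisioner-deploy.yaml nfs-provisioner-storageclasses.yaml \
#     && echo "provisioner names match"
```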

    kubectl apply -f ./

Check that the NFS provisioner pods are running normally:

    kubectl get pod -n nfs-provisioner -o wide

    NAME                                      READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    nfs-client-provisioner-675b648f9f-6tnkm   1/1     Running   0          26m   10.224.58.229   k8s-node02   <none>           <none>
    nfs-client-provisioner-675b648f9f-q9hdw   1/1     Running   0          26m   10.224.85.216   k8s-node01   <none>           <none>

The NFS provisioner pods are currently running on k8s-node02 and k8s-node01, so the NFS directory is mounted on those two hosts.
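If you want to script this check instead of reading the table, one rough approach is to parse the `kubectl get pod` output. `all_running` below is a hypothetical helper that only inspects the READY and STATUS columns of text passed on stdin. The demo feeds it a shortened copy of the output above, so it runs without a cluster.

```shell
#!/bin/sh
# all_running
# Reads `kubectl get pod` table output on stdin. Succeeds only if there is at
# least one pod and every pod has STATUS "Running" with READY n/n.
all_running() {
  awk 'NR > 1 {
         n++
         split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) bad = 1
       }
       END { exit (n == 0 || bad) }'
}

# Demo on the first columns of the output shown above:
sample='NAME READY STATUS RESTARTS AGE
nfs-client-provisioner-675b648f9f-6tnkm 1/1 Running 0 26m
nfs-client-provisioner-675b648f9f-q9hdw 1/1 Running 0 26m'

if printf '%s\n' "$sample" | all_running; then
  echo "all provisioner pods are running"
fi

# Against a real cluster this would be:
#   kubectl get pod -n nfs-provisioner | all_running
```

kubectl also has a built-in way to wait for the rollout: `kubectl rollout status deployment/nfs-client-provisioner -n nfs-provisioner`.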

Check that the NFS StorageClass was created:

    kubectl get sc

    NAME              PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    nfs-provisioner   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           true                   99s

4. Create a PVC that uses the nfs-provisioner StorageClass to provision a PV automatically

nginx-pvc.yaml:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi # requested storage capacity
      storageClassName: nfs-provisioner # use the nfs-provisioner StorageClass

Apply it and check the PVC:

    kubectl apply -f nginx-pvc.yaml
    kubectl get pvc

    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      VOLUMEATTRIBUTESCLASS   AGE
    nginx-pvc   Bound    pvc-2e842830-0385-41ea-9630-5de671a238e8   1Gi        RWX            nfs-provisioner   <unset>                 5s

Check the PV:

    kubectl get pv

    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS      VOLUMEATTRIBUTESCLASS   REASON   AGE
    pvc-2e842830-0385-41ea-9630-5de671a238e8   1Gi        RWX            Delete           Bound    default/nginx-pvc   nfs-provisioner   <unset>                          21s

The PVC nginx-pvc is bound to the automatically created PV pvc-2e842830-0385-41ea-9630-5de671a238e8.
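To find the volume's data on the NFS server, it helps to know how the backing directory is named. As far as I understand the default behavior of nfs-subdir-external-provisioner (with no `pathPattern` parameter set), each volume becomes a subdirectory of the share named `<namespace>-<pvcName>-<pvName>`. The sketch below just builds that name; `pv_dir` is a hypothetical helper, and the naming scheme is an assumption worth verifying with `ls /data` on your server.

```shell
#!/bin/sh
# pv_dir NAMESPACE PVC_NAME PV_NAME
# Prints the subdirectory name expected under the NFS share, assuming the
# provisioner's default naming scheme: <namespace>-<pvcName>-<pvName>.
pv_dir() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# For the PVC created above (it lives in the "default" namespace):
pv_dir default nginx-pvc pvc-2e842830-0385-41ea-9630-5de671a238e8
```

Under that assumption, files written to the volume would appear on the server below `/data/default-nginx-pvc-pvc-2e842830-0385-41ea-9630-5de671a238e8/`.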

5. 创建pod挂载pvc

nginx-pod.yaml:

clike

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx:latest

ports:

- containerPort: 80

volumeMounts: # 绑定卷

- name: html # 与下面声明的卷的名称一样

mountPath: /usr/share/nginx/html # 挂载容器挂载路径

volumes: # 声明卷

- name: html # 卷的名称

persistentVolumeClaim:

claimName: nginx-pvc # 已经存在的pvc的名称kubectl apply -f nginx-pod.yaml

kubectl get pod

clike

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 52s创建index.html文件:

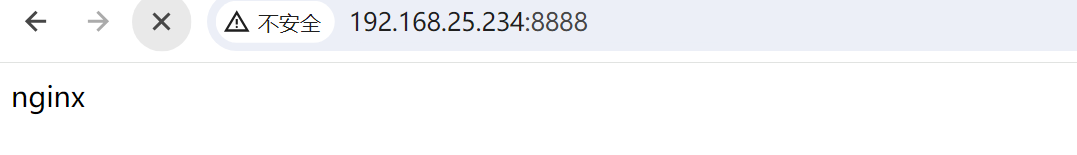

kubectl exec nginx -- /bin/bash -c "hostname > /usr/share/nginx/html/index.html"使用port-forward将端口转发出来访问测试:

clike

kubectl port-forward --address 0.0.0.0 pod/nginx 8888:80

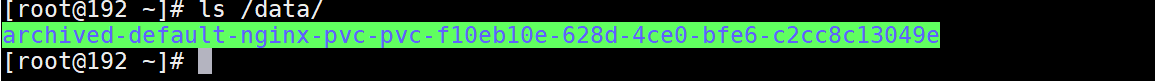

6. Delete the PVC

Delete the pod first:

    kubectl delete -f nginx-pod.yaml

Delete the PVC:

    kubectl delete -f nginx-pvc.yaml

Check the PVC and PV:

    kubectl get pv,pvc

    No resources found

Check the files in the NFS directory:

The data is still retained.
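A likely reason the data survives even though the reclaim policy is Delete: to my understanding, nfs-subdir-external-provisioner archives a released volume by default, renaming its directory with an `archived-` prefix instead of removing it. The sketch below lists such directories under a share root. `list_archived` is a hypothetical helper, and the `archived-` naming is an assumption you should verify on your own server.

```shell
#!/bin/sh
# list_archived ROOT
# Prints the names of directories under ROOT that start with "archived-".
# Assumption: the provisioner renamed the released volume directory to
# archived-<namespace>-<pvcName>-<pvName> instead of deleting it.
list_archived() {
  for d in "$1"/archived-*; do
    if [ -d "$d" ]; then
      basename "$d"
    fi
  done
}

# On the NFS server from this article:
#   list_archived /data
```

If you would rather have the data removed together with the PVC, the provisioner's README describes an `archiveOnDelete: "false"` StorageClass parameter for that purpose.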