Large-Model Streaming Speech Recognition

Background

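In person-to-person communication and knowledge sharing, roughly 70% of information is conveyed through speech. Speech recognition is set to become an important part of smart living: it provides the essential technical foundation for applications such as personal voice assistants, voice input, and smart speakers, and it is also emerging as a new mode of human-computer interaction.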

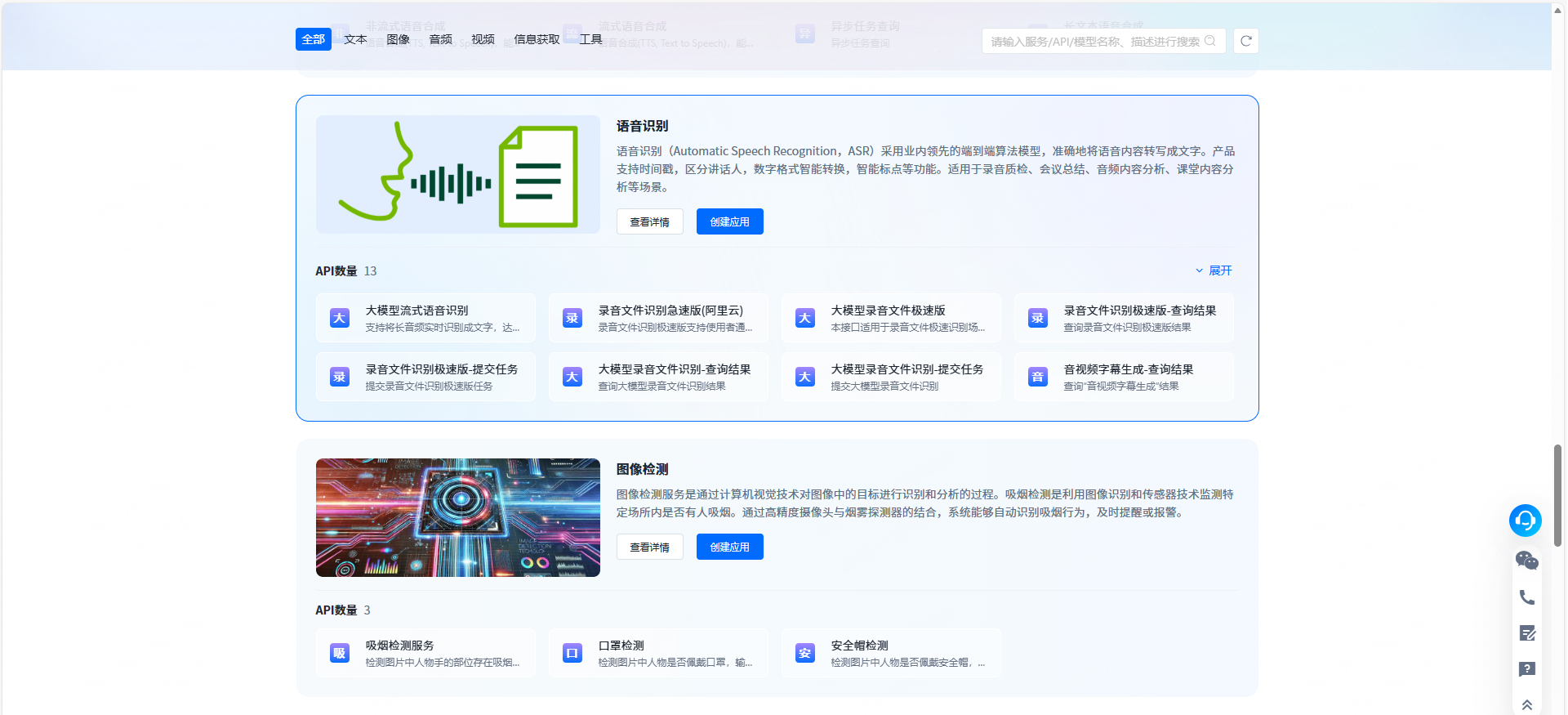

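Against this background, we have added a speech recognition service to the API Market, and within that service a large-model streaming speech recognition API.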

Overview

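Speech recognition, also known as speech-to-text, is an important branch of artificial intelligence. It uses software to convert human speech signals into text, which enables human-computer interaction. In an intelligent customer service system, for example, speech recognition lets customers talk to the service by voice, improving both the customer experience and service efficiency.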

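The large-model streaming speech recognition API is built on industry-leading speech recognition and natural language understanding technology. It is widely used in enterprise scenarios such as intelligent customer service, novel reading, online education, meeting minutes, and audio/video subtitling. Compared with conventional speech recognition, large-model streaming speech recognition offers higher accuracy and context awareness.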

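The API uses the bidirectional streaming mode (optimized version). In this mode the server no longer returns one packet for every input packet; it returns a new packet only when the result changes. This optimization improves the RTF (real-time factor) as well as first-word and last-word latency to some degree.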

API Documentation

Usage

The steps for calling the large-model streaming speech recognition API are as follows:

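1. Apply for an API Key

Log in to the 智汇云 (Zhihui Cloud) website at https://zyun.360.cn/ and click Products -> API Market -> API Market APIMKT:

On that page, find Speech Recognition and click View Details: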

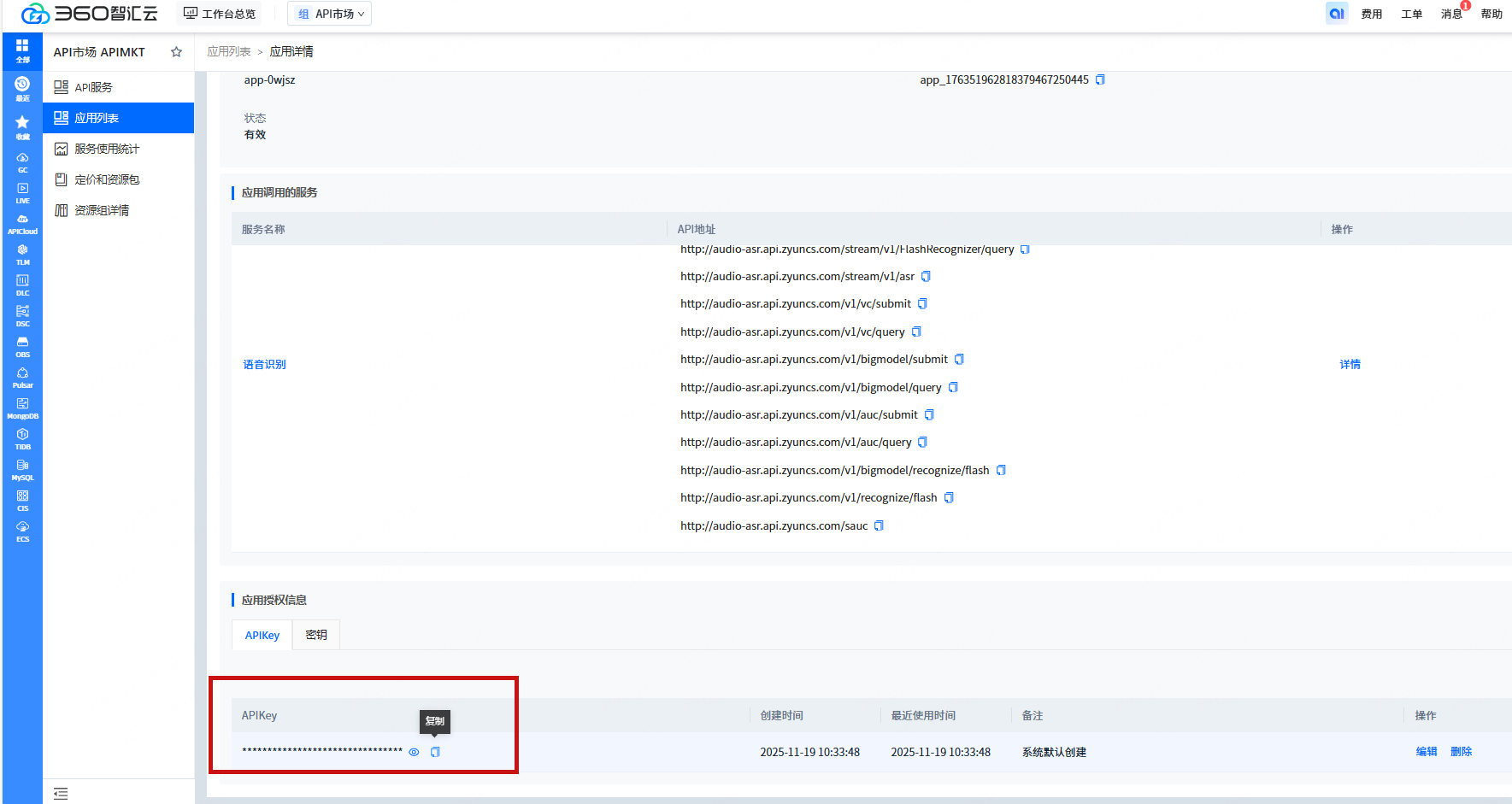

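Apply for an API Key by following the API calling conventions described on the details page. The steps are:

- On the Speech Recognition details page, click Create Application.
- Select the Speech Recognition service and click OK.
- Click the application name to open its details page.
- Copy the API Key and store it for later use.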

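2. Call the API

The calling flow is the request flow described in the API documentation. The key steps are explained here with Go code; complete sample code (Go and Python) is available in the API documentation.

Establishing the connection

Use the API Key to establish a WebSocket connection (replace "your token" in the code with the API Key you obtained from the API Market):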

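    // Assumed imports for this snippet: "fmt", "log", "net/http",
    // and "github.com/gorilla/websocket".

    // createConnection dials the ASR WebSocket endpoint and authenticates
    // with the API Key passed as a Bearer token.
    func (c *AsrWsClient) createConnection() error {
        // Replace "your token" with the API Key from the API Market.
        tokenHeader := http.Header{"Authorization": []string{fmt.Sprintf("Bearer %s", "your token")}}
        fmt.Println("Connecting to ws://audio-asr.api.zyuncs.com/sauc ...")
        conn, resp, err := websocket.DefaultDialer.Dial("ws://audio-asr.api.zyuncs.com/sauc", tokenHeader)
        if err != nil {
            fmt.Println(err)
            return err
        }
        // The log ID in the response header helps when tracing a session.
        log.Printf("logid: %s\n", resp.Header.Get("X-Tt-Logid"))
        c.connect = conn
        return nil
    }

Sending the full client request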

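Once the WebSocket connection is established, the first request sent is the full client request. It contains the following information:

- User configuration, which the server can use to filter logs
- Audio configuration, containing the metadata of the audio you will send for recognition
- Request configuration, containing the parameters the server needs, which can be used to configure the large model used for speech recognition

See the API documentation for the details of the full client request.

The code below constructs the full client request data (the audio information in the AudioMeta struct must match the audio that is actually sent):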

    // NewFullClientRequest builds the first message sent after connecting:
    // header, sequence number 1, payload size, then the gzip-compressed JSON payload.
    func NewFullClientRequest() []byte {
        var request bytes.Buffer
        request.Write(DefaultHeader().WithMessageTypeSpecificFlags(POS_SEQUENCE).toBytes())
        payload := AsrRequestPayload{
            User: UserMeta{
                Uid: "demo_uid",
            },
            // Must describe the audio that will actually be sent.
            Audio: AudioMeta{
                Format:  "wav",
                Codec:   "raw",
                Rate:    16000,
                Bits:    16,
                Channel: 1,
            },
            Request: RequestMeta{
                ModelName:       "bigmodel",
                EnableITN:       true,
                EnablePUNC:      true,
                EnableDDC:       true,
                ShowUtterances:  true,
                EnableNonstream: false,
            },
        }
        payloadArr, _ := sonic.Marshal(payload)
        payloadArr = GzipCompress(payloadArr)
        payloadSize := len(payloadArr)
        payloadSizeArr := make([]byte, 4)
        binary.BigEndian.PutUint32(payloadSizeArr, uint32(payloadSize))
        // The full client request carries sequence number 1.
        _ = binary.Write(&request, binary.BigEndian, int32(1))
        request.Write(payloadSizeArr)
        request.Write(payloadArr)
        return request.Bytes()
    }

Sending audio-only requests

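After the full client request, the client sends audio-only client requests that contain the audio data. The audio must use the format declared in the full client request (audio format, codec, sample rate, and channel count).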
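Because the audio has to match the declared format, the number of bytes in each request follows directly from the sample rate, bit depth, and channel count. The sketch below works this out for raw PCM and shows one way to cut a buffer into chunks. `segmentBytes` is a hypothetical helper introduced for illustration, and `splitAudio` here is only an assumed stand-in for the demo's helper of the same name; the real implementation is in the API documentation.

```go
package main

import "fmt"

// segmentBytes returns how many bytes of raw PCM audio cover durationMs
// milliseconds at the given sample rate (Hz), bit depth, and channel count.
// Hypothetical helper, not part of the official demo.
func segmentBytes(rate, bits, channels, durationMs int) int {
	bytesPerSecond := rate * (bits / 8) * channels
	return bytesPerSecond * durationMs / 1000
}

// splitAudio cuts content into consecutive chunks of at most size bytes.
// Assumed stand-in for the demo's splitAudio helper.
func splitAudio(content []byte, size int) [][]byte {
	if size <= 0 {
		return nil
	}
	var segments [][]byte
	for start := 0; start < len(content); start += size {
		end := start + size
		if end > len(content) {
			end = len(content) // the last chunk may be shorter
		}
		segments = append(segments, content[start:end])
	}
	return segments
}

func main() {
	// 16 kHz, 16-bit, mono audio sent in 200 ms chunks.
	size := segmentBytes(16000, 16, 1, 200)
	fmt.Println("segment size:", size)

	audio := make([]byte, 20000) // placeholder audio bytes
	fmt.Println("segments:", len(splitAudio(audio, size)))
}
```

With the AudioMeta values used in this article (16000 Hz, 16 bits, 1 channel), 200 ms of audio is 6400 bytes, which is consistent with the segmentSize printed in the demo output later in this article.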

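The payload of an audio-only client request is the audio data compressed with the specified compression method. Audio-only requests can be sent many times. When the audio is read from a file, use a ticker to simulate real-time speech input: if each request carries 100 ms of audio, the ticker period is 100 ms and the payload of each audio-only request is 100 ms of audio.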

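Each audio-only request must carry a sequence number that the client maintains. Sequence numbers start at 1; in the demo code the full client request carries 1, so the audio-only requests start at 2. The last request must use a negative sequence number to signal the end of the stream. A possible sequence is: 1, 2, 3, ... 35, 36, -37.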
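The numbering rule can be sketched as a small function. This is an illustration only: `audioSequence` is a hypothetical helper, and it assumes, as the demo code and output in this article suggest, that the full client request has already used sequence number 1, so the audio-only requests start at 2.

```go
package main

import "fmt"

// audioSequence returns the sequence numbers for n audio-only requests,
// assuming the full client request already used sequence number 1.
// The last number is negated to mark the end of the stream.
func audioSequence(n int) []int {
	if n <= 0 {
		return nil
	}
	seqs := make([]int, 0, n)
	for i := 0; i < n; i++ {
		seq := i + 2
		if i == n-1 {
			seq = -seq // final request signals end of stream
		}
		seqs = append(seqs, seq)
	}
	return seqs
}

func main() {
	fmt.Println(audioSequence(4)) // prints [2 3 4 -5]
}
```

For 36 audio segments this yields 2, 3, ... 36, -37, matching the sequence numbers in the demo output.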

    // sendMessages splits the audio into segments and sends one audio-only
    // request per tick, pacing the upload like real-time speech input.
    func (c *AsrWsClient) sendMessages(segmentSize int, content []byte, stopChan <-chan struct{}) error {
        messageChan := make(chan []byte)
        // Writer goroutine: the only place that writes to the connection.
        go func() {
            for message := range messageChan {
                // The frames are binary (header plus gzip payload), so send them as binary messages.
                err := c.connect.WriteMessage(websocket.BinaryMessage, message)
                if err != nil {
                    log.Printf("write message err: %s", err)
                    return
                }
            }
        }()
        audioSegments := splitAudio(content, segmentSize)
        ticker := time.NewTicker(time.Duration(c.segmentDuration) * time.Millisecond)
        defer ticker.Stop()
        defer close(messageChan)
        for _, segment := range audioSegments {
            select {
            case <-ticker.C:
                // The last segment gets a negative sequence number to end the stream.
                if c.seq == len(audioSegments)+1 {
                    c.seq = -c.seq
                }
                message := NewAudioOnlyRequest(c.seq, segment)
                messageChan <- message
                log.Printf("send message: seq: %d", c.seq)
                c.seq++
            case <-stopChan:
                return nil
            }
        }
        return nil
    }

    // NewAudioOnlyRequest builds one audio-only request:
    // header, sequence number, payload size, then the gzip-compressed audio.
    func NewAudioOnlyRequest(seq int, segment []byte) []byte {
        var request bytes.Buffer
        header := DefaultHeader()
        // A negative sequence number marks the last packet of the stream.
        if seq < 0 {
            header.WithMessageTypeSpecificFlags(NEG_WITH_SEQUENCE)
        } else {
            header.WithMessageTypeSpecificFlags(POS_SEQUENCE)
        }
        header.WithMessageType(CLIENT_AUDIO_ONLY_REQUEST)
        request.Write(header.toBytes())
        // write seq
        _ = binary.Write(&request, binary.BigEndian, int32(seq))
        // write payload size
        payload := GzipCompress(segment)
        _ = binary.Write(&request, binary.BigEndian, int32(len(payload)))
        // write payload
        request.Write(payload)
        return request.Bytes()
    }

Full server response

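The server replies to the full client request and to audio-only requests with a full server response (in the optimized bidirectional streaming mode, only when the result changes). The payload of a full server response is JSON that contains the recognition result. Parse it according to the response format described in the API documentation to obtain the recognized text; the parsing code is available in the API documentation.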
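As an illustration of reading the result, the sketch below decodes a JSON object shaped like the lines printed in the demo output in the next section. The struct and function names (`ServerResponse`, `PayloadMsg`, `Utterance`, `Word`, `parseResponse`) are assumptions made for this example, and the field list is inferred only from that printed output; the binary framing around the payload and the authoritative schema should be taken from the API documentation.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Word is one recognized word with its timing in milliseconds.
type Word struct {
	Text      string `json:"text"`
	StartTime int    `json:"start_time"`
	EndTime   int    `json:"end_time"`
}

// Utterance is one recognized sentence; Definite is true once it is final.
type Utterance struct {
	Text      string `json:"text"`
	Definite  bool   `json:"definite"`
	StartTime int    `json:"start_time"`
	EndTime   int    `json:"end_time"`
	Words     []Word `json:"words"`
}

// PayloadMsg mirrors the "payload_msg" object seen in the demo output.
type PayloadMsg struct {
	AudioInfo struct {
		Duration int `json:"duration"`
	} `json:"audio_info"`
	Result struct {
		Text       string      `json:"text"`
		Utterances []Utterance `json:"utterances"`
	} `json:"result"`
}

// ServerResponse mirrors one JSON line printed by the demo (assumed shape).
type ServerResponse struct {
	Code            int        `json:"code"`
	IsLastPackage   bool       `json:"is_last_package"`
	PayloadSequence int        `json:"payload_sequence"`
	PayloadMsg      PayloadMsg `json:"payload_msg"`
}

// parseResponse decodes one JSON document into a ServerResponse.
func parseResponse(data []byte) (*ServerResponse, error) {
	var resp ServerResponse
	if err := json.Unmarshal(data, &resp); err != nil {
		return nil, err
	}
	return &resp, nil
}

func main() {
	// First response line from the demo output in this article.
	sample := `{"code":0,"event":0,"is_last_package":false,"payload_sequence":1,"payload_size":299,"payload_msg":{"audio_info":{"duration":598},"result":{"text":"华为","utterances":[{"definite":false,"end_time":420,"start_time":340,"text":"华为","words":[{"end_time":420,"start_time":340,"text":"华为"}]}]}}}`

	resp, err := parseResponse([]byte(sample))
	if err != nil {
		panic(err)
	}
	fmt.Println("text:", resp.PayloadMsg.Result.Text)
	for _, u := range resp.PayloadMsg.Result.Utterances {
		fmt.Printf("utterance %q final=%v (%d-%d ms)\n", u.Text, u.Definite, u.StartTime, u.EndTime)
	}
}
```

In the demo output, intermediate results have "definite" set to false and the last package has it set to true, so a client that only needs final text could keep utterances whose Definite field is true.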

Results

The following is the output of running the demo from the API documentation. For brevity, only part of it is shown:

segmentSize is 6400

Connecting to ws://audio-asr.api.zyuncs.com/sauc ...

14:38:23.731697 asr_go_demo.go:209: logid:

14:38:23.911335 asr_go_demo.go:228: &{0 0 false 0 72 0xc0000b25c0}

14:38:24.113983 asr_go_demo.go:257: send message: seq: 2

14:38:24.313035 asr_go_demo.go:257: send message: seq: 3

14:38:24.513480 asr_go_demo.go:257: send message: seq: 4

14:38:24.712579 asr_go_demo.go:257: send message: seq: 5

14:38:24.715211 asr_go_demo.go:602: {"code":0,"event":0,"is_last_package":false,"payload_sequence":1,"payload_size":299,"payload_msg":{"audio_info":{"duration":598},"result":{"text":"华为","utterances":[{"definite":false,"end_time":420,"start_time":340,"text":"华为","words":[{"end_time":420,"start_time":340,"text":"华为"}]}]}}}

14:38:24.913544 asr_go_demo.go:257: send message: seq: 6

14:38:25.112376 asr_go_demo.go:257: send message: seq: 7

14:38:25.260040 asr_go_demo.go:602: {"code":0,"event":0,"is_last_package":false,"payload_sequence":2,"payload_size":362,"payload_msg":{"audio_info":{"duration":1198},"result":{"text":"华为致力","utterances":[{"definite":false,"end_time":980,"start_time":200,"text":"华为致力","words":[{"end_time":280,"start_time":200,"text":"华为"},{"end_time":980,"start_time":900,"text":"致力"}]}]}}}

// Part of the output is omitted here ...

14:38:30.712394 asr_go_demo.go:257: send message: seq: 35

14:38:30.913576 asr_go_demo.go:257: send message: seq: 36

14:38:31.111732 asr_go_demo.go:257: send message: seq: -37

14:38:31.139794 asr_go_demo.go:602: {"code":0,"event":0,"is_last_package":false,"payload_sequence":20,"payload_size":1586,"payload_msg":{"audio_info":{"duration":6998},"result":{"text":"华为致力于把数字世界带入每个人、每个家庭、每个组织,构建万物互联的智能世界","utterances":[{"definite":false,"end_time":6660,"start_time":200,"text":"华为致力于把数字世界带入每个人、每个家庭、每个组织,构建万物互联的智能世界","words":[{"end_time":280,"start_time":200,"text":"华为"},{"end_time":840,"start_time":760,"text":"致力"},{"end_time":1000,"start_time":920,"text":"于"},{"end_time":1480,"start_time":1400,"text":"把"},{"end_time":1640,"start_time":1560,"text":"数字"},{"end_time":1960,"start_time":1880,"text":"世界"},{"end_time":2280,"start_time":2200,"text":"带"},{"end_time":2440,"start_time":2360,"text":"入"},{"end_time":2680,"start_time":2600,"text":"每"},{"end_time":2760,"start_time":2680,"text":"个人"},{"end_time":3320,"start_time":3240,"text":"每个"},{"end_time":3560,"start_time":3480,"text":"家庭"},{"end_time":4120,"start_time":4040,"text":"每个"},{"end_time":4440,"start_time":4360,"text":"组织"},{"end_time":5080,"start_time":5000,"text":"构建"},{"end_time":5400,"start_time":5320,"text":"万"},{"end_time":5480,"start_time":5400,"text":"物"},{"end_time":5800,"start_time":5720,"text":"互"},{"end_time":5880,"start_time":5800,"text":"联"},{"end_time":6040,"start_time":5960,"text":"的"},{"end_time":6360,"start_time":6280,"text":"智能"},{"end_time":6660,"start_time":6580,"text":"世界"}]}]}}}

14:38:31.220097 asr_go_demo.go:602: {"code":0,"event":0,"is_last_package":true,"payload_sequence":21,"payload_size":1575,"payload_msg":{"audio_info":{"duration":7025},"result":{"text":"华为致力于把数字世界带入每个人、每个家庭、每个组织,构建万物互联的智能世界。","utterances":[{"definite":true,"end_time":6660,"start_time":200,"text":"华为致力于把数字世界带入每个人、每个家庭、每个组织,构建万物互联的智能世界。","words":[{"end_time":280,"start_time":200,"text":"华为"},{"end_time":840,"start_time":760,"text":"致力"},{"end_time":1000,"start_time":920,"text":"于"},{"end_time":1480,"start_time":1400,"text":"把"},{"end_time":1640,"start_time":1560,"text":"数字"},{"end_time":1960,"start_time":1880,"text":"世界"},{"end_time":2280,"start_time":2200,"text":"带"},{"end_time":2440,"start_time":2360,"text":"入"},{"end_time":2680,"start_time":2600,"text":"每"},{"end_time":2760,"start_time":2680,"text":"个人"},{"end_time":3320,"start_time":3240,"text":"每个"},{"end_time":3560,"start_time":3480,"text":"家庭"},{"end_time":4120,"start_time":4040,"text":"每个"},{"end_time":4440,"start_time":4360,"text":"组织"},{"end_time":5080,"start_time":5000,"text":"构建"},{"end_time":5400,"start_time":5320,"text":"万"},{"end_time":5480,"start_time":5400,"text":"物"},{"end_time":5800,"start_time":5720,"text":"互"},{"end_time":5880,"start_time":5800,"text":"联"},{"end_time":6040,"start_time":5960,"text":"的"},{"end_time":6360,"start_time":6280,"text":"智能"},{"end_time":6660,"start_time":6580,"text":"世界"}]}]}}}

Summary

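The large-model streaming speech recognition API addresses the needs and application scenarios of streaming speech recognition in smart living. It offers an industry-leading solution and optimizes the recognition process so that speech recognition can serve users' needs more broadly and efficiently.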