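The results are impressive, and defects do not need to be labeled: the model trains only on normal (positive) samples. Learning by standing on the shoulders of giants.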

Environment setup

Create a new Python 3.10 environment: conda create -n anomalib python=3.10

Install anomalib: pip install anomalib==2.2.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu118

pip install numpy==1.24.4 -i https://pypi.tuna.tsinghua.edu.cn/simple
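After installing, it can help to confirm that the pinned versions are the ones actually active in the environment. The following is a minimal sketch using only the standard library; the `PINNED` table simply repeats the versions from the commands above, and `check_versions` is a hypothetical helper name, not part of anomalib.

```python
from importlib import metadata

# Versions copied from the install commands above.
PINNED = {
    "anomalib": "2.2.0",
    "torch": "2.1.0",
    "torchvision": "0.16.0",
    "torchaudio": "2.1.0",
    "numpy": "1.24.4",
}


def check_versions(pinned, get_version=metadata.version):
    """Return {package: (expected, installed_or_None)} for every mismatch."""
    mismatches = {}
    for name, expected in pinned.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            installed = None
        # Local build tags such as "+cu118" are ignored when comparing.
        if installed is None or installed.split("+")[0] != expected:
            mismatches[name] = (expected, installed)
    return mismatches
```

Calling `check_versions(PINNED)` inside the `anomalib` environment should return an empty dict when everything matches.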

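Training configuration

Dataset layout: put images of acceptable products in good and images of defective products in defect. The model trains only on the acceptable images.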
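Before training, a quick sanity check of the folder layout can catch an empty or misnamed directory. This is a small sketch, assuming the `good` / `defect` subfolder names used in these notes; `count_images` and the extension list are illustrative choices, not anomalib API.

```python
from pathlib import Path

# Expected layout (folder names taken from the notes):
#   dataset/Tire/
#       good/    acceptable images, used for training
#       defect/  defective images, used only for evaluation

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed image extensions


def count_images(root, normal_dir="good", abnormal_dir="defect"):
    """Return {subfolder: image count} for the two dataset folders."""
    counts = {}
    for sub in (normal_dir, abnormal_dir):
        folder = Path(root) / sub
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        counts[sub] = sum(
            1
            for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts
```

For example, `count_images("./dataset/Tire")` reports how many images each folder holds.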

python

from pathlib import Path

from anomalib.data import Folder
from anomalib.deploy import ExportType
from anomalib.engine import Engine
from anomalib.models import Patchcore, Stfpm  # Stfpm was also tried for comparison
from torchvision import transforms

if __name__ == "__main__":
    # List the available models
    # print(dir(M))

    # Augmentations applied to the training images
    train_transform = transforms.Compose([
        transforms.Resize((256, 256)),
        # transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1),
        # transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ])

    model = Patchcore(
        backbone="wide_resnet50_2",
        coreset_sampling_ratio=0.1,
        num_neighbors=5,
    )

    datamodule = Folder(
        name="Tire",
        root=Path("./dataset/Tire"),
        normal_dir="good",      # Subfolder containing normal images
        abnormal_dir="defect",  # Subfolder containing anomalous images
        train_batch_size=8,     # adjustable
        eval_batch_size=8,      # adjustable
        # num_workers=1,
        train_augmentations=train_transform,
    )
    datamodule.setup()

    # 4. Create the training engine
    engine = Engine(
        # Override default trainer settings. PatchCore builds its memory bank
        # in a single pass, so extra epochs are unlikely to change the result.
        max_epochs=50,
    )

    # Train (uses only the normal images in train/good)
    engine.fit(datamodule=datamodule, model=model)
    # test_results = engine.test(datamodule=datamodule, model=model)

    # Export the trained model
    engine.export(
        model=model,
        export_type=ExportType.ONNX,
        # ckpt_path="./PatchCore_0.05_5.ckpt",
        export_root="./",
    )

Exporting the model to ONNX

Install the ONNX packages: pip install onnx==1.17.0 onnxruntime==1.18.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

# Export the model
engine.export(
    model=model,
    export_type=ExportType.ONNX,
)

Detection results

python

# 1. Import required modules
from anomalib.data import PredictDataset
from anomalib.engine import Engine
from anomalib.models import Patchcore, Stfpm
from torchvision import transforms

# 2. Create the model and the engine
model = Patchcore(
    backbone="wide_resnet50_2",
    coreset_sampling_ratio=0.05,
    num_neighbors=5,
)
engine = Engine()

train_transform = transforms.Compose([
    transforms.Resize((256, 256)),
    # transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1),
    # transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])

# 3. Prepare test data
# You can use a single image or a folder of images
dataset = PredictDataset(
    path="./dataset/Tire/good-1",
    transform=train_transform,
)

# 4. Get predictions
predictions = engine.predict(
    model=model,
    dataset=dataset,
    ckpt_path="./results/Patchcore/Tire/latest/weights/lightning/model.ckpt",
)

# 5. Access the results

norma,anomalous=0,0

if predictions is not None:

for prediction in predictions:

image_path = prediction.image_path

anomaly_map = prediction.anomaly_map # Pixel-level anomaly heatmap

pred_label = prediction.pred_label # Image-level label (0: normal, 1: anomalous)

pred_score = prediction.pred_score # Image-level anomaly score

pred_mask = prediction.pred_mask # Pixel-level binary mask

print(image_path,pred_label,pred_score)

if(pred_label==0):

norma+=1

else:

anomalous+=1

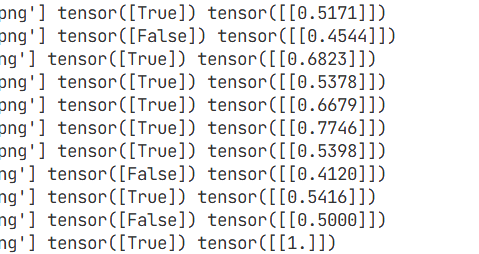

print(f"norma:{norma},anomalous:{anomalous}")置信度大于0.5的被视为异常类
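The 0.5 cut-off can be explored offline once the scores are collected. Below is a minimal sketch in plain Python, assuming the scores have already been converted to floats; `tally` and `sweep` are hypothetical helper names, and the sample scores in the usage note are made up for illustration.

```python
def tally(scores, threshold=0.5):
    """Count scores above the threshold as anomalous, the rest as normal."""
    anomalous = sum(1 for s in scores if s > threshold)
    return {"normal": len(scores) - anomalous, "anomalous": anomalous}


def sweep(scores, thresholds):
    """Return {threshold: number of images flagged anomalous}."""
    return {t: tally(scores, t)["anomalous"] for t in thresholds}
```

For instance, `sweep([0.12, 0.5, 0.51, 0.93], [0.3, 0.5, 0.9])` shows how many images would be flagged at each candidate threshold, which is useful when running on a folder that should contain only good parts.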

Patchcore

In my own tests it performed better than STFPM.

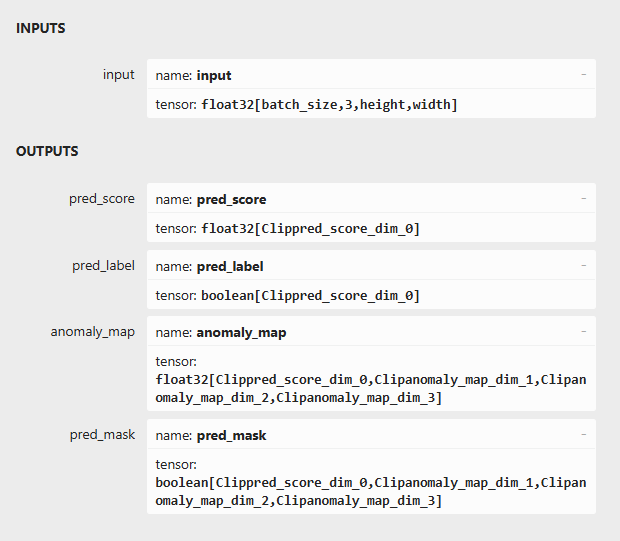

These are the inputs and outputs of the ONNX model; it looks like the exported model can be used directly.
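The same input and output information can be read programmatically. This is a small sketch that works on any object exposing `get_inputs()` and `get_outputs()` the way an `onnxruntime.InferenceSession` does; `describe_io` is a hypothetical helper name.

```python
def describe_io(session):
    """Return (inputs, outputs) as lists of (name, shape, type) tuples."""
    inputs = [(n.name, n.shape, n.type) for n in session.get_inputs()]
    outputs = [(n.name, n.shape, n.type) for n in session.get_outputs()]
    return inputs, outputs
```

Typical use would be `describe_io(onnxruntime.InferenceSession("./weights/onnx/PatchCore.onnx", providers=["CPUExecutionProvider"]))`, then printing both lists to confirm the output names before writing any deployment code.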

onnx

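Validating the ONNX model in Python: the results are essentially the same as with anomalib, and the visualization can be rendered as well.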

python

import os
from pathlib import Path

import cv2
import numpy as np
import onnxruntime
from PIL import Image

# ===================== Configuration =====================
MODEL_PATH = "./weights/onnx/PatchCore.onnx"
IMAGE_DIR = "./dataset/Tire/defect"
OUTPUT_DIR = "./results/onnx_vis"
INPUT_SIZE = (256, 256)
THRESHOLD = 0.5

os.makedirs(OUTPUT_DIR, exist_ok=True)

# ===================== Utility functions =====================
def preprocess_image(img_path):
    img = Image.open(img_path).convert("RGB")
    img_resized = img.resize(INPUT_SIZE, Image.BILINEAR)
    img_np = np.array(img_resized).astype(np.float32) / 255.0  # scale to [0, 1]
    img_chw = img_np.transpose(2, 0, 1)                        # HWC -> CHW
    img_chw = np.expand_dims(img_chw, axis=0)                  # add the batch dimension
    return img_chw, np.array(img)  # also return the original RGB image for visualization


def visualize_pred_mask(orig_img, anomaly_map, save_path_overlay):
    """
    orig_img: HWC, RGB, uint8
    anomaly_map: HxW, float32 (already normalized to 0-1)
    """
    # 1. Build the heatmap: map to 0-255 and convert to uint8
    anomaly_map_uint8 = (anomaly_map * 255).astype(np.uint8)
    # Apply a pseudo-color map (blue = cold, red = hot)
    heatmap = cv2.applyColorMap(anomaly_map_uint8, cv2.COLORMAP_JET)

    # 2. Resize the heatmap to match the original image
    h, w = orig_img.shape[:2]
    if heatmap.shape[:2] != (h, w):
        heatmap = cv2.resize(heatmap, (w, h))

    # 3. Blend the heatmap over the original image
    orig_bgr = cv2.cvtColor(orig_img, cv2.COLOR_RGB2BGR)
    overlay = cv2.addWeighted(orig_bgr, 0.6, heatmap, 0.4, 0)
    cv2.imwrite(save_path_overlay, overlay)

# ===================== Create the ONNX Runtime session =====================
session = onnxruntime.InferenceSession(MODEL_PATH, providers=['CPUExecutionProvider'])
input_name = session.get_inputs()[0].name

# Read the output names from the model to avoid indexing errors
output_names = [node.name for node in session.get_outputs()]
print(f"Model outputs: {output_names}")
# Expected: ['pred_score', 'pred_label', 'anomaly_map', 'pred_mask']

# ===================== Run inference on every image =====================
for img_path in Path(IMAGE_DIR).glob("*.*"):
    if img_path.suffix.lower() not in ['.jpg', '.png', '.jpeg', '.bmp']:
        continue

    img_input, orig_img_vis = preprocess_image(img_path)

    # Request 'anomaly_map' and 'pred_score' explicitly by name.
    # The first argument of session.run is the list of outputs to return.
    outputs = session.run(
        ["anomaly_map", "pred_score"],
        {input_name: img_input}
    )

    # Results come back in the requested order
    raw_anomaly_map = outputs[0]  # Shape: (1, 1, 256, 256) or (1, 256, 256)
    pred_score = outputs[1]       # Shape: (1,)

    # === Post-processing ===
    # 1. Drop the batch and channel dimensions -> (256, 256)
    anomaly_map = raw_anomaly_map.squeeze()
    # 2. Extract the scalar score
    score_val = float(np.asarray(pred_score).reshape(-1)[0])
    pred_label = 1 if score_val > THRESHOLD else 0

    # === Min-max normalization ===
    # PatchCore outputs distances, not probabilities, so the map is normalized before drawing the heatmap
    min_val, max_val = anomaly_map.min(), anomaly_map.max()
    # Simple per-image normalization: the lowest value maps to 0 (blue), the highest to 1 (red)
    if max_val - min_val > 0:
        anomaly_map_norm = (anomaly_map - min_val) / (max_val - min_val)
    else:
        # A constant map has no contrast; use zeros so values stay within 0-1
        anomaly_map_norm = np.zeros_like(anomaly_map)

    print(f"{img_path.name}: Label={pred_label}, Score={score_val:.4f}")

    # === Visualization ===
    save_overlay = os.path.join(OUTPUT_DIR, img_path.stem + "_vis.png")
    visualize_pred_mask(orig_img_vis, anomaly_map_norm, save_overlay)

print("Done!")

C++ ONNX Runtime deployment

Version: 1.18.0

Follow the ONNX outputs listed above, read the corresponding values, and apply the same visualization.
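As a language-neutral reference for the visualization step, the min-max scaling to 0-255 can be written out in plain Python. This is only a sketch of the arithmetic, assuming a 2-D list of floats as the anomaly map; `minmax_to_uint8` is a hypothetical name, and rounding at exact .5 ties may differ from OpenCV.

```python
def minmax_to_uint8(rows):
    """Scale a 2-D list of floats linearly so min -> 0 and max -> 255."""
    flat = [v for row in rows for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant map carries no contrast; return all zeros.
        return [[0 for _ in row] for row in rows]
    scale = 255.0 / (hi - lo)
    return [[int(round((v - lo) * scale)) for v in row] for row in rows]
```

Because the scaling is per image, the hottest pixel always maps to 255 even on a good part, so the heatmap colors show where the model is most suspicious rather than how suspicious it is; the score and label carry that information.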

c

#pragma once

#include <opencv2/opencv.hpp>

// ONNX Runtime C++ API
#define ORT_DISABLE_FP16
#include <onnxruntime_cxx_api.h>  // C++ wrapper interface

#include <chrono>  // high-resolution timing
#include <iostream>
#include <string>
#include <vector>

#include <QObject>

class tireDefectDet : public QObject
{
    Q_OBJECT  // Qt macro; must appear at the top of the class body

public:
    // Public accessor: returns the singleton instance
    static tireDefectDet* getInstance();

    // Disable copy construction and assignment
    tireDefectDet(const tireDefectDet&) = delete;
    tireDefectDet& operator=(const tireDefectDet&) = delete;

    // Run the detection pipeline
    bool runDet(const std::string& imgPath);

private:
    explicit tireDefectDet(QObject* parent = nullptr);

    static tireDefectDet* m_pInstance;

    // ====== ONNX Runtime core objects (use a single global Env) ======
    Ort::Env env;
    Ort::SessionOptions sessionOptions;
    Ort::Session m_detSession;

    std::string m_strInputName;
    std::string m_strPredScore;
    std::string m_strPredLabel;
    std::string m_strAnomalyMap;
    std::string m_strPredMask;
};

cpp

c

#include "tireDefectDet.h"

#include <array>

// Keep consistent with the Python configuration
const int INPUT_W = 256;
const int INPUT_H = 256;

// Common ImageNet mean and standard deviation (not applied in the code below)
const float mean_vals[3] = { 0.485f, 0.456f, 0.406f };
const float std_vals[3] = { 0.229f, 0.224f, 0.225f };

// Out-of-class definition of the static instance pointer
tireDefectDet* tireDefectDet::m_pInstance = nullptr;

/***********************************************
 * @Description : Return the singleton pointer
 * @Parameters  : none
 * @Returns     : pointer to the single tireDefectDet instance
 ***********************************************/
tireDefectDet* tireDefectDet::getInstance()
{
    if (m_pInstance == nullptr)
    {
        m_pInstance = new tireDefectDet();
    }
    return m_pInstance;
}

/***********************************************
 * @Description : Initialize ONNX Runtime and load the model
 * @Parameters  : parent - optional Qt parent object
 * @Returns     : none
 ***********************************************/
tireDefectDet::tireDefectDet(QObject* parent)
    : QObject(parent),
      env(ORT_LOGGING_LEVEL_WARNING, "TireDefectDet"),
      sessionOptions(),
      m_detSession(nullptr)  // placeholder; the real session is constructed below
{
    // Number of intra-op threads
    sessionOptions.SetIntraOpNumThreads(4);
    // Graph optimization level
    sessionOptions.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_BASIC);

    // Try to enable the GPU (CUDA execution provider)
    try
    {
        // The C API call returns a status instead of throwing;
        // Ort::ThrowOnError turns a failure into an exception so the catch can run.
        Ort::ThrowOnError(OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0));
    }
    catch (...)
    {
        std::cout << "⚠️ CUDA not available, fallback to CPU" << std::endl;
    }

    // ==== Load the model ====
    // The wide-string path is what the Windows build of ONNX Runtime expects
    m_detSession = Ort::Session(env, L"./model/PatchCore.onnx", sessionOptions);

    Ort::AllocatorWithDefaultOptions allocator;
    m_strInputName = m_detSession.GetInputNameAllocated(0, allocator).get();
    m_strPredScore = m_detSession.GetOutputNameAllocated(0, allocator).get();   // pred_score
    m_strPredLabel = m_detSession.GetOutputNameAllocated(1, allocator).get();   // pred_label
    m_strAnomalyMap = m_detSession.GetOutputNameAllocated(2, allocator).get();  // anomaly_map
    m_strPredMask = m_detSession.GetOutputNameAllocated(3, allocator).get();    // pred_mask
}

/***********************************************
 * @Description : Run the detection pipeline
 * @Parameters  : imgPath - path of the image to inspect
 * @Returns     : true if the image is judged acceptable, false otherwise
 ***********************************************/
bool tireDefectDet::runDet(const std::string& imgPath)
{
    const char* inputNames[] = { m_strInputName.c_str() };
    std::vector<const char*> outputNames = {
        m_strPredScore.c_str(),   // pred_score
        m_strPredLabel.c_str(),   // pred_label
        m_strAnomalyMap.c_str(),  // anomaly_map
        m_strPredMask.c_str()     // pred_mask
    };

    cv::Mat image = cv::imread(imgPath);  // BGR format
    if (image.empty()) {
        std::cerr << "Error: Image not found." << std::endl;
        return false;
    }

    // Resize to the model input size
    cv::Mat resizeImg;
    cv::resize(image, resizeImg, cv::Size(INPUT_W, INPUT_H));
    // BGR -> RGB
    cv::cvtColor(resizeImg, resizeImg, cv::COLOR_BGR2RGB);

    // Scale to [0, 1] and convert HWC -> CHW
    std::vector<float> inputTensorValues;
    inputTensorValues.reserve(INPUT_H * INPUT_W * 3);
    // Loop order: channel -> height -> width (CHW).
    // After cvtColor the image is RGB, so c = 0, 1, 2 selects R, G, B.
    for (int c = 0; c < 3; c++)
    {
        for (int h = 0; h < INPUT_H; h++)
        {
            for (int w = 0; w < INPUT_W; w++)
            {
                float pixel = resizeImg.at<cv::Vec3b>(h, w)[c];
                // Divide by 255 to match the Python preprocessing (img / 255.0)
                inputTensorValues.push_back(pixel / 255.0f);
            }
        }
    }

    // Build the input tensor
    std::array<int64_t, 4> inputShape = { 1, 3, INPUT_H, INPUT_W };
    Ort::MemoryInfo memoryInfo = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);
    Ort::Value inputTensor = Ort::Value::CreateTensor<float>(
        memoryInfo,
        inputTensorValues.data(),
        inputTensorValues.size(),
        inputShape.data(),
        inputShape.size()
    );

    // Run inference and fetch the four outputs
    auto outputTensor = m_detSession.Run(
        Ort::RunOptions{nullptr},
        inputNames,
        &inputTensor,
        1,
        outputNames.data(),
        outputNames.size()
    );

    // ==== Parse the outputs ====
    // 1. Anomaly score, shape [1]
    float predScore = outputTensor[0].GetTensorMutableData<float>()[0];
    std::cout << predScore << " ";

    // 2. Label: 0 = acceptable, 1 = defective, shape [1]
    int predLabel = (int)outputTensor[1].GetTensorMutableData<int64_t>()[0];
    std::cout << predLabel << " " << std::endl;

    // 3. Anomaly map, shape [1, 1, 256, 256]
    float* anomalyMapData = outputTensor[2].GetTensorMutableData<float>();
    std::vector<int64_t> anomalyMapShape = outputTensor[2].GetTensorTypeAndShapeInfo().GetShape();
    // for (auto s : anomalyMapShape) std::cout << s << " ";  // print the dimensions
    int h = static_cast<int>(anomalyMapShape[2]);
    int w = static_cast<int>(anomalyMapShape[3]);

    // Wrap the output buffer in a cv::Mat
    cv::Mat anomalyMap(h, w, CV_32FC1, anomalyMapData);

    // Normalize to 0-255
    cv::Mat normMap;
    cv::normalize(anomalyMap, normMap, 0, 255, cv::NORM_MINMAX);
    normMap.convertTo(normMap, CV_8UC1);

    // Apply a pseudo-color map
    cv::Mat heatmap;
    cv::applyColorMap(normMap, heatmap, cv::COLORMAP_JET);

    // ============== Overlay the heatmap on the original image ==============
    // Note: image is the original BGR image read at the start of runDet
    cv::Mat overlay;

    // 1. Resize the heatmap to the original image size
    cv::Mat resizedHeatmap;
    cv::resize(heatmap, resizedHeatmap, image.size());

    // 2. Weighted blend: original 0.6, heatmap 0.4 (adjust as needed)
    cv::addWeighted(image, 0.6, resizedHeatmap, 0.4, 0, overlay);

    // Display or save
    cv::imshow("Overlay", overlay);       // show the overlay
    cv::imwrite("overlay.jpg", overlay);  // save the overlay

    // Acceptable when the predicted label is 0
    return predLabel == 0;
}
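The channel-major packing loop in runDet is easy to get subtly wrong, so a tiny reference in plain Python can be used to cross-check the ordering on a toy image. This is a sketch under the assumption that pixels are given as a nested H x W x C list; `hwc_to_chw_flat` is a hypothetical helper, and the default divisor of 255 mirrors the Python `preprocess_image` step.

```python
def hwc_to_chw_flat(pixels, divisor=255.0):
    """Flatten an H x W x C nested list into channel-major (CHW) order."""
    height = len(pixels)
    width = len(pixels[0])
    channels = len(pixels[0][0])
    flat = []
    # Same loop nesting as the C++ code: channel, then row, then column.
    for c in range(channels):
        for h in range(height):
            for w in range(width):
                flat.append(pixels[h][w][c] / divisor)
    return flat
```

Feeding the same small image to this function and to the C++ loop (printing `inputTensorValues`) should give the same sequence; a mismatch usually points to swapped channels or a missing scale factor.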