k8s Pod in Depth, Part 1

Pod Configuration

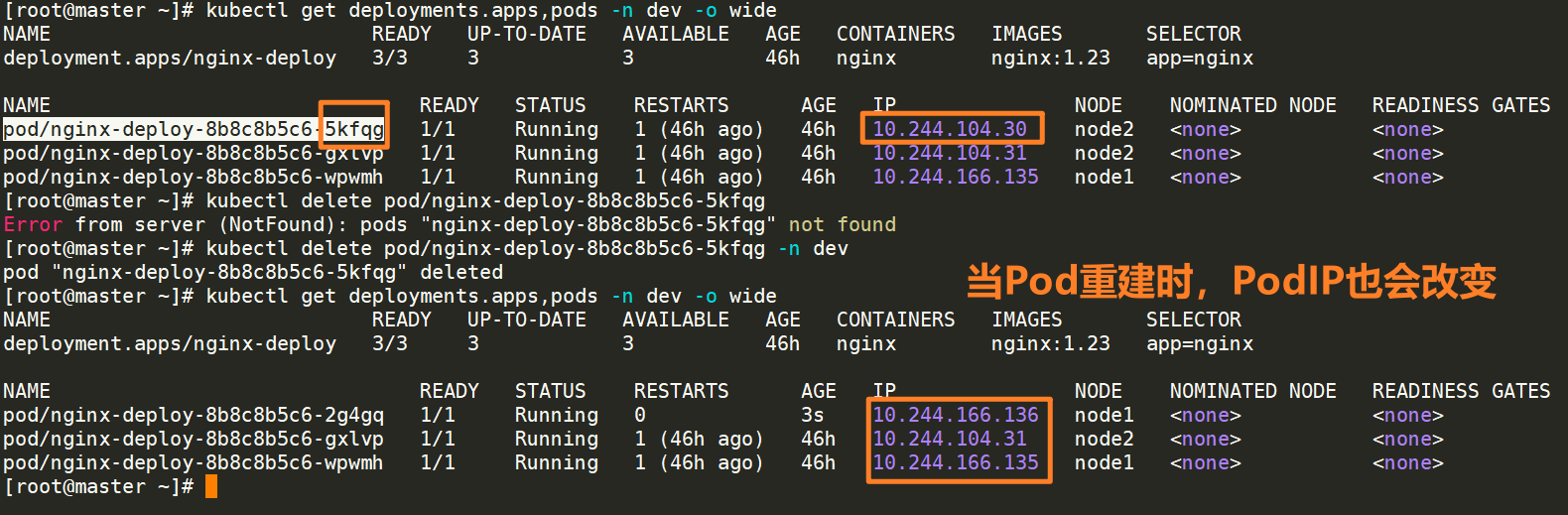

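A Deployment controller creates a group of Pods to provide a highly available service. Each Pod is assigned its own Pod IP, but that leaves two problems:

- A Pod IP changes whenever the Pod is recreated.

- A Pod IP is a virtual IP visible only inside the cluster, so it cannot be reached from outside.

Both make the service hard to reach, and Kubernetes provides the Service resource to solve this.

A Service can be seen as the access point for a group of Pods of the same kind. With a Service, applications get service discovery and load balancing easily.

Step 1: Create a Service reachable inside the cluster

This provides load balancing, an exposed port, and a ClusterIP.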

kubectl expose deployment nginx-deploy --name=svc-ng1 --type=ClusterIP --port=80 --target-port 80 -n dev

[root@master ~]# kubectl get svc -n dev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-ng1 ClusterIP 10.98.92.161 <none> 80/TCP 46h

[root@master ~]# kubectl get deployments.apps,pods -n dev -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/nginx-deploy 3/3 3 3 46h nginx nginx:1.23 app=nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deploy-8b8c8b5c6-2g4gq 1/1 Running 0 9m52s 10.244.166.136 node1 <none> <none>

pod/nginx-deploy-8b8c8b5c6-gxlvp 1/1 Running 1 (46h ago) 46h 10.244.104.31 node2 <none> <none>

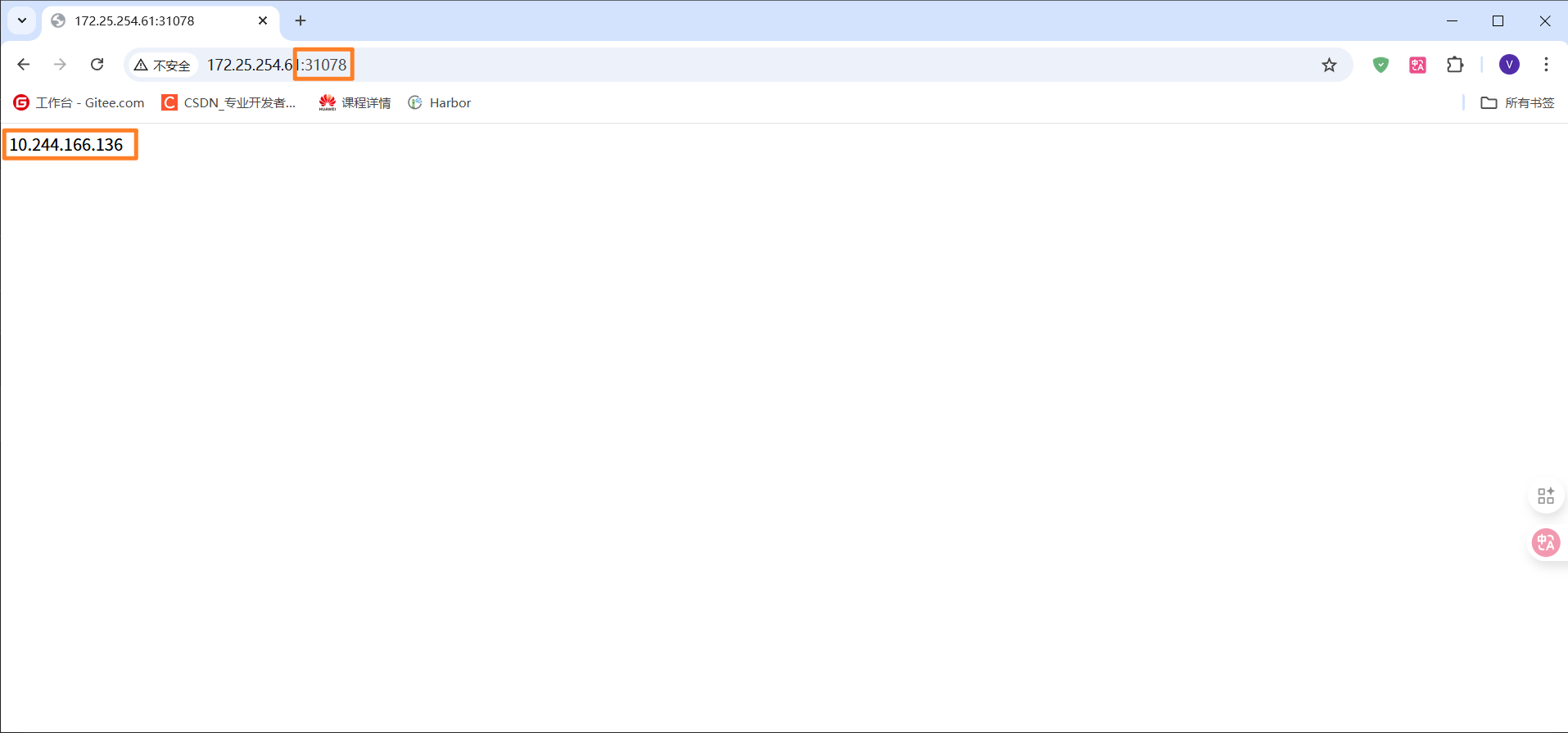

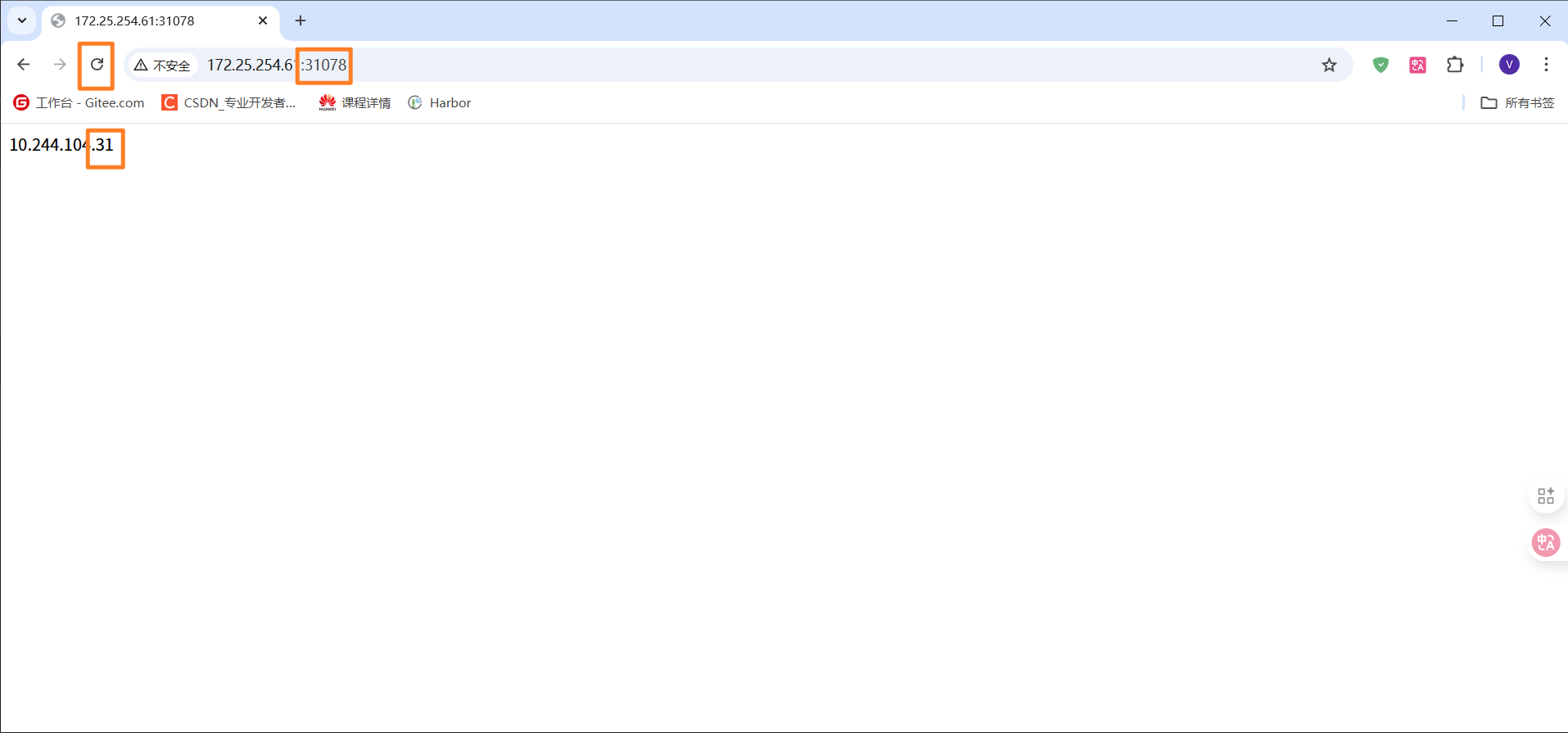

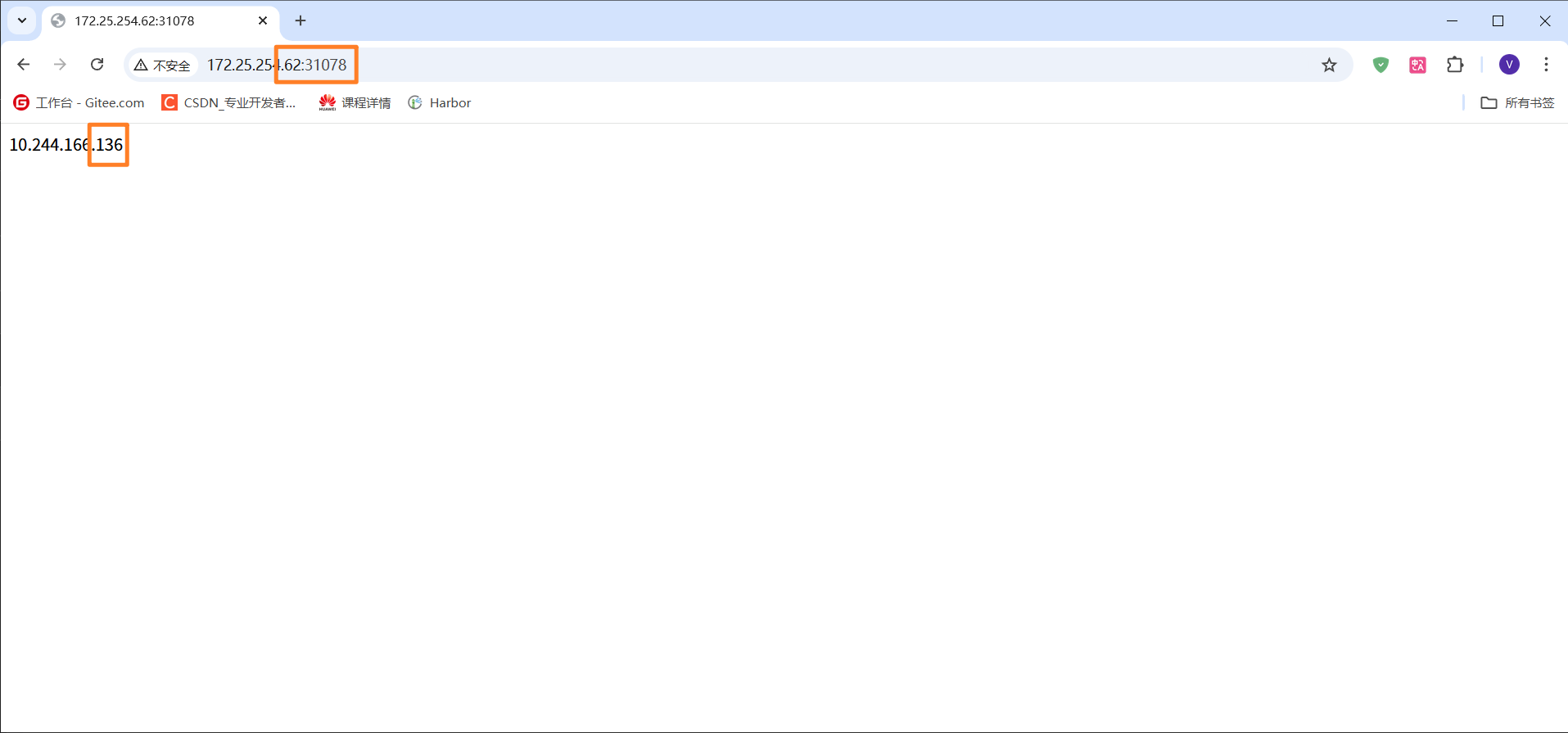

pod/nginx-deploy-8b8c8b5c6-wpwmh 1/1 Running 1 (46h ago) 46h 10.244.166.135 node1 <none> <none>看看负载均衡的效果

进入三个pod,并分别修改nginx的默认发布文件内容

[root@master ~]# kubectl exec -it pod/nginx-deploy-8b8c8b5c6-2g4gq -n dev -- bash

root@nginx-deploy-8b8c8b5c6-2g4gq:/# ip a

bash: ip: command not found

root@nginx-deploy-8b8c8b5c6-2g4gq:/# hostname -I

10.244.166.136

root@nginx-deploy-8b8c8b5c6-2g4gq:/# cd /usr/share/nginx/html/

root@nginx-deploy-8b8c8b5c6-2g4gq:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-deploy-8b8c8b5c6-2g4gq:/usr/share/nginx/html# cp index.html index.html.bak

root@nginx-deploy-8b8c8b5c6-2g4gq:/usr/share/nginx/html# echo 10.244.166.136 > index.html

root@nginx-deploy-8b8c8b5c6-2g4gq:/usr/share/nginx/html# exit

exit

[root@master ~]# kubectl exec -it pod/nginx-deploy-8b8c8b5c6-gxlvp -n dev -- bash

root@nginx-deploy-8b8c8b5c6-gxlvp:/# hostname -I

10.244.104.31

root@nginx-deploy-8b8c8b5c6-gxlvp:/# cp /usr/share/nginx/html/index.html /usr/share/nginx/html/index.html.bak

root@nginx-deploy-8b8c8b5c6-gxlvp:/# echo 10.244.104.31 > /usr/share/nginx/html/index.html

root@nginx-deploy-8b8c8b5c6-gxlvp:/# exit

exit

[root@master ~]# kubectl exec -it pod/nginx-deploy-8b8c8b5c6-wpwmh -n dev -- bash

root@nginx-deploy-8b8c8b5c6-wpwmh:/# hostname -I

10.244.166.135

root@nginx-deploy-8b8c8b5c6-wpwmh:/# cp /usr/share/nginx/html/index.html /usr/share/nginx/html/index.html.bak

root@nginx-deploy-8b8c8b5c6-wpwmh:/# echo 10.244.166.135 > /usr/share/nginx/html/index.html

root@nginx-deploy-8b8c8b5c6-wpwmh:/# exit

exit

[root@master ~]# kubectl get svc -o wide -n dev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc-ng1 ClusterIP 10.98.92.161 <none> 80/TCP 46h app=nginx

[root@master ~]# curl 10.98.92.161

10.244.166.136

[root@master ~]# curl 10.98.92.161

10.244.166.135

[root@master ~]# curl 10.98.92.161

10.244.104.31

[root@master ~]# curl 10.98.92.161

10.244.166.136

[root@master ~]# curl 10.98.92.161

10.244.166.135

[root@master ~]# curl 10.98.92.161

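10.244.104.31

The responses rotate across the three Pods, so load balancing is working.

However, this Service still cannot be reached from outside the cluster.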
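The strict rotation in the curl output matches a round-robin scheduler, which is what the rr entries in the ipvsadm listing later in this article indicate. The sketch below only illustrates that selection rule and is not kube-proxy's implementation: pick_backend is a hypothetical helper that maps the Nth request to a backend by taking N modulo the number of backends. With kube-proxy in iptables mode the choice is random instead, so the order would not repeat this neatly.

```shell
#!/bin/sh
# Hypothetical helper: choose the backend for request number N (starting at 0)
# under plain round-robin. Usage: pick_backend N BACKEND...
pick_backend() {
  n=$1
  shift
  # After the shift, $# is the number of backends; refuse an empty list.
  [ "$#" -gt 0 ] || return 1
  target=$(( n % $# ))
  i=0
  for backend in "$@"; do
    if [ "$i" -eq "$target" ]; then
      printf '%s\n' "$backend"
      return 0
    fi
    i=$(( i + 1 ))
  done
}

# Six requests against the three Pod IPs from this article.
for n in 0 1 2 3 4 5; do
  pick_backend "$n" 10.244.166.136 10.244.166.135 10.244.104.31
done
```

The loop prints the three addresses in order and then starts over, which is the same pattern the six curl calls produced.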

Step 2: Create a Service that is also reachable from outside the cluster

[root@master ~]# kubectl expose deployment nginx-deploy --name=svc-ng2 --type=NodePort --port=80 --target-port 80 -n dev

service/svc-ng2 exposed

[root@master ~]# kubectl get svc -n dev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-ng1 ClusterIP 10.98.92.161 <none> 80/TCP 46h

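svc-ng2 NodePort 10.103.210.164 <none> 80:31078/TCP 9s

The Service can now be reached at any node's IP plus the assigned NodePort (31078 here). Requests are still load-balanced, and clients outside the cluster can reach the service through the same node IP and NodePort.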
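The 31078 in 80:31078/TCP was picked automatically. As a small sketch, assuming the API server keeps its default --service-node-port-range of 30000-32767 (a cluster may be configured differently), the hypothetical helper is_valid_nodeport below checks whether a port you plan to set by hand falls inside that range.

```shell
#!/bin/sh
# Hypothetical check: is a port inside the default NodePort range?
# Assumes the default --service-node-port-range of 30000-32767.
is_valid_nodeport() {
  port=$1
  case $port in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

if is_valid_nodeport 31078; then
  echo "31078 is inside the default NodePort range"
fi
```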

Configuring with YAML

Templates

[root@master ~]# kubectl create service nodeport svc1 --tcp 80:80 --dry-run=client -o yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: svc1
  name: svc1
spec:
  ports:
  - name: 80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: svc1
  type: NodePort
status:
  loadBalancer: {}

[root@master ~]# kubectl create deployment deploy1 --image nginx:1.23 --replicas 2 --port 80 -n dev --dry-run=client -o yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: deploy1
  name: deploy1
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: deploy1
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: deploy1
    spec:
      containers:
      - image: nginx:1.23
        name: nginx
        ports:
        - containerPort: 80
        resources: {}
status: {}

apiVersion: v1
kind: Service
metadata:
  name: svc-ng2      # Service name
  namespace: dev     # namespace; defaults to "default" if omitted
spec:
  clusterIP: 10.103.210.164   # optional; assigned automatically if omitted
  ports:
  - nodePort: 31078  # optional; assigned automatically if omitted
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: NodePort

[root@master ~]# kubectl get svc svc-ng2 -n dev -o yaml

apiVersion: v1

kind: Service

metadata:

creationTimestamp: "2025-12-08T12:22:43Z"

name: svc-ng2

namespace: dev

resourceVersion: "51591"

uid: 511f75ca-1f03-4b21-833d-e6c78507e347

spec:

clusterIP: 10.103.210.164

clusterIPs:

- 10.103.210.164

externalTrafficPolicy: Cluster

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- nodePort: 31078

port: 80

protocol: TCP

targetPort: 80

selector:

app: nginx

sessionAffinity: None

type: NodePort

status:

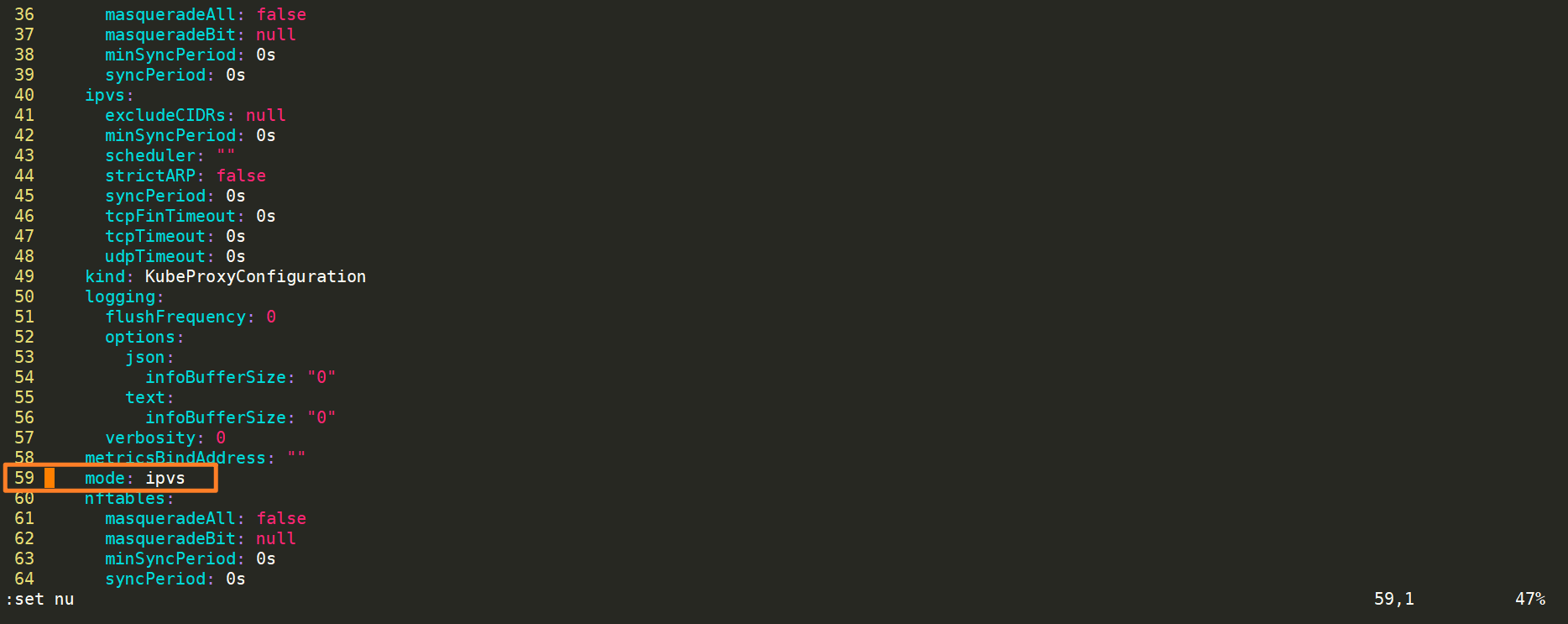

loadBalancer: {}k8s开启ipvs

[root@master ~]# kubectl edit configmaps kube-proxy -n kube-system

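mode: "ipvs" # change the mode to ipvs

Check whether the change took effect (kube-proxy reads this ConfigMap at startup, so its Pods must be recreated before the new mode applies):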

[root@master ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.0.1:31078 rr

-> 10.244.104.31:80 Masq 1 0 0

-> 10.244.166.135:80 Masq 1 0 0

-> 10.244.166.136:80 Masq 1 0 0

TCP 172.25.254.61:31078 rr

-> 10.244.104.31:80 Masq 1 0 0

-> 10.244.166.135:80 Masq 1 0 0

-> 10.244.166.136:80 Masq 1 0 0

TCP 10.96.0.1:443 rr

-> 172.25.254.61:6443 Masq 1 3 0

TCP 10.96.0.10:53 rr

-> 10.244.235.205:53 Masq 1 0 0

-> 10.244.235.206:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.235.205:9153 Masq 1 0 0

-> 10.244.235.206:9153 Masq 1 0 0

TCP 10.98.92.161:80 rr

-> 10.244.104.31:80 Masq 1 0 0

-> 10.244.166.135:80 Masq 1 0 0

-> 10.244.166.136:80 Masq 1 0 0

TCP 10.103.210.164:80 rr

-> 10.244.104.31:80 Masq 1 0 0

-> 10.244.166.135:80 Masq 1 0 0

-> 10.244.166.136:80 Masq 1 0 0

TCP 10.104.48.199:5473 rr

-> 172.25.254.62:5473 Masq 1 0 0

TCP 10.244.235.192:31078 rr

-> 10.244.104.31:80 Masq 1 0 0

-> 10.244.166.135:80 Masq 1 0 0

-> 10.244.166.136:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.235.205:53 Masq 1 0 0

-> 10.244.235.206:53 Masq 1 0 0

[root@master ~]# lsmod | grep ip_vs

ip_vs_ftp 12288 0

nf_nat 65536 3 nft_chain_nat,xt_MASQUERADE,ip_vs_ftp

ip_vs_sed 12288 0

ip_vs_nq 12288 0

ip_vs_fo 12288 0

ip_vs_sh 12288 0

ip_vs_dh 12288 0

ip_vs_lblcr 12288 0

ip_vs_lblc 12288 0

ip_vs_wrr 12288 0

ip_vs_rr 12288 10

ip_vs_wlc 12288 0

ip_vs_lc 12288 0

ip_vs 237568 38 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 229376 5 xt_conntrack,nf_nat,nf_conntrack_netlink,xt_MASQUERADE,ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

libcrc32c 12288 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

Pod structure

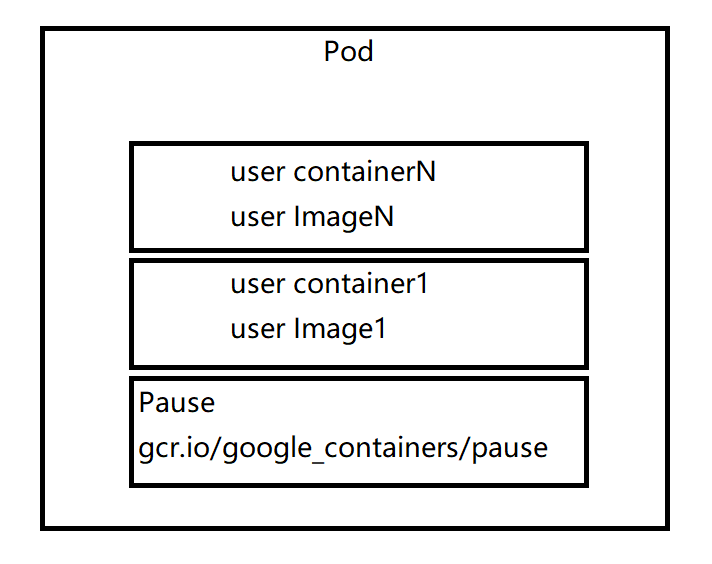

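Each Pod can contain one or more containers, which fall into two categories:

- Containers that run the user's programs; there can be any number of these.

- The Pause container, a root container that every Pod has. It serves two purposes:

  - It is the reference point for assessing the health of the whole Pod.

  - The IP address is set on the root container and shared by the other containers (this is the Pod IP), which enables network communication inside the Pod.

That covers communication inside a Pod. Communication between Pods is provided by a virtual network implemented by the network plugin; the current environment uses Calico.

How Pods are run

- Standalone Pod (not recommended):

  - Created directly with kubectl run. If the Pod is deleted by accident, it is gone for good.

- Controller-managed Pod (recommended):

  - Common controllers that manage Pods: ReplicaSet, Deployment, Job, CronJob, DaemonSet, StatefulSet. A controller keeps the Pods it manages running at the specified replica count, for example when Pods are managed by a Deployment.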

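Pod definition

Almost all resources in Kubernetes share the same top-level attributes, which come in five parts:

- apiVersion <String>: the version, defined internally by Kubernetes; it must be one of the versions listed by kubectl api-versions

- kind

- metadata

- spec

- status

Of these, spec is the focus of what follows. Its common sub-attributes are:

- containers

- nodeName

- nodeSelector

- hostNetwork

- volumes

- restartPolicy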

[root@master dev]# vim pod-base.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-base
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
  - name: busybox
    image: reg.harbor.org/library/busybox:latest

[root@master dev]# kubectl apply -f pod-base.yml

pod/pod-base created

[root@master dev]# kubectl get pod -n dev

NAME READY STATUS RESTARTS AGE

pod-base 1/2 NotReady 2 (17s ago) 18s

[root@master dev]# kubectl describe pod pod-base -n dev

Name: pod-base

Namespace: dev

Priority: 0

Service Account: default

Node: node2/172.25.254.63

Start Time: Mon, 08 Dec 2025 21:09:43 +0800

Labels: app=nginx

Annotations: cni.projectcalico.org/containerID: 9d0f1f9a8a7a4ac70552bf1d02f07ba9b33a21807f02aef821304bb77a70e65b

cni.projectcalico.org/podIP: 10.244.104.34/32

cni.projectcalico.org/podIPs: 10.244.104.34/32

Status: Running

IP: 10.244.104.34

IPs:

IP: 10.244.104.34

Containers:

nginx:

Container ID: docker://b01f3cb0adbfdde181cbc472e5ad702d524742d4b75b98539cf0f4ee700bc596

Image: nginx:1.23

Image ID: docker-pullable://nginx@sha256:f5747a42e3adcb3168049d63278d7251d91185bb5111d2563d58729a5c9179b0

Port: <none>

Host Port: <none>

State: Running

Started: Mon, 08 Dec 2025 21:09:43 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2jkc6 (ro)

busybox:

Container ID: docker://97dfa7a3ed256ccd86d9d31971c5e98d680bc090ee85debe29dc5de031a9ed41

Image: reg.harbor.org/library/busybox:latest

Image ID: docker-pullable://reg.harbor.org/library/busybox@sha256:40680ace50cfe34f2180f482e3e8ee0dc8f87bb9b752da3a3a0dcc4616e78933

Port: <none>

Host Port: <none>

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 08 Dec 2025 21:09:59 +0800

Finished: Mon, 08 Dec 2025 21:09:59 +0800

Ready: False

Restart Count: 2

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2jkc6 (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-2jkc6:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 36s default-scheduler Successfully assigned dev/pod-base to node2

Normal Pulled 36s kubelet Container image "nginx:1.23" already present on machine

Normal Created 36s kubelet Created container: nginx

Normal Started 36s kubelet Started container nginx

Normal Pulled 36s kubelet Successfully pulled image "reg.harbor.org/library/busybox:latest" in 65ms (65ms including waiting). Image size: 4496132 bytes.

Normal Pulling 20s (x3 over 36s) kubelet Pulling image "reg.harbor.org/library/busybox:latest"

Normal Created 20s (x3 over 36s) kubelet Created container: busybox

Normal Started 20s (x3 over 36s) kubelet Started container busybox

Normal Pulled 20s (x2 over 35s) kubelet Successfully pulled image "reg.harbor.org/library/busybox:latest" in 39ms (39ms including waiting). Image size: 4496132 bytes.

Warning BackOff 7s (x4 over 34s) kubelet Back-off restarting failed container busybox in pod pod-base_dev(2d1d40da-5e76-4f0a-94b9-87151388c8f3)

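Image pull policy

imagePullPolicy supports three policies:

- Always: always pull the image from the remote registry

- IfNotPresent: use the local image if there is one; otherwise pull from the remote registry

- Never: use only the local image and never pull from the remote registry; fail if the image is not present locally

Default values:

If the image tag is a specific version, the default policy is IfNotPresent.

If the image tag is latest, the default policy is Always.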
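The two default rules above can be written as a small function. This is a sketch of the documented defaulting behaviour, not code taken from Kubernetes, and default_pull_policy is a hypothetical name. It also covers two cases the list does not mention: an image with no tag is treated as latest, and an image referenced by digest defaults to IfNotPresent.

```shell
#!/bin/sh
# Hypothetical helper: print the imagePullPolicy Kubernetes applies
# when the field is omitted, based only on the image reference.
default_pull_policy() {
  image=$1
  # A digest reference (name@sha256:...) pins exact content.
  case $image in
    *@*) echo IfNotPresent; return 0 ;;
  esac
  # Inspect only the last path component, so a registry port such as
  # localhost:5000/nginx is not mistaken for a tag.
  last=${image##*/}
  case $last in
    *:latest) echo Always ;;
    *:*)      echo IfNotPresent ;;
    *)        echo Always ;;   # no tag means latest is implied
  esac
}

default_pull_policy nginx:1.23
default_pull_policy reg.harbor.org/library/busybox:latest
```

For the two images used in this article the function prints IfNotPresent and then Always, which lines up with the Pulled events shown in the describe output.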

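nginx:1.23 specifies a version, so its default pull policy is IfNotPresent.

That version also happens to exist locally, so it is not pulled again and the local image is used.

busybox:latest specifies the latest tag, so its default pull policy is Always.

Even though the image exists locally, it is still pulled from the remote registry.

Default

Normal Pulled 5m48s kubelet Container image "nginx:1.23" already present on machine

Normal Pulled 5m48s kubelet Successfully pulled image "reg.harbor.org/library/busybox:latest" in 65ms (65ms including waiting). Image size: 4496132 bytes.

Always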

[root@master dev]# kubectl delete -f pod-base.yml

pod "pod-base" deleted

[root@master dev]# vim pod-base.yml

imagePullPolicy: Always

[root@master dev]# kubectl apply -f pod-base.yml

pod/pod-base created

[root@master dev]# kubectl describe pod pod-base -n dev

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3s default-scheduler Successfully assigned dev/pod-base to node2

Normal Pulling 2s kubelet Pulling image "nginx:1.23"

Never

[root@node1 ~]# docker rmi nginx:1.23 -f

Untagged: nginx:1.23

[root@node1 ~]# docker images

i Info → U In Use

IMAGE ID DISK USAGE CONTENT SIZE EXTRA

busybox:latest e3652a00a2fa 6.71MB 2.22MB

calico/cni:v3.28.0 cef0c907b8f4 304MB 94.5MB

calico/cni:v3.28.1 e486870cfde8 304MB 94.6MB U

calico/kube-controllers:v3.28.0 8f04e4772a2b 114MB 35MB

calico/node:v3.28.0 385bf6391fea 472MB 115MB

calico/node:v3.28.1 d8c644a8a3ee 487MB 118MB U

calico/typha:v3.28.1 720c4e50d46e 102MB 31MB U

reg.harbor.org/library/busybox:latest 40680ace50cf 8.82MB 4.5MB

registry.aliyuncs.com/google_containers/kube-proxy:v1.32.10 f6fb081f408c 129MB 31.2MB U

registry.aliyuncs.com/google_containers/pause:3.9 7031c1b28338 1.06MB 319kB U

[root@node2 ~]# docker rmi -f nginx:1.23

Untagged: nginx:1.23

[root@node2 ~]# docker images

i Info → U In Use

IMAGE ID DISK USAGE CONTENT SIZE EXTRA

calico/cni:v3.28.0 cef0c907b8f4 304MB 94.5MB

calico/cni:v3.28.1 e486870cfde8 304MB 94.6MB U

calico/kube-controllers:v3.28.0 8f04e4772a2b 114MB 35MB

calico/kube-controllers:v3.28.1 eadb3a25109a 114MB 35MB

calico/node:v3.28.0 385bf6391fea 472MB 115MB

calico/node:v3.28.1 d8c644a8a3ee 487MB 118MB U

reg.harbor.org/library/busybox:latest 40680ace50cf 8.82MB 4.5MB

reg.harbor.org/library/nginx:1.23 a087ed751769 9.07kB 9.07kB

registry.aliyuncs.com/google_containers/coredns:v1.11.3 6662e5928ea0 84.5MB 18.6MB

registry.aliyuncs.com/google_containers/kube-proxy:v1.32.10 f6fb081f408c 129MB 31.2MB U

registry.aliyuncs.com/google_containers/pause:3.9 7031c1b28338 1.06MB 319kB U

[root@master dev]# kubectl describe pod pod-base -n dev

...

Warning ErrImageNeverPull 1s (x3 over 3s) kubelet Container image "nginx:1.23" is not present with pull policy of Never

Warning Failed 1s (x3 over 3s) kubelet Error: ErrImageNeverPull

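Warning BackOff 1s kubelet Back-off restarting failed container busybox in pod pod-base_dev(a4f940a8-4683-4bb7-87b6-1119a10faaaa)

Startup command

One problem remains: the busybox container never runs successfully. What causes it to fail?

busybox is not a long-running program but a collection of utilities. Once Kubernetes starts the container it has nothing to keep it running, so it exits immediately (the earlier describe output shows Completed with exit code 0) and is then restarted over and over. The fix is to keep it running, which is what the **command** setting is for.

Without a command configured for busybox, the container exits as soon as it starts and the Pod reports an error: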

apiVersion: v1
kind: Pod
metadata:
  name: pod-base
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
  - name: busybox
    image: reg.harbor.org/library/busybox:latest

Error

[root@master dev]# kubectl describe pod pod-base -n dev

Warning BackOff 0s kubelet Back-off restarting failed container busybox in pod pod-base_dev(e8f8881b-2dfd-40c6-896a-0715ff252dbc)

[root@master dev]# vim pod-base.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-base
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
  - name: busybox
    image: reg.harbor.org/library/busybox:latest
    command: ["/bin/sh","-c","sleep 1000"] # newly added: the command the container runs when it starts

A brief explanation of this command:

"/bin/sh","-c" runs the command with sh

sleep 1000 sleeps for 1000 seconds

[root@master dev]# kubectl apply -f pod-base.yml

[root@master dev]# kubectl get pod pod-base -n dev

NAME READY STATUS RESTARTS AGE

pod-base 2/2 Running 0 47s

[root@master dev]# kubectl describe pod pod-base -n dev

Normal Pulling 13s kubelet Pulling image "reg.harbor.org/library/busybox:latest"

Normal Pulled 13s kubelet Successfully pulled image "reg.harbor.org/library/busybox:latest" in 40ms (40ms including waiting). Image size: 4496132 bytes.

Normal Created 13s kubelet Created container: busybox

Normal Started 13s kubelet Started container busybox

Viewing logs from outside the container

[root@master dev]# kubectl get pods -n dev -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-base 2/2 Running 0 11m 10.244.104.38 node2 <none> <none>

[root@master dev]# curl 10.244.104.38

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@master dev]# curl 10.244.104.38 # run five more times; each call returns the same welcome page as above

[root@master dev]# kubectl logs -f pod-base -c nginx -n dev

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2025/12/08 13:36:08 [notice] 1#1: using the "epoll" event method

2025/12/08 13:36:08 [notice] 1#1: nginx/1.23.4

2025/12/08 13:36:08 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2025/12/08 13:36:08 [notice] 1#1: OS: Linux 5.14.0-570.17.1.el9_6.x86_64

2025/12/08 13:36:08 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1024:524288

2025/12/08 13:36:08 [notice] 1#1: start worker processes

2025/12/08 13:36:08 [notice] 1#1: start worker process 29

2025/12/08 13:36:08 [notice] 1#1: start worker process 30

2025/12/08 13:36:08 [notice] 1#1: start worker process 31

2025/12/08 13:36:08 [notice] 1#1: start worker process 32

10.244.235.192 - - [08/Dec/2025:13:47:48 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

10.244.235.192 - - [08/Dec/2025:13:47:49 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

10.244.235.192 - - [08/Dec/2025:13:47:50 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

10.244.235.192 - - [08/Dec/2025:13:47:50 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

10.244.235.192 - - [08/Dec/2025:13:47:50 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

10.244.235.192 - - [08/Dec/2025:13:47:51 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/7.76.1" "-"

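Note:

As shown above, command can already set the startup command and pass arguments, so why is there a separate args option for passing arguments? This has to do with Docker: command and args in Kubernetes override the ENTRYPOINT and CMD defined in the Dockerfile, respectively.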
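The four cases listed next can be summarised in one function. This is a sketch under simplifying assumptions: effective_command is a hypothetical helper, every value is passed as a single string rather than a YAML list, and an empty string stands for "not set". It is meant to show which side wins in each case, not how the container runtime builds the process.

```shell
#!/bin/sh
# Hypothetical helper: print the command line a container ends up running.
# Usage: effective_command IMAGE_ENTRYPOINT IMAGE_CMD K8S_COMMAND K8S_ARGS
effective_command() {
  entrypoint=$1
  cmd=$2
  k8s_command=$3
  k8s_args=$4
  if [ -n "$k8s_command" ]; then
    # command replaces ENTRYPOINT, and the image CMD is dropped as well;
    # args (if any) are appended.
    result="$k8s_command${k8s_args:+ $k8s_args}"
  elif [ -n "$k8s_args" ]; then
    # Only args set: keep the image ENTRYPOINT, replace the image CMD.
    result="$entrypoint${entrypoint:+ }$k8s_args"
  else
    # Nothing set: the image defaults apply unchanged.
    result="$entrypoint${entrypoint:+ }$cmd"
  fi
  printf '%s\n' "$result"
}

# The busybox container from this article. The image defaults used here
# (no ENTRYPOINT, CMD "sh") are an assumption about the busybox image.
effective_command "" "sh" '/bin/sh -c "sleep 1000"' ""
```

With the Pod's command in place, the image's own default is no longer used, which is why the container stays up for the duration of the sleep.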

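1. If neither command nor args is set, the Dockerfile configuration is used.

2. If command is set but args is not, the Dockerfile defaults are ignored and the given command is executed.

3. If command is not set but args is, the ENTRYPOINT configured in the Dockerfile is executed with the given args.

4. If both command and args are set, the Dockerfile configuration is ignored and command is executed with args appended.

Environment variables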

[root@master dev]# vim pod-env.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-env
  namespace: dev
  labels:
    app: busybox
spec:
  containers:
  - name: busybox
    image: reg.harbor.org/library/busybox:latest
    command: ["/bin/sh","-c","sleep 1000"]
    env:
    - name: "user"
      value: "tom"
    - name: "password"
      value: "123"

[root@master dev]# kubectl exec -it -n dev pod-env -c busybox -- /bin/sh

/ # echo $user

tom

/ # echo $password

123

/ # exit

Port settings

First, look at the sub-options that ports supports:

[root@master dev]# kubectl explain pod.spec.containers.ports

KIND: Pod

VERSION: v1

FIELD: ports <[]ContainerPort>

FIELDS:

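containerPort <integer> # port the container listens on (0 < x < 65536); required

hostIP <string> # host IP to bind the external port to (usually omitted)

hostPort <integer> # port to expose on the host; if set, only one replica of the container can run on a given host

name <string> # port name; if specified, it must be unique within the Pod

protocol <string> # port protocol; must be UDP, TCP, or SCTP; defaults to TCP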

[root@master dev]# vim pod-port.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-port
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
    ports:
    - containerPort: 80

[root@master dev]# kubectl get pod pod-port -n dev -o yaml

apiVersion: v1

kind: Pod

metadata:

annotations:

cni.projectcalico.org/containerID: d1780b9799b84d1abff67aa6cff7f538b83823cc23dfc98fcd7dcb1bcc91c057

cni.projectcalico.org/podIP: 10.244.166.143/32

cni.projectcalico.org/podIPs: 10.244.166.143/32

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"pod-port","namespace":"dev"},"spec":{"containers":[{"image":"nginx:1.23","name":"nginx","ports":[{"containerPort":80}]}]}}

creationTimestamp: "2025-12-09T08:44:46Z"

labels:

app: nginx

name: pod-port

namespace: dev

resourceVersion: "67538"

uid: c8356ce0-148a-4070-9329-0196d4f35c10

spec:

containers:

- image: nginx:1.23

imagePullPolicy: IfNotPresent

name: nginx

ports:

- containerPort: 80

protocol: TCP

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: kube-api-access-569x9

readOnly: true

dnsPolicy: ClusterFirst

enableServiceLinks: true

nodeName: node1

preemptionPolicy: PreemptLowerPriority

priority: 0

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: default

serviceAccountName: default

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoExecute

key: node.kubernetes.io/not-ready

operator: Exists

tolerationSeconds: 300

- effect: NoExecute

key: node.kubernetes.io/unreachable

operator: Exists

tolerationSeconds: 300

volumes:

- name: kube-api-access-569x9

projected:

defaultMode: 420

sources:

- serviceAccountToken:

expirationSeconds: 3607

path: token

- configMap:

items:

- key: ca.crt

path: ca.crt

name: kube-root-ca.crt

- downwardAPI:

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

path: namespace

status:

conditions:

- lastProbeTime: null

lastTransitionTime: "2025-12-09T08:44:48Z"

status: "True"

type: PodReadyToStartContainers

- lastProbeTime: null

lastTransitionTime: "2025-12-09T08:44:46Z"

status: "True"

type: Initialized

- lastProbeTime: null

lastTransitionTime: "2025-12-09T08:44:48Z"

status: "True"

type: Ready

- lastProbeTime: null

lastTransitionTime: "2025-12-09T08:44:48Z"

status: "True"

type: ContainersReady

- lastProbeTime: null

lastTransitionTime: "2025-12-09T08:44:46Z"

status: "True"

type: PodScheduled

containerStatuses:

- containerID: docker://d97289931aefae17b135ce17610fd3795d632f091ad7f130ce5680e1218c785e

image: nginx:1.23

imageID: docker-pullable://nginx@sha256:f5747a42e3adcb3168049d63278d7251d91185bb5111d2563d58729a5c9179b0

lastState: {}

name: nginx

ready: true

restartCount: 0

started: true

state:

running:

startedAt: "2025-12-09T08:44:47Z"

volumeMounts:

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: kube-api-access-569x9

readOnly: true

recursiveReadOnly: Disabled

hostIP: 172.25.254.62

hostIPs:

- ip: 172.25.254.62

phase: Running

podIP: 10.244.166.143

podIPs:

- ip: 10.244.166.143

qosClass: BestEffort

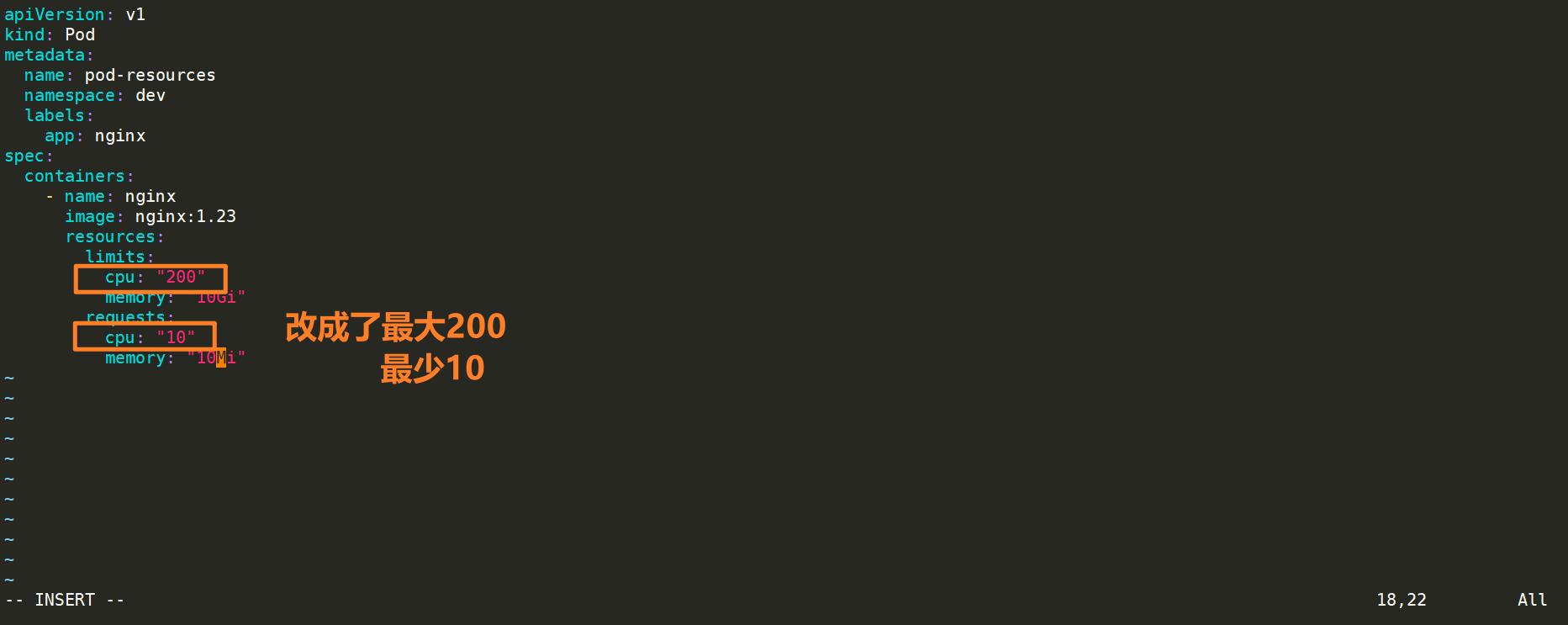

startTime: "2025-12-09T08:44:46Z"资源配额

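Resource settings determine a Pod's QoS class, which sets its priority when resources run short: Guaranteed > Burstable > BestEffort

QoS stands for Quality of Service.

| Resource settings | QoS class |
|---|---|
| No requests or limits set | BestEffort |
| Requests and limits set, with limits different from requests | Burstable |
| Requests and limits set, with limits equal to requests | Guaranteed |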
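The table can be expressed as a small classifier. This sketch handles a single container and compares quantities as plain strings, so 1 and 1000m would count as different; qos_class is a hypothetical helper, not a kubectl feature. It also reflects two details the table leaves out: Guaranteed requires both CPU and memory to be set, and when only a limit is given Kubernetes sets the request to the same value.

```shell
#!/bin/sh
# Hypothetical helper: classify one container's resource settings.
# Usage: qos_class CPU_REQUEST CPU_LIMIT MEM_REQUEST MEM_LIMIT
# An empty string means the field is not set.
qos_class() {
  cpu_req=$1
  cpu_lim=$2
  mem_req=$3
  mem_lim=$4
  if [ -z "$cpu_req$cpu_lim$mem_req$mem_lim" ]; then
    echo BestEffort
    return 0
  fi
  # An omitted request defaults to the corresponding limit.
  : "${cpu_req:=$cpu_lim}"
  : "${mem_req:=$mem_lim}"
  if [ -n "$cpu_lim" ] && [ -n "$mem_lim" ] &&
     [ "$cpu_req" = "$cpu_lim" ] && [ "$mem_req" = "$mem_lim" ]; then
    echo Guaranteed
  else
    echo Burstable
  fi
}

# Values from the pod-resources.yml manifest in this article.
qos_class 1 2 10Mi 10Gi
```

Because the manifest's limits are higher than its requests, the Pod falls into the Burstable class.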

[root@master dev]# vim pod-resources.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-resources
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
    resources:
      limits:
        cpu: "2"
        memory: "10Gi"
      requests:
        cpu: "1"
        memory: "10Mi"

[root@master dev]# kubectl apply -f pod-resources.yml

pod/pod-resources created

[root@master dev]# kubectl top pod -n dev

NAME CPU(cores) MEMORY(bytes)

pod-resources 0m 4Mi

[root@master dev]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

pod-resources 1/1 Running 0 5m30s

[root@master dev]# vim pod-resources.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-resources
  namespace: dev
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.23
    resources:
      limits:
        cpu: "200"
        memory: "10Gi"
      requests:
        cpu: "10"
        memory: "10Mi"

[root@master dev]# kubectl apply -f pod-resources.yml

pod/pod-resources created

[root@master dev]# kubectl get pod -n dev

NAME READY STATUS RESTARTS AGE

pod-resources 0/1 Pending 0 10s

[root@master dev]# kubectl describe pod -n dev

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 23s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.

# In this message:

1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 Insufficient cpu.

The master node cannot be scheduled onto: master/control-plane nodes carry the control-plane taint, which by default keeps ordinary Pods off them (so the control plane's resources are not consumed).

The other two nodes do not have enough CPU. The request is a minimum of 10 CPUs, which neither node can satisfy, so the Pod stays Pending and never starts.
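The scheduler compares the Pod's CPU request (not its limit) with what each node can still allocate. The sketch below reproduces that comparison in millicores. cpu_to_milli and fits_on_node are hypothetical helpers, and the 4-CPU node is an assumed figure, since this article does not state how many CPUs node1 and node2 have. The real numbers appear in the Allocatable section of kubectl describe node.

```shell
#!/bin/sh
# Hypothetical helper: convert a CPU quantity to millicores.
# Supports whole CPUs ("2") and the m suffix ("500m") only.
cpu_to_milli() {
  case $1 in
    *m) echo "${1%m}" ;;
    *)  echo $(( $1 * 1000 )) ;;
  esac
}

# Hypothetical helper: succeed if the request fits on the node.
# Usage: fits_on_node REQUEST ALLOCATABLE ALREADY_REQUESTED
fits_on_node() {
  req=$(cpu_to_milli "$1")
  alloc=$(cpu_to_milli "$2")
  used=$(cpu_to_milli "$3")
  [ $(( used + req )) -le "$alloc" ]
}

# Assumed node size of 4 CPUs with nothing requested yet.
if fits_on_node 10 4 0; then
  echo "a request of 10 CPUs fits"
else
  echo "Insufficient cpu: a request of 10 CPUs does not fit on a 4-CPU node"
fi
```

Restoring the request to 1 CPU makes the same check pass, which corresponds to the Pod being scheduled once the manifest is changed back.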

[root@master dev]# kubectl delete -f pod-resources.yml

pod "pod-resources" deleted

[root@master dev]# vim pod-resources.yml

# restore the original values

[root@master dev]# kubectl apply -f pod-resources.yml

pod/pod-resources created

[root@master dev]# kubectl get pod pod-resources -n dev

NAME READY STATUS RESTARTS AGE

pod-resources 1/1 Running 0 18s

[root@master dev]# kubectl describe pod -n dev

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 29s default-scheduler Successfully assigned dev/pod-resources to node1

Normal Pulled 29s kubelet Container image "nginx:1.23" already present on machine

Normal Created 29s kubelet Created container: nginx

Normal Started 29s kubelet Started container nginx

# The Pod now starts successfully.
