Preface

This article focuses on the KeyValueFileStoreWrite class, which is involved in many steps of the compaction flow.

一.KeyValueFileStoreWrite类

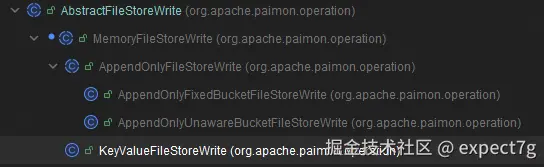

该类的继承路线如下

1.代码解析

(0) 核心参数

```java
// Reader/writer factory builders; the concrete factories are built per partition and bucket
private final KeyValueFileReaderFactory.Builder readerFactoryBuilder;
private final KeyValueFileWriterFactory.Builder writerFactoryBuilder;

// Three key comparator suppliers
private final Supplier<Comparator<InternalRow>> keyComparatorSupplier; // primary-key comparator
private final Supplier<FieldsComparator> udsComparatorSupplier; // user-defined sequence comparator, e.g. fields marked via sequence-group / sequence.field
private final Supplier<RecordEqualiser> logDedupEqualSupplier; // record equaliser used for log (changelog) deduplication

// Merge function factory: creates the MergeFunction implementation matching the configured merge-engine
private final MergeFunctionFactory<KeyValue> mfFactory;

private final CoreOptions options; // CoreOptions configuration
private final FileIO fileIO; // file I/O
private final RowType keyType; // RowType of the key
private final RowType valueType; // RowType of the value
private final RowType partitionType; // RowType of the partition
private final String commitUser;
@Nullable private final RecordLevelExpire recordLevelExpire; // record-level expiration
@Nullable private Cache<String, LookupFile> lookupFileCache; // lookup file cache
```

(1) bufferSpillable() -- whether the write buffer may spill to disk
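The fallback rule for 'write-buffer-spillable' that this method relies on can be sketched in isolation. This is a minimal illustration, assuming the option is modeled as an `Optional<Boolean>`; `SpillableDefaultSketch` and `resolveSpillable` are hypothetical names, not Paimon's API.

```java
import java.util.Optional;

/** Hypothetical stand-in for the option lookup; not Paimon's CoreOptions. */
final class SpillableDefaultSketch {

    /**
     * Resolves the effective value of 'write-buffer-spillable'.
     * An explicit setting wins; otherwise spilling is enabled when writing to an
     * object store or when the job is not in streaming mode.
     */
    static boolean resolveSpillable(
            Optional<Boolean> configured, boolean usingObjectStore, boolean isStreamingMode) {
        return configured.orElse(usingObjectStore || !isStreamingMode);
    }

    public static void main(String[] args) {
        // Unset option, local file system, streaming job
        System.out.println(resolveSpillable(Optional.empty(), false, true));
        // Unset option, object store, streaming job
        System.out.println(resolveSpillable(Optional.empty(), true, true));
        // Explicitly disabled, batch job
        System.out.println(resolveSpillable(Optional.of(false), false, false));
    }
}
```

An explicit setting always takes precedence, so the two boolean arguments only matter when the user has not configured the option.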

```java
@VisibleForTesting
public boolean bufferSpillable() {
    // Bound to 'write-buffer-spillable'; if unset, defaults to (fileIO.isObjectStore() || !isStreamingMode)
    return options.writeBufferSpillable(fileIO.isObjectStore(), isStreamingMode);
}
```

(2) createWriter() -- core

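The core flow for creating a writer is as follows:

- Create the `KeyValueFileWriterFactory` object (writerFactory)
- Initialize the LSM tree level structure from the primary-key comparator, the restored files, and the num-levels parameter, producing the `Levels` object (levels)
- Create the universal compaction strategy `UniversalCompaction`; its pick() later decides which files are compacted (core)

  Note: the universal compaction strategy is governed by the following four parameters; for details see Paimon Source Code Walkthrough -- Compaction-CompactStrategy

  - 'compaction.max-size-amplification-percent', default 200
  - 'compaction.size-ratio', default 1
  - 'num-sorted-run.compaction-trigger', default 5
  - 'compaction.optimization-interval', no default
- Choose the compaction strategy

  If needLookup() = true, use ForceUpLevel0Compaction, which forces L0 files to be compacted; otherwise use UniversalCompaction.

  The rule: needLookup() returns true if any one of the following holds

  - merge-engine = first-row
  - changelog-producer = lookup
  - deletion-vectors.enabled = true
  - force-lookup = true
- Call createCompactManager() to create the matching CompactManager implementation
- Create the `MergeTreeWriter` from everything built above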
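The strategy choice in the flow above boils down to one boolean rule. The following is a minimal sketch of that rule, assuming the four options are passed in as plain strings and booleans; `StrategyChoiceSketch`, its enum, and its method names are hypothetical and only mirror the conditions listed above, they are not Paimon classes.

```java
/** Hypothetical illustration of the needLookup() rule; not Paimon's CoreOptions. */
final class StrategyChoiceSketch {

    enum Strategy { FORCE_UP_LEVEL0, UNIVERSAL }

    /** True if any one of the four lookup-related settings is active. */
    static boolean needLookup(
            String mergeEngine,
            String changelogProducer,
            boolean deletionVectorsEnabled,
            boolean forceLookup) {
        return "first-row".equals(mergeEngine)
                || "lookup".equals(changelogProducer)
                || deletionVectorsEnabled
                || forceLookup;
    }

    /** Lookup tables force L0 files upward; everything else uses plain universal compaction. */
    static Strategy choose(
            String mergeEngine,
            String changelogProducer,
            boolean deletionVectorsEnabled,
            boolean forceLookup) {
        return needLookup(mergeEngine, changelogProducer, deletionVectorsEnabled, forceLookup)
                ? Strategy.FORCE_UP_LEVEL0
                : Strategy.UNIVERSAL;
    }

    public static void main(String[] args) {
        System.out.println(choose("deduplicate", "none", false, false));
        System.out.println(choose("deduplicate", "lookup", false, false));
        System.out.println(choose("first-row", "none", false, false));
    }
}
```

Note that in the real code ForceUpLevel0Compaction wraps the UniversalCompaction instance rather than replacing it, so the four universal-compaction parameters still apply in the lookup case.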

```java
// Creates the writer
@Override
protected MergeTreeWriter createWriter(
        @Nullable Long snapshotId,
        BinaryRow partition,
        int bucket,
        List<DataFileMeta> restoreFiles,
        long restoredMaxSeqNumber,
        @Nullable CommitIncrement restoreIncrement,
        ExecutorService compactExecutor,
        @Nullable DeletionVectorsMaintainer dvMaintainer) {
    if (LOG.isDebugEnabled()) {
        LOG.debug(
                "Creating merge tree writer for partition {} bucket {} from restored files {}",
                partition,
                bucket,
                restoreFiles);
    }

    // Step 1: create the KeyValueFileWriterFactory (writerFactory)
    KeyValueFileWriterFactory writerFactory =
            writerFactoryBuilder.build(partition, bucket, options);

    // Step 2: initialize the LSM tree level structure from the key comparator,
    // the restored files, and num-levels, producing the Levels object (levels)
    Comparator<InternalRow> keyComparator = keyComparatorSupplier.get();
    Levels levels = new Levels(keyComparator, restoreFiles, options.numLevels());

    // Step 3: create the universal compaction strategy; its pick() later decides
    // which files are compacted (core)
    UniversalCompaction universalCompaction =
            new UniversalCompaction(
                    options.maxSizeAmplificationPercent(), // 'compaction.max-size-amplification-percent', default 200
                    options.sortedRunSizeRatio(), // 'compaction.size-ratio', default 1
                    options.numSortedRunCompactionTrigger(), // 'num-sorted-run.compaction-trigger', default 5
                    options.optimizedCompactionInterval()); // 'compaction.optimization-interval', no default

    /* Step 4: if needLookup() is true, use ForceUpLevel0Compaction (forces L0 compaction);
       otherwise use UniversalCompaction.
       needLookup() returns true if any one of the following holds:
       1. merge-engine = first-row
       2. changelog-producer = lookup
       3. deletion-vectors.enabled = true
       4. force-lookup = true
    */
    CompactStrategy compactStrategy =
            options.needLookup()
                    ? new ForceUpLevel0Compaction(universalCompaction)
                    : universalCompaction;

    // Step 5: call createCompactManager() to create the matching CompactManager implementation
    CompactManager compactManager =
            createCompactManager(
                    partition, bucket, compactStrategy, compactExecutor, levels, dvMaintainer);

    // Step 6: create the MergeTreeWriter from everything built above
    return new MergeTreeWriter(
            bufferSpillable(),
            options.writeBufferSpillDiskSize(),
            options.localSortMaxNumFileHandles(),
            options.spillCompressOptions(),
            ioManager,
            compactManager,
            restoredMaxSeqNumber,
            keyComparator,
            mfFactory.create(),
            writerFactory,
            options.commitForceCompact(),
            options.changelogProducer(),
            restoreIncrement,
            UserDefinedSeqComparator.create(valueType, options));
}
```

(3) createCompactManager() -- creates the compaction manager (CompactManager)

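CASE-1: write-only = true. Use NoopCompactManager, which performs no compaction at all.

CASE-2: write-only = false. Use MergeTreeCompactManager to manage compaction tasks.

For details on these two CompactManager implementations, see Paimon Source Code Walkthrough -- Compaction-5.CompactManager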

```java
private CompactManager createCompactManager(
        BinaryRow partition,
        int bucket,
        CompactStrategy compactStrategy,
        ExecutorService compactExecutor,
        Levels levels,
        @Nullable DeletionVectorsMaintainer dvMaintainer) {
    if (options.writeOnly()) {
        // CASE-1: write-only = true, use NoopCompactManager, which performs no compaction
        return new NoopCompactManager();
    } else {
        // CASE-2: write-only = false, use MergeTreeCompactManager to manage compaction tasks
        Comparator<InternalRow> keyComparator = keyComparatorSupplier.get();
        @Nullable FieldsComparator userDefinedSeqComparator = udsComparatorSupplier.get();

        // Create the CompactRewriter (the core rewriter)
        CompactRewriter rewriter =
                createRewriter(
                        partition,
                        bucket,
                        keyComparator,
                        userDefinedSeqComparator,
                        levels,
                        dvMaintainer);

        // Create and return the MergeTreeCompactManager
        return new MergeTreeCompactManager(
                compactExecutor,
                levels,
                compactStrategy,
                keyComparator,
                // 70% of target-file-size: the compaction target file size,
                // used as the threshold separating large files from small ones
                options.compactionFileSize(true),
                options.numSortedRunStopTrigger(),
                rewriter,
                compactionMetrics == null
                        ? null
                        : compactionMetrics.createReporter(partition, bucket),
                dvMaintainer,
                options.prepareCommitWaitCompaction());
    }
}
```

(4) createRewriter() -- core entry point for merging and rewriting files

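The flow is as follows:

- Create the reader and writer factories
- Create the merge-sort algorithm class `MergeSorter` (this is the core), along with the other merge-related parameters
- Create the MergeTreeCompactRewriter object, depending on the case:
  - CASE-1: changelog-producer = full-compaction. Create and return `FullChangelogMergeTreeCompactRewriter`
  - CASE-2: needLookup is true (the rule is described under createWriter() above). Choose the internal factories, then create and return `LookupMergeTreeCompactRewriter`
  - CASE-3: the default case. Use `MergeTreeCompactRewriter`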
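The order of the three cases matters: full-compaction is checked before needLookup, and the default rewriter is only reached when neither applies. Here is a minimal sketch of that precedence, assuming the configuration is reduced to one string and three booleans; `RewriterChoiceSketch` and its members are hypothetical names used for illustration only.

```java
/** Hypothetical illustration of the branch order in createRewriter(); not Paimon code. */
final class RewriterChoiceSketch {

    enum Rewriter { FULL_CHANGELOG, LOOKUP, DEFAULT }

    static Rewriter choose(
            String changelogProducer,
            boolean needLookup,
            boolean firstRow,
            boolean deletionVectorsEnabled) {
        // CASE-1 is checked first, so it wins even when needLookup is also true
        if ("full-compaction".equals(changelogProducer)) {
            return Rewriter.FULL_CHANGELOG;
        }
        // CASE-2: lookup rewriter; first-row cannot be combined with deletion vectors
        if (needLookup) {
            if (firstRow && deletionVectorsEnabled) {
                throw new UnsupportedOperationException(
                        "first-row merge engine does not need deletion vectors");
            }
            return Rewriter.LOOKUP;
        }
        // CASE-3: everything else
        return Rewriter.DEFAULT;
    }

    public static void main(String[] args) {
        System.out.println(choose("none", false, false, false));
        System.out.println(choose("lookup", true, false, false));
        System.out.println(choose("full-compaction", true, false, true));
    }
}
```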

```java
// Core code: entry point that builds the rewriter used to merge and rewrite files
private MergeTreeCompactRewriter createRewriter(
        BinaryRow partition,
        int bucket,
        Comparator<InternalRow> keyComparator,
        @Nullable FieldsComparator userDefinedSeqComparator,
        Levels levels,
        @Nullable DeletionVectorsMaintainer dvMaintainer) {
    // Step 1: create the reader and writer factories
    DeletionVector.Factory dvFactory = DeletionVector.factory(dvMaintainer);
    FileReaderFactory<KeyValue> readerFactory =
            readerFactoryBuilder.build(partition, bucket, dvFactory);
    if (recordLevelExpire != null) {
        readerFactory = recordLevelExpire.wrap(readerFactory);
    }
    KeyValueFileWriterFactory writerFactory =
            writerFactoryBuilder.build(partition, bucket, options);

    // Step 2: create the merge-sort algorithm class MergeSorter (this is the core)
    // and the other merge-related parameters
    MergeSorter mergeSorter = new MergeSorter(options, keyType, valueType, ioManager);
    int maxLevel = options.numLevels() - 1;
    MergeEngine mergeEngine = options.mergeEngine();
    ChangelogProducer changelogProducer = options.changelogProducer();
    LookupStrategy lookupStrategy = options.lookupStrategy();

    if (changelogProducer.equals(FULL_COMPACTION)) {
        // CASE-1: changelog-producer = full-compaction,
        // create and return FullChangelogMergeTreeCompactRewriter
        return new FullChangelogMergeTreeCompactRewriter(
                maxLevel,
                mergeEngine,
                readerFactory,
                writerFactory,
                keyComparator,
                userDefinedSeqComparator,
                mfFactory,
                mergeSorter,
                logDedupEqualSupplier.get());
    } else if (lookupStrategy.needLookup) {
        // CASE-2: needLookup is true; choose the internal factories,
        // then create and return LookupMergeTreeCompactRewriter
        /* needLookup() returns true if any one of the following holds:
           1. merge-engine = first-row
           2. changelog-producer = lookup
           3. deletion-vectors.enabled = true
           4. force-lookup = true
        */
        LookupLevels.ValueProcessor<?> processor;
        LookupMergeTreeCompactRewriter.MergeFunctionWrapperFactory<?> wrapperFactory;
        FileReaderFactory<KeyValue> lookupReaderFactory = readerFactory;
        if (lookupStrategy.isFirstRow) {
            // SUB-CASE-1: merge-engine = first-row must not be combined with
            // deletion-vectors.enabled = true
            if (options.deletionVectorsEnabled()) {
                throw new UnsupportedOperationException(
                        "First row merge engine does not need deletion vectors because there is no deletion of old data in this merge engine.");
            }
            // Create a simplified reader (no values are read; only existence is checked)
            lookupReaderFactory =
                    readerFactoryBuilder
                            .copyWithoutProjection()
                            .withReadValueType(RowType.of())
                            .build(partition, bucket, dvFactory);
            processor = new ContainsValueProcessor();
            wrapperFactory = new FirstRowMergeFunctionWrapperFactory(); // adapts the first-row merge logic
        } else {
            // SUB-CASE-2: every other scenario that needs lookup.
            // Depending on whether deletion vectors are used, create a processor that
            // handles the key-value position or only its content
            processor =
                    lookupStrategy.deletionVector
                            ? new PositionedKeyValueProcessor(
                                    valueType,
                                    lookupStrategy.produceChangelog
                                            || mergeEngine != DEDUPLICATE
                                            || !options.sequenceField().isEmpty())
                            : new KeyValueProcessor(valueType);
            // Create the wrapper factory (adapts deduplication, sequence comparison, and other merge logic)
            wrapperFactory =
                    new LookupMergeFunctionWrapperFactory<>(
                            logDedupEqualSupplier.get(),
                            lookupStrategy,
                            UserDefinedSeqComparator.create(valueType, options));
        }
        // Return the lookup-specific rewriter
        return new LookupMergeTreeCompactRewriter(
                maxLevel,
                mergeEngine,
                createLookupLevels(partition, bucket, levels, processor, lookupReaderFactory),
                readerFactory,
                writerFactory,
                keyComparator,
                userDefinedSeqComparator,
                mfFactory,
                mergeSorter,
                wrapperFactory,
                lookupStrategy.produceChangelog,
                dvMaintainer,
                options);
    } else {
        // CASE-3: the default case, use MergeTreeCompactRewriter
        return new MergeTreeCompactRewriter(
                readerFactory,
                writerFactory,
                keyComparator,
                userDefinedSeqComparator,
                mfFactory,
                mergeSorter);
    }
}
```

(5) createLookupLevels()

```java
// Creates the lookup levels
private <T> LookupLevels<T> createLookupLevels(
        BinaryRow partition,
        int bucket,
        Levels levels,
        LookupLevels.ValueProcessor<T> valueProcessor,
        FileReaderFactory<KeyValue> readerFactory) {
    // 1. Check that a temporary disk directory is available
    if (ioManager == null) {
        throw new RuntimeException(
                "Can not use lookup, there is no temp disk directory to use.");
    }

    // 2. Create the LookupStoreFactory (here 'options' still refers to the CoreOptions field)
    LookupStoreFactory lookupStoreFactory =
            LookupStoreFactory.create(
                    options,
                    cacheManager,
                    new RowCompactedSerializer(keyType).createSliceComparator());
    // From this point on, the local 'options' shadows the field
    Options options = this.options.toConfiguration();

    // 3. Create the lookup file cache (lazily, only once)
    if (lookupFileCache == null) {
        lookupFileCache =
                LookupFile.createCache(
                        options.get(CoreOptions.LOOKUP_CACHE_FILE_RETENTION), // 'lookup.cache-file-retention', default 1h
                        options.get(CoreOptions.LOOKUP_CACHE_MAX_DISK_SIZE)); // 'lookup.cache-max-disk-size', default Long.MAX_VALUE
    }

    // 4. Create and return the LookupLevels
    return new LookupLevels<>(
            levels,
            keyComparatorSupplier.get(),
            keyType,
            valueProcessor,
            readerFactory::createRecordReader,
            file ->
                    ioManager
                            .createChannel(
                                    localFilePrefix(partitionType, partition, bucket, file))
                            .getPathFile(),
            lookupStoreFactory,
            bfGenerator(options),
            lookupFileCache);
}
```

II. Summary

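1. KeyValueFileStoreWrite summary

KeyValueFileStoreWrite is the central coordinator of the write and compaction flow for Paimon primary-key tables. Its core responsibilities are:

- Writer lifecycle management: creates, reuses, and destroys a `MergeTreeWriter` for each partition-bucket
- Compaction manager factory: chooses `NoopCompactManager` or `MergeTreeCompactManager` according to the `write-only` setting
- Compaction rewriter factory: chooses the most suitable `CompactRewriter` according to changelog-producer, lookup, and related settings
- Memory pool coordination: inherits the shared memory pool and preemption mechanism from `MemoryFileStoreWrite`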

2. Comparison of the three rewriters

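- MergeTreeCompactRewriter (standard rewriter)
  - Applicable scenario: standard primary-key tables with no special requirements
  - Core flow: read SortedRuns → merge sort → apply MergeFunction → write new files
  - Characteristics:
    - Simple and efficient
    - Suits most scenarios
    - Core logic is implemented in `rewriteCompaction()`
- FullChangelogMergeTreeCompactRewriter (full-changelog rewriter)
  - Applicable scenario: `changelog-producer = full-compaction`
  - Core features:
    - Produces changelog only at the highest level (maxLevel)
    - Wraps the merge function with `FullChangelogMergeFunctionWrapper`
    - The decision logic lives in `rewriteChangelog()`
  - Upgrade strategy:

    ```java
    protected UpgradeStrategy upgradeStrategy(int outputLevel, DataFileMeta file) {
        return outputLevel == maxLevel
                ? CHANGELOG_NO_REWRITE // highest level: produce changelog, no rewrite
                : NO_CHANGELOG_NO_REWRITE; // other levels: no changelog, no rewrite
    }
    ```
- LookupMergeTreeCompactRewriter (lookup rewriter)
  - Applicable scenario: needLookup() is true, that is, merge-engine = first-row, changelog-producer = lookup, deletion-vectors.enabled = true, or force-lookup = true; compaction then looks keys up in the existing levels
  - Core mechanism:
    - Builds `LookupLevels` for fast key lookup
    - Supports two merge-function wrapper factories:
      - FirstRowMergeFunctionWrapperFactory: keeps only the first row
      - LookupMergeFunctionWrapperFactory: standard lookup merge
    - Uses `createLookupLevels()` to create the lookup levels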
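The core flow of the standard rewriter (read sorted runs → merge sort → apply merge function → write new files) can be illustrated with a toy k-way merge. This is only a sketch under stated assumptions: keys are ints, the merge function is a deduplicate-style "keep the record with the highest sequence number", and the output is an in-memory list instead of a file. `MergeRewriteSketch`, `Kv`, and `mergeRuns` are hypothetical names and do not correspond to Paimon's MergeSorter or MergeFunction APIs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Iterator;
import java.util.List;
import java.util.PriorityQueue;

/** Toy model of "read sorted runs, merge, apply merge function, write"; not Paimon code. */
final class MergeRewriteSketch {

    /** Simplified key-value record: key, sequence number, value. */
    static final class Kv {
        final int key;
        final long seq;
        final String value;

        Kv(int key, long seq, String value) {
            this.key = key;
            this.seq = seq;
            this.value = value;
        }
    }

    /** Cursor over one non-empty sorted run; 'head' is the current record. */
    private static final class Cursor {
        final Iterator<Kv> it;
        Kv head;

        Cursor(List<Kv> run) {
            this.it = run.iterator();
            this.head = it.next();
        }

        /** Moves to the next record; returns false when the run is exhausted. */
        boolean advance() {
            if (!it.hasNext()) {
                return false;
            }
            head = it.next();
            return true;
        }
    }

    /** Merges runs that are each sorted by key, keeping the highest-sequence record per key. */
    static List<Kv> mergeRuns(List<List<Kv>> sortedRuns) {
        // Min-heap ordered by (key, seq): equal keys come out in ascending sequence order
        PriorityQueue<Cursor> heap =
                new PriorityQueue<>(
                        Comparator.<Cursor>comparingInt(c -> c.head.key)
                                .thenComparingLong(c -> c.head.seq));
        for (List<Kv> run : sortedRuns) {
            if (!run.isEmpty()) {
                heap.add(new Cursor(run));
            }
        }

        List<Kv> output = new ArrayList<>(); // stands in for the new data file
        Kv pending = null;
        while (!heap.isEmpty()) {
            Cursor cursor = heap.poll();
            Kv current = cursor.head;
            if (pending != null && pending.key != current.key) {
                output.add(pending); // key changed: emit the merged result of the previous key
            }
            pending = current; // same key: the later sequence replaces the earlier one
            if (cursor.advance()) {
                heap.add(cursor);
            }
        }
        if (pending != null) {
            output.add(pending);
        }
        return output;
    }

    public static void main(String[] args) {
        List<Kv> merged =
                mergeRuns(
                        Arrays.asList(
                                Arrays.asList(new Kv(1, 1, "a"), new Kv(3, 2, "c")),
                                Arrays.asList(new Kv(1, 5, "a2"), new Kv(2, 4, "b"))));
        for (Kv kv : merged) {
            System.out.println(kv.key + " -> " + kv.value);
        }
    }
}
```

Swapping the "later sequence replaces earlier" line for a different combination rule is the toy equivalent of configuring a different merge-engine.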