python

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

import torch

import torch.nn as nn

import torch.optim as optim

iris = load_iris()

X = iris.data

y = iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

scaler = MinMaxScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

X_train = torch.FloatTensor(X_train)

X_test = torch.FloatTensor(X_test)

y_train = torch.LongTensor(y_train)

y_test = torch.LongTensor(y_test)

class MLP(nn.Module):

def __init__(self):

super().__init__()

self.fc1 = nn.Linear(4, 10)

self.relu = nn.ReLU()

self.fc2 = nn.Linear(10, 3)

def forward(self, x):

out = self.fc1(x)

out = self.relu(out)

out = self.fc2(out)

return out

model = MLP()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.01)

epoches = 20000

losses = []

for epoch in range(epoches):

outputs = model.forward(X_train)

loss = criterion(outputs, y_train)

losses.append(loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

if (epoch + 1) % 100 == 0:

print(f"Epoch [{epoch + 1}/{epoches}], Loss: {loss.item():.6f}")

python

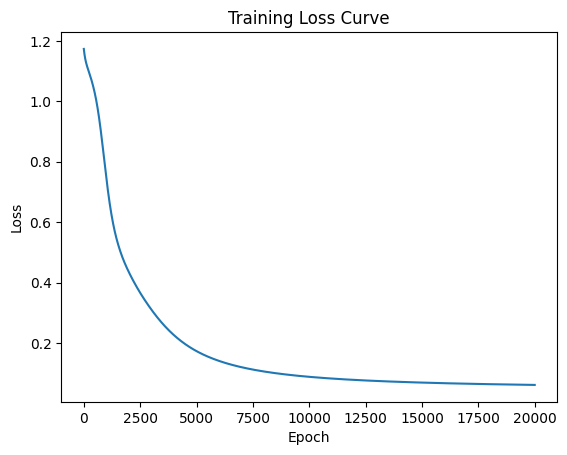

import matplotlib.pyplot as plt

plt.figure()

plt.plot(losses)

plt.xlabel("Epoch")

plt.ylabel("Loss")

plt.title("Training Loss Curve")

plt.show()