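I recently looked into the Spring AI Alibaba project and got the official examples running. The streaming endpoints in those examples return a Flux, so I dug into how that works.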

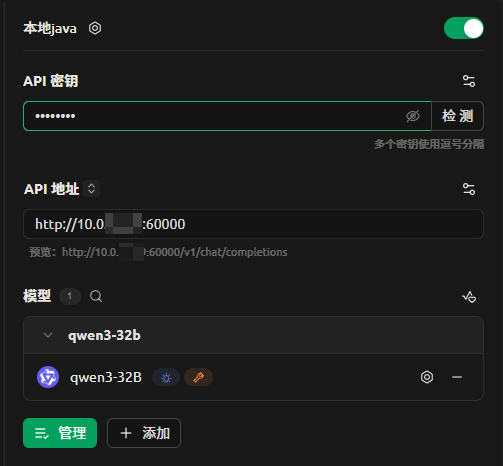

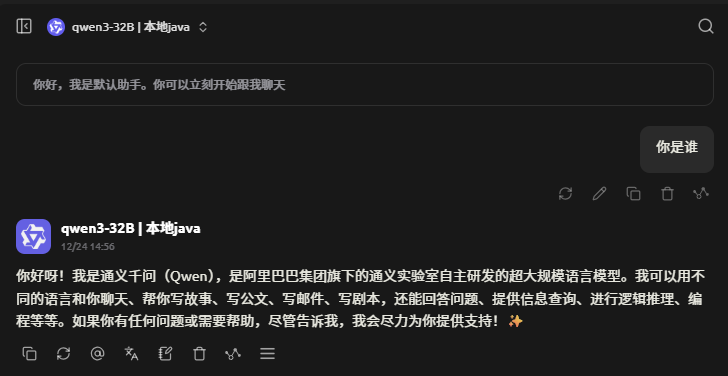

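Building on my earlier code, and with some help from an AI assistant, I then wrote a /v1/chat/completions endpoint, which also makes it easy to debug with Cherry Studio.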

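Environment

Java 21, Spring Boot 3.5.8, Spring AI 1.1.1, Qwen3

Controller code

Every class the controller needs is declared as a nested class, so copying this one file is enough.

ChatCompletionRequest simply mirrors the fields of the request body that Cherry Studio sends. Only the messages field is actually used here; the stream flag and the model name are not acted on (the model name is only echoed back in the response). Handle them yourself if you need them.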

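Notes:

- If the method's return type is Flux, setting Content-Type on the response object has no effect; you have to declare produces = MediaType.TEXT_EVENT_STREAM_VALUE on the mapping.

- Changing the return type to Object, returning the Flux instance, and setting Content-Type on the response throws an error in my tests.

- Spring MVC itself contains the code that handles a Flux return value and ends up using an SseEmitter for it. This does not depend on spring-boot-starter-webflux; Reactor only has to be on the classpath, and Spring AI already brings it in. Say so explicitly when you ask an AI about this, otherwise it will make things up.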
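To make the SseEmitter point a bit more concrete: each string emitted by the Flux goes out as one SSE event. The sketch below is plain Java with no Spring involved. It only imitates the framing under the assumption that the writer emits one `data:` line per payload line followed by a blank line, which is what the SSE format prescribes; the class and method names are made up for illustration. It also shows why a payload should not carry its own trailing newlines.

```java
class SseFrameSketch {

    /** Frames a payload as one SSE event: a "data:" line per payload line, then a blank line. */
    static String toFrame(String payload) {
        StringBuilder sb = new StringBuilder();
        // limit -1 keeps trailing empty strings, so trailing newlines become empty data lines
        for (String line : payload.split("\n", -1)) {
            sb.append("data:").append(line).append('\n');
        }
        return sb.append('\n').toString();
    }

    /** Rebuilds the payload the way an SSE client does: strip "data:" and join the lines with "\n". */
    static String parseFrame(String frame) {
        StringBuilder sb = new StringBuilder();
        boolean first = true;
        for (String line : frame.split("\n")) {
            if (!line.startsWith("data:")) {
                continue;
            }
            if (!first) {
                sb.append('\n');
            }
            sb.append(line.substring("data:".length()));
            first = false;
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // A bare marker survives the round trip unchanged.
        System.out.println(parseFrame(toFrame("[DONE]")).equals("[DONE]"));     // true
        // With "\n\n" appended by hand, the client no longer sees exactly "[DONE]".
        System.out.println(parseFrame(toFrame("[DONE]\n\n")).equals("[DONE]")); // false
    }
}
```

A client that compares the event data against the literal `[DONE]` would treat the second case as ordinary data and try to parse it as JSON.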

The code:

```java
import com.fasterxml.jackson.annotation.JsonInclude;
import com.fasterxml.jackson.core.JsonProcessingException;
import com.fasterxml.jackson.databind.ObjectMapper;
import jakarta.validation.Valid;
import jakarta.validation.constraints.NotEmpty;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.messages.AssistantMessage;
import org.springframework.ai.chat.messages.Message;
import org.springframework.ai.chat.messages.SystemMessage;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.model.StreamingChatModel;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

import java.util.ArrayList;
import java.util.List;
import java.util.UUID;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicReference;
import java.util.stream.Collectors;

/**
 * AI test endpoint
 *
 * @author fhf
 * @since 7/17/2025
 */
@RestController
@AllArgsConstructor
@Slf4j
public class AiController {

    private final StreamingChatModel streamingChatModel;
    private final ObjectMapper objectMapper;

    /**
     * OpenAI-compatible chat completions endpoint.<br>
     * Only streaming output (the typewriter effect) is handled here; do not forget
     * produces = MediaType.TEXT_EVENT_STREAM_VALUE.
     */
    @PostMapping(value = "/v1/chat/completions", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chatCompletions(@Valid @RequestBody ChatCompletionRequest request) {
        // 1. Convert the request messages into Spring AI messages
        List<Message> messages = convertToAiMessages(request.getMessages());
        // 2. Build the Prompt (model options could be derived from the request; kept simple here)
        Prompt prompt = new Prompt(messages);
        // 3. Qwen-style response id: a UUID without dashes (32 hex characters)
        String responseId = UUID.randomUUID().toString().replace("-", "");
        // One timestamp (epoch seconds) shared by every chunk
        long created = System.currentTimeMillis() / 1000;
        // Marks the first chunk
        AtomicBoolean isFirstChunk = new AtomicBoolean(true);
        // Boolean.TRUE.equals avoids a NullPointerException when include_usage is null
        boolean includeUsage = request.getStream_options() != null
                && Boolean.TRUE.equals(request.getStream_options().getInclude_usage());
        // Token counts are simulated; replace them with the counts reported by the model if available
        int promptTokens = request.getMessages().stream()
                .mapToInt(m -> m.getContent() == null ? 0 : m.getContent().length())
                .sum() / 2;
        AtomicReference<Usage> usageRef =
                new AtomicReference<>(new Usage(null, promptTokens, promptTokens, 0, null, 0));

        // Use streamingChatModel here, not chatModel
        return streamingChatModel.stream(prompt)
                // A map() function must never return null (Reactor fails with "returned a null value"),
                // so each model chunk is turned into a list of zero, one, or two SSE payloads instead.
                .flatMapIterable(chatResponse -> {
                    String content = chatResponse.getResult() == null
                            ? null
                            : chatResponse.getResult().getOutput().getText();
                    List<String> out = new ArrayList<>(2);
                    // First chunk: announce the assistant role, then fall through so that
                    // the content of the first model chunk is not lost
                    if (isFirstChunk.getAndSet(false)) {
                        OpenAiStreamResponse firstResp = buildBaseResp(responseId, created, request.getModel());
                        firstResp.setChoices(List.of(
                                new OpenAiChoice(0, new OpenAiDelta("assistant", "", null, null), null, null, null)));
                        out.add(serialize(firstResp));
                    }
                    // Content chunk: skip empty text, but keep whitespace-only text such as "\n",
                    // otherwise line breaks disappear from the answer
                    if (content != null && !content.isEmpty()) {
                        Usage usage = usageRef.get();
                        usage.setCompletion_tokens(usage.getCompletion_tokens() + 1);
                        usage.setTotal_tokens(usage.getPrompt_tokens() + usage.getCompletion_tokens());
                        OpenAiStreamResponse resp = buildBaseResp(responseId, created, request.getModel());
                        resp.setChoices(List.of(
                                new OpenAiChoice(0, new OpenAiDelta(null, content, null, null), null, null, null)));
                        out.add(serialize(resp));
                    }
                    return out;
                })
                // Trailing chunks. concatWith only subscribes after the model stream has completed
                // normally, so no extra "finished" flag is needed. (A flag set in doFinally would
                // still be false at this point, because doFinally runs after downstream is notified.)
                .concatWith(Flux.defer(() -> {
                    List<String> tail = new ArrayList<>(3);
                    // Trailing chunk 1: finish_reason=stop (151645 is Qwen's <|im_end|> token id)
                    OpenAiStreamResponse stopResp = buildBaseResp(responseId, created, request.getModel());
                    stopResp.setChoices(List.of(
                            new OpenAiChoice(0, new OpenAiDelta(null, null, null, null), null, "stop", 151645)));
                    tail.add(serialize(stopResp));
                    // Trailing chunk 2: usage, only when include_usage is enabled
                    if (includeUsage) {
                        OpenAiStreamResponse usageResp = buildBaseResp(responseId, created, request.getModel());
                        usageResp.setChoices(List.of());
                        usageResp.setUsage(usageRef.get());
                        tail.add(serialize(usageResp));
                    }
                    // Final marker: send exactly "[DONE]" and never append \n\n yourself. Spring already
                    // terminates each SSE event; extra newlines in the payload probably end up as extra
                    // "data:" lines, so the client sees "[DONE]\n\n" rather than "[DONE]" and tries to
                    // parse it. Cherry Studio then reports: JSON parsing failed: Text: [DONE] .
                    // Error message: Unexpected token 'D', "[DONE] " is not valid JSON
                    tail.add("[DONE]");
                    return Flux.fromIterable(tail);
                }))
                // Error handling
                .onErrorResume(e -> {
                    log.error("Streaming chat completion failed: {}", e.getMessage(), e);
                    // Build the error payload with Jackson so quotes in the message are escaped
                    String errorJson = objectMapper.createObjectNode()
                            .put("error", String.valueOf(e.getMessage()))
                            .toString();
                    return Flux.just(errorJson, "[DONE]");
                });
    }

    /**
     * Converts the messages sent by the client into Spring AI messages.
     */
    private List<Message> convertToAiMessages(List<ChatCompletionRequest.Message> requestMessages) {
        return requestMessages.stream()
                .map(msg -> switch (msg.getRole()) {
                    case "system" -> new SystemMessage(msg.getContent());
                    case "assistant" -> new AssistantMessage(msg.getContent());
                    default -> new UserMessage(msg.getContent());
                })
                .collect(Collectors.toList());
    }

    /**
     * Builds the part of the response shared by every chunk (the model name is passed in).
     */
    private OpenAiStreamResponse buildBaseResp(String id, long created, String model) {
        OpenAiStreamResponse resp = new OpenAiStreamResponse();
        resp.setId(id);
        resp.setObject("chat.completion.chunk");
        resp.setCreated(created);
        resp.setModel(model);
        return resp;
    }

    /**
     * Serializes a chunk to JSON. The SSE framing ("data:" prefix and blank line) is added by Spring, not here.
     */
    private String serialize(OpenAiStreamResponse resp) {
        try {
            return objectMapper.writeValueAsString(resp);
        } catch (JsonProcessingException e) {
            throw new RuntimeException("JSON serialization failed", e);
        }
    }

    // ========== Entity classes that follow the Qwen streaming format ==========

    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    @JsonInclude(JsonInclude.Include.NON_NULL) // null fields are omitted from the JSON output
    static class OpenAiStreamResponse {
        private String id;
        private String object;
        private long created;
        private String model;
        private List<OpenAiChoice> choices;
        private Usage usage;
    }

    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    @JsonInclude(JsonInclude.Include.NON_NULL)
    static class OpenAiChoice {
        private int index;
        private OpenAiDelta delta;
        private Object logprobs; // always null; Object keeps it compatible
        private String finish_reason;
        private Integer matched_stop;
    }

    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    @JsonInclude(JsonInclude.Include.NON_NULL)
    static class OpenAiDelta {
        private String role;
        private String content;
        private Object reasoning_content;
        private Object tool_calls;
    }

    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    @JsonInclude(JsonInclude.Include.NON_NULL)
    static class Usage {
        private Object prompt_tokens_details;
        private Integer prompt_tokens;
        private Integer total_tokens;
        private Integer completion_tokens;
        private Object reasoning_tokens_details;
        private Integer reasoning_tokens;
    }

    @Data
    public static class ChatCompletionRequest {

        /** Model name */
        private String model = "qwen3-32B";

        /** Maximum number of tokens to generate */
        private Integer max_tokens = 4096;

        /** Temperature */
        private Float temperature = 1.0f;

        /** Nucleus sampling */
        private Float top_p = 1.0f;

        /** Whether thinking is enabled */
        private Boolean enable_thinking = true;

        /** Thinking budget */
        private Integer thinking_budget = 2918;

        /** Conversation messages; must not be empty, which is what @Valid checks */
        @NotEmpty
        private List<Message> messages;

        /** Whether to stream the response */
        private Boolean stream = true;

        /** Streaming options */
        private StreamOptions stream_options;

        /** A single conversation message */
        @Data
        static class Message {
            private String role; // user/assistant/system
            private String content;
        }

        /** Streaming options */
        @Data
        static class StreamOptions {
            /** Whether to return usage information */
            private Boolean include_usage = true;
        }
    }
}
```

pom.xml

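Here is my pom.xml with some dependencies removed. You can use your own pom instead. The one thing to watch is the spring-boot-starter-webflux dependency: if the project is not a WebFlux project, there is no need to add it.

Be careful when asking an AI about this as well, or it will tell you that without this dependency the output becomes synchronous even when the controller returns a Flux.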
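One way to check for yourself that the response really streams without spring-boot-starter-webflux is to print each line together with its arrival time. The sketch below uses only the JDK's HttpClient. The host and port (localhost:8080), the message text, and the class name are assumptions for illustration; the path and the JSON field names come from the controller above.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.stream.Stream;

class ChatCompletionsClientSketch {

    // Assumption: the application runs locally on Spring Boot's default port.
    static final String ENDPOINT = "http://localhost:8080/v1/chat/completions";

    // Request body with the fields the controller reads; the message text is a placeholder.
    static final String BODY = """
            {
              "model": "qwen3-32B",
              "stream": true,
              "stream_options": {"include_usage": true},
              "messages": [
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": "Hello"}
              ]
            }
            """;

    /** Builds the POST request; kept separate so it can be inspected without a running server. */
    static HttpRequest buildRequest() {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .header("Content-Type", "application/json")
                .header("Accept", "text/event-stream")
                .POST(HttpRequest.BodyPublishers.ofString(BODY))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        long start = System.nanoTime();
        // ofLines() exposes the body as a lazy stream, so lines are handled as they arrive
        HttpResponse<Stream<String>> response =
                client.send(buildRequest(), HttpResponse.BodyHandlers.ofLines());
        try (Stream<String> lines = response.body()) {
            lines.filter(line -> !line.isEmpty())
                 .forEach(line -> System.out.printf("+%d ms  %s%n",
                         (System.nanoTime() - start) / 1_000_000, line));
        }
    }
}
```

If the timestamps increase gradually from line to line, the chunks are being streamed; if every line shows up at nearly the same moment at the end, the output is being buffered somewhere.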

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.5.8</version>
        <relativePath/>
    </parent>
    <groupId>com.xxx.xxx</groupId>
    <artifactId>ai</artifactId>
    <version>1.0.1</version>
    <name>aiTest</name>
    <description>Test LLM calls</description>
    <properties>
        <java.version>21</java.version>
        <spring-ai.version>1.1.1</spring-ai.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-actuator</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-validation</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-openai</artifactId>
        </dependency>
        <!-- Not needed: Spring AI already pulls in a spring-webflux dependency, and Spring MVC handles Flux and similar return types itself -->
        <!-- <dependency>-->
        <!--     <groupId>org.springframework.boot</groupId>-->
        <!--     <artifactId>spring-boot-starter-webflux</artifactId>-->
        <!-- </dependency>-->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-spring-boot3-starter</artifactId>
            <version>3.5.12</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents.client5</groupId>
            <artifactId>httpclient5</artifactId>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>21</source>
                    <target>21</target>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <!-- No explicit version: it is inherited from spring-boot-starter-parent, so it always matches 3.5.8 -->
                <executions>
                    <execution>
                        <goals>
                            <goal>repackage</goal>
                        </goals>
                        <configuration>
                            <!-- <classifier>exec</classifier>-->
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>${spring-ai.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
</project>
```

Result screenshots